Kalman Filter Made Easy

STILL WORKING ON THIS DOCUMENT ...

Kalman Filter - Da Theory

You may have come across a fancy technical term called the Kalman filter, but because of all that complicated math, you may be too scared to get into it. This article is a not-too-math-intensive tutorial for you, and also for me, because I do forget stuff from time to time. Hopefully you will come away with a better understanding of how to use the Kalman filter.

If you ever design an embedded system, you will very likely come across some noisy sensors. To deal with these shitty sensors, the Kalman filter comes to the rescue. It can fuse multiple sensor readings together, taking advantage of each sensor's individual strengths, and give readings with a balance of noise cancellation and adaptability. How wonderful!

Let's suppose you just met a new girl and you have no idea how punctual she will be. (Girls are, in fact, not too punctual based on my personal experience.) Based on her history, you have an estimate of when she will arrive. You don't want to come early, but at the same time, you don't want to be yelled at for being late, so you want to come at exactly the right time. Now the girl shows up 30 minutes late (that's how she interprets "on the hour"). You correct your estimate for this date, and use the corrected estimate to predict when she will show up the next time you meet her. A filter works on exactly the same principle.

Now say you are back at work. You need to know the state of a system based on some noisy, perhaps partly redundant, sensors. Now read this carefully: given the sensor readings, the system corrects the state it predicted in the last step, and uses this corrected estimate to make a new prediction for the next time step. Doesn't sound too complicated, huh? We can then write the above concept mathematically:

If we need to control some robot arm or whatever, corrected_state is what you want. Next, I use the sensor input to make the next prediction. The estimation will be:

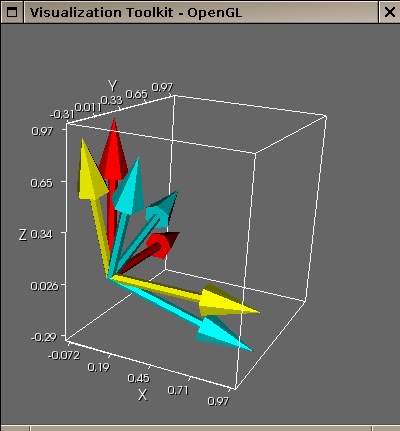
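As a hedged illustration of the correct-then-predict loop, here is a minimal C sketch for the lateness example. It is not the author's formula: the state is assumed to be a single number (how many minutes late she is), `state2output` and `predict` borrow the names used later in this article but their one-line bodies are hypothetical, and `GAIN` is a hand-picked constant rather than a Kalman gain.

```c
/* Hypothetical hand-picked gain in [0, 1]:
   0 ignores the measurement, 1 trusts it completely. */
#define GAIN 0.5

/* Hypothetical: the "sensor" measures the state directly. */
static double state2output(double state) { return state; }

/* Hypothetical: no model of how her lateness changes,
   so predict "same as last time". */
static double predict(double corrected_state) { return corrected_state; }

/* Correction: move the prediction toward the measurement
   by GAIN times the measurement error. */
static double correct(double predicted_state, double measurement)
{
    double error = measurement - state2output(predicted_state);
    return predicted_state + GAIN * error;
}
```

Starting from a prediction of 0 minutes late and observing 30 minutes, `correct` gives 15; `predict` carries 15 over to the next date, and a second 30-minute observation moves the estimate to 22.5. A larger gain adapts faster but lets more sensor noise through; choosing it well is exactly the problem the Kalman gain solves.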

Kalman Filter - General Strategy

The general strategy for a good filter is to sample as fast as possible. Sensors are noisy, and by averaging a lot of data we can reduce the noise and still adapt quickly. However, that may not be possible on a 10 MHz processor given all those complicated computations, so do use fixed point instead of floating point for multiplication, division, summation, trigonometry and integration. It will SIGNIFICANTLY speed up the processing. If you decide to use fixed point, DO NOT use floating point for ANYTHING AT ALL (not even a single floating-point declaration), because it will likely propagate into other expressions through implicit type conversions. Skim through the generated assembly code to make sure there are no floating-point instructions. Finally, you may want to experiment in MATLAB for testing purposes, but once you are done, do implement it in C.

It is also important to eliminate noise in the hardware. Reducing resistance (for example, by shortening wires) helps, since thermal noise power is proportional to resistance and temperature, and so does applying your layout magic to remove crosstalk. Adding tunable hardware low-pass and high-pass filters may make your life much easier.

Application - Sensor Choice

WARNING! WORK STILL IN PROGRESS

Let's say we want to find the orientation of the system in order to control our robot, plane, missile, or whatever. Everyone knows that a gyroscope comes in handy because of its sensitivity. By integrating the three angular rates it provides, we can precisely define an orientation in 3D space, but unfortunately, as time goes on, the error gradually builds up. Therefore, we need another sensor to compensate for this error accumulation. An accelerometer is a good choice for deriving angles because it does not drift, but it only gives you ONE 3D vector, pointing to the ground. To define an orientation in 3D space precisely, we need two non-parallel 3D vectors.
In other words, we need one more sensor. A GPS might be nice, but it is expensive, power hungry and heavy. I have considered a lot of alternatives, but using a 3D compass seems to be unavoidable. 3D compasses are notorious for being difficult to calibrate and for their inaccuracy due to electromagnetic interference, especially if you have a motor or servo somewhere in your robot. Yet, no matter how reluctant I am to use a compass, there is no other alternative that gives you cheap, non-drifting readings. I guess people have been using compasses for centuries, so why not give it a try.

Application - Coordinate System

To avoid confusion, we will use a right-handed coordinate system with x pointing right, y pointing forward, and z pointing up. With the coordinate system defined, we can work out what we are actually able to measure. Notice that an accelerometer, which measures the vector sum of gravity and body acceleration, cannot detect rotation about the z axis: lying level at rest, it reads the same [0 0 -9.8] no matter how you rotate it about z. Therefore, with an accelerometer we can only obtain the rotations in the yz and xz planes. Also, if our robot accelerates, the sensor output is no longer just gravity, so we can only take a gravity sample when the magnitude of the measured acceleration is 9.8 m/s², or somewhere close to it. Thus, we are going to use the gyroscope for short-term state estimation and sporadic accelerometer samples for long-term offset correction. Furthermore, I am not going to bother with rotation about the z axis, since I am missing one degree of freedom there. If I do want to find the rotation about the z axis, I may need a 2D compass or something; I don't think it is a good idea to rely on the gyroscope alone for rotation in the xy plane without any offset compensation. (As a side note, it turns out that there is a twist with digital compasses.
If the measurement is not taken while the sensor is lying flat, the heading will not be correct; it needs to be tilt-compensated. Search Google for "tilt-compensated compass" for more information.)

Now back to the equations. My state is:

There are two things I need: I need to correct the current state based on the sensor output, and I need to predict the next state, again based on the sensor output. For the prediction, assume that the corrected state is exactly right and that our sensors are noiseless. What would we do if the world were that perfect? This is what I would do:
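As a hedged illustration of such a noise-free prediction, here is a minimal sketch assuming the state is one tilt angle plus the gyroscope offset (the drift that the sporadic accelerometer samples correct, as described earlier), with the gyro rate as the sensor input. The `State` struct, its field names, and the `SAMPLE_PERIOD` value are hypothetical, not the author's state definition.

```c
/* Hypothetical sample period in seconds (100 Hz). */
#define SAMPLE_PERIOD 0.01

/* Hypothetical state: one tilt angle (rad) and the gyro offset (rad/s). */
typedef struct {
    double angle;
    double gyro_offset;
} State;

/* Noise-free prediction: trust the corrected state, subtract the estimated
   offset from the gyro reading, and integrate over one sample period.
   With no better model, the offset is assumed to stay constant. */
static State predict(State corrected, double gyro_rate)
{
    State next;
    next.angle = corrected.angle
               + (gyro_rate - corrected.gyro_offset) * SAMPLE_PERIOD;
    next.gyro_offset = corrected.gyro_offset;
    return next;
}
```

With an offset of 0.1 rad/s and a gyro reading of 1.1 rad/s, one step adds 1.0 × 0.01 = 0.01 rad to the angle. For this particular predict function, the F matrix defined below would be the constant [[1, -SAMPLE_PERIOD], [0, 1]].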

We have everything now except the gain. Ideally, we could carefully pick a gain by hand, but that is no easy task. This is where the dude Kalman comes in. This dude took the derivative of the expected squared estimation error with respect to the gain, set it to zero, and obtained the gain that minimizes the error. He supplied you with a bunch of equations to calculate such a gain. Let's stare at more pseudo code instead of staring at a bunch of equations. Before going to the equations, let's find out the values for ...

Let's define H as the matrix of partial derivatives (the Jacobian) of the state2output function with respect to each state variable. What the hell does that mean? state2output returns [velocity_x velocity_y angle_x angle_y]; the entry (column = 1, row = 1) is the partial derivative of velocity_x with respect to angle_x, the entry (column = 2, row = 1) is the partial derivative of velocity_x with respect to velocity_x, etc. You get the point: in the end we have a matrix of size(sensoroutput) x size(state), with one row per output and one column per state variable.

Let's define F as the matrix of partial derivatives of the predict function with respect to each state variable, just like what we did for H, but it is a matrix of size(state) x size(state).

Let's define R as the measurement noise variance matrix of size(sensoroutput) x size(sensoroutput). Like the identity matrix, it is mostly filled with zeros; its diagonal, however, holds the standard deviation squared, i.e. the variance, of each sensor.

Let I be the identity matrix of size(state) x size(state).

And finally, let Q be the state noise variance matrix of size(state) x size(state). What I would do is define a row vector of the estimated standard deviations of the state, o = [a b c d ... ], and compute Q = o' x o (the outer product, which has size size(state) x size(state)). You should make sure the standard deviations are consistent with each other; for example, the displacement's standard deviation should be the velocity's times the sample period.
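For reference, the standard textbook form of the Kalman filter equations, written with the matrices just defined, is sketched below. It is a hedged reconstruction, not necessarily the author's exact listing, and it introduces symbols not defined above: P, the state error covariance matrix of size(state) x size(state); K, the gain; x̂, the state estimate; and z, the sensor output. Correction comes first, then prediction. For the original (linear) filter, state2output(x̂) is simply H x̂ and predict(x̂) is F x̂.

$$
\begin{aligned}
\text{Correct:}\quad K &= P H^{\top}\left(H P H^{\top} + R\right)^{-1} \\
\hat{x} &\leftarrow \hat{x} + K\left(z - \mathrm{state2output}(\hat{x})\right) \\
P &\leftarrow (I - K H)\, P \\
\text{Predict:}\quad \hat{x} &\leftarrow \mathrm{predict}(\hat{x}) \\
P &\leftarrow F P F^{\top} + Q
\end{aligned}
$$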

It is important to apply those equations in the exact order. Given an output, you first correct the current state and then use the corrected state to make a new prediction. You may ask whether these equations are for the original or the extended Kalman filter. To tell you the truth, both! They have the exact same equations, but in the extended Kalman filter H and F are partial derivative matrices that change with the state, whereas in the original Kalman filter they are plain old constant matrices. Another difference is that the gain of the original Kalman filter (with constant Q and R) will converge to some value, while the gain of the extended Kalman filter will vary in time.

One problem that comes up quite often is that the state keeps diverging while the error remains small. Say the real velocity is 3, the state velocity is 5, and the offset is 2. In the next time frame, the velocity is again 3, but the state velocity is 1000 and the offset is 997, for example. Divergence can be quite painful to debug, and requires a deep understanding of what is going on.

All right, I am out. If you want to talk about this, you are very welcome to send me an email through the contact page. Thanks for stopping by. I will be happy to hear from you about what I wrote on this page.
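The claim that the gain of the original (linear) Kalman filter settles to a constant can be checked with a hedged scalar sketch: a one-state random walk observed directly (F = H = 1) with constant, made-up noise variances. It applies the equations in the order required above (correct, then predict) and returns the gain used at each step. For Q = R = 1, the steady-state predicted variance solves P² - QP - QR = 0, so the gain K = P / (P + R) approaches (√5 - 1)/2 ≈ 0.618.

```c
/* Hypothetical scalar model: a random walk observed directly,
   so F = 1 and H = 1. Both variances are made-up constants. */
#define PROCESS_VARIANCE 1.0      /* Q */
#define MEASUREMENT_VARIANCE 1.0  /* R */

typedef struct {
    double x;  /* state estimate */
    double p;  /* state error variance (P in the equations) */
} Filter;

/* One filter step with measurement z: correct, then predict.
   Returns the Kalman gain used for the correction. */
static double kalman_step(Filter *f, double z)
{
    /* Correct (H = 1). */
    double k = f->p / (f->p + MEASUREMENT_VARIANCE);
    f->x = f->x + k * (z - f->x);
    f->p = (1.0 - k) * f->p;

    /* Predict (F = 1): the estimate carries over unchanged,
       and the uncertainty grows by the process noise. */
    f->p = f->p + PROCESS_VARIANCE;
    return k;
}
```

Starting from `Filter f = {0.0, 1.0}`, the gain goes 0.5, 0.6, ... and quickly settles near 0.618 no matter what measurements arrive, because with constant F, H, Q and R the variance update never looks at the data. In the extended filter, F and H depend on the state, so the gain generally keeps moving.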
