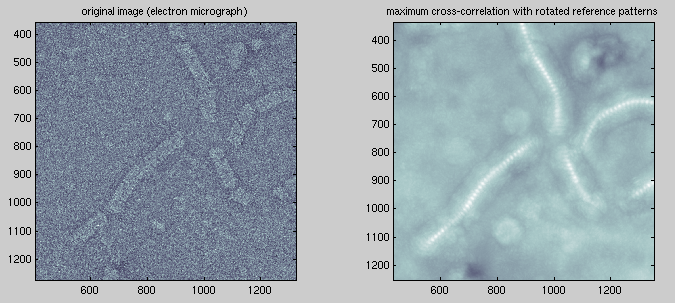

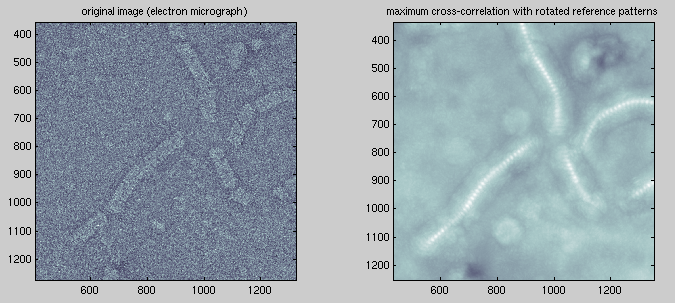

Cross-correlation is a remarkably effective method for locating specified patterns within a signal. The following result (right) was generated by computing the two-dimensional cross-correlation between a reference image (below) and the electron micrograph (left) for each of 45 rotations (with an increment of 4° for a total range of 180°) of the reference image, and taking the maximum of each pixel value.

The reference image used was this:

The cross-correlation operation is simply convolution with some normalization applied and with the second argument reversed in time (1d) or mirrored in space (2d). Time-domain convolution is equivalent to frequency-domain multiplication, so it's convenient to compute a convolution by simply mutiplying elementwise the fourier transforms of the signals to be convolved. To form the cross-correlation, it's necessary to take the complex conjugate of the fourier transform of the second argument, which is equivalent to mirroring the signal in the time-domain.

It's actually faster to do convolution / cross-correlation in this way; convolution performed in the "naïve" sense (in the time domain) for a 1d signal requires time proportional to n2 while the fast fourier transform requires only Θ(n log n). (c.f. CLRS §30)

In MATLAB the two dimensional cross correlation between two 2d arrays A and B may be computed easily in this way: ifft2(fft2(A).*conj(fft2(B)))

The reference image needs to be padded with zeroes to form an array the same size as the image.

Obviously the fourier transform of the image to be searched need only be done once. Rotation generally commutes with the fourier transform for continuous two-dimensional signals, so it would be nice if we could calculate the transform of the reference image only once; unfortunately the discrete fourier transform doesn't in general commute with rotation for a discrete, sampled image. Also perhaps we could somehow do the zero-padding in fourier space (presumably the padding operation would be easier than taking transform of the large image).

This process is well suited to parallelization, both at the level of obviously independent tasks (the various rotations of the reference image) and at the level of the FFT algorithm. The former is quite well suited to a network of workstations, and the latter to shared-memory multiprocessing.

I implemented this in MATLAB.

PD command in SPIDER)$Id: matching.html,v 1.2 2002/06/17 15:06:26 tobin Exp $