math214:04-15


math214:04-15 [2020/04/15 00:15] pzhou created |

math214:04-15 [2020/04/15 00:33] pzhou |

||

|---|---|---|---|

| Line 15: | Line 15: | ||

| $$ [X_\gamma, X_\sigma] = \nabla_{X_\gamma} X_\sigma - \nabla_{X_\sigma} X_\gamma = \omega_\sigma^\beta(X_\gamma) X_\beta - \omega_\gamma^\beta(X_\sigma) X_\beta$$ | $$ [X_\gamma, X_\sigma] = \nabla_{X_\gamma} X_\sigma - \nabla_{X_\sigma} X_\gamma = \omega_\sigma^\beta(X_\gamma) X_\beta - \omega_\gamma^\beta(X_\sigma) X_\beta$$ | ||

| Hence | Hence | ||

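| - | $$- \theta^{\alpha}([X_\gamma, X_\sigma]) = \omega_\gamma^\alpha(X_\sigma) - \omega_\sigma^\alpha(X_\gamma)$$ | + | $$LHS = - \theta^{\alpha}([X_\gamma, X_\sigma]) = \omega_\gamma^\alpha(X_\sigma) - \omega_\sigma^\alpha(X_\gamma)$$ | ||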

| And on RHS | And on RHS | ||

| $$RHS = \theta^\beta \wedge \omega_\beta^\alpha ( X_\gamma, X_\sigma) = \theta^\beta(X_\gamma)\omega_\beta^\alpha(X_\sigma) - \theta^\beta(X_\sigma)\omega_\beta^\alpha(X_\gamma)= \omega_\gamma^\alpha(X_\sigma) - \omega_\sigma^\alpha(X_\gamma)$$ | $$RHS = \theta^\beta \wedge \omega_\beta^\alpha ( X_\gamma, X_\sigma) = \theta^\beta(X_\gamma)\omega_\beta^\alpha(X_\sigma) - \theta^\beta(X_\sigma)\omega_\beta^\alpha(X_\gamma)= \omega_\gamma^\alpha(X_\sigma) - \omega_\sigma^\alpha(X_\gamma)$$ | ||
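The step from the bracket to the LHS can be spelled out as follows. This is a sketch under two assumptions not stated explicitly in this excerpt: that LHS stands for $d\theta^\alpha(X_\gamma, X_\sigma)$, and that $\theta^\alpha$ is the coframe dual to $X_\alpha$, so that $\theta^\alpha(X_\sigma) = \delta^\alpha_\sigma$ is constant. It uses the standard invariant formula for the exterior derivative of a 1-form.

```latex
\begin{align*}
d\theta^\alpha(X_\gamma, X_\sigma)
  &= X_\gamma\bigl(\theta^\alpha(X_\sigma)\bigr)
   - X_\sigma\bigl(\theta^\alpha(X_\gamma)\bigr)
   - \theta^\alpha([X_\gamma, X_\sigma]) \\
  % the first two terms vanish because \theta^\alpha(X_\sigma) is constant
  &= -\theta^\alpha\bigl(\omega_\sigma^\beta(X_\gamma) X_\beta
   - \omega_\gamma^\beta(X_\sigma) X_\beta\bigr) \\
  &= \omega_\gamma^\alpha(X_\sigma) - \omega_\sigma^\alpha(X_\gamma)
   = (\theta^\beta \wedge \omega_\beta^\alpha)(X_\gamma, X_\sigma).
\end{align*}
```

Since both sides agree on every pair of frame vectors, this amounts to the structure equation $d\theta^\alpha = \theta^\beta \wedge \omega_\beta^\alpha$ for a torsion-free connection.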

| + | How to interpret this $\omega^\alpha_\beta$? | ||

| + | of $E$, so we have a local trivial connection $d$; it acts on a local section $u \in \Gamma(U, TM)$ as | ||

| + | $$u = u^\alpha X_\alpha, \quad d(u) = d(u^\alpha) \otimes X_\alpha \in \Omega^1(U, TM) $$ | ||

| + | That is, $d X_\alpha = 0$: $d$ views each $X_\alpha$ as a constant section. Thus | ||

| + | $$ \nabla = d + A $$ | ||

| + | where $A$ is a matrix-valued (or $\mathrm{End}(TM)$-valued) 1-form. We get | ||

| + | $$ \nabla X_\alpha = A (X_\alpha) = \omega_\alpha^\beta X_\beta $$ | ||
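To see how $\nabla = d + A$ acts on a general section, one can combine the two formulas above with the Leibniz rule. The following is a short worked sketch; it only uses $u = u^\alpha X_\alpha$ and $\nabla X_\alpha = \omega_\alpha^\beta X_\beta$ from the text.

```latex
\begin{align*}
\nabla u = \nabla(u^\alpha X_\alpha)
  &= d(u^\alpha) \otimes X_\alpha + u^\alpha \, \nabla X_\alpha \\
  &= \bigl( d(u^\beta) + \omega_\alpha^\beta \, u^\alpha \bigr) \otimes X_\beta .
\end{align*}
```

In components, $A$ acts on the column vector $(u^\alpha)$ through the matrix of 1-forms $(\omega_\alpha^\beta)$, with $\beta$ as the row index and $\alpha$ as the column index.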

| + | $A$ is valued not just in $End(E)$, but in $\mathfrak{so}(E)$, | ||

| + | |||
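The claim that $A$ takes values in $\mathfrak{so}(E)$ can be checked directly in the frame. This is a sketch assuming (as in the rest of the notes) that $X_\alpha$ is orthonormal, $g(X_\alpha, X_\beta) = \delta_{\alpha\beta}$, and that $\nabla$ is compatible with the metric $g$.

```latex
\begin{align*}
0 = d\bigl( g(X_\alpha, X_\beta) \bigr)
  &= g(\nabla X_\alpha, X_\beta) + g(X_\alpha, \nabla X_\beta) \\
  &= \omega_\alpha^\gamma \, \delta_{\gamma\beta}
   + \omega_\beta^\gamma \, \delta_{\alpha\gamma}
   = \omega_\alpha^\beta + \omega_\beta^\alpha .
\end{align*}
```

So the matrix $(\omega_\alpha^\beta)$ is antisymmetric; in particular its diagonal entries vanish.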

| + | Given this understanding, | ||

| + | $$ R^\alpha_\beta = d \omega^\alpha_\beta + \omega^\alpha_\gamma \wedge \omega^\gamma_\beta. $$ | ||
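The curvature formula can be recovered by applying $\nabla$ twice to a frame vector. This is a sketch under two assumed conventions that are not spelled out in this excerpt: $\nabla$ is extended to $TM$-valued forms by $\nabla(\eta \otimes s) = d\eta \otimes s - \eta \wedge \nabla s$ for a 1-form $\eta$, and the curvature components are defined by $\nabla^2 X_\beta = R^\alpha_\beta X_\alpha$.

```latex
\begin{align*}
\nabla^2 X_\beta
  &= \nabla\bigl( \omega_\beta^\gamma \otimes X_\gamma \bigr)
   = d\omega_\beta^\gamma \otimes X_\gamma
   - \omega_\beta^\gamma \wedge \omega_\gamma^\alpha \otimes X_\alpha \\
  &= \bigl( d\omega_\beta^\alpha
   + \omega_\gamma^\alpha \wedge \omega_\beta^\gamma \bigr) \otimes X_\alpha ,
\end{align*}
% so that R^\alpha_\beta = d\omega^\alpha_\beta + \omega^\alpha_\gamma \wedge \omega^\gamma_\beta .
```

The sign change in the last step comes from swapping the two 1-forms in the wedge product.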

| + | |||

| + | ==== Application: | ||

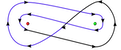

| + | Suppose we have coordinates in which the metric is diagonal, of the following form | ||

| + | $$ g_{ij} = h_i^2 \delta_{ij} $$ | ||

| + | Then we may choose the orthonormal frame $X_i$ and the dual coframe $\theta^i$ as (no summation over repeated indices here) | ||

| + | $$X_i = \frac{1}{h_i} \partial_{x^i}, \quad \theta^i = h_i \, dx^i $$ | ||

| + | Then we can get $\omega_i^j$ by solving the structure equation $d\theta^i = \sum_j \theta^j \wedge \omega_j^i$, where | ||

| + | $$ d \theta^i = d(h_i) \wedge dx^i $$ | ||

| + | Now one needs to work out $\omega_i^j$ in each specific case. Once that is done, the curvature can be computed directly. | ||
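As an illustration of the procedure, here is a worked sketch for a hypothetical two-dimensional example that does not appear in the notes: coordinates $(x^1, x^2) = (r, \varphi)$ with $h_1 = 1$ and $h_2 = f(r)$, i.e. $g = dr^2 + f(r)^2 d\varphi^2$. It assumes the structure equation $d\theta^i = \sum_j \theta^j \wedge \omega_j^i$ together with the antisymmetry $\omega_i^j = -\omega_j^i$.

```latex
\begin{align*}
\theta^1 &= dr, \qquad \theta^2 = f(r)\, d\varphi, \\
d\theta^1 &= 0, \qquad d\theta^2 = f'(r)\, dr \wedge d\varphi, \\
% check: \theta^1 \wedge \omega_1^2 = d\theta^2 and \theta^2 \wedge \omega_2^1 = 0 = d\theta^1
\omega_1^2 &= f'(r)\, d\varphi = -\omega_2^1, \qquad \omega_1^1 = \omega_2^2 = 0, \\
R^2_1 &= d\omega^2_1 + \omega^2_\gamma \wedge \omega^\gamma_1
       = f''(r)\, dr \wedge d\varphi
       = \frac{f''(r)}{f(r)}\, \theta^1 \wedge \theta^2 .
\end{align*}
```

For $f(r) = r$ (polar coordinates on the plane) this gives $R^2_1 = 0$, and for $f(r) = \sin r$ (the unit sphere) it gives $R^2_1 = -\theta^1 \wedge \theta^2$, consistent with Gaussian curvature $-f''/f$.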


math214/04-15.txt · Last modified: 2020/04/15 00:55 by pzhou