Oscar Xu's review notes

A brief review of key concepts in Math 104. The notes are organized in the order of chapters with respect to Professor Peng Zhou's lectures.

1. Introduction

$\mathbb{Z}$: integers, has subtraction and zero. $\mathbb{Z}$ is an example of a “ring”.

$\mathbb{Q}$: rational numbers ($\frac{n}{m}$ with $n, m \in \mathbb{Z}$, $m \neq 0$)

What is the root of $x^2 - 2 = 0$?

Definition of algebraic numbers: A number is called an algebraic number if it satisfies a polynomial equation

$c_n x^n + c_{n-1} x^{n-1} + \cdots + c_1 x + c_0 = 0$

where the coefficients $c_0, c_1, \ldots, c_n$ are integers, $c_n \neq 0$, and $n \geq 1$.

Rational Zeros Theorem: Suppose $c_0, c_1, \cdots, c_n$ are integers and $r$ is a rational number satisfying the polynomial equation

$c_n x^n + c_{n-1} x^{n-1} + \cdots + c_1 x + c_0 = 0$

where $n \geq 1$, $c_n \neq 0$, $c_0 \neq 0$. Let $r = \frac{c}{d}$, where $c, d$ are relatively prime integers. Then $c$ divides $c_0$ and $d$ divides $c_n$.
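
As a quick check of how the theorem answers the question about $x^2 - 2 = 0$, here is a minimal Python sketch. The helper names (`divisors`, `rational_root_candidates`, `rational_roots`) are made up for this illustration. The code lists every candidate $\frac{c}{d}$ the theorem allows and tests each one exactly.

```python
from fractions import Fraction

def divisors(n):
    """Positive divisors of a nonzero integer n."""
    n = abs(n)
    return [d for d in range(1, n + 1) if n % d == 0]

def rational_root_candidates(coeffs):
    """Candidates c/d allowed by the Rational Zeros Theorem.

    coeffs = [c_0, c_1, ..., c_n] with c_0 != 0 and c_n != 0.
    """
    c0, cn = coeffs[0], coeffs[-1]
    candidates = set()
    for c in divisors(c0):
        for d in divisors(cn):
            candidates.add(Fraction(c, d))
            candidates.add(Fraction(-c, d))
    return candidates

def evaluate(coeffs, x):
    """Evaluate c_0 + c_1 x + ... + c_n x^n exactly."""
    return sum(c * x ** k for k, c in enumerate(coeffs))

def rational_roots(coeffs):
    """All rational roots, found by testing each allowed candidate."""
    return sorted(r for r in rational_root_candidates(coeffs)
                  if evaluate(coeffs, r) == 0)

# x^2 - 2 = 0: the only candidates are +-1 and +-2, and none is a root.
print(rational_roots([-2, 0, 1]))   # []
# 2x^2 - 3x + 1 = 0 has the rational roots 1/2 and 1.
print(rational_roots([1, -3, 2]))   # [Fraction(1, 2), Fraction(1, 1)]
```

Since no candidate solves $x^2 - 2 = 0$, any real root of that equation (such as $\sqrt{2}$) is algebraic but not rational.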

Completeness Axiom: the axiom that distinguishes $\mathbb{R}$ from $\mathbb{Q}$; it guarantees that $\mathbb{R}$ has no “gaps”.

Definition of max and min: Let $S \subset \mathbb{R}$. We say an element $\alpha \in S$ is a maximum if $\forall \beta \in S, \alpha \geq \beta$. Similarly, $\alpha \in S$ is a minimum if $\forall \beta \in S$, $\alpha \leq \beta$.

Definition of upper bound, lower bound, and bounded: If a real number $M$ satisfies $s \leq M$ for all $s \in S$, then $M$ is called an upper bound of $S$ and the set $S$ is said to be bounded above.

If a real number $m$ satisfies $m \leq s$ for all $s \in S$, then $m$ is called a lower bound of $S$ and the set $S$ is said to be bounded below.

Note: upper and lower bounds are far from unique. Any number larger than an upper bound of $S$ is again an upper bound of $S$.

The set $S$ is said to be bounded if it is bounded above and bounded below.

Definition of supremum and infimum: Let $S$ be a nonempty subset of $\mathbb{R}$.

If $S$ is bounded above and $S$ has a least upper bound, denote it by $\sup S$.

If $S$ is bounded below and $S$ has a greatest lower bound, denote it by $\inf S$.

Completeness Axiom: Every nonempty subset $S$ of $\mathbb{R}$ that is bounded above has a least upper bound. In other words, $\sup S$ exists and is a real number.

Note that the completeness axiom has many equivalent forms; this one is known as the least upper bound property. As long as one form holds, all the others hold. The greatest lower bound property follows by an easy proof (apply the axiom to $-S = \{-s : s \in S\}$).

Archimedean property

If $a > 0$ and $b > 0$, then for some positive integer $n$, we have $na > b$.

Denseness of $\mathbb{Q}$

If $a, b \in \mathbb{R}$ and $a < b$, then there is a rational $r \in \mathbb{Q}$ such that $a < r < b$
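
The usual proof of denseness is constructive, and it can be sketched in Python. The function name `rational_between` is made up for this illustration. The inputs are machine numbers (which are themselves rational), so this only illustrates the construction and does not prove the statement for arbitrary reals.

```python
from fractions import Fraction
import math

def rational_between(a, b):
    """Return a rational r with a < r < b, following the standard proof.

    Step 1 (Archimedean property): pick a positive integer n with n*(b - a) > 1.
    Step 2: take m = floor(n*a) + 1, so that n*a < m <= n*a + 1 < n*b.
    """
    a, b = Fraction(a), Fraction(b)      # exact conversion, no rounding
    if not a < b:
        raise ValueError("need a < b")
    n = math.floor(1 / (b - a)) + 1      # guarantees n * (b - a) > 1
    m = math.floor(n * a) + 1            # smallest integer strictly above n*a
    return Fraction(m, n)

print(rational_between(Fraction(1, 3), Fraction(1, 2)))   # 3/7
r = rational_between(math.sqrt(2), 1.4143)
print(r, math.sqrt(2) < r < 1.4143)
```

The narrower the gap $b - a$, the larger the denominator $n$ the Archimedean step has to produce.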

Reading

The symbols $+\infty$ and $-\infty$: $\sup S = +\infty$ if $S$ is not bounded above, and $\inf S = -\infty$ if $S$ is not bounded below.

2. Sequences

Sequences are functions whose domain is the set of positive integers.

Definition of Convergence:

A sequence $(s_n)$ of real numbers is said to converge to the real number $s$ provided that

for each $\epsilon > 0$ there exists a number $N$ such that $n > N$ implies $|s_n - s| < \epsilon$.
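
To see the definition at work, take the example sequence $s_n = \frac{3n+1}{7n-4}$ with limit $\frac{3}{7}$ (the sequence is chosen here only for illustration). Since $|s_n - \frac{3}{7}| = \frac{19}{7(7n-4)}$, the choice $N = \frac{19}{49\epsilon} + \frac{4}{7}$ works. The sketch below spot-checks that choice with exact arithmetic; the names `N_for` and `check` are made up.

```python
from fractions import Fraction

LIMIT = Fraction(3, 7)

def s(n):
    """The example sequence s_n = (3n + 1) / (7n - 4)."""
    return Fraction(3 * n + 1, 7 * n - 4)

def N_for(eps):
    """An N that works for eps, derived from |s_n - 3/7| = 19 / (7(7n - 4))."""
    return Fraction(19, 49) / Fraction(eps) + Fraction(4, 7)

def check(eps, how_many=1000):
    """Spot-check |s_n - 3/7| < eps for the first `how_many` integers n > N."""
    eps = Fraction(eps)
    N = N_for(eps)
    start = int(N) + 1   # smallest integer strictly greater than N (N > 0 here)
    return all(abs(s(n) - LIMIT) < eps for n in range(start, start + how_many))

print(check(Fraction(1, 100)), check(Fraction(1, 10**6)))   # True True
```

A finite check like this is not a proof; the proof is the inequality used to derive `N_for`.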

Theorem: Convergent sequences are bounded

Theorem: If the sequence $(s_n)$ converges to $s$ and $k$ is in $\mathbb{R}$, then the sequence $(ks_n)$ converges to $ks$. That is, $\lim(ks_n)=k \cdot \lim s_n$.

Theorem: If $(s_n)$ converges to $s$ and $(t_n)$ converges to $t$, then $s_n + t_n$ converges to $s + t$. That is,

$\lim (s_n + t_n) = \lim s_n + \lim t_n$

Theorem: If $(s_n)$ converges to $s$ and $(t_n)$ converges to $t$, then $(s_n t_n)$ converges to $st$. That is,

$\lim (s_n t_n) = (\lim s_n)(\lim t_n)$

Lemma: If $(s_n)$ converges to $s$, if $s_n \neq 0$ for all $n$, and if $s \neq 0$, then $(1/s_n)$ converges to $1/s$.

Theorem: Suppose $(s_n)$ converges to $s$ and $(t_n)$ converges to $t$. If $s \neq 0$ and $s_n \neq 0$ for all $n$, then $(t_n/s_n)$ converges to $\frac{t}{s}$

Theorem: $\lim_{n \to \infty} (\frac{1}{n^p}) = 0$ for $p > 0$

$\lim_{n \to \infty} a^n = 0$ if $|a| < 1.$

$\lim (n^{1/n}) = 1.$

$\lim_{n \to \infty} (a^{1/n}) = 1$ for $a > 0$

Definition: For a sequence $(s_n)$, we write $\lim s_n = + \infty$ provided for each $M > 0$ there is a number $N$ such that $n > N$ implies $s_n > M$.

Section: Monotone sequences and Cauchy sequences

Definition: A sequence that is increasing or decreasing will be called a monotone sequence or a monotonic sequence.

Theorem: All bounded monotone sequences converge.

Definition: Let $(s_n)$ be a sequence in $\mathbb{R}$. We define

$\lim \sup s_n = \lim_{N \to \infty} \sup \{s_n : n > N\}$

$\lim \inf s_n = \lim_{N \to \infty} \inf \{s_n : n > N\}$
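
A small numerical sketch of these two definitions, using the example sequence $s_n = (-1)^n (1 + \frac{1}{n})$, which is an assumption made here for illustration. The tail sup and tail inf are taken over a finite window, which happens to be exact for this sequence because the sup and inf of each tail are attained at its first even and first odd index.

```python
def s(n):
    """Example sequence s_n = (-1)^n (1 + 1/n)."""
    return (-1) ** n * (1 + 1 / n)

def tail_sup(N, window=10_000):
    """sup{s_n : n > N}, computed over the window N < n <= N + window."""
    return max(s(n) for n in range(N + 1, N + 1 + window))

def tail_inf(N, window=10_000):
    """inf{s_n : n > N}, computed over the window N < n <= N + window."""
    return min(s(n) for n in range(N + 1, N + 1 + window))

for N in (0, 10, 100, 1000):
    print(N, tail_sup(N), tail_inf(N))
```

The tail sups decrease toward $1$ and the tail infs increase toward $-1$, so $\lim \sup s_n = 1$ and $\lim \inf s_n = -1$, while $\lim s_n$ does not exist.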

Theorem: Let $(s_n)$ be a sequence in $\mathbb{R}$.

If $\lim s_n$ is defined, then $\lim \inf s_n = \lim s_n = \lim \sup s_n$

If $\lim \inf s_n = \lim \sup s_n$, then $\lim s_n$ is defined and $\lim s_n = \lim \inf s_n = \lim \sup s_n$

Definition of Cauchy Sequence: A sequence $(s_n)$ of real numbers is called a Cauchy sequence if

for each $\epsilon > 0$ there exists a number $N$ such that $m, n > N$ implies $|s_n - s_m| < \epsilon$

Theorem: Convergent sequences are Cauchy sequences.

Lemma: Cauchy sequences are bounded.

Theorem: A sequence of real numbers is a convergent sequence iff it is a Cauchy sequence.

Definition: Suppose $(s_n)_{n \in \mathbb{N}}$ is a sequence. A subsequence of this sequence is a sequence of the form $(t_k)_{k \in \mathbb{N}}$ where for each $k$ there is a positive integer $n_k$ such that $n_1 < n_2 < \cdots < n_k < n_{k+1} < \cdots$ and $t_k = s_{n_k}$

Lemma: If $(s_n)$ is convergent with limit in $\mathbb{R}$, then any subsequence converges to the same point.

Lemma: If $\alpha = \lim_n s_n$ exists in $\mathbb{R}$, then there exists a subsequence that is monotone.

Theorem: Let $(s_n)$ be a sequence. If $t \in \mathbb{R}$, then there is a subsequence of $(s_n)$ converging to $t$ if and only if the set $\{n \in \mathbb{N}: |s_n - t| < \epsilon\}$ is infinite for all $\epsilon > 0$.

Theorem: If the sequence $(s_n)$ converges, then every subsequence converges to the same limit.

Theorem: Every sequence $(s_n)$ has a monotonic subsequence.

Bolzano-Weierstrass Theorem: Every bounded sequence has a convergent subsequence.

Theorem: Let $(s_n)$ be any sequence. There exists a monotonic subsequence whose limit is $\lim \sup s_n$ and there exists a monotonic subsequence whose limit is $\lim \inf s_n$

Theorem: Let $(s_n)$ be any sequence in $\mathbb{R}$, and let $S$ denote the set of subsequential limits of $(s_n)$. S is nonempty. $\sup S = \lim \sup s_n$ and $\inf S = \lim \inf s_n$. $\lim s_n$ exists if and only if $S$ has exactly one element, namely $\lim s_n$

Section: lim sup's and lim inf's

Theorem: Let $(s_n)$ be any sequence of nonzero real numbers. Then we have

$\lim \inf |\frac{s_{n+1}}{s_n}| \leq \lim \inf |s_n|^{1/n} \leq \lim \sup |s_n|^{1/n} \leq \lim \sup |\frac{s_{n+1}}{s_n}|$

Corollary: If $\lim |\frac{s_{n+1}}{s_n}|$ exists and equals $L$, then $\lim |s_n|^{1/n}$ exists and equals $L$.
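
A numerical sketch of the ratio and root quantities, assuming the example sequence $s_n = n \cdot 2^n$ (chosen here for illustration). The ratio $|s_{n+1}/s_n| = 2(n+1)/n$ tends to $2$, so $|s_n|^{1/n} = 2 n^{1/n}$ should tend to $2$ as well, only more slowly.

```python
import math

def s(n):
    """Example sequence s_n = n * 2^n, kept as an exact Python integer."""
    return n * 2 ** n

def ratio(n):
    """|s_{n+1} / s_n| = 2 (n + 1) / n."""
    return s(n + 1) / s(n)

def root(n):
    """|s_n|^(1/n), computed through logarithms to avoid overflow."""
    return math.exp(math.log(s(n)) / n)

for n in (10, 100, 1000):
    print(n, ratio(n), root(n))
```

Both columns approach $2$; the root column lags behind because $n^{1/n} \to 1$ slowly.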

After Midterm 1

Definition of metric space:

A metric space is a set S together with a distance function $d : S \times S \to \mathbb{R}$, such that

$d(x, y) \geq 0$, and $d(x, y) = 0$ is equivalent to $x = y$

$d(x, y) = d(y, x)$

$d(x, y) + d(y, z) \geq d(x, z)$

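Cauchy sequence in a metric space $(S, d)$

Def: A sequence $(s_n)$ in $S$ is a Cauchy sequence if for each $\epsilon > 0$ there exists a number $N$ such that $m, n > N$ implies $d(s_m, s_n) < \epsilon$

Completeness: A metric space $(S, d)$ is complete if every Cauchy sequence in $S$ converges to some element of $S$.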

Bolzano-Weierstrass theorem: Every bounded sequence in $\mathbb{R}^k$ has a convergent subsequence.

Definition of interior and open: Let $(S, d)$ be a metric space. Let $E$ be a subset of $S$. An element $s_0 \in E$ is interior to $E$ if for some $r > 0$ we have

$\{s \in S: d(s, s_0) < r\} \subseteq E$

We write $E^\circ$ for the set of points in $E$ that are interior to $E$. The set $E$ is open in $S$ if every point in $E$ is interior to $E$.

Discussion: $S$ is open in $S$.

The empty set $\emptyset$ is open in $S$.

The union of any collection of open sets is open.

The intersection of finitely many open sets is again an open set.

Definition: $E \subset S$ is a closed subset of $S$ if the complement $E^C = S \setminus E$ is open.

Propositions: $S, \emptyset$ are closed.

The intersection of any collection of closed sets is closed.

The set $E$ is closed if and only if $E = E^-$, where $E^-$ denotes the closure of $E$.

The set $E$ is closed if and only if it contains the limit of every convergent sequence of points in $E$.

An element is in $E^-$ if and only if it is the limit of some sequence of points in $E$.

A point is in the boundary of $E$ if and only if it belongs to the closure of both $E$ and its complement.

A series is an infinite sum $\sum_{n=1}^\infty a_n$

Partial sum $s_n$ = $\sum_{i=1}^n a_i$

A series converges to $\alpha$ if the corresponding sequence of partial sums $(s_n)$ converges to $\alpha$.

Cauchy condition for series convergence: sufficient and necessary condition

$\forall \epsilon > 0$ there exists an $N > 0$ such that $\forall m > n > N$, $|\sum_{i=n+1}^m a_i| < \epsilon$

Corollary: If a series $\sum a_n$ converges, then $\lim a_n = 0$
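
The converse of this corollary fails, and a short numerical sketch makes that visible. The two series below ($\sum \frac{1}{n}$ and $\sum \frac{1}{n^2}$) are standard examples chosen here for illustration: both have terms tending to $0$, but only the second has bounded partial sums.

```python
def partial_sum(term, n):
    """s_n = a_1 + a_2 + ... + a_n."""
    return sum(term(k) for k in range(1, n + 1))

harmonic = lambda k: 1 / k        # a_k -> 0, yet the series diverges
p_series = lambda k: 1 / k ** 2   # a_k -> 0 and the series converges

for n in (10, 1_000, 100_000):
    print(n, partial_sum(harmonic, n), partial_sum(p_series, n))
```

The first column keeps growing (roughly like $\ln n$), while the second stays below $\frac{\pi^2}{6}$.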

Comparison test: Let $\sum a_n$ be a series with $a_n \geq 0$ for all $n$.

If $\sum a_n$ converges and $|b_n| \leq a_n$ for all $n$, then $\sum b_n$ converges.

If $\sum a_n = \infty$ and $b_n \geq a_n$ for all $n$, then $\sum b_n = \infty$.

Corollary: Absolutely convergent series are convergent.

Ratio test:

A series $\sum a_n$ of nonzero terms

converges absolutely if $\lim \sup |\frac{a_{n+1}}{a_n}| < 1$

diverges if $\lim \inf |\frac{a_{n+1}}{a_n}| > 1$

otherwise $\lim \inf |\frac{a_{n+1}}{a_n}| \leq 1 \leq \lim \sup |a_{n+1}/a_n|$ and the test gives no information.

Root test:

Let $\alpha = \lim \sup |a_n|^{\frac{1}{n}}$ Then the series $\sum a_n$

converges absolutely if $\alpha < 1$

diverges if $\alpha > 1$

Alternating series test: Let $a_1 \geq a_2 \geq \cdots$ be a monotone decreasing sequence with $a_n \geq 0$, and assume $\lim a_n = 0$. Then $\sum_{n=1}^\infty (-1)^{n+1}a_n = a_1 - a_2 + a_3 - a_4 + \cdots$ converges. The partial sums $s_n$ satisfy $|s - s_n| \leq a_n$ for all $n$, where $s$ is the sum of the series.
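
The error bound can be checked numerically on the alternating harmonic series $1 - \frac{1}{2} + \frac{1}{3} - \cdots$, whose sum is known to be $\ln 2$. The example and the generator name are choices made for this sketch.

```python
import math

def alternating_harmonic_partial_sums(n_max):
    """Yield (n, s_n) for s_n = 1 - 1/2 + 1/3 - ... + (-1)^(n+1)/n."""
    total = 0.0
    for n in range(1, n_max + 1):
        total += (-1) ** (n + 1) / n
        yield n, total

s = math.log(2)   # known sum of the alternating harmonic series
ok = all(abs(s - s_n) <= 1 / n
         for n, s_n in alternating_harmonic_partial_sums(1000))
print(ok)   # True
```

Here $a_n = \frac{1}{n}$, so the bound says the $n$-th partial sum is within $\frac{1}{n}$ of $\ln 2$.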

Integral test: Suppose the terms $a_n$ in $\sum_n a_n$ are non-negative and $a_n = f(n)$ for a decreasing function $f$ on $[1, \infty)$. Let $\alpha = \lim_{n \to \infty} \int_1^n f(x) dx$.

If $\alpha = \infty$, the series diverges. If $\alpha < \infty$, the series converges.
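
The test rests on the sandwich $\int_1^{n+1} f(x) dx \leq s_n \leq a_1 + \int_1^n f(x) dx$ for decreasing non-negative $f$. Below is a minimal sketch for $f(x) = x^{-p}$, where the integral has a closed form. The function names and the choice of $p$ values are assumptions made for illustration.

```python
import math

def integral_1_to_n(p, n):
    """Closed form of the integral of x^(-p) over [1, n]."""
    if p == 1:
        return math.log(n)
    return (n ** (1 - p) - 1) / (1 - p)

def partial_sum(p, n):
    """s_n = sum of 1/k^p for k = 1..n."""
    return sum(1 / k ** p for k in range(1, n + 1))

for p in (1, 2):
    for n in (10, 1000, 100_000):
        lower = integral_1_to_n(p, n + 1)      # integral over [1, n + 1]
        upper = 1 + integral_1_to_n(p, n)      # a_1 + integral over [1, n]
        print(p, n, lower, partial_sum(p, n), upper)
```

For $p = 1$ both bounds grow without limit, so the series diverges; for $p = 2$ the upper bound stays below $2$, so the series converges.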

Functions

Definition of function: A function from set $A$ to set $B$ is an assignment to each element $\alpha \in A$ of an element $f(\alpha) \in B$.

Injective, Surjective, Bijective

Definition of Preimage: Given $f: A \to B$ and a subset $E \subset B$, the set $f^{-1}(E) = \{\alpha \in A \mid f(\alpha) \in E\}$ is called the preimage of $E$ under $f$.
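
For a finite domain the preimage can be computed directly from the definition. The sketch below uses a made-up helper `preimage` and the example $f(x) = x^2$ on $A = \{-2, -1, 0, 1, 2\}$.

```python
def preimage(f, A, E):
    """f^{-1}(E) = {a in A : f(a) in E}, for a finite domain A."""
    return {a for a in A if f(a) in E}

A = {-2, -1, 0, 1, 2}
square = lambda x: x * x

print(sorted(preimage(square, A, {1, 4})))   # [-2, -1, 1, 2]
print(preimage(square, A, {3}))              # set()
```

Note that $f^{-1}(E)$ is defined even when $f$ has no inverse function, and it can be empty.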

Definition of limit of a function: Let $(X,d_X), (Y,d_Y)$ be metric spaces, $E\subset X$, and $f:E \to Y$. Suppose $p\in E'$ (the set of limit points of $E$). We write $f(x) \to q$ (with $q \in Y$) as $x \to p$, or $\lim_{x\to p} f(x) = q$, if $\forall \epsilon >0,\, \exists \delta >0$ such that $\forall x \in E,\, 0<d_X(x,p)<\delta \implies d_Y(f(x),q)<\epsilon$.

Theorem: $\lim_{x\to p} f(x) = q$ if and only if $\lim_{n\to\infty} f(p_n) = q$ for every sequence $(p_n)$ in $E$ such that $p_n \neq p$ and $\lim_{n\to\infty} p_n = p$.

Theorem: For $f,g: E \to \mathbb{R}$, suppose $p\in E'$ and $\lim_{x\to p} f(x) = A$, $\lim_{x\to p} g(x) = B$. Then

$\lim_{x\to p} (f(x) + g(x)) = A+B$

$\lim_{x\to p} f(x)g(x) = AB$

$\lim_{x\to p} \frac{f(x)}{g(x)} = \frac{A}{B}$ if $B\neq 0$ and $g(x)\neq 0$ $\forall x\in E$

$\forall c\in \mathbb{R}$, $\lim_{x\to p} c \cdot f(x)=cA$

Definition of pointwise continuity: Let $(X,d_X), (Y,d_Y)$ be metric spaces, $E\subset X$, $f:E \to Y$, $p \in E$, $q=f(p)$. We say $f$ is continuous at $p$ if $\forall \epsilon>0, \exists \delta>0$ such that $\forall x\in E$, $d_X(x,p) <\delta \implies d_Y(f(x),q)<\epsilon$.

Theorem: Let $f:X \to \mathbb{R}^n$ with $f(x) = (f_1(x), f_2(x), \ldots, f_n(x))$. Then $f$ is continuous if and only if each $f_i$ is continuous.

Definition of continuous maps:

$f$ is continuous if and only if $\forall p\in X$, we have $\forall \epsilon >0, \exists \delta >0$ such that $f(B_{\delta}(p)) \subset B_{\epsilon}(f(p))$

$f$ is continuous if and only if $\forall V\subset Y$ open, we have $f^{-1}(V)$ is open

$f$ is continuous if and only if $\forall x_n \to x$ in $X$, we have $f(x_n) \rightarrow f(x)$ in $Y$

Theorem: If $f$ is a continuous map from a compact metric space $X$ to a metric space $Y$, then $f(X) \subset Y$ is compact.

Midterm 2 T/F question here:

Let $f: X \to Y$ be a continuous map between metric spaces. Let $A \subset X$ and $B \subset Y$.

If $A$ is open, then $f(A)$ is open. False

If $A$ is closed, then $f(A)$ is closed. False

If $A$ is bounded, then $f(A)$ is bounded. False

If $A$ is connected, then $f(A)$ is connected. True

If $A$ is compact, then $f(A)$ is compact. True

If $B$ is open, then $f^{-1}(B)$ is open. True

If $B$ is closed, then $f^{-1}(B)$ is closed. True

If $B$ is bounded, then $f^{-1}(B)$ is bounded. False

If $B$ is connected, then $f^{-1}(B)$ is connected. False

If $B$ is compact, then $f^{-1}(B)$ is compact. False

Definition of uniformly continuous function: Let $f: X \to Y$. Suppose for all $\epsilon > 0$ there exists $\sigma > 0$ such that $\forall p, q \in X$ with $d_X(p, q) < \sigma$, we have $d_Y(f(p), f(q)) < \epsilon$. Then $f$ is uniformly continuous.

Theorem: Suppose $f:X \to Y$ is a continuous function between metric spaces. If $X$ is compact, then $f$ is uniformly continuous.

Theorem: If $f:X\to Y$ is uniformly continuous and $S\subset X$ is a subset with the induced metric, then the restriction $f|_S:S\to Y$ is uniformly continuous.

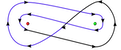

Definition of connected: Let $X$ be a metric space. We say $X$ is connected if the only subsets $S\subset X$ that are both open and closed are $X$ and $\emptyset$.

Lemma: $E \subset X$ is connected if and only if $E$ cannot be written as $A\cup B$ with $A, B$ nonempty, $A^- \cap B = \emptyset$ and $A\cap B^- = \emptyset$ (closure taken with respect to the ambient space $X$).

Definition of discontinuity: $f:X\to Y$ is discontinuous at $x\in X$ if and only if $x$ is a limit point of $X$ and $\lim_{q\to x} f(q)$ either does not exist or $\neq f(x)$.

Definition of monotonic functions: A function $f:(a,b)\to \mathbb{R}$ is monotone increasing if $\forall x>y$, we have $f(x) \geq f(y)$.

Theorem: If $f$ is monotone, then $f(x)$ only has discontinuity of the first kind/simple discontinuity.

Theorem: If $f$ is monotone, then there are at most countably many discontinuities.

Definition of pointwise convergence of sequences of sequences:

Definition of uniform convergence of sequences of sequences:

Definition of pointwise convergence of sequence of functions:

Definition of uniform convergence:

A sequence of functions $f_n: X\to Y$ is said to converge uniformly to $f:X \to Y$ if $\forall \epsilon >0$ there exists $N>0$ such that $\forall n>N, \forall x\in X$, we have $d_Y(f_n(x), f(x)) <\epsilon$.

Theorem: Suppose $f_n: X\to \mathbb{R}$ satisfies that $\forall \epsilon >0,\exists N>0$ such that $\forall n, m > N, \forall x\in X$, $\lvert f_n(x) - f_m(x) \rvert < \epsilon$. Then $f_n$ converges uniformly.

Theorem: Suppose $f_n \to f$ uniformly on a set $E$ in a metric space. Let $x$ be a limit point of $E$, and suppose that $\lim_{t\to x} f_n(t) = A_n$. Then $\{ A_n\}$ converges and $\lim_{t\to x} f(t) = \lim_{n\to\infty} A_n$. In conclusion, $\lim_{t\to x} \lim_{n\to\infty} f_n(t) = \lim_{n\to\infty} \lim_{t\to x} f_n(t)$.
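
A standard example separating pointwise from uniform convergence is $f_n(x) = x^n$, which tends to $0$ at every point of $[0, 1)$. The sketch below estimates $\sup |f_n - 0|$ on a grid; the helper `sup_on_grid` is made up, and a grid maximum is only a lower bound for the true supremum.

```python
def sup_on_grid(f, a, b, points=10_001):
    """Approximate sup of |f| over [a, b] by sampling an evenly spaced grid."""
    step = (b - a) / (points - 1)
    return max(abs(f(a + i * step)) for i in range(points))

for n in (5, 20, 80):
    f_n = lambda x, n=n: x ** n
    # Left column: sup over [0, 0.5]. Right column: lower bound for sup over [0, 1).
    print(n, sup_on_grid(f_n, 0.0, 0.5), sup_on_grid(f_n, 0.0, 0.9999))
```

On $[0, 0.5]$ the sup is $0.5^n \to 0$, so the convergence is uniform there. On $[0, 1)$ the sup stays close to $1$ for every $n$, so the convergence is not uniform, which matches the failure of the limit interchange at $x = 1$: $\lim_{t \to 1} f_n(t) = 1$ for each $n$, while the pointwise limit is $0$.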

After Midterm 2

Definition of derivatives: Let $f: [a, b] \to \mathbb{R}$ be a real valued function. Define $\forall x \in [a, b]$

$f'(x) = \lim_{t \to x} \frac{f(t) - f(x)}{t - x}$

This limit may not exist for all points. If $f'(x)$ exists, we say $f$ is differentiable at $x$.
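
A numerical sketch of the difference quotient, with two example functions chosen for illustration: $f(x) = x^2$ at $x = 3$, where the limit exists, and $f(x) = |x|$ at $x = 0$, where the one-sided quotients disagree.

```python
def difference_quotient(f, x, t):
    """(f(t) - f(x)) / (t - x), the quantity whose limit as t -> x is f'(x)."""
    return (f(t) - f(x)) / (t - x)

square = lambda x: x * x
for h in (1e-1, 1e-3, 1e-5):
    print(h, difference_quotient(square, 3.0, 3.0 + h))   # approaches 6

# For |x| at 0 the quotient is 1 from the right and -1 from the left,
# so the limit does not exist and |x| is not differentiable at 0.
print(difference_quotient(abs, 0.0, 1e-6), difference_quotient(abs, 0.0, -1e-6))
```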

Proposition: If $f:[a, b] \to \mathbb{R}$ is differentiable at $x_0 \in [a, b]$, then $f$ is continuous at $x_0$, that is, $\lim_{x \to x_0} f(x) = f(x_0)$.

Theorem: Let $f, g: [a, b] \to \mathbb{R}$. Assume that $f, g$ are differentiable at a point $x_0 \in [a, b]$. Then

$\forall c \in \mathbb{R}$, $(c \cdot f)'(x_0) = c \cdot f'(x_0)$

$(f + g)'(x_0) = f'(x_0) + g'(x_0)$

$(fg)'(x_0) = f'(x_0) \cdot g(x_0) + f(x_0) \cdot g'(x_0)$

if $g(x_0) \neq 0$, then $(f/g)'(x_0) = \frac{f'(x_0) g(x_0) - f(x_0) g'(x_0)}{g^2(x_0)}$

Mean value theorem: Let $f: [a, b] \to \mathbb{R}$. We say $f$ has a local maximum at a point $p \in [a, b]$ if there exists $\delta > 0$ such that $\forall x \in [a, b] \cap B_\delta(p)$, we have $f(x) \leq f(p)$.

Proposition: Let $f: [a, b] \to \mathbb{R}$ If $f$ has local max at $p \in (a, b)$, and if $f'(p)$ exists, then $f'(p)=0$.

Rolle theorem: Suppose $f: [a, b] \to \mathbb{R}$ is a continuous function and $f$ is differentiable in $(a, b)$. If $f(a) = f(b)$, then there is some $c \in (a, b)$ such that $f'(c) = 0$.

Generalized mean value theorem: Let $f, g: [a, b] \to \mathbb{R}$ be continuous functions, differentiable on $(a, b)$. Then there exists $c \in (a, b)$, such that

$[f(a) - f(b)] \cdot g'(c) = [g(a) - g(b)] \cdot f'(c)$

Theorem: Let $f: [a, b] \to \mathbb{R}$ be continuous, and differentiable over $(a, b)$. Then there exists $c \in (a, b)$, such that

$[f(b) - f(a)] = (b - a) \cdot f'(c)$
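
The theorem only asserts that such a $c$ exists, but for a concrete function one can look for it numerically. The sketch below assumes the example $f(x) = x^3$ on $[0, 2]$ and uses a plain bisection helper (named `bisect` here, written for this illustration) to solve $f'(c) = \frac{f(b) - f(a)}{b - a}$.

```python
def bisect(g, lo, hi, tol=1e-12):
    """Find a zero of g in [lo, hi], assuming g(lo) and g(hi) have opposite signs."""
    g_lo = g(lo)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        g_mid = g(mid)
        if (g_mid > 0) == (g_lo > 0):
            lo, g_lo = mid, g_mid   # zero lies in the upper half
        else:
            hi = mid                # zero lies in the lower half
    return (lo + hi) / 2

a, b = 0.0, 2.0
f = lambda x: x ** 3
f_prime = lambda x: 3 * x ** 2
slope = (f(b) - f(a)) / (b - a)                    # = 4
c = bisect(lambda x: f_prime(x) - slope, a, b)     # solves f'(c) = slope
print(c)                                           # close to 2 / sqrt(3)
```

Here $3c^2 = 4$ gives $c = \frac{2}{\sqrt{3}} \approx 1.1547$, which indeed lies in $(0, 2)$.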

Corollary: Suppose $f:[a, b] \to \mathbb{R}$ is continuous, $f'(x)$ exists for $x \in (a, b)$, and $|f'(x)| \leq M$ for some constant $M$. Then $f$ is uniformly continuous.

Corollary: Let $f:[a, b] \to \mathbb{R}$ continuous, and differentiable over $(a, b)$. If $f'(x) \geq 0 $ for all $x \in (a, b)$, then $f$ is monotone increasing.

If $f'(x) > 0$ for all $x \in (a, b)$, then $f$ is strictly increasing.

Theorem: Assume $f, g: (a, b) \to \mathbb{R}$ are differentiable and $g'(x) \neq 0$ over $(a, b)$. If either of the following conditions is true

(1) $\lim_{x \to a}f(x) = 0, \lim_{x \to a}g(x) = 0$

(2) $\lim_{x \to a}g(x) = \infty$

and if $\lim_{x \to a} \frac{f'(x)}{g'(x)} = A \in \mathbb{R} \cup \{\infty, -\infty\}$,

then $\lim_{x \to a} \frac{f(x)}{g(x)} = A$.
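
A numerical sketch of case (1), assuming the example $f(x) = 1 - \cos x$ and $g(x) = x^2$ on $(0, 1)$ with $a = 0$. Both functions tend to $0$, $g'(x) = 2x \neq 0$ on $(0, 1)$, and $\frac{f'(x)}{g'(x)} = \frac{\sin x}{2x} \to \frac{1}{2}$.

```python
import math

f = lambda x: 1 - math.cos(x)       # f(x) -> 0 as x -> 0+
g = lambda x: x * x                 # g(x) -> 0 as x -> 0+
f_prime = lambda x: math.sin(x)
g_prime = lambda x: 2 * x           # nonzero on (0, 1)

for x in (1e-1, 1e-2, 1e-3):
    # Both ratios approach 1/2 as x -> 0+.
    print(x, f(x) / g(x), f_prime(x) / g_prime(x))
```

Much smaller values of $x$ are avoided on purpose: computing $1 - \cos x$ in floating point loses accuracy when $x$ is tiny.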

Higher derivatives: If $f'(x)$ is differentiable at $x_0$, then we define $f''(x_0) = (f')'(x_0)$. Similarly, if the $(n - 1)$-th derivative $f^{(n-1)}$ exists and is differentiable at $x_0$, we define the $n$-th derivative $f^{(n)}(x_0) = (f^{(n-1)})'(x_0)$.

Definition of smooth: $f(x)$ is a smooth function on $(a, b)$ if $\forall x \in (a, b)$, derivatives of every order exist.

Taylor theorem: Suppose $f$ is a real function on $[a,b]$, $n$ is a positive integer, $f^{(n-1)}$ is continuous on $[a,b]$, $f^{(n)}(t)$ exists for every $t\isin (a,b)$. Let $\alpha, \beta$ be distinct points of $[a,b]$, and define $P(t) = \sum_{k=0}^{n-1} \frac{f^{(k)}(\alpha)}{k!} (t-\alpha)^k$. Then there exists a point $x$ between $\alpha$ and $\beta$ such that $f(\beta) = P(\beta) + \frac{f^{(n)}(x)}{n!}(\beta - \alpha)^n$.
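
A numerical sketch of the theorem, assuming $f = \exp$, $\alpha = 0$, $\beta = 1$, $n = 5$ (choices made here for illustration). Since $f^{(n)}(x) = e^x$, the theorem says the remainder $f(\beta) - P(\beta)$ equals $\frac{e^x}{n!}$ for some $x \in (0, 1)$, so it must lie between $\frac{1}{n!}$ and $\frac{e}{n!}$.

```python
import math

def taylor_poly_exp(t, n, alpha=0.0):
    """P(t) = sum_{k=0}^{n-1} f^(k)(alpha)/k! (t - alpha)^k for f = exp."""
    return sum(math.exp(alpha) / math.factorial(k) * (t - alpha) ** k
               for k in range(n))

alpha, beta, n = 0.0, 1.0, 5
remainder = math.exp(beta) - taylor_poly_exp(beta, n, alpha)

lower = 1 / math.factorial(n)        # e^0 / n!
upper = math.e / math.factorial(n)   # e^1 / n!
print(lower, remainder, upper)

# Solve e^x / n! = remainder for the intermediate point x.
x = math.log(remainder * math.factorial(n))
print(x)   # lies strictly between alpha = 0 and beta = 1
```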

Taylor series for a smooth function: If $f$ is a smooth function on $(a,b)$, and $\alpha \in (a,b)$, we can form the Taylor Series:

$P_{\alpha}(x)=\sum_{k=0}^{\infty} \frac{f^{(k)}(\alpha)}{k!} (x-\alpha)^k$.

Definition of Nth order Taylor expansion:

$P_{x_o,N}(x) = \sum_{n=0}^{N} f^{(n)}(x_o) \cdot \frac{1}{n!} (x-x_o)^n$.

Definition of Partition: Let $[a,b]\subset \mathbb{R}$ be a closed interval. A partition $P$ of $[a,b]$ is a finite set of numbers in $[a,b]$: $a=x_0 \leq x_1 \leq \ldots \leq x_n=b$. Define $\Delta x_i=x_i-x_{i-1}$.