Table of Contents

Ryota Inagaki's Math 104 Webpage

Here you will find review material for this semester's final.

The topics of the class (and of this review site) are organized as follows:

1. Sets and sequences (Ch 1-10 in Ross)

2. Subsequences, Limsup, Liminf (Ch 10-12 in Ross)

3. Topology (Ch 13 in Ross, Chapter 2 in Rudin)

4. Series (Ch 14 in Ross)

5. Continuity, Uniform Continuity, Uniform Convergence (Chapters 4 and 7 in Rudin)

6. Derivatives (Chapter 5 in Rudin)

7. Integration (Chapter 6 in Rudin)

Click here to access my webpage of exercises

Preface

This website exists so that (a) I can review and organize my knowledge of basic real analysis and (b) other students in the class can use it to rehash the material.

In order to review the material more kinesthetically, I have decided to type out this project myself on the course webpage rather than cobbling together photos and notes. I have also given my takes on 51 important questions that come to mind on my webpage of exercises. These are meant to partially compensate for the more theoretical nature of the lectures and readings.

Please enjoy.

Section 1: Sets and Sequences

Supremums and Infimums

When you were about 5 years old, you probably learned what the maximum or minimum element of a set is. Such concepts seem clear cut; however, things get confusing once we introduce certain open sets (e.g. (-5, 5)).

Trick question: What is the maximum element of (-5, 5)? Answer: there is no maximum. Likewise, there's no minimum.

However, (-5, 5) does have a supremum, defined as the least upper bound of the set, namely 5. Likewise, (-5, 5) has an infimum, defined as the greatest lower bound of the set, namely -5.

More formally (writing sup for supremum and inf for infimum): $$\sup S = x \iff (\forall s \in S, s \leq x) \land (\forall y < x, \exists s_b \in S: y < s_b \leq x)$$ $$\inf S = x \iff (\forall s \in S, s \geq x) \land (\forall y > x, \exists s_b \in S: y > s_b \geq x)$$

E.g.

$$\sup \{x \cdot y: x, y \in \{ 1, 2, 3, 4\}\} = 16$$ since $\{x \cdot y: x, y \in \{ 1, 2, 3, 4\}\} = \{1, 2, 3, 4, 6, 8, 9, 12, 16\}$. All elements in the set are less than or equal to 16, so 16 is an upper bound. Moreover, for any $z < 16$ the set contains an element greater than $z$, namely 16 itself, so no number smaller than 16 is an upper bound. This makes 16 the least upper bound of the set.

$$\inf \{x \cdot y: x, y \in \{ 1, 2, 3, 4\}\} = 1$$. All elements in the set are greater than or equal to 1, so 1 is a lower bound. Moreover, for any $z > 1$ the set contains an element less than $z$, namely 1 itself, so no number larger than 1 is a lower bound. This makes 1 the greatest lower bound of the set.
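For a finite set, the supremum and infimum are simply the maximum and the minimum, so the product-set example above can be double-checked mechanically. Here is a minimal Python sketch (the variable names are my own):

```python
# Build {x * y : x, y in {1, 2, 3, 4}} as a Python set (duplicates collapse).
base = {1, 2, 3, 4}
products = {x * y for x in base for y in base}

# For a finite nonempty set, sup = max and inf = min.
print(sorted(products))              # [1, 2, 3, 4, 6, 8, 9, 12, 16]
print(max(products), min(products))  # 16 1
```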

E.g. Let $$S = {(-12, 1) \cup (2, 14)}$$

$$\sup{S} = 14$$ since all elements in the set are less than 14, so 14 is an upper bound. Also, for any number $x < 14$ there exists a number in the set that is greater than $x$ and less than 14 (for instance $\frac{\max(x, 2) + 14}{2}$). Hence 14 is the least upper bound of the set.

$$\inf{S} = -12$$. All elements in the set are greater than -12, so -12 is a lower bound. For any $z > -12$ the set contains an element less than $z$ (for instance $\frac{-12 + \min(z, 1)}{2}$), so no number larger than -12 is a lower bound. This makes -12 the greatest lower bound of the set.

Remarks I would like to make: $\inf S = - \infty$ iff S is not bounded from below and $\sup S = \infty$ iff S is not bounded from above.

Useful Results and Miscellaneous Facts from the First Week of Class:

- From Homework 1: $\sup \{s + u: s \in S, u \in U \} = \sup S + \sup U$.

- From homework: $\inf (S + T) = \inf S + \inf T$

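- Remark: it is not necessarily true that $\inf (S - T) = \inf S - \inf T$, where $S - T = \{s - t: s \in S, t \in T\}$. In fact, for nonempty bounded S and T, $\inf (S - T) = \inf S - \sup T$.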

- Density of $\mathbb{Q}$ (a consequence of the Archimedean property): given $a, b \in \mathbb{R}$ with $a \neq b$, there exists $q \in \mathbb{Q}$ strictly between a and b.

- Rational Root (Rational Zeros) Theorem: given a polynomial $P(x) = c_nx^n + c_{n-1}x^{n-1} + c_{n-2}x^{n-2} + \cdots + c_1x + c_0$ with integer coefficients and $c_n \neq 0$, any rational zero of $P(x)$ can be written in lowest terms as $\frac{q_1}{q_2}$, where $q_1$ is an integer factor of $c_0$ and $q_2$ is an integer factor of $c_n$.
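Read as a procedure, the theorem reduces the search for rational zeros to a finite list of candidates $\pm\frac{q_1}{q_2}$. Below is a minimal Python sketch. It assumes $c_n \neq 0$ and $c_0 \neq 0$ (if $c_0 = 0$, factor out x first). `rational_roots` and `divisors` are hypothetical helper names. Exact `Fraction` arithmetic means the test $P(r) = 0$ involves no rounding.

```python
from fractions import Fraction

def divisors(m):
    """Positive divisors of a nonzero integer m."""
    m = abs(m)
    return [d for d in range(1, m + 1) if m % d == 0]

def rational_roots(coeffs):
    """coeffs = [c_n, ..., c_1, c_0], integers with c_n != 0 and c_0 != 0.

    Tests every candidate +/- q1/q2 with q1 | c_0 and q2 | c_n and
    returns the ones that are actually zeros of P, in increasing order.
    """
    c_n, c_0 = coeffs[0], coeffs[-1]
    candidates = {Fraction(sign * q1, q2)
                  for q1 in divisors(c_0)
                  for q2 in divisors(c_n)
                  for sign in (1, -1)}

    def P(x):
        # Horner's rule with exact rational arithmetic
        total = Fraction(0)
        for c in coeffs:
            total = total * x + c
        return total

    return sorted(r for r in candidates if P(r) == 0)

# P(x) = 2x^2 - 3x + 1 = (2x - 1)(x - 1)
print(rational_roots([2, -3, 1]))  # [Fraction(1, 2), Fraction(1, 1)]
```

For example, $x^2 - 2$ (coefficients `[1, 0, -2]`) has candidates $\pm 1, \pm 2$ only, none of which is a zero, which is another way to see that $\sqrt{2}$ is irrational.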

Sequences

A sequence is an ordered list of numbers (terms) $x_1, x_2, x_3, \ldots$. A sequence $(x_n)$ in a metric space $M$ converges to a limit $l$ iff $$\forall \epsilon > 0, \exists N > 0: \forall n > N, d(x_n, l) < \epsilon$$. As a sidenote, $d$ is the metric of the metric space $M$. (We'll go over metric spaces in Section 3; when dealing with $\mathbb{R}^n$, $d$ is the usual Euclidean distance function on $\mathbb{R}^n$.)

Furthermore, a sequence $(x_n)$ is defined to be Cauchy iff $\forall \epsilon >0, \exists N > 0: \forall n, m > N, d(x_n, x_m) < \epsilon$. An important property to note is that a sequence of real numbers is Cauchy iff it converges to a real number; this is the completeness of $\mathbb{R}$. However, in a general metric space not all Cauchy sequences are convergent. For example, in $\mathbb{Q}$ a sequence of rationals approaching $\sqrt{2}$ is Cauchy but has no limit in $\mathbb{Q}$.

Definition: $(\lim f_n = \infty) \iff (\forall M > 0: \exists N > 0: \forall n > N, f_n > M)$.

Definition: $(\lim f_n = -\infty) \iff (\forall M < 0: \exists N > 0: \forall n > N, f_n < M)$.

E.g. $$x_n = \frac{4n + n^2}{\sqrt{n^4 + 1}}$$ converges to 1. Dividing the numerator and the denominator by $n^2$, we can take the limit $$ \lim_{n \to \infty} x_n = \lim_{n \to \infty} \frac{\frac{4}{n} + 1}{\sqrt{1 + \frac{1}{n^4}}} = \frac{0 + 1}{\sqrt{1 + 0}} = 1$$.
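Here is a quick numerical sanity check of this limit. It is not a proof, because it only samples finitely many n. The gap $|x_n - 1|$ should shrink roughly like $\frac{4}{n}$. A Python sketch:

```python
import math

def x(n):
    # x_n = (4n + n^2) / sqrt(n^4 + 1)
    return (4 * n + n**2) / math.sqrt(n**4 + 1)

for n in [10, 100, 1000, 10**6]:
    print(n, x(n), abs(x(n) - 1))  # the last column shrinks toward 0
```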

E.g. $$x_n = n$$ diverges: as n increases, $x_n$ grows without bound (to $+\infty$), so $x_n$ does not approach any real number.

E.g. $$x_n = - \sum_{i=1}^{n}{\frac{1}{i}}$$ diverges to $- \infty$. The partial sums $\sum_{i=1}^{n}{\frac{1}{i}}$ of the harmonic series increase without bound, so $x_n$ decreases without bound, i.e. it diverges to negative infinity.

E.g. $$x_n = (-1)^n$$ diverges since $x_n$ just oscillates between 1 and -1, and therefore does not approach any real number.

Useful Results (given both $f_n$ and $g_n$ converge)

- Addition Rule $\lim (f_n + g_n) = \lim f_n + \lim g_n$

- Multiplication Rule: $\lim (f_ng_n) = \lim f_n \cdot \lim g_n$ (this extends to products of finitely many convergent sequences).

- Division Rule: $\lim \frac{f_n}{g_n} = \frac{\lim f_n}{\lim g_n}$, provided $g_n \neq 0$ for all n and $\lim g_n \neq 0$.

Monotonically Increasing and Decreasing

Although it may be redundant, I thought that it would be best to establish the definitions of monotonically increasing and decreasing. This will come in handy in the next subsection.

A sequence $x_n$ is said to be monotonically increasing iff $x_{n+1} \geq x_n$ for all n.

A sequence $x_n$ is said to be monotonically decreasing iff $x_{n+1} \leq x_n$ for all n.

For instance $x_n = e^n$ monotonically increases as $x_{n+1} = e^{n+1} = (e)(e^n) = ex_n \geq x_n$ (since $x_n \geq 0$ by the nature of the exponential function).

E.g. $x_n = e^{-n}$ monotonically decreases as $x_{n+1} = e^{-(n+1)} = (\frac{1}{e})(e^{-n}) = (\frac{1}{e})x_n \leq x_n$ (since $x_n \geq 0$ by the nature of the exponential function).

Section 2: Subsequences, Limsup, Liminf

We define $\limsup s_n = \lim_{N \to \infty} (\sup \{s_n: n > N\})$ and $\liminf s_n = \lim_{N \to \infty} (\inf \{s_n: n > N\})$.

One important point to note is that, as functions of N, $\sup \{s_n: n > N\}$ and $\inf \{s_n: n > N\}$ are, respectively, monotonically decreasing and increasing, since the set $\{s_n: n > N\}$ shrinks as N grows. Furthermore, it is very important to note that $\lim s_n$ exists (as a real number or $\pm \infty$) iff $\liminf s_n = \limsup s_n$, in which case $\liminf s_n = \limsup s_n = \lim s_n$.

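E.g. Consider $x_n = (- 1)^ n$. We know that $\limsup x_n = 1$ because $x_n$ alternates between -1 and 1, with 1 occurring on even n and -1 occurring on odd n, so every tail $\{x_n: n > N\}$ has supremum 1. For the same reason every tail has infimum -1, so $\liminf x_n = -1$. Since $\liminf x_n \neq \limsup x_n$, the sequence does not converge.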
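The following small Python sketch shows the tails $\sup\{s_n: n > N\}$ and $\inf\{s_n: n > N\}$ moving monotonically. It uses my own illustrative sequence $s_n = (-1)^n(1 + \frac{1}{n})$, which is not from the notes, and looks only at a finite window $N < n \leq N + 10000$ of each tail. For this particular sequence the window already contains the tail's largest and smallest terms; in general a finite window only approximates the true sup and inf.

```python
def s(n):
    # Illustrative sequence (not from the notes): (-1)^n (1 + 1/n)
    return (-1) ** n * (1 + 1 / n)

def tail_sup(seq, N, window=10_000):
    # Approximates sup{seq(n) : n > N} using only N < n <= N + window
    return max(seq(n) for n in range(N + 1, N + window + 1))

def tail_inf(seq, N, window=10_000):
    # Approximates inf{seq(n) : n > N} on the same finite window
    return min(seq(n) for n in range(N + 1, N + window + 1))

for N in [1, 10, 100, 1000]:
    # tail sups decrease toward limsup = 1, tail infs increase toward liminf = -1
    print(N, tail_sup(s, N), tail_inf(s, N))
```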

Useful results:

- Given that $a_n$ is a sequence of nonzero real numbers, $\liminf |a_{n+1}/a_n| \leq \liminf |a_n|^\frac{1}{n} \leq \limsup |a_n|^\frac{1}{n} \leq \limsup |a_{n+1}/a_n|$

- Given that $\lim |a_{n+1}/a_n| = L$ we know that $\lim |a_n|^{\frac{1}{n}} = L$.

Subsequences

We define a subsequence of $(s_n)$ to be a sequence $(s_{n_k})_{k \in \mathbb{N}}$ where $n_1 < n_2 < n_3 < \cdots$ are natural numbers, i.e. $\forall k \in \mathbb{N}, n_k < n_{k+1}$.

Important result: $x_n \to x$ iff every subsequence $x_{n_k}$ of $(x_n)$ satisfies $x_{n_k} \to x$.

Another useful limit theorem is that every bounded sequence of real numbers (i.e. $|x_n| \leq M$ for all n, for some M) has a convergent subsequence. This is called the Bolzano-Weierstrass theorem.

E.g. Let $x_n = (-1)^n$. Although we definitely know that $x_n$ doesn't converge, the sequence is bounded (i.e. $|x_n| \leq 1$). Indeed, we can exhibit a convergent subsequence: $x_{2k} \to 1$ because $x_{2k} = (-1)^{2k} = 1$ for every k.

Useful Result (restated): Given a sequence of nonzero real numbers $s_n$, we know $\liminf |\frac{s_{n+1}}{s_n}| \leq \liminf |s_n|^{\frac{1}{n}} \leq \limsup |s_n|^{\frac{1}{n}} \leq \limsup |\frac{s_{n+1}}{s_n}|$. It follows from this theorem that $\lim |s_n|^{\frac{1}{n}} = \lim |\frac{s_{n+1}}{s_n}|$ whenever $\lim |\frac{s_{n+1}}{s_n}|$ exists (including when this limit is $+\infty$).

E.g. Consider $x_n = 4^{\frac{1}{n}}$. Setting $a_n = 4$ for all n, we have $x_n = 4^{\frac{1}{n}} = |a_n|^{\frac{1}{n}}$. Without using any complicated identities, $\lim |\frac{a_{n+1}}{a_n}| = \lim |\frac{4}{4}| = 1$. Applying the consequence from the previous “Useful Result”, we get that $\lim |a_n|^{\frac{1}{n}} = \lim 4^{\frac{1}{n}} = 1$.
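The same relationship between the ratio and root expressions can be watched numerically. In this Python sketch I pick the illustrative sequence $a_n = \frac{n^2}{4^n}$. This is an assumption on my part, chosen because both limits equal $\frac{1}{4}$. The sketch works with logarithms so that $4^n$ never has to be formed as a floating point number.

```python
import math

def log_a(n):
    # log|a_n| for the illustrative sequence a_n = n^2 / 4^n
    return 2 * math.log(n) - n * math.log(4)

def root(n):
    # |a_n|^(1/n) = exp(log|a_n| / n)
    return math.exp(log_a(n) / n)

def ratio(n):
    # |a_{n+1} / a_n| = exp(log|a_{n+1}| - log|a_n|) = (1 + 1/n)^2 / 4
    return math.exp(log_a(n + 1) - log_a(n))

for n in [10, 100, 10_000]:
    print(n, ratio(n), root(n))  # both columns approach 1/4
```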

Section 3: Topology

In order to generalize and make more precise the discussion on limits and later functions, we need to create a language with which to discuss domain, range, and sets. In pure math, not everything is in the set of real numbers. Therefore, we introduce the concepts of metric spaces, balls, and generalized notions of compactness and open sets.

Metric Spaces

For most of our math lives up to Math 53, we have dealt with Cartesian space. We learned that the distance between two points in 3D space is $d(x, y) = \sqrt{(x_0 - y_0)^2 + (x_1 - y_1)^2 + (x_2 - y_2)^2}$. Then in Math 54 (or in Physics 89) we met linear spaces of vectors, functions, polynomials, and all the familiar constructs we could think of. Now, we use an even more general idea of a space and of distance.

A set A together with a function $d: A \times A \to \mathbb{R}$ is a metric space (A, d) iff the metric d satisfies the following for all $x, y, z \in A$:

1. $$d(x, y) \geq 0$$ (i.e. $d(x, y) \in \mathbb{R} \setminus \mathbb{R}^-$)

2. $$d(x, y) = 0 \iff x = y$$

3. $$d(x, y) = d(y, x)$$

4. $$d(x, y) + d(y, z) \geq d(x, z)$$

A set S is said to be open in (A, d) iff $\forall s \in S, \exists r > 0: \{x \in A: d(x, s) < r\} \subseteq S$. As a note, $\{x \in A: d(x, s) < r\} = B_{r}(s)$, where $B_{r}(s)$ is called the open ball of radius r around the point s.

A limit point s of S is a point such that we can construct a sequence of points in S that are not equal to s but nonetheless converge to s.

The closure of a set S is defined to be the set of all limit points of S union S itself.

Using this idea, a set S is said to be closed iff all limit points of S are in S.

E.g. Consider the set $(-1, 1)$ with the metric $d$ defined by $d(x, y) = 1$ iff $x \neq y$ and $d(x, y) = 0$ iff $x = y$ (this is called the discrete metric). Denote our metric space by $(M, d)$. It can be established that d is a valid metric.

This is because:

- $\forall x, y \in (-1, 1), d(x, y) \geq 0$. Also note by definition of $d$ that $d(x, y) = 0$ iff $x = y$.

- By definition of the function we know that $d(x, y) = d(y, x)$. Specifically when $x = y$, $d(x, y) = 0 = d(y, x)$, and when $x \neq y$, $d(x, y) = 1 = d(y, x)$.

- Finally the triangle inequality holds in this metric space. Suppose it did not hold. Then $\exists x, y, z \in (-1, 1): d(x, y) + d(y, z) < d(x, z)$. The only way this is possible given how this metric is set up is if $d(x, y) + d(y, z) = 0$ and $d(x, z) = 1$. In such a case, we know $d(x, y) = 0 = d(y, z)$ since our metric can never spit out a negative value. Thus we have to conclude $x = y, y = z$ and therefore $x = z$. However this leads us to say that $d(x, z) = 0 \neq 1$, which is a contradiction.

In this space we can also say that $[0, 0.5]$ (meaning $\{x \in M: 0 \leq x \leq 0.5\}$) is open in $(M, d)$. For any $x \in [0, 0.5]$, $\exists r > 0: \{y \in M: d(y, x) < r\} \subseteq [0, 0.5]$. Specifically we can choose any $r$ with $0 < r \leq 1$. That way, regardless of what $x \in [0, 0.5]$ is, we know that $\{y \in M: d(x, y) < r\} = \{x\} \subseteq [0, 0.5]$.

Interestingly, we can prove that $[0, 0.5]$ is closed in $(M, d)$. In order to show this we need to show $ [0, 0.5]= E = \overline{E} = E \cup E'$ (we denote $E'$ as the set of limit points of E).

Consider that $E$ has no limit points! To show this, we show that for an arbitrary $p \in M$, every sequence $(p_n)$ in M with $p_n \to p$ is eventually equal to p. By the definition of the limit, $\forall \epsilon > 0, \exists N: \forall n > N, d(p_n, p) < \epsilon$. Now consider what happens when $0 < \epsilon < 1$. In that case, $\forall n > N, p_n = p$, because of how the metric $d$ is defined: the only way to have $d(p_n, p) < 1$ is $p_n = p$, so that $d(p_n, p) = 0$. Hence no sequence of points of E that are all different from p can converge to p, and $p$ can never be a limit point.

Therefore $E' = \emptyset$. And therefore $E = \overline{E}$ and thus $[0, 0.5]$ is closed. This illustrates that just because $E$ is open does not necessarily mean that it is not closed.
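A finite computation cannot prove statements about all of $(-1, 1)$. The following Python sketch therefore only spot-checks the metric axioms of the discrete metric on a handful of sample points. It also shows that a ball of radius less than 1 is a single point, which is why every subset is open in this space. The sample points and helper names are arbitrary choices of mine. As a contrast, the same checker rejects $(x - y)^2$, which fails the triangle inequality.

```python
from itertools import product

def d(x, y):
    # Discrete metric: distance 1 between distinct points, 0 between equal points
    return 0 if x == y else 1

def satisfies_metric_axioms(points, dist):
    """Spot-check the four metric axioms on every triple from a finite sample."""
    for x, y, z in product(points, repeat=3):
        if dist(x, y) < 0:                        # 1. nonnegativity
            return False
        if (dist(x, y) == 0) != (x == y):         # 2. d(x, y) = 0 iff x = y
            return False
        if dist(x, y) != dist(y, x):              # 3. symmetry
            return False
        if dist(x, y) + dist(y, z) < dist(x, z):  # 4. triangle inequality
            return False
    return True

def ball(center, r, points, dist):
    # Open ball B_r(center), restricted to the sample points
    return {p for p in points if dist(p, center) < r}

sample = [-0.9, -0.5, 0.0, 0.25, 0.5, 0.75]
print(satisfies_metric_axioms(sample, d))                          # True
print(ball(0.25, 0.5, sample, d))                                  # {0.25}
print(satisfies_metric_axioms(sample, lambda x, y: (x - y) ** 2))  # False
```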

Useful results on Open Sets Closed Sets

- A set is open iff its complement is closed.

- Given that $\bigcup S_i$ is a union of open sets, $\bigcup S_i$ is open.

- Given that $\bigcap S_i$ is the intersection of closed sets, $\bigcap S_i$ is closed.

- Given that $\bigcup S_i$ is a union of finitely many closed sets, $\bigcup S_i$ is closed.

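- Given that $\bigcap_{i=1}^{n} S_i$ is the intersection of finitely many open sets, $\bigcap_{i=1}^{n} S_i$ is open. (Infinite intersections of open sets need not be open: $\bigcap_{n=1}^{\infty} (-\frac{1}{n}, \frac{1}{n}) = \{0\}$ is not open in $\mathbb{R}$.)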

Induced Topology

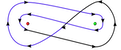

Sometimes, we want to create our own metric space from an existing one and transfer over properties. How these properties transfer over can be summarized by the drawing above (credits to Peng Zhou).

In this diagram there are two ways to create induced topology. We can either obtain the topology from an induced metric or from the old set itself.

One key idea to note is that (and I quote from course notes) given $A \subseteq S$ where (S, d) is a metric space, we can equip A with the induced metric. Then $E \subseteq A$ is open in A iff $\exists$ open set $E_2 \subseteq S$ such that $E = E_2 \cap A$. Since complements of open sets are closed, the analogous statement holds for closed sets: $F \subseteq A$ is closed in A iff $F = F_2 \cap A$ for some closed $F_2 \subseteq S$. We can use these facts to tell a lot about a set topologically.

Other Basic Topology Definitions

- A set is countable iff there exists a one-to-one correspondence (bijection) between the elements of the set and the set of natural numbers.

- An open ball (or neighborhood as referred to in Rudin), about point x and with radius r is $B(r; x) = \{y \in M: d(y, x) < r\}$ where (M, d) is the ambient metric space of x.

- $p$ is a limit point of $S$, a subset of $M$ where $S$'s ambient metric space is $(M, d)$, iff $\forall r > 0, \exists p_2 \in S: p_2 \neq p \land p_2 \in B(r; p)$.

- $S$ is bounded iff $\exists r: \forall p, q \in S, d(p, q) \leq r$.

- $S$ is dense in $X$ iff every element in X is in $S$ or is a limit point of $S$.

- $S$ is a perfect set iff $S$ is closed and $S$ does not have any isolated points.

- $diam(S)$, the diameter of $S$ is given to be $diam(S) = \sup_{x, y \in S} d(x, y)$.

E.g.: We can say that the set $\{\frac{1}{n}: n \in \mathbb{N}\}$ has a limit point $0$ since we know that $\lim \frac{1}{n} = 0$, and therefore $\forall \epsilon > 0, \exists N \in \mathbb{N}: \forall n \geq N, d(\frac{1}{n}, 0) = |\frac{1}{n} - 0| < \epsilon$. Therefore, $\forall \epsilon > 0$, the ball $B(\epsilon; 0)$ contains the point $\frac{1}{N} \neq 0$ of the set, so 0 is a limit point of the set (even though 0 itself is not in the set).

Compactness

In a very abstract way, we can think of compactness as the mathematical way of saying controlled, contained, or small; to get a gist of what compactness means, one may take some of the interesting analogies/metaphors/layman concepts expressed in this article from Scientific American.

Compactness is a tool that is hard to master but is VERY useful once you get it. For instance, we can link compactness to discuss uniform continuity of a function, compactness of the output of a function that is inputted a compact set, preserving the openness of a set, and even convergence of subsequences. Because of how compactness is related to many other ideas, understanding this very thoroughly is very important if you want to continue in analysis at the undergraduate and graduate levels.

An open cover of set S is a collection of open sets $\{C_{\alpha}\}$ such that $S \subseteq \bigcup C_{\alpha}$.

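A set S is defined to be compact iff for every open cover $\{C_{\alpha}\}$ of S there exists a finite subcover, i.e. finitely many indices $\alpha_1, \ldots, \alpha_n$ with $S \subseteq C_{\alpha_1} \cup \cdots \cup C_{\alpha_n}$.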

E.g. we can say that $(0, 1)$ is not a compact set. This is a classic example. Consider the open cover $C = \{(\frac{1}{n+2}, \frac{1}{n}): n \in \mathbb{N}\}$ of $(0, 1)$. C has infinitely many elements, and it has no finite subcover: any finite subcollection has a smallest left endpoint $\frac{1}{K+2} > 0$, so the points of $(0, \frac{1}{K+2}]$ are not covered. (In fact, removing even one interval $(\frac{1}{n+2}, \frac{1}{n})$ from C leaves the point $\frac{1}{n+1}$ uncovered.) Thus C has no finite subcover, and $(0, 1)$ is not compact.
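The failure of every finite subcollection can be made concrete. This Python sketch takes any finite set of indices n and returns a point of $(0, 1)$ that none of the chosen intervals $(\frac{1}{n+2}, \frac{1}{n})$ contains, namely half of the smallest left endpoint. Its helper names are hypothetical. Exact `Fraction` arithmetic avoids rounding issues at the endpoints.

```python
from fractions import Fraction

def interval(n):
    # The n-th open set of the cover: (1/(n+2), 1/n), returned as its endpoints
    return Fraction(1, n + 2), Fraction(1, n)

def uncovered_point(indices):
    """Return a point of (0, 1) lying in none of the chosen intervals."""
    smallest_left = min(interval(n)[0] for n in indices)
    # Every chosen interval lies to the right of smallest_left,
    # so anything in (0, smallest_left] is missed.
    return smallest_left / 2

def covered(x, indices):
    return any(a < x < b for a, b in map(interval, indices))

chosen = range(1, 1001)         # a (large) finite subcollection
x = uncovered_point(chosen)
print(x, covered(x, chosen))    # 1/2004 False
```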

One shortcut applicable to compactness in the real numbers is that a subset of $\mathbb{R}$ (more generally, of $\mathbb{R}^k$ with the usual metric) is compact iff that subset is closed and bounded; this is called the Heine-Borel theorem. Notice that we can only apply this in $\mathbb{R}^k$, not in a general metric space.

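However, in a general metric space one can only be sure that if a set is compact then it is closed and bounded; the converse can fail. For instance consider the set $[-1, 1] \cap \mathbb{Q}$ as a subset of the metric space $\mathbb{Q}$ (with $d(x, y) = |x - y|$). The set is bounded, and it is closed in $\mathbb{Q}$ (since $[-1, 1] \cap \mathbb{Q} = E \cup E'$, the union of the points in E and the limit points of E in $\mathbb{Q}$). However, it is not compact: a sequence of rationals in $[-1, 1]$ converging to $\frac{1}{\sqrt{2}}$ has no subsequence converging to a point of $\mathbb{Q}$.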

More Useful Results for Compactness

- In a metric space, sequential compactness is equivalent to compactness. Sequential compactness specifically means that given any sequence of points $s_n$ in the set $S$, we know that $\exists$ subsequence $s_{n_k}$ that converges to a point in $S$.

- We also know that given $f$ is a continuous map from $X \to Y$ and $S$ is a compact subset of $X$, $f(S)$ is a compact subset of $Y$.

- Closed subsets of a compact set are compact.

- Given that $\{C_{\alpha}\}$ is a collection of compact sets such that the intersection of any finite number of sets in the collection is nonempty, we know that $\bigcap C_{\alpha}$ is nonempty.

Connectedness

We say that $A$ is connected iff we cannot write $A$ as a union of two nonempty disjoint sets that are open in $A$ (with the induced metric).

E.g. (Based on problem from midterm 2). $\mathbb{Q}$ is NOT a connected set. I can write it as a union of two disjoint open sets such as $(-\infty, \sqrt{2}) \cap \mathbb{Q}$ and $(\sqrt{2}, \infty) \cap \mathbb{Q}$.

E.g. On the other hand, $\mathbb{R}$ is a connected set: it cannot be written as a union of two nonempty disjoint open sets. (Perhaps an exercise to prove; the proof uses the least upper bound property of $\mathbb{R}$.)

Section 4: Series

This section proceeds in a way similar to how we think of sequences; after all, we are working with indices and hence a countable number of terms. We define $\sum_{i=0}^{\infty}s_i = \lim_{N \to \infty} S_N$, where $S_N = \sum_{i=0}^{N}s_i$ is the N-th partial sum, so a series converges iff its sequence of partial sums converges. Similar terminology and ideas apply, such as a form of the sandwich theorem and the idea of convergence. However, this concept also ties into the properties of Taylor expansions later on, and there are some additional, or rephrased, rules that need to be kept in mind when examining the convergence/divergence of sums.

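$\sum_{i=0}^{\infty}s_i$ converges $ \longrightarrow \lim_{n \to \infty}s_n = 0$ (the n-th term test). The converse is false: the harmonic series $\sum \frac{1}{n}$ diverges even though $\frac{1}{n} \to 0$.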

The following are two comparison tests:

$|a_n| \leq b_n \land \sum_{i = 0}^{\infty} b_i$ converges $ \longrightarrow \sum_{i=0}^{\infty}a_i$ converges.

$a_n \geq b_n \geq 0 \land \sum_{i = 0}^{\infty} b_i$ diverges $ \longrightarrow \sum_{i=0}^{\infty}a_i$ diverges.

E.g. Consider $\sum_{i=0}^{\infty}2^{-i}|\sin{i}|$. We know that $|\sin{i}| \leq 1$ by definition of the sine function; this makes $2^{-i}|\sin{i}| \leq 2^{-i}$. Also $\sum_{i=0}^{\infty}2^{-i} = \frac{1}{1 - 1/2} = 2$, as we learned from high school math. Since $\sum_{i=0}^{\infty}2^{-i}$ converges and $0 \leq 2^{-i}|\sin{i}| \leq 2^{-i}$, we use the comparison test to conclude that $\sum_{i=0}^{\infty}2^{-i}|\sin{i}|$ converges.
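Numerically, the comparison appears as a term-by-term domination of partial sums. $S_N = \sum_{i=0}^{N}2^{-i}|\sin i|$ never exceeds $G_N = \sum_{i=0}^{N}2^{-i} = 2 - 2^{-N}$. A minimal Python sketch (the function names are mine):

```python
import math

def partial_sum(N):
    # S_N = sum_{i=0}^{N} 2^{-i} |sin i|
    return sum(2.0 ** (-i) * abs(math.sin(i)) for i in range(N + 1))

def geometric_bound(N):
    # G_N = sum_{i=0}^{N} 2^{-i} = 2 - 2^{-N}, which dominates S_N term by term
    return sum(2.0 ** (-i) for i in range(N + 1))

for N in [5, 20, 50]:
    print(N, partial_sum(N), geometric_bound(N))  # S_N <= G_N < 2
```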

E.g. Consider $\sum_{i=1}^{\infty}\frac{2^i}{i}$. Notice that $\frac{2^i}{i} \geq \frac{1}{i} \geq 0$. Furthermore $\sum_{i=1}^{\infty}\frac{1}{i}$ is a well known divergent series (the harmonic series). Thus, we know that $\sum_{i=1}^{\infty}\frac{2^i}{i}$ diverges by the comparison test.

Another useful test we can use is the ratio test:

Let $\sum_{i=0}^{\infty}a_i$ where $a_i$ is nonzero. We know that the series converges if $\limsup |\frac{a_{n+1}}{a_n}| < 1$. We know that the series diverges if $\liminf |\frac{a_{n+1}}{a_n}| > 1$. Otherwise the test is inconclusive.

E.g. Consider $\sum_{n=1}^{\infty}\frac{n^2}{4^n}$. We know $$\limsup |\frac{a_{n+1}}{a_n}| = \lim |\frac{a_{n+1}}{a_n}| = \lim{\frac{(n+1)^2/4^{n+1}}{n^2/4^n}} = \lim{\frac{(1 + \frac{1}{n})^2}{4}} = \frac{1}{4} < 1 $$ Thus $\sum_{n=1}^{\infty}\frac{n^2}{4^n}$ converges.
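The ratio in this example simplifies to $\frac{(1 + 1/n)^2}{4}$, which can be confirmed with exact rational arithmetic. Here is a Python sketch. It uses `Fraction` because $\frac{n^2}{4^n}$ underflows to 0.0 as a float for large n.

```python
from fractions import Fraction

def a(n):
    # a_n = n^2 / 4^n as an exact rational number
    return Fraction(n**2, 4**n)

for n in [1, 10, 100, 1000]:
    r = a(n + 1) / a(n)   # equals (1 + 1/n)^2 / 4 exactly
    print(n, float(r))    # 1.0, then values decreasing toward 0.25
```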

E.g. Let $\sum_{n=1}^{\infty}n2^n$. We know $$\liminf |\frac{a_{n+1}}{a_n}| = \lim |\frac{a_{n+1}}{a_n}| = \lim{|(1 + 1/n)2|} = 2 > 1 $$. Thus $\sum_{n=1}^{\infty}n2^n$ diverges.

And then the root test:

Given $\sum_{n=0}^{\infty} a_n$, if $\limsup{|a_n|^{\frac{1}{n}}} < 1$, then we know that the series converges. Otherwise if $\limsup{|a_n|^{\frac{1}{n}}} > 1$, the series diverges.

E.g. Consider $\sum_{n=0}^{\infty}(\frac{1}{3})^n$. We know that $\limsup{|(\frac{1}{3})^n|^{\frac{1}{n}}} = \frac{1}{3} < 1$, and hence the series converges.

E.g. Consider $\sum_{n=0}^{\infty}(\frac{4}{3})^n$. We know that $\limsup{|(\frac{4}{3})^n|^{\frac{1}{n}}} = \frac{4}{3} > 1$, and hence the series diverges.

And consider the Integral Test:

Given $\sum_{n=0}^{\infty} a_n$ and that $f(n) = a_n$ and that $f(n)$ is monotonically decreasing and is nonnegative, if $\int_{0}^{\infty}f(x)dx$ converges then the series converges. If $\int_{0}^{\infty}f(x)dx$ diverges then the series diverges.

E.g. $\sum_{n = 0}^{\infty}\frac{1}{n+1}$. We know that $f(x) = \frac{1}{x+1}$ monotonically decreases on $x \in [0, \infty)$ and is nonnegative on that interval as well. Hence we apply the integral test. $\int_{0}^{\infty}f(x)dx = [\ln(x+1)]^{\infty}_{0} = \infty$. Thus $\sum_{n = 0}^{\infty}\frac{1}{n+1}$ diverges.

E.g. $\sum_{n = 0}^{\infty}\frac{1}{(n+1)^2}$. We know that $f(x) = \frac{1}{(x+1)^2}$ monotonically decreases on $x \in [0, \infty)$ and is nonnegative on that interval as well. Hence we apply the integral test. $\int_{0}^{\infty}f(x)dx = [\frac{-1}{x+1}]^{\infty}_{0} = 1$. Thus $\sum_{n = 0}^{\infty}\frac{1}{(n+1)^2}$ converges.

Using the integral test we can derive the p-series test. This test states that given the series $\sum_{n=1}^{\infty}\frac{1}{n^p}$, if $p \leq 1$ then the series diverges, and if $p > 1$ then the series converges.
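The following is a rough numerical illustration of the two cases $p = 1$ and $p = 2$. The sketch is my own and only samples finitely many partial sums. The $p = 1$ sums keep growing, roughly like $\ln N$. The $p = 2$ sums stay below 2, consistent with the integral test bound $\sum_{n=1}^{N}\frac{1}{n^2} \leq 1 + \int_{1}^{\infty}\frac{dx}{x^2} = 2$.

```python
def p_partial_sum(p, N):
    # S_N = sum_{n=1}^{N} 1 / n^p
    return sum(1.0 / n**p for n in range(1, N + 1))

for N in [10, 1000, 100_000]:
    print(N, p_partial_sum(1, N), p_partial_sum(2, N))  # p = 1 grows, p = 2 levels off
```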

Finally there is the alternating series test:

Given $\sum_{n=1}^{\infty} (-1)^n a_n$ where $a_n > 0$ and $\lim a_n = 0$ and $a_n$ monotonically decreases, we know that $\sum_{n=1}^{\infty} (-1)^n a_n$ converges.

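A series $\sum_{n=1}^{\infty} a_n$ is defined to converge absolutely iff $\sum_{n=1}^{\infty} |a_n|$ converges. In particular, if $a_n \geq 0$ and $\sum_{n=1}^{\infty} a_n$ converges, then $\sum_{n=1}^{\infty} (-1)^n a_n$ converges absolutely.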

E.g. Consider $\sum_{n=1}^{\infty}(-1)^n\frac{1}{n^2 + n}$. We know that $a_{n+1} = \frac{1}{(n+1)^2 + (n+1)} = \frac{1}{n^2 + 2n + 1 + (n+1)} = \frac{1}{n^2 + 3n + 2} \leq \frac{1}{n^2 + n} = a_n$ and $\lim a_n = \lim \frac{1}{n^2 + n} = 0$. By the alternating series test, $\sum_{n=1}^{\infty}(-1)^n\frac{1}{n^2 + n}$ converges. Furthermore the series converges absolutely, as $0 \leq |(-1)^n a_n| = \frac{1}{n^2 + n} \leq \frac{1}{n^2}$ and $\sum_{n=1}^{\infty}\frac{1}{n^2}$ converges (p-series with $p = 2 > 1$), so $\sum_{n=1}^{\infty}|(-1)^n a_n|$ converges by the comparison test.
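The proof of the alternating series test also yields the error bound $|S - S_N| \leq a_{N+1}$, where S is the sum and $S_N$ is the N-th partial sum. This is a well-known consequence, though the notes above do not state it. Consecutive partial sums also land on opposite sides of S. A Python sketch for this example (the names are mine):

```python
def a(n):
    # a_n = 1 / (n^2 + n): positive, decreasing, tending to 0
    return 1.0 / (n * n + n)

def S(N):
    # Partial sum S_N = sum_{n=1}^{N} (-1)^n a_n
    return sum((-1) ** n * a(n) for n in range(1, N + 1))

for N in [10, 11, 100, 101]:
    # consecutive partial sums bracket the limit; the gap to it is at most a(N + 1)
    print(N, S(N), a(N + 1))
```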

One interesting thing you can do with these series is compute their sums. This can be done through things like Fourier series or even using common Maclaurin expansions. You may have seen these in some hard Math 1B exams.

Section 5: Continuity

This section of the course picks up from the 1-2 weeks in AP Calc BC that were dedicated to continuity. Back then, the domain of a real function was a subset of the real line, and we knew that f is continuous at $x_0$ iff $\lim_{x \to x_0^+}f(x) = \lim_{x \to x_0^-}f(x) = f(x_0)$. This made things very easy to prove. We also took for granted results that rely on continuity, like the intermediate value theorem and the fact that a differentiable function is continuous. Now, once we start to deal with different metrics and less physically intuitive spaces (e.g. even hard-to-draw 3D space), we need to consider many more dimensions and nuances of continuity. This requires redefining/generalizing from scratch the limit of a function and then formalizing the many behaviors of a continuous function.

Limits and The Many Ways to Say "Continuous"

We can say that a function f has a limit $l$ at p iff for every sequence $(p_n)$ in the domain with $p_n \neq p$ and $p_n \to p$, $\lim f(p_n) = l$. I have to emphasize the "for every sequence" here, since this is what makes it harder to show that a limit exists than to show that a limit doesn't exist (for the latter, two sequences giving different limits suffice). You may have learned this from doing Math 53 (Multivariable Calc) limits.

In calculus class we learned that a function $f$ is continuous at p iff $\lim_{x \to p}f(x) = f(p)$. $f$ is continuous on S iff $\forall p \in S, \lim_{x \to p}f(x) = f( p )$.

Another way of saying this is that $\forall p_n: p_n \to p, \lim_{n \to \infty} f(p_n) = f(p)$.

Other statements equivalent to continuity of $f: X \to Y$ (where $(X, d_X)$ and $(Y, d_Y)$ are metric spaces):

- Continuity at a point $x \in X$ is equivalent to the epsilon-delta condition: $\forall \epsilon > 0, \exists \delta > 0: \forall x_2 \in X, d_X(x_2, x) < \delta \to d_Y(f(x_2), f(x)) < \epsilon$.

- Continuity on all of X is equivalent to: given arbitrary $V \subseteq Y$ that is open, $f^{-1}(V)$ is open in $X$. (Note: $f^{-1}(V) = \{x \in X: f(x) \in V\}$.)

One thing to also note is how compactness is preserved through continuous functions. More precisely: given $X_2 \subseteq X$ is compact, we know that $f(X_2)$ is also compact. This is proven in Problem 1 of my problems page.

Uniform continuity

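A function $f: X \to Y$ is uniformly continuous on X iff $\forall \epsilon > 0: \exists \delta > 0: (\forall x_1, x_2 \in X), (d_X(x_1, x_2) < \delta) \longrightarrow (d_Y(f(x_1), f(x_2)) < \epsilon)$. The key difference from continuity is that $\delta$ depends only on $\epsilon$, not on the point.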

Furthermore, from The Problem Book in Real Analysis we know that a real-valued $f$ is not uniformly continuous iff $\exists \epsilon > 0$ and sequences $(x_n), (y_n)$ in X such that $|x_n - y_n| < \frac{1}{n}$ and $|f(x_n) - f(y_n)| \geq \epsilon$ for all n.

Be careful to not confuse uniform continuity with mere continuity!

Uniform continuity vs regular continuity

This is a STRONGER (tighter) condition than regular continuity: every uniformly continuous function is continuous, but not conversely. E.g. $f(x) = \frac{1}{x}$ on $(0, 1)$. (Probably a simple example from the Problem Book in Real Analysis.)

We know that $f(x) = \frac{1}{x}$ is continuous on $(0, 1)$ (this can be a problem for another time). However, $f(x) = \frac{1}{x}$ is NOT uniformly continuous on $(0, 1)$. Take $\epsilon = 1/2$ and the sequences $x_n = \frac{1}{n}, y_n = \frac{1}{2n}$ for $n \geq 2$ (so that both lie in $(0, 1)$). Then $|x_n - y_n| = \frac{1}{2n} < \frac{1}{n}$, while $|f(x_n) - f(y_n)| = |n - 2n| = n > \frac{1}{2} = \epsilon$.
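The two sequences from this argument can be tabulated directly. This Python sketch starts from n = 2 so that both points lie in (0, 1). Exact `Fraction` values avoid floating point noise. The `assert` line checks, for each sampled n, the two inequalities used in the argument.

```python
from fractions import Fraction

def f(x):
    return 1 / x

eps = Fraction(1, 2)
for n in [2, 10, 100, 1000]:
    x_n, y_n = Fraction(1, n), Fraction(1, 2 * n)  # both in (0, 1) for n >= 2
    gap_in = abs(x_n - y_n)                         # = 1/(2n) < 1/n
    gap_out = abs(f(x_n) - f(y_n))                  # = n > eps
    assert gap_in < Fraction(1, n) and gap_out > eps
    print(n, gap_in, gap_out)
```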

On the other hand, the exponentially decaying function $f(x) = e^{-x}$ is uniformly continuous on $[1, \infty)$. That is, for all $\epsilon > 0, \exists \delta > 0: \forall x, y \in [1, \infty), |x - y| < \delta \longrightarrow |f(x) - f(y)| < \epsilon$. Specifically, one can notice that $|e^{-x} - e^{-y}| \leq 1 \cdot |x - y|$ on $[1, \infty)$. Thus, we can make $\delta = \epsilon$: if $|x - y| < \delta$, then $|e^{-x} - e^{-y}| \leq |x - y| < \delta = \epsilon$ (Extra challenge: prove this Lipschitz bound for $f$ on $[1, \infty)$ using the mean value theorem or some other means). Thus $f$ is uniformly continuous on $[1, \infty)$.

Some useful results:

- If $S$ is a compact subset of the domain of a continuous function $f$, we know that $f$ is uniformly continuous on $S$.

Uniform Convergence

Another interesting topic related to continuity seems almost the same as the first parts of this course regarding sequences. However, the elements of our sequence are now functions $f_n: X \to Y$. We say that $f_n \to f$ uniformly on X iff $\forall \epsilon > 0, \exists N: \forall n > N, d_Y(f_n(x), f(x)) < \epsilon$ for every x in X; the same N must work for all x simultaneously.

Looks familiar? Yes. Is this exactly the same as saying that $f_n \to f$ pointwise? NO!

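After all, consider the definition of pointwise convergence, which we have learned before: $\forall x \in X, \forall \epsilon > 0, \exists N > 0: \forall n > N : d_Y(f_n(x), f(x)) < \epsilon$. The definitions look DIFFERENT: in pointwise convergence N may depend on both $\epsilon$ and x, while in uniform convergence N depends only on $\epsilon$.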

E.g. of a sequence of functions that converges pointwise but not uniformly: Consider $f_n(x) = \sqrt{1-x^2/n^2}$, defined for $|x| \leq n$. For each fixed x, $f_n(x)$ is defined once $n \geq |x|$, and $f_n(x) \to f(x) = 1$ pointwise. However it is not the case that $\forall \epsilon > 0, \exists N: \forall n > N, d_Y(f_n(x), f(x)) < \epsilon$ for all x.

Suppose that it were the case: then $\forall \epsilon > 0, \exists N: \forall n>N, |\sqrt{1-x^2/n^2} -1| < \epsilon$ for all $x$ with $|x| \leq n$. Consider that $|\sqrt{1 - x^2/n^2} - 1|$ is maximized when $x = n$, where $|\sqrt{1 - n^2/n^2} - 1| = 1$. This applies for all n. Hence, if I make $\epsilon = 1/2$, it is not true that $\exists N: \forall n>N, |\sqrt{1-x^2/n^2} -1| < \epsilon = 1/2$ for all x. Thus, a contradiction has been reached.

In other words $f_n(x) = \sqrt{1-x^2/n^2} \to f(x)=1$ pointwise but not uniformly.

E.g. of a uniformly convergent sequence of functions. Consider $f_n(x) = \frac{x}{1 + 4^{-n}}$ on a bounded interval $[-R, R]$. We know that $f_n \to f$ pointwise where $f(x) = x$. We can prove that $f_n \to f$ uniformly on $[-R, R]$. Consider that $|\frac{x}{1 + 4^{-n}} - x| = |\frac{-x4^{-n}}{1+4^{-n}}| = \frac{|x|}{4^n + 1} \leq \frac{R}{4^n + 1}$, and this bound does not depend on x and converges to 0 as n increases. Thus $\forall \epsilon > 0, \exists N: \forall n > N: |f_n(x) -f(x)| <\epsilon$ for all $x \in [-R, R]$. By definition of uniform convergence, $f_n \to f$ uniformly on $[-R, R]$. (On all of $\mathbb{R}$ the convergence is not uniform: at $x = 4^n$ the error is $\frac{4^n}{4^n + 1} \geq \frac{1}{2}$.)
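Convergence on a bounded interval behaves differently from convergence on all of $\mathbb{R}$, and estimating $\sup |f_n(x) - x|$ on a grid makes the difference visible. This Python sketch samples $[-R, R]$ including its endpoints, where the supremum is attained. The grid size and helper names are arbitrary choices of mine. With R fixed the error shrinks like $\frac{R}{4^n + 1}$, but with $R = 4^n$ it stays close to 1.

```python
def f_n(x, n):
    return x / (1 + 4.0 ** (-n))

def sup_error_on_grid(n, R, points=2001):
    # Approximates sup_{|x| <= R} |f_n(x) - x| on a uniform grid of [-R, R]
    # (the grid includes both endpoints, where |x| / (4^n + 1) is largest).
    xs = [-R + 2 * R * k / (points - 1) for k in range(points)]
    return max(abs(f_n(x, n) - x) for x in xs)

for n in [1, 5, 10]:
    fixed = sup_error_on_grid(n, R=1.0)          # shrinks like 1 / (4^n + 1)
    growing = sup_error_on_grid(n, R=4.0 ** n)   # stays near 1: not uniform on R
    print(n, fixed, growing)
```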

Some useful results regarding uniform convergence:

- $\lim_{n \to \infty} \sup\{|f_n(x) - f(x)|: x \in X\} = 0 \iff f_n \to f$ uniformly.

- If $|f_n(x)| \leq M_n$ $\forall x \in X, n \in \mathbb{N}$ and $\sum_{n=0}^{\infty}M_n$ converges, then the partial sums $\sum_{i=0}^{n}f_i(x)$ converge uniformly on X to $f(x) = \sum_{i=0}^{\infty}f_i(x)$. This is the Weierstrass M-Test.

- $(\forall \epsilon > 0, \exists N > 0: \forall m, n > N, |f_m(x) - f_n(x)| < \epsilon, \forall x \in X) \iff (f_n)$ converges uniformly on X (to some function f). This is the Cauchy Criterion for uniform convergence (for real- or complex-valued $f_n$).

Important results for Continuity

- Given that A is a connected subset of $X$ the domain of continuous function $f: X \to Y$, we know that $f(A)$ also has to be a connected set.

- Given that C is a compact subset of X and given continuous $f: X \to Y$, we know that $f$ is uniformly continuous on C.

- The Intermediate Value Theorem: Given f continuous on $[a, b]$, $a < b$, and $y$ in between $f(a), f(b)$, we know that there exists $x \in (a, b)$ such that $f(x) = y$.

E.g. (Credits to Chris Rycroft's Practice Spring 2012 Math 104 Final) :

We would like to show that for every $n \in \mathbb{N} = \{1, 2, 3, \ldots\}$, $p(x) = x^{2n+1} - 4x + 1$ has at least three zeros.

This problem may seem daunting, but we can use the intermediate value theorem + the properties of odd exponents.

Consider the following, using that $2n + 1$ is odd and at least 3. We obtain that $$p(-1) = (-1)^{2n+1} -4(-1) + 1 = -1 + 4 + 1 = 4 > 0$$ $$ p(-3) = (-3)^{2n+1} -4(-3) + 1 \leq (-3)^3-4(-3) + 1 = -14 < 0$$ $$p(1) = (1)^{2n+1} -4(1) + 1 = 1 - 4 + 1 = -2 < 0$$ $$p(2) = (2)^{2n+1} -4(2) + 1 \geq (2)^{3} -4(2) + 1 = 1 > 0$$

We can now use the intermediate value theorem to say that $\exists x_1 \in (-3, -1): p(x_1) = 0$, $\exists x_2 \in (-1, 1): p(x_2) = 0$, and $\exists x_3 \in (1, 2): p(x_3) = 0$. This is because a) $p$, a polynomial function, is continuous on $\mathbb{R}$ and hence on $[-3, -1]$, $[-1, 1]$, and $[1, 2]$, and b) $0$ lies strictly between $p(-3)$ and $p(-1)$, between $p(-1)$ and $p(1)$, and between $p(1)$ and $p(2)$.

Furthermore, $x_1$, $x_2$, $x_3$ are pairwise distinct, because they lie in the pairwise disjoint intervals $(-3, -1)$, $(-1, 1)$, and $(1, 2)$.

Therefore, I can say that there are at least 3 points $x_1, x_2, x_3$ in $\mathbb{R}$ such that $p(x_1) = 0 = p(x_2) = p(x_3)$. QED.
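The proof only guarantees existence, but the same sign changes let us locate the zeros numerically. This is a minimal bisection sketch in Python (the helper names `p`, `bisect`, and `three_roots` are my own). Each step keeps the half-interval on which $p$ still changes sign, which is the intermediate value theorem applied over and over.

```python
def p(x: float, n: int) -> float:
    """p(x) = x^(2n+1) - 4x + 1."""
    return x ** (2 * n + 1) - 4 * x + 1

def bisect(g, lo: float, hi: float, tol: float = 1e-12) -> float:
    """Find a zero of a continuous g on [lo, hi], given g(lo) and g(hi) have opposite signs."""
    g_lo = g(lo)
    if g_lo * g(hi) >= 0:
        raise ValueError("g(lo) and g(hi) must have opposite signs")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        g_mid = g(mid)
        if g_mid == 0:
            return mid
        if (g_mid < 0) == (g_lo < 0):  # same sign as left end: the zero is in [mid, hi]
            lo, g_lo = mid, g_mid
        else:                          # sign change inside [lo, mid]
            hi = mid
    return (lo + hi) / 2

def three_roots(n: int) -> list[float]:
    """Zeros of p in (-3, -1), (-1, 1) and (1, 2), using the sign changes from the proof."""
    brackets = [(-3.0, -1.0), (-1.0, 1.0), (1.0, 2.0)]
    return [bisect(lambda x: p(x, n), lo, hi) for lo, hi in brackets]

for n in range(1, 4):
    print(n, three_roots(n))
```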

Section 6: Derivatives

Definition of derivative and differentiability:

We know that $f$ is differentiable iff $\forall x \in \mathbb{R}$, $\lim_{h \to 0}\frac{f(x+h)-f(x)}{h}$ exists. That limit is known as the derivative of $f$ at $x$, written $f'(x)$.

An equivalent definition: $f$ is differentiable iff $\forall x \in \mathbb{R}, \lim_{h \to x}\frac{f(x)-f(h)}{x - h}$ exists.

Basic E.g. Prove that $f(x) = x^2$ is differentiable. We know that $\lim_{h \to x}\frac{f(x) - f(h)}{x - h} = \lim_{h \to x}\frac{x^2 - h^2}{x - h} = \lim_{h \to x}(x + h) = 2x$. Hence $f$ is differentiable with derivative $f'(x) = 2x$.

Basic E.g. Prove that $f(x) = |x|$ is NOT differentiable. Take $x = 0$: for $h \neq 0$, $\frac{f(0) - f(h)}{0 - h} = \frac{-|h|}{-h} = \frac{|h|}{h}$. We know, from a common example, that $\lim_{h \to 0^+} |h|/h = 1 \neq -1 = \lim_{h \to 0^-} |h|/h$. Thus $\lim_{h \to 0}\frac{f(0) - f(h)}{0 - h} = \lim_{h \to 0}\frac{|h|}{h}$ does not exist, so $f(x) = |x|$ is not differentiable at $0$ (and hence not differentiable on $\mathbb{R}$).
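A quick sanity check of both examples, as a sketch using exact rational arithmetic (Python's `fractions.Fraction`) so rounding does not blur the picture. For $x^2$ at $x = 3$ the difference quotient $\frac{f(x+h) - f(x)}{h}$ is exactly $6 + h$, while for $|x|$ at $0$ it is stuck at $+1$ for $h > 0$ and at $-1$ for $h < 0$. Evaluating finitely many $h$ only illustrates the limits; it does not prove them.

```python
from fractions import Fraction

def difference_quotient(f, x, h):
    """(f(x + h) - f(x)) / h; its limit as h -> 0, if it exists, is f'(x)."""
    return (f(x + h) - f(x)) / h

def square(t):
    return t * t

steps = [Fraction(1, 10 ** k) for k in range(1, 7)]

# f(x) = x^2 at x = 3: the quotient is exactly 6 + h, which tends to 2x = 6
print([float(difference_quotient(square, Fraction(3), h)) for h in steps])
# f(x) = |x| at x = 0: +1 from the right, -1 from the left, so no limit
print([difference_quotient(abs, Fraction(0), h) for h in steps])
print([difference_quotient(abs, Fraction(0), -h) for h in steps])
```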

Mean Value Theorem

The most well-known form of the Mean Value Theorem tells us that if $f$ is real and continuous on $[x_1, x_2]$ and differentiable on $(x_1, x_2)$, then $\exists x \in (x_1, x_2): f(x_2) - f(x_1) = (x_2 - x_1)f'(x)$. We've seen a lot of this in Math 1A-type calculus.

E.g. Consider the function $f(x) = x \sin x - x$ and the equation $1 = x \cos x + \sin x$. In an interesting twist, we can show that this equation has a solution by showing that $f'$ has a zero. Consider the interval $[0, \pi/2]$. We have $f(0) = 0 \sin 0 - 0 = 0$ and $f(\pi/2) = (\pi/2) \sin(\pi/2) - \pi/2 = 0$. Since $f$ is continuous on $[0, \pi/2]$ and differentiable on $(0, \pi/2)$, the mean value theorem gives some $x \in (0, \pi/2)$ with $f'(x)(\pi/2 - 0) = f(\pi/2) - f(0) = 0$. Thus, for some $x \in (0, \pi/2)$, $f'(x) = x \cos x + \sin x - 1 = 0$, so $\exists x: 1 = x \cos x + \sin x$.
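The mean value theorem only tells us such an $x$ exists. As a hedged numerical sketch (my own addition), we can locate one with bisection: here $f'(x) = x \cos x + \sin x - 1$ happens to be continuous, $f'(0) = -1 < 0$, and $f'(1) = \cos 1 + \sin 1 - 1 \approx 0.38 > 0$, so a zero of $f'$ lies in $(0, 1) \subset (0, \pi/2)$.

```python
import math

def f_prime(x: float) -> float:
    """Derivative of f(x) = x sin x - x."""
    return x * math.cos(x) + math.sin(x) - 1

def bisect(g, lo: float, hi: float, tol: float = 1e-12) -> float:
    """Zero of a continuous g on [lo, hi], assuming g(lo) < 0 <= g(hi)."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

x = bisect(f_prime, 0.0, 1.0)
print(x, x * math.cos(x) + math.sin(x))  # the second value should be close to 1
```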

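Speaking of the previous problem: when $f(x_1) = f(x_2)$, the mean value theorem gives some $x \in (x_1, x_2)$ with $f'(x) = 0$, which is Rolle's theorem. But the differentiability hypothesis cannot be dropped: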

Nonexample. Consider the function $f(x) = |x|$. We know that $f(1) = f(-1)$, and $f$ is continuous on $\mathbb{R}$. You can even draw a horizontal line through the origin that touches the graph of $f$. However, there is no $x \in (-1, 1)$ with $f'(x)(1 - (-1)) = f(1) - f(-1) = 1 - 1 = 0$: wherever $f'$ exists on $(-1, 1)$, it equals $\pm 1$. The theorem does not apply because $f$ is not differentiable at $0 \in (-1, 1)$. Notice that there is a corner at $x = 0$ (the left-hand derivative is $-1$ and the right-hand derivative is $+1$).

A more generalized form of the mean value theorem is given as follows:

Given real functions $f, g$ continuous on the interval $[x_1, x_2]$ and differentiable on $(x_1, x_2)$, we know that $\exists x \in (x_1, x_2): (f(x_2) - f(x_1))g'(x) = (g(x_2) - g(x_1))f'(x)$. Taking $g(x) = x$ recovers the usual mean value theorem.

Intermediate Value Theorems for Derivatives

In order to make an analog of the intermediate value theorem for continuous functions, we make the following statement: Given that $f$ is differentiable on $[a, b]$, $f'(a) < f'(b)$, and $\mu \in (f'(a), f'(b))$, I know that $\exists x_0 \in (a, b): f'(x_0) = \mu$.

One important thing to note here is that this does not follow from the intermediate value theorem for continuous functions, because $f'$ need not be continuous. Rather, it comes from the idea of global minima. The proof is very simple:

Consider $g(x) = f(x) - \mu x$. We know $g'(a) = f'(a) - \mu < 0$ and $g'(b) = f'(b) - \mu > 0$. Now, since $g$ is continuous on the compact set $[a, b]$, $g$ attains a global minimum on $[a, b]$. The minimum cannot be at $a$: since $g'(a) < 0$, we have $g(t) < g(a)$ for some $t$ slightly larger than $a$. Likewise, since $g'(b) > 0$, we have $g(t) < g(b)$ for some $t$ slightly smaller than $b$. Therefore the global minimum of $g$ is attained at some $x_0 \in (a, b)$. Since $x_0$ is an interior point, it is a local minimum as well, and $g$ is differentiable there, so $g'(x_0) = 0$. Thus $f'(x_0) = \mu$.
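The proof is constructive enough to mimic numerically. This Python sketch is my own illustration, with the hypothetical choice $f(x) = x^3$ on $[0, 1]$ and $\mu = 3/2$ (so $f'(0) = 0 < \mu < 3 = f'(1)$). It minimizes $g(x) = f(x) - \mu x$ over a fine grid and checks that $f'$ is close to $\mu$ at the minimizer. A grid search only approximates the true minimizer, which here is $x_0 = 1/\sqrt{2}$.

```python
def darboux_point(f, df, a: float, b: float, mu: float, samples: int = 100_000) -> float:
    """Approximate the x0 from the proof: a minimizer of g(x) = f(x) - mu*x on [a, b].

    Plain grid search, so x0 is only accurate to roughly (b - a) / samples."""
    if not df(a) < mu < df(b):
        raise ValueError("need f'(a) < mu < f'(b)")
    xs = [a + (b - a) * k / samples for k in range(samples + 1)]
    return min(xs, key=lambda x: f(x) - mu * x)

def cube(x: float) -> float:
    return x ** 3

def d_cube(x: float) -> float:
    return 3 * x ** 2

x0 = darboux_point(cube, d_cube, 0.0, 1.0, 1.5)
print(x0, d_cube(x0))  # x0 near 1/sqrt(2), f'(x0) near 1.5
```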

L'Hopital's Rule

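Given that $f, g: (a, b) \to \mathbb{R}$ are differentiable, $g'(x) \neq 0$ for all $x \in (a, b)$, $\lim_{x \to c}\frac{f'(x)}{g'(x)}$ exists, and one of the following holds (where $c$ is an endpoint of $(a, b)$; for a two-sided limit at an interior point, apply the rule on each side):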

- $\lim_{x \to c} f(x) = \lim_{x \to c} g(x) = 0$

- $\lim_{x \to c} g(x) = \pm \infty$

then we can write $\lim_{x \to c}{\frac{f(x)}{g(x)}} = \lim_{x \to c}{\frac{f'(x)}{g'(x)}}$.

The proof for this theorem is actually quite long; one part of the proof is in the April 8th notes for this course and the rest is to be completed in the problem sheet I created.

This theorem can be quite useful.

E.g. (A spin-off from Chris H. Rycroft's Spring 2012 final.) Consider $f(x) = \frac{x}{e^x - 1}$ for $x \neq 0$, and set $f(0) = c$ for some constant $c$. What should $c$ be to make $f$ continuous at $x = 0$?

By one definition of continuity, we need $\lim_{x \to 0} f(x) = f(0)$. In other words, set $c = f(0) = \lim_{x \to 0} f(x) = \lim_{x \to 0}\frac{x}{e^x - 1}$ (we encounter a $0/0$ situation and $(e^x - 1)' = e^x \neq 0$, so we use L'Hopital) $= \lim_{x \to 0}\frac{1}{e^x} = 1$.

Thus make $c = 1$; that way $f$ is continuous at 0.
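A numerical sanity check (a sketch, not a proof): evaluating $\frac{x}{e^x - 1}$ at points approaching $0$ from both sides should give values approaching $1$. I use Python's `math.expm1`, which computes $e^x - 1$ accurately for small $x$; computing `math.exp(x) - 1` directly loses precision there.

```python
import math

def f(x: float) -> float:
    """f(x) = x / (e^x - 1) for x != 0."""
    return x / math.expm1(x)

# Approach 0 from the right (h) and from the left (-h)
for k in range(1, 8):
    h = 10.0 ** -k
    print(f"h = {h:.0e}: f(h) = {f(h):.10f}, f(-h) = {f(-h):.10f}")
```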

Taylor's Theorem

Suppose $f^{(n-1)}$, the $(n-1)$st derivative of $f$, is continuous on the interval $[a, b]$ and $f^{(n)}$ exists on $(a, b)$. Given distinct $\alpha, \beta \in [a, b]$, we know that $$f(\beta) = \sum_{i=0}^{n-1}\frac{f^{(i)}(\alpha) (\beta - \alpha)^i}{i !} + \frac{f^{(n)}(x)(\beta - \alpha)^n}{n!}$$ for some $x$ strictly between $\alpha$ and $\beta$. Notice that with $n = 1$, Taylor's theorem is exactly the mean value theorem.

E.g. Find a bound for $f(x) = \sin x - x$ on $[\pi/2, 3\pi/2]$. We know that $x$ is the 1st order Taylor polynomial of $g(x) = \sin x$ about $0$. By Taylor's theorem (with $n = 2$, $\alpha = 0$, $\beta = x$), for each $x \in [\pi/2, 3\pi/2]$ we know that $\exists x_2 \in (0, x): \sin x - x = \frac{g^{(2)}(x_2)}{2!}x^2$.

We can provide a rough bound first and foremost.

This is done by maximizing the absolute values of $\frac{g^{(2)}(x_2)}{2!}$ and of $x^2$. We know that $|\frac{g^{(2)}(x_2)}{2}| = \frac{|\sin x_2|}{2} \leq \frac{1}{2}$ because $g^{(2)}(x) = -\sin x$, and $x^2 \leq (3\pi/2)^2 = 9\pi^2/4$ because $x \in [\pi/2, 3\pi/2]$. Thus we can bound $f(x) = \sin x - x$ by $|f(x)| \leq 9\pi^2/8$.
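As a sanity check on any bound for $f$, here is a Python sketch (the grid size is an arbitrary choice of mine) that samples $|\sin x - x|$ on $[\pi/2, 3\pi/2]$ and checks the pointwise Taylor estimate $|\sin x - x| \leq x^2/2$, which comes from expanding $\sin$ about $0$ with $n = 2$. Since $\frac{d}{dx}(x - \sin x) = 1 - \cos x \geq 0$, the sampled maximum should sit at $x = 3\pi/2$, where it equals $3\pi/2 + 1 \approx 5.71$, so any valid bound has to be at least this large.

```python
import math

a, b = math.pi / 2, 3 * math.pi / 2
bound = 9 * math.pi ** 2 / 8  # from |sin x - x| <= x^2 / 2 <= (3*pi/2)^2 / 2 on [a, b]

xs = [a + (b - a) * k / 10_000 for k in range(10_001)]
worst = max(abs(math.sin(x) - x) for x in xs)

# Pointwise Taylor estimate: |sin x - x| <= x^2 / 2 at every sample point
assert all(abs(math.sin(x) - x) <= x * x / 2 for x in xs)
print(f"max on grid = {worst:.4f}, bound 9*pi^2/8 = {bound:.4f}")
```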

The Taylor series of $f$ about $x_0$, whose truncation error can be bounded using Taylor's theorem, is defined by

$\sum_{n=0}^{\infty} \frac{f^{(n)}(x_0)(x - x_0)^n}{n!}$. The set of points $x$ at which the Taylor series of $f$ about $x_0$ converges is called the interval of convergence $I$. The distance $|\sup I - x_0|$ from the center $x_0$ to the boundary of the interval of convergence is called the radius of convergence.

E.g. Consider the Taylor series of $\ln(x + 1)$ about $x = 0$, which is $\sum_{n=0}^{\infty}\frac{(-1)^nx^{n+1}}{n+1}$. By the ratio test, the series converges wherever the following inequality holds:

$\lim |\frac{\frac{(-1)^{n+1}x^{n+2}}{n+2}}{\frac{(-1)^nx^{n+1}}{n+1}}| = \lim |(-1)\frac{n+1}{n+2}x| < 1$

This leads to

$\lim |(-1)\frac{n+1}{n+2}x| = |x| \lim |(-1)\frac{n+1}{n+2}| = |x| < 1$.

We can tell that the radius of convergence of the Taylor series of $\ln(x + 1)$ about $x = 0$ is 1. We also know that the Taylor series converges for $x \in (-1, 1)$.

Now, to be sure about the true interval of convergence, we need to examine what the Taylor series does (a) at $x = x_0 -$ (radius of convergence) and (b) at $x = x_0 +$ (radius of convergence).

Consider (a). In this case we know that the taylor series at $x=-1$ equals $\sum_{n=0}^{\infty}\frac{(-1)^n(-1)^{n+1}}{n+1} = \sum_{n=0}^{\infty}\frac{(-1)^{2n+1}}{n+1} = \sum_{n=0}^{\infty}\frac{-1}{n+1} = -\sum_{n=0}^{\infty}\frac{1}{n+1}$. We know by the way that $\sum_{n=0}^{\infty}\frac{1}{n+1}$ does NOT converge by the integral test. (See section on series for why).

Consider (b). In this case the Taylor series at $x = 1$ equals $\sum_{n=0}^{\infty}\frac{(-1)^n(1)^{n+1}}{n+1} = \sum_{n=0}^{\infty}\frac{(-1)^{n}}{n+1}$. This series DOES converge, by the alternating series test, since 1) $a_{n+1} = \frac{1}{n+2} \leq \frac{1}{n+1} = a_{n}$ and 2) $\lim a_{n} = \lim \frac{1}{n+1} = 0$.

Thus we can conclude that the taylor series of $\ln(x+1)$ about 0 converges on $(-1, 1]$.
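A Python sketch of the endpoint behaviour (the helper name `log1p_taylor` is my own). At $x = 1$ the partial sums settle toward $\ln 2$, within the alternating-series error bound given by the first omitted term, while at $x = -1$ they equal $-(1 + \frac{1}{2} + \dots + \frac{1}{N})$ and drift off to $-\infty$, slowly.

```python
import math

def log1p_taylor(x: float, terms: int) -> float:
    """Partial sum sum_{n=0}^{terms-1} (-1)^n x^(n+1) / (n+1) of the Taylor series of ln(1 + x)."""
    total = 0.0
    power = x  # x^(n+1) for the current n
    for n in range(terms):
        total += (-1) ** n * power / (n + 1)
        power *= x
    return total

for terms in (10, 100, 1000, 10_000):
    print(terms, log1p_taylor(1.0, terms), log1p_taylor(-1.0, terms))
print("ln 2 =", math.log(2))
```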

Section 7: Integrals

From Math 1A, we have all learned that the (kind-of and practical) antonym for differentiation is integration. In this case, we have also learned that integrals are really just a sum of many rectangles. In this subpart of analysis, we formalize and generalize this intuition even further.

New vocabulary:

Partition of $[a, b]$: denoted by $P$, a finite, increasing sequence of points in the interval that includes $a$ and $b$.

Riemann Integrals

We define the Riemann integral as $\int_{a}^{b}f(x)dx = \lim\sum_{i=0}^{n}f(s_i)\triangle x_i$, where the limit is taken as the mesh $\max_i \triangle x_i$ of the partition tends to $0$. Here $x_0, x_1, … ,x_n, x_{n+1}$ are the points of a partition $P$ of $[a, b]$ with $x_0 = a < x_1 < \dots < x_{n+1} = b$, $\triangle x_i = x_{i+1} - x_{i}$ is the length of the subinterval between consecutive points, and $s_i$ is any point in $[x_{i}, x_{i+1}]$.

The upper Riemann sum is defined to be $U(f, P) = \sum_{i=0}^{n}\left(\sup_{x \in [x_i, x_{i+1}]}f(x)\right)\triangle x_i$. Similarly, the lower Riemann sum is defined to be $L(f, P) = \sum_{i=0}^{n}\left(\inf_{x \in [x_i, x_{i+1}]}f(x)\right)\triangle x_i$.

A bounded function $f$ is said to be Riemann integrable on $[a, b]$ iff the “upper integral” $U(f) = \inf U(f, P)$ equals the “lower integral” $L(f) = \sup L(f, P)$, where the inf and sup are taken over all possible partitions $P$ of $[a, b]$. In that case $\inf U(f, P) = \sup L(f, P) = \int_{a}^{b}f(x)dx$.
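As an illustration (my own, not from the lectures), here is a Python sketch of upper and lower sums for $f(x) = x^2$ on $[0, 1]$ with uniform partitions into $n$ subintervals, using exact fractions. It assumes $f$ is increasing on $[a, b]$, so on each subinterval the sup is the value at the right endpoint and the inf is the value at the left endpoint. For $x^2$ on $[0, 1]$ this gives $U(f, P) - L(f, P) = \frac{f(1) - f(0)}{n} = \frac{1}{n}$, and both sums squeeze $\int_0^1 x^2 dx = \frac{1}{3}$.

```python
from fractions import Fraction

def darboux_sums(f, a, b, n):
    """Return (U(f, P), L(f, P)) for the uniform partition of [a, b] into n subintervals.

    Assumes f is increasing on [a, b]: on [x_i, x_{i+1}] the sup is f(x_{i+1})
    and the inf is f(x_i)."""
    xs = [a + (b - a) * Fraction(i, n) for i in range(n + 1)]
    upper = sum(f(xs[i + 1]) * (xs[i + 1] - xs[i]) for i in range(n))
    lower = sum(f(xs[i]) * (xs[i + 1] - xs[i]) for i in range(n))
    return upper, lower

def square(x):
    return x * x

for n in (1, 10, 100, 1000):
    upper, lower = darboux_sums(square, 0, 1, n)
    print(n, float(lower), float(upper), upper - lower)
```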

This seems pretty basic, but integrals can be generalized and studied even further through the Stieltjes integral. There we use a $d \alpha$.

Stieltjes Integral

Let $\alpha$ be a monotonically increasing function. We denote our Stieltjes integral over $[a, b]$ through the following.

$\int f d\alpha$.

Like the Riemann integral, we utilize partitions and sums. Given a partition $P = \{x_0=a, x_1, x_2, … , x_{n+1} = b\}$ of $[a, b]$, we define:

$U(f, P, \alpha) = \sum_{i=1}^{n+1} (\sup_{x \in [x_{i-1}, x_i]}f(x))\triangle\alpha_i = \sum_{i=1}^{n+1} (\sup_{x \in [x_{i-1}, x_i]}f(x)) (\alpha(x_{i}) - \alpha(x_{i-1}))$.

$L(f, P, \alpha) = \sum_{i=1}^{n+1} (\inf_{x \in [x_{i-1}, x_i]}f(x))\triangle\alpha_i = \sum_{i=1}^{n+1} (\inf_{x \in [x_{i-1}, x_i]}f(x)) (\alpha(x_{i}) - \alpha(x_{i-1}))$.

$\inf U(f, P, \alpha) = U(f, \alpha) = $ “Upper integral”

$\sup L(f, P, \alpha) = L(f, \alpha) = $ “Lower integral”

A bounded function $f$ is Stieltjes integrable with respect to $\alpha$ on $[a, b]$ (denoted by $f \in R(\alpha)$) iff $U(f, \alpha) = L(f, \alpha)$ for $[a, b]$.

Remarks:

Do NOT confuse the Stieltjes integral with u-substitution (change of variables), which you learned in Math 1B.

One can think of the Riemann integral as the special case of the Stieltjes integral with $\alpha(x) = x$.

E.g. Consider $\alpha(x) = H(x - 1/2)$, where $H$ is the Heaviside step function (here taking $H(t) = 1$ for $t \geq 0$ and $H(t) = 0$ for $t < 0$), and consider $f(x) = x^2$. We may want to compute the integral over $[-5, 5]$ with respect to this $\alpha$, i.e. $\int_{x = -5}^{x = 5}f(x) d\alpha$. Initially, one may be tempted to answer that this is infinite. However, notice that for any partition of $[-5, 5]$, if a subinterval $[x_i, x_{i+1}]$ contains $1/2$ and some point less than $1/2$ (i.e. $x_i < 1/2 \leq x_{i+1}$), then $\triangle\alpha = \alpha(x_{i+1}) - \alpha(x_{i}) = H(x_{i+1}-1/2) - H(x_{i}-1/2) = 1$ by definition of the Heaviside function. For all other subintervals, $\triangle\alpha = 0$. Letting $I = [x_{m}, x_{m+1}]$ be the unique subinterval with $x_m < 1/2 \leq x_{m+1}$, we can say that $U(f, P, \alpha) = \sup_{x \in I}f(x) \cdot 1$ and $L(f, P, \alpha) = \inf_{x \in I}f(x) \cdot 1$. Since $f$ is continuous at $1/2$, both $\sup_{x \in I}f(x)$ and $\inf_{x \in I}f(x)$ can be made as close to $f(1/2)$ as we like by refining $P$ so that $I$ is short. Hence $U(f, \alpha) = \inf_{P \in \mbox{partition of [-5, 5]}}U(f, P, \alpha)$ and $L(f, \alpha) = \sup_{P \in \mbox{partition of [-5, 5]}}L(f, P, \alpha)$ satisfy $U(f, \alpha) = L(f, \alpha) = f(1/2)$. We conclude that $\int_{x = -5}^{x = 5}f(x) d\alpha = f(1/2) = 1/4$.
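Here is a Python sketch of the same computation with exact fractions and uniform partitions of $[-5, 5]$ (my own illustration). It uses the convention $H(t) = 1$ for $t \geq 0$, so the jump of $\alpha$ is picked up exactly by the subinterval with $x_i < 1/2 \leq x_{i+1}$, and it hard-codes the sup and inf of $x^2$ on a closed interval. As $n$ grows, $U(f, P, \alpha)$ and $L(f, P, \alpha)$ close in on $f(1/2) = 1/4$.

```python
from fractions import Fraction

HALF = Fraction(1, 2)

def heaviside(t):
    """Step function, using the convention H(t) = 1 for t >= 0 and 0 for t < 0."""
    return 1 if t >= 0 else 0

def alpha(x):
    return heaviside(x - HALF)

def sup_inf_square(lo, hi):
    """sup and inf of f(x) = x^2 over the closed interval [lo, hi]."""
    sup = max(lo * lo, hi * hi)
    inf = 0 if lo <= 0 <= hi else min(lo * lo, hi * hi)
    return sup, inf

def stieltjes_sums(n, a=-5, b=5):
    """(U(f, P, alpha), L(f, P, alpha)) for f(x) = x^2, uniform partition of [a, b] into n pieces."""
    xs = [a + (b - a) * Fraction(i, n) for i in range(n + 1)]
    upper = lower = Fraction(0)
    for lo, hi in zip(xs, xs[1:]):
        d_alpha = alpha(hi) - alpha(lo)  # 1 only when lo < 1/2 <= hi, otherwise 0
        sup, inf = sup_inf_square(lo, hi)
        upper += sup * d_alpha
        lower += inf * d_alpha
    return upper, lower

for n in (10, 100, 1000):
    upper, lower = stieltjes_sums(n)
    print(n, upper, lower)
```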

This is a special case of the Stieltjes integral that is explained in more detail and precision in Theorem 6.15 in Rudin. Further information about this topic can be found in Theorem 6.16 in Rudin.

More Useful Properties

More on Refinements: We define a refinement $Q$ of a partition $P$ as a partition such that $P \subset Q$. A refinement can be thought of as something that gives a finer-grained approximation of the integral of $f$ over $[a, b]$. This is formally stated in the following theorem:

Given Q is a refinement of P, we know $U(f, Q, \alpha) \leq U(f, P, \alpha)$ and $L(f, P, \alpha) \leq L(f, Q, \alpha)$.

Given two partitions $P, Q$ of $[a, b]$, we call $P \bigcup Q$ the common refinement of $P$ and $Q$.
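A small exact-arithmetic check of the refinement theorem, as a Python sketch. The partitions and the choices $f(x) = x^3$, $\alpha(x) = x^2$ on $[0, 1]$ are hypothetical; the code assumes $f$ and $\alpha$ are both increasing, so each sup and inf of $f$ sits at an endpoint of its subinterval.

```python
from fractions import Fraction

def upper_lower(f, alpha, partition):
    """Return (U(f, P, alpha), L(f, P, alpha)) for a sorted list of partition points.

    Simplifying assumption: f is increasing, so on [x_{i-1}, x_i] the sup of f is
    f(x_i) and the inf is f(x_{i-1}); alpha is increasing as in the notes."""
    upper = lower = Fraction(0)
    for left, right in zip(partition, partition[1:]):
        d_alpha = alpha(right) - alpha(left)
        upper += f(right) * d_alpha
        lower += f(left) * d_alpha
    return upper, lower

def common_refinement(p, q):
    """P union Q, as a sorted list of points."""
    return sorted(set(p) | set(q))

def f(x):
    return x ** 3

def alpha(x):
    return x ** 2

P = [Fraction(0), Fraction(1, 2), Fraction(1)]
Q = [Fraction(0), Fraction(1, 3), Fraction(2, 3), Fraction(1)]
R = common_refinement(P, Q)

for name, part in (("P", P), ("Q", Q), ("P u Q", R)):
    U, L = upper_lower(f, alpha, part)
    print(name, U, L)
```

Refining can only lower the upper sum and raise the lower sum, which is why the common refinement sits between both originals.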

More on Proving that a function is integrable with respect to $\alpha$

Cauchy Criterion: If we can prove that $\forall \epsilon > 0, \exists$ Partition $P$ of [a, b] $: U(f, P, \alpha) - L(f, P, \alpha) < \epsilon$, then we can say that $f \in R(\alpha)$ on $[a, b]$.

Continuity: If $f$ is continuous on $[a, b]$, then $f \in R(\alpha)$ (where $\alpha$ is an increasing function).

Composition of a function: Given that $f \in R(\alpha)$ on $[a, b]$ with $m \leq f \leq M$, and $g$ is continuous on $[m, M]$, we know that $g \circ f$ is integrable with respect to $\alpha$ on $[a, b]$.

Addition, Multiplication of integrable functions: Given that $f, g \in R(\alpha)$, we know that $f + g \in R(\alpha)$ and $f \cdot g \in R(\alpha)$.

There indeed is a way to “convert” from an integral with respect to $\alpha$ to a regular, friendly Riemann integral. Given an increasing $\alpha$ such that $\alpha'$ is Riemann integrable on $[a, b]$ and a bounded real function $f$ on $[a, b]$, we know that $f \in R(\alpha)$ iff $f\alpha' \in R$, and in that case $\int_{x=a}^{x=b} f d\alpha = \int_{x=a}^{x=b} f(x)\alpha'(x) dx$. Note that this requires $\alpha$ to be differentiable, so it does not apply to the step function example from the previous section.

E.g. Just so that we don't confuse this with u-substitution, we can try the following easy example. On $[0, 1]$, consider $\alpha(x) = \sin(x)$ (which, by the way, is increasing on that interval) and consider $f(x) = \cos(x)$. Since $\alpha$ is differentiable, $\alpha'$ is Riemann integrable, and $f$ is bounded (and continuous, so $f\alpha'$ is Riemann integrable), we know that $\int_{x=0}^{x = 1}f(x)d\alpha = \int_{x=0}^{x=1}\cos(x)\alpha'(x)dx = \int_{x=0}^{x=1}\cos(x)\cos(x)dx = \int_{x=0}^{x=1}\frac{\cos(2x)+1}{2}dx = [\frac{\frac{1}{2}\sin(2x) + x}{2}]^{x=1}_{x=0} = \frac{\sin(2)}{4}+\frac{1}{2}$.
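A numerical cross-check, sketched in Python: a Riemann-Stieltjes sum $\sum f(s_i)(\alpha(x_{i+1}) - \alpha(x_i))$ with $\alpha = \sin$, $f = \cos$, uniform partitions of $[0, 1]$, and midpoint tags $s_i$ (the tag choice is mine) should approach $\frac{\sin(2)}{4} + \frac{1}{2} \approx 0.727$. Note the sum never uses $\alpha'$; it only uses increments of $\alpha$.

```python
import math

def stieltjes_sum(f, alpha, a: float, b: float, n: int) -> float:
    """Riemann-Stieltjes sum of f with respect to alpha over the uniform partition of
    [a, b] into n pieces, tagging each piece at its midpoint."""
    h = (b - a) / n
    return math.fsum(
        f(a + (i + 0.5) * h) * (alpha(a + (i + 1) * h) - alpha(a + i * h))
        for i in range(n)
    )

exact = math.sin(2) / 4 + 1 / 2
for n in (10, 100, 1000):
    print(n, stieltjes_sum(math.cos, math.sin, 0.0, 1.0, n), exact)
```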

We can also discuss change of variables in the context of the Stieltjes integral. Specifically, given that $\varphi$ is a strictly increasing continuous function mapping $[A, B]$ onto $[a, b]$, $f \in R(\alpha)$ on $[a, b]$, and $\beta(y) = \alpha(\varphi(y)), g(y) = f(\varphi(y))$, we can say that $g \in R(\beta)$ and $\int_{A}^{B}g d\beta = \int_{a}^{b}f d\alpha$. Here it is important to note that u-substitution takes advantage of a special case of this, where $\alpha(x) = x$ and $\varphi' \in R$ on $[A, B]$, giving $\int_{a}^{b}f(x)dx = \int_{A}^{B}f(\varphi(y))\varphi'(y)dy$.

Fundamental Theorem of Calculus

In order to connect derivatives and integrals, mathematicians have established the fundamental theorems of calculus: the first gives an almost obvious statement about the area under the curve of $f$ as a difference of an antiderivative evaluated at two points, and the second gives an interface to convert from the antiderivative back to the original function.

One interesting idea to consider is the relationship between the function $f$ and its antiderivative on $[a, b]$. The second fundamental theorem of calculus (Theorem 6.20 in Rudin) tells us that, given a Riemann integrable $f$ on $[a, b]$, $F(x) = \int_{a}^{x}f(t)dt$ is continuous on $[a, b]$, and if $f$ is continuous at $x_0 \in [a, b]$, then $F$ is differentiable at $x_0$ and $F'(x_0) = f(x_0)$.

E.g. we can consider $f(x) = x^2$ on $[-1, 1]$. We know that $F(x) = \int_{t = -1}^{t = x}f(t)dt = x^3/3 +1/3$. As the theorem would predict, $F$ is definitely continuous and since $f$ is continuous, we know $F$ is differentiable and $F'(x) = f(x) = x^2$, which sounds just about right from the Second Fundamental Theorem of Calculus.
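Both conclusions can be spot-checked numerically. This Python sketch (my own) approximates $F(x) = \int_{-1}^{x} t^2 dt$ with a midpoint Riemann sum, compares it with $x^3/3 + 1/3$, and checks that a central difference quotient of $F$ is close to $f(x) = x^2$. The number of subintervals and the step size are arbitrary choices.

```python
def f(t: float) -> float:
    return t * t

def F_closed(x: float) -> float:
    """F(x) = x^3/3 + 1/3, the closed form from the example."""
    return x ** 3 / 3 + 1 / 3

def F_numeric(x: float, n: int = 10_000) -> float:
    """Midpoint Riemann sum approximating the integral of f from -1 to x."""
    h = (x + 1) / n
    return h * sum(f(-1 + (i + 0.5) * h) for i in range(n))

def central_difference(F, x: float, h: float = 1e-5) -> float:
    """(F(x + h) - F(x - h)) / (2h), an approximation of F'(x)."""
    return (F(x + h) - F(x - h)) / (2 * h)

for x in (-0.5, 0.0, 0.5, 1.0):
    print(x, F_numeric(x), F_closed(x), central_difference(F_closed, x), f(x))
```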

Precisely stated, the fundamental theorem of calculus can be written as follows: given $f \in R$ on $[a, b]$ and a differentiable function $F$ on $[a, b]$ with $F' = f$, we know that $\int_{a}^{b}f(x)dx = F(b) - F(a)$.

This is quite a basic result (I learned this back in sophomore year of high school just to do really fun integrals in a row), but deriving this in class has shown us how intricate mathematics can truly be. This is about as high as we go.

All that is left in this course is some detail about the uniform convergence of integrals and derivatives….

Uniform Convergence of integrals and derivatives

We know that, in general, if $f_n \to f$ uniformly on $[a, b]$ and each $f_n$ is integrable (whether Riemann integrable or with respect to some increasing function $\alpha$), then $f$ is also integrable, and $\int_a^b f d\alpha = \lim_{n \to \infty}\int_a^b f_n d\alpha$.

E.g. Consider the function $f_n(x) = \frac{x^2}{n^2 + 1}$ on $[0, 1]$. We know that $f_n(x)$ is Riemann integrable because it is continuous on $[0, 1]$. Now, consider that $f_n \to f$ where $f(x) = 0$ on $[0, 1]$. We can also prove that $f_n \to f$ uniformly because $\lim_{n \to \infty}\sup_{x \in [0, 1]}|f_n(x) - f(x)| = \lim_{n \to \infty}\sup_{x \in [0, 1]}|\frac{x^2}{n^2+1}| = \lim_{n \to \infty}\frac{1}{n^2+1} = 0$. Therefore, we can consider $f(x) = 0$ (for $x \in [0, 1]$) to be integrable. This makes sense as we can calculate the trivial integral $\int_{0}^{1}f(x)dx = \int_{0}^{1}0dx = 0$.

It is NOT generally true that if $f_n \to f$ uniformly and each $f_n$ is differentiable, then $f$ is also differentiable. HOWEVER, if $\{f_n\}$ is a sequence of functions differentiable on $[a, b]$ such that $f_n' \to g$ uniformly on $[a, b]$ and $\{f_n(x_0)\}$ converges for some $x_0 \in [a, b]$, then $f_n$ converges uniformly on $[a, b]$ to some function $f$, and $f'(x) = g(x)$.

E.g. (Credits to Prof. Sebastian Eterovic's Spring 2020 Math 104 Practice Midterm 2) We know that $f_n(x) = \frac{e^{-nx}}{n}$ does uniformly converge to $0$ on $[0, \infty)$. This is since $\lim_{n \to \infty}\sup_{x \in [0, \infty)}|f_n(x) - 0| = \lim_{n \to \infty}\sup_{x \in [0, \infty)}\frac{e^{-nx}}{n} = \lim_{n \to \infty}\frac{1}{n} = 0$.

However, notice that $f_n'(x) = \frac{-ne^{-nx}}{n} = -e^{-nx}$. Pointwise, $\lim_{n \to \infty}f_n'(x) = g(x) = 0$ for positive $x$, and $\lim_{n \to \infty}f_n'(0) = g(0) = -1$. Now, $\sup_{x \in [0, \infty)}|f_n'(x) - g(x)| = \sup_{x > 0}e^{-nx} = 1$. Therefore $\lim_{n \to \infty}\sup_{x \in [0, \infty)}|f_n'(x) - g(x)| = 1 \neq 0$, so $f_n'$ does not converge uniformly.
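A Python sketch of the two sups over a finite set of sample points (the points are my own choice, so this illustrates rather than proves). $\sup|f_n|$ is attained at $x = 0$ and equals $1/n$, while $|f_n'(x) - g(x)| = e^{-nx}$ at tiny positive $x$ stays close to $1$ for every $n$; here $g(0) = -1$ because $f_n'(0) = -1$ for all $n$.

```python
import math

def f_n(n: int, x: float) -> float:
    return math.exp(-n * x) / n

def df_n(n: int, x: float) -> float:
    return -math.exp(-n * x)

def g(x: float) -> float:
    """Pointwise limit of f_n'(x): -1 at x = 0 and 0 for x > 0."""
    return -1.0 if x == 0 else 0.0

# Sample points: 0 and 10^0, 10^-1, ..., 10^-12 (illustrative, not exhaustive)
points = [0.0] + [10.0 ** -k for k in range(13)]

for n in (1, 10, 100, 1000):
    sup_f = max(abs(f_n(n, x)) for x in points)
    sup_df = max(abs(df_n(n, x) - g(x)) for x in points)
    print(f"n = {n}: sup|f_n| ~ {sup_f:.4g}, sup|f_n' - g| ~ {sup_df:.4f}")
```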

The moral of the story here is that just because $f_n \to f$ uniformly and $f_n$ is differentiable over the domain of $f$, we cannot say that $f_n' \to g$ uniformly for some function g.

Now this concludes my review notes for Math 104

Sources:

- Elementary Analysis by Ross

- Principles of Mathematical Analysis by Walter Rudin

- A Problem Book in Real Analysis by Aksoy, Khamsi

- What Does Compactness Really Mean? by Evelyn Lamb (Scientific American)

- Past exams written by Peng Zhou, Ian Charlesworth, Sebastian Eterovic, Chris Rycroft (UC Berkeley)

- Wikipedia