
2020-01-24, Friday

What is a tensor?

If you say a vector is a list of numbers, $v=(v^1, \cdots, v^n)$, indexed by $i=1, \cdots, n$, then a (rank 2) tensor is like a square matrix, $T^{ij}$, where $i, j = 1, \cdots, n$.

But there is something more to it.

How do a vector's coefficients change as we change basis?

As we learned last time, a vector is a list of numbers $v^i$, together with a specification of a basis, $e_i$, and we should write $$ v = \sum_{i=1}^n v^i e_i. $$

In this way, the change-of-basis formula looks easy. Say you have another basis, called $\gdef\te{\tilde e} \te_i$. We can express each of the new basis vectors $\te_i$ in terms of the old basis, $$ \te_i = \sum_{j} A_{i}^{j} e_j, $$ and the old basis vectors in terms of the new basis, $$ e_i = \sum_j B_i^j \te_j. $$
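The two matrices are not independent of each other. Substituting the second formula into the first gives
$$ \te_i = \sum_j A_i^j e_j = \sum_j A_i^j \Big( \sum_k B_j^k \te_k \Big) = \sum_k \Big( \sum_j A_i^j B_j^k \Big) \te_k, $$
and since the $\te_k$ form a basis, $\sum_j A_i^j B_j^k = \delta_i^k$, where $\delta_i^k$ is $1$ if $i = k$ and $0$ otherwise. In other words, if we arrange $A_i^j$ and $B_i^j$ into matrices with row index $i$ and column index $j$, then $B = A^{-1}$.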

Hence, we can write the vector $v$ as a linear combination of the new basis vectors: $$ v = \sum_{i=1}^n v^i e_i = \sum_{i=1}^n v^i (\sum_j B_{i}^j \te_j) = \sum_j (\sum_i v^i B_i^j ) \te_j. $$ Thus, if we define $\gdef\tv{\tilde v} \tv^j = \sum_i v^i B_{i}^j$, we get $$ v = \sum_j \tv^j \te_j. $$
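A minimal sketch of this computation in Python, with made-up data: $V=\R^2$, the old basis $e_i$ is the standard basis, and `A`, `B` are a hypothetical pair of mutually inverse $2\times 2$ matrices (row $i$ holds $A_i^j$ or $B_i^j$; Python indices start at 0). It computes $\tv^j = \sum_i v^i B_i^j$ and checks that $\sum_j \tv^j \te_j$ gives back the same vector.

```python
# Made-up example data: V = R^2, old basis e_0, e_1 = standard basis.
A = [[2, 1],
     [1, 1]]    # row i holds A_i^j, so te_i = sum_j A_i^j e_j
B = [[1, -1],
     [-1, 2]]   # row i holds B_i^j, so e_i = sum_j B_i^j te_j (B is the inverse of A)
n = 2

e = [[1, 0], [0, 1]]
# Standard coordinates of the new basis vectors te_i = sum_j A_i^j e_j.
te = [[sum(A[i][j] * e[j][k] for j in range(n)) for k in range(n)] for i in range(n)]

def new_coefficients(v, B):
    """tv^j = sum_i v^i B_i^j."""
    return [sum(v[i] * B[i][j] for i in range(n)) for j in range(n)]

def combine(coeffs, basis):
    """Standard coordinates of sum_i coeffs[i] * basis[i]."""
    return [sum(c * b[k] for c, b in zip(coeffs, basis)) for k in range(n)]

v = [3, 5]                     # coefficients v^i in the old basis
tv = new_coefficients(v, B)    # coefficients in the new basis: [-2, 7]
assert combine(v, e) == combine(tv, te)   # same vector, two descriptions
```

Note that the new basis vectors are built from `A`, while the coefficients are transformed with `B`.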

So, how do a tensor's coefficients change as we change basis?

In the same spirit, we should write a tensor by specifying the basis $$\tag{*} T = \sum_{i,j} T^{ij} \, e_i \otimes e_j $$

Wait a sec, what is $e_i \otimes e_j$ (the $\otimes$ is read as 'tensor')? I guess I need to tell you the following general story then.

The $\otimes$ construction

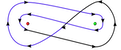

Let $V$ and $W$ be two vector spaces over $\R$. We will form another vector space, denoted $V \otimes W$, whose elements are finite sums of terms of the form $v \otimes w$, where $v \in V$ and $w \in W$. A general element in $V \otimes W$ looks like $$ v_1 \otimes w_1 + \cdots + v_N \otimes w_N $$ for some finite number $N$. These sums are subject to the following relations: $$ (c v) \otimes w = v \otimes (c w) = c\,(v \otimes w), \quad v \in V, w \in W, c \in \R, $$ $$ (v_1 + v_2) \otimes (w_1+w_2) = v_1 \otimes w_1 + v_1 \otimes w_2 + v_2 \otimes w_1 + v_2 \otimes w_2, $$ where $v_1,v_2 \in V$ and $w_1,w_2 \in W$ (here $v_1,v_2$ are vectors, not coordinates). The second rule is called the distribution rule.
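A numerical sanity check (not a proof): assuming we record an element of $V \otimes W$ by its array of coefficients on $e_i \otimes d_j$ (the basis from the lemma below), $v \otimes w$ becomes the outer product of the coordinate vectors, and both rules turn into identities about arrays. The spaces $V=\R^2$, $W=\R^3$ and all vectors here are made-up examples.

```python
# Assumed representation: V = R^2, W = R^3 with standard bases; an element of
# V ⊗ W is stored as its 2 x 3 array of coefficients on e_i ⊗ d_j.

def outer(v, w):
    """Coefficient array of v ⊗ w: entry [i][j] is v^i * w^j."""
    return [[vi * wj for wj in w] for vi in v]

def vec_add(v1, v2):
    return [a + b for a, b in zip(v1, v2)]

def vec_scale(c, v):
    return [c * a for a in v]

def arr_add(*arrays):
    """Entrywise sum of equally shaped 2D arrays."""
    return [[sum(entries) for entries in zip(*rows)] for rows in zip(*arrays)]

def arr_scale(c, X):
    return [[c * x for x in row] for row in X]

v1, v2 = [1, 2], [0, -3]
w1, w2 = [4, 0, 1], [2, 5, -1]
c = 7

# Scalar rule: (c v) ⊗ w = v ⊗ (c w) = c (v ⊗ w)
assert outer(vec_scale(c, v1), w1) == outer(v1, vec_scale(c, w1)) == arr_scale(c, outer(v1, w1))

# Distribution rule
lhs = outer(vec_add(v1, v2), vec_add(w1, w2))
rhs = arr_add(outer(v1, w1), outer(v1, w2), outer(v2, w1), outer(v2, w2))
assert lhs == rhs
```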

Lemma: If $V$ has a basis $\{e_1, \cdots, e_n\}$ and $W$ has a basis $\{d_1, \cdots, d_m\}$, then $$ e_i \otimes d_j, \quad i=1,\cdots, n, \; j = 1, \cdots, m$$ is a basis for $V \otimes W$.

Proof: We show that every element of $V \otimes W$ can be written as a finite linear combination of the $e_i \otimes d_j$, i.e. that they span $V \otimes W$. (That they are also linearly independent requires a more careful construction of $V \otimes W$, which we take for granted here.) A general element in $V \otimes W$ looks like $$ v_1 \otimes w_1 + \cdots + v_N \otimes w_N $$ for some finite number $N$. If we can decompose each term in this sum as a linear combination of the $e_i \otimes d_j$, then we are done. So it suffices to decompose a single element $v \otimes w \in V \otimes W$.

Suppose $v = \sum_i a^i e_i$ and $w = \sum_j b^j d_j$. Then $$ v \otimes w = (\sum_i a^i e_i) \otimes (\sum_j b^j d_j) = \sum_{i=1}^n \sum_{j=1}^m a^i b^j \, e_i \otimes d_j, $$ where we used the distribution rule (extended to finite sums) and moved the scalars $a^i b^j$ in front of $e_i \otimes d_j$. So $v \otimes w$ is indeed a linear combination of the $e_i \otimes d_j$, which finishes the proof. $\square$
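The proof is effectively an algorithm: each term $v_k \otimes w_k$ of a general element contributes $a_k^i b_k^j$ to the coefficient on $e_i \otimes d_j$. A minimal sketch, assuming $V=\R^2$ and $W=\R^3$ with their standard bases (so coordinates equal the coefficients $a^i$, $b^j$); the helper name `coefficients` and the example sums are made up for illustration.

```python
# Assumed setting: V = R^2 with basis e1, e2 and W = R^3, all in standard coordinates.

def coefficients(terms):
    """Coefficients C[i][j] on e_i ⊗ d_j of v_1 ⊗ w_1 + ... + v_N ⊗ w_N.

    Each term v ⊗ w contributes a^i b^j to C[i][j], exactly as in the proof.
    """
    n, m = len(terms[0][0]), len(terms[0][1])
    C = [[0] * m for _ in range(n)]
    for v, w in terms:
        for i in range(n):
            for j in range(m):
                C[i][j] += v[i] * w[j]
    return C

e1, e2 = [1, 0], [0, 1]
d1, d2 = [1, 0, 0], [0, 1, 0]   # two of the three basis vectors of W

# (e1 + e2) ⊗ d1 + e2 ⊗ (d2 - d1) looks different from e1 ⊗ d1 + e2 ⊗ d2 ...
first = coefficients([([1, 1], d1), (e2, [-1, 1, 0])])
second = coefficients([(e1, d1), (e2, d2)])
# ... but both have the same coefficients, so they are the same element of V ⊗ W.
assert first == second == [[1, 0, 0], [0, 1, 0]]
```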

So, how do a tensor's coefficients change as we change basis?

How should we interpret Eq. $(*)$, then?

$T$ is an element of $V \otimes V$, and this equation $$ T = \sum_{i,j} T^{ij} \, e_i \otimes e_j $$ decomposes $T$ as a linear combination of the basis vectors $e_i \otimes e_j$ of $V \otimes V$, with coefficients $T^{ij}$.

If we change basis, say using the new basis $\te_i$ as above, then we have a new basis for $V \otimes V$ $$ \te_i \otimes \te_j = (\sum_l A_i^l e_l) \otimes (\sum_k A_j^k e_k) = \sum_{l,k} A_i^l A_j^k e_l \otimes e_k $$ Similarly $$ e_i \otimes e_j = \sum_{k,l} B_i^l B_j^k \te_l \otimes \te_k $$
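A quick numerical check of both expansions, under assumed data: $V=\R^2$ with the standard basis as the old basis $e_i$, and a hypothetical pair of mutually inverse matrices `A`, `B` (row $i$ holds $A_i^j$ or $B_i^j$; Python indices start at 0). Elements of $V \otimes V$ are stored as $2\times 2$ coefficient arrays on $e_l \otimes e_k$, so $x \otimes y$ is the outer product of coordinate vectors.

```python
# Made-up data: A and B are inverse to each other, old basis = standard basis of R^2.
A = [[2, 1], [1, 1]]
B = [[1, -1], [-1, 2]]
n = 2
e = [[1, 0], [0, 1]]
te = [[sum(A[i][j] * e[j][k] for j in range(n)) for k in range(n)] for i in range(n)]

def outer(x, y):
    """Coefficient array of x ⊗ y on e_l ⊗ e_k."""
    return [[a * b for b in y] for a in x]

def lin_comb(coeffs, tensors):
    """Sum over keys (l, k) of coeffs[(l, k)] * tensors[(l, k)], all n x n arrays."""
    out = [[0] * n for _ in range(n)]
    for key, c in coeffs.items():
        for r in range(n):
            for s in range(n):
                out[r][s] += c * tensors[key][r][s]
    return out

old_basis = {(l, k): outer(e[l], e[k]) for l in range(n) for k in range(n)}
new_basis = {(l, k): outer(te[l], te[k]) for l in range(n) for k in range(n)}

for i in range(n):
    for j in range(n):
        # te_i ⊗ te_j = sum_{l,k} A_i^l A_j^k e_l ⊗ e_k
        coeffs = {(l, k): A[i][l] * A[j][k] for l in range(n) for k in range(n)}
        assert new_basis[(i, j)] == lin_comb(coeffs, old_basis)
        # e_i ⊗ e_j = sum_{l,k} B_i^l B_j^k te_l ⊗ te_k
        coeffs = {(l, k): B[i][l] * B[j][k] for l in range(n) for k in range(n)}
        assert old_basis[(i, j)] == lin_comb(coeffs, new_basis)
print("both expansions check out")
```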

In the new basis, we have $$ \gdef\tT{\tilde T} T = \sum_{ijkl} T^{ij} B_i^l B_j^k \, \te_l \otimes \te_k = \sum_{kl} \tT^{lk} \te_l \otimes \te_k $$ where $$ \tT^{lk} = \sum_{ij} T^{ij} B_i^l B_j^k. $$
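The same kind of check for a rank 2 tensor, again with made-up numbers: $\tT^{lk} = \sum_{ij} T^{ij} B_i^l B_j^k$ is computed from a hypothetical $T$, and expanding $\sum_{lk} \tT^{lk}\, \te_l \otimes \te_k$ back in the old basis, using $\te_l \otimes \te_k = \sum_{ij} A_l^i A_k^j\, e_i \otimes e_j$, should recover $T$. As before, `A` and `B` are an assumed pair of mutually inverse matrices.

```python
# Made-up data: A and B are inverse to each other (row i holds A_i^j or B_i^j).
A = [[2, 1], [1, 1]]
B = [[1, -1], [-1, 2]]
n = 2

def transform(T, B):
    """New coefficients tT^{lk} = sum_{i,j} T^{ij} B_i^l B_j^k."""
    return [[sum(T[i][j] * B[i][l] * B[j][k] for i in range(n) for j in range(n))
             for k in range(n)] for l in range(n)]

def to_old_basis(tT, A):
    """Coefficients on e_i ⊗ e_j of sum_{l,k} tT^{lk} te_l ⊗ te_k,
    using te_l ⊗ te_k = sum_{i,j} A_l^i A_k^j e_i ⊗ e_j."""
    return [[sum(tT[l][k] * A[l][i] * A[k][j] for l in range(n) for k in range(n))
             for j in range(n)] for i in range(n)]

T = [[1, 2], [3, 4]]          # hypothetical coefficients T^{ij} in the old basis
tT = transform(T, B)          # coefficients in the new basis
assert to_old_basis(tT, A) == T   # same tensor, two descriptions
```

In matrix language, with $B$ the matrix whose $(i, l)$ entry is $B_i^l$, the formula reads $\tT = B^{\top} T B$, while a vector's coefficients (as a column) transform as $\tv = B^{\top} v$.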

Einstein notation

It means that whenever you see a pair of repeated indices, you sum over them; this is called “contracting the repeated indices”. For example, instead of writing $$ v = \sum_{i=1}^n v^i e_i $$ we can omit the summation sign and write $$ v = v^i e_i. $$
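A toy implementation of the summation convention: the hypothetical helper `einsum` below takes a subscript string such as `"ij,il,jk->lk"`, sums over every index letter that does not appear after `->`, and works on nested Python lists. NumPy's `np.einsum` accepts the same style of subscript string; this sketch does not try to match its other features, and it ignores the upper/lower index distinction.

```python
from itertools import product

def _shape(array, rank):
    """Lengths of the first `rank` nesting levels of a nested list."""
    dims = []
    for _ in range(rank):
        dims.append(len(array))
        array = array[0]
    return dims

def _entry(array, idx):
    """array[i0][i1]... for the indices in idx."""
    for i in idx:
        array = array[i]
    return array

def _build(dims, fill, prefix=()):
    """Nested list of shape dims whose entry at index tuple t is fill(t)."""
    if not dims:
        return fill(prefix)
    return [_build(dims[1:], fill, prefix + (i,)) for i in range(dims[0])]

def einsum(spec, *arrays):
    """Toy Einstein summation: sum over every index letter not listed after '->'."""
    inputs, output = spec.replace(" ", "").split("->")
    subscripts = inputs.split(",")
    sizes = {}
    for subs, arr in zip(subscripts, arrays):
        sizes.update(zip(subs, _shape(arr, len(subs))))
    summed = [c for c in sizes if c not in output]

    def fill(out_idx):
        total = 0
        for sum_idx in product(*(range(sizes[c]) for c in summed)):
            value = dict(zip(output, out_idx))
            value.update(zip(summed, sum_idx))
            term = 1
            for subs, arr in zip(subscripts, arrays):
                term *= _entry(arr, (value[c] for c in subs))
            total += term
        return total

    return _build([sizes[c] for c in output], fill)

# Made-up data, same conventions as the change-of-basis formulas above.
v = [3, 5]
T = [[1, 2], [3, 4]]
B = [[1, -1], [-1, 2]]

tv = einsum("i,ij->j", v, B)          # tv^j = v^i B_i^j
tT = einsum("ij,il,jk->lk", T, B, B)  # tT^{lk} = T^{ij} B_i^l B_j^k
```

The subscript strings are the index patterns of the formulas with the summation signs dropped, which is exactly what the convention does on paper.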

It is often used in the physics literature, since it makes long computations much shorter to write.

My notation differs from Boas's book because I use both upper and lower indices, and the Einstein contraction is only between one upper and one lower index.

Alternative definition of a tensor product

For finite-dimensional $V$ and $W$, one can define $V \otimes W$ as the space of bilinear functions on $V^* \times W^*$ (see the next lecture about dual spaces). Recall that the space of linear functions on $V^*$ is just $V$ itself; this definition is a generalization of that fact.
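A small sketch of this identification, assuming finite-dimensional spaces with chosen bases and describing a dual vector $\alpha \in V^*$ by its values $\alpha_i = \alpha(e_i)$ on the basis (the dual-space picture of the next lecture). The coefficients $T^{ij}$ then define the bilinear function $(\alpha, \beta) \mapsto \sum_{ij} T^{ij} \alpha_i \beta_j$, and for $T = v \otimes w$ this is $\alpha(v)\, \beta(w)$. All helper names and numbers are made up for illustration.

```python
def pair(alpha, v):
    """alpha(v) = sum_i alpha_i v^i, with alpha given by its components alpha_i."""
    return sum(a * x for a, x in zip(alpha, v))

def as_bilinear(T):
    """The function (alpha, beta) -> sum_{i,j} T^{ij} alpha_i beta_j on V* x W*."""
    def f(alpha, beta):
        return sum(T[i][j] * alpha[i] * beta[j]
                   for i in range(len(T)) for j in range(len(T[0])))
    return f

v, w = [1, 2], [3, 0, -1]                 # v in V = R^2, w in W = R^3
vw = [[a * b for b in w] for a in v]      # coefficients of v ⊗ w
f = as_bilinear(vw)

alpha, alpha2, beta = [4, -1], [0, 3], [2, 5, 1]
# v ⊗ w, seen as a function on V* x W*, is (alpha, beta) -> alpha(v) * beta(w).
assert f(alpha, beta) == pair(alpha, v) * pair(beta, w)
# Linearity in the first slot (the second slot works the same way).
alpha_sum = [a + b for a, b in zip(alpha, alpha2)]
assert f(alpha_sum, beta) == f(alpha, beta) + f(alpha2, beta)
```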