The computer model represented an enormous change in the way we thought about memory. It completely refocused memory research. Instead of looking at retention by an undifferentiated memory system, cognitive psychologists began to examine the properties of different storage structures. For a while, most of this research was focused on the sensory registers and primary memory.

At the lowest end of the multistore model of memory lie the sensory registers -- one for each sensory modality: the icon, the echo, and other analogous sensory memories, in which information arriving from the sensory surfaces is held for a brief time before being copied to primary memory.

We

know

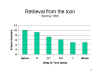

this from experiments employing a paradigm devised by George

Sperling (1960), in

which subjects were briefly presented (for a few hundred

milliseconds) with a

3x4 visual array of letters. After the array disappeared,

there was a

retention interval of only 0-5 seconds, and then the subjects

were asked to

report the contents of the array.


The implication was that the entire array was actually represented in iconic memory: because subjects could accurately report the contents of any randomly selected row, the contents of all the rows must have been available. However, in the whole-report condition, subjects could not extract all the information in the array before it rapidly decayed.
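The inference behind the partial-report method can be written as a simple scaling rule. This sketch makes two assumptions. First, it takes the 3x4 array described above (3 rows of 4 letters, 12 letters in all). Second, it assumes the cued row is chosen at random, so that accuracy on it is representative of every row. The symbol $r$ is a hypothetical label for the number of letters correctly reported from the cued row.

$$
\hat{N}_{\text{available}} = \frac{r}{4} \times 12 = 3r
$$

Consider a hypothetical subject who reports 3 of the 4 cued letters. The estimate for that subject is $3 \times 3 = 9$ of the 12 letters available, even if the same subject writes down fewer letters than that under whole report. The gap between the two figures is the information that was present in the icon but decayed before it could be reported.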

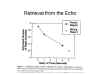

A similar experiment was performed in the auditory domain -- the echo -- by Darwin, Turvey, and Crowder (1972). In this case, the subjects were presented with 3 lists of 3 items each, for a total of 9 items. The lists were delivered over stereo headphones in such a way that each list was perceived as coming from a different part of space -- left, middle, and right. Performance in the whole-report and partial-report conditions, with the partial reports cued visually, paralleled that obtained by Sperling.


Apparently, the function of the sensory registers is to hold briefly presented stimulus information long enough to allow attention to be directed to it. In this way, the sensory registers exemplify an important function of memory, which is to free behavior from control by whatever stimulus happens to be present in the environment (because, in this case, the stimulus is no longer present).

This is all very well and good, and the Sperling experiment stimulated several years' worth of research activity on the part of a number of investigators. But it's not clear how useful the sensory registers are. In the real world, stimuli usually remain present in the environment for longer than just a few hundred milliseconds -- and this ecological fact may obviate any need for the sensory registers. As Ralph Haber once put it, in a famous paper, iconic memory may only be useful for reading at night in a lightning storm!

Still, the sensory registers are memory storage structures -- the point where sensory information first makes contact with the cognitive system. And they do give an organism the opportunity to react to very brief events.

At the highest end of the multistore model is a long-term store or long-term memory, also known as secondary memory -- a term that, as noted, was adopted from William James. For our purposes, these three terms are interchangeable. In theory, secondary memory is the permanent repository of all stored knowledge, both declarative and procedural. And, also in theory, the representations in secondary memory reflect considerable processing and transformation. Atkinson and Shiffrin proposed that the capacity of secondary memory was essentially unlimited. Information might be lost from the sensory registers or from primary memory through decay or displacement, but there is essentially no forgetting from secondary memory. Once encoded, memories remain available in storage, and "forgetting" is a failure to gain access to them through retrieval processes.

Primary memory, as conceived in the multistore model, is a short-term store located between the sensory registers and secondary memory. For our purposes, we may use the terms primary memory, short-term memory, and short-term store interchangeably.

In the multistore model, information in the sensory registers is not simply subject to attentional selection; it is subject to some actual information processing as well.

Here is where the dotted arrow in A&S's diagram comes in. In the theory, knowledge of meaningful patterns is stored in secondary memory. But pattern recognition requires direct contact between the sensory registers and secondary memory. In the Atkinson & Shiffrin model, both feature detection and pattern recognition are unconscious -- precisely because they skirt primary memory, which is the (implied) locus of consciousness in the modal model.

In any event, information in primary memory is subject to further, more extensive processing, as it makes further contact with information stored in, and retrieved from, secondary memory.

In the original theory, information was represented in primary memory in acoustic form. An example is the pronunciation of the letters, numbers, and words in a display or list studied by the subject. Put another way, the representation is the name of an item, or a description of what the name sounds like.

Primary memory has limited capacity, as reflected in George Miller's famous "magical number 7, plus or minus 2". That is, primary memory can hold about 7 items of information.

There's a story here. Miller made the capacity of short-term memory famous, but -- as he acknowledged -- it wasn't his discovery. Credit for that goes to John E. Karlin (1918-2013), a psychologist and human-factors researcher who spent his career at Bell Laboratories, the research arm of AT&T. Prior to the 1940s, telephone exchanges typically began with the first two letters of a familiar word, followed by 5 digits. Examples include the Glenn Miller tune "Pennsylvania 6-5000" (the actual telephone number of the Pennsylvania Hotel, often claimed as the oldest telephone number in New York City) and the film Butterfield 8 (named after an exchange serving the upscale Upper East Side of Manhattan). But the telephone company (there was only one at the time!) worried about running out of phone numbers, and so switched to an all-digit system. This raised the question of how many digits people could hold in memory. Karlin did the study, and determined that the optimal number was 7. (Karlin also determined the optimal layout for the letters and numbers on the rotary, and then the touch-pad telephone -- reversing the conventional placement on a hand calculator or computer keyboard -- but that's another story.)

However, Miller noted that the effective capacity of primary memory can be increased by chunking, or grouping related items together. Thus, the 18-item string

LBJIRTUSALSDFBICIA

would, ordinarily, be too long to keep in primary memory. But if chunked as follows, the whole thing fits very nicely:

LBJ IRT USA LSD FBI CIA.

So, the capacity of primary memory is roughly 7 chunks, not 7 items. If the chunks are big enough -- yes, you can chunk chunks -- primary memory can hold an immense amount of information.

Of course, chunking in primary memory depends a lot on knowledge stored in secondary memory. You have to recognize a string like LBJ as meaningful (as the initials of an American president) before you can chunk it. Similarly, IRT is the acronym for a subway line in New York City. In fact, the whole 18-letter string comes from the chorus of a song, "Initials", from Hair: The American Tribal Love-Rock Musical (lyrics by James Rado & Gerome Ragni). Once you've heard the song, you never forget the letters.
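The dependence of chunking on secondary memory can be sketched in a few lines of Python. `KNOWN_CHUNKS`, `chunk`, and `fits_in_primary_memory` are hypothetical names. The known-chunk set stands in for knowledge retrieved from secondary memory. Greedy longest-match grouping is a simplification chosen for brevity, not a claim about how people actually segment strings. The span of 7 is Miller's figure, ignoring the plus-or-minus 2.

```python
# Hypothetical stand-in for knowledge stored in secondary memory.
KNOWN_CHUNKS = {"LBJ", "IRT", "USA", "LSD", "FBI", "CIA"}
SPAN = 7  # Miller's "magical number 7", ignoring the plus-or-minus 2


def chunk(letters: str, known: set[str]) -> list[str]:
    """Greedily group a letter string into the longest known chunks.

    Letters that do not begin any known chunk are kept as single-letter
    chunks, so without relevant knowledge every letter counts separately.
    """
    longest = max((len(c) for c in known), default=1)
    chunks = []
    i = 0
    while i < len(letters):
        # Try the longest possible chunk first, then shorter ones;
        # a single letter is always accepted as a last resort.
        for size in range(min(longest, len(letters) - i), 0, -1):
            candidate = letters[i:i + size]
            if size == 1 or candidate in known:
                chunks.append(candidate)
                i += size
                break
    return chunks


def fits_in_primary_memory(letters: str, known: set[str]) -> bool:
    """True if the number of chunks is within the assumed span of 7."""
    return len(chunk(letters, known)) <= SPAN


letters = "LBJIRTUSALSDFBICIA"
print(chunk(letters, KNOWN_CHUNKS))             # ['LBJ', 'IRT', 'USA', 'LSD', 'FBI', 'CIA']
print(fits_in_primary_memory(letters, set()))   # False: 18 single letters
```

With the six acronyms known, the 18 letters collapse into 6 chunks and fit within the span. With an empty knowledge set, every letter is its own chunk and the string does not fit.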

The distinction between primary and secondary memory is supported by the serial-position curve, which plots the probability of recalling any individual item as a function of its position in the study list. The serial-position curve is typically bowed, meaning that items at the beginning and the end of the list have a higher probability of recall than items in the middle. The advantage for early items is known as the primacy effect, and the advantage for late items as the recency effect.

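A common interpretation of the primacy and recency effects is that they reflect the operations of two different memory stores. On this view, the primacy effect reflects retrieval from secondary memory. The recency effect reflects retrieval from primary memory, where the last few items are still being held at the time of the test.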

This interpretation is supported by two lines of experimental evidence:

Slowing the pace of list presentation increases the primacy effect, but has no effect on recency. Apparently, increasing the spacing between items gives the subject more time to rehearse each item, and more opportunity to recode items into secondary memory.

Increasing the retention interval, and filling the retention interval with distracting material, reduces the recency effect but has no effect on primacy. The distraction effectively displaces items from primary memory, but has no effect on items already encoded into secondary memory.
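The two-store account of these findings can be made concrete with a small simulation. This sketch is a toy, loosely in the spirit of Atkinson and Shiffrin's rehearsal buffer but not their published model. All of the following are assumptions chosen for illustration:

- the buffer size;
- equal sharing of rehearsals among buffered items;
- first-in-first-out displacement;
- the exponential rule for copying items into secondary memory.

Slowing presentation is modeled as more rehearsals per interval. A filled retention interval is modeled as emptying the buffer before the test.

```python
import math


def serial_position_curve(n_items=12, buffer_size=4, rehearsals_per_interval=2.0,
                          transfer_rate=0.3, distractor=False):
    """Return a predicted probability of recall for each list position.

    Hypothetical assumptions of this toy model:
    - primary memory is a buffer of at most `buffer_size` items, and the
      oldest item is displaced when a new one arrives (first in, first out);
    - in each presentation interval, `rehearsals_per_interval` rehearsals are
      shared equally among the items currently in the buffer;
    - the chance that an item has been copied into secondary memory is
      1 - exp(-transfer_rate * total rehearsals it received);
    - items still in the buffer at test are always recalled, unless a
      distractor task has emptied the buffer.
    """
    buffer = []                    # primary memory: list positions currently held
    rehearsals = [0.0] * n_items   # total rehearsal each item receives
    for item in range(n_items):
        buffer.append(item)
        if len(buffer) > buffer_size:
            buffer.pop(0)          # the oldest item is displaced
        share = rehearsals_per_interval / len(buffer)
        for held in buffer:
            rehearsals[held] += share

    # A filled retention interval empties the buffer before the test.
    in_buffer_at_test = set() if distractor else set(buffer)
    curve = []
    for item in range(n_items):
        p_secondary = 1.0 - math.exp(-transfer_rate * rehearsals[item])
        curve.append(1.0 if item in in_buffer_at_test else p_secondary)
    return curve


quick = serial_position_curve()
delayed = serial_position_curve(distractor=True)
slow = serial_position_curve(rehearsals_per_interval=4.0)
for position, (q, d, s) in enumerate(zip(quick, delayed, slow), start=1):
    print(f"{position:2d}  quick={q:.2f}  delayed={d:.2f}  slow={s:.2f}")
```

With these hypothetical parameters, the quick curve is bowed. The delayed curve keeps the same early positions but loses the recency advantage. The slow curve raises the early positions while the last few items stay at ceiling.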

The distinction between primary and secondary memory is also supported by neuropsychological evidence from brain-damaged patients.

Both the experimental and clinical evidence indicates that primary and secondary memory can be dissociated:

However, the strict separation of primary and secondary memory can also be challenged on empirical grounds. For example, primary memory is sometimes referred to as immediate memory. The idea is that items in primary memory are already in consciousness, and immediately available for various operations. By contrast, items in secondary memory appear to be in a latent state: they must be retrieved and brought into consciousness. But it turns out that items in primary memory also need to be retrieved.

This was shown clearly by a classic memory-scanning experiment by Sternberg (1966). In this experiment, subjects were presented with sets of 1 to 6 digits, which they were asked to hold in primary memory (note that 6 digits is well within the normal memory span). Then, they were presented with a probe digit, and asked to say whether the probe was in the memorized list. This is of course an extremely simple task, and subjects rarely make any errors on it. But it turns out that the search process takes time: the more items there are in the memory set, the longer it takes subjects to say "yes" or "no". Thus, items in primary memory are not immediately available after all. Primary memory must be searched, just like secondary memory.
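Sternberg found that reaction time increased roughly linearly with the size of the memory set, at about the same rate for "yes" and "no" responses. He interpreted this as a serial, exhaustive scan of primary memory. The sketch below implements that interpretation in Python. `scan`, `INTERCEPT_MS`, and `SLOPE_MS` are hypothetical names, and the two timing constants are round illustrative numbers, not Sternberg's estimates.

```python
# Toy model of Sternberg-style memory scanning: serial and exhaustive.
INTERCEPT_MS = 400.0  # hypothetical time to encode the probe and respond
SLOPE_MS = 40.0       # hypothetical time per comparison with a memorized item


def scan(memory_set, probe):
    """Compare the probe with every item in the memory set.

    The scan is exhaustive: it does not stop at a match, so "yes" and "no"
    trials with the same set size get the same predicted time.
    Returns the response and the predicted reaction time in milliseconds.
    """
    comparisons = 0
    found = False
    for item in memory_set:
        comparisons += 1
        if item == probe:
            found = True  # note the match, but keep scanning
    rt_ms = INTERCEPT_MS + SLOPE_MS * comparisons
    return ("yes" if found else "no"), rt_ms


for size in range(1, 7):
    digits = list(range(size))
    print(size, scan(digits, 0), scan(digits, 9))
```

Because the scan never stops early, predicted times for "yes" and "no" trials are identical at each set size. A self-terminating scan, which stops at the first match, would instead predict a shallower slope for "yes" trials.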

As another example, it turns out that there are serial-position curves in retrieval from secondary memory.

Thus, primacy and recency effects do not necessarily reflect retrieval from different memory stores.

What Murdock (1967) called "the modal model of memory" -- the division of memory into three classes of storage structures, and a set of control processes which moved information between them -- had enormous heuristic power. It represented a huge change in the way we thought about memory -- it both exemplified and consolidated the cognitive revolution in the study of memory; and it generated a large number of clever experiments intended to explore the properties of the various storage structures (especially the icon, the echo, and primary memory) and control processes (especially attention). But it also very quickly began to decline.

As noted earlier, the sensory registers are hardly memories at all.

And then there were the doubts raised about the structural distinction between short-term primary memory and long-term secondary memory. Some of these had been raised by Melton (1963), a leading figure in the verbal-learning tradition, even before the modal model had been clearly articulated. But other doubts quickly followed (Murdock, 1972; Craik & Lockhart, 1972; Wickelgren, 1974).

The case against a structural distinction was put forth most strongly by Craik and Lockhart (1972), in their analysis of the capacity of primary memory. Certainly primary memory seems to be capacity limited, as in Miller's "magical number 7". But the precise nature of this limitation is unclear. For example, it is not obvious whether it is a limit on how much can be stored or on how much can be processed at one time.

And then there is what they called the paradox of chunking. Chunking seems to increase the capacity of primary memory, but chunking also relies on knowledge in semantic memory. It goes beyond a mere acoustic representation, and includes the results of meaning analysis, such as the meaning of trigrams like LBJ and IRT. This raises the question of coding differences between primary and secondary memory. Primary memory is supposed to represent information in acoustic form -- or, at least, some kind of physical code. But with chunking, the information is clearly not in a purely acoustic (or other physical) form any longer.

And then there is the function of forgetting from primary memory, famously mapped out by Brown (1958) and Peterson and Peterson (1959) in what has come to be known as the Brown-Peterson paradigm. These investigators presented subjects with a consonant trigram such as BNX. Then, they asked the subject to count backwards by 3s from a three-digit number, such as 417, for an interval of 3 to 18 seconds, and then to report the trigram. They observed extremely rapid forgetting. Without distraction, virtually everyone remembered the three letters (though you do have to wonder about those 5% who didn't!). But after only 18 seconds of distraction, retention dropped to less than 20% -- a figure that Brown and Peterson attributed to retrieval from secondary memory.

The Brown-Peterson function is consistent with the rapid forgetting claimed for primary memory, but there's a problem. To see what the problem is, try the Brown-Peterson experiment on a friend. Give him a consonant trigram, such as BNX, and have him count backwards by 3s from the number 417 for 18 seconds. Then ask him to write down the trigram. He will almost certainly get it right. And if you try it out on your entire dormitory, you might get the occasional instance of forgetting, but nothing like the 80%+ forgetting observed by Brown and Peterson.

But that's not exactly how Brown and Peterson did their experiments. Instead of having only a single trial per subject, and averaging over subjects, they gave each subject many trials, and averaged across trials, and then across subjects. Apparently, the Brown-Peterson function is observed only after subjects have gone through many trials.

To check this, Keppel and Underwood (1962) analyzed the Brown-Peterson function on individual trials, with retention tested after 3, 9, and 18 seconds. On the first trial, there was essentially no forgetting, even after 18 seconds of distraction. On later trials, however, they observed a progressive increase in forgetting. They concluded that the Brown-Peterson function represents the build-up of proactive inhibition (PI).

Apparently, items from earlier trials were interfering with items on later trials. But those items from the early trials are no longer in primary memory, which doesn't have enough capacity to hold them. Thus, the Brown-Peterson experiment, which seemed to confirm rapid forgetting from primary memory, isn't really about primary memory after all, because the items that cause the forgetting aren't being held there.

Interestingly, we can also show release from proactive inhibition by changing the category of the item being memorized. Wickens (1972) gave subjects a series of three trials, with all items drawn from the same category -- e.g., 3 consonants. In this phase of the experiment, he observed a progressive buildup of PI, just as in Keppel and Underwood (1962). On the 4th trial, Wickens shifted the item type for half the subjects -- e.g., from 3 consonants to 3 digits. In this condition, PI disappeared, and recall returned to the level observed on Trial 1. As the 5th and 6th trials continued with the new item type, PI continued to build up again -- again, just as in Keppel and Underwood.

Release from PI shows that the interference effect is controlled by the categorization of the item. But these categories are a matter of secondary memory, which go way beyond any acoustic representation. Forgetting in the Brown-Peterson paradigm is not a function of primary memory; rather, the buildup and release of PI must reflect interference from secondary memory. This phenomenon provides further evidence that the forgetting function determined in the Brown-Peterson paradigm doesn't bear on the nature of primary memory, separate from secondary memory.
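The logic of release from PI can be sketched as bookkeeping over trial categories. `interference_load` is a hypothetical helper. It also relies on a simplifying assumption: items from a different category contribute no interference at all. The text's claim is only that interference depends on how items are categorized.

```python
def interference_load(categories):
    """For each trial, count the earlier trials drawn from the same category.

    A hypothetical index of proactive interference: only same-category
    items are assumed to interfere, so a category shift resets the count.
    """
    seen = {}
    load = []
    for category in categories:
        load.append(seen.get(category, 0))
        seen[category] = seen.get(category, 0) + 1
    return load


# Wickens-style design: three consonant trials, then a shift to digits.
shifted = ["consonants"] * 3 + ["digits"] * 3
control = ["consonants"] * 6
print(interference_load(shifted))  # [0, 1, 2, 0, 1, 2]
print(interference_load(control))  # [0, 1, 2, 3, 4, 5]
```

For the shifted group, the load on trial 4 drops back to its trial-1 value, mirroring the release from PI. For a control group that stays with consonants, the load keeps climbing.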

The general thrust of these kinds of experiments is to cast doubt on the structural distinction between primary (short-term) and secondary (long-term) memory -- which is the sine qua non of the multistore model.

Still, there is something special about primary memory, which is that we can deliberately maintain items in an active state by means of rehearsal.

Baddeley and Hitch (1974) proposed that we rename this memory structure working memory -- because this is where we perform cognitive activities.

According to Baddeley and Hitch, working memory consists of a central executive which controls several slave systems:

The slave systems maintain items in an active state. These are very transient memories: once rehearsal stops, the items disappear from working memory; and newly incoming information will replace old information.
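The two properties attributed to the slave systems here can be sketched as a small Python class. The first is loss once rehearsal stops; the second is displacement of old items by new ones. `SlaveSystem`, its capacity, and its decay rule are hypothetical choices for this sketch, not parameters from Baddeley and Hitch.

```python
from collections import OrderedDict


class SlaveSystem:
    """Hypothetical sketch of one working-memory slave system.

    Items survive only while they are rehearsed: an item left unrehearsed
    for more than `decay_steps` time steps is lost. When the store is full,
    a new item displaces the oldest one. Capacity and decay values are
    illustrative assumptions.
    """

    def __init__(self, capacity=4, decay_steps=2):
        self.capacity = capacity
        self.decay_steps = decay_steps
        self.items = OrderedDict()  # item -> steps since last rehearsal

    def add(self, item):
        """Enter a new item, displacing the oldest one if the store is full."""
        if item in self.items:
            del self.items[item]
        elif len(self.items) >= self.capacity:
            self.items.popitem(last=False)  # displace the oldest item
        self.items[item] = 0

    def tick(self, rehearse=()):
        """Advance one time step, refreshing only the rehearsed items."""
        for item in list(self.items):
            if item in rehearse:
                self.items[item] = 0
            else:
                self.items[item] += 1
                if self.items[item] > self.decay_steps:
                    del self.items[item]  # lost once rehearsal stops

    def contents(self):
        return list(self.items)


store = SlaveSystem(capacity=3, decay_steps=1)
for digit in ["4", "7", "2"]:
    store.add(digit)
store.tick(rehearse={"4", "7", "2"})  # all three are kept active
store.tick(rehearse={"4"})            # only "4" is rehearsed...
store.tick(rehearse={"4"})            # ...so "7" and "2" fade
print(store.contents())               # ['4']
```

Adding three new items to the store above would then push out "4" as well, illustrating how newly incoming information replaces old information.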

So what's the difference between working memory and primary memory? While primary memory was considered an intermediary between the sensory registers and secondary memory, this is not the case for working memory. Baddeley and Hitch propose that information is processed directly into secondary memory, with no need for an intermediate way-station like primary memory. Then, when the cognitive system wants to use stored information, it copies it into working memory.

John Anderson (1983) has proposed an expanded conception of working memory. He considers it to consist of those representations, whether acquired through perception or retrieved from memory, that are currently in a high state of activation. Other representations are also part of working memory:

Baddeley and Hitch's conception of working memory has become extremely popular among cognitive psychologists, and has stimulated cognitive neuroscientists to search for the neural substrates of working memory in the prefrontal cortex.

This page last modified 05/27/2014.