Note: This paper was presented at a symposium, "Scientific Foundations of Clinical Psychology at the Beginning of the 21st Century -- Victories, Setbacks, and Challenges for the Future" sponsored by Division 12 (Clinical Psychology), at the annual meeting of the American Psychological Association, Honolulu, July 31, 2004.

Links to other papers that discuss clinical training issues will be found in the reference section. Appended to this paper are comments on certain of its proposals, and my replies to them.

See also: Kihlstrom, J.F. (2005). What qualifies as evidence of effective practice? Scientific research. In J.C. Norcross, L.E. Beutler, & R.F. Levant (Eds.), Evidence-based practices in mental health: Debate and dialogue on the fundamental questions (pp. 23-31, 43-45). Washington, D.C.: American Psychological Association. Link to prepublication draft.

I greatly appreciate the invitation to join this symposium on the future of scientific clinical psychology. Although I have never been on the core faculty of a clinical training program, I do have clinical training, including an internship, and I have tried through my research and teaching to help build bridges between clinical psychology and the other subfields of the discipline, as well as between clinical psychology as a science and clinical psychology as a profession -- bridges that should run both ways (Kihlstrom & Canter Kihlstrom, 1998).

Let me begin with a little personal history. My own clinical training was in the classic scientist-practitioner model, with a decided emphasis toward science and away from practice. I applied to Penn because I was interested in doing hypnosis research with Martin Orne, and because I was also interested in personality -- I wrote in my personal statement that I wanted to quantify the concepts of existentialist theories of personality, leading Burt Rosner, the chair of Penn's Psychology Department at the time, to tell me that they had accepted me just to see what I looked like -- so I sent my application to the Program of Research Training in Personality and Experimental Psychopathology. I was very happy, the next spring, to get a thick envelope from Philadelphia -- but also dismayed to find that Penn's offer of admission was signed by Julius Wishner as head of the Clinical Training Program. I immediately called Julie, who ran both programs, and told him I didn't want to be in the Clinical Training Program; in that charmingly gruff way that Julie had, he replied "Don't worry: you're not", and hung up the phone. It turned out that Julie was prevaricating a little -- I was in a clinical training program; it just wasn't called that, and at least it wasn't oriented toward preparing students for careers in clinical practice. Nevertheless, the students in that program did everything that clinical students did -- some proseminars, a weekly research seminar, a course on assessment, a course on treatment, a little practicum. It was enough to prepare us for our internships. Having completed our dissertation research before leaving on our internships, we wrote our dissertations at night and on weekends, and then we were out.

It's been said that everybody favors the training model in which they themselves were trained, and I suppose that I'm no exception. For all these years, I have been a staunch advocate of the scientist-practitioner model, and dismissive of such alternatives as the practitioner-scholar model. To be honest with you, I'm not even all that thrilled with the clinical scientist model, which is probably the closest to the version of the scientist-practitioner model implemented at Penn at the time I was there -- not just because the very term "clinical science" strikes me as scientistic (Noam Chomsky once said that you know a science is in trouble when it has to call itself a science; he had political science in mind, but the criticism might apply equally well to cognitive science and neuroscience), but because it's uninformative about what the person actually does: the person isn't a clinical scientist -- he or she is a clinical psychologist. Also, the clinical-scientist model, like the practitioner-scholar model, seems to dig a ditch, rather than build a bridge, between science and practice. However, it now strikes me that the scientist-practitioner model has outlived its usefulness, and creates more problems than it solves. In my view, it is now time to train some students for science, and other students for practice -- even if this training must take place in quite different programs, and even if it must take place in quite different institutions.

The reason for my change of heart is that I have become increasingly convinced that the traditional scientist-practitioner model works to the disadvantage of both types of students. For example, the student training for an academic career in teaching and research must spend precious time preparing for a career of clinical practice that he or she will never pursue, and in which he or she has no particular interest. It's one thing for budding clinical researchers to take a couple of courses in assessment and treatment methods, see a couple of patients during practicum, and then get full-time exposure to "the living material of the field" during their internships before taking up posts in universities and medical centers. It's another thing entirely for students who are really interested in clinical research to spend as much as one or even two thousand hours during their graduate studies acquiring and polishing practical skills to make themselves more attractive in the competition for internship placements.

At Berkeley, for example, students in our "clinical science" program must complete, in addition to a two-semester clinical proseminar (no harm in that), and one or two courses in methods and statistics (everybody does that), two or three courses in assessment, two courses in intervention, and the equivalent of more than six courses of practicum -- not to mention three to four "breadth" courses covering the biological, cognitive-affective, social, and individual bases of behavior. That's a total of 13 to 15 courses -- almost two full years' worth.

This doesn't count an unspecified number of courses on diversity issues, and the requirement that each clinical student take a minor outside clinical. Nor does it take into account the fact that clinical "students... are encouraged to take as many as possible of the major courses and seminars offered by the core Clinical Science Faculty": at last count, there were approximately 15 such courses in the General Catalog. All this while their research-oriented peers in cognitive, social, and developmental psychology, having completed their proseminar and methods requirements in their first year or so of graduate study, are happily working in their advisors' laboratories developing their individual research portfolios, and taking the occasional seminar offered by their advisor or someone in a closely related area of research. Research, including research-related courses and seminars, should have pride of place in the training of these research-oriented students. Instead, both research training and research itself are subordinated to the requirements of training for practice.

Note added 2005: As of 2004-2005, the UCB area in Developmental Psychology has reduced its proseminars from 4 to 2, further increasing the disparity between Clinical Science students and their Developmental counterparts.

Note added 2011: The Clinical Science area subsequently revised its curriculum, easing the course requirements somewhat. As of the 2008-2009 academic year, students are required to take one proseminar course, one or two courses in statistics, two courses in clinical assessment, one course in intervention, two specialty clinics, and four breadth courses -- in addition to attending staff meetings when working in the Psychology Clinic. Diversity, ethnic minority, ethics, and professional issues can now be addressed in the context of these courses. That is the equivalent of 11-12 courses (depending on whether the student takes a second statistics course). This is certainly a reduction from the 13-15 courses specified in the previous requirement, but still well above the norms for the other graduate areas. And while students are no longer "encouraged to take as many as possible of the major courses and seminars offered by the core Clinical Science Faculty", they are still "encouraged to take as many elective courses as their schedules will allow", as well as "additional courses in diversity and ethnic minority issues".

Students heading for careers in clinical practice face a different set of issues. Because they take the same courses as the other graduate students do, they have to sit through "breadth" and methods courses that are expressly intended for students preparing for scholarly careers in various subdisciplines of the field. I don't doubt that budding practitioners would benefit from a concise overview of cognitive psychology (at the very least, such a course might have spared us the excesses of the recovered-memory movement). But that's not what they usually get, because for the most part clinical students take breadth courses that are designed not for them, but rather for graduate students in other subfields of psychology. Instead of a concise overview of the cognitive psychology that every clinician ought to know, they get 15 weeks' worth of lectures comparing prototype and exemplar theories of conceptual structure, or connectionist and rule-based theories of the acquisition of the past tense of verbs. These courses are not listed because they are designed and taught for the purpose of providing budding clinicians -- practitioners or scientists -- with a breadth of exposure to psychology. They are listed simply because they are available.

Something similar happens with the methods and statistics requirement. Again, clinical practitioners-in-training are subjected to exercises in counterbalancing and Latin squares, discussions of the comparative advantages and disadvantages of the Bonferroni and Tukey multiple-range tests, principal components versus principal factor analysis, and how to calculate the proper degrees of freedom in discriminant function analysis. Clinical researchers need this kind of instruction but it is simply lost on those headed for clinical practice -- not because they aren't smart enough, or even because they're not particularly interested (who in their right mind is?), but because once out of graduate school they will never have occasion to apply this knowledge in their entire careers. Just as budding schizophrenia researchers don't have to know how to do psychotherapy, budding psychotherapists do not need to be taught how to do statistics. Instead, they need to be taught how to consume statistics -- and, in particular, to respect statistical evidence as the core of the scientific base of clinical practice.

Part of the problem here, frankly, is the APA accreditation scheme, which continues to be based, at least implicitly, on the scientist-practitioner model. Because it doesn't distinguish between research-oriented and practice-oriented students, it imposes the same requirements on both. The result is that research-oriented students are forced to take courses that they don't need, and practice-oriented students are forced to take courses that aren't appropriate for them. Part of the solution, I think, is for research-oriented, "clinical science" programs to simply drop their accreditation. That will permit them to develop their own curricula, just like their colleagues in cognitive, developmental, and social psychology, free of outside infringements on academic freedom. It will also make them less attractive to applicants who are not really interested in research, but who say they are in order to gain admission to high-prestige programs. Without the pressures of accreditation, clinical research training programs would look more like their counterparts in cognitive, developmental, and social psychology.

Note added 2020: In 2007, an alternative accreditation scheme, known as the Psychological Clinical Science Accreditation System, was established under the auspices of the Association for Psychological Science, the Academy of Clinical Science, and the Council of Graduate Departments of Psychology, intended to better meet the needs of "Clinical Science" programs that were closely oriented to the scientific basis of clinical practice.

After years of maintaining dual accreditation with both APA and PCSAS (thus doubling the expenses associated with accreditation), UC Berkeley took the radical step of terminating its APA accreditation (technically, it will not renew it when its current accreditation expires).

Part of the problem, too, is the changing nature of the clinical internship. When internships were originally proposed, by David Shakow (Shakow, 1938), they were intended to be opportunities for graduate students interested in clinical problems to get out of the classroom, and out of the laboratory, and become acquainted with "the living material of the field". Most of the student's clinical experience was to take place on the internship itself. Actually, I've always thought that every graduate student ought to do some sort of clinical internship, for just this reason: students of visual perception could spend some time in an optometry clinic, and students of memory could spend some time with Alzheimer's patients -- but I digress. But that's not the case anymore, because internships are increasingly construed as sources of revenue rather than vehicles of training, and so pre-doctoral students must devote increasing amounts of time preparing to provide reimbursable services with a minimum of costly supervision. The solution to this problem is for research-oriented programs to develop their own, in-house or "captive" internships, to give their students the kind of broad and deep encounter with "the living material of the field" that is consistent with the research-training goals of their PhD programs.

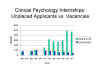

Actually, I have long believed that every clinical psychology program, whether science-oriented or practice-oriented, should have its own in-house or "captive" internship program (Kihlstrom & Canter Kihlstrom, 1998). Such an arrangement would avoid the situation that exists now, and has existed at least since the 1990s, where there are more students graduating from clinical training programs than there are internship slots. For example, data from the Association of Professional Psychology Internship Centers (APPIC) indicate that, from 1986 to 1997, the number of internship applicants unplaced three weeks after Uniform Notification Day increased more than sevenfold, from 65 to 469, while the number of internship vacancies decreased by more than 50%, from 79 to 34.

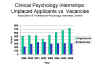

Things improved somewhat after 1998, possibly because of the addition of more unaccredited internship slots, but still, from 1999 through 2004, unmatched applicants outnumbered unfilled vacancies by a ratio of 2:1. In 2004, there were 611 unmatched applicants, and 304 positions remained unfilled.

The trend described in 2004 has continued, and worsened, as documented clearly in the Match Statistics posted annually to the website of the Association of Professional Psychology Internship Centers (APPIC).

The match process is improving, but mostly because applicants from accredited doctoral training programs must settle for unaccredited internships.

If every accredited program had its own internship, every graduate of every accredited program would be guaranteed an accredited internship slot -- more like the match system for medical internships, where the number of slots actually exceeds the number of American graduates. But equally important, from a pedagogical point of view, if every program had its own internship, the pressure on interns to provide reimbursable services would be relieved, and the training goals of internships could be more closely articulated with the training goals -- towards science or towards practice -- of the student's predoctoral program.

As a footnote, let me say that I also believe it is a mistake for clinical training programs to "farm out" practicum experiences to community practitioners, who do not necessarily share the scientific values of the doctoral training program. It's a prescription for disaster when students learn in the classroom that the Rorschach isn't worth the paper it's printed on (Wood, Nezworski, Lilienfeld, & Garb, 2003), and then they go out into the world to score Rorschachs for someone who thinks that they are the epitome of clinical assessment. Medical students do their clerkship rotations in academic health-science centers; similarly, clinical training programs should keep tight control of their students' externship and practicum experiences.

So what would training look like if we separated training for practice from training for science? Frankly, it would look a lot like the training provided in medical schools, which offer quite different curricula for research-oriented students headed for the PhD, and practice-oriented students headed for the MD. Berkeley doesn't have a medical school, but there is a very nice one just across the bay at the University of California, San Francisco, which also offers PhDs in some 17 health-science fields from Biochemistry and Molecular Biology to Sociology, and there is no overlap between the two sets of programs. For example, medical students take an "Essential Core" of 9 interdisciplinary courses, beginning with an 8-week review of basic biological and behavioral science followed by 8-week blocks devoted to the various organ systems, cancer, infection and immunity, and life-span human development. These block courses run parallel to a foundational course in patient care that runs for two years, covering clinical skills, professional issues, and clinical reasoning; this course then leads to a longitudinal clinical experience in the third year and advanced rotations in the fourth. Medical students get some biochemistry, but it is not the same basic biochemistry course taken by biochemistry PhD students; and they get some neuroscience, but not the same basic neuroscience course taken by neuroscience PhD students.

That's essentially the vision I have for the future of training in clinical psychology. Training for clinical research should look more like research training in the rest of psychology, and training for clinical practice should look more like medical school. If the proposal on the practice side looks like a PsyD program, that's intentional. I always thought that the PsyD was a good idea, even if I also thought it was usually a poorly implemented one (Yu et al., 1997). The PsyD recognized that there is an inherent difference between training for science and training for practice. If some PsyD programs were established to enable students (and faculty) to escape from science, and I am sure that they were, the solution is not to abandon the PsyD format, but to reform it so that future generations of practitioners are trained to respect science as the base of practice, and of the status and autonomy of their profession as well -- just as current and future generations of physicians are.

There is no reason that training for science and training for practice cannot proceed on parallel tracks within the same department -- though with different curricular requirements. Schools of public health and social work have no problems with these divided functions -- nor, for that matter, do schools of law and business. At Berkeley we have a School of Optometry that trains both researchers in vision science and professional optometrists, as well as a College of Chemistry that houses separate departments of chemistry and chemical engineering. In both places everybody seems to get along just fine, but the two curricula are very, very different. Housing the two programs under the same institutional roof would allow current and future scientists and practitioners to benefit from contact with each other, but it would require some adjustments: clinical faculty would probably have to expand, to cover all the various aspects of clinical practice; and nonclinical faculty would have to agree to mount basic-science courses that are geared to the needs of future clinical practitioners.

But there are lots of alternative arrangements possible. Universities might develop "schools" of psychology, like Berkeley's School of Optometry, which would train both scientists and practitioners with an expanded faculty. Or, training for practice could be removed to academic health-science campuses like UCSF, which already house schools of medicine, dentistry, and nursing; this would bring practice-oriented students into closer contact with patients, but it would also require expansion of the basic behavioral science faculty in these institutions -- not incidentally, creating jobs for the products of the research training programs.

Training for practice might also be removed to free-standing schools of professional psychology. As with the PsyD, there is nothing wrong with such schools in principle. UCSF is for all intents and purposes a free-standing medical school, and it is not inferior to Harvard because it lacks departments of classics and political science. In fact, UCSF is the equal to Harvard (or its superior) precisely because, in addition to training medical students, it hosts a world-class cadre of basic scientists who do basic and applied research relevant to health care. This is a feature that most free-standing professional schools lack -- and which may contribute to their relatively poor performance (Cherry, Messenger, & Jacoby, 2000; Maher, 1999; Yu et al., 1997). If professional schools are going to train practitioners properly, they are going to have to invest a lot more in their basic-science and research infrastructure than most of them seem inclined to do at present.

Now, I'm told that this room holds 113 people, so I bet that there are at least 113 objections to this proposal. I have answers for all of them, but time permits me to respond to only four or five.

First, I seem to be advocating the segregation of science and practice within clinical psychology, when clinical practice has already veered far from its scientific base, and managed care is putting a greater emphasis on evidence-based practices (Kihlstrom & Canter Kihlstrom, 1998). But I'm not: science and practice are not like science and religion, Stephen Jay Gould's "Non-Overlapping Magisteria" that have nothing to say to each other (Gould, 2003). Science needs input from the real world of practice, and practice must be placed on a firm scientific base. But science and practice are different, and they deserve curricula that respect these differences. The fact that MDs don't get the same coursework and research experience as biochemistry PhDs doesn't make medicine any less science-based. Medicine is "scientific" not because physicians are scientists, but because medical practice is based on scientific evidence.

Second, my view of clinical practice may be outmoded. I have heard it said that the future of clinical psychology is not in the direct delivery of clinical services, but rather in the design and evaluation of assessment and treatment programs that will be delivered by other professionals. If so, we might have to invent an entirely different practice-training model, but it still wouldn't be the model of PhD research training. Moreover, we're probably going to need a lot fewer clinical psychologists than we're training at present, and if so someone should inform the thousands of undergraduates who will be applying for positions in clinical training programs next year, and the thousands more each year after that, with the expectation that their careers will be devoted to testing and therapy of individuals and groups.

Third (and maybe fourth, depending on how you count), I seem to be going against history, abandoning the scientist-practitioner model at precisely the time when more and more physicians are training to do research, and basic researchers in fields like cognitive neuroscience are becoming more interested in studying patients. As a matter of fact, that's why I enrolled in an experimental psychopathology program to begin with -- because long ago I realized that psychopathology offered a unique perspective on normal mental life. But you don't have to be trained to practice to take pathology seriously, or to do research with patients. There's nothing that cognitive neuroscience does that physiological psychology didn't do before it, and I have yet to see a piece of medical research that couldn't have been done just as well by a "mere" PhD. My graduate advisor was an MD/PhD, and I have two MDs in my own department. Martin was a wonderful researcher, and so are my Berkeley colleagues; but such exceptions merely test the rule, and I'm generally an advocate of the division of labor. To take people who are trained to treat patients, and then divert them into research, simply diminishes the human resources available for healthcare (Kihlstrom, 2000). Scientists who want to study patients should collaborate with the practitioners who treat them, and practitioners who want to do research should collaborate with the scientists who know how.

Fourth (or fifth, depending on how you counted), I've abandoned the thing that I, as a devout generalist within psychology, ought to prize most: the breadth of exposure to the entire field of psychology that clinical psychologists must get, whether they are training for science or training for practice, by virtue of the APA accreditation standards. Now, I freely admit that the one thing I really like about the standards is that they contain a breadth requirement. And I think that a breadth requirement should be preserved in training for practice, just as there is a broad basic-science requirement in medical school. But as much as I don't like it, the fact is that psychology as a science is going the way of specialization, if not super-specialization. We have students in visual perception who don't know anything about memory, students studying working memory who don't know anything about autobiographical memory, students in psycholinguistics who are so focused on the processing of individual words that they don't know what a sentence is (thanks to my former Arizona colleague Ken Forster for that last example). It's a shame, indeed it is, and I think it works to the detriment of psychology as a science that we produce graduate students who are so narrowly specialized that cognitive students know nothing about social psychology and social students know nothing about development. It won't kill budding clinical researchers to take four breadth courses -- what kills budding clinical researchers is training for practice (and, for that matter, vice-versa!). But in the final analysis, responsibility for breadth of training should fall on departments as a whole, not on clinical psychology alone.

Frankly, I'm sorry to see the scientist-practitioner model go, because it was a lovely idea. But it was also a rhetorical device constructed, in large part, to aid an upstart clinical psychology's professional competition with the psychiatric establishment. Psychiatrists had at least the cosmetic advantage of medical training (although as far as I can tell not many of them ever practiced that much medicine), and the scientist-practitioner model seemed to say, "We're doctors too, but we're not just doctors, we're also scientists". (The practitioner-scholar model adopts the same conceit -- "We're not just practitioners, we're also scholars": Funny, but physicians don't have to defend their professional status by referring to themselves as scholars.) Neither the scientists nor the practitioners need to do that anymore. Clinical scientists have enough to learn without learning practice as well, and clinical practitioners have enough to learn without acquiring research skills as well -- especially in the new era of managed care and evidence-based healthcare. I'm simply proposing that our training programs reflect that fact of life. People who want to treat patients should be trained to treat patients, with the best methods that science provides, and people who want to do research should be trained to do research, so that our clinical knowledge and practices constantly improve.

Note Added on the PCSAS Accreditation Proposal

In 2008, Timothy Baker, Richard McFall, and Varda Shoham published a critique of the current "APA" system for accrediting clinical training in Psychological Science in the Public Interest, a journal of the rival Association for Psychological Science. The article proposed a new accreditation system, known as the Psychological Clinical Science Accreditation System, geared toward the interests of "clinical science" training programs.

The critique generated a furor within the practitioner community, and no little controversy among academics engaged in clinical training, resulting in quite a bit of coverage in the professional and popular press. Because I am a researcher with clinical training and strong clinical interests, and had expressed skepticism about the proposal (though not the critique) over the listserv maintained by the Society for a Science of Clinical Psychology (of which I am a longtime loyal and disciplined member), I was interviewed via e-mail about the proposal for an article in the APA Monitor on Psychology ("Disputing a Slam Against Psychology" by Michael Price, December 2009). Due to the exigencies of editing, my remarks didn't quite get across the way they might have, and I was slightly misquoted:

In fact, of course, PhD programs and medical schools are accredited differently. Medical schools are accredited by the American Medical Association, just as clinical psychology programs are accredited by the American Psychological Association. But PhD programs in biology, like PhD programs that are geared to training research psychologists, aren't accredited at all -- except insofar as their larger institutions, such as the University of California, Berkeley, are accredited by their regional board. In what follows I reprint my interview in full for the record.

Do you think there's a discrepancy between the content of the APS journal article and how it's been portrayed by media reports of it?

No, from what I have read, the media reports have it pretty much right. The article is very critical of the current state of both clinical practice and clinical training, and by extension the "APA" accreditation process (I put "APA" in quotes because I know that, technically, APA doesn't do the accreditation -- but, as a matter of historical fact, APA devised the current accreditation system, put it in place, and maintains it; similarly, I understand that APS isn't proposing to do accreditation either, but we'll use "APS" accreditation as a shorthand for what is being proposed in the article). For all too long, research psychologists, who work in academic settings, have treated clinical practice, and clinical training, with benign neglect. What we got were trends like the recovered-memory movement, which has been an utter disaster for psychotherapy as a profession. That's all over now. Clinical psychology owes its professional status, its autonomy from psychiatry, and its eligibility for third-party payments, to the assumption that what clinical psychologists do -- in terms of assessment and treatment -- is supported by a firm scientific base. Now, an increasing number of research psychologists, including those who are engaged in clinical research, have become actively involved in making sure that this assumption is valid. The article is not the opening salvo in this struggle, but it is an important milestone -- rather than avoiding the problems that beset clinical psychology as a profession, the scientific community now is confronting them head-on. This is good: it will make clinical practice more effective, and the science of psychology more relevant.

Do you think there's a need for a new accreditation system? I spoke to one of the co-authors, Richard McFall, and he's advocating a kind of dual accreditation system for institutions who want to show that they're teaching students competent research techniques. Do you think a dual system is a good idea?

I'm on record (on the SSCP listserv) as opposing the new accreditation system, but on mostly practical grounds. Medicine doesn't have two competing accreditation systems, and the establishment of a second accreditation system can only give the appearance of a field in disarray, which can only give aid and comfort to those who would like to diminish the status of clinical psychology as an independent mental-health profession. Accreditation isn't necessary for those institutions who want to teach competent research techniques. We don't accredit research training programs in biological psychology, or cognitive or clinical psychology, or developmental or social or personality psychology. The only reason for accreditation is to insure that professional practitioners are competent -- meaning that they adhere to practices (in assessment, treatment, and prevention) that are based on science -- either those that have actual empirical evidence for their efficacy, or those which are reasonably grounded in established psychological theory. What should happen is that the APA accreditation system should be strengthened, so as to insure that prospective clinical practitioners are taught scientific psychology, and a respect for evidence-based practices, the same way that medical students are taught the basics of anatomy, physiology, biochemistry, pharmacology, etc., as well as respect for clinical research that identifies which treatments work and which do not.
In my view, APA accreditation has failed to do this -- certainly at the level of accrediting doctoral training programs, and possibly at the level of accrediting internships as well (I have particular views about the clinical internship, which I've also expressed in a paper). If the new accreditation system prompts APA to strengthen its own accreditation standards, then the APS initiative will have done a good thing. But there's a paradox here, which is that the APS accreditation system is directed toward doctoral programs that are oriented toward training clinical researchers, not clinical practitioners. And, as I've indicated before, research training needs no additional accreditation. Therefore, I have to agree with Scott Lilienfeld that the new accreditation system, because it's not directed toward those programs that focus on the training of clinical practitioners, won't hit its intended target.

Some of the people in the practice community I've spoken with feel like the authors painted practitioners with too broad of a brush. Is the science-disconnect between practitioners and evidence really that bad, and if so, how much at fault is APA's accreditation system?

As far as I can tell, from my vantage point as a researcher with an active interest in clinical practice, and who has observed the training of clinical practitioners for almost 40 years, the authors have pretty much got it right. It might be too extreme to say that there is a science-practice war, but there is definitely a science-practice disconnect. All too often, research psychologists treat clinical practitioners with benign neglect, and clinical students treat scientific psychology as something that has to be tolerated as a price for getting a professional credential. That's one reason, I think, why free-standing professional schools of clinical psychology, offering a PsyD degree instead of a PhD, have become so popular. They don't typically have non-clinical scientists on their faculties, and their students get only minimal exposure to scientific psychology. So, as a group, they actually fail in this respect. And APA has been complicit in this, by accrediting so many free-standing programs whose scientific grounding is so weak. The proposed APS system reflects the pent-up frustration of the scientific community with the lax standards of the APA system.

You mention that you think that clinical practice training and clinical science training should be split. Would an accreditation system like the one APS has proposed help solve some of the problems you mention?

Just as background, I'm a product of the scientist-practitioner training model. My PhD is from the University of Pennsylvania, which established the first university-based psychological clinic in the United States, if not the world, and which -- at least when I was there, along with such noted clinical researchers as Susan Mineka, Lynn Abramson, and Lauren Alloy -- was a real model for the kind of clinical science programs that we see today. But I've now come to the conclusion that the scientist-practitioner model was a failure. Medicine doesn't train scientist-practitioners. It trains practicing physicians who understand and respect science. And, these days, physicians' understanding of and respect for science is reinforced by managed care (broadly construed), which simply says to physicians: If there isn't evidence that it works, we're not going to pay for it. As a result, physicians have gone back and put even their tried and true practices -- like using a stethoscope to detect cardiovascular problems -- to empirical test. For a long time, clinical psychologists were invulnerable to these pressures, because they relied on out-of-pocket payments from their patients, and because the scientific community generally left them alone (that's the benign neglect). But now they are increasingly coming under the same kinds of pressure. Parity for mental-health services is a two-edged sword: psychotherapists become eligible for third-party payments, but at the same time they have to abide by a new set of rules. And there's no getting away from this: if physicians and surgeons can't resist the demand for evidence-based practices, what in the world makes psychotherapists think that they'll succeed in doing so?
The solution is not to give everyone PhD-level research training. Medical students don't get that. The solution is to make sure that clinical students are thoroughly grounded in scientific psychology, and are oriented around evidence-based practices. That's the kind of training that medical students get, in anatomy and physiology and biochemistry and pharmacology, and outcomes research, and those who want to be psychotherapists should get the same kind of training in psychology -- the science of mind and behavior. I now believe that it is a mistake to try to do training for clinical practice in the context of the usual sort of PhD program -- whether it's Berkeley's, or Yale's, or Arizona's, or Wisconsin's, or Penn's. These programs are just not big enough. It's like medical training before the Flexner Report. Training of clinical psychologists needs to look a lot more like medical school, and it needs to be done in an environment that looks more like medical school. That's my story, and I'm sticking with it.

The APS journal article takes a pretty hard swipe against the PsyD program, and you mention in your piece that you favor the idea of a PsyD program in theory. Is there a way to reconcile the need for specific training in practice with the scientific community's apprehension that the science gets distorted or goes unused in practice?

I always thought that the PsyD was a good idea, in principle. It's directly analogous to the MD. We don't make physicians take PhDs in biology or biochemistry. Why should we make psychotherapists take PhDs in psychology? We don't make physicians complete a doctoral dissertation. Why should we impose this requirement on would-be psychotherapists? And, frankly, we don't expect physicians to be researchers. My graduate advisor, Martin Orne, was an MD-PhD, I loved him dearly, and he taught me almost everything; and I have colleagues here in my Psychology Department at Berkeley who are MDs, whose scientific accomplishments I respect very much. But there's nothing that any of them ever did, as researchers, that they couldn't have done as PhDs. I now believe that there should be a strict separation between training for research, which should be done in the context of more-or-less traditional PhD programs, and training for practice, which should be done in programs that offer something like a PsyD, analogous to the MD, in training programs that look a lot like medical school (but with a focus on psychology, not biology). But that doesn't mean that PsyD programs don't need reform. All the evidence suggests that, although of course there are exceptions, both at the programmatic and the individual level, the typical PsyD program is very weak scientifically. They don't have research psychologists on their full-time, tenured staff (as medical schools do). And, it appears, they don't do enough to inculcate in their students a respect for scientific evidence, and adherence to evidence-based practices.
When I was editor of Psychological Science, I published a couple of articles that showed, on empirical grounds, that PsyD programs (as a whole) were doing a pretty bad job of training their students to respect scientific psychology. For example, Yu and his colleagues (1997) showed that graduates of PsyD programs did more poorly on the Examination for Professional Practice in Psychology than did graduates of more traditional, scientist-practitioner, PhD programs. And the late Brendan Maher (1999), who was my colleague at Harvard, showed that there were big differences between those professional training programs that were situated in a traditional university context and those that were not. This is not an argument for doing clinical training in the context of a traditional PhD program. And it's not an argument against free-standing professional schools. UC San Francisco is, essentially, a free-standing medical school, but its lack of a traditional Psychology department -- or, for that matter, departments of Classics or Physics or Political Science -- doesn't prevent it from being the world's greatest medical school. UCSF is the world's greatest medical school because it puts science first. Free-standing PsyD programs can achieve the same kinds of goals, but they've got to put science first. The APS accreditation system, as proposed, won't force them to do this, because it's explicitly not directed at them. The APA accreditation system, precisely because it's oriented toward clinical practice, and not clinical research, has the right and responsibility to hold these programs' feet to the fire. That APA doesn't do this is a shame. It's maybe even a sin.

On Requiring an In-House Internship as a Condition for Accreditation

My proposal that all accredited clinical training programs be required, as a condition of accreditation, to offer an in-house internship with enough space to accommodate all of their own students who are in good standing, has engendered considerable discussion on the listserv of the Society for a Science of Clinical Psychology. Here are the comments, accumulated over a period of years, and my responses to them.

Correspondent 1: Thank you for your comments, John. They bring up the question of "what's an internship". They also raise the often forgotten principle of clinical training as a continuum, from practicum to internship and beyond. In an ideal world, there should be such continuity, but that doesn't mean that all programs should offer all students an in-house internship training. We certainly don't want to empower accreditation bodies to impose such a one-size-fits-all game-changer on our clinical science training. That said, it would be great if we could hear of success stories by programs who offer some of their students an in-house, high-quality internship training. I fully agree that having a degree requirement that programs cannot guarantee is untenable. Thanks for getting this discussion going. Response: I think that is exactly the function of accreditation -- to identify minimum standards that all training programs in a particular domain must meet. That's what high-school and college accreditation does. That's what accreditation does for law, medical, dental, nursing, and engineering schools. I don't see why professional training in clinical psychology should be any different. If you want to train clinical practitioners, then you need to be accredited. I think we're now at the point where we need to impose this particular standard. If a program can't meet it, then it simply shouldn't be accredited. Why should we impose this new requirement? The reasons are both pedagogical and practical. On the pedagogical side, we already have several different models for clinical training -- the old, classic, scientist-practitioner model is only one of them. An in-house internship -- or, perhaps, a consortium of internship settings that agree to accept each other's students -- would make sure that the internship is tailored to the philosophy of the student's training program.
There is no reason why a student from a science-oriented PhD program should have to administer Rorschachs in order to get into a more traditional internship. But the chief argument, as before, is practical, and motivated by the problems with The Match. Altogether too many students from accredited programs are failing to match to an accredited internship, for the simple reason that there are not enough accredited internship slots to accommodate all applicants. This is simply unfair, and perhaps unethical -- we (collectively) train students, who spend their time and in many cases their money (including student loans) training to enter a profession which, through no fault of their own, denies them the final step toward entry into their career. If we're going to train clinical practitioners, and the internship is deemed necessary to complete that training, then we can't take their time and money for 4, 5, or 6 years and then say "Geez, that's tough -- Good luck!".

Correspondent 2: I am sure that all on this listserv share the sentiment that the internship system is broken and that the students are the victims. Certainly, in the short term, requiring that programs provide internships for all students makes sense. But as my new mayor has pointed out previously, a crisis is too important to waste (minus the expletives). This is a time for us to truly fix what is broken by reconsidering the premise for a required full-year internship. For those who have attended academy meetings you know that we have this discussion most every year and I am sure we will again this year. Dick McFall is leading the new accreditation system and described heroic efforts at the state level to change the requirements for licensure (in this case to be accredited by a body other than CoA). I am not the one to describe this but simply I want to say that Tim Fowles and Ryan Beveridge of the University of Delaware are leading an academy task force that is considering new models for clinical intervention training. There are some exciting ideas out there and now is the time for us to engage in real system reform. Change is hard but the status quo is unacceptable. Response: I don't see any practical alternative to an internship. In fact, the only alternative I can envision is one in which students accomplish the equivalent of an internship during those predoctoral years when they would ordinarily be engaged in classroom learning, graded practicum experiences with assessment and intervention, and research. That would, effectively, extend the period of doctoral training by at least a year. Like it or not, the internship requirement is based on the medical model of psychopathology -- which, as I've pointed out elsewhere, is not based on an assumption of biological etiology, but rather on medical training and practice.
We've argued for an internship year of intensive supervised clinical experience precisely because that's what is done, and done successfully, in medicine. It will take a lot of rethinking, and a lot of argument, to now turn around and say to state licensing boards "Oh, never mind". Especially when it's patently obvious that any proposal to abandon the internship is based less on pedagogical considerations than on practical ones.

Correspondent 3: We tried an optional in-house internship some time ago and it failed, mostly because it took too much faculty teaching time and because we couldn't make enough money from the clients our interns were seeing to pay the interns. We don't take insurance in our training clinic, because most insurance won't reimburse if the student is the therapist. Also, to meet APA internship accreditation standards, we had to take a "diversity of interns", meaning folks from other programs, who weren't always prepared. Of course we didn't need to try to meet APA standards, but that would have made us less attractive to applicants. I think one answer is closer to your recommendation that programs not be accredited if they can't guarantee internships - that answer is to not accredit programs that take too many students. We have too many students in the pipeline. Response: Somehow medicine managed to solve this problem. But I'd only point out that your in-house internship didn't fail because it was "in-house". It failed because it couldn't support itself financially -- just as many other internship programs have failed to thrive for financial reasons that have more to do with how healthcare -- and particularly psychological services -- are paid for than anything else. The funding problem is critical. But my basic point is completely congruent with yours: programs should not be accredited if they can't guarantee internships. In my view, the most reliable way to offer such a guarantee is to have an in-house internship -- though, as indicated earlier, a consortium of internships might do the trick. But somehow the accreditation system -- and this goes for APA and APCS accreditation alike -- has to guarantee that there is an internship slot available for each and every one of the students who are in good standing in each and every one of their accredited programs.

Correspondent 4: I've long advocated for in-house internships - but as a former DCT and former internship director, I am keenly aware that the reinforcement contingencies in academia are usually not consistent with intensive, high-quality internship training. It can be done with creative planning of the integration of research and clinical functions, as well as didactics - but it takes "selling" within the department, the college and the university - and most of all and the hardest to come by, a supportive and selfless faculty willing to take time from active course planning and research to do a solid job of clinical supervision. The other points raised by Susan are also quite valid, but I think can be worked around with enough time and thought. Unless things change quickly in ways I consider doubtful, foregoing accreditation in order to "force" an in-house internship would hurt students and the academic program. It is rare that a good, clinical scientist or scientist practitioner doctoral program can pull all of those factors together. If it happens, it's beautiful. Response: We can't just let it happen, and be happy when it does. We have to make it happen.

I concur with the earlier point, that forcing students to take an in-house internship may be counterproductive. Response: I am less concerned with forcing students to take an in-house internship than I am with requiring that training programs provide one. If every training program guaranteed an internship slot to each of its students, then there would be at least one internship slot for every applicant in the market, and that would create the possibility that students could move around. But at the very least, every student in good standing would be assured that, by virtue of being enrolled in an accredited program, s/he could complete the minimal requirements to pursue his or her chosen professional career.

Final response here - requiring 100% internship placement from a doctoral program is totally unrealistic. Perhaps none of you has dealt with a student who decided to do the intern app process his or her way rather than yours, or who has simply not taken the time and effort to do the applications carefully - but I've seen many from good schools who did not get placed/matched due to sloppy or simply naive approaches to the process. Slamming a doctoral program for one such unwise student is unacceptable. Perhaps there is a minimum proportion of successful matches that might be required, but 100% ain't it. Response: Not only is it totally realistic, it is also totally necessary, as our recent experience demonstrates. We impose lots of other requirements on clinical training programs. Why not require that they actually complete clinical training? A student who wishes to go outside the house for an internship is free to apply anywhere s/he wants. If the application fails, there will still be a spot available in his or her home program's in-house internship. And with an in-house internship, a student wouldn't have to apply at all -- the slot would be there waiting for them at the appropriate time.

I'd love to see university departments develop cooperative internship arrangements with local clinical facilities adhering to a practice model consistent with the university's training model - a provision of internship opportunities for students in exchange for faculty time donated to supervision and clinical research support in the clinical facility. Still difficult, but probably a bit more generally feasible than internship programs totally within a traditional university department. Response: But this is just one way of organizing an in-house internship. Every college campus has a health center with a mental health unit. There are inpatient and outpatient psychiatric facilities in most general hospitals. There are lots of places, everywhere, where an in-house internship could be put together.

Another interesting possibility is the "professional school" as articulated 40 years ago by George Albee - NOT what we currently call "professional schools", but schools within universities that are similar to schools of other professions, like medicine and dentistry, where clinical training, basic education, and research are all valued and reinforced. There are big problems with this model as well, but it is probably also more feasible than in-house, psych-dept-run internships. Response: In the 2004 talk referenced above, I specifically propose that clinical training be separated from research training, along the lines of medical school, and along the lines of a PsyD program. But that is a topic for

Just some rambling thoughts on a very important topic. I would love to see a bunch of DCTs put together stellar in-house internship programs to prove my misgivings unfounded. Response: Yes, it would be nice if the APA would actually do something proactively, instead of merely wringing its hands over this problem.

Correspondent 5: Why oh why can we not just go the medicine route and move the internship post-doc? Then the students who wanted to practice or sharpen their clinical skills or learn a clinical specialty could go do that. Is that just too simple? Response: This is not the solution to the problem, because there are still fewer internship slots available than there are qualified applicants. There are virtues to making the internship post-doctoral, not the least of which is that MDs would be required to address psychologists as "Doctor". But unless every graduate of an accredited clinical psychology program is guaranteed an internship slot, the current problem will persist, and a significant number of students will have invested their time and money and effort training for a profession that they are prevented from practicing because they cannot complete the minimum requirements for licensure.

Correspondent 6: That may well happen, and it will be interesting if it does - it would certainly take the pressure off of the academic programs by eliminating the situation of relying on others to provide a requirement that the university mandates. Response: Yes, but the pressure at present is almost entirely on the students. It would actually increase the pressure on the academic programs, because they would have to insure that their qualified graduates could complete their education, and be eligible for licensure.

Potential problems? 1. The academic department loses all control over where a student chooses to put the capstone on her/his training. Response: But departments don't have this control now. Students apply to a bunch of places, and they're accepted to some, or none, and the department has no control over either the application or its result. My scheme maximizes the control of the academic department, because it requires that the department have its own internship, right there, available for students at the appropriate time.

2. I suspect that the imbalance between those who want internships and available slots would continue to be a problem. Response: No. Because every clinical training program would be required to host an in-house internship of sufficient size to accommodate all of its own graduates, there would be, by definition, no imbalance. If a CTP could offer a bigger internship, and attract applicants from outside, all the better. But that is a different issue entirely.

3. Any semblance of quality control over internships by academia or professional associations would likely evaporate. I would expect the abuse of "interns" used for free or cheap labor to skyrocket. Response: Absolutely not. In my scheme, the clinical training programs would have complete quality control, precisely because it's their own program. Interns, whether medical or psychological, are already used as cheap labor, and that is the cost to them of completing their professional training. It's the way the professional world works, and they had better get used to it.

Correspondent 7: I'd like to echo Bob's comment below from the perspective of someone who didn't match in the 2006 match. My university (USC) had had a 100% placement rate for about 10 years and then my year, two of us didn't match. It was a shock to us and our program. We both had applied to selective, mostly child programs (or integrated adult/child programs) even though our training was probably about 2/3 adult and 1/3 child because we both wanted to head towards child psych careers (albeit research careers). We both had applied to schools outside of the LA metro area, wanting a change of pace. Previously, people had done more selective adult/other focus (e.g., health, neuropsych) outside of the area, or had done child programs within the metro area, so we were both the first to try a different approach. What it did was open the program's eyes up to the need for more child and adolescent placements/externships and opportunities in our own training center. They did a really nice job with this in the years to come and several of our students have since gotten matched to very strong child programs outside of LA (UC Davis, UWashington). I'd hate for any program to be penalized when a program can clearly use a non-match as feedback with which to improve their program. Response: Your program would have continued to have a 100% placement rate if it had its own in-house internship. There may be a problem with an "adult-oriented" training program that hosts an "adult-oriented" in-house internship that isn't great for a student whose interests are in children, and maybe that is an issue that can be addressed by something like a post-internship residency in a specialty. That is another question, about the scope of training programs. All medical students, and I believe all medical interns, go through a rotation system in which they are systematically exposed to the practice of medicine, from anesthesia to urology.

Correspondent 8: CUDCP took a careful look at the match imbalance at our annual meeting a couple years ago with the intention of coming up with solutions. One of the presentations we heard put to rest the myth that medical training has some tight controls that match residencies with student admissions. That apparently is not the case at all. In fact, their match imbalance is worse than ours. See the following figure: Response: Somehow I lost the figure, which I'd be very interested in examining. But are we talking about residencies or internships? It's possible that I have overstated the situation with respect to medicine, and it is also possible that medicine has created some imbalance by holding out slots for foreign medical graduates. But the fact is that the AMA regulates the number and size of medical schools very carefully, with an eye to the market. As far as I can tell, every graduate of an onshore medical school is guaranteed an internship somewhere. If they aren't, they should be, and for the same reason: medical students have invested a great deal of time, money, and effort in acquiring a professional education; they have a right to be able to complete the minimal requirements for licensure. Otherwise,

Correspondent 9: I think this is an important discussion and there are two additional issues I'd like to raise. 1. The actual imbalance is much, much worse than APPIC statistics show simply because the vast majority of students in most of the very large California PsyD programs never enter into the APPIC match. As just one example, and I apologize for not having the time to do this systematically, I just looked up the Internship statistics at Alliant in LA's PsyD program. According to information on their website, in 2010 they had 136 students apply for internship but ONLY 8 applied to APPIC internships (and two of them received one). That is, only 2 out of 136 obtained an APPIC internship but, I believe, APPIC statistics would show a 25% match rate, 2 of 8, implying a much better if still dismal state of affairs than the <2% which is the percentage who go on to APPIC internships. In case you're wondering, the vast majority of the rest went to CAPIC internships (which is a whole other story). That is, the troubling statistics reported by APPIC are not the whole story which gets worse the more you look into it. I don't mean to single out any single campus, I encourage anyone with the time to look these data up on each program's web page. Response: I suspect that the free-standing PsyD programs are causing a lot of this problem, including the "invisible" portion of it that you mention -- though not all of it, when even students from long-established, highly prestigious programs fail to match. But notice what CAPIC has done. This is, essentially, an alliance of free-standing clinical psychology programs (Alliant, Argosy, John F. Kennedy, etc.) who have banded together, for the good of their students (and their own bottom line) to enable their students to get some semblance of an internship. At least, to some extent, they're trying to service their students, instead of simply taking their money and leaving their students to twist slowly, slowly in the wind.

2. In my opinion, the problem with eliminating the predoctoral internship is that it will have the de facto effect of reducing the responsibility for schools to place their students in internships. Those leaders of professional schools that I have spoken to would love to get rid of the internship requirement because it is a huge pain for them and makes them look bad and is bad business. Pushing the internship requirement out to be postdoctoral just takes pressure off schools that do a poor job of placing their students. Thus, there is, in my opinion, an "unholy alliance" between some of our most prominent research universities and some of the largest professional schools towards eliminating predoctoral internships. The motives of the two stakeholders, however, are very different. Response: I'm actually in favor of a post-doctoral internship, because (1) the student's academic work, including the dissertation, will be complete; and (2) MDs will have to address psychologists as "Doctor". But I'm not concerned with whether the internship is pre- or post-doctoral. The internship is a necessary milestone on the way to licensure and professional practice, and for good reasons. Therefore, in my view clinical training programs should make sure that one is available for each of their students. And the only way to insure this is to make it a requirement of accreditation. It is not good enough just to throw students on the mercies of the market. There is time enough for that after they have completed their training, and go in search of employment.

Correspondent #10: Has anyone brought up the huge number of clinical students from programs that have extremely large classes relative to the numbers of faculty who can provide high-level education and training (kindly note my use of the word "education" in addition to "training")? The argument was made in the mid-1960s that there was a serious shortage of doctoral-level clinical/counseling/school psychologists graduating from (true) Boulder model programs. Was this true back then? Is it true now? Response: I'm sure that it was true then, and despite the proliferation of free-standing clinical psychology programs it may still be true. The wars in Afghanistan and Iraq, for example, are creating mental-health problems that will require large amounts of professional resources for years to come. And it was to address this need, as well as to create "power in numbers" both within and outside the APA, that "the Dirty Dozen" and others pushed for the expansion of clinical training programs beyond "the Establishment". Unfortunately, nobody seems to have thought about the output end -- that is, whether all those new clinical students would actually be able to complete the internship required to enter on a career in professional practice. That, combined with various economic factors (shrinking budgets), and, I suspect, hostility on the part of many psychiatrists who were ultimately responsible for many hospital-based internships, led to the current crisis. It was probably even more complicated than this, and the historians of clinical psychology will have to straighten it all out. But the vast and rapid proliferation of clinical psychology training programs, including free-standing programs, couldn't have helped. We can't do anything about the economy, or psychiatry, but we can do something about internships, which is to think creatively about how to solve the problem ourselves.
But there is no incentive to engage in this creative thinking unless programs are required to insure an internship slot for every one of their students. And the only way to do this is to mount an in-house internship.

Correspondent #11: [Correspondent #10] has a very good point. APA has, as an institution, held the position for several decades that the path to power for psychology as a field is through a very large N. This position can be found articulated by the "Dirty Dozen" in many of their writings, including their self-indulgent group auto-biography. It has become ingrained in the attitudes of, and is a presumption in many of the pronouncements from, APA central office even when it is not explicitly articulated. This attitude is at the core of almost all the poor decisions that APA has made and continues to, from an entrenched "we can't be wrong" position, defend - despite overwhelming evidence to the contrary. There are too many APA approved Clinical programs, that is the fundamental problem. Too many want to work on the internship side of the equation and pretend that the other end is just fine. Too many programs, too many students, too few genuine faculty at all too many of the programs, no genuine experimental exposure at far too many of the "new model" programs, etc., etc., etc. Response: I'm sure that the proliferation of clinical training programs is a major cause of this problem. But I suspect that economic and guild factors are also major contributors, and they will make solving the internship problem even more difficult. Requiring each clinical training program to mount an in-house internship of sufficient size to accommodate all of its own students would, as I have indicated earlier, solve the problem -- if not overnight, then by the end of the usual cycle for renewal of accreditation. Programs that could not provide an opportunity for their own students to actually complete their professional training would lose their accreditation -- and presumably their students. But even if they were able to continue to attract new students, at least APA would have done its part to address the problem.
And when I say "APA", I mean whatever "independent" organization actually accredits clinical training programs. And, just to be clear, I would impose exactly the same requirement on the new PCSAS organization (though longtime listmembers will know that I am opposed to the establishment of PCSAS on other grounds).

APA will NEVER address this problem because it would mean a) admitting an error, b) cutting out some of its core constituents and ever-recycled insiders, and c) going against the core misbelief that power comes from sheer numbers alone. It's an example, though a loose one, of the need for a paradigm shift - the "old timers" will never get it or admit the evidence counters their cherished beliefs. Response: But my proposal is so easy in this respect. All APA has to do is to create a new requirement, and give currently accredited programs time -- until their next renewal -- to solve it.

At the very least there should be honesty in what is said to prospective students. The problem with that is that our younger would-be colleagues often mistakenly believe that they can't be in the 45% not placed and that it will happen to the other guy. Response: Yes, all accredited programs should be required, as of tomorrow, to post their success rates in the APPIC match. They can post whatever else they wish, in terms of placement into unaccredited internships, but they must be required to post information with respect to APPIC. Or, might I suggest that APPIC do this itself.

Correspondent #12: [This] solution has real merit IMO. The simple requirement that you cannot be accredited unless you can guarantee the availability of program requirements. Also, for people who would like to cap the number of students entering the big enrollment places, this would crush that number, or it would force them to spend some of those big tuition dollars on internship slots. I will just go hold my breath waiting for the APA to do the right thing. I seem to recall years ago a prominent member of this list saying that the APA always does the right thing... after it has tried everything else (though I think this view may be too generous). I would personally be totally OK with either [this] solution or with eliminating the predoc internship. Response: In my view, the internship is a critical element in clinical training -- one intensive year in which the student can devote him- or herself to actual clinical work. I'm agnostic about whether it should be predoctoral or postdoctoral. A postdoctoral internship has the advantage that MDs would have to call psychologists "doctor". In addition, although students embarking on a "predoctoral" internship are supposed to have completed all the other requirements for their degree, we know that large numbers of them will actually write their dissertations while on their internship (if I am the only person who did this, please don't tell anyone). The predoctoral internship would have the advantage of making it even clearer that it is the student's home institution that is responsible for insuring that every student gets an internship, because completing an internship would be a requirement of a degree, and institutions are legally and ethically obligated to insure that their students can complete degree requirements in a reasonable period of time. But shifting from a predoctoral to a postdoctoral internship shouldn't just be a tactic for absolving the student's department from its responsibilities.
Predoctoral or postdoctoral, every accredited program should be required to insure that its students can complete an accredited internship, and requiring an "in-house" internship is the most efficient way of doing this.