In the introductory lecture, I noted that the existence of the self creates a qualitative difference between social and nonsocial cognition.

The self lies at the center of mental life. As William James (1890/1981, p. 221) noted in the Principles of Psychology,

Every thought tends to be part of a personal consciousness.... It seems as if the elementary psychic fact were not thought or this thought or that thought but my thought, every thought being owned.... On these terms the personal self rather than the thought might be treated as the immediate datum in psychology. The universal conscious fact is not "feelings and thoughts exist" but "I think" and "I feel"....

In other words, conscious experience requires that a particular kind of connection be made between the mental representation of some current or past event, and a mental representation of the self as the agent or patient, stimulus or experiencer, of that event (Kihlstrom, 1995). It follows from this position that in order to understand the vicissitudes of consciousness, and of mental life in general, we must also understand how we represent ourselves in our own minds, how that mental representation of self gets linked up with mental representations of ongoing experience, how that link is preserved in memory, and how it is lost, broken, set aside, and restored.

James went on to distinguish between three aspects of selfhood:

My Avatar, My Selfie, My Shelfie, My Self?

James was onto something with his concept of the material self: our possessions, like our avatars, are expressions of our selves. I'm the kind of person who buys a Subaru, and I'm not the kind of person -- whatever that is -- who would buy a Lamborghini, even if I could afford it. At least some of our possessions are, quite literally, expressions of ourselves -- what we might call the material culture of the self. There are studies to be done here (hint, hint). One possibility, if you could get around the obvious confidentiality problem, would be a study of people's passwords, and why they chose them. Yes, your passwords are supposed to be random strings of numbers, letters, and symbols; but they aren't. Yes, you're supposed to use different passwords for different sites; but you don't. Yes, you're supposed to change them all the time; but you never do. And it's not just a matter of laziness, or the demands on memory. It's that our passwords are part of us: they represent something about ourselves. For more on "keepsake" passwords, see "The Secret Life of Passwords" by Ian Urbina (who coined the term), New York Times Magazine, 11/23/2014. Some excerpts:

The Covid-19 pandemic offered another opportunity to study the material culture of the self. During the lockdown, with many people working from home and social distancing strongly encouraged, many people communicated with each other via Skype, Zoom, and similar utilities. This circumstance allowed people to look inside friends' and coworkers' homes for the first time; and, accordingly, many people deliberately arranged the backgrounds of their videos so as to make a statement about themselves -- by displaying favorite books, mementoes, and the like. Not only that, Room Rater, an account on Twitter, emerged, posting screenshots of various backgrounds, such as those appearing on news programs, accompanied by brief comments and a 1-10 rating. The trend was noted by popular magazines such as House Beautiful ("Room Rater is the Best Thing to Come Out of Lockdown" by Hadley Keller, June 2020).

Developmental psychologists (and parents) note that object attachment begins very early in life (think of your childhood teddy bear); and while attachment to specific objects (like that teddy bear) may drop off in later childhood, our attachment to certain objects remains very strong throughout life. Our possessions help define who we are. Russell Belk, a specialist in consumer behavior, calls this the extended self (e.g., Belk & Tian, 2005). To some extent, we are what we own -- so much so that we can be traumatized when we lose our possessions, or when they are taken from us. Certainly we are reluctant to give them up. In a classic study, Kahneman and his colleagues (1990) gave college students coffee mugs embossed with their college logo, and then allowed them to trade the mugs in a kind of experimental marketplace. Interestingly, they found that the students were very reluctant to sell their mugs -- even though they hadn't owned one before the experiment, and hadn't paid anything for them in the first place. Selling prices were very high, and buying offers were very low. This is known as the endowment effect -- a reflection of loss aversion. The subjects simply didn't want to lose something that they now owned.

self is one of the most prominent

topics in personality

and social psychology, as indicated by the

listings in the indexes of

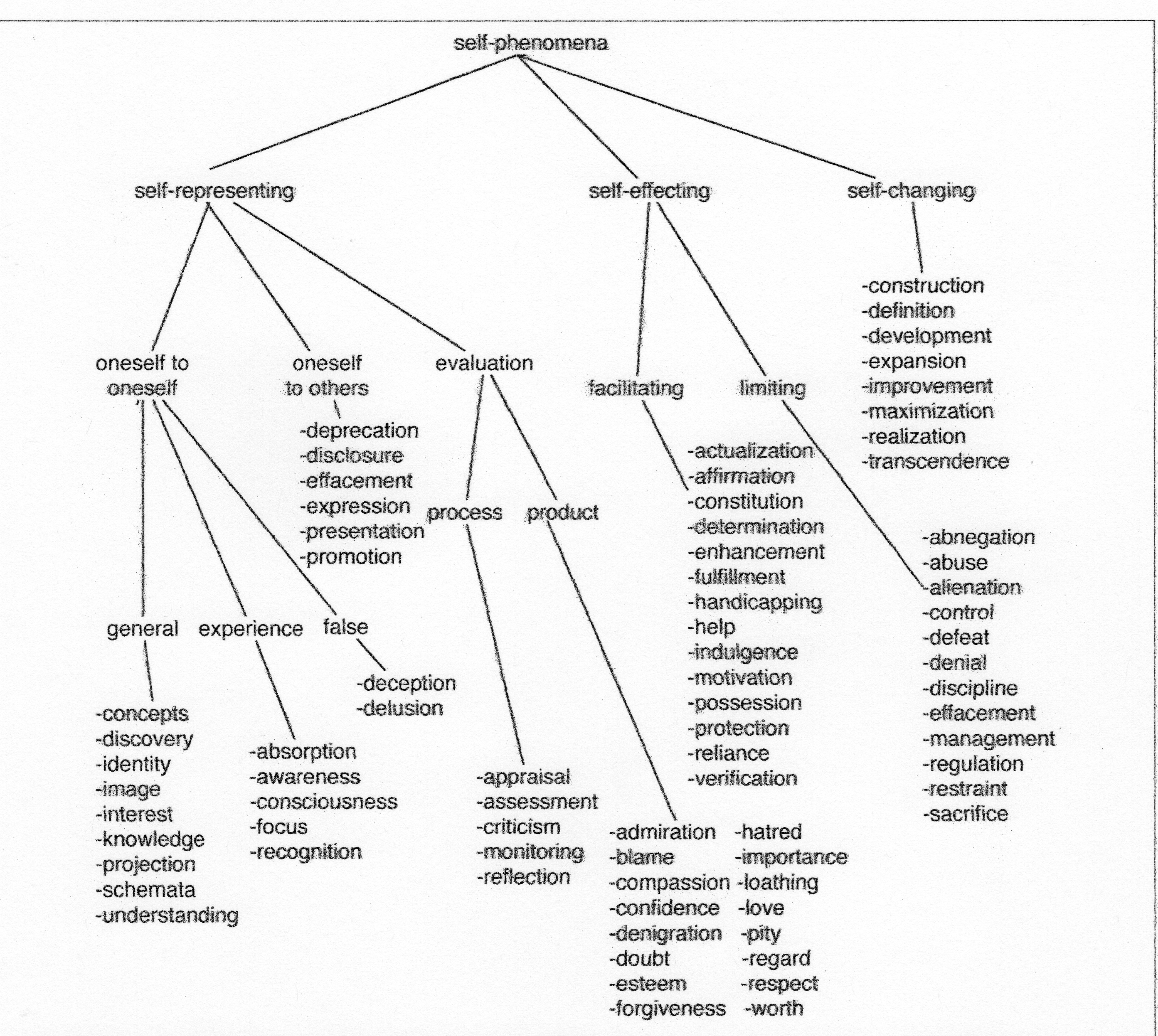

recent textbooks. Along much the same lines, Thagard

and Wood (2015) identified some 80 self-related

phenomena.

This puzzling problem arises when we ask, "Who is the I who knows the bodily me, who has an image of myself and sense of identity over time, who knows that I have propriate strivings? I know all these things and, what is more, I know that I know them. But who is it who has this perspectival grasp...?" It is much easier to feel the self than to define the self.

One way to define the self is simply as one's mental representation of oneself (Kihlstrom & Cantor, 1984) -- a knowledge structure that represents those attributes of oneself of which one is aware. As such, the self contains information about a variety of attributes:

Within personality and social psychology, the self-concept is commonly taken as synonymous with self-esteem, but within the social-intelligence framework on personality (Cantor & Kihlstrom, 1987) the self-concept can be construed simply as one's concept of oneself, a concept no different, in principle, from one's concept of bird or fish. From this perspective, the analysis of the self-concept can be based on what cognitive psychology has to say about the structure of concepts in general (Smith & Medin, 1981).

As a first pass, we may define the self as a list of attributes that are characteristic of ourselves, and which serve to differentiate ourselves from other people.

One possible way to assess the self-concept is simply to give people an adjective checklist and have them indicate the degree to which each item is self-descriptive. But such a procedure does not distinguish between those attributes which are shared with other people, and those that are truly distinctive about oneself. Nor does it distinguish those attributes that are trivial from those that are truly critical to one's self-concept.

For that reason, Hazel Markus (1977) introduced the notion of the self-schema. In her research, she presented her subjects with the usual sort of adjective checklist, but asked them to make two different ratings for each item:

Markus' notion of "self-schematicity" (pardon the neologism) was an important advance in the assessment of the self-concept, but it was not entirely satisfactory. As Robert Dworkin and his colleagues noted, her method essentially confounds self-descriptiveness and self-importance. Subjects who have a moderate standing on a particular trait (e.g., midway between dependence and independence) must be classified as aschematic for this trait, even if their moderate standing is extremely important to their self-concept. This doesn't seem right. The implication of Dworkin's argument is that the only rating that really matters, so far as the self-concept is concerned, is self-importance.
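Markus' two-rating rule, and the confound Dworkin identified, can be made concrete in a few lines of Python. This is a minimal sketch, not Markus' actual scoring procedure: it assumes 11-point scales for both ratings, and the cutoffs (`EXTREME_LOW`, `EXTREME_HIGH`, `IMPORTANT`) and function names are hypothetical, chosen only for illustration.

```python
# Hypothetical cutoffs on an assumed 11-point scale (1 = not at all, 11 = extremely).
# They illustrate the logic of the classification; they are not Markus' published criteria.
EXTREME_LOW = 3    # at or below this, the subject sits near the opposite pole of the trait
EXTREME_HIGH = 9   # at or above this, the trait clearly describes the subject
IMPORTANT = 9      # at or above this, the trait matters to the subject's self-concept


def markus_style(descriptiveness: int, importance: int) -> str:
    """Schematic only if the trait is rated at an extreme AND rated as important."""
    extreme = descriptiveness <= EXTREME_LOW or descriptiveness >= EXTREME_HIGH
    if extreme and importance >= IMPORTANT:
        return "schematic"
    return "aschematic"


def importance_only(descriptiveness: int, importance: int) -> str:
    """Dworkin-style alternative: only self-importance decides (descriptiveness is ignored)."""
    return "schematic" if importance >= IMPORTANT else "aschematic"


# A subject midway between dependence and independence who cares a great deal
# about being midway: rated 6 on descriptiveness, 11 on importance.
print(markus_style(6, 11))     # aschematic
print(importance_only(6, 11))  # schematic
```

Under the two-rating rule this subject is classified as aschematic even though the trait is highly important, which is exactly the case Dworkin found troubling.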

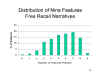

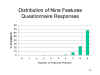

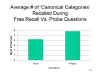

Of course, even the fact that subjects give high self-importance ratings to some attribute doesn't mean that it's really part of their self-concept. Strictly speaking, the self-concept should focus on how people naturally, spontaneously, think about themselves. Following this logic, William McGuire and his colleagues put forward the notion of the spontaneous self-concept -- in which subjects define themselves in their own terms. In his procedure, McGuire essentially presents subjects with a blank piece of paper with the instruction "Tell me about yourself". This results in a free listing of attributes that is entirely idiographic in nature -- that is, subjects are not forced to use terms chosen by the experimenter, or terms that might also be used by other subjects. They are allowed to define their self-concepts freely.

In one study of sixth graders, McGuire and his colleagues performed a content analysis of the spontaneous self-concept. Interestingly, self-esteem played a relatively minor role, accounting for only about 7% of the attributes listed. Habitual activities, and other people, played a much larger role in the way these children thought about themselves.

In his research, McGuire has been particularly interested in testing what he has called the distinctiveness postulate:

In testing the distinctiveness postulate, McGuire and his colleagues classified their subjects (again, sixth graders) as typical or atypical on various objectively measured attributes, such as age or birthplace. Across a large variety of such attributes, they found that children were more likely to mention a feature if they were atypical on that feature with respect to their classmates. For example, left-handed children are more likely to mention handedness in their spontaneous self-concepts than are right-handed children.

An interesting feature of distinctiveness is that "atypicality" is not defined in the abstract, with respect to population statistics, but rather concretely, with respect to the immediate social context. This was shown clearly by McGuire's analysis of the appearance of sex or gender in subjects' spontaneous self-descriptions. In the population there are roughly equal numbers of males and females, so gender can't really be atypical. But it turned out that gender was mentioned more frequently by sixth graders who were atypical for gender with respect to the distribution of the sexes in their classrooms or households.
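Because atypicality is defined against the immediate social context rather than the population, it can be computed from the composition of a subject's classroom or household. The sketch below assumes a simple majority/minority cutoff (`threshold=0.5`) and hypothetical function names; it encodes the prediction of the distinctiveness postulate, not McGuire's actual coding scheme.

```python
def local_proportion(own_value, group):
    """Fraction of the immediate group (which includes the subject) sharing the subject's value."""
    return sum(1 for member in group if member == own_value) / len(group)


def predicted_salient(own_value, group, threshold=0.5):
    """Distinctiveness postulate, as sketched here: a feature is predicted to be
    mentioned more often when the subject is in the local minority on it."""
    return local_proportion(own_value, group) < threshold


# Hypothetical classroom of 20 sixth graders: 6 girls, 14 boys.
classroom = ["F"] * 6 + ["M"] * 14
print(local_proportion("F", classroom))   # 0.3
print(predicted_salient("F", classroom))  # True  -> girls predicted more likely to mention gender
print(predicted_salient("M", classroom))  # False -> boys predicted less likely to mention it
```

The same girl placed in a mostly-female classroom would be predicted not to mention gender, even though the population-level proportion never changes.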

Thus, we can redefine distinctiveness for purposes of exploring the distinctiveness postulate further:

More on Distinctiveness

There's lots more research to be done on McGuire's distinctiveness postulate. For example:

But here's one idea: One could employ an adjective checklist such as Markus used, and use the self-descriptiveness ratings as a basis for assessing typicality, and the self-importance ratings as a proxy for the spontaneous self-descriptions. The prediction is that subjects are likely to be self-schematic for psychosocial attributes in which they describe themselves as atypical. Anybody who wants to do such a study: YOU READ IT HERE FIRST!
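The analysis for such a study can be sketched in a few lines. The ratings below are made up (hypothetical, not data): each subject's atypicality is taken to be the absolute distance of his or her self-descriptiveness rating from the sample mean, and the prediction is a positive correlation between atypicality and self-importance. Pearson's r is used only to keep the sketch short; with real data a rank-order correlation might be preferable.

```python
from statistics import mean


def atypicality(ratings):
    """Absolute distance of each subject's self-descriptiveness rating from the sample mean."""
    m = mean(ratings)
    return [abs(r - m) for r in ratings]


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


# Hypothetical ratings for one trait (11-point scales), six subjects.
descriptiveness = [6, 6, 7, 5, 11, 1]
importance = [4, 5, 4, 5, 10, 9]

atyp = atypicality(descriptiveness)
print(atyp)  # [0, 0, 1, 1, 5, 5]
print(round(pearson_r(atyp, importance), 2))
```

In these toy numbers the two subjects at the extremes of the scale are also the ones who rate the trait as most important, so the correlation comes out strongly positive, which is the pattern the proposed study would look for.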

From the time of Aristotle until only just recently, concepts were characterized as proper sets: summary descriptions of entire classes of objects in terms of defining features which were singly necessary and jointly sufficient to identify an object as an instance of a category. Thus, the category birds includes warm-blooded vertebrates with feathers and wings, while the category fish includes cold-blooded vertebrates with scales and fins.

In principle, at least, a classical proper-set structure could be applied to the self-concept. Thus, the self-concept could be a summary description of oneself, whose defining features consist of those attributes that are singly necessary and jointly sufficient to distinguish oneself from all others. This is possible in principle, but in practice it seems not terribly useful, as defining features would probably be restricted to the individual's birth date, place, and time, and the names of his or her parents.

But both philosophical considerations and the results of experiments in cognitive psychology have persuaded us not to think about concepts in terms of proper sets and defining features, but rather in terms of family resemblance, in which category members tend to share certain attributes, but there are no defining features as such. According to this view of categories as fuzzy sets, category instances are summarized in terms of a category prototype which possesses many, but not necessarily all, of the features which are characteristic of category membership.
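The contrast between the classical and the fuzzy-set views can be sketched with feature sets. In the Python sketch below, the feature lists and the `typicality` measure (simple proportion of shared prototype features) are hypothetical simplifications: a proper set requires every defining feature, while a fuzzy set grades membership by overlap with a prototype, so that some instances count as better members than others.

```python
# Classical view: defining features, singly necessary and jointly sufficient.
BIRD_DEFINING = {"warm-blooded", "vertebrate", "feathers", "wings"}

# Fuzzy-set view: a prototype of characteristic (not defining) features.
BIRD_PROTOTYPE = {"feathers", "wings", "flies", "sings", "lays eggs", "small"}


def proper_set_member(features, defining):
    """All-or-none membership: the instance must have every defining feature."""
    return defining <= features


def typicality(features, prototype):
    """Graded membership: proportion of prototype features the instance shares."""
    return len(features & prototype) / len(prototype)


robin = {"warm-blooded", "vertebrate", "feathers", "wings", "flies", "sings", "lays eggs", "small"}
penguin = {"warm-blooded", "vertebrate", "feathers", "wings", "swims", "lays eggs"}
bat = {"warm-blooded", "vertebrate", "wings", "flies", "small"}

for name, animal in [("robin", robin), ("penguin", penguin), ("bat", bat)]:
    print(name, proper_set_member(animal, BIRD_DEFINING), round(typicality(animal, BIRD_PROTOTYPE), 2))
# robin True 1.0
# penguin True 0.5
# bat False 0.5
```

Notice that the penguin and the bat come out equally typical, even though only one of them is a bird under the classical definition.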

In the late 1970s and early 1980s the idea that the self, too, was represented as a category prototype was popular, and some very interesting experiments were done based on this assumption (Rogers, 1981). But category prototypes are abstracted over many category instances. How does one talk about family resemblance, or abstract a prototype, when there is only one member of the category -- oneself? The notion of self-as-prototype, taken literally, seems to imply that the self is not unitary or monolithic. We do not have just one self: rather, each of us must have several different selves, the characteristic features of which are represented in the self-as-prototype.

In fact, the idea that we have a multiplicity of selves can be traced to the very beginnings of social psychology.

Taken to the extreme, the self-concept as a set of exemplars is exemplified (sorry) by dissociative identity disorder, formerly known as multiple personality disorder. In this exceedingly rare psychiatric syndrome, the patient possesses two or more different identities, each associated with a different set of autobiographical memories. The different identities, in turn, are separated by an interpersonality amnesia, which is typically asymmetrical. For example, in the famous case of the Three Faces of Eve, there were three different "personalities", or identities, within a single Georgia housewife:

But one does not have to have a dissociative disorder to have a multiplicity of selves. Traditional personologists assume that behavior is broadly stable over time and consistent over space, and that this stability and consistency reflect traits which lie at the core of personality. From a cognitive point of view, the self might be construed as the mental representation of this core. But social psychologists have argued that behavior is extremely flexible, varying widely across time and place.

Accordingly, a person might have a multiplicity of context-specific selves, representing what we are like in various situations, and reflecting our awareness of the contextual variability of our own behavior. For example:

If so, then the self-concept should represent this context-specific variability, so that each of us possesses a repertoire of context-specific self-concepts -- a sense of what we are like in different classes of situations (Kihlstrom et al., 1995). The self-as-prototype might be abstracted from these contextual selves. Thus, we might begin to think about a hierarchy of selves, with more or less context-specific selves at lower levels, and a very abstract prototypical self at the highest level.
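One way to picture this hierarchy is to treat each context-specific self as a profile of trait ratings, and the prototypical self as their average. The sketch below is a hypothetical illustration: the contexts, traits, 1-7 ratings, and the choice of the mean as the abstraction rule are all assumptions. It also reports the spread of each trait across contexts, which the prototype alone discards.

```python
from statistics import mean, pstdev

# Hypothetical context-specific selves: trait ratings (1-7) in three classes of situations.
CONTEXT_SELVES = {
    "with family": {"outgoing": 6, "conscientious": 4},
    "at work": {"outgoing": 3, "conscientious": 7},
    "with friends": {"outgoing": 7, "conscientious": 3},
}


def abstract_prototype(selves):
    """Top of the hierarchy: the mean rating of each trait across the contextual selves."""
    traits = next(iter(selves.values())).keys()
    return {t: mean(s[t] for s in selves.values()) for t in traits}


def contextual_spread(selves):
    """How much each trait varies across contexts (population standard deviation)."""
    traits = next(iter(selves.values())).keys()
    return {t: pstdev([s[t] for s in selves.values()]) for t in traits}


prototype = abstract_prototype(CONTEXT_SELVES)
print({t: round(v, 2) for t, v in prototype.items()})
# {'outgoing': 5.33, 'conscientious': 4.67}
print({t: round(v, 2) for t, v in contextual_spread(CONTEXT_SELVES).items()})
# {'outgoing': 1.7, 'conscientious': 1.7}
```

No single contextual self has the prototype's profile, which is the sense in which the prototype is an abstraction rather than a description of how the person actually behaves in any one situation.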

This is all well and good, but maybe there is not a prototype after all. Another trend in cognitive psychology has been to abandon entirely the notion that concepts are summary descriptions of category members (Medin, 1989). Rather, according to the exemplar view of categories, concepts are nothing more than a collection of instances, related to each other by family resemblance perhaps, and with some instances being in some sense more typical than others, but lacking a unifying prototype at the highest level. Some very clever experiments have lent support to the exemplar view, but as yet it has not found its way into research on the self-concept. Nevertheless, the general idea of the exemplar-based self-concept is the same as that of the context-specific self, only lacking hierarchical organization or any summary prototype.
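Under the exemplar view there is no stored summary to consult; a judgment about what one is like in a new situation would instead be computed on the fly from the stored contextual selves, each weighted by how similar its situation is to the new one. The sketch below is a hypothetical illustration: the situation features, the ratings, and the exponential-decay similarity function (a common choice in formal exemplar models of categorization) are assumptions, not part of any published model of the self.

```python
import math

# Hypothetical exemplars: (situation features on 0-1 scales, self-rating of "outgoing" on 1-7).
EXEMPLARS = [
    ({"formality": 1.0, "familiarity": 0.0}, 3),  # at work
    ({"formality": 0.0, "familiarity": 1.0}, 7),  # with friends
    ({"formality": 0.5, "familiarity": 1.0}, 6),  # with family
]


def similarity(a, b, c=1.0):
    """Similarity decays exponentially with the city-block distance between two situations."""
    distance = sum(abs(a[k] - b[k]) for k in a)
    return math.exp(-c * distance)


def judge_self(situation, exemplars, c=1.0):
    """Similarity-weighted average of stored self-ratings; no prototype is ever computed."""
    weights = [similarity(situation, features, c) for features, _ in exemplars]
    return sum(w * rating for w, (_, rating) in zip(weights, exemplars)) / sum(weights)


# A new situation: a formal dinner with strangers.
print(round(judge_self({"formality": 0.9, "familiarity": 0.1}, EXEMPLARS), 2))
```

Raising the hypothetical sensitivity parameter `c` makes the judgment depend more heavily on the single most similar exemplar; here the "at work" self dominates, pulling the estimate well below the plain average of the three stored ratings.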

Regardless of whether this "family" of context-specific selves is united by a summary prototype, or exists simply as a set of exemplars, it is possible for these multiple selves to come into conflict -- as when, speaking metaphorically, the angel on your right shoulder tells you to do one thing, and the devil on your left shoulder tells you to do something else. T.C. Schelling, an economist, has suggested that these alternate selves can work against each other in a process he calls self-binding: one of your selves wants to eat that Little Debbie, the other wants to stay thin, and they duke it out in your head.

The three views of categorization presented so far -- proper sets, fuzzy sets, and exemplars -- all assume that the heart of categorization is the judgment of similarity. That is, instances are grouped together into categories because they are in some sense similar to each other. But similarity is not the only basis for categorization. It has been proposed that categorization is also based on one's theory of the domain in question; or, at least, that people's theories place constraints on the dimensions which enter into their similarity judgments (Medin, 1989).

Epstein's views have not been translated into programmatic experimental research on the self, but we can perhaps see examples of theory-based construals of the self in the variety of "recovery" movements in American society today (Kaminer, 1992). Whether we are healing our wounded inner child, freeing our inner hairy man, dealing with codependency issues, or coping with our status as an adult child of alcoholics or a survivor of child abuse, what links us to others, and literally constitutes our definition of ourselves, is not so much a set of attributes as a theory of how we got the way we are. And what makes us similar to other people of our kind is not so much that they resemble us but that they went through the same kind of formative process, and hold the same theory about themselves as we do of ourselves. Dysfunctional or not, it may well be that we all have theories -- we might call them origin myths -- about how we became what we are, and these theories are important parts of our self-concept. Such self-theories could give unity to our context-specific multiplicity of selves, explaining why we are one kind of person in one situation, and another kind of person in another (Kihlstrom et al., 1995).

The Self and Identity Politics

For many people, a major part of their self-concept concerns their identity with some sociodemographic group -- usually, though not always, a minority group or other outgroup. Partly, this reflects McGuire's distinctiveness postulate -- that people include in their self-concepts those features that tend to distinguish them from others, which infrequent features do almost by definition. But since the 1970s, as a result of the civil rights and feminist movements in the United States and elsewhere, group identity has also taken on a political dimension, as social and political activists have drawn on Marxist notions of class analysis and consciousness-raising. See, for example, the work of Arthur Schlesinger, Jr., a historian who argued in The Disuniting of America that identity politics threatened to destroy the common culture that was, in his view, necessary for liberal democracy to thrive. Identity politics can also infect majorities and ingroups, as we can see in the predominantly white, Euro-centric "Tea Party" movement that came on the scene in the wake of the 2008 financial crisis. As a result of identity politics, terms like "black", "feminist", and "LGBT" (for Lesbian-Gay-Bisexual-Transgender) stand for political identities as well as for features of one's own personal self-identity. Accordingly, one's identification with these political movements can become incorporated into one's self-concept. And a person's identification with some group becomes an important element in how that person will be perceived by others. A dramatic example of this came in 2010, when President Barack Obama filled out his form for the U.S. Census. Viewed objectively, Obama is of mixed race, and (beginning with the 2000 census) he could have identified himself as such on his census form. But he didn't -- he checked only the box for "Black, African-American, or Negro".
Obama was criticized for this in some quarters, on the view that such an act betrayed his self-presentation as someone who is "post-racial". It's objectively true, of course, that Obama is the product of a mixed marriage, with a white mother and a black father. But that's not the way he subjectively identifies himself. Despite being raised by his white maternal grandparents, Obama has always cultivated an identity as a black person -- as indicated clearly in his memoir, Dreams From My Father. And, as he quipped to David Letterman, he "actually was black before the election". What makes Obama "post-racial", if anything, is that he is able to express pride in his black heritage without making whites uncomfortable. Transgender people who are objectively male can identify themselves as female ("I'm a woman trapped in a man's body"). Mixed-race people can identify themselves as black -- or white. Even more than gender, race is a social construction.

It's important to note, though, that neither of these cases is a simple one of someone claiming minority-group status in order to take advantage of affirmative action. Neither of these individuals simply "talked the talk". They both also "walked the walk", as activists on behalf of their putative groups. Their identities, as Black or Indigenous, were put into relevant action. In large part because of the Dolezal case, the topic of transracialism -- e.g., being biologically "white" but identifying oneself as black -- has begun to be discussed by both psychologists and philosophers (see, e.g., "In Defense of Transracialism" by Rebecca Tuvel, Hypatia, 2017).

If biology is not destiny when it comes to gender, and everyone agrees that race is to a large extent a social construction, then why can't people be transracial in the same way that they can be transgender? One contrary argument is that race, while not a strictly biological concept, is an ancestral one. That is, to a large extent being black entails having parents, grandparents, or great-grandparents who were Black. The point is particularly applied to African-Americans, whose ancestry includes the heritage of slavery. For this reason, some scholars consider Michelle Obama, whose ancestors were slaves, to be African-American in ways that Barack Obama, whose paternal ancestors remained in Africa, is not (these same scholars use "Black" as a superordinate term for anyone who has African ancestry). The same argument explains why some African-Americans and Hispanic-Americans object when affirmative-action positions are offered to candidates from Africa or Latin America (or Spain or Portugal, for that matter) who do not have ancestors who lived in the United States, or who did not share a history of racial or ethnic discrimination. On the other hand, a similar point could be made about individuals who are transgender: you can be "a woman trapped in a man's body", but as a man, you did not suffer the kinds of discrimination faced by women who were always biologically female. It's complicated, and it will be interesting to see how the debate plays out.

Anyway, the basic point is that the self-concept concerns how we identify ourselves -- how we perceive ourselves, not how others perceive us. (We can incorporate others' perceptions of us into our self-concepts, but that is not at all the same thing.) Another recent turn in identity politics concerns intersectionality, a term coined by the legal scholar Kimberle Crenshaw (University of Chicago Legal Forum, 1989) to label the situation where an individual finds him- or herself a member of two or more groups.

For example, an African-American woman might find herself in conflict between her identity as black (distinguishing herself from other women) and her identity as a woman (distinguishing herself from other black people), and identify herself instead as a black woman -- a different category entirely. In Crenshaw's argument, black women constitute a multiply-burdened [sic] class.

Intersectionality isn't just a matter of a subordinate category (black woman) inheriting the features associated with two superordinate categories (blacks and women); rather, the subordinate category has special features that inhere in the intersection itself.

Put bluntly, black women confront issues that are different from those that confront either black people in general, or women in general. Although the issue of intersectionality originally arose out of discussions of feminist theory, it's easy to imagine other points of intersection -- for example, a gay African-American man -- where a person possesses the "markers" of two or more different minority identities.

Our discussion of the self as concept and as story illustrates a strategy that we have found particularly useful in our work: beginning with some fairly informal, folk-psychological notion of the self-concept, we see what happens when we apply our technical understanding of what that form of self-knowledge looks like. Much the same attitude (or, if you will, heuristic) can be applied to another piece of ordinary language: the self-image.

Schilder (1938, p. 11) defined the self-image as "The picture of our own body which we form in our mind, that is to say, the way in which the body appears to ourselves". What follows from this?

First, there is the question of whether, in talking about our mental images of ourselves, we should be talking about mental images at all. Beginning in the 1970s, a fairly intense debate raged about the way in which knowledge is stored in the mind. At this point, most cognitive psychologists are comfortable distinguishing between two forms of mental representation: meaning-based and perception-based (Anderson, 1983).

Perception-based representations represent the physical appearance of an object or event -- including the spatial relations among objects and features (up/down, left/right, back/front), and the temporal relations among objects and features (before/after). Perception-based representations are analog representations, comprising our "mental images" of things.

Meaning-based representations store knowledge, abstracted from perceptual detail, about the semantic relations among objects, features, and events, such as their meanings and category memberships. Meaning-based representations take the form of propositions -- primitive sentence-like units of meaning which omit concrete perceptual details.

The self-concept is a meaning-based representation of oneself -- regardless of whether it is organized as a proper set, a fuzzy set, a set of exemplars, or a theory. The self-as-story is also meaning-based.

The self-image is a perception-based representation of the self, which stores knowledge about our physical appearance.

Perception-based representations are relatively unstudied in social cognition, but it is quite clear that we have them.

In the laboratory, studies of the self-image qua image are very rare. One exception is a fascinating study by Mita, Dermer, and Knight (1977) on the mere exposure effect (Zajonc, 1968), in which subjects view a series of unfamiliar objects (e.g., nonsense polygons or Turkish words), and later make preference ratings of these same objects and others which had not been previously presented. On average, old objects tend to be preferred to new ones, and the extent of preference is correlated with the number of prior exposures. In Mita et al.'s (1977) experiment, subjects were presented with pairs of head-and-shoulder photographs of themselves and their friends, and asked which one they preferred. In each pair, one photo was the original, and the other was a left-right reversal.

Some sense of the procedure in Mita et al.'s experiment is given by considering these two photographs of Marilyn Monroe. The left-hand photo is a true photograph of the actress, with the conspicuous beauty mark on her left cheek. The right-hand photo is mirror-reversed, so that the beauty mark appears on her right cheek. According to Zajonc's prediction, Monroe herself, if she were given the choice, would prefer the reversed photo on the right, because it reflects (sorry) the way she would see herself in the mirror. But other people would prefer the original photo on the left, because that is the way they have seen her in movies, on television, and photographs.

The result was as predicted. When viewing photos of their friends, subjects preferred the original view (that is, the view as seen through the lens of the camera); but when viewing photos of themselves, the same subjects preferred the left-right reversal (that is, the view as would be seen in a mirror). Thus, our preferences for pictures match the way we typically view ourselves and others. Mita et al. took this as evidence for the mere exposure effect, which it is; but it is also evidence that we possess a highly differentiated self-image which preserves information about both visual details and the spatial relations among them.

The Mita et al. study demonstrates clearly that we possess a highly detailed image of our own (and others') faces, but the point probably applies to the rest of our bodies as well.

A number of psychometric instruments have been devised for assessing aspects of the body image.

Historically speaking, perhaps the most popular assessment method has been the Goodenough-Harris Draw-a-Person Test, in which the subject is asked to draw pictures of a man, a woman, and other figures. The traditional assumption behind this projective technique is that the subject will project his own body image onto the drawings of other people. But this is a dubious assumption, unproven at best. Moreover, the assessment relies heavily on the unwarranted assumption that people can draw well (just try, in the privacy of your own home, to draw a picture of a man and a woman; then shred the results before any of your friends can see the products of your efforts!).

The DAP, like most projective tests, is a pretty crummy psychometric instrument, pretty much lacking anything resembling standardization, norms, reliability, or validity.

Loren and Jean Chapman, two prominent schizophrenia researchers, developed a questionnaire method, the Body-Image Aberration Scale, for assessing body-image aberrations (hence the title) in patients with schizophrenia and other psychoses, and in individuals hypothetically at risk for schizophrenia. The scale consists of a number of subscales:

Note to readers prone to medical-student syndrome. The BIAS is actually not a particularly good predictor of later psychosis (it was an interesting idea that didn't really work out -- not least because, as we'll see a little later, body-image aberrations don't seem to be characteristic of schizophrenia), so don't worry too much if you said "yes" to most or all of the sample items given above. For details, see Chapman, Chapman, & Raulin, Journal of Abnormal Psychology, 1978.

By far the most popular instrument used in research, this consists of line drawings of males and females, clad in swimsuits, ranging from thin to not-so-thin. The drawings are connected by a continuous scale, on which subjects indicate their:

A "generational" study by Rozin & Fallon (1988) compared college men and women to their mothers and fathers. On average, mothers showed larger Current-Ideal discrepancies than their daughters -- and the fathers even more so!


As with the self-concept, the self-image can be illustrated with clinical data.

Patients in the acute stage of schizophrenia often complain of distortions in their perception of their own bodies.

Some classic studies of body image in acute schizophrenia employed adjustable mirrors of the kind that used to be found (perhaps they still are) in amusement-park "fun houses". These mirrors can be bent in three planes to produce distorted reflections of the person. In a series of studies by Traub, Orbach, and their colleagues, subjects were presented with distorted reflections of themselves, produced by bending certain portions of the mirror concave or convex, and were asked to adjust the mirror until they looked right.

The general finding was that the patients' mirror images remained distorted even after adjustment -- suggesting that schizophrenics really did have distorted body images. But then the investigators made the "mistake" of running a control condition, in which a rectangular picture frame was placed in the mirror, and the subjects were asked to adjust that image until it appeared normal. The schizophrenics showed an aberration in perception of the frame as well, suggesting that their perceptual aberration was not limited to their body image.

Much the same procedure can be used with computer "morphing" software.

In autotopagnosia (also known as body-image agnosia or somatotopagnosia), a neurological syndrome associated with focal lesions in the left parietal lobe, the patient can name body parts touched by the examiner, but cannot localize body parts on demand.

In phantom limb pain, amputees perceive their lost arms and legs as if they were still there.

In body dysmorphic disorder, the patient complains of bodily defects where there really aren't any.

In eating disorders such as anorexia and bulimia, the sufferer sees fat where the body is objectively normal, lean, or even gaunt.

But outside of the Traub-Orbach studies of body image in schizophrenia, little of this clinical folklore has been studied experimentally.

One exception is in the study of eating-disordered women (eating-disordered men haven't been studied much, though they do exist).

A study by Zellner et al. (1989), using the BIA, found that eating-disordered women showed a bigger current-ideal discrepancy than non-eating-disordered women, or than males in a comparison group.



Another study using the BIA, by Williamson et al. (1989), of women with bulimia, a special form of eating disorder, confirmed this difference. Even when women with bulimia were statistically matched with non-bulimic women on actual weight, the bulimic women showed a much greater current-ideal discrepancy than the normals, and gave higher ratings of their current body size as well -- indicating not just that they have an exaggerated ideal body image, but that they have an exaggerated current body image as well.


Perhaps inspired by the Traub-Orbach studies with the adjustable-mirror paradigm, more recent investigators have made use of computer software for image morphing to study body image in normal and eating-disordered individuals.

One such study employed the Body-Image Assessment Software developed at the University of Barcelona by Letosa-Porta, Ferrer-Garcia, and their colleagues (2005). The investigators take numerous measurements of the subject's body, and use these to create a computer "avatar" that mimics the shape of the subject's body. The subject is then asked to modify the image so that it corresponds to his/her real and ideal body image. The discrepancy between the original, objective avatar and the subject's real body image is taken as a measure of perceptual distortion; the discrepancy between the subject's real and ideal body images is taken as a measure of body dissatisfaction. A later study by Ferrer-Garcia et al. (2008) showed that women with a diagnosis of eating disorder (ED), or at risk for ED, scored higher on both measures than women who were not at risk.
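The two discrepancy scores can be made concrete with a small sketch. It assumes, purely for illustration, that each avatar setting (objective, "real", and "ideal") is summarized as the width of a few body regions in common arbitrary units; the region names and the signed mean-difference metric are hypothetical choices, not the scoring rule of the Body-Image Assessment Software.

```python
from dataclasses import dataclass

# Hypothetical body regions; the actual software's measurements may differ.
REGIONS = ("bust", "waist", "hips", "thighs")


@dataclass
class BodyImage:
    """Width of each body region (arbitrary units) for one avatar setting."""
    widths: dict[str, float]


def signed_discrepancy(a: BodyImage, b: BodyImage) -> float:
    """Mean of (a - b) across regions; positive means a is larger than b."""
    return sum(a.widths[r] - b.widths[r] for r in REGIONS) / len(REGIONS)


def perceptual_distortion(objective: BodyImage, real: BodyImage) -> float:
    """Positive: the subject sees the body as larger than it objectively is."""
    return signed_discrepancy(real, objective)


def body_dissatisfaction(real: BodyImage, ideal: BodyImage) -> float:
    """Positive: the subject's ideal is thinner than the perceived body."""
    return signed_discrepancy(real, ideal)


if __name__ == "__main__":
    objective = BodyImage({"bust": 30.0, "waist": 25.0, "hips": 35.0, "thighs": 20.0})
    real = BodyImage({"bust": 32.0, "waist": 28.0, "hips": 38.0, "thighs": 22.0})
    ideal = BodyImage({"bust": 30.0, "waist": 23.0, "hips": 33.0, "thighs": 18.0})
    print(perceptual_distortion(objective, real))  # 2.5
    print(body_dissatisfaction(real, ideal))       # 4.0
```

Keeping the scores signed, rather than absolute, preserves the direction of the error (overestimating versus underestimating one's size), which is the direction of interest in eating disorders.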

Another approach employs the Adolescent Body Morphing Tool developed by Aleong et al. (2007) at the University of Montreal. They first developed an Adolescent Body-Shape Database from front and side photographs of 160 male and female Canadian adolescents. The subjects were photographed (front and side views) while dressed in a body suit and ski mask (to protect anonymity). Then trained judges applied virtual tags to various points on the body image. A factor analysis of various measurements yielded a multidimensional representation of the "average" adolescent body. The resulting images can be morphed by increasing or decreasing the size of these dimensions by a certain percentage.

In a later study, Aleong et al. (2009) employed height, weight, and body-mass index to match each of 182 normal (i.e., non-eating-disordered) males and females to one of the images stored in the Adolescent Body-Shape Database, and then distorted the image, especially around the hips, thighs, and calves. The subjects were then asked to return the image to "normal". In psychophysical terms, the point of subjective equality, reached when the subject believed that the image had been returned to normal, is a measure of the accuracy of the body image: females were less accurate than males, but only with the side image.

The difference limen measured how much morphing was required for the subject to detect a difference from normal: females were more sensitive than males -- again, especially when viewing side images.
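A minimal sketch of the two psychophysical indices, assuming each trial is recorded as a signed morph percentage relative to the subject's matched, undistorted database image (0 = undistorted). The function names and the simple averaging are illustrative assumptions, not the scoring procedure of Aleong et al.

```python
from statistics import mean


def point_of_subjective_equality(final_settings: list[float]) -> float:
    """Mean morph level (%) at which the subject judged the image 'normal'."""
    return mean(final_settings)


def body_image_inaccuracy(final_settings: list[float]) -> float:
    """Absolute distance of the PSE from the undistorted image (0%);
    larger values mean a less accurate body image."""
    return abs(point_of_subjective_equality(final_settings))


def difference_limen(detection_levels: list[float]) -> float:
    """Mean absolute morph level (%) at which the subject first reported a
    difference from normal; smaller values mean greater sensitivity."""
    return mean(abs(level) for level in detection_levels)
```

Separating the two indices matters because, as the results above show, a group can be less accurate (a PSE farther from zero) and yet more sensitive (a smaller limen) at the same time.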

But you don't have to suffer from mental illness in order to have a self-image that is wildly discrepant from objective reality. A clever demonstration of this took the form of the "Dove Real Beauty Sketches", an advertising campaign mounted in 2013 by Dove, a unit of Unilever that makes a popular brand of bath soap. Dove commissioned Gil Zamora, a forensic artist who has worked with the San Jose Police Department and the FBI, to prepare sketches of ordinary women (i.e., not movie stars or other celebrities) from their self-descriptions (the women sat behind a screen, so that Zamora had to work only from their verbal descriptions). Then Zamora prepared another sketch of each woman, based on a verbal description of her by a randomly selected perceiver. When the sketches were placed side by side, the sketch based on the observer's description was more attractive than the one based on the woman's own description. The moral of the exercise, according to Dove: "You're more beautiful than you think you are" ("Ad About Women's Self-Image Creates a Sensation" by Tanzina Vega, New York Times, 04/19/2013).


How do we know what we look like? We look in the mirror (which reverses left and right), and we look down toward our feet (which gives us a somewhat distorted view of what lies between), and we feel our hearts beating (but not our blood pressure). Like video, only more so, new virtual-reality technology allows us to see ourselves as others see us, and also allows us to experience what it would be like to live in other bodies -- a process known as "VR embodiment". VR embodiment also enables subjects to have "out-of-body experiences" in which the self (at least the conscious self) appears to leave the body.

Interoception, Proprioception, and the Proto-Self

For most of its history the study of memory has been the study of verbal learning. And accordingly, many psychologists have come to think of memory as a set of words (or phrases or sentences), each representing a concept, joined to each other by associative links representing the relations between them, the whole kit and kaboodle forming an associative network of meaning-based knowledge (Anderson, 1983) -- Schank and Abelson's (1995) theory of knowledge as stories is explicitly opposed to this conventional thinking. It is also commonplace to distinguish between two broad types of verbal knowledge stored in memory (Tulving, 1983). Episodic memory is autobiographical memory for a person's concrete behaviors and experiences: each episode is associated with a unique location in space and time. Semantic memory is abstract, generic, context-free knowledge about the world. Almost by definition, episodic memory is part of the self-concept, because episodic memory is about the self: It is the record of the individual person's past experiences, thoughts, and actions. But semantic memory can also be about the self, recording information about physical and psychosocial traits of the sort that might be associated with the self-concept.

Within the verbal-learning tradition, knowledge about other people has been studied extensively in a line of research known as person memory (Hastie, Ostrom, Ebbesen, Wyer, Hamilton, & Carlston, 1980). Several different models of person memory have been proposed (Kihlstrom & Hastie, 1993), and some of these have been appropriated for the study of memory for one's self (Kihlstrom & Klein, 1994; Klein & Loftus, 1993). The simplest person-memory model is an associative network with labeled links. Each person (perhaps his or her name) is represented as a single node in the network, and knowledge about that person is represented as fanning out from that central node. The person-nodes are also connected to each other, to represent relationships among them, but that is another matter. The point is that in these sorts of models the various nodes are densely interconnected, so that each item of knowledge is associatively linked to lots of other items. In theory, the interconnections among nodes form the basis for associative priming effects, in which the presentation of one item facilitates the processing of an associatively related one.

Of course, knowledge about a person can build up pretty fast: consider how much we know about even our casual acquaintances. According to the spreading activation theory that underlies most associative network models of memory (Anderson, 1983), this creates a liability known as the fan effect: the more information you know about someone or something, the longer it takes to retrieve any particular item of information.
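A toy sketch of the fan effect. It assumes, as a simplification loosely in the spirit of spreading-activation accounts such as Anderson's (1983), that a fixed amount of activation from a concept node is divided equally among all of its links, and that retrieval time falls as the activation reaching the target fact rises. The class, the 500 ms base time, and the 100 ms scale are hypothetical, not parameters of ACT or of any published model.

```python
from collections import defaultdict


class AssociativeNetwork:
    """Concept nodes (e.g., a person) with links fanning out to facts."""

    def __init__(self) -> None:
        self.links: dict[str, set[str]] = defaultdict(set)

    def add_fact(self, concept: str, fact: str) -> None:
        self.links[concept].add(fact)

    def fan(self, concept: str) -> int:
        """Number of facts linked to the concept node."""
        return len(self.links.get(concept, ()))

    def activation_reaching(self, concept: str, fact: str, source: float = 1.0) -> float:
        """Source activation split equally across all of the concept's links."""
        if fact not in self.links.get(concept, ()):
            return 0.0
        return source / self.fan(concept)

    def retrieval_time(self, concept: str, fact: str,
                       base_ms: float = 500.0, scale_ms: float = 100.0) -> float:
        """Hypothetical latency: a constant plus a term inversely
        proportional to the activation reaching the fact."""
        activation = self.activation_reaching(concept, fact)
        if activation == 0.0:
            raise KeyError(f"{fact!r} is not linked to {concept!r}")
        return base_ms + scale_ms / activation


if __name__ == "__main__":
    net = AssociativeNetwork()
    net.add_fact("casual acquaintance", "lives in Berkeley")
    for fact in ("lives in Berkeley", "plays cello", "has two dogs", "likes jazz"):
        net.add_fact("close friend", fact)
    # Same fact, but the close friend's node has a larger fan, so retrieval is slower.
    print(net.retrieval_time("casual acquaintance", "lives in Berkeley"))  # 600.0
    print(net.retrieval_time("close friend", "lives in Berkeley"))         # 900.0
```

The only thing driving the slowdown here is the number of links sharing the source activation, which is exactly the liability the fan effect describes; organizing facts into subgroups, as discussed next, would be one way to reduce the effective fan.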

Is there any way around the fan effect in person memory? One possibility which has been suggested is that our knowledge about ourselves and others (especially those whom we know well) is organized in some way -- perhaps according to its trait implications. There is some evidence that organization does abolish the fan effect (Smith, Adams, & Schorr, 1978), but this evidence is rather controversial, and some have concluded that memory isn't really organized in this manner after all (Reder & Anderson, 1980). Nevertheless, a hierarchically organized memory structure is so sensible that many person-memory theorists, such as Hamilton and Ostrom, have adopted it anyway (Hastie et al., 1980; Kihlstrom & Hastie, 1993).

How can we generalize from person memory to the structure of memory for the self? The simplest expedient is to take a generic associative-network model of person memory, which has nodes representing knowledge about a person fanning out from a node representing that person him- or herself (following the "James Bartlett" example discussed at length in the lecture supplement on Social Memory), and substitute a "Self" node for the "Person" node.

Actually, there are three possibilities here, distinguished particularly with respect to the relations between behavioral and trait information, or episodic and semantic knowledge.


Research on person memory (i.e., memory for other people) suggests a model in which episodic knowledge is encoded independently of semantic knowledge -- or, put another way, in which knowledge of behaviors is represented separately from knowledge of traits. If the self is a person just like any other, we would expect the representation of self in memory to have the same structure, with episodic self-knowledge encoded independently of semantic self-knowledge.

On the other hand, the self may be exceptional, in that memory for specific behavioral episodes might be organized by their trait implications. In this model, nodes representing traits fan off the node representing the self, and nodes representing specific episodes which exemplify these traits fan off the trait-nodes. This hierarchical model implies that retrieval has to pass through traits to access information about behaviors. Thus, traits will be activated in the course of gaining access to information about behaviors.

Alternatively, Bem's self-perception theory denies that we retain any knowledge about our traits and attitudes (because we don't really have any traits or attitudes); when we are asked about our traits and attitudes, we infer what they might be from knowledge of relevant behaviors. From this point of view, the self contains only episodic knowledge about experiences and behaviors, and semantic knowledge about traits is known only indirectly, by inference. One such inferential process would involve sampling autobiographical memory, and integrating the trait implications of the memories so retrieved. In this computational model, retrieval must pass through behaviors in order to reach traits. Put another way, nodes representing behaviors will be activated in the course of recovering -- more precisely, in the course of constructing -- information about traits.

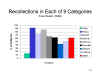

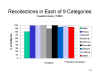
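The three candidate structures can be compared with a toy sketch. Each model is a tiny directed graph rooted at a SELF node, with one trait node and one episode node whose names are hypothetical; a task "activates" the nodes on its breadth-first retrieval route from SELF, and the define task is assumed to tap general word knowledge outside the self-structure. The rule that priming occurs whenever the first task activates a node on the second task's route is an assumption of this sketch, not a formula from Klein and Loftus. Under that rule, only the independence structure predicts no priming between describe and recall in either direction.

```python
from collections import deque

# Toy memory structures for one trait ("kind") and one behavioral episode.
MODELS: dict[str, dict[str, list[str]]] = {
    # Episodic and semantic self-knowledge hang independently off the self.
    "independence": {"SELF": ["trait:kind", "episode:helped_friend"]},
    # Episodes are filed under the traits they exemplify.
    "hierarchical": {"SELF": ["trait:kind"],
                     "trait:kind": ["episode:helped_friend"]},
    # Self-perception: episodes are stored; traits are inferred from them.
    "computational": {"SELF": ["episode:helped_friend"],
                      "episode:helped_friend": ["trait:kind"]},
}

# What each self-referent task must retrieve.
TASK_TARGET = {"describe": "trait:kind", "recall": "episode:helped_friend"}


def retrieval_path(graph: dict[str, list[str]], target: str) -> list[str]:
    """Breadth-first search from SELF; returns the node list ending at target."""
    queue = deque([["SELF"]])
    seen = {"SELF"}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    raise ValueError(f"{target!r} is unreachable from SELF")


def activated(graph: dict[str, list[str]], task: str) -> set[str]:
    """Nodes (other than SELF) activated while performing a task."""
    if task == "define":
        # Defining the word taps general semantic memory, not the self-structure.
        return {"word:kind"}
    return set(retrieval_path(graph, TASK_TARGET[task])[1:])


def predicts_priming(graph: dict[str, list[str]], first: str, second: str) -> bool:
    """Assumed rule: priming if the first task activates any node that the
    second task also has to activate."""
    return bool(activated(graph, first) & activated(graph, second))


if __name__ == "__main__":
    for name, graph in MODELS.items():
        print(f"{name:>13}: recall->describe {predicts_priming(graph, 'recall', 'describe')}, "
              f"describe->recall {predicts_priming(graph, 'describe', 'recall')}")
```

The same overlap rule also yields repetition priming when a task is repeated, since a task's route always overlaps with itself.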

An extensive series of studies by Klein and Loftus (1992) has produced a compelling comparative test of these models. These studies adapted for the study of the self the priming paradigm familiar in studies of language and memory, in which presentation of one item facilitates the processing of another associatively related item. Subjects were presented with trait adjectives as probes, and performed one of three tasks. In the define task, they simply defined the word; in the describe task, they rated the degree to which the term described themselves; in the recall task, they remembered an incident in which they displayed behavior relevant to the trait. For each probe, two of these tasks were performed in sequence -- for example, describe might be followed by recall, or define by recall, or recall by describe. With three tasks, there were nine possible sequences, and the crucial data were the subject's response latencies on the second task of each pair.
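The design can be laid out as a small sketch: three tasks crossed with themselves give the nine initial-target sequences, and facilitation of a target task can be expressed relative to the neutral define baseline, as in the comparisons the studies relied on. The function names and the dictionary of mean latencies are hypothetical; no latencies from the studies are supplied here.

```python
from itertools import product

TASKS = ("define", "describe", "recall")


def task_sequences() -> list[tuple[str, str]]:
    """Every ordered (initial task, target task) pair: 3 x 3 = 9 sequences."""
    return list(product(TASKS, repeat=2))


def priming_effect(mean_latency_ms: dict[tuple[str, str], float],
                   initial: str, target: str, baseline: str = "define") -> float:
    """Facilitation of `target` by `initial`, in ms, relative to the baseline
    initial task. Positive values mean the initial task sped up the target."""
    return mean_latency_ms[(baseline, target)] - mean_latency_ms[(initial, target)]


if __name__ == "__main__":
    for initial, target in task_sequences():
        print(f"{initial:>8} -> {target}")
```

Given observed mean latencies, the critical cross-task comparisons are `priming_effect(latencies, "recall", "describe")` and `priming_effect(latencies, "describe", "recall")`; values near zero in both correspond to the absence of semantic priming described below.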


Because priming occurs as a function of overlap between the requirements of the initial task and the final task, systematic differences in response latencies will tell us whether activation passes through trait information on the way to behaviors, or vice-versa, or neither. When the two processing tasks were identical, there was a substantial repetition priming effect of the first one on the second. But when Klein and Loftus (1992) examined the effect of recall on describe, they saw no evidence of semantic priming compared to the effects of the neutral define task. Nor was there semantic priming when they examined the effect of describe on recall (again, compared to the effects of the neutral define task). Contrary to the hierarchical model, the retrieval of autobiographical memory does not automatically invoke trait information. And contrary to the self-perception model, retrieval of trait information does not automatically invoke memory for behavioral episodes. Because self-description and autobiographical retrieval do not prime each other, Klein and Loftus conclude that items of episodic and semantic self-knowledge must be represented independently of each other.

Parallel findings have been obtained in case studies of amnesic patients' self-knowledge.

The Case of K.C. The first such study was, in fact, inspired by Klein's (Klein & Loftus, 1993) claim, based on his priming studies, that episodic self-knowledge is encoded independently of semantic self-knowledge. In a commentary on Klein's paper, Tulving reported an experiment that he conducted with Patient K.C., who was rendered permanently amnesic as a result of a motorcycle accident at age 30. K.C. is an especially interesting case of amnesia, because he has a complete anterograde and retrograde amnesia: he has no conscious recollection of anything he has done or experienced throughout his entire life, both before and after his accident. Interestingly, K.C. also underwent a marked personality change as a result of his accident. Whereas his "premorbid" personality was rather extraverted (he was injured riding a motorcycle, after all!), his "postmorbid" personality became rather introverted.

Tulving was interested in whether K.C. had any knowledge of what he was like as a person, despite his dense amnesia in terms of explicit episodic memory. Employing an adjective checklist supplied by Klein, Tulving asked K.C. and his mother to rate both their own and each other's personality.

Of course, much of this apparent accuracy could be achieved if K.C. and his mother simply said good things about each other. Accordingly, Tulving conducted a more rigorous test in which K.C. and his mother were asked to select the more descriptive adjective from pairs of adjectives that were matched for social desirability.

The Case of W.J. Klein himself obtained similar findings from an 18-year-old college student who temporarily lost consciousness following a concussive blow to the head, received when she fell out of bed during the second quarter of her freshman year (Klein, Loftus, & Kihlstrom, 1996). Although a medical examination revealed no neurological abnormalities, W.J. did show an anterograde amnesia covering the 45 minutes that elapsed after her injury (very common in cases of concussion), as well as a traumatic retrograde amnesia that covered the preceding 6-7 months -- essentially, the entire period of time between her high-school graduation and her concussion (organic amnesias that are bounded by personally significant events are rare, but they do occur, and they are very intriguing). This retrograde amnesia remitted over the next 11 days, but not before Klein was able to complete extensive memory and personality testing. The personality testing was particularly interesting because W.J., like many college students, showed rather marked changes in personality after entering college, compared to what she had been like in high school.

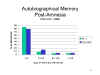


Memory testing of W.J. confirmed her retrograde amnesia. Employing the Galton-Crovitz cued-recall technique, in which subjects are presented with a familiar word and asked to retrieve a related autobiographical memory, Klein et al. showed that she was much less likely than control subjects to produce memories from the most recent 12 months of her life, and much more likely to produce memories from earlier epochs. After W.J.'s retrograde amnesia lifted, her performance on this task was highly similar to that of the controls.


Personality testing revealed a pattern of performance similar to that observed in patient K.C.

Taken together, these neuropsychological case studies support the conclusions of Klein's priming studies of self-knowledge. Amnesic patients, who lack autobiographical memory for their actions and experiences, nevertheless retain substantial knowledge of their own personalities. This indicates that "semantic" trait information and "episodic" behavioral information are represented independently in memory.

Semantic Self-Knowledge as Implicit Memory

Technically, in both cases the semantic self-knowledge preserved in amnesia accompanied by personality change probably reflects spared implicit memory for the patient's past personal experiences.

Another perspective on the relationship between memory and identity is provided by Encircling, a novel in three volumes by Carl Frode Tiller (2007; translated from the Norwegian by Barbara Haveland, 2015; reviewed in "The Possessed" by Clair Wills, New York Review of Books, 07/22/2021). David, a Norwegian in his thirties who has lost most of his memory, places a newspaper advertisement asking for people who knew him to write to him and fill in the gaps in his memory. Vol. 1 covers his teenage years; Vol. 2, childhood; Vol. 3, young adulthood. David's recent memories have been spared (so far), so in all there are nine different accounts of his life (as well as nine different perspectives on the recent social history of rural Norway). The result is what Endel Tulving (and I) have called remembering by knowing -- abstract knowledge of the past, knowing the events of one's own life much as one can recount the major battles of the Civil War. Because autobiographical memory is an important part of identity, David constructs (or maybe reconstructs) his self-concept at the same time. On the jacket of Vol. 3, a blurb states that "Identity is not a monolith but a collage", built up of fragments. On the other hand, David's therapist suggests that (quoting Wills quoting the book) "we are little more than 'situation-appropriate personas', with no coherent identity at all". Wills herself has a different take: that Tiller "is not so much interested in how we are formed by the perspectives of other people as in how we are destroyed by them. Again and again his characters battle to maintain a sense of self in their encounters with others, and again and again they lose the battle".

The Case of D.B.: Projecting Oneself into the Future

Another amnesic patient illustrates the same basic points. Patient D.B. (Klein, Loftus, & Kihlstrom, 2002) was a 78-year-old retired man who went into cardiac arrest while playing basketball (this patient is not the D.B. of "blindsight" fame). As a result, he experienced anoxic encephalopathy, or brain damage due to a lack of oxygen supply to the brain. A CT scan revealed no neurological abnormalities, but upon recovery he displayed a pattern of memory loss similar to that of patient K.C.: a dense anterograde amnesia covering memories for episodes since his accident, plus a dense retrograde amnesia covering his entire life before the accident.

D.B. was tested with both episodic and semantic versions of the Galton-Crovitz procedure. When asked to recall cue-related personal experiences, D.B. showed a profound deficit compared to control subjects. But when he was asked to recall cue-related historical events, his performance improved greatly. Again, these results are consistent with the idea that amnesia affects episodic self-knowledge, but spares semantic knowledge.


In another part of the study, D.B. was asked to imagine the future as well as to remember the past, with respect to both personal experiences and historical events. For example:


Compared to controls, D.B. displayed almost no knowledge of his past experiences, but also no ability to project himself into the future. By contrast, he showed fairly good ability to recall public issues from the past, and to give reasonable predictions of issues that might emerge in the future.

These findings on the experience of temporality are consistent with the conclusion that amnesia impairs episodic self-knowledge, but spares other forms of memory, including semantic knowledge about the self and semantic knowledge about the historical past. But D.B.'s data also raise the intriguing suggestion that the ability to imagine the future is related to the ability to remember the past. Both are components of chronesthesia, or mental time travel -- defined by Tulving (2005) as the ability to project oneself, mentally, into the past and the future.

Both narrating the personal past and projecting the personal future entail storytelling -- which brings up yet another form of mental representation, knowledge as stories. Recently, Schank and Abelson (1995, p. 80) have asserted that "from the point of view of the social functions of knowledge, what people know consists almost exclusively of stories and the cognitive machinery necessary to understand, remember, and tell them." As they expand on the idea (p. 1):

Schank and Abelson concede that knowledge also can be represented as facts, beliefs, lexical items like words and numbers, and rule systems (like grammar), but they also argue that, when properly analyzed, non-story items of knowledge actually turn out to be stories after all; or, at least, they have stories behind them; or else, they turn out not to be knowledge (for example, they may constitute indexes used to organize and locate stories). Their important point is that from a functional standpoint, which considers how knowledge is used and communicated, knowledge tends to be represented as stories.

The idea of knowledge as stories, in turn, is congruent with Pennington and Hastie's (1993) story model of juror decision-making. According to Pennington and Hastie, jurors routinely organize the evidence presented to them into a story structure with initiating events, goals, actions, and consequences. According to Schank and Abelson (1995), each of us does the same sort of thing with the evidence of our own lives. From this point of view, the self consists of the stories we tell about ourselves -- stories which relate how we got where we are, and why, and what we have done, and what happened next. We rehearse these stories to ourselves to remind ourselves of who we are; we tell them to other people to encourage them to form particular impressions of ourselves; and we change the stories as our self-understanding, or our strategic self-presentation, changes. When stories aren't told, they tend to be forgotten -- a fact dramatically illustrated by Nelson's (1993) studies of the development of autobiographical memory in young children. Furthermore, when something new happens to us, the story we tell about it, to ourselves and to other people, reflects not only our understanding of the event, but our understanding of ourselves as participants in that event. Sometimes, stories are assimilated to our self-understanding; on other occasions, our self-understanding must accommodate itself to the new story. When this happens, the whole story of our life changes.

The twin ideas of self as memory, and of self as story, bring us inevitably to the topic of autobiographical memory (ABM) -- that is, a real person's memories for his own actions and experiences, which occurred in the ordinary course of everyday living.

Memory and Identity

ABM is an obviously important aspect of the self, as it contains a record of the individual's own actions and experiences.

In his Essay Concerning Human Understanding (1690), the philosopher John Locke went so far as to assert that memory formed the basis for the individual's identity -- our sense that we are the same person, the same self, now as we were at some previous time. Locke's idea sounds reasonable, but other philosophers raised objections to his equation of identity with memory.

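Thomas Reid's point is a logical one, based on the principle of transitivity:

Suppose a brave officer to have been flogged when a boy at school for robbing an orchard, to have taken a standard from the enemy in his first campaign, and to have been made a general in advanced life; suppose, also, which must be admitted to be possible, that, when he took the standard, he was conscious of his having been flogged at school, and that, when made a general, he was conscious of his taking the standard but had absolutely lost consciousness of the flogging.

Because the general remembers taking the standard, and the young officer remembered the flogging, transitivity implies that the general is the same person as the boy who was flogged; but because the general no longer remembers the flogging, Locke's criterion implies that he is not. Memory alone, Reid concluded, cannot be what makes us the same person over time.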

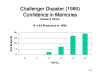

Note for Coincidence-Collectors: The day that Dean died, May 31, 2009, happened to be the 98th anniversary of the launching of the Titanic. And her older brother died on April 14, 1992, which was the 80th anniversary of the shipwreck. These are examples of what is sometimes called an anniversary reaction, in which people die on the anniversary of some important event in their lives. The deaths of John Adams and Thomas Jefferson, who both died on July 4, 1826, the 50th anniversary of the signing of the Declaration of Independence, are two other examples.

For more about memory and identity, see "Memory and the Sense of Personal Identity" by Stanley B. Klein and Shaun Nichols (Mind, 2012).

Autobiographical memory is technically classified as episodic memory -- itself a subset of declarative memory, consisting of factual knowledge concerning events and experiences that have unique locations in space and time (two events can't occur at the same time in the same space). Episodic memory is commonly studied with variations on the verbal-learning paradigm, which is explicitly intended as a laboratory analogue of autobiographical memory: each list, and each word on a list, constitutes a discrete event, with a unique location in space and time. And, as we'll see, ABM can be studied with variants on verbal-learning procedures.

ABM is episodic memory, as opposed to semantic memory or procedural knowledge, but ABM isn't just episodic memory -- there's more to it than a list of items studied at particular places and particular times (Kihlstrom, 2009). I expand on these points below.

Self-Reference

Autobiographical memories are episodic memories, but they're not just episodic memories. In an important essay, Alan Baddeley (1988) put his finger on the difference: "Autobiographical memory... is particularly concerned with the past as it relates to ourselves as persons" (p. 13). To really qualify as autobiographical, a memory ought to have some "auto" in it, so that the self is really psychologically present -- in a way that it's not present in a memory like "The hippie touched the debutante."

Taking a leaf from Fillmore's case-grammar approach to linguistics (Fillmore, 1968; see also Brown & Fish, 1983), it seems that in every autobiographical memory the self is represented in terms of its role in the event: as agent or patient, stimulus or experiencer.

But what is self-reference a reference to? As discussed earlier, from a cognitive point of view, the self is, simply, one's mental representation of oneself -- no different, in principle, from the person's mental representation of other objects. This mental representation can be variously construed as a concept (think of the "self-concept"), an image (now think of the "self-image"), or as a memory structure. For present purposes, we can think of the self simply as a declarative knowledge structure that represents factual knowledge of oneself. And self-knowledge, like all declarative knowledge, comes in two forms:

Within the framework of a generic network model of memory (such as ACT), we can represent the self as a node in a memory network, with links to nodes representing other items of declarative self-knowledge. Although this illustration focuses on verbal self-knowledge, it should be clear that the self, as a knowledge structure, contains both meaning-based and perception-based knowledge about the self. Examples follow:

For present purposes, we're going to focus on verbalized recollections.
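To make the network idea concrete, here is a minimal Python sketch of a self node linked to other items of self-knowledge. It is a toy under stated assumptions, not ACT or any published model: the `Node` class, its `kind` tags ("meaning" vs. "perception"), and the example self-descriptions are all hypothetical, and links are stored as plain bidirectional associations, without strengths or spreading activation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A node in a toy associative memory network (hypothetical; not ACT itself)."""

    label: str
    kind: str = "concept"  # hypothetical tags: "self", "meaning", or "perception"
    # Links are excluded from repr/eq so that cycles in the network are harmless.
    links: list[Node] = field(default_factory=list, repr=False, compare=False)

    def link(self, other: Node) -> None:
        """Create a bidirectional association between two nodes."""
        self.links.append(other)
        other.links.append(self)

    def neighbors(self, kind: str | None = None) -> list[str]:
        """Labels of directly linked nodes, optionally filtered by kind."""
        return [n.label for n in self.links if kind is None or n.kind == kind]


# Hypothetical self-knowledge: two verbal (meaning-based) items, one image-like item.
me = Node("SELF", kind="self")
for label, kind in [
    ("I am shy at parties", "meaning"),
    ("I grew up near the ocean", "meaning"),
    ("picture of my childhood bedroom", "perception"),
]:
    me.link(Node(label, kind=kind))

print(me.neighbors("meaning"))  # ['I am shy at parties', 'I grew up near the ocean']
```

Filtering by `kind` is just a convenient way to show that one self node can anchor both meaning-based and perception-based knowledge; a fuller model would also need the episodic memories themselves as nodes.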

The Autobiographical Knowledge Base

Perhaps the most thorough cognitive analysis of ABM has been provided by Michael Conway and his colleagues (Conway, 1992, 1996; Conway & Pleydell-Pearce, 2000) in terms of what Conway calls the autobiographical knowledge base, which is presented in the form of a generic associative memory network (although without the working computer simulation of ACT and similar formal models).

In Conway's theory, individual ABMs are represented as nodes linked to other elements in the network, and organized in several different ways.

What Conway calls the self-memory system reflects the conjunction of the autobiographical knowledge base with the working self. The working self, analogous to working memory, consists of an activated self-schema as well as current personal goals and current emotional state.

Conway and Pleydell-Pearce (2000) argue that ABMs are constructed (note the Bartlettian term) in two ways.

Autobiographical memory is not just about episodes, and it is not just about auto: it is also biographical. It is not enough to construe autobiographical memory as memories of one's own experiences, thoughts, and actions, strung together arbitrarily as if they were items on a word-list. Autobiographical memory is the story that the person tells about him- or herself or, at the very least, it is part of that story. As such, we would expect autobiographical memory to have something like an Aristotelian plot structure (see his Poetics): an "arrangement of the incidents" into a chronological sequence.

We can distinguish between two aspects of temporal organization: internal organization, or how the events within a single memory are ordered, and external organization, or how one autobiographical memory is related to others.

A good example of internal organization (if I may say so) is recall of the events of hypnosis, which typically begins at the beginning of the session and proceeds in a more or less chronological succession to the end. Kihlstrom (Kihlstrom & Evans, 1973; Kihlstrom, Evans, Orne, & Orne, 1980) found that the temporal sequencing of recall was disrupted during suggested posthypnotic amnesia. The implication is that (1) episodic memories are marked with temporal tags; (2) episodic memories are organized according to the temporal relationships between them; and (3) the retrieval process enters the sequence at the beginning and follows it until the end.

It should be noted, however, that in the hypnotic case subjects are given a retrieval cue that specifies the beginning of the temporal sequence. For example, the subject is asked to "recall everything that happened since you began looking at the target" (a fixation point used in the induction of hypnosis by eye closure). If the instructions had been different (for example, a request to recall "everything that happened while you were hypnotized"), a somewhat different pattern of recall might be observed.

For current purposes, we'll focus on external organization, or how one autobiographical memory is related to others.

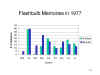

Despite infantile and childhood amnesia and the reminiscence bump, the major feature of the distribution of ABMs is the temporal gradient: recent periods of life yield more memories than remote ones. This gradient is universally observed, no matter the means by which ABMs are sampled.

Employing the Crovitz-Robinson technique, Robinson (1976) observed that the distribution of response latencies followed an inverted-U-shaped function. Recall of very recent memories occurred relatively quickly; as the age of the memory increased, so did response latency. The exception was the rather short latencies associated with very remote memories. Robinson suggested that these very remote memories might be unrepresentative of ABMs in general: because they are highly salient, they are quickly retrieved. Otherwise, the distribution suggests that the retrieval of ABMs follows a serial, backwards, self-terminating search. That is, the retrieval process begins with the most recent ABMs, and searches backward until it identifies the first memory that satisfies the requirements of the cue.
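The search hypothesis can be sketched in a few lines of Python. This is an illustrative toy, not Robinson's procedure: the `Memory` records, their cue words, and the use of the number of memories examined as a stand-in for response latency are all assumptions. It reproduces only the rising part of the latency curve; it says nothing about the quick retrieval of highly salient remote memories.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    year: int             # when the event occurred (hypothetical data)
    description: str
    cues: frozenset[str]  # cue words this memory would satisfy


def backward_search(memories: list[Memory], cue: str) -> tuple[Memory | None, int]:
    """Serial, backward, self-terminating search.

    `memories` must be in chronological order (oldest first). Returns the most
    recent memory matching `cue` and the number of memories examined, which
    serves as a proxy for response latency in this toy model.
    """
    steps = 0
    for memory in reversed(memories):  # start with the most recent memory
        steps += 1
        if cue in memory.cues:
            return memory, steps  # self-terminating: stop at the first match
    return None, steps  # no match: the whole sequence was searched


life = [
    Memory(1975, "first day of school", frozenset({"school", "bus"})),
    Memory(1990, "graduation", frozenset({"school", "party"})),
    Memory(2010, "wedding", frozenset({"party", "cake"})),
    Memory(2020, "moving house", frozenset({"car", "box"})),
]

for cue in ["party", "school", "bus"]:
    memory, steps = backward_search(life, cue)
    print(cue, "->", memory.description, f"({steps} steps)")
```

Older matches take more steps (here, "party" finds the 2010 wedding after 2 steps, "bus" finds the 1975 memory after 4), which is the pattern the backward-search account uses to explain why latency increases with the age of the memory.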

The way to get around this problem is to present subjects with retrieval cues that constrain the time period from which the memory is to be retrieved. This is what Chew (1979) did in an unpublished doctoral dissertation from Harvard University (see also Kihlstrom et al., 1988). Chew found that cues with high imagery value produced shorter response latencies than did those with low imagery value, and that imagery value interacted with the epoch (remote or recent) from which the memory was drawn. Even so, memories drawn from the more recent epoch were associated with shorter response latencies than those drawn from the more remote one. Moreover, within each epoch the distribution of memories was characterized by a reverse J-shape. That is, fewer memories were retrieved from the early portions of each epoch. These findings are consistent with the hypothesis that retrieval begins at the most recent end of a temporal period, and proceeds backwards in time until it reaches an appropriate memory.

At the same time, the idea that ABMs are organized along a single continuous chronological sequence seems unlikely to be true. First, it would be very inefficient. Yes, it would produce a fan effect, but as people aged their memories would pile up, and a serial search through them would take longer and longer. Rather, it seems likely that the chronological sequence of ABMs is organized into chunks representing smaller segments of time. There's an analogy to the English alphabet. Yes, it's a single temporal sequence, from A and B through L and M to X, Y, and Z. But this long temporal string is also broken up into smaller chunks.

Consider, for example, a telephone keypad (or, for