See also the General Psychology lectures on "Psychological Development".

Psychology offers three principal views of development:

Although there are developmental psychologists who are primarily interested in development throughout the entire lifespan, from birth to death, most work in developmental psychology is focused on infants and children. In various ways, it addresses a single question:

What is the difference between the mind of an adult and the mind of a child?

In answering this question, developmental psychology has focused on two sorts of differences:

In addition, developmental psychology has been dominated by two views of ontogenetic development:

The literature on newborns' preference for faces offers an example of the nativist-empiricist debate. Do infants come out of the womb already knowing something about, and interested in, faces? Or is their knowledge of, and interest in, faces acquired through experiences of being fed and comforted?

A series of studies by Morton, Johnson, and their colleagues (e.g., Morton & Johnson, 1991) showed that newborns prefer to look at objects that resemble faces, over objects that have the same features arranged in a non-face-like configuration.

One study presented newborn infants, tested within the first hour or so after birth, with a face-like stimulus, a stimulus with the same features arranged in a non-face-like configuration, and a blank stimulus. The infants were pretty uninterested in the blank stimulus, but showed more interest in the face-like stimulus than in the non-face-like one.

Another study confirmed this finding: newborn infants preferred the face-like stimulus to one that had a face-like configuration but not face-like features, another consisting of a linear arrangement of facial features, and yet another that inverted the configural stimulus.

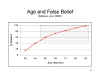

Interestingly, however, a further study of infants who were 5, 10, and 19 weeks old showed that the 5-week-olds had no preference for faces (compared to the configural stimulus). Apparently, newborns have a preference for faces that 5-week-olds have lost, and then regain by 10 weeks!

The apparent paradox was resolved by Morton and Johnson with a "two-process" theory of face recognition.

Interestingly, infants also distinguish among faces by racial features. Scott (2012) found that 5-month-old infants matched happy sounds (like laughter) with happy faces equally well, regardless of the race of the face. But by 9 months of age, the infants more readily associated those same happy sounds with faces representing their own race, compared to other-race faces.

That social-cognitive processes change over time was demonstrated in an early study by Peevers and Secord (1973), who asked subjects in various age groups (kindergarten, 3rd, 7th, and 11th grades, and college students) to describe three friends and one disliked person, all of the same gender as the subject. The spontaneous descriptions emerging from these interviews were then coded for various features:

Coding for descriptiveness indicated that kindergartners rarely used trait terms, preferring to describe others in simple, superficial terms. However, high-school and college students were more likely to make trait attributions.


Remarkably, the descriptions had very little depth at any age. However, high-school and college students did begin to show some appreciation that other people's characteristics were more conditional than stable and consistent.


Over the 20th century, developmental theories gradually shifted their focus away from debates over the cognitive starting point, as in the debate between nativism and empiricism, and toward debates over the cognitive endpoint. Thus, developmental psychology began to take on a "teleological" perspective, asking "What is the child developing toward?".

From one empiricist standpoint, for example, the notion of a cognitive endpoint doesn't make any sense: you start out knowing nothing, and you keep learning until you die.

An early example of this shift in viewpoint came with the work of Kurt Goldstein and Michael Scheerer (1941), who construed cognitive development as the development of abstract thinking.

But this shift really began with Jean Piaget, whose theory of development combined aspects of both nativism and empiricism. Piaget held that the neonate comes into the world with an innate cognitive endowment, in the form of a set of primitive cognitive schemata. Just as a genotype develops into a phenotype through interaction with the environment, Piaget argued that development occurs as a dialogue between these schemata and the environment:

Further, Piaget argued that the child's developmental progress was marked by a series of milestones. Most of these pertained to "nonsocial" aspects of cognition:

In the domain of social cognition, the principal Piagetian milestone is the loss of egocentrism -- that is, the loss of the child's "belief that other people experience the world as the child does". Egocentrism is commonly measured by the "three mountains" point-of-view task (Piaget & Inhelder, 1967). Before age 4, children will say that the doll can see the church. After age 7, children understand that the doll cannot see the church, because the mountain is in the way.


The loss of egocentrism marks the transition between the pre-operational period and concrete operations, and typically occurs sometime between ages 4 and 7. Egocentrism is related to conservation, another milestone of this transition, in that children who have lost egocentrism understand that the same object can look different, depending on their point of view. But in the context of social cognition, the loss of egocentrism reflects the child's ability to appreciate what is on another person's mind.

Egocentrism is related to the idea that cognitive development consists in the development of a theory of mind.

In the course of a debate over nonhuman cognitive abilities, Premack and Woodruff (1978) suggested that humans (and perhaps other primates, especially great apes) had a theory of mind -- the understanding that we have mental states of belief, feeling, and desire, that other people also have mental states, and that their mental states may differ from our own.

With respect to psychology, the basic elements of a theory of mind are as follows:

Viewed in this way, the theory of mind is not just an aspect of cognitive development. It is very much the development of social cognition.

The concept of theory of mind was quickly imported into human developmental psychology, as exemplified by interest in the false-belief task, a variant on Piaget's "three mountains" test of egocentrism.


The false-belief task is new to psychology, but the basic idea is as old as the Hebrew Bible. Genesis 27 tells the story of Jacob and Esau. Rebekah bore Isaac twin sons: Esau, who was hairy, was delivered before Jacob, who was smooth-skinned; therefore Esau was, technically, the elder son. However, Jacob had already cheated Esau out of his birthright (Genesis 25). As Isaac approached death (at this point he was 123 years old, and blind), he decided to bestow a blessing on his firstborn. However, Rebekah had heard a prophecy that Jacob would lead his people, and "the older shall bow down to the younger". Isaac sent Esau out to hunt game for a meal. Meanwhile, Rebekah prepared a meal and instructed Jacob to present it to Isaac as if he were Esau. When Jacob protested that Isaac would not be fooled, because he had smooth skin, Rebekah covered his hands and neck with goatskin. She knew that Isaac would be fooled into thinking that Jacob was really Esau. The ploy worked, Esau was cheated again, and -- to make a long story short -- the children of Jacob went on to become the Twelve Tribes of Israel.

A somewhat more recent example comes from the great Act II finale of Mozart's opera The Marriage of Figaro (1786). Figaro is valet to Count Almaviva; he is about to marry Susanna, personal maid to the Countess. They learn that the Count intends to exercise his droit du seigneur on their wedding night. They plot with the Countess to fool the Count into thinking that he is going to have an assignation with Susanna, when instead he will encounter Cherubino, a page who is in love with the Countess. Figaro also sends the Count an anonymous letter, informing him that the Countess is having an assignation with an unknown man. While Susanna and Cherubino are exchanging clothes in the Countess's rooms, the Count appears unexpectedly. They hide Cherubino in a locked closet. The Count leaves to find tools to break down the closet door, taking the Countess with him. Meanwhile, Cherubino escapes through a window and Susanna takes his place. When the Count and Countess return, the Countess tries to explain why he's about to find Cherubino hiding in her closet. But when the Count opens the door, he finds Susanna instead. Susanna and the Countess also tell the Count that Figaro wrote the anonymous letter. Chagrined, the Count decides to forgive everyone. At this point, Figaro reappears. The Count, knowing everything, questions Figaro -- who, despite increasingly desperate hints from the Countess and Susanna, denies everything. And on it goes, for 20 minutes of duets, trios, quartets, quintets, and even a septet: all of it based on the idea that someone knows what someone else doesn't know.

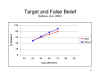

A large number of studies (178, actually) reviewed by Wellman et al. (2001) show clearly that children aged 3 or younger typically fail the false-belief task, while those aged 5 and older typically pass. The older children apparently possess an ability the younger ones lack -- the ability to infer the contents of someone else's mind.


Interestingly, young children apparently have the same problem reading their own minds. In the representational change task, a variant on the false-belief task, children are shown that a crayon box actually contains candies. When asked what they thought the box contained before they looked inside, children younger than 3 tend to say that they thought it contained candies; children older than 5 say they thought it contained crayons. Age trends for performance on the representational change task precisely parallel those obtained for the standard false-belief task.


It's possible to improve younger children's performance on the false-belief test by various manipulations, but in general, 3-year-olds seem to fail, while 5-year-olds pass.

Egocentrism and the "theory of mind" suggest that, from the point of view of social cognition, the endpoint of development is the emergence of intersubjectivity.

Like William James' story of the turtles, it's intentionality all the way down.

Intersubjectivity in Literature

We've already seen one literary example of intersubjectivity: the story of Jacob and Esau, which revolves around Rebekah's (and Jacob's) belief about what Isaac will believe. Rebekah tells Jacob what Isaac will believe. But there are lots more, once you know to look for them.

Earlier, when Odysseus had demanded that he receive proper treatment as Polyphemus' guest (hospitality is a major theme in the Odyssey), Polyphemus had promised that he would eat Odysseus last. When Polyphemus, in turn, demanded the name of his "guest", Odysseus replied "Noman" (actually, of course, the Greek equivalent). Polyphemus then promised that "I will eat Noman last!". Big joke. But after Odysseus and his men had blinded him, Polyphemus called for assistance. When his fellow Cyclopes asked who was harming him, he cried "Noman is harming me". So nobody came to help. Odysseus knew what the other Cyclopes would infer from Polyphemus' response.

But then, when you think about it, there are actually two more levels. In order for the play to work,

According to Zunshine (2007), this complex, recursive intentionality was essentially Austen's invention. Certainly it only becomes a major feature of English literature with her work. In earlier English novels, such as Henry Fielding's Tom Jones (1749), there is much less portrayal of the characters' mental states. The Economist, in an unsigned article (07/15/2017) commemorating the 200th anniversary of Austen's death, wrote that:

Louis Menand, a literature scholar at Harvard, reviewing a number of books about Austen in the New Yorker ("For Love or Money", 10/05/2020), notes that:

Going from the sublime to the less-sublime, consider this famous episode of the TV sitcom, Friends:

Actually, I'm one of the few people in the universe who's never watched Friends. I only learned about this from an article about Zunshine: "Next Big Thing in English: Knowing They Know That You Know" by Patricia Cohen (New York Times, 04/01/2010), from which some of this material is drawn  Somewhere

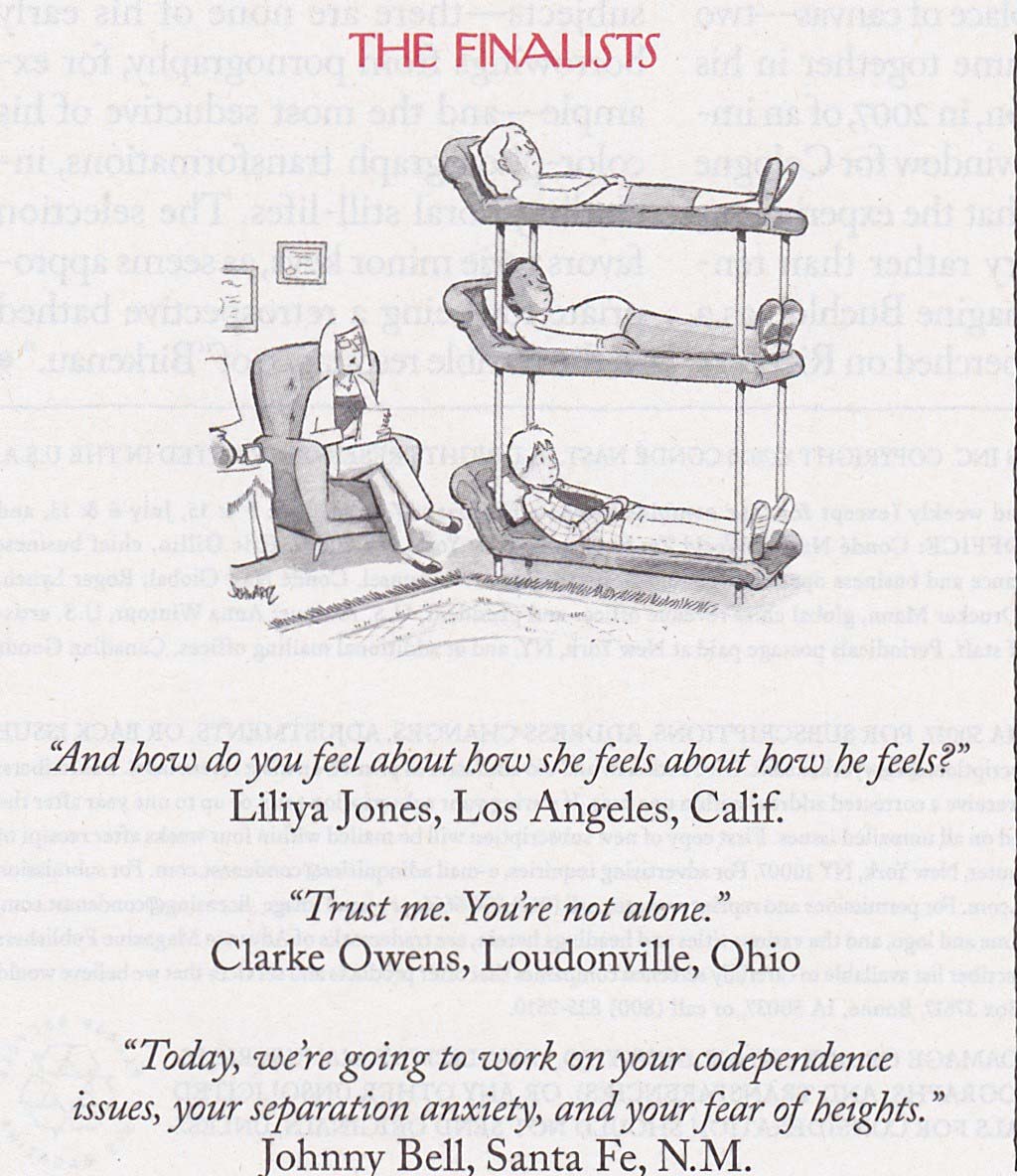

between the sublime and the ridiculous, here's the

same idea, depicted in an entry into the ongoing

Cartoon Caption Contest run by the New Yorker

magazine. The contest cartoon in the March 2,

2020 issue

depicted a psychotherapist talking with three

patients (or clients, if you prefer) arrayed out

on bunkbeds. On March 16, the

magazine published the three finalists for the

cartoon, one of which has the therapist asking, "And

how do you feel about how she feels about how you

feel?". As announced in the March 30 issue, that

caption won the contest. See what can happen if

you take a course on social cognition? Somewhere

between the sublime and the ridiculous, here's the

same idea, depicted in an entry into the ongoing

Cartoon Caption Contest run by the New Yorker

magazine. The contest cartoon in the March 2,

2020 issue

depicted a psychotherapist talking with three

patients (or clients, if you prefer) arrayed out

on bunkbeds. On March 16, the

magazine published the three finalists for the

cartoon, one of which has the therapist asking, "And

how do you feel about how she feels about how you

feel?". As announced in the March 30 issue, that

caption won the contest. See what can happen if

you take a course on social cognition?Back to Zunshine: She infers from psychological research that people can keep track of three levels of intentionality (X knows what Y knows about Z).

Zunshine (2011) has discussed the varying levels of intentionality in her concept of mental embedment -- by which she means the extent to which a narrative contains references to mental states. To take her examples:

Zunshine refers to the pattern of embedment as sociocognitive complexity, and argues that the "baseline" level of complexity for fiction is the third level, "a mind within a mind within a mind" -- the mind of the Reader, the mind of the Narrator, and the mind of the Character. Moving from the sublime to the ridiculous, an interesting example of higher-order intersubjectivity in a dyad is found in the "battle of wits" scene from Rob Reiner's film, The Princess Bride (1987). For more on Zunshine's work, see her scholarly books:

Zunshine is not the only literature scholar to make use of psychology and cognitive science. There is, of course, a long history of humanists drawing on Freudian psychoanalysis to interpret literature -- essentially, viewing characters and plots as Freudian case studies. An excellent example is The Anxiety of Influence by Harold Bloom, a professor of English at Yale, who argued that the relationships between poets can be understood as the working out of the Oedipus complex. This form of literary scholarship is still popular. However, while psychoanalysis might be useful for understanding literary (and other artistic) works that were themselves influenced by psychoanalytic thinking, it seems like a bad idea to interpret literature in general on the basis of a psychological theory that has been wholly discredited by modern science. One of the earliest proponents of using psychoanalytic theory in literary criticism was UCB's Frederick Crews; later, he saw the light, and became a vigorous critic of psychoanalysis, both as a vehicle for literary criticism and as a form of psychotherapy. See, for example, Out of My System (1975); Skeptical Engagements (1986); and The Memory Wars: Freud's Legacy in Dispute (1995). Crews also published two satirical books, in which he illustrated various modes of literary scholarship as they might be applied to the "Winnie-the-Pooh" books: The Pooh Perplex (1963) and Postmodern Pooh (2001). Both make wonderful reading for anyone who wants to understand literary criticism better -- provided, of course, that you've read A.A. Milne's Winnie-the-Pooh first!

And then there is literary Darwinism, which recapitulates the mistakes of the Freudians by reading fiction, poetry, and drama through the lens of Darwin's theory of evolution by natural selection. The only difference is that Darwin's theory is valid and Freud's is not. But do we really need Darwin to understand that Pride and Prejudice is about the importance of making a good match? Nah. For a critical account of literary Darwinism, see "Adaptation", a review of several books in this genre by William Deresiewicz, a literary critic, in The Nation (06/08/2009).

Interestingly, you can have higher levels of intersubjectivity without involving more than two people, because one person can think about what a second person thinks about what the first person thinks. And here, too, it's "turtles all the way down".
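The recursion can be made concrete with a small Python sketch. The names Anne and Bob, the sample proposition, and the convention of counting one level per "thinks that" clause are illustrative assumptions, not drawn from the studies discussed here (which count levels of intentionality and intersubjectivity in their own ways). The point is simply that each added clause deepens the embedding, even though only two people ever appear.

```python
from __future__ import annotations


def embed_mental_states(agents: list[str], content: str) -> str:
    """Wrap `content` in one "X thinks that ..." clause per agent.

    agents[0] is the outermost thinker; the last agent's clause sits
    closest to the propositional content. (Hypothetical helper.)
    """
    sentence = content
    # Build the sentence from the inside out, so walk the agents in reverse.
    for agent in reversed(agents):
        sentence = f"{agent} thinks that {sentence}"
    return sentence


def describe(agents: list[str]) -> tuple[int, int]:
    """Return (number of embedded clauses, number of distinct people).

    Counting one level per clause is an assumed convention for illustration.
    """
    return len(agents), len(set(agents))


# Two hypothetical people, alternating: every extra clause adds a level.
chain = ["Anne", "Bob", "Anne", "Bob"]
print(embed_mental_states(chain, "the ball is in the basket"))
# -> Anne thinks that Bob thinks that Anne thinks that Bob thinks that the ball is in the basket
print(describe(chain))
# -> (4, 2)
```

Nothing in the function limits the depth of embedding; the limits are on human processing, not on the recursive structure itself.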

The false-belief task, which has served as the "gold standard" for the acquisition of a theory of mind, can itself be expanded indefinitely. For example, Perner and Wimmer (1985) distinguished between first-order and second-order false beliefs.

While 4- and 5-year-old children typically pass the first-order false-belief (FB) task, the majority of children don't pass the second-order FB task until age 9 or 10, unless they are given special memory aids.

Of course, processing multiple levels of intersubjectivity makes great demands on cognitive resources. While in principle intersubjectivity can be infinitely recursive, there are cognitive constraints on what we can process.

For example, Kinderman et al. (1998) tested adult subjects on their understanding of stories that had up to five levels of intersubjectivity. As a control, they also employed stories that had multiple levels of causality, but no intentionality. People could process four levels of intentionality (i.e., three levels of intersubjectivity) pretty well, but just fell apart at the fifth (fourth) level. But they were able to process up to six levels of causality perfectly well. So the problem is not with the number of levels as such, but specifically with levels of intentionality, or intersubjectivity.

Given George Miller's "Magical Number Seven, Plus or Minus Two", we might think that we could handle about seven levels of intentionality, but we can't; intersubjectivity itself requires cognitive resources, and makes the limits on cognitive processing even more severe.

Just as first-order theory of mind is not the be-all and end-all of theory of mind, neither is the false-belief task. Wellman and Liu (2004) have offered a larger catalog of theory-of-mind (ToM) abilities, including:

Testing a group of 3- to 5-year-olds, they found that some of these ToM tests were generally easier than others.

Wellman and Liu (2004) suggested that their test battery constituted a Guttman scale of theory-of-mind abilities. A Guttman scale (named for Louis Guttman, an American statistician) is one in which scale items are arranged in increasing order of difficulty, such that an individual who passes one item can be assumed to pass all earlier items. Guttman scales promote efficiency in ability testing and attitude measurement, because we can begin in the middle of the scale: if the respondent passes that item, it can be assumed that s/he would have passed all earlier items as well. Computer-administered adaptive versions of tests like the SAT and GRE exploit a related, probabilistic version of this logic to reduce the time required to administer the test.
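The efficiency argument for a Guttman scale can be sketched in Python. This is a hypothetical illustration, not Wellman and Liu's scoring procedure. It assumes a perfect (error-free) Guttman scale, and the function names and the 6-item example are invented. Real response data rarely fit a Guttman scale perfectly, and operational adaptive tests generally rely on probabilistic item-response models rather than this deterministic search.

```python
from __future__ import annotations

from typing import Callable, Sequence


def is_guttman_pattern(responses: Sequence[bool]) -> bool:
    """Check one respondent's pass/fail record on items ordered easiest to hardest.

    A perfect Guttman pattern is a run of passes followed by a run of fails:
    nobody should pass a harder item after failing an easier one.
    """
    failed_already = False
    for passed in responses:
        if passed and failed_already:
            return False
        if not passed:
            failed_already = True
    return True


def adaptive_score(n_items: int, passes: Callable[[int], bool]) -> tuple[int, int]:
    """Find a respondent's scale score by starting in the middle of the scale.

    `passes(i)` administers item i (0 = easiest). Assuming the items form a
    perfect Guttman scale, the score (number of items passed) is located by
    binary search. Returns (score, number_of_items_administered).
    """
    low, high = 0, n_items  # the true score lies somewhere in [low, high]
    administered = 0
    while low < high:
        middle = (low + high) // 2
        administered += 1
        if passes(middle):
            low = middle + 1  # passing item `middle` implies passing every easier item
        else:
            high = middle  # failing item `middle` implies failing every harder item
    return low, administered


# Hypothetical child who passes the 4 easiest of 6 ordered items.
print(is_guttman_pattern([True, True, True, True, False, False]))  # True
print(adaptive_score(6, lambda i: i < 4))  # (4, 3)
```

Here the child's score is pinned down after administering 3 of the 6 items. The saving grows with the length of the scale, because each item administered halves the range of possible scores.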

So it seems that 4-year-olds have a theory of mind, as assessed by the FB test, but 3-year-olds don't. But then again, the FB test is very verbal in nature, and it might be that younger children would pass the test if it were administered in nonverbal form.

After all, as Gopnik and Wellman (1992, p. 150) long ago concluded:

The 2-year-old is clearly a mentalist and not a behaviorist. Indeed, it seems unlikely to us that there is ever a time when normal children are behaviorists.... It seems plausible that mentalism is the starting state of psychological knowledge. But such primary mentalism, whenever it first appears, does not include all the sorts of mental states that we as adults recognize. More specifically, even at two years psychological knowledge seems to be structured largely in terms of two types of internal states, desires, on the one hand and perceptions, on the other. However, this knowledge excludes any understanding of representation.

Do 2-year-olds really have some concept of desire? A classic experiment suggests that they do.

And, in fact,

the infants looked longer on trials where an actor

behaved in a way that seemed to contradict her (the

actor's) understanding of where a plastic watermelon

slice had been hidden. Apparently, even infants

have some sense of what others believe, that their

beliefs might be different from their own, and that

their beliefs might be incorrect. If infants

expect others to behave in accordance with their

beliefs, and are surprised (and pay extra attention)

when they do not do so, then it can be said that even

infants, long before age 4, have a rudimentary theory

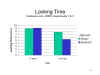

of mind.

Actually, it's not always the case that infants look longer at events that violate their expectations. In some cases, they look for a shorter time at counter-expectational events. Nobody quite knows why. But in either case, a difference in looking times indicates that, from the infant's point of view, something has gone wrong. And that's all the logic of the experiment requires.

The Theory of Mind as an Actual Theory

OK, so little kids are mentalists, not behaviorists. But does their performance on these experimental tasks really mean that they have a theory of the mind? Maybe they just have a concept, or an idea, about the mind and mental life -- a concept that matches adult folk psychology. Gopnik and Wellman (1992) argue that the "theory" part of the theory of mind should be taken seriously.

OK, but do kids actually do this? Again, Gopnik and Wellman (1992) argue that they do.

In these and other ways, the child's theory of mind is not just an idea about the mind, but a real theory about the nature of mental life.

An adult fMRI study suggests that theory-of-mind processing may be performed by a particular area in the brain. In this study, Saxe and Kanwisher (2003) had adult subjects read stories in which they had to reason about true or false beliefs, mechanical processes, or human actions (without beliefs).

These investigators found differential activation at the junction of the temporal and parietal lobes (also known as the temporo-parietal junction, or TPJ), which they tentatively named the temporo-parietal junction mind area. They also identified another area, in extra-striate cortex, which is activated when people think about body parts: they named this the extra-striate body area.

Simon Baron-Cohen (a developmental psychologist and, yes, cousin of the comedian Sacha) and others have argued that there are at least four elements to the theory of mind:

Baron-Cohen calls the theory of mind a capacity for mindreading. He further assumes that each aspect of mindreading is mediated by a separate cognitive module, and -- by extension -- a separate brain system.

Studies of biologically normal children summarized by Baron-Cohen (1995) indicate that most infants possess both ID and EDD by 9 months of age; they acquire SAM sometime between 9 and 18 months; and they acquire the ToMM sometime in their 4th year of age (as demonstrated by performance on the standard false-belief test).


For example, evidence of a shared-attention mechanism comes from infants' protodeclarative pointing gesture: an infant will point at something in space, and then check the other person's gaze to make sure that she is looking there.

Scaife and Bruner (1975) argued that the mother will orient to the infant's gaze, and, within limits, the baby will orient to the mother's gaze. Based on Piagetian developmental theory, they argued that there were limits on egocentricity even before the child reached the stage of "concrete operations". Shared attention in infants shows that, even before "concrete operations", they appreciate that someone might be looking at something that they themselves cannot see.

Formal evidence for a shared-attention mechanism comes from a classic study by Butterworth & Cochran (1980), which found that infants and mothers would coordinate their gazes.

The principal finding was that the babies would almost always shift their gaze when the mother looked at a point in the babies' field of vision, but not the mothers'; and also very frequently when the mother looked at a point that was already in their joint visual field. But they rarely shifted their gaze when their mothers looked at a point that was in the mothers' field of vision, but not the babies'.

However, Baron-Cohen's evidence for an intentionality detector in infants is somewhat indirect and anecdotal.

In a formal experiment, Kuhlmeier, Wynn, and Bloom (2003) produced better evidence for an infant ID, using a variant on the animation techniques introduced by Michotte (1946) and Heider & Simmel (1944). In this experiment, 5- and 12-month-old infants first habituated to films depicting a triangle "helping", and a square "hindering", a ball's progress up a hill. In the test film, the infants were presented with a film depicting either the ball approaching the square (a plot departure) or, alternatively, the ball approaching the triangle (a plot continuation).


The 12-month-olds discriminated between the two test films, while the 5-month-olds did not. But note the nature of the discrimination. In this case, the 12-month-olds looked longer at the plot continuation than they did at the plot departure. This isn't exactly what we'd expect, given the idea, discussed above, that infants pay more attention to, and thus look longer at, counter-expectational events. You were warned that this doesn't always happen, and in fact it's possible that the relationship between expectancy and looking time is U-shaped. That is, infants will look at counter-expectational events for either a relatively long, or a relatively short, time. But the important result in the study was that the 12-month-olds did, in fact, discriminate between the two test films -- which suggests that they had some idea about the intentionality behind the two scenarios.


Subsequent studies in the Wynn-Bloom laboratory, using variants on this methodology, have pushed back the age at which infants show evidence of an intentionality detector. In the Kuhlmeier et al. (2003) study, the 5-month-old infants showed no difference in looking times between the "plot departure" and "plot continuation" videos. But in an experiment by Hamlin, Wynn, and Bloom (2007), 5-month-olds reached preferentially for puppets representing the "helping" rather than the "hindering" characters, and in a later study (Hamlin, Wynn, & Bloom, 2010), 3-month-olds spent more time looking at the "helper" than at the "hinderer". So, even the 3-month-olds seemed to have the concept of desire to help and desire to hinder -- just the sort of thing that would be a product of an intentionality detector.

Baron-Cohen has also suggested that childhood autism is a case of mindblindness. He interprets the severe deficits in social behavior, communication, and imagination as specific impairments in social cognition, reflecting the child's lack of a theory of mind.

Childhood autism was first described by Leo Kanner in 1943, and the case studies that he presented certainly seem to depict a child who does not understand (or, at least, care) that other people are sentient beings with thoughts, feelings, and desires (or, at least, thoughts, feelings, and desires that are different from his or her own).

Kanner illustrated his observations with a number of case studies of autistic children.

At about the same time, but independently, Hans Asperger, an Austrian pediatrician, made very similar observations, illustrated with case studies, of a condition he labeled "autistic psychopathy". The two descriptions, Kanner's and Asperger's, were remarkably similar. Put bluntly, Asperger's syndrome is autism with language. And for that reason, it is typically less severe and more manageable.

In the 4th edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-IV), published in 1994, autistic disorder was classified as a pervasive developmental disorder, along with Asperger's syndrome (first described in 1944) and a few other sub-types. It was described as a disorder, emerging relatively early in development, affecting social functions, communication functions, and imagination.

DSM-IV distinguished between classic autistic disorder (sometimes called "Kanner syndrome") and "Asperger's syndrome".

The fifth edition of DSM (DSM-5), released in 2013, combines classic autism and Asperger's syndrome under a single category of autism spectrum disorder, with the implication that Asperger's syndrome is just a milder form of autism. But if, indeed, Asperger's syndrome is autism with language, it's not quite right to put the two syndromes on a simple continuum of severity. There may be qualitative differences between them.

If so, perhaps these qualitative differences will be recognized in DSM-6!

Advocacy groups for Asperger's syndrome have complained that such a move would stigmatize individuals who are just "different" from "neurotypicals" and who shouldn't be treated as if they were mentally ill at all. For a vigorous critique of DSM-5's treatment of autism and Asperger's syndrome, see The Autistic Brain: Thinking Across the Spectrum by Temple Grandin and Richard Panek (2013).

In these lectures, I am sticking with the DSM-IV classification.

One of the features of the modern novel is the view it gives of its characters' interior lives. But lately, several novelists have tried to write novels in which the central character is autistic -- someone who, given the clinical definition of autism, doesn't have much interior life. Eli Gottlieb, who has written two novels inspired by his autistic brother -- one in the third person, the other in the first person -- reflected on this problem in an essay entitled "Giving a Voice to Autism" (Wall Street Journal, 01/05-06/2013). Gottlieb notes that the "Ur-text" for the "developmentally disabled" perspective in fiction is William Faulkner's The Sound and the Fury (1929), which is written from the perspective of Benjy, a developmentally disabled adult -- not autistic, but perhaps mentally retarded or just plain brain-damaged.

...Faulkner produces his effects by shattering the normally smooth perceptual continuum into discrete chunks: "Through the fence, between the curling flower spaces, I could see them hitting. They were coming towards where the flag was and I went along the fence. Luster was hunting in the grass by the flower tree. They took the flag out and they were hitting." The repetitive staccato sentences highlight Benjy's limited mental means, while the tone skates close to that literary cousin of the dysfunctional adult narrator: the child-voice. Books told from children's points of view often employ the same limited vocabulary, magical thinking, and emotional foreshortening as those of the developmentally disabled.

In The Curious Incident of the Dog in the Night-Time, author Mark Haddon borrows from both camps and ventriloquizes the voice of a 15-year-old autistic [sic]: "Siobhan has long blond hair and wears glasses which are made of green plastic. And Mr. Jeavons smells of soap and wears brown shoes that have approximately 60 tiny circular holes in each of them."

In my own case, I'd originally cast the new book in the third person, but I found that I needed the ground-hugging intimacy available only with first-person narration. This, however, required a crucial adjustment away from the "literary" prose which is my default mode. A third-person sentence like this: "Sometimes his handlers would escort him to church, where the soaring, darkwood vault transfixed him, and the rich voice of the preacher as he spoke of hellfire and damnation moved the hair on the back of his neck," would end up transposed to this: "Sometimes she takes me to her mega-church where the Lord is so condensed that people faint and shout out loud at how much of the Lord there is. The preacher has a rich yelling voice and when the chorus sings it's like the bang of thunder that comes mixed with lightning."

Maybe physicists are right, after all, that the best thinking happens in childhood. My challenge in the new novel is to recover that buried perceptual-cognitive mix and haul it into the fictional light of day.

Despite the fact that Kanner and Asperger were seeing autistic children in the 1940s, and Rimland wrote a classic text on the syndrome in the 1960s, the literature on autism focuses almost exclusively on children, and we know relatively little about what happens when autistic children grow up. Autism promises to be a serious problem for mental health policy, as some provisions are going to have to be made to take care of these individuals after their parents (and other family members) are no longer able to do so. The seriousness of the problem can be seen in the simple fact that diagnoses on the autism spectrum have increased exponentially over the past couple of decades.

The problem, and the possibilities of a good transition to adulthood in autism, were highlighted in a series of articles which appeared in the New York Times in 2011:

Interestingly, some autistic patients achieve what might be called an "optimal outcome", in that they become able to manage their social relations and navigate the social world (Fein et al., 2013). These adults generally had a milder form of autism as children.

The most prominent example of a person with autism who has made a successful transition to adulthood is Temple Grandin, a professor of animal science at Colorado State University, who was the subject of a made-for-TV movie starring Claire Danes (of My So-Called Life and Homeland fame). See especially Grandin's recent book, The Autistic Brain: Thinking Across the Spectrum (2013).

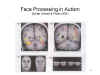

A study by Schultz, Gerlotti, & Probar (2001) found that autistic patients showed less activation of the "fusiform face area" when asked to discriminate between pairs of faces, compared to normal subjects. Of course, as we now know, the fusiform area is also recruited by tasks that involve perceptual recognition at subordinate levels of categorization, regardless of whether the stimuli are faces.


More to the point, autistic children appear to perform more poorly than normal children on the standard false-beliefs task.

A study by Peterson and Siegel (1999) showed that autistic children aged 9-10 years performed more poorly on the false-belief task than normal children aged 4-5 years. A formal meta-analysis by Happe (1995) showed that, compared to normal children, "high functioning" autistic children are delayed by about 5 years in passing the standard false-belief test. The others may never pass it at all.


However, the lack of a ToMM may be a result, not a cause, of autism. Autistic individuals have limited contact with other people, but so do the deaf children of hearing parents, who acquire sign language late and therefore have had somewhat impoverished interpersonal relationships. These children, too, show problems on the false-belief task, indicating that they, too, have problems with the ToMM.

A later study by Peterson, Wellman, and Liu (2005), employing a larger set of ToM tasks, confirmed these results, but also revealed some surprises. In this study, autistic and deaf children aged 5-14 years were compared to typical preschoolers aged 4-6 years. The deaf children included both native users of sign language and children who learned to sign relatively late in childhood.

Baron-Cohen (1995), summarizing the available literature, concluded that even mentally retarded children possess ToMM. By contrast, one group of autistic children appears to lack the ToMM, while another group of autistic children appears to lack the SAM as well.


This general conclusion has held up, although there are not a few caveats to consider (e.g., Tager-Flusberg, 2007).

As a matter of historical record, it should be noted that, while Baron-Cohen usually (and rightly) gets credit for the mindblindness hypothesis of autism, the general idea was floating in the air before he formulated it in quite this way. B-C did his graduate studies in the Developmental Psychology Unit at University College London, working with a large group of developmental psychologists including Uta Frith and Alan Leslie. Frith, in fact, was one of the first developmental psychologists to take an interest in autism (for an autobiographical account of her research, see Frith, 2012), following publication of Bernard Rimland's classic book on Infantile Autism (1964).

Following a pattern established earlier for the study of schizophrenia, as discussed in the General Psychology lectures on "Psychopathology and Psychotherapy", Frith and her colleagues embarked on a series of studies comparing various aspects of human information processing in autistic and normal children, using paradigms derived from the experimental study of cognition. These studies quickly focused on autistic children's deficits in social skills -- as Frith put it, "how it was that processing information about the physical world could be so good, while processing information about [the] social world could be so poor" (2012, p. 2077). Alan Leslie suggested that the problem in autism was a failure to create "metarepresentations" (representations of representations of physical reality) -- that is, what philosophers call intentional states of believing, feeling, and wanting; or what the psychologist John Morton called "mentalizing". From there it was only a very short step to B-C's idea that autistic children lack a theory of mind -- they lack the understanding that mental states are representations of reality.

Frith, for her part, is convinced that mindblindness is only part of the problem in autism, because -- as Tager-Flusberg (2007) also notes -- autistic children have problems in the nonsocial domain as well. She argues that they also suffer from weak central coherence (WCC): "while the ability to discern a wide variety of things about the world around is strong, the drive to make these various things cohere is weak" (2012, p. 2080). While B-C's mindblindness hypothesis is grounded in the neuroscientific Doctrine of Modularity, as discussed in the lectures on "Social-Cognitive Neuroscience", WCC supposes that the mind is not entirely modular, and that coherence is a property of the mind as a general-purpose information-processing system.

Assuming that autistic children really do lack a theory of mind, there are at least three plausible causal explanations for this (lack of) correlation:

With respect to a pure neurological account, one possibility is that autistic children and adults suffer from a malfunctioning mirror neuron system. Recall that mirror neurons, as the neural basis for social cognition, were discussed in the lectures on "Social-Cognitive Neuroscience". According to a popular theory, mirror-neuron systems in the frontal and parietal cortex, and in the limbic system, allow us to understand, and empathize with, the actions and emotional expressions of other people. If so, then it might be that autistic patients, who apparently cannot understand and empathize with the actions and emotions of others, cannot do so because of a malfunctioning mirror-neuron system.

Just such a hypothesis has been proposed by Ramachandran and Oberman (2006) in their "broken mirrors" theory of autism. R&O accept the view of autism as a form of mindblindness, but argue that this theory, written at the psychological level of analysis, simply restates and summarizes the symptoms; a proper theory, in their view, would have something to say about the biological mechanism that causes mindblindness. They suggest that this biological mechanism can be thought of as a "broken" mirror neuron system.

As evidence for their theory, R&O report a study of "Mu"-wave blocking in autistic and normal children. The Mu wave is observed in the EEG as a regular, high-amplitude wave with a frequency of 8-13 cycles per second. If that sounds like EEG "Alpha" activity to you, that's just what it is -- except that alpha activity is typically recorded in the occipital (visual) cortex, while mu activity is typically recorded in the frontal (motor) cortex. A notable feature of alpha activity is blocking: when the subject is presented with a surprising stimulus, alpha activity disappears (or is greatly suppressed) and is replaced by high-frequency, low-amplitude "Beta" activity. It turns out that a similar blocking phenomenon occurs with mu waves, except that mu waves are blocked by engaging in, or watching, voluntary muscle activity. But not in autistic children. Comparing autistic and control children, Oberman et al. (2006) found no mu-blocking when either group of subjects viewed balls in motion, and both groups showed substantial blocking when they made a voluntary arm movement; by contrast, the autistic children showed relatively little mu-blocking, compared to normals, when watching someone make a hand movement. Oberman et al. attribute this difference in mu-wave blocking to a malfunction in the mirror neuron system.

Further evidence comes from an fMRI study of the frontal MNS when autistic children were asked to imitate, or just observe, facial expressions of emotion. The extent of activation was negatively correlated with severity of symptoms along the autistic spectrum. Put another way, the more severe the child's autism, the less activation was visible in the MNS (Dapretto et al., 2006).

All of this seems quite promising: Mirror neurons support mindreading; autistic children appear to suffer from mindblindness; and autistic children also seem to have problems with the functioning of the mirror-neuron system that is the biological substrate of mindreading. All well and good, until we recall, from the lectures on "Social-Cognitive Neuroscience", that there are problems with the traditional interpretation of mirror neurons. They might not be important for action understanding after all (Hickok, 2011); and if they are not, there goes the whole explanation of autism in terms of mirror neurons. Minor detail...

Even accepting the "mindreading" hypothesis of mirror-neuron function, there are still problems with the hypothesis that autistic individuals suffer from a "broken" mirror-neuron system (Gernsbacher, 2011). Mostly, findings such as those described above have proved difficult to replicate.

According to Gernsbacher's 2011 tally:

The theory of mind, and similar theories, currently dominate discussions of social-cognitive development, but there are other approaches to social-cognitive development that are also fruitful. Among the most interesting of these is the social-cognitive developmental (SCD) approach to the development of aspects of personality, proposed by Olson and Dweck (2008). Instead of looking at the development of social cognition, such as the ability to read other people's minds, the SCD approach examines the role of social and cognitive processes in development generally. That is, SCD traces the role of social and cognitive processes in the development of such features as the individual's gender identity and role, aggressiveness, and achievement motivation.

In a sense, the SCD approach reverses the concerns of the ToM or "theory theory" approach, because it takes social cognition as a given, and looks at its effects on other aspects of social development and behavior. But at the same time, these same findings can be used to illustrate the child's developing theories about himself and the social world.

Darwin's Origin of Species (1859) argued convincingly for a continuity between humans and other (sic), nonhuman, animals. The evolutionary perspective (the "modern synthesis") which defines modern biology was quickly imported into psychology, with assertions of continuity at the level of mind and behavior, not just anatomy and physiology. Interestingly, the stage for this importation was set by Darwin himself, who argued in The Descent of Man (1871) and The Expression of the Emotions in Man and Animals (1872) that there were similarities between humans and other animals in the facial and other bodily expressions of emotions -- a position revived in our time by Ekman with his work on innate "basic emotions". The implication is that social cognition is part of our evolutionary heritage, and that it is shared with at least some nonhuman species. Which raises the question:

What is the difference between the mind of a human and the mind of an animal?

The phylogenetic view of development raises the same question as the ontogenetic view: whether development is continuous or discontinuous. Either way, though, it might be, to paraphrase Kagan, that "animals are a lot smarter than we think" -- and that their intelligence might extend to at least some aspects of the theory of mind.

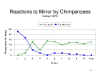

The first was the mirror test of self-awareness developed by Gallup (1970), based on observations originally made by Darwin himself. The initial response of chimpanzees (and other animals) to exposure to a mirror is to engage in other-directed behaviors -- i.e., to treat the reflection as a conspecific. With continued exposure, however, chimps begin to engage in self-directed behaviors. Gallup hypothesized that the chimps came to recognize themselves in the mirror.


In a formal test of self-awareness, Gallup painted red marks on the foreheads of mirror-habituated chimpanzees. The painting was performed while the chimps were anesthetized and the paint was odorless, so the animals could only notice the spot when they looked at themselves in the mirror. The chimps' response was to examine the spots visually (by looking at themselves in the mirror), touch the spots, and visually inspect (and smell) the fingers that had touched the spots. Gallup argued that the chimpanzees recognized a discrepancy between their self-image and their image in the mirror.


Later experiments added methodological niceties, such as a comparison of marked and unmarked facial regions.


Another Povinelli study indicated that mirror self-recognition is relatively rare, even among chimpanzees. It happens, but it's rare.

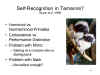

Thus, not all chimpanzees show self-recognition in mirrors. In general, the effect is obtained from chimps who are sexually mature (but not too old), have been raised in groups, and have had prior mirror exposure.

The same mirror self-recognition effect is found in orangutans and human infants, but not usually in other primate species (the status of gorillas, such as the famous Koko, is controversial). However, a comprehensive review by Gallup and Anderson concludes that only human infants and some species of great apes show "clear, consistent, and convincing evidence" for mirror self-recognition ("Self-recognition in animals: Where do we stand 50 years later? Lessons from cleaner wrasse and other species", Psychology of Consciousness, 2019).

Self-Recognition in Solaris

In the great science-fiction film Solaris (Russian-language original scripted and directed in 1972 by Andrei Tarkovsky, based on the novel of the same title by Stanislaw Lem; English-language remake, 2002, by Steven Soderbergh, starring George Clooney), a psychologist, Kris, visits a space station orbiting the planet Solaris that has been the site of mysterious deaths. It turns out that the planet is a living, sentient organism, which has inserted creatures into the station based on images stored in the cosmonauts' (repressed?) memories. As soon as Kris gets to the station, he encounters the spitting image of Hari (she's named Rheya in the book), his late wife, who had committed suicide 10 years before. Searching through his baggage, the woman comes upon a picture of herself, but she does not recognize the image until she views herself, while holding the picture, in a mirror. It is clear that until that moment she had no internal, mental representation of what she looked like. The episode does not appear in Lem's book (at least I can't find it), which was published in 1961, long before Gallup's original article -- though Lem does have some interesting remarks about Rheya's memory: she doesn't have much, and one of her memories is illusory. But Tarkovsky's film appeared in 1972, so he would have had the opportunity to hear about Gallup's findings (which were prominently reported) and incorporate them into the script (losing the material about memory).

If it really turns out that orangutans have self-recognition, but gorillas do not, the implication is that the capacity for self-recognition arose independently at least twice in primate evolution. This is because the evolutionary line that led to modern orangutans, which pass the mirror self-recognition test, split off from the main line of primate evolution before the split that created the line leading to modern gorillas, which do not pass the mirror test.


The matter is complicated, however, because Hauser has obtained evidence for mirror self-recognition in cotton-top tamarins, a species of monkey that is, in evolutionary terms, quite distant from the great apes.

Gallup has argued that chimpanzees, at least, have bidirectional consciousness: they are responsive to events in the external world, and they are also aware of the relationship between these events and themselves. In his view, this last form of awareness is the hallmark of consciousness.

Long before Baron-Cohen, David Premack and Guy Woodruff (1978) had argued that chimpanzees (and perhaps other primates, especially great apes) had a "theory of mind". This paper -- actually written as a commentary on another paper -- introduced the concept of ToM to psychology, philosophy, and cognitive science.

In their study, they reported that at least one chimp, namely the famous Sarah, was able to solve novel problems that entailed attributing mental states to humans. Sarah had been raised since infancy in the company of humans, and had been taught a rudimentary visual "language" in which tokens represented various concepts. After she retired from research, she spent the last 13 years of her life at Chimp Haven, a sanctuary for chimpanzees, and died in 2019 ("The World's Smartest Chimp Has Died", by Lori Gruen, New York Times, 08/10/2019).

The experiments were variants on Kohler's classic demonstrations of insight problem-solving in chimpanzees (that's one of Kohler's apes, Sultan, in the slide on the left), and took advantage of the fact that Sarah loved to watch TV. So, a series of problems was presented to her via 30-second video vignettes. Each showed a human confronting some obstacle and trying to solve the problem. Sarah was then presented with two still pictures, one of which depicted the correct solution to the problem. She was then asked to choose the correct solution and place the corresponding picture near her TV set (as she had already been trained to do for other purposes). Finally, Sarah received verbal feedback as to whether her choice was correct. All the problems involved objects with which she was familiar.

Premack and Woodruff concluded that Sarah did, indeed, have a theory of mind -- which is to say that she was able to connect representations of problems with representations of their solutions. And that, in turn, required Sarah to impute mental states to humans:

Evidence for a theory of mind is evidence for social cognition in nonhuman animals -- extending not only to conspecifics, but to members of other species as well -- a point to which I'll return later.

Mindreading in Nonhuman Species

Elaboration of the theory of mind by developmental psychologists has led to further research on animal awareness by comparative psychologists, using adaptations of the tasks developed for investigating various components of the theory of mind in infants and children. Baron-Cohen's modular theory of mindreading provides an excellent framework for reviewing this research.

For example, developmental studies show that infants consolidate the EDD by about 18 months. Povinelli and Eddy (1996) found that chimpanzees will likewise follow the general direction of the gaze of a human experimenter.


However, another experiment by Povinelli et al. (1999) suggested that chimpanzees lack SAM. That is, they did not interpret the pointing behavior of a human experimenter as referring to objects in the environment.

Research by Josep (sic) Call and Michael Tomasello (1998) was the first to test for a theory of mind using a nonverbal version of the false-belief test with a mixed sample of orangutans and chimpanzees (as opposed to the case study of Sarah), as well as a sample of 4- and 5-year-old children.

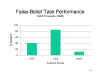

The 5-year-old children, but not the 4-year-olds, performed well on both verbal and nonverbal versions of the test. In contrast, the apes performed even more poorly than the 4-year-old children.


Herrmann et al. (2007), working in Tomasello's laboratory, recently reported the performance of large groups of chimpanzees (M age = 10 years), orangutans (M age = 6 years), and children (M age = 2.5 years) on a Primate Cognition Test Battery, which included a number of tests of social as well as physical skills -- including two tests of the theory of mind. Compared to the toddlers, the apes did quite well on physical skills involving space, quantity, and causality. But the apes fell down completely on tests of social learning, and also did poorly on the tests of ToM.

To summarize, species comparisons suggest that monkeys and even great apes are sorely lacking in mindreading abilities, even by the standards of 3-year-old human children.

David Povinelli, a leading researcher in the area of primate cognition, has concluded that nonhuman primates appear to lack a theory of mind.

Again, we have to be careful, because this area of research is rife with potential confounds.

Frans de Waal, a leading primatologist who has written a great deal about the social behavior of chimpanzees and other primates (he pretty much discovered the bonobo, or pygmy chimpanzee, which makes love not war), has been particularly critical of these experiments.

This conclusion may seem to violate the Darwinian doctrine of psychological continuity, but there have to be some discontinuities, somewhere, unless you want to start looking for evidence of ToM in cockroaches. In commenting on this situation, Povinelli has turned Darwin on his head, reminding us that, despite the continuities uniting species, the whole point of evolution is to add new traits that aren't possessed even by close relatives. For humans, language appears to be such a trait. Perhaps consciousness, in the form of ToM, is another.

Still, the line of research on mirror self-recognition initiated by Gallup seems to indicate that chimpanzees, at least, have a rudimentary sense of self. And that, as we shall see, reveals a rudimentary consciousness.

In addition to the phylogenetic and ontogenetic views familiar to psychology, there is another view of development that can be found in other social sciences, such as economics and political science. This is a cultural view of development, which holds that societies and cultures develop much as species evolve and individuals grow.

Which raises the question:

The Stages of Socio-Cultural Development

The origins of this cultural view of development lie in the political economy of Karl Marx, who argued that all societies went through four stages of economic development:

Marx further proposed that certain habits of mind, or modes of cognition, were associated with each stage of development. So, for example, it required a degree of consciousness raising to get peasants and workers to appreciate that they were oppressed by lords and management. Note that Marx didn't see any need for further consciousness raising for people to appreciate their oppression by communism.

In 1960, the American economic historian W.W. Rostow offered a non-Marxist alternative conception of economic growth, which he called the stages of growth:

Along the same lines, in 1965 A.F.K. Organski, a comparative political scientist, proposed stages of political development:

Stage theories of political and economic development are about as popular in social science as stage theories of cognitive or socio-emotional development have been in psychology!

Note, however, the implications of the term development, which suggests that some societies are more "developed" -- and hence, in some sense, better -- than others. This is the source of the familiar distinction between developed or advanced nations and undeveloped or underdeveloped ones. The implication is somewhat unsavory, just as is the suggestion, based on a misreading of evolutionary theory, that some species (e.g., "lower animals") are "less developed" than others (i.e., humans). For this reason, contemporary political and social thinkers often prefer to talk of social or cultural diversity rather than social or cultural development, thereby embracing the notion that all social and cultural arrangements are equally good.

It is this "diversity" view of culture that prevails today -- which is why psychologists can compare "Eastern" and "Western" views of self and social cognition without any implication that one view is more developed than another. They're simply different -- cultural choices.

Early cross-cultural studies of mind were somewhat informal, in that they were not based on psychological theories of mental life, nor did they involve systematic inquiry about the theory of mind. Still, the observations are interesting (for details, see Lillard, 1997, 1998).

Picking up on Miller's theme, Angeline Lillard (1997, 1998, 1999) has noted that there are two broad ways to explain behavior of all sorts:

Recall Rogoff's caution against thinking that things that are commonplace in Western, developed, modern European-American culture are "natural" or "normal". The theory of mind is one such thing, and Lillard concludes, based on her survey of cross-cultural data, that the theory of mind might not be innate after all. She suggests that mind may be a concept in Western "folk psychology" that has no counterpart in other psychologies. While European-Americans may prefer to explain behavior in terms of mental states, the psychology of what she calls "Other Folks" may be quite different (in fact, some other folks might not have a psychology at all!). There may well be profound cultural differences in the concept of mind, and in such details as the difference between thinking and feeling. If so, the concept of "mind" may be a learned cultural product, acquired through socialization and maintained by cultural transmission -- not something that is pre-adapted, modular, and embedded in the architecture of the brain.

Perhaps, but even Lillard concedes that some aspects of folk psychology may reflect the operation of something resembling Chomsky's Universal Grammar (UG). That is, we may possess an innate cognitive module for explaining behavior -- a cognitive module that is, in turn, embedded in evolved neuroanatomy. She notes that no culture is absolutely behavioristic, abjuring any reference to mental states. And all languages have words that refer to mental states, even if these words are not commonly used. By analogy with Chomsky's UG, she suggests that cultures vary in their parametric settings just as languages do, so that behavioral explanation varies from culture to culture in its constraints and preferences -- variance which comes through in adults' conceptualizations of the causes of behavior. What's universal in this UG, she speculates, is false belief. That is, we may be innately prewired to take notice when behavior does not accord with the way the world really is -- as in the standard false belief task or its infant cognate.

Explaining this discrepancy may take various culturally defined forms. In modern, Western, European-American culture, we explain this discrepancy in terms of how reality appears to the actor. In other cultures, the same discrepancy might be explained in terms of witchcraft, or the alignment of the stars. So mentalistic explanations of behavior may not be innate. They, too, may be cultural achievements.

With respect to self-awareness, archaeological data suggest that it is very old indeed. Excavation of some of the earliest cave-dwellings has yielded evidence of beads and other adornments. These adornments clearly indicate that early human ancestors knew what they looked like -- and cared about it.

It is even possible that intersubjectivity -- the belief that others have minds that might differ from one's own, that the contents of others' minds might be interesting, and that we could infer these contents from their behavior -- is a cultural invention, like the wheel or written language.

Setting aside this admittedly controversial reading of ancient (and modern) literary texts, proposals for something like a UG for behavioral explanation imply that there should be cross-cultural similarity in the way that young children explain behavioral discrepancies. The development of a theory of mind (or whatever the culture prefers) would then come later, as a result of socialization and acculturation. The result would be that, among adults, there is some cultural variance, or "choice", in explanations of behavior:

This page last revised 10/19/2020.