Psychological Development

So far in this course, we have focused our attention on mental processes in mature, adult humans. We have taken mind and personality as givens, and asked two basic questions:

- How does the mind work?

- How does the mind mediate the individual's interactions with the social environment?

Now we take up a new question: Where do mind and personality come from?

Views of Development

In psychology, there are three broad approaches to the question of the development of mind:

The ontogenetic point of view is concerned with the development of mind in individual species members, particularly humans. This is developmental psychology as it is usually construed. Reflecting the idea that mental development, like physical development, ends with puberty, developmental psychologists have mostly focused on infancy and childhood. More recently, the field has acquired an additional focus on development across the entire "life span", from birth to death, resulting in new specialties in adolescence, middle age, and especially old age.


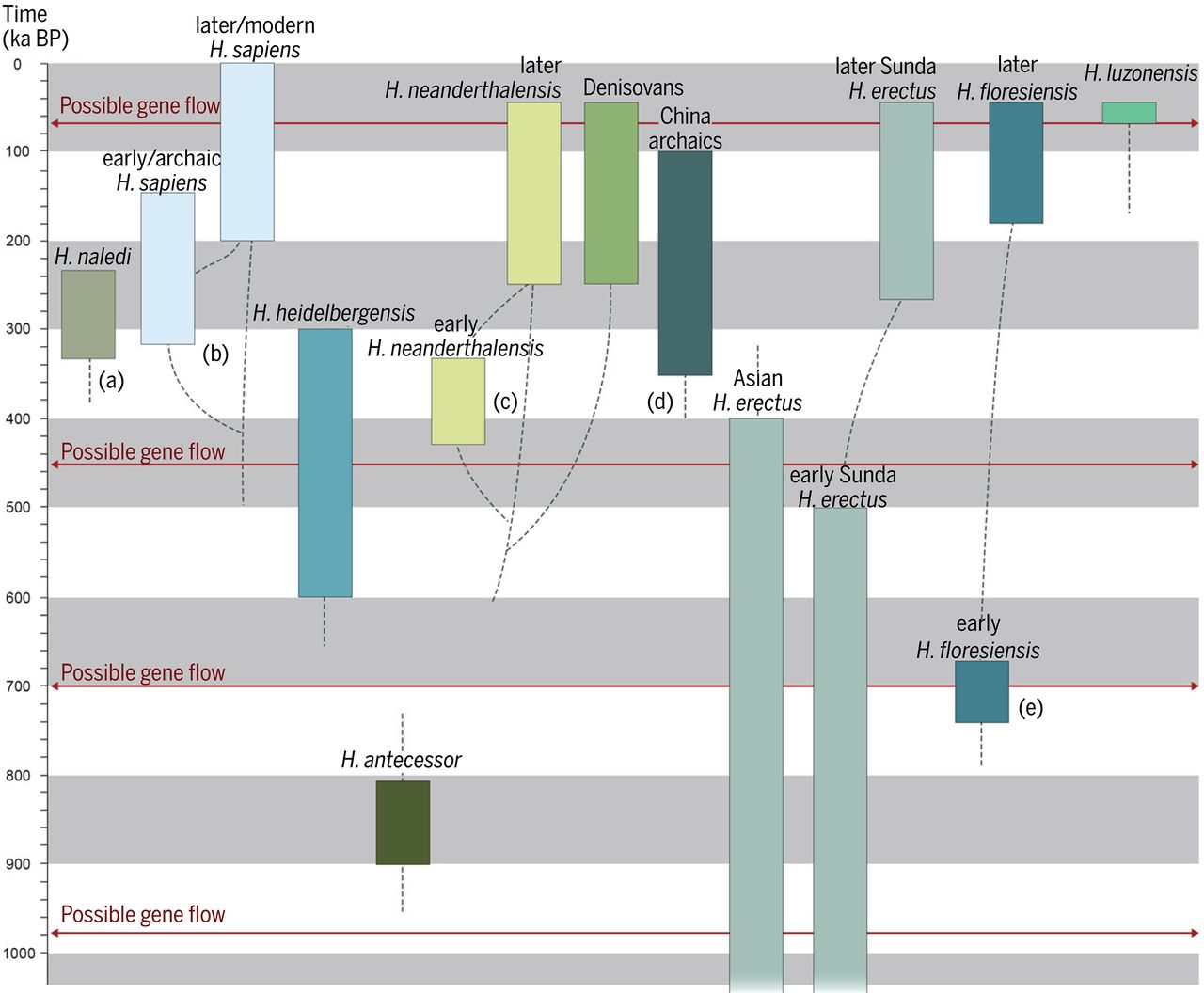

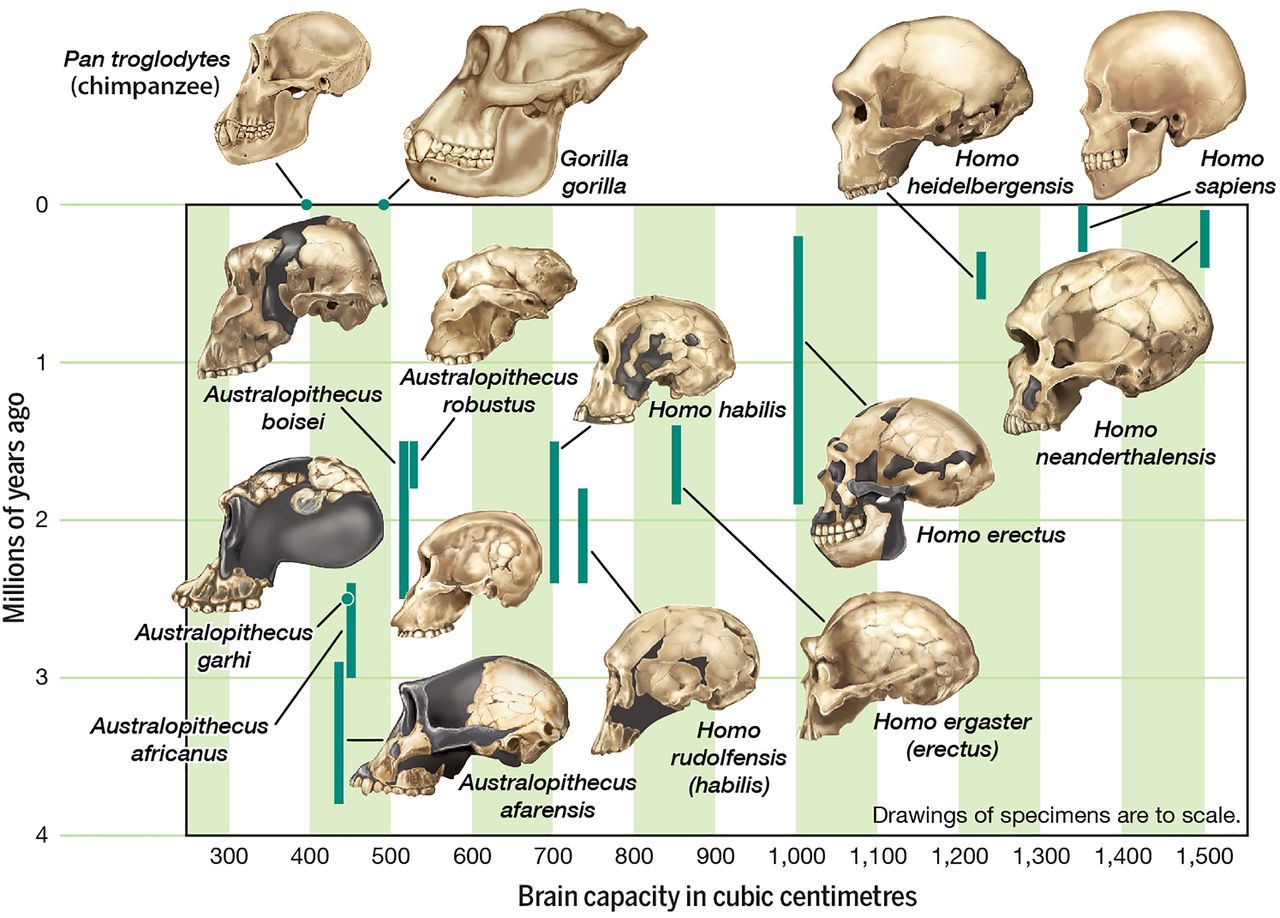

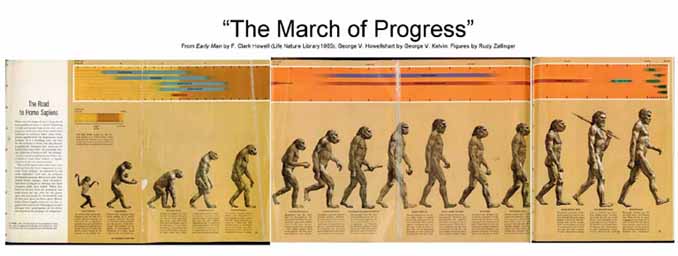

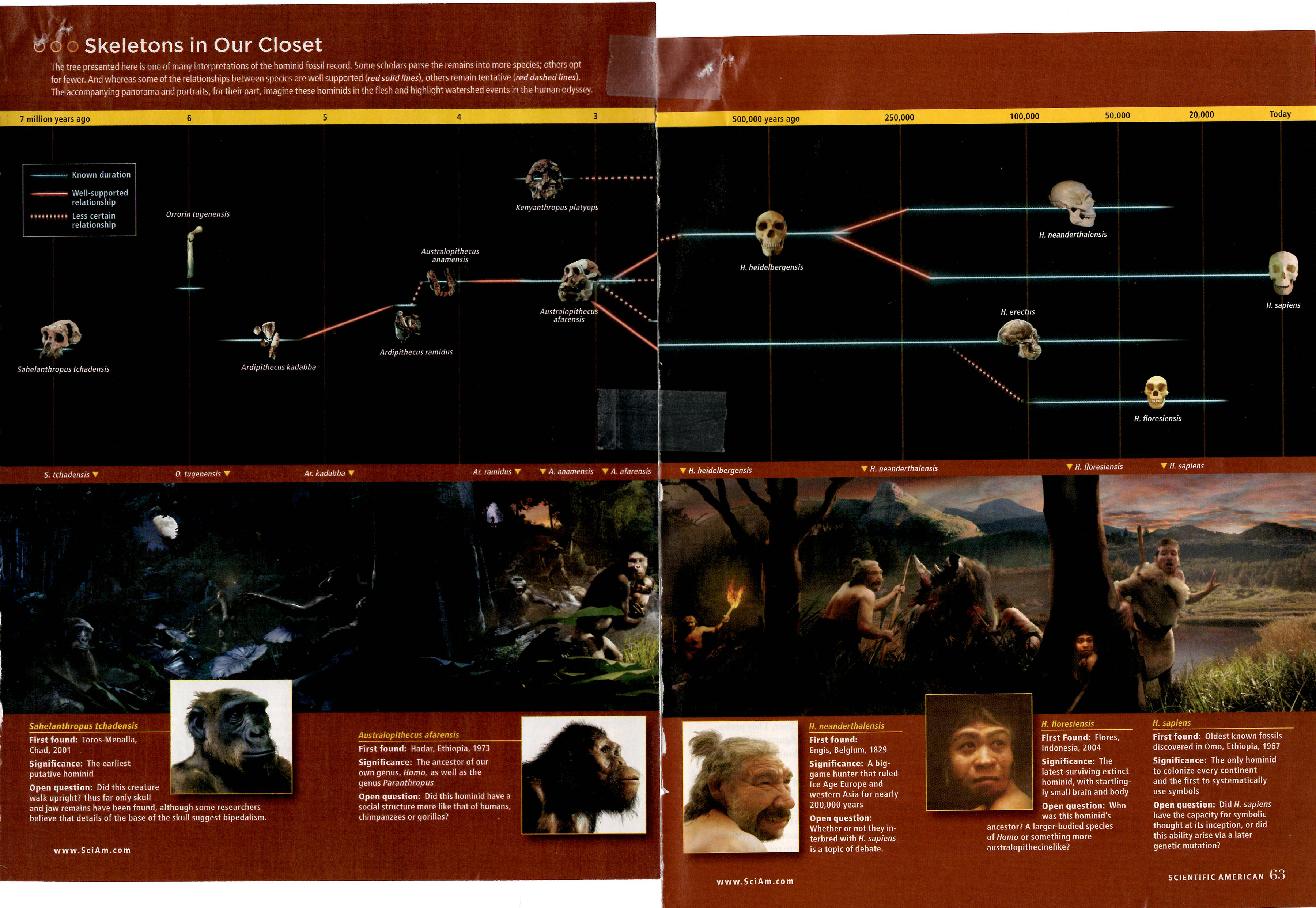

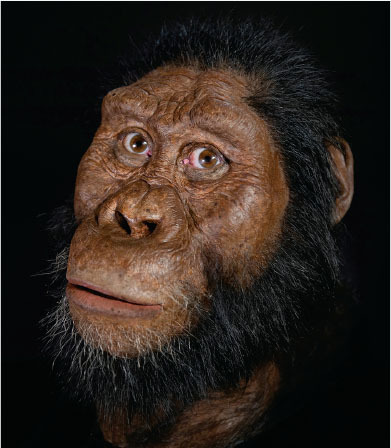

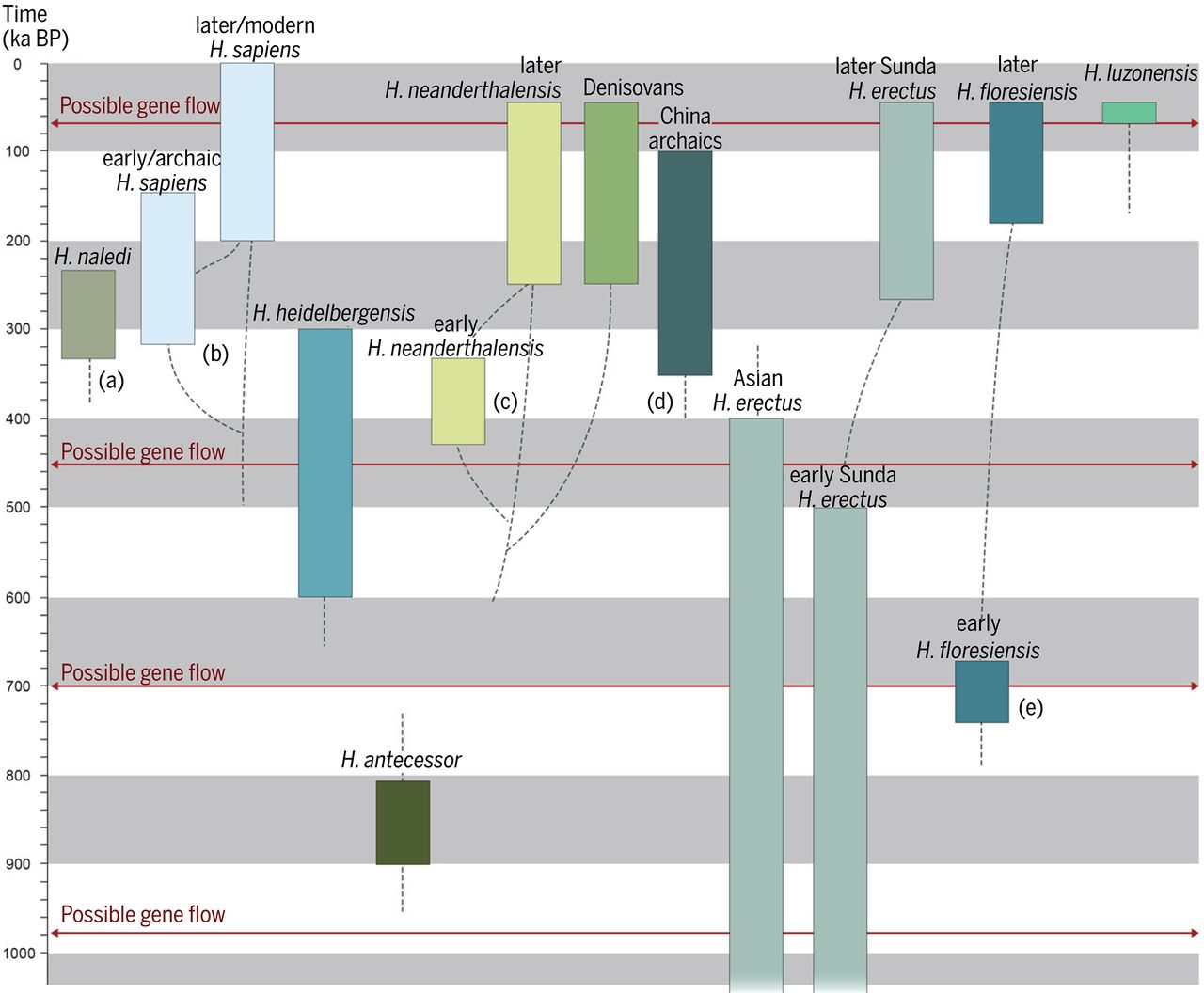

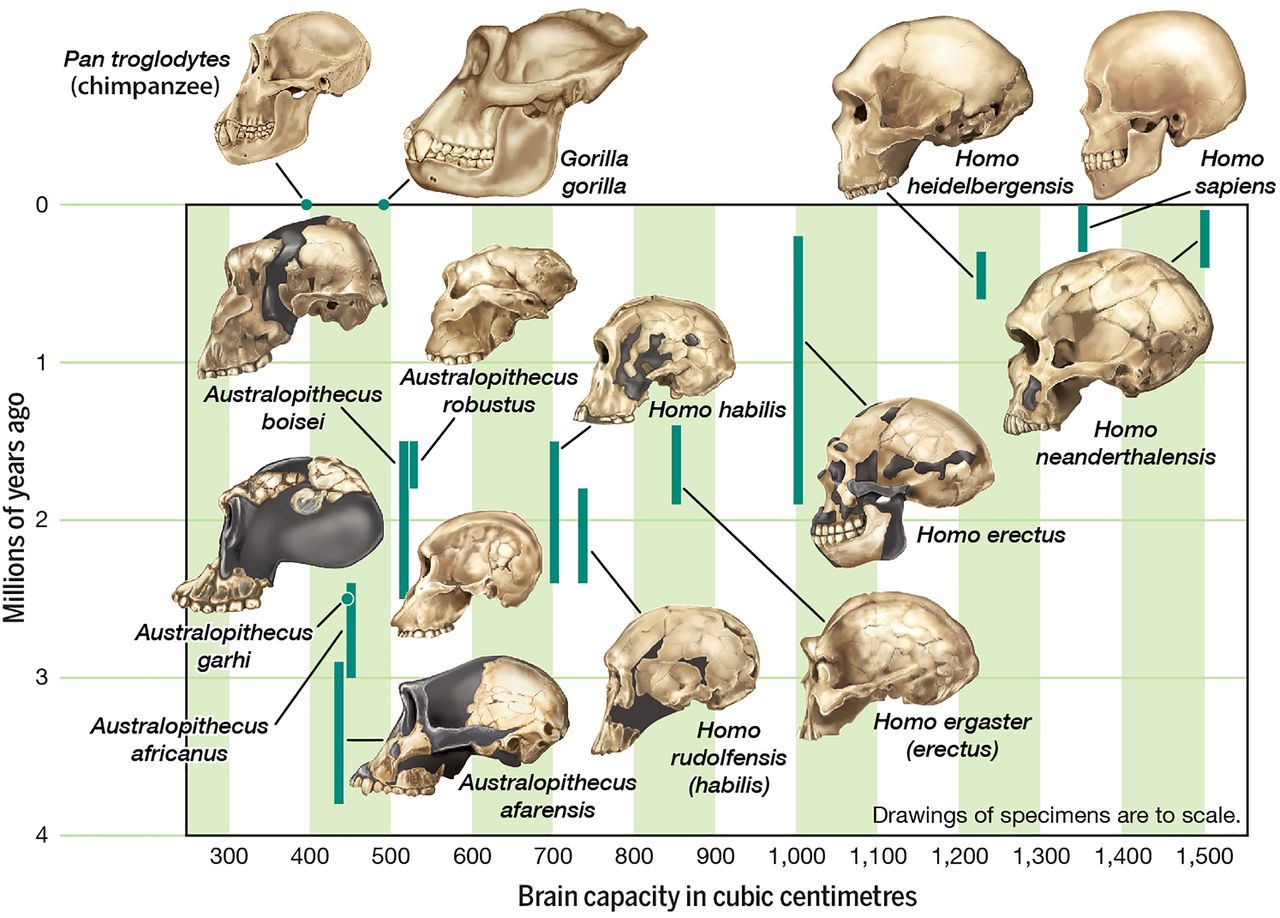

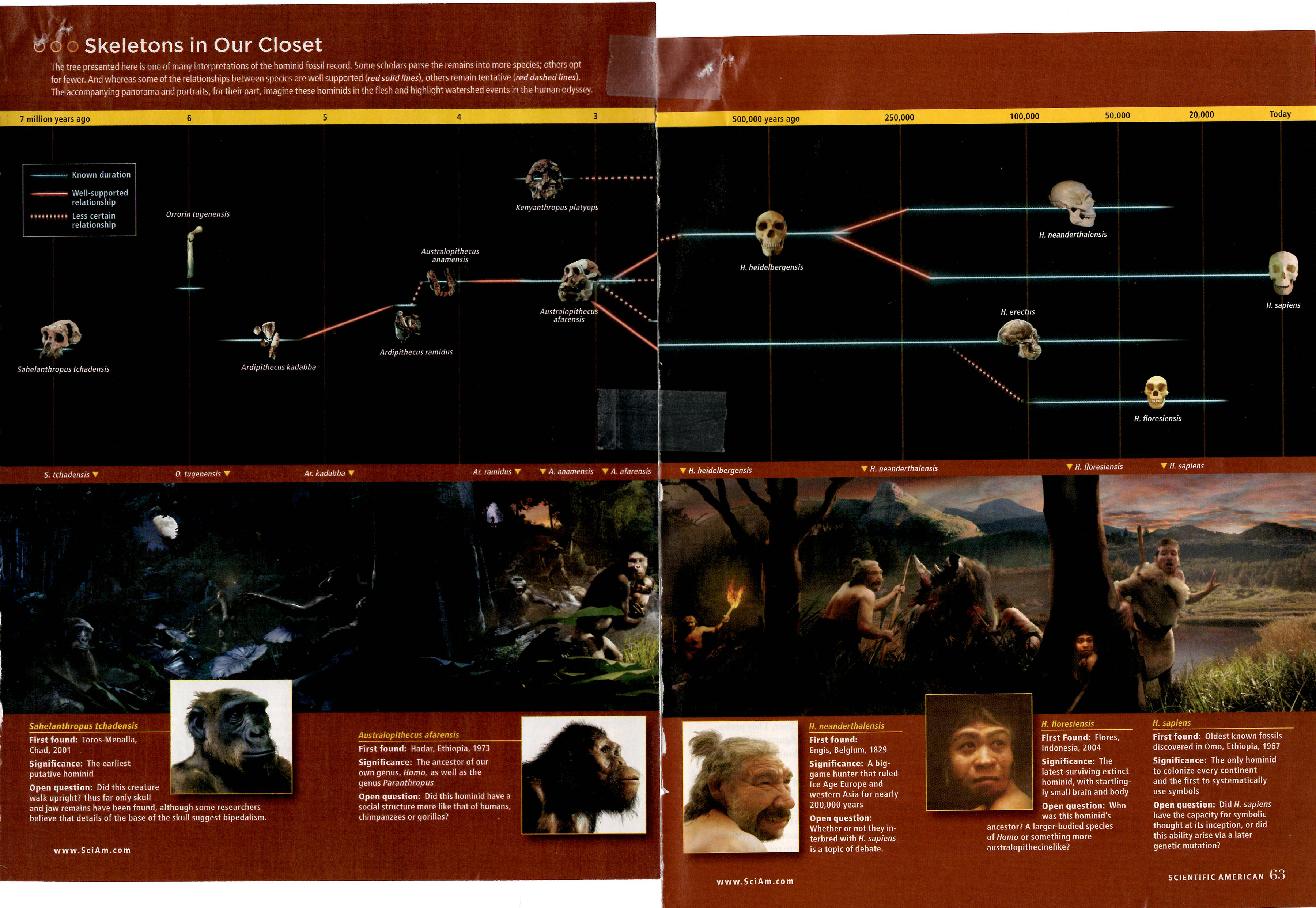

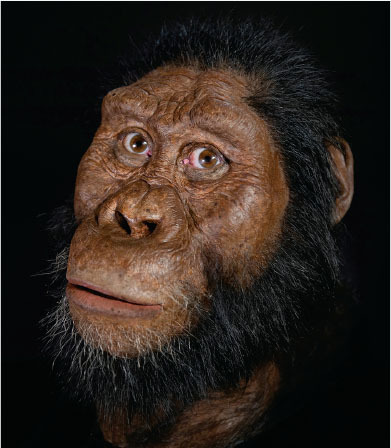

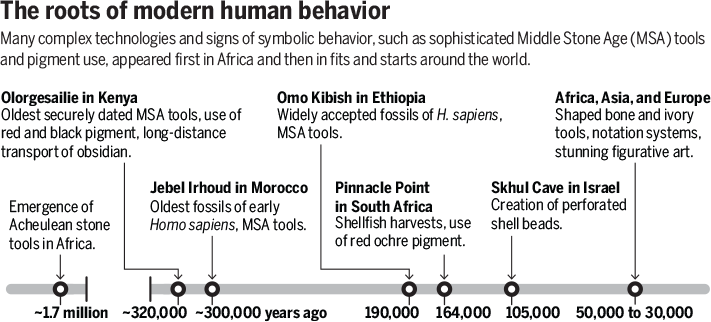

The phylogenetic point of view is concerned with the development of mind across evolutionary time, and the question of mind in nonhuman animals. It includes comparative psychology, which (as its name implies) is concerned with studying learning and other cognitive abilities in different species (comparative psychology is sometimes known as cognitive ethology); and evolutionary psychology (an offshoot of sociobiology), which traces how human mental and behavioral functions evolved through natural selection and similar processes.


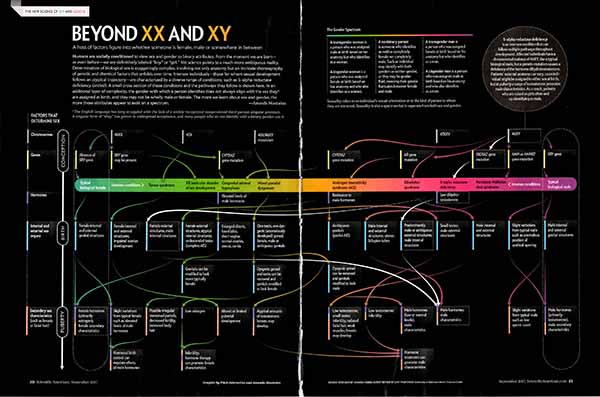

The cultural point of view is concerned with the effects of cultural diversity within the human species. This approach has its origins in 19th-century European colonialism, which asked questions, deemed essentially racist today, about whether the "primitive" mind (meaning, of course, the minds of the colonized peoples of Africa, Asia, and elsewhere) lacked the characteristics of the "advanced" mind (meaning, of course, the minds of the European colonizers). Edward Burnett Tylor, generally considered to be the founding father of anthropology (he offered the first definition of "culture" in its modern sense of a body of knowledge, customs, and values acquired by individuals from their native environments), distinguished between cultures that were more or less "civilized". Stripped of the racism, the sub-field of anthropology known as anthropological psychology is the intellectual heir of this work. More recently, anthropological psychology has been replaced by cultural psychology, which studies the impact of cultural differences on the individual's mental life, without the implication that one culture is more "developed" than another.


The Ontogenesis of Personhood

Phylogenesis has to do with the development of the species as a whole. Ontogenesis has to do with the development of the individual species member. In contrast to comparative psychology, which makes its comparisons across species, developmental psychology makes its comparisons across different epochs of the life span.

Mostly, developmental psychology focuses its interests on infancy and childhood -- a natural choice, given the idea that mental development is correlated with physical maturation. At its core, developmental psychology is dominated by the opposition of nature vs. nurture, or nativism vs. empiricism:

- Is the newborn child a "blank slate", all of whose knowledge and skills are acquired through learning experiences?

- Or are some aspects of mental functioning innate, part of the child's genetic endowment, acquired through the course of evolution?

Nature and Nurture

The dichotomy between "nature" and "nurture" was first proposed in those terms by Sir Francis Galton (1822-1911), a cousin of Charles Darwin's who -- among other things -- expanded Darwin's theory of evolution by natural selection into a political program for eugenics -- the idea of strengthening the human species by artificial selection for, and against, certain traits.

Galton took the terms nature and nurture from Shakespeare's play The Tempest, in which Prospero described Caliban as:


A devil, a born devil, on whose nature

Nurture can never stick.

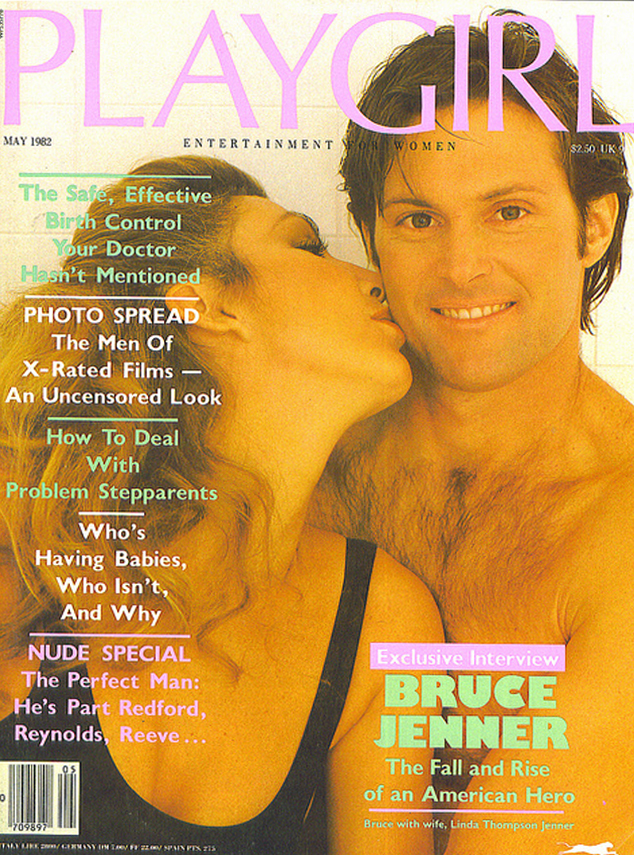

(Here, from an early 20th-century English theatrical advertisement, is Sir Herbert Beerbohm Tree as Caliban, as rendered by Charles A. Buchel.)

Galton wrote that the formula of nature versus nurture was "a convenient jingle of words, for it separates under two distinct heads the innumerable elements of which personality is composed".


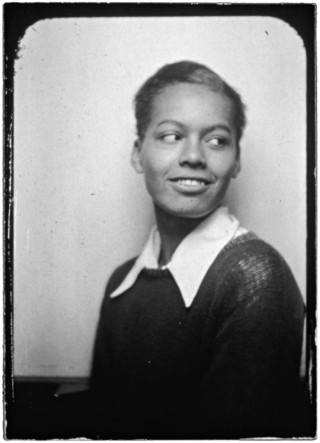

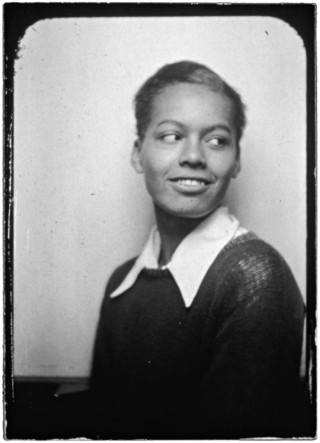

Galton's focus on nature, and biological determinism, was countered by Franz Boas (1858-1942), a pioneering anthropologist who sought to demonstrate, in his work and that of his students (who included Edward Sapir, Margaret Mead, Claude Levi-Strauss, Ruth Benedict, and Zora Neale Hurston), the power of culture in shaping lives. It was nature versus nurture with the scales reset: against our sealed-off genes, there was our accumulation of collective knowledge; in place of inherited learning, there was the social transmission of that knowledge from generation to generation. "Culture" was experience raised to scientific status. And it combined with biology to create mankind ("The Measure of America" by Claudia Roth Pierpont, New Yorker, 03/08/04).

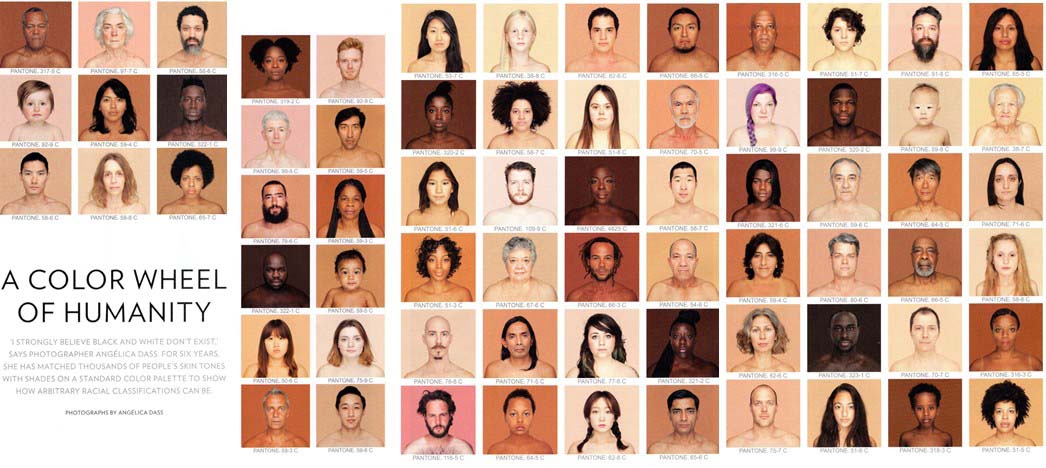

It is to Boas that we owe the maxim that variations within cultural groups are larger than variations between them.

Interestingly, Boas initially trained as a physicist and geographer, and did his dissertation in psychophysics, on the perception of color in water (don't ask). During a period of military service, he published a number of other papers on psychophysics, some of which anticipated the insights of signal detection theory, discussed in the lectures on Sensation. From his dissertation, he concluded that even something as simple as the threshold for sensation depended on the expectations and prior experiences of the perceiver: the underlying sensory processes were not innate, and they were not universal. They were, in some sense, acquired through learning.

For a virtual library of Galton's works, see www.galton.org.

For a sketch of Boas' life and work, see "The Measure of America" by Claudia Roth Pierpont, New Yorker, 03/08/04 (from which the quotes above are taken).

For more detail on Boas and his circle, see Gods of the Upper Air (2019) by Charles King.

According to King, Boas and other early cultural anthropologists "moved the explanation for human differences from biology to culture, from nature to nurture". In so doing, they were "on the front lines of the greatest moral battle of our time: the struggle to prove that -- despite differences of skin color, gender, ability, or custom -- humanity is one undivided thing". As Ella Deloria, another of Boas's students (and aunt of Vine Deloria, Jr., the Native American activist and author of Custer Died for Your Sins: An Indian Manifesto, published in 1969), recorded in her notes on his lectures, "Cultures are many; man is one".

- Louis Menand, reviewing King's book in the New Yorker ("The Looking Glass", 08/26/2019), notes that Boas and his group shifted the meaning of culture from "intellectual achievement" to "way of life". This is something of a paradox, because cultural anthropologists are often portrayed by critics such as Allan Bloom (in The Closing of the American Mind, 1987) as cultural relativists. It's OK, in this stereotype, for some aboriginal people to eat each other -- after all, it's their culture; and maybe, since they do it, we should try it too. But as King and Menand point out, Boas and his students weren't cultural relativists. They were interested in describing different cultures from within -- in recovering cultural diversity that was, even in the 1930s and 40s, being lost to the homogenizing effects of Westernization. They were also interested in holding up other cultures as a mirror through which they could understand their own. As Menand points out, "The idea... is that we can't see our way of life from the inside, just as we can't see our own faces. The culture of the 'other' serves as a looking glass.... These books about pre-modern peoples are really books about life in the modern West.... Other species are programmed to 'know' how to cope with the world, but our biological endowment evolved to allow us to choose how to respond to our environment. We can't rely on our instincts; we need an instruction manual. And culture is the manual."

- Jennifer Wilson, reviewing Gods of the Upper Air in The Nation (05/18/2020), notes that "At a time when the country's foremost social scientists... were insisting that different cultures fell along a continuum of evolution, cultural anthropologists [like Boas and his circle] asserted that such a continuum did not exist. Instead of evolving in a linear fashion from savagery to civilization, they argued, cultures were in a constant process of borrowing and interpolation. Boas called this process 'cultural diffusion', and it would come to be the bedrock of cultural anthropology...".

The opposition between "nature" and "nurture" seems to imply that the "nurture" part refers to parental influence: how children are brought up. As we'll see, parental influence appears to be a lot less important than we might think, compared to influences outside the home. But more important, "nurture" is probably better construed as experience, as opposed to whatever is built into us by our genes. Those "experiences" begin in the womb, and continue through old age to death; some experiences happen to us, some we arrange for ourselves, but whatever their origins they make us the people we are at each and every point in the life cycle. This is the point of Unique: The New Science of Human Individuality (2020) by David J. Linden, a neuroscientist at Johns Hopkins University. Being a neuroscientist, Linden focuses on how nature and nurture, meaning genes and internal, physiological changes instigated by events in the external environment, interact to produce individual differences. But his essential point goes much beyond the genes and soup of neurogenetics and epigenetics.

Reviewing the book in the New York Times ("Beyond Nature and Nurture, What Makes Us Ourselves?", 11/01/2020), Robin Marantz Henig quotes Linden:

In ordinary English, "nurture" means how your parents raise you, he writes. "But, of course, that's only one small part of the nonhereditary determination of traits." He much prefers the word "experience", which encompasses a broad range of factors, beginning in the womb and carrying through every memory, every meal, every scent, every romantic encounter, every illness from before birth to the moment of death. He admits that the phrase he prefers to "nature versus nurture" doesn't roll as "trippingly off the tongue", but he offers it as a better summary of how our individuality really emerges: through "heredity interacting with experience, filtered through the inherent randomness of development".

Still, a formula such as "genes and experience" doesn't roll nearly as "trippingly off the tongue" as "nature and nurture", so we'll stick with the latter. Just understand what "nurture" really means. And be sure to include the "and": it's not "nature versus nurture", or "nature or nurture". As we'll see, nature and nurture work together in the development of the individual.

(Cartoon by Barbara Smaller, New Yorker, 07/11-18/2022.)


Of course, neither physical nor mental development stops at puberty. More recently, developmental psychology has acquired an additional focus on development across the life span, including adolescence and adulthood, with a special interest in the elderly.

The Human Genome

The human genetic endowment consists of 23 pairs of chromosomes. Each chromosome contains a large number of genes. Genes, in turn, are composed of deoxyribonucleic acid (DNA), itself composed of a sequence of four chemical bases: adenine, guanine, thymine, and cytosine (the letters A, G, T, and C which you see in graphical descriptions of various genes). Every gene is located at a particular place on a specific chromosome. And since chromosomes come in pairs, so do genes.


For a nice historical survey of genetics, see The Gene: An Intimate History by Siddhartha Mukherjee (2016).

Corresponding pairs of genes contain information about some characteristic, such as eye color, skin pigmentation, etc. While some traits are determined by single pairs of genes, others, such as height, are determined by several genes acting together. In either case, genes come in two basic categories. Dominant genes (indicated by upper-case letters) exert an effect on some trait regardless of the other member of the pair. For example, in general the genes for brown eyes, dark hair, thick lips, and dimples are dominant genes. Recessive genes (indicated by lower-case letters) affect a trait only if the other member of the pair is identical. For example, in general the genes for blue eyes, baldness, red hair, and straight noses are recessive. The entire set of genes comprises the organism's genotype, or genetic blueprint.
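As a minimal illustration of the dominant/recessive logic, the Python sketch below works through a single-gene cross (a Punnett square). It assumes the simplified textbook model in which one gene pair controls eye color, with brown (B) dominant over blue (b); real eye color involves several genes, and the function names are hypothetical.

```python
from collections import Counter
from itertools import product


def phenotype(genotype: str) -> str:
    """Hypothetical one-gene eye-color model: 'B' (brown) is dominant, 'b' (blue) recessive."""
    # A dominant (upper-case) allele is expressed whenever it is present;
    # the recessive trait shows only when both alleles are recessive.
    return "brown" if any(allele.isupper() for allele in genotype) else "blue"


def cross(parent1: str, parent2: str) -> Counter:
    """Punnett square: each parent passes on one of its two alleles with equal probability."""
    offspring = Counter()
    for a, b in product(parent1, parent2):
        # Sorting puts the upper-case allele first, so 'bB' and 'Bb' count as one genotype.
        offspring["".join(sorted(a + b))] += 1
    return offspring


def phenotype_counts(genotypes: Counter) -> Counter:
    """Collapse genotype counts into counts of visible traits."""
    counts = Counter()
    for genotype, n in genotypes.items():
        counts[phenotype(genotype)] += n
    return counts


if __name__ == "__main__":
    kids = cross("Bb", "Bb")             # two brown-eyed parents who each carry a blue allele
    print(dict(kids))                    # {'BB': 1, 'Bb': 2, 'bb': 1}
    print(dict(phenotype_counts(kids)))  # {'brown': 3, 'blue': 1}
```

Under this model, two brown-eyed parents who each carry one recessive blue allele can have a blue-eyed child, on average one time in four.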

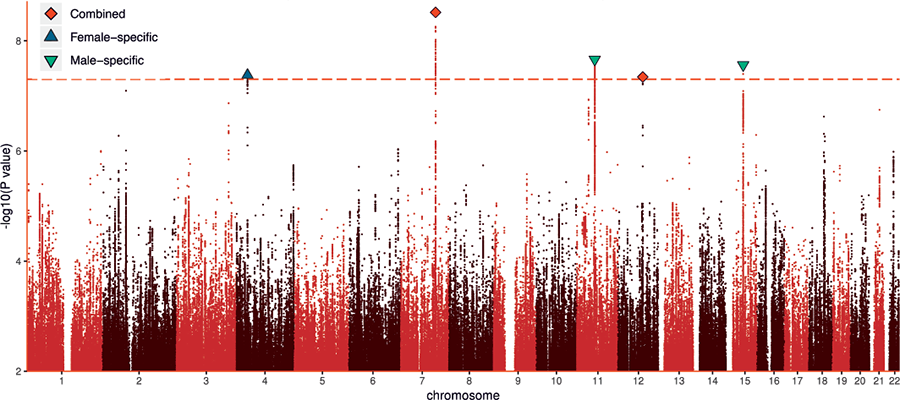

One of the most important technical

successes in biological research was the decoding of the human

genome -- determining the precise sequence of As, Gs, Ts, and

Cs that make us, as a species, different from all other

species. And along with advances in gene mapping, it has been

possible to determine specific genes -- or, more accurately,

specific alleles, or mutations of specific genes,

known as single-nucleotide polymorphisms, or SNPs

-- that put us at risk for various diseases, and which dispose

us to various personality traits. The most popular method for

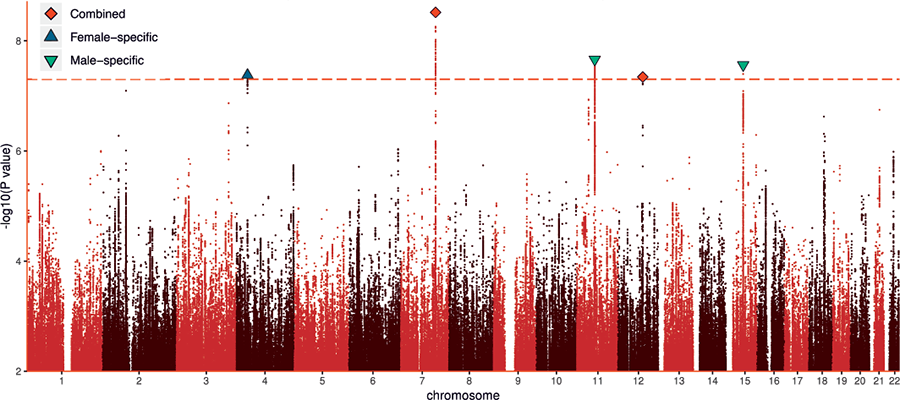

this purpose is a genome-wide association study

(GWAS). In what is essentially a multiple-regression

analysis, employing very large samples, GWAS examines the

relationship between every allele and some characteristic of

interest -- say, heart disease or intelligence. Of

course, a lot of these correlations will occur just by chance,

but there are statistical corrections for that.
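The logic of a GWAS, testing every variant separately and then correcting for the sheer number of tests, can be sketched in a few lines of Python. This is a toy simulation, not a real GWAS pipeline: the sample size, allele frequencies, effect sizes, and the use of a simple Bonferroni correction are all assumptions for illustration, and the p-values use a large-sample normal approximation. (Real studies conventionally require p < 5 × 10^-8, roughly a Bonferroni correction for a million independent tests.)

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def two_sided_p(r, n):
    """Large-sample approximation: under the null hypothesis, r * sqrt(n) is roughly N(0, 1)."""
    z = abs(r) * math.sqrt(n)
    return math.erfc(z / math.sqrt(2))


def simulate(n_people=1500, n_snps=300, causal=(0, 1, 2), effect=0.5, seed=1):
    """Hypothetical data: allele counts (0, 1, or 2) per SNP; only the `causal` SNPs affect the trait."""
    rng = random.Random(seed)
    freqs = [rng.uniform(0.1, 0.5) for _ in range(n_snps)]
    genotypes = [[sum(rng.random() < f for _allele in range(2)) for f in freqs]
                 for _person in range(n_people)]
    trait = [sum(effect * person[j] for j in causal) + rng.gauss(0, 1)
             for person in genotypes]
    return genotypes, trait


def gwas_hits(genotypes, trait, alpha=0.05):
    """Test each SNP on its own; keep those that survive a Bonferroni correction."""
    n_people, n_snps = len(genotypes), len(genotypes[0])
    threshold = alpha / n_snps  # correction for running n_snps separate tests
    hits = set()
    for j in range(n_snps):
        column = [person[j] for person in genotypes]
        if two_sided_p(pearson_r(column, trait), n_people) < threshold:
            hits.add(j)
    return hits


if __name__ == "__main__":
    genotypes, trait = simulate()
    print(sorted(gwas_hits(genotypes, trait)))  # indices of SNPs passing the corrected threshold
```

Without the correction (that is, testing each of the 300 SNPs at p < .05), about 15 of the null SNPs would be expected to "hit" by chance alone.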

Some sense of this situation is given by the "genetic" autobiography (A Life Decoded, 2007) published by Craig Venter, the "loser" (in 2001, to a consortium of government-financed academic medical centers) in the race to decode the human genome -- though, it must be said, the description of the genome produced by Venter's group is arguably superior to that produced by the government-financed researchers. Anyway, Venter organized his autobiography around his own genome (which is what was sequenced by his group in the race), which revealed a number of such genes, including:


- on Chromosome 1, the gene TNFSF4, linked to heart attacks;

- on Chromosome 4, the CLOCK gene, related to an evening preference (i.e., a "night person" as opposed to a "day person");

- on Chromosome 8, the gene CHRNA8, linked to tobacco addiction;

- on Chromosome 11, DRD4, linked to novelty-seeking (Venter is an inveterate surfer);

- on Chromosome 19, the gene APOE, linked to Alzheimer's disease; and

- on the X Chromosome, the MAOA gene (about which more later, in the lectures on Personality and Psychopathology), which is linked to antisocial behavior and conduct disorder (among genetics researchers, Venter has a reputation as something of a "bad boy").

None of these genes means that Venter is predestined for a heart attack or Alzheimer's disease, any more than he was genetically predestined to be a surfer. It's just that these genes are more common in people who have these problems than in those who do not. They're risk factors, but not an irrevocable sentence to heart disease or dementia.
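A little arithmetic shows why a gene can be more common in people who have a problem without being a sentence. The Python sketch below applies Bayes' rule to made-up numbers (a 10% lifetime rate for some disease, and a variant carried by 40% of people who get it versus 20% of people who don't); none of these figures come from Venter's genome or from any real study.

```python
def risk(base_rate, carried_if_ill, carried_if_well, carrier=True):
    """P(ill | carrier status) by Bayes' rule.

    base_rate       -- proportion of the population who develop the disease
    carried_if_ill  -- proportion of ill people who carry the variant
    carried_if_well -- proportion of well people who carry the variant
    """
    if not carrier:
        # Switch to the proportions who do NOT carry the variant.
        carried_if_ill, carried_if_well = 1 - carried_if_ill, 1 - carried_if_well
    ill = carried_if_ill * base_rate
    well = carried_if_well * (1 - base_rate)
    return ill / (ill + well)


if __name__ == "__main__":
    with_variant = risk(0.10, 0.40, 0.20)
    without_variant = risk(0.10, 0.40, 0.20, carrier=False)
    print(f"risk with variant:    {with_variant:.1%}")                    # 18.2%
    print(f"risk without variant: {without_variant:.1%}")                 # 7.7%
    print(f"relative risk:        {with_variant / without_variant:.1f}")  # 2.4
```

In this hypothetical case carriers have more than twice the risk of non-carriers, yet more than four out of five carriers never develop the disease.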

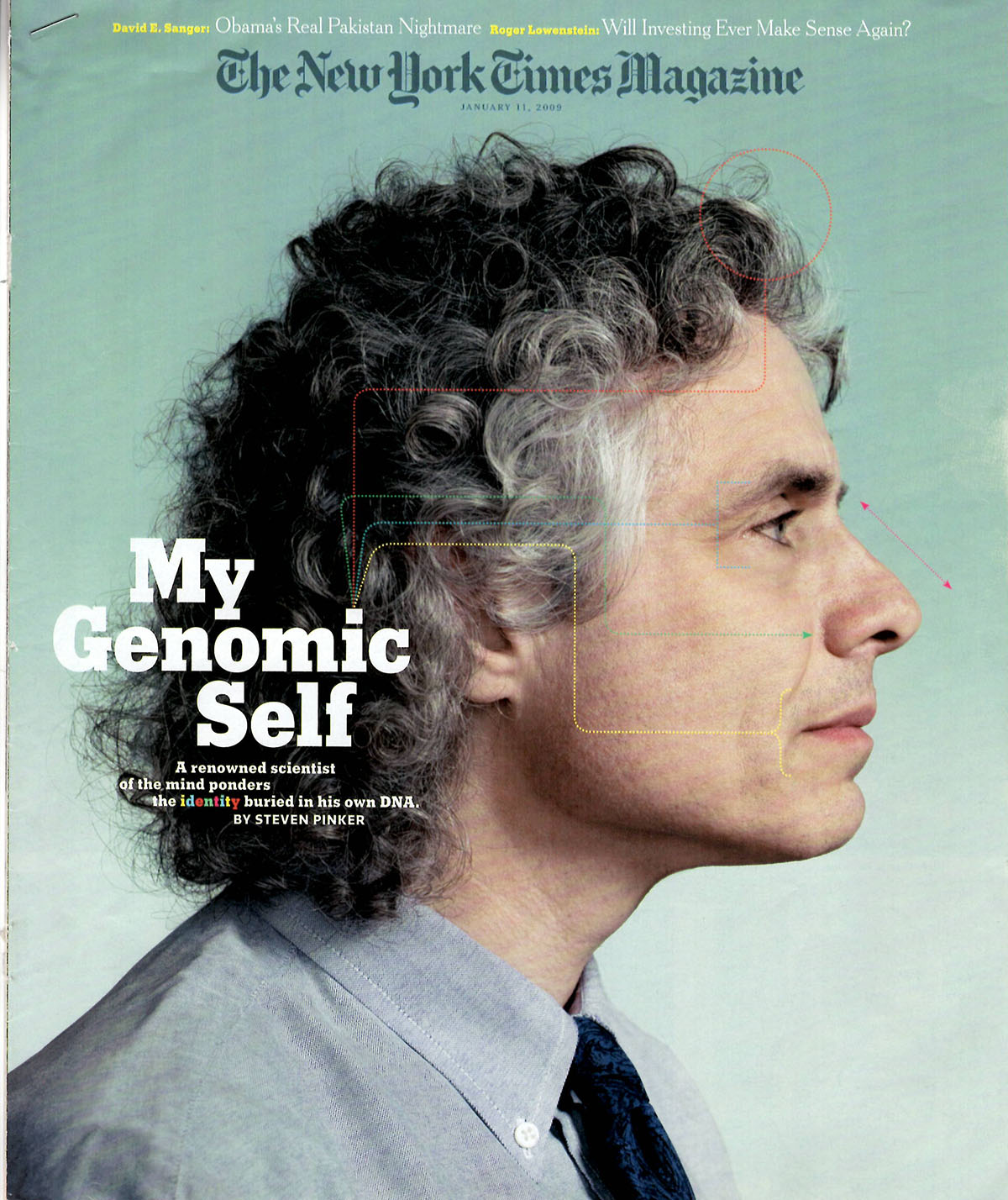

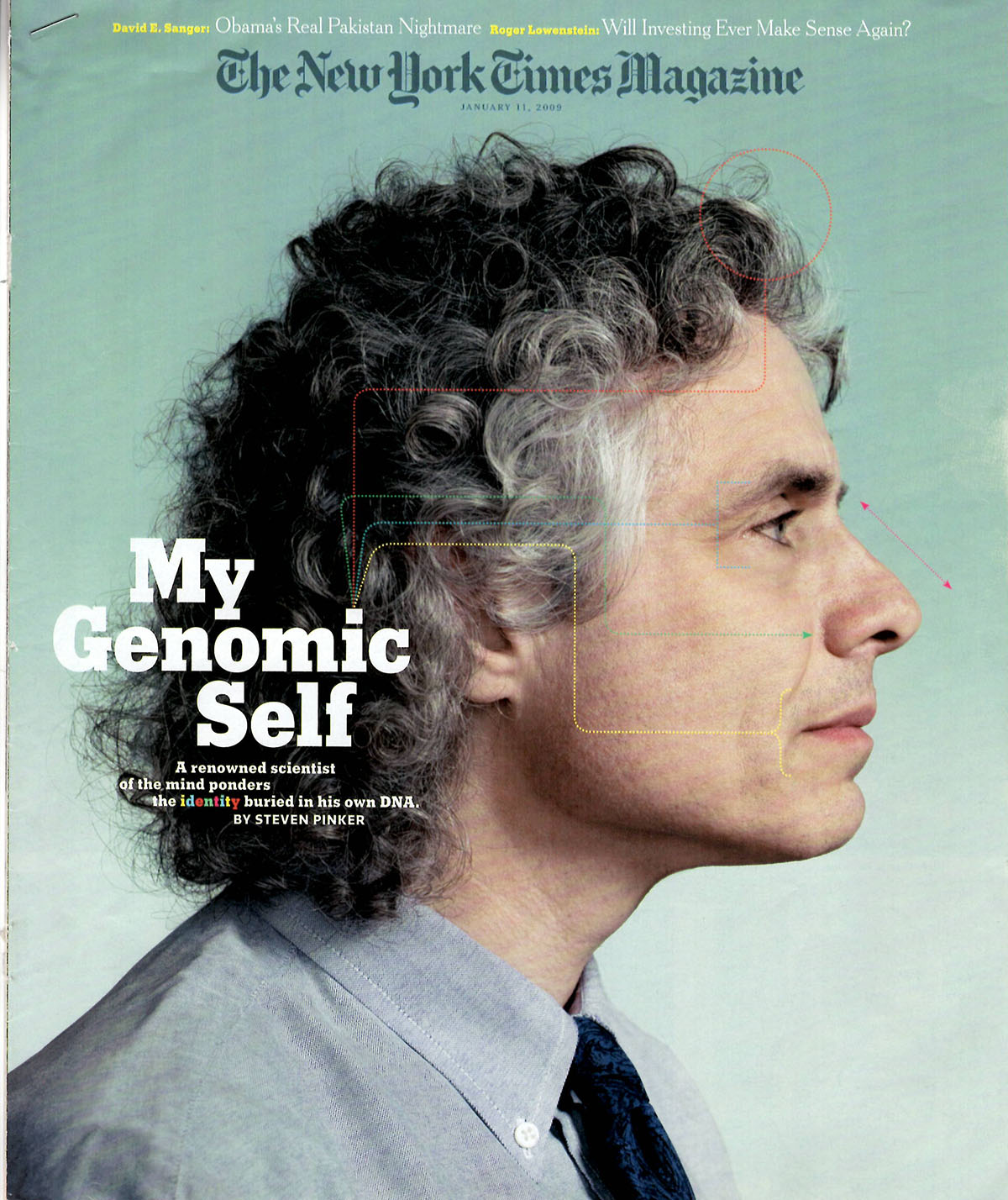

Steven Pinker, a distinguished cognitive psychologist and vigorous proponent of evolutionary psychology (see below), went through much the same exercise in "My Genome, My Self", an article in the New York Times Magazine (01/11/2009). While allowing that the DNA analyses of personal genomics will tell us a great deal about our health, and perhaps about our personalities, Pinker argues that they won't tell us much about our personal identities. He refers to personal genomics, of the sort sold on the market by firms like 23andMe, as mostly "recreational genomics", which might be fun (he declined to learn his genetic risk for Alzheimer's Disease), but doesn't yet, and maybe can't, tell us much about ourselves that we don't already know. He writes:


Even when the effect of some gene is indubitable, the sheer complexity of the self will mean that it will not serve as an oracle on what the person will do.... Even if personal genomics someday delivers a detailed printout of psychological traits, it will probably not change everything, or even most things. It will give us deeper insight about the biological causes of individuality, and it may narrow the guesswork in assessing individual cases. But the issues about self and society that it brings into focus have always been with us. We have always known that people are liable, to varying degrees, to antisocial temptations and weakness of the will. We have always known that people should be encouraged to develop the parts of themselves that they can (“a man’s reach should exceed his grasp”) but that it’s foolish to expect that anyone can accomplish anything (“a man has got to know his limitations”). And we know that holding people responsible for their behavior will make it more likely that they behave responsibly. “My genes made me do it” is no better an excuse than “We’re depraved on account of we’re deprived.”

A prominent GWAS by Daniel Benjamin, a behavioral economist, and his colleagues studied 1.1 million people and identified 1,271 SNPs which were, taken individually, significantly associated with educational attainment measured in years of schooling (Lee et al., Nature Genetics, 2018). Taken together, these variants accounted for about 11-13% of the variance in educational attainment. However, in order to maximize the likelihood of revealing genetic correlates (essentially, by reducing environmental "noise"), the study was confined to white Americans of European descent. When the same analysis was repeated in a sample of African-Americans, the same genetic variants accounted for less than 2% of the variance in educational attainment. None of these SNPs should be thought of as dedicated to educational achievement, especially in populations other than those in which the GWAS was conducted in the first place. For one thing, formal schooling arose too recently to be subject to genetic selection. In fact, the actual function of these SNPs is unknown, and it is likely that many of them control traits whose association with educational attainment is rather indirect. So (to take an example from a story in The Economist, 03/31/2018), children who inherit weak bladders from their parents may do poorly on timed examinations. In any event, the fact that there are SNPs associated with educational attainment, or any other psychosocial characteristic, doesn't mean that these traits are directly heritable -- or that focusing on genes is the best way to understand them, much less improve them. Remember the lessons of the search for the "gay gene", recounted above.

Notice the historical progression here. To begin with, the "intelligence gene" is the Holy Grail of behavior genetics. Psychologists have been interested in finding the genes that cause schizophrenia, or depression, or some other form of mental illness, but the search for the intelligence gene goes back to the 19th century -- that is, to when Sir Francis Galton made the observation that educational achievement and financial success, both presumed markers of intelligence, tended to run in families (in Hereditary Genius, first published in 1869). You might think, maybe, that in 19th-century England rich families were more likely than poor ones to send their children to Oxford and Cambridge and to get elected to Parliament or elevated to the House of Lords. You might think that. But Galton concluded that intelligence was hereditary, and was passed from one generation to another through bloodlines. (Galton knew nothing of genes: Gregor Mendel's papers setting out the principles of heredity had been published in an obscure journal in 1865 and 1866, but only garnered serious attention when they were rediscovered and republished in 1900; and Wilhelm Johannsen didn't coin the word "gene" until 1909.)

The molecular structure of DNA wasn't decoded until 1953, by Watson and Crick (with not-so-little but very much uncredited help from Rosalind Franklin). But even the discovery of the "double helix" didn't advance our understanding of the genetic basis of intelligence, because, for the most part, we didn't know how many genes we had, or where particular genes were located on the 23 pairs of chromosomes that make up the human genome. All we had was evidence from twin studies, of the sort reviewed in the lectures on Thinking, which clearly established the genetic contribution to individual differences in intelligence -- though the same studies also established the importance of the environment, particularly the nonshared environment.
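For readers who want to see how twin data get turned into estimates of "genetic" and "environmental" contributions, here is a minimal Python sketch of Falconer's classic approximation, which compares the similarity of identical (MZ) and fraternal (DZ) twins. The twin correlations in the example are hypothetical, and modern twin studies generally fit more elaborate statistical models; this only illustrates the underlying logic.

```python
def falconer(r_mz: float, r_dz: float) -> dict:
    """Split trait variance into genes, shared environment, and nonshared environment.

    Assumes MZ twins share all their genes and DZ twins about half, and that both
    kinds of twins share their family environment to the same degree.
    """
    heritability = 2 * (r_mz - r_dz)  # h^2: variance due to genetic differences
    shared_env = 2 * r_dz - r_mz      # c^2: family environment shared by siblings
    nonshared_env = 1 - r_mz          # e^2: experiences unique to each twin (plus measurement error)
    return {"h2": heritability, "c2": shared_env, "e2": nonshared_env}


if __name__ == "__main__":
    for part, value in falconer(r_mz=0.85, r_dz=0.60).items():
        print(f"{part}: {value:.2f}")
```

With these made-up correlations, half the variance is attributed to genes, 35% to the shared environment, and 15% to the nonshared environment; the three components always sum to 1.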

Even twin studies can be misleading. For example, one group of behavior geneticists used variants on the twin-study method (technically, the adoption-study method) to show that there is a genetic contribution to the amount of time that young children spend viewing television (Plomin et al., Psych. Science, 1990). But let's be clear: there is no "television-viewing gene". TV wasn't invented until the 1920s, so there hasn't been enough time to evolve such a gene. But there may be genetic contributions to traits that influence television viewing (for example, the tendency of some children to just sit around the house; or the tendency of some parents to ignore their children). But any such influence is going to be indirect. Moreover, even in this study the heritability of television-viewing dropped from roughly 50% at age 3 to less than 20% at age 5, so there are clearly other things going on.

At first, the hypothesis was that there was one, or only a few, genes that coded for intelligence, and that these genetic determinants could be identified by correlating specific genes with IQ scores (or some proxy for IQ, like years of schooling). That didn't work out. For example, Robert Plomin's group at the University of Texas (Chorney et al., Psych. Sci., 1998) reported that a specific gene known as IGF2R (for insulin-like growth factor-2 receptor), located on Chromosome 6, was associated with high IQ scores. Plomin is a highly regarded behavior geneticist, and justly so, and he knows how to do these studies right, so before he submitted his research for publication he replicated his finding in a second sample. As it happens, I was the editor of Psychological Science, the journal to which Plomin's research group submitted their paper. I was skeptical of the finding, and told Plomin so: although I recognize that there is a genetic component to intelligence (the twin studies reviewed in the lectures on Thinking establish that definitively), these kinds of findings, which identify a specific gene with a specific psychological trait, rarely hold up when replicated in a new sample. Still, there was nothing wrong with the study, and I agreed with Plomin that [...] I'm skeptical of these kinds of studies: I was a graduate student when the first "depression gene", or at least the chromosome on which it was located, was identified, and that finding [...]

It should also be remembered that there is a great deal of "genetic" material on our chromosomes besides genes, and the GWAS technique doesn't discriminate between gene variants -- sequences of nucleotides that code for some trait (like intelligence) or disease (like schizophrenia) -- and SNPs, which also come in variants (hence the term polymorphism), but which aren't actually genes. Anyway, with powerful new computers, we can correlate individual SNPs with traits or diseases, just as earlier investigators did with genes themselves. And the result is that, indeed, some SNPs are correlated with some traits. But -- and this is a big but -- the individual correlations are very rarely statistically significant. For example, the presence of one SNP, known as "rs11584700" (don't ask), is associated with just two additional days of educational attainment. However, if you aggregate across a couple dozen (or hundred) nonsignificant correlations, the overall correlation between the trait and the entire package of SNPs -- known as a polygenic risk score -- can rise to statistical significance. Even so, the correlations, while they may be statistically significant because of the large numbers of subjects involved, may be practically trivial. More important, however, remember the lesson of IGF2R. An array of SNPs may correlate with intelligence (or years of schooling) in one sample, but this relationship may not replicate in another sample.
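The idea of a polygenic score (adding up many individually weak associations) and the importance of checking it in a second sample can be illustrated with a small simulation. In this Python sketch everything is hypothetical: 100 SNPs that each have the same tiny effect, per-SNP weights estimated in one "discovery" sample, and a score that is then tested in a separate "replication" sample. Real polygenic scores involve far more variants and further statistical adjustments.

```python
import math
import random


def mean(xs):
    return sum(xs) / len(xs)


def pearson_r(x, y):
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def slope(x, y):
    """Least-squares slope of y on x: the estimated per-allele effect of one SNP."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


def simulate(n_people, effects, rng, freq=0.5):
    """Allele counts 0-2 per SNP; the trait is many tiny SNP effects plus much larger noise."""
    people, trait = [], []
    for _person in range(n_people):
        g = [sum(rng.random() < freq for _allele in range(2)) for _snp in effects]
        people.append(g)
        trait.append(sum(b * x for b, x in zip(effects, g)) + rng.gauss(0, 1))
    return people, trait


def polygenic_score(genotype, weights):
    """Weighted sum of a person's allele counts."""
    return sum(w * x for w, x in zip(weights, genotype))


def run_demo(n_snps=100, effect=0.05, seed=7):
    rng = random.Random(seed)
    effects = [effect] * n_snps
    discovery, d_trait = simulate(2000, effects, rng)
    replication, r_trait = simulate(1000, effects, rng)
    # Weights are estimated in the discovery sample only...
    weights = [slope([p[j] for p in discovery], d_trait) for j in range(n_snps)]
    # ...and the score is evaluated in the independent replication sample.
    scores = [polygenic_score(g, weights) for g in replication]
    pgs_r = pearson_r(scores, r_trait)
    single_rs = [pearson_r([p[j] for p in replication], r_trait) for j in range(n_snps)]
    return pgs_r, single_rs


if __name__ == "__main__":
    pgs_r, single_rs = run_demo()
    print(f"largest single-SNP |r|: {max(abs(r) for r in single_rs):.3f}")
    print(f"polygenic score r:      {pgs_r:.3f} (r squared = {pgs_r ** 2:.3f})")
```

In this setup the score should correlate with the trait far more strongly than any single SNP does, while still accounting for only a modest share of the variance; and it is tested in a sample other than the one used to build it, which is exactly the replication check the text calls for.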

This is not to say that there are no genetic correlates of intelligence (or even educational attainment) or other socially significant traits. I'm prepared to learn that there are. But the really important questions for me, when looking at genetic correlations, are:

- How strong is the association? Is it statistically significant?

- If significant, is it replicable?

- If replicable, is the association strong enough to be of any practical significance?

- If so, what do you plan to do with this knowledge?
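The first and third questions can come apart when samples are huge. The Python sketch below uses a large-sample normal approximation to compute the p-value for a given correlation r with n = 1,100,000 people (the sample size of the Lee et al. study described above); the correlation values themselves are hypothetical.

```python
import math


def p_value(r: float, n: int) -> float:
    """Two-sided p-value for a correlation, assuming r * sqrt(n) ~ N(0, 1) under the null."""
    return math.erfc(abs(r) * math.sqrt(n) / math.sqrt(2))


def variance_explained(r: float) -> float:
    """Proportion of variance in the trait accounted for by the predictor (r squared)."""
    return r * r


if __name__ == "__main__":
    n = 1_100_000
    for r in (0.001, 0.003, 0.01, 0.05):
        verdict = "significant" if p_value(r, n) < 0.05 else "not significant"
        print(f"r = {r:.3f}: p = {p_value(r, n):.2g} ({verdict}), "
              f"variance explained = {variance_explained(r):.4%}")
```

With a sample this large, a correlation of .003, which accounts for less than one-thousandth of one percent of the variance, already clears the conventional p < .05 threshold; the same correlation in a sample of 1,000 would be nowhere near significant.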

For an enthusiastic summary of the prospects for using GWAS to identify the genetic underpinnings of intelligence, and a defense of the search for the genetic causes of psychosocial phenotypes, see The Genetic Lottery: Why DNA Matters for Social Equality by Kathryn Paige Harden (2021), who directs the Twin Project at the University of Texas. Her book was given a scathing review by M.W. Feldman, a Stanford biologist, and Jessica Riskin, a Stanford historian of science, in the New York Review of Books ("Why Biology is Not Destiny", 04/21/2022). Feldman and Riskin are appropriately skeptical about GWAS, and behavior genetics in general (although if they had taken this course, they wouldn't be quite so skeptical about psychologists' ability to measure personality traits, and they'd know where openness to experience comes from). Like good interactionists, F&R argue that it's impossible to separate genetic and environmental influences, "because the environment is in the genome and the genome is in the environment". Harden replied in a subsequent issue (06/09/2022), followed by a rejoinder by Feldman and Riskin. The whole exchange is very valuable for setting out the terms of the debate.

Genetic Sequencing and Genetic Selection

Since the human genome was

sequenced, and as the costs of gene sequencing

have gone down, quite an industry has developed

around identifying "genes for" particular traits,

and then offering to sequence the genomes of

ordinary people -- not just the Craig Venters of

the world -- to determine whether they possess any

of these genes.

The applications in the case of

genetic predispositions to disease are obvious --

though it's not at all clear that people want to

know whether they have a genetic predisposition to

a disease that may not be curable or preventable.

- Research has identified a genetic mutation

for Huntington's disease, a presently

incurable brain disorder, on Chromosome 4, but

many people with a family history of

Huntington's disease do not want to be told

whether they have it (the folk singer Arlo

Guthrie is a famous example).

- Mutations in two genes, known as BRCA1 and BRCA2,
substantially increase a woman's risk for
breast cancer. Some women with these
mutations, who also have a family history of

breast cancer, have opted for radical

preventive treatments such as double

mastectomy.

On the other hand, there are some uses of

genetic testing that are not necessarily so

beneficial.

- In certain cultures which value male

children more highly than female children,

genetic testing for biological sex may lead

some pregnant women to abort female fetuses,

leading to a gender imbalance. For

example, even without genetic testing, both

China and India have a clear problem with

"missing women" commonly attributed to

sex-selective abortion.

And there are other kinds of genetic testing

which also may not be to society's -- or the

individual's -- advantage.

- A variant of the ACTN3 gene on chromosome 11

is present in a high proportion of athletes

who compete at elite levels in "speed" and
"power" sports (as opposed to "endurance"
sports). Almost as soon as this finding

was announced, a commercial enterprise offered

a genetic test which, for $149, would indicate

whether a child had such a gene ("Born to Run?

Little Ones Get Test for Sports Gene" by

Juliet Macur, New York Times,

11/30/2010). The danger is that children

found to have the gene will be tracked into

athletics when they'd rather be librarians
(or, perhaps, librarians who run 10K races
recreationally), and other children will be
discouraged from sports, even though they could
have had careers as elite athletes.

The point is that genes aren't destiny, except

maybe through the self-fulfilling

prophecy. So maybe we shouldn't behave as

though they were.


From Genotype to Phenotype

Of course, genes don't act in isolation

to determine various heritable traits. One's genetic endowment

interacts with environmental factors to produce a phenotype,

or what the organism actually looks like. The genotype

represents the individual's biological potential, which is

actualized within a particular environmental context. The

environment can be further classified as prenatal (the

environment in the womb during gestation), perinatal (the

environment around the time of birth), and postnatal (the

environment present after birth, and throughout the life

course until death).

Because of the role of the environment,

phenotypes are not necessarily equivalent to genotypes. For

example, two individuals may have the same phenotype but

different genotypes. Thus, of two brown-eyed individuals, one

might have two dominant genes for brown eyes (BB), while

another might have one dominant gene for brown eyes and one

recessive gene for blue eyes (Bb). Similarly, two individuals

may have the same genotype, but different phenotypes. For

example, two individuals may have the same dominant genes for

dimples (DD), but one has his or her dimples removed by

plastic surgery.
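The genotype-phenotype distinction can be sketched as a function that maps a genotype, plus an optional environmental event, to an observable outcome. The Python below encodes the textbook single-gene dominance model used in the examples above. It is a simplification (real eye color depends on several genes), and the function names are illustrative.

```python
def eye_color(genotype: str) -> str:
    """One-gene dominance model: 'B' (brown) is dominant over 'b' (blue)."""
    if len(genotype) != 2 or any(allele not in "Bb" for allele in genotype):
        raise ValueError(f"expected two alleles from 'B'/'b', got {genotype!r}")
    return "brown" if "B" in genotype else "blue"


def has_dimples(genotype: str, removed_by_surgery: bool = False) -> bool:
    """Dimples appear if at least one dominant 'D' allele is present,
    unless an environmental event (here, plastic surgery) removes them."""
    if len(genotype) != 2 or any(allele not in "Dd" for allele in genotype):
        raise ValueError(f"expected two alleles from 'D'/'d', got {genotype!r}")
    return "D" in genotype and not removed_by_surgery


if __name__ == "__main__":
    # Different genotypes, same phenotype.
    print(eye_color("BB"), eye_color("Bb"))
    # Same genotype, different phenotypes.
    print(has_dimples("DD"), has_dimples("DD", removed_by_surgery=True))
```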

The chromosomes are found in pairs in the
nucleus of each cell in the human body, except the sperm cells
of the male and the egg cells of the female, which contain
only one element of each pair. At fertilization, each element
contributed by the male pairs up with the corresponding
element contributed by the female to form a single cell, or zygote.
At this point, cell division begins. The first few divisions
form a larger structure, the blastocyst. After about six
days, the blastocyst implants in the wall of the uterus, at which point we
speak of an embryo.

Personality, Behavior, and the Human Genome Project

Evidence of a genetic contribution to

individual differences (see below), coupled with the

announcement of the decoding of the human genome in 2001,

has led some behavior geneticists to suggest that we will

soon be able to identify the genetic sources of how we

think, feel, and behave. However, there are reasons for

thinking that behavior genetics will not solve the problem

of the origins of mind and behavior.

- In the first place, there's no inheritance of acquired

characteristics. So while there might be a genetic

contribution to how we think, there can't be a

genetic contribution to what we think.

- In any event, there aren't enough genes. Before 2001, it

was commonly estimated that there were approximately

100,000 genes in the human genome. The reasoning was that

humans are so complex, at the very apex of animal

evolution, that we ought to have correspondingly many

genes. However, when genetic scientists announced the

provisional decoding of the human genome in 2001, they

were surprised to find that the human genome contains only

about 30,000 genes -- perhaps as few as 15,000 genes,

perhaps as many as 45,000, but still far too few to

account for much important variance in human experience,

thought, and action. As of 2024, the count stood at about

20,000 protein-coding genes, of which about 6,000 had been

assigned a function: these are the genes for some

trait (e.g., Gregor Mendel's round or wrinkled pea-seeds;

blue or brown eyes; breast cancer; or, closer to home, IQ

or schizophrenia or Neuroticism). Another 20,000

genes do not code for proteins. However, there are

still a couple of gaps in our knowledge of the human

genetic code, and by the time these are closed, the number

of human genes may have risen; or it may well have fallen

again!

- The situation got worse in 2004, when a revised analysis

(reported in Nature) lowered the number of human

genes to 20,000-25,000.

- By comparison, the spotted green pufferfish also has
about 20,000-25,000 genes.

- On the lower end of the spectrum, the worm C. elegans
has about 20,000 genes, while the fruit

fly has 14,000.

- On the higher end of the scale, it was reported in

2002 that the genome for rice may have more than

50,000 genes (specifically, the japonica strain

may have 50,000 genes, while the indica variety

may have 55,600 genes). It's been estimated that

humans share about 24% of their genes with the sativa
variety of rice. It's also been reported that 26
different varieties of maize (corn) average more than
103,000 genes.

If it takes 50,000 genes to make a crummy grain of

rice, and humans have only about 20,000 genes, then there's

something else going on. For example, through alternative

splicing, a single gene can produce several different

kinds of proteins -- and it seems to be the case that

alternative splicing is found more frequently in the human

genome than in that of other animals.

Alternatively, the human genome seems to contain more

sophisticated regulatory genes, which control the

workings of other genes.

- Before anyone makes large claims about the genetic

underpinnings of human thought and action, we're going

to need a much better account of how genes actually

work.

- Of course, it's not just the sheer number of genes,

but the genetic code -- the sequence of bases A, G, T,

and C -- that's really important. Interestingly, in 2002
the mouse genome was decoded, revealing about 30,000
genes. Moreover, it turned out that about 99% of these
genes were shared with the human genome (the
corresponding figure is about 99.5% for humans
and our closest primate relative, the chimpanzee). This
extremely high degree of genetic similarity makes
sense from an evolutionary point of view: after all,
mice, chimpanzees, and humans are descended from a
common mammalian ancestor who lived about 75 million
years ago. But while this similarity means that the mouse is an
excellent model for biological research geared to
understanding, treating, and preventing human disease,
it is problematic for those who argue that genes are
important determinants of human behavior. That leaves
only about 300 genes to make the difference between mouse and
human, and only about 150 genes to make the difference
between chimpanzees and humans. Not enough genes.

- Perhaps the action is not in the genes, but rather in

their constituent base pairs: indica rice has about

466 million base pairs, and japonica about 420

million, compared to 3 billion for humans (and about 2.5

billion for mice).
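The "300 genes" and "150 genes" figures in the mouse and chimpanzee comparison are simple arithmetic. They follow from applying the 99% and 99.5% shared-gene percentages to a genome of roughly 30,000 genes, the early-2000s estimate given above. A small Python check, using integer per-mille shares so the results come out exact:

```python
def genes_not_shared(total_genes: int, shared_per_mille: int) -> int:
    """Genes left over when `shared_per_mille` thousandths of the genes are shared.

    Integer arithmetic keeps the result exact (99% = 990 per mille, 99.5% = 995).
    """
    if not 0 <= shared_per_mille <= 1000:
        raise ValueError("shared_per_mille must be between 0 and 1000")
    return total_genes * (1000 - shared_per_mille) // 1000


TOTAL_GENES = 30_000  # approximate count assumed for both mouse and human

if __name__ == "__main__":
    print("mouse vs. human:", genes_not_shared(TOTAL_GENES, 990))       # 99% shared
    print("chimpanzee vs. human:", genes_not_shared(TOTAL_GENES, 995))  # 99.5% shared
```

This prints 300 and 150. With the later count of about 20,000 genes, the same percentages leave even fewer: about 200 and 100.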

Why are there 3 billion base pairs, but only

about 20,000 genes? It turns out that most DNA is what

is known as junk DNA -- that is, DNA that doesn't

code for proteins. When the human genome was finally

sequenced in 2001, researchers came to the conclusion that

fully 98% of the human genome was "junk" in this

sense. But it's not actually "junk": "junk" DNA

performs some functions.

- Some of it forms telomeres, structures that "tie

off" the ends of chromosomes, like the aglets or caps that

prevent ropes (or shoelaces) from fraying at their

ends. Interestingly, telomeres get shorter as a

person ages. And even more interestingly, from a

psychological point of view, telomeres are also shortened

by exposure to stress (see the work of UC San Francisco's

Elissa Epel).

So stress really does seem to cause premature aging!

- Junk DNA also codes for ribonucleic acid,

or RNA.

- And junk DNA also appears to influence gene expression,

which may explain some of the differences (for example, in

susceptibility to genetically inherited disease) between

genetically identical twins.

The paucity of human genes, and the vast

amount of "junk DNA" in the genome, has led some researchers

to conclude that the source of genetic action may not lie

so much in the genes themselves, but in this other material

instead. Maybe. Or maybe DNA just isn't that important

for behavior in complex, sophisticated organisms like

humans. Maybe culture is more important.

Something to think about.

For an extended discussion of junk DNA and

its functions, see Junk DNA: A Journey Through

the Dark Matter of the Genome (2015) by Nessa
Carey (or the excerpts printed in Natural History
magazine, March, April, and May 2015). Carey also
wrote The Epigenetics Revolution: How Modern Biology
is Rewriting Our Understanding of Genetics, Disease, and
Inheritance (2012; also excerpted in Natural
History, April and May 2012).

In any event, for all the talk about the

genetic underpinnings of individual differences, as things

stand now, behavior geneticists don't have the foggiest

idea what they are.

"In the god-drenched eras of the past there

was a tendency to attribute a variety of everyday

phenomena to divine intervention, and each deity in a vast

pantheon was charged with responsibility for a specific

activity -- war, drunkenness, lust, and so on. 'How silly

and primitive that all was,' the writer Louis Menand has

observed. In our own period what Menand discerns as a

secular 'new polytheism' is based on genes -- the

alcoholism gene, the laziness gene, the schizophrenia

gene.

Now we explain things by reference to an

abbreviated SLC6A4 gene on chromosome 17q12, and feel much

superior for it. But there is not, if you think about it,

that much difference between saying 'The gods are angry'

and saying 'He has the gene for anger.' Both are ways of

attributing a matter of personal agency to some fateful

and mysterious impersonal power."

---Cullen Murphy in "The Path of

Brighteousness" (Atlantic Monthly, 11/03)

Embryological Development

What are the mechanisms of

embryological development? At one point, it was thought that

the individual possessed adult form from the very beginning --
that is, that the embryo was a kind of homunculus
(little man) that simply grew in size. This

view of development is obviously incorrect. But that didn't

stop people from seeing adult forms in embryos!

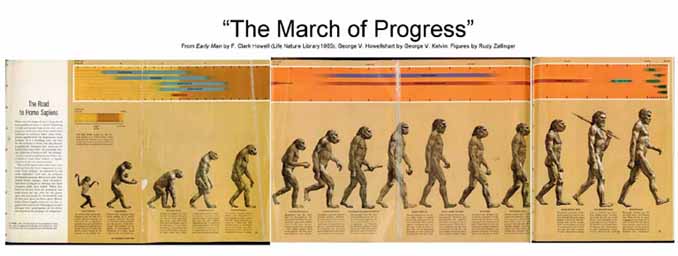

In the 19th century, with the adoption

of the theory of evolution, the homunculus view was gradually

replaced by the recapitulation view, based on

Haeckel's biogenetic law that

"Ontogeny recapitulates

phylogeny".

What Haeckel meant was that the

development of the individual replicates the stages of

evolution: that the juvenile stage of human development

repeats the adult stages of our evolutionary ancestors. Thus,

it was thought, the human embryo first looks like an adult

fish; later, it looks like adult amphibians, reptiles, birds,

mammals, and nonhuman primates. This view of development is

also incorrect, but it took a long time for people to figure

this out.

The current view of development is

based on von Baer's principle of differentiation.

According to this rule, development proceeds from the general

to the specific. In the early stages of its development, every

organism is homogeneous and coarsely structured. But it

carries the potential for later structure. In later stages of

development, the organism is heterogeneous and finely built --

it more closely represents actualized potential. Thus, the

human embryo doesn't look like an adult fish. But at some

point, human and fish embryos look very much alike. These

common structures later differentiate into fish and humans. A

good example of the differentiation principle is the

development of the human reproductive anatomy, which we'll

discuss later in the course.

Ontogeny and Phylogeny

For an engaging history of the debate between

recapitulation and differentiation views of development, see

Ontogeny and Phylogeny (1977) by S.J. Gould.

Neural Development in the Fetus, Infant, and Child

At the end of the second week of

gestation, the embryo is characterized by a primitive

streak which will develop into the spinal cord. By the

end of the fourth week, somites develop, which will

become the vertebrae surrounding the spinal cord -- the

characteristic that differentiates vertebrates from

invertebrates.

Bodily asymmetries seem to have their origins

in events occurring at an early stage of embryological

development. Studies of mouse embryos by Shigenori Nonaka

and his colleagues, published in 2002, implicate a structure

known as the node, which contains a number of cilia, or

hairlike structures. The motion of the cilia induces fluids

to move over the embryo from right to left. These fluids

contain hormones and other chemicals that control

development, and thus cause the heart to grow on the left,

and the liver and appendix on the right -- at least for

99.99% of people. In a rare condition known as situs

inversus, the position of the embryo is reversed, so

the fluids flow left to right, resulting in a reversal of

the relative positions of the internal organs -- heart on

the right, liver and appendix on the left. It is possible

that this process is responsible for inducing the

hemispheric asymmetries associated with cerebral

lateralization -- although something else must also be

involved, given that the incidence of left-handedness is

far greater than 0.01%.

- In the second month, the eye buds move to the

front of the head, and the limbs, fingers, and toes become

defined. The internal organs also begin to develop,

including the four-chambered heart -- a
characteristic that mammals share with birds (and crocodilians),
but not with other vertebrates. It's at this point that

the embryo changes status, and is called a fetus.

- The development of the nervous system begins in the

primitive streak of the embryo, which gradually forms an

open neural tube. The neural tube closes after 22

days, and brain development begins.

- In the 11th week of gestation the cerebral cortex

becomes clearly visible. The cortex continues to grow,

forming the folds and fissures that permit a very large

brain mass to fit into a relatively small brain case.

- In the 21st week of gestation synapses begin to

form. Synaptic transmission is the mechanism by which the

nervous system operates: there is no electrical activity

without synapses. So, before this time the fetal brain has

not really been functioning.

- In the 24th week myelinization begins. The myelin

sheath provides a kind of insulation on the axons of

neurons, and regulates the speed at which the neural

impulse travels down the axon from the cell body to the

terminal fibers.

All three processes -- cortical

development, synaptic development, and myelinization --

continue for the rest of fetal development, and even after

birth. In fact, myelinization is not complete until late in

childhood.

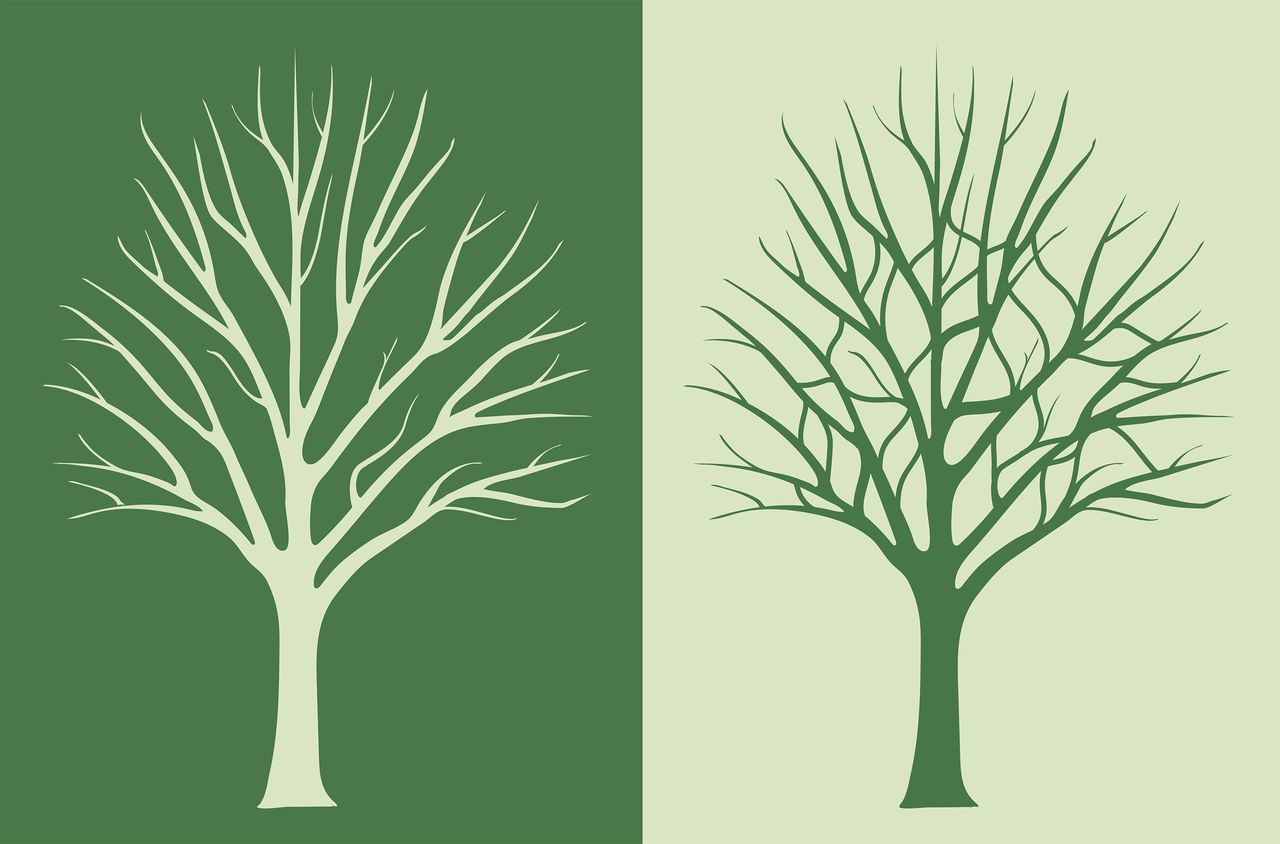

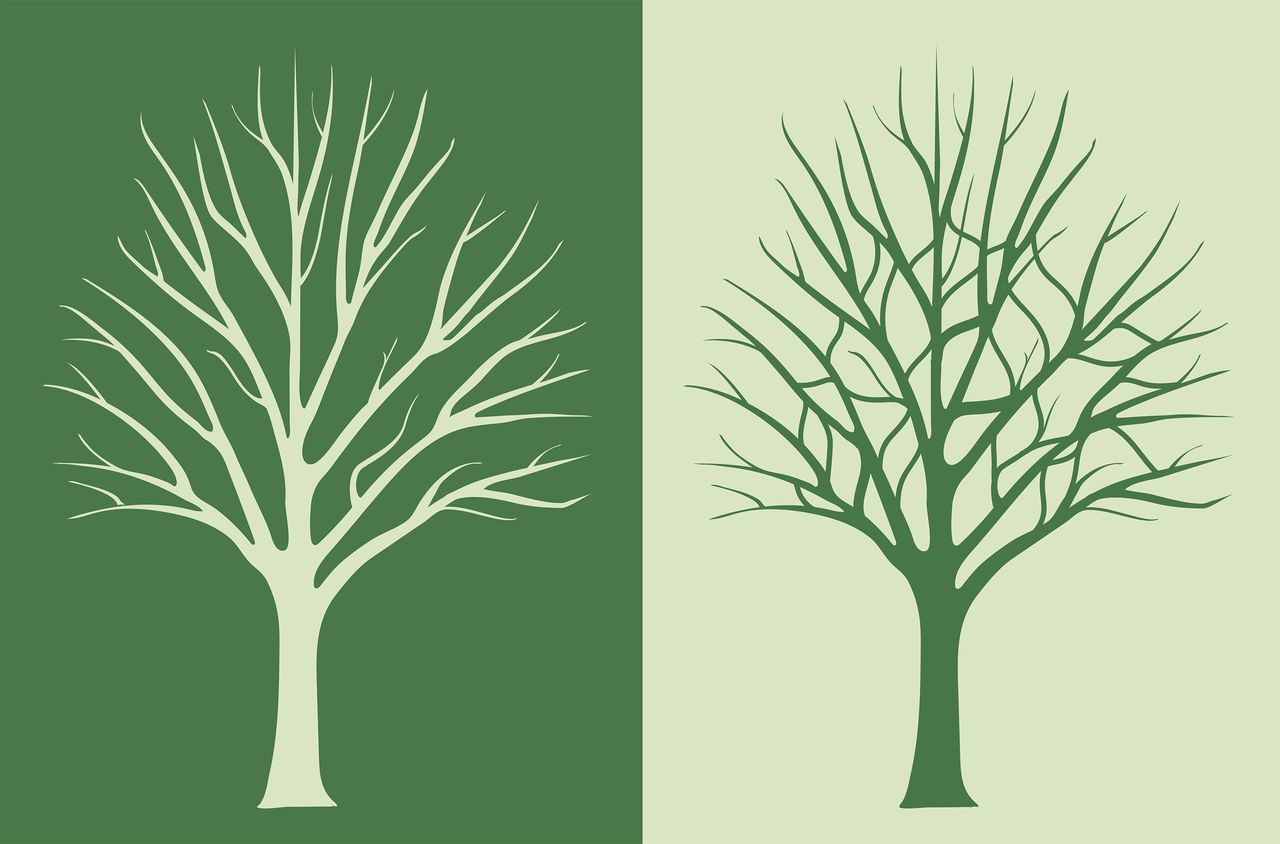

- As far as the cerebral cortex is concerned, we're

probably born with all the neurons we're going to get. The

major change postnatally is in the number, or the density,

of interconnections among neurons -- a process

called synaptogenesis (as opposed to neurogenesis),

by which the axons of presynaptic neurons increasingly

link to the dendrites of postsynaptic neurons. Viewed at

the neuronal level, the big effect of normal development

is the proliferation of neural interconnections, including

an increase in dendritic arborization (like a tree

sprouting branches) and the extension of axons (so as to

make contact with more dendrites).

- At the same time, but at a different rate, there is also

some pruning, or elimination, of synapses -- a

process by which neural connectivity is fine-tuned. So,

for example, early in development there is considerable

overlap in the projections of neurons from the two eyes

into the primary visual cortex; but after pruning, the two

eyes project largely to two quite different segments of

cortex, known as "ocular dominance columns". This pruning

can continue well into childhood and adolescence.

- Neurons die. Fortunately, we are born with a lot of

neurons, and unless neuronal death is accelerated by brain

damage or something like Alzheimer's disease, neurons die

relatively slowly. When neurons die, their connections

obviously disappear with them.

- The connections between neurons can also be strengthened

(or weakened) by learning. Think of long-term

potentiation. But LTP doesn't change the number of

neural interconnections. What changes is the likelihood of

synaptic transmission across a synapse that has already

been established.

- LTP is an example of a broader phenomenon called functional

plasticity.

- Violinists, who finger the strings with their left

hands, show a much larger cortical representation in that portion of
(right) somatosensory cortex that represents the fingers
of the left hand, compared to non-musicians.

- If one finger of a hand is amputated, that portion of

somatosensory cortex which would ordinarily receive

input from that finger obviously doesn't do so any

longer. But what can happen is that the somatosensory

cortex can reorganize itself, so that this portion of

the brain can now receive stimulation from fingers that

are adjacent to the amputated one.

- And if two fingers are sewn together, so that when one

moves the other one does also, the areas of

somatosensory cortex that would be devoted to each

finger will now overlap.

- In each case, though, the physical connections between

neurons -- the number of terminal fibers synapsing on

dendrites -- don't appear to change. Much as with LTP,

what changes is the likelihood of synaptic transmission

across synapses that have already been established.

- Finally, there's the problem of

neurogenesis.

Traditional neuroscientific doctrine has held that

new neurons can regenerate in the peripheral nervous

system (as, for example, when a severed limb has

been reattached), but not in the central nervous

system (as, for example, paraplegia following

spinal cord injury). However, increasing

evidence has been obtained for neurogenesis in the

central nervous system as well. This

research is highly controversial (though I,

personally, am prepared to believe it is true),

but if confirmed would provide the basis for

experimental "stem cell" therapies for

spinal-cord injuries (think of Christopher

Reeve). If naturally occurring

neurogenesis occurs at a rate greater than the

rate of natural neuronal death, and if these new

neurons could actually be integrated into

pre-existing neural networks, that would supply

yet an additional mechanism for a net increase

in new physical interconnections between neurons

-- but

so far the evidence in both respects is

ambiguous.

Studies of premature infants indicate

that an EEG signal can be recorded at about 25 weeks of

gestation. At this point, there is evidence of the first

organized electrical activity in the brain. Thus, somewhere

between the 6th and 8th month of gestation the fetal brain

becomes recognizably human. There are many of the folds and

fissures that characterize the human cerebral cortex. And

there is some evidence for hemispheric specialization:

premature infants respond more to speech presented to the left

hemisphere, and more to music presented to the right. At this

point, in the 3rd trimester of gestation, the brain clearly

differentiates humans from non-humans.

Interestingly, survivability takes a

big jump at this point as well. If born before about 24 weeks

of gestation, the infant has little chance of survival, and

then only with artificial life supports; if born after 26

weeks, the chances of survival are very good. If born at this

point, the human neonate clearly has human physical

characteristics, and human mental capacities. In other words,

by some accounts, by this point the fetus arguably has personhood,

because it has actualized its potential to become human. At

this point, it makes sense to begin to talk about personality

-- how the person actualizes his or her potential for

individuality.

What are the implications of fetal

neural development for the mind and behavior of the

fetus? Here are some things we know.

First, the fetus begins to move in the uterus as early as

seven weeks of gestation. While some of this is random,

other movements seem to be coordinated. For example, the

fetus will stick its hands and feet in its mouth, but it will

also open its mouth before bringing the limb toward it.

- Taste buds form on the fetal tongue by the 15th week,

and olfactory cells by the 24th week. Newborns

prefer flavors and odors that they were exposed to in the

womb, which suggests that some sensory-perceptual learning

is possible by then.

- By about the 24th-27th week, fetuses can pick up on

auditory stimulation. Again, newborns respond to

sounds and rhythms, including individual syllables and

words, that they were exposed to in utero --

another example of fetal learning.

- The fetus's eyes open about the 28th week of gestation,

but there is no evidence that it "sees" anything -- it's

pretty dark in there, although some light does filter

through the mother's abdominal wall, influencing the

development of neurons and blood vessels in the

eye.

The importance of early experience in

neural development cannot be overemphasized.

Socioeconomic status, nurturance at age 4 (as opposed to

neglect, even if benign), the number of words spoken to the

infant, and other environmental factors all are correlated

with various measures of brain development.

The Facts of Life

Much of this information has been drawn from

The Facts of Life: Science and the Abortion Controversy

(1992) by H.J. Morowitz and J.S. Trefil. Advances in medical

technology may make it possible for fetuses to live outside

the womb even at a very early stage of gestation, but no

advance in medical technology will change the basic course

of fetal development, as outlined here and presented in

greater detail in the book. See also Ourselves Unborn: A

History of the Fetus in Modern America by Sara Dubow

(2010).

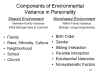

Nature and Nurture in Personality Development

So where does personality come from? We

have already seen part of the answer: Personality is not a

given, fixed once and for all time, whether by the genes or

through early experience. Rather, personality emerges out of

the interaction between the person and the environment, and is

continuously constructed and reconstructed through social

interaction. A major theme of this interactionist view of

personality is that the person is a part of his or her own

environment, shaping the environment through evocation,

selection, behavioral manipulation, and cognitive

transformation. In the same way, development is not just

something that happens to the individual. Instead, the

individual is an active force in his or her own development.

The Developmental Corollary to the Doctrine of Interactionism

Just as the person is a part of his or her

own environment, the child is an agent of his or her own

development.

The development of the individual begins with his or her genetic
endowment, but genes do not act in isolation. The organism's
genotype, or biological potential (sometimes referred to as
the individual's "genetic blueprint"), interacts with the
environment to produce the organism's phenotype, or what the
organism actually "looks like" -- psychologically as well as
morphologically.


The individual's phenotype

is his or her genotype actualized within a particular

environmental context:

- Two individuals can have different genotypes but the

same phenotypes. For example, given two brown-eyed

individuals, one person might have two dominant genes for

brown eyes (blue eyes are recessive), while the other

might have one dominant gene for brown eyes, and one

recessive gene for blue eyes.

- Two individuals can have the same genotype but different

phenotypes. For example, two individuals might both
possess two dominant genes for dimples, but one
might have cosmetic surgery to remove them while the other
does not.

More broadly, it is now known that

genes are turned "on" and "off" by environmental events.

- Sometimes, the "environment" is body tissue immediately

surrounding the gene. Except for sperm and egg cells,

every cell in the body contains the same genes. But the

gene that controls the production of insulin only does so

when its surrounding cell is located in the pancreas. The

same gene, in a cell located in the heart, doesn't produce

insulin (though it may well do something else). This fact

is the basis of gene therapy: a gene artificially inserted

into one part of the body will produce a specific set of

proteins that may well repair some deficiency, while the

same gene inserted into another part of the body may not

have any effect at all.

- Sometimes, the "environment" is the world outside the

organism. A gene known as BDNF (brain-derived neurotrophic

factor), which plays an important role in the development of the

visual cortex, is "turned on" by neural signals resulting

from exposure to light. Infant mice exposed to light

develop normal visual function, but genetically identical

mice raised in darkness are blind.
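The idea that the same gene is present in every cell but expressed only in certain contexts can be caricatured as a lookup from each gene to a rule about its surroundings. The Python sketch below is a deliberately crude toy, not a model of real gene regulation. Its two rules simply restate the insulin and BDNF examples above, and the `Context` fields and tissue labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set


@dataclass(frozen=True)
class Context:
    """Hypothetical description of a cell's surroundings."""
    tissue: str
    light_exposure: bool = False


# Toy regulatory rules restating the two examples in the text.
# "INS" is the insulin gene; the BDNF rule covers only the light-driven
# visual-cortex case described above, not BDNF's many other roles.
RULES: Dict[str, Callable[[Context], bool]] = {
    "INS": lambda ctx: ctx.tissue == "pancreas",
    "BDNF": lambda ctx: ctx.tissue == "visual_cortex" and ctx.light_exposure,
}

# Every (non-germ) cell carries the same genes; only expression differs.
GENOME = frozenset(RULES)


def expressed_genes(ctx: Context) -> Set[str]:
    """Return the genes in GENOME whose toy rule is satisfied in this context."""
    return {gene for gene in GENOME if RULES[gene](ctx)}


if __name__ == "__main__":
    for ctx in (
        Context("pancreas"),
        Context("heart"),
        Context("visual_cortex", light_exposure=True),
        Context("visual_cortex", light_exposure=False),
    ):
        print(ctx, sorted(expressed_genes(ctx)))
```

The point of the design is that `GENOME` is identical for every context. Only the environment passed in decides which genes are "on".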

Nature via Nurture?

For some genetic biologists, facts like these

resolve the nature-nurture debate: because genes respond to

experience, "nature" exerts its effects via "nurture". This

is the argument of Nature via Nurture: Genes,

Experience, and What Makes Us Human (2003) by Matt

Ridley. As Ridley writes:

Genes are not puppet masters or blueprints.

Nor are they just the carriers of heredity. They are

active during life; they switch on and off; they respond

to the environment. They may direct the construction of

the body and brain in the womb, but then they set about

dismantling and rebuilding what they have made almost at

once -- in response to experience (quoted by H. Allen Orr,
"What's Not in Your Genes", New York Review of Books,

08/14/03).

The point is correct so far as it goes: genes

don't act in isolation, but rather in interaction with the

environment -- whether that is the environment of the

pancreas or the environment of a lighted room.

But this doesn't solve the nature-nurture

debate from a psychological point of view, because

psychologists are not particularly interested in the

physical environment. Or, put more precisely, we are

interested in the effects of the physical environment, but

we are even more interested in the organism's mental

representation of the environment -- the meaning that

we give to environmental events by virtue of such cognitive

processes as perception, thought, and language.

- When Ridley talks about experience, he generally means

the physical features of an environmental event.

- When psychologists talk about experience, they generally

mean the semantic features of an environmental

event -- how that event fits into a pattern of beliefs,

and arouses feelings and goals.

As Orr notes, Ridley's resolution of the nature-nurture

argument entails a thoroughgoing reductionism, in which the

"environment" is reduced to physical events (such as the

presence of light) interacting with physical entities (such as

the BDNF gene). But psychology cannot remain psychology and

participate in such a reductionist enterprise, because its

preferred mode of explanation is at the level of the

individual's mental state.

For a psychologist, "nurture" means the meaning

of an organism's experiences. And for a psychologist, the

nature-nurture argument has to be resolved in a manner that

preserves meaning intact.

The point is that genes

act jointly with environments to produce phenotypes. These

environments fall into three broad categories:

- prenatal, meaning the intrauterine environment of

the fetus during gestation;

- perinatal, referring to environmental conditions

surrounding the time of birth, including events occurring

during labor and immediately after parturition; and

- postnatal, including everything that occurs after

birth, throughout the course of the individual's life.

In light of the relation between

genotype and phenotype, the question about "nature or nurture"

is not whether some physical, mental, or behavioral trait is

inherited or acquired. Better questions are:

- What is the relative importance of nature and nurture?

- Or, even better: how do nature and nurture interact?
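A toy model shows why "how do they interact?" is a better question than "how much is due to each?" The sketch below is purely illustrative. The genotype scores, the environment scores, and the two combination rules (additive and multiplicative) are hypothetical assumptions, not an empirical model of any trait.

```python
"""Toy illustration of a gene-environment interaction (hypothetical model)."""
from collections.abc import Callable


def additive_phenotype(genetic_potential: float, environmental_quality: float) -> float:
    # Hypothetical additive rule: genes and environment contribute independently.
    return genetic_potential + environmental_quality


def interactive_phenotype(genetic_potential: float, environmental_quality: float) -> float:
    # Hypothetical multiplicative rule: the effect of one factor depends on the other.
    return genetic_potential * environmental_quality


def environment_effect(
    rule: Callable[[float, float], float],
    genetic_potential: float,
    poor: float,
    rich: float,
) -> float:
    # Gain in phenotype when one genotype moves from a poor to a rich environment.
    return rule(genetic_potential, rich) - rule(genetic_potential, poor)


if __name__ == "__main__":
    genotypes = {"genotype A": 2.0, "genotype B": 5.0}  # hypothetical scores
    poor_env, rich_env = 1.0, 3.0                         # hypothetical scores
    for label, g in genotypes.items():
        add_gain = environment_effect(additive_phenotype, g, poor_env, rich_env)
        int_gain = environment_effect(interactive_phenotype, g, poor_env, rich_env)
        print(f"{label}: additive gain = {add_gain}, interactive gain = {int_gain}")
```

Under the additive rule both genotypes gain 2.0 from the richer environment; under the multiplicative rule the gains are 4.0 and 10.0. When the effect of the environment depends on the genotype, there is no single answer to how much of the trait is "genetic" and how much "environmental".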

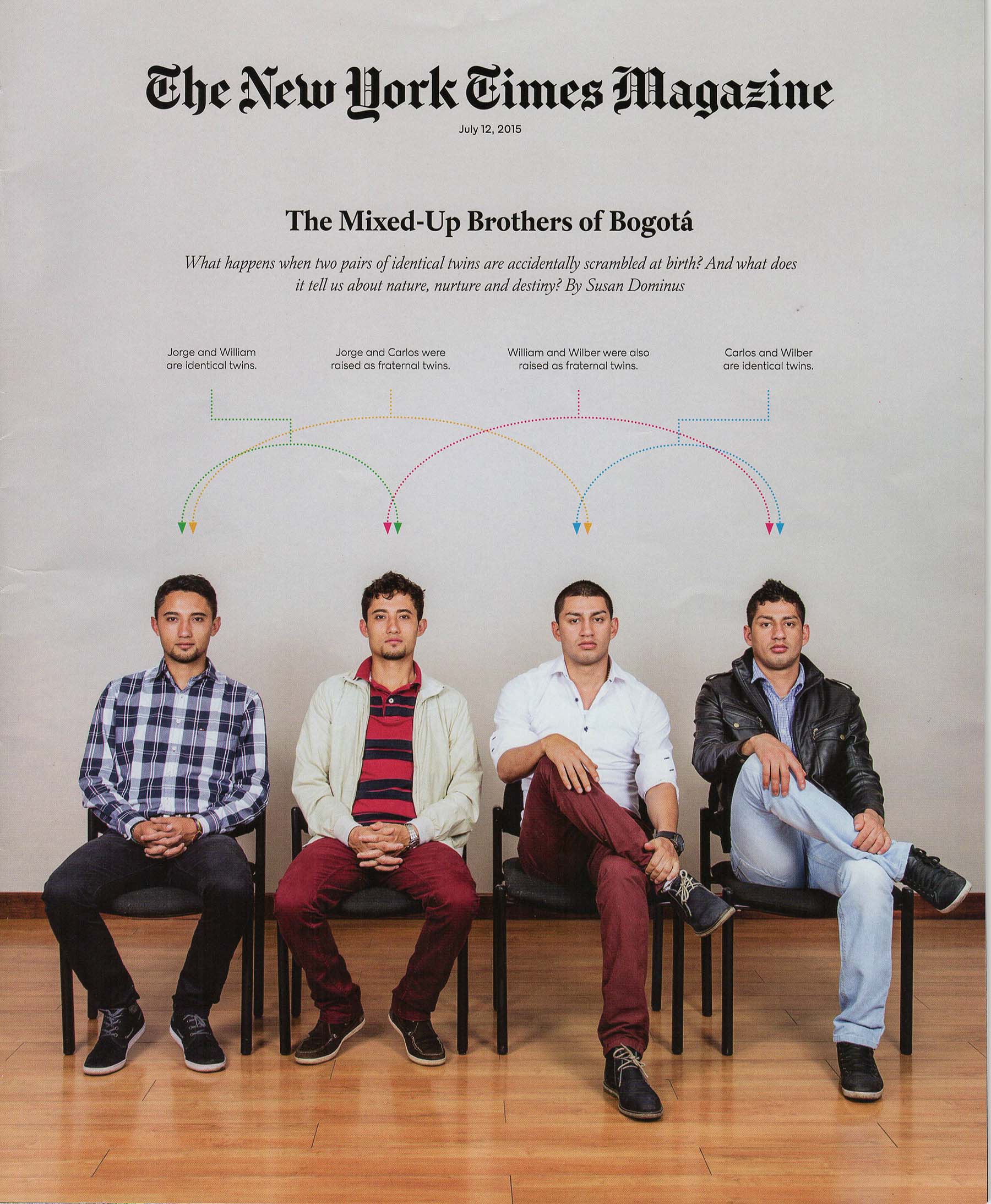

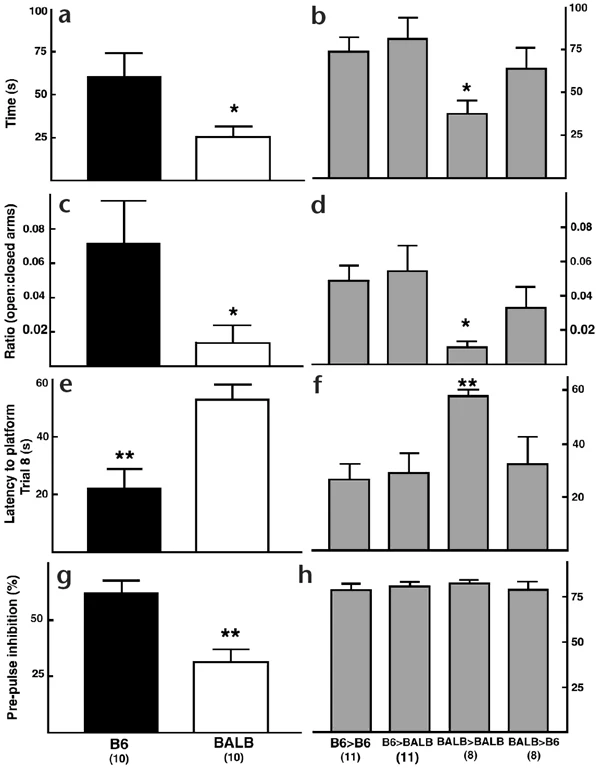

The Twin-Study Method

Many basic questions of nature and nurture in personality can be addressed using the techniques of behavior genetics, which analyze the origins of psychological characteristics. Perhaps the most interesting outcome of these studies is that, although they were initially intended to shed light on the role of genetic factors in personality development, they reveal a clear role for the environment as well as a clear role for genetic determinants.

The most popular method in

behavior genetics is the twin study -- which, as its name

implies, compares two kinds of twins in terms of similarity in

personality:

- Monozygotic (MZ or identical) twins are

the product of a single egg, fertilized by a single sperm, that subsequently splits into two embryos --

thus yielding two individuals who are genetically

identical.

- Actually, MZ twins aren't precisely identical.

Research by Dumanski and Bruder (Am. J. Hum. Genet., 2008) indicates that even MZ twins may differ in the number of genes, or in the number of copies of particular genes. Moreover, failures to repair breaks in DNA can occur, resulting in further genetic differences that emerge over the individuals' lifetimes. This discovery may affect estimates of the environmental contribution to variance.

But for most practical purposes, the formulas discussed

below provide a reasonable first approximation.

- Dizygotic (DZ or fraternal) twins occur

when two different eggs are fertilized by two different

sperm -- thus yielding individuals who have only about 50%

of their genes in common.

A qualification is in order. A vast

proportion of the human genome, about 90%, is the same for

all human individuals -- it's what makes us human, as

opposed to chimpanzees or some other kind of organism. Only

about 10% of the human genome actually varies from one

individual to another. So, when we speak of DZ twins having

"50%" of their genes in common, we really mean that they

share 50% of that 10%. And when we speak of unrelated

individuals having "no" genes in common, we really mean that

they don't share any more of that 10% than we'd expect by

chance.
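The qualification amounts to simple arithmetic. The sketch below uses only the rough figures given above (about 90% of the genome invariant across humans, about 10% variable) and counts sharing beyond chance only; the constant and function names are illustrative, not standard terminology.

```python
"""Arithmetic behind the 'shared genes' qualification (figures from the text)."""

# Rough figures from the text: ~90% of the genome is the same for all humans,
# and ~10% varies from one individual to another.
INVARIANT_PCT = 90.0
VARIABLE_PCT = 10.0

# Fraction of the *variable* portion shared beyond chance, by relationship.
SHARED_FRACTION_OF_VARIABLE = {
    "MZ twins": 1.0,
    "DZ twins": 0.5,
    "unrelated": 0.0,
}


def total_shared_pct(fraction_of_variable: float) -> float:
    """Percent of the whole genome shared, ignoring chance matches in the variable part."""
    return INVARIANT_PCT + fraction_of_variable * VARIABLE_PCT


if __name__ == "__main__":
    for relation, fraction in SHARED_FRACTION_OF_VARIABLE.items():
        print(f"{relation}: {total_shared_pct(fraction):.1f}% of the whole genome")
```

On these figures, DZ twins share about 95% of the whole genome and unrelated individuals about 90% (plus chance matches); the "50%" figure describes only the variable 10%.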

Of course, you could compare triplets,

quadruplets, and the like as well, but twins are much more

convenient because they occur much more frequently in the

population. Whether a study uses twins, triplets, or larger sets of multiples, the logic is simple: to the extent that a trait

is inherited, we would expect MZ twins to be more alike on

that trait than DZ twins.

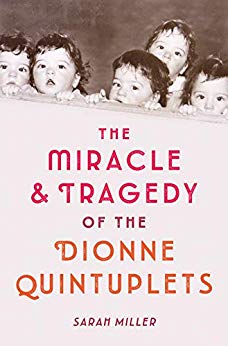

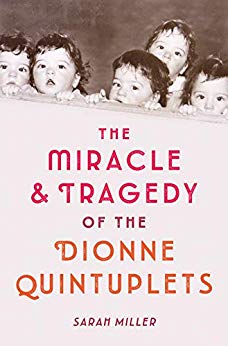

Born in Ontario

in 1934, the Dionne Quintuplets (all girls, all genetically

identical) were the first quintuplets to live past

infancy. Their parents already had four children, and

the birth of five more was completely unexpected. The

family's financial straits led their parents to sign over

custody of the girls to the Canadian Red Cross, which built

a special hospital and "observatory" for them across the

street from their family home; their parents also agreed to

display them at the Chicago World's Fair. "Quintland"

actually became a tourist attraction, complete with bumper

stickers. All of this was in an attempt to protect the

children -- and of course it misfired badly, creating, among

other things, a sharp division between the quints on the one

hand, and their parents and four older siblings on the

other. Their story has been told in many books: the

most recent, The Miracle and Tragedy of the Dionne

Quintuplets by Sarah Miller (2019), traces their

lives. Spoiler alert: the tragedy is that they were

exploited anyway; the miracle is that each girl grew up with

her own individual personality and interests.

In 2009, the

Crouch quadruplets, Ray, Kenny, Carol, and Martina, all

received offers of early admission to Yale ("Boola Boola,

Boola Boola: Yale Says Yes, 4 Times" by Jacques Steinberg, New

York Times, 12/19/2009). Now you don't have to

be twins to share such outcomes (and the Crouch quadruplets

obviously aren't identical quadruplets

anyway!). Consider The 5 Browns, sibling pianists from

Utah, each of whom was admitted in turn to study at Juilliard. How much of these outcomes is due to shared

genetic potential? How much to shared

environment? How much to luck and chance? That's

why we do twin and family studies.

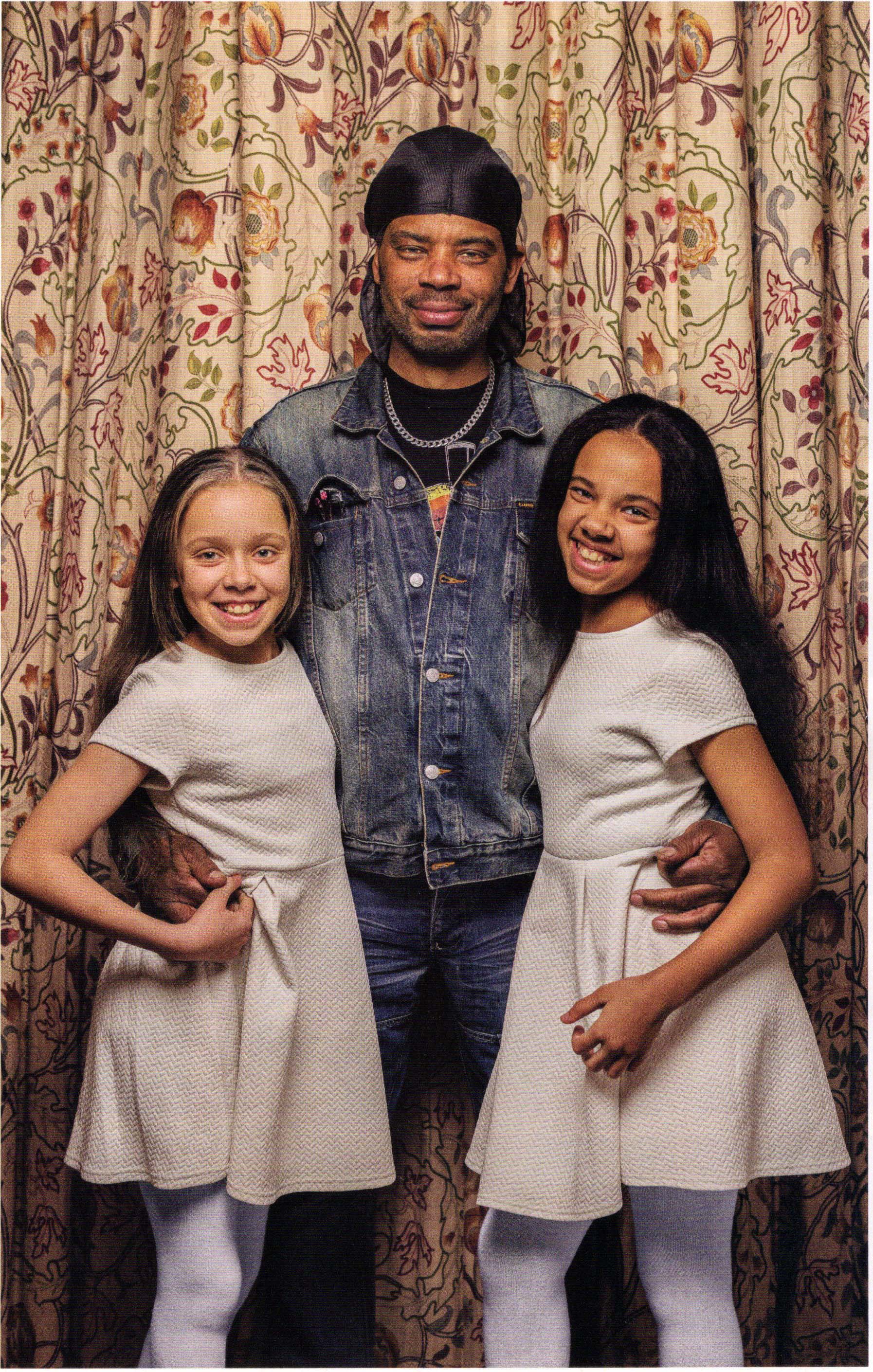

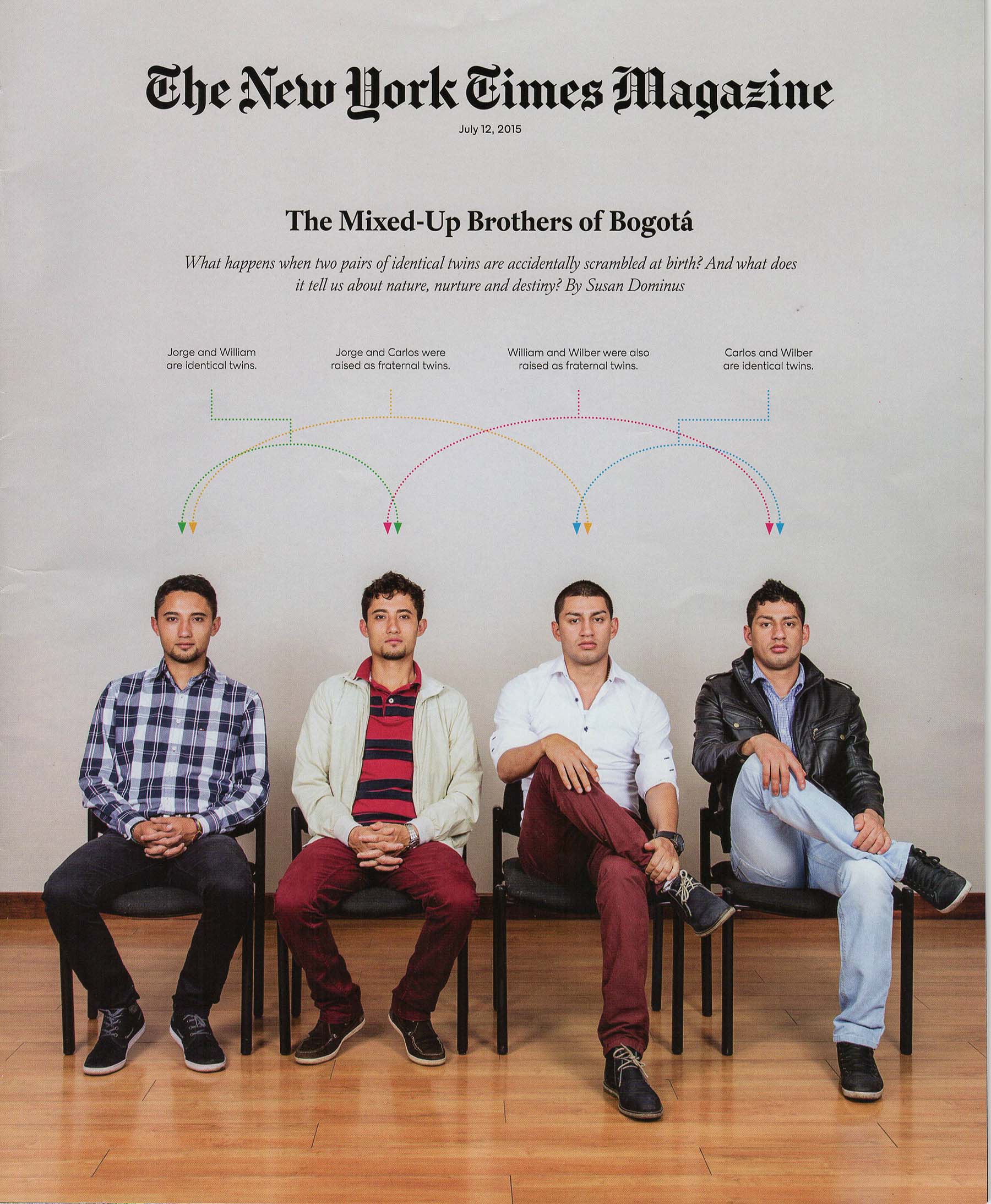

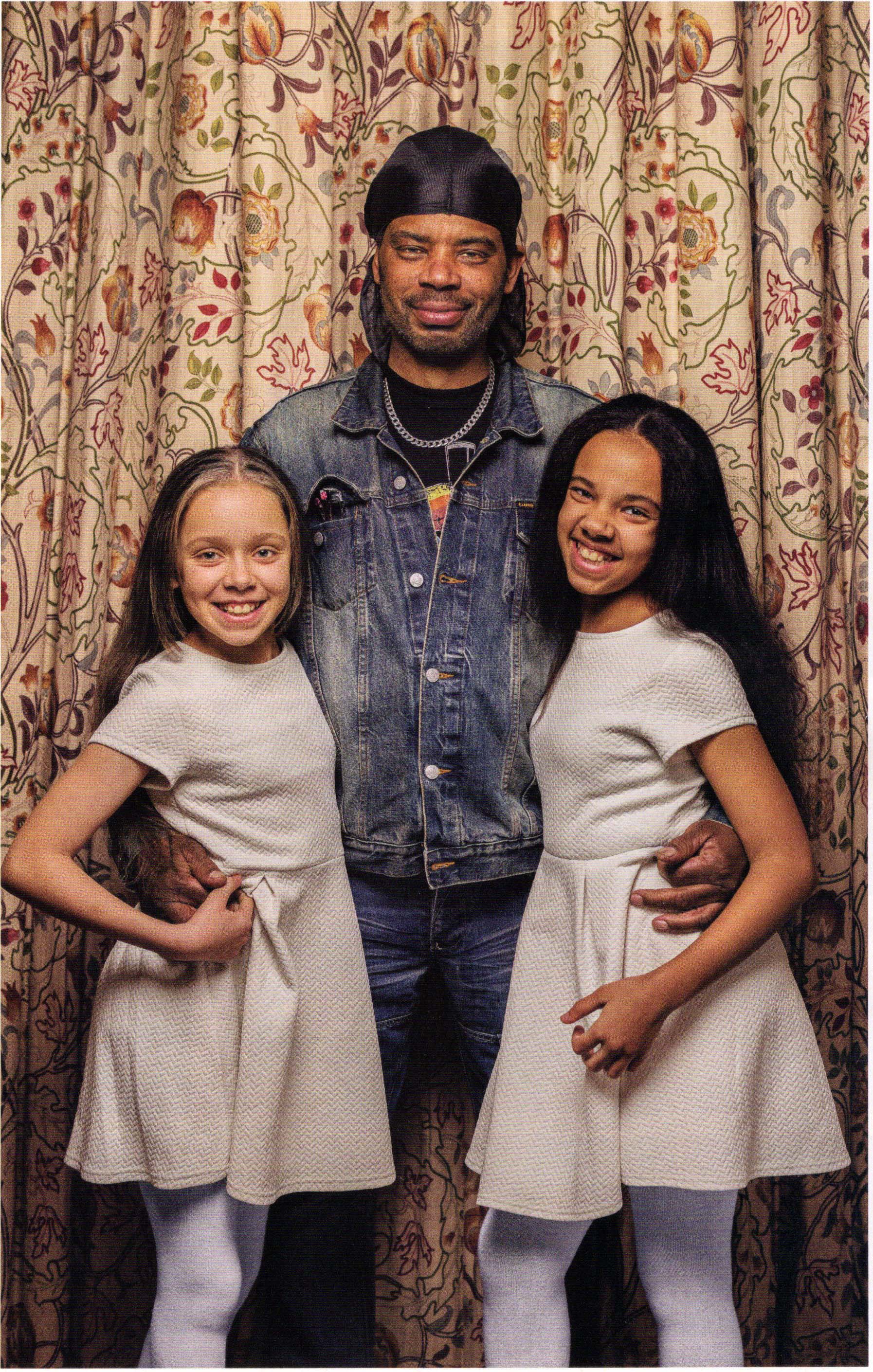

The

April 2018 issue of National Geographic magazine,

devoted to race, featured Marcia and Millie Biggs,

11-year-old fraternal twin sisters living in England (shown

here with their father, Michael; you'll see their photo from

the magazine cover later in these lectures). Their

mother, Amanda Wanklin, calls them her "one in a million

miracle". The girls' differences in physical

appearance are due entirely to the vicissitudes of genetic

chance. But while Millie is a "girlie" girl, Marcia is

more of a "tomboy" (those are Marcia's words, not

mine). Where those psychological differences come from

is a much more complex, and much more interesting, story --

as we'll see in what follows.

Twins in space!

Einstein's theory of special relativity predicts that a twin

traveling through space at high speed will age less rapidly

than his or her earth-bound counterpart. That

hypothesis hasn't been tested yet, because we don't have the

ability to put a twin in a spacecraft that travels at close

to light-speed. But still, there are reasons to think

that space travel, including exposure to microgravity and

ionizing radiation, not to mention stress and the absence of a normal day-night cycle, might have substantial physiological and

psychological effects. Taking advantage of the

presence of two identical twins, Scott and Mark Kelly, on

the roster of American astronauts, the National Aeronautics

and Space Administration (NASA) subjected the pair to a

battery of physiological and psychological tests before,