Biological Bases of Mind and Behavior

In

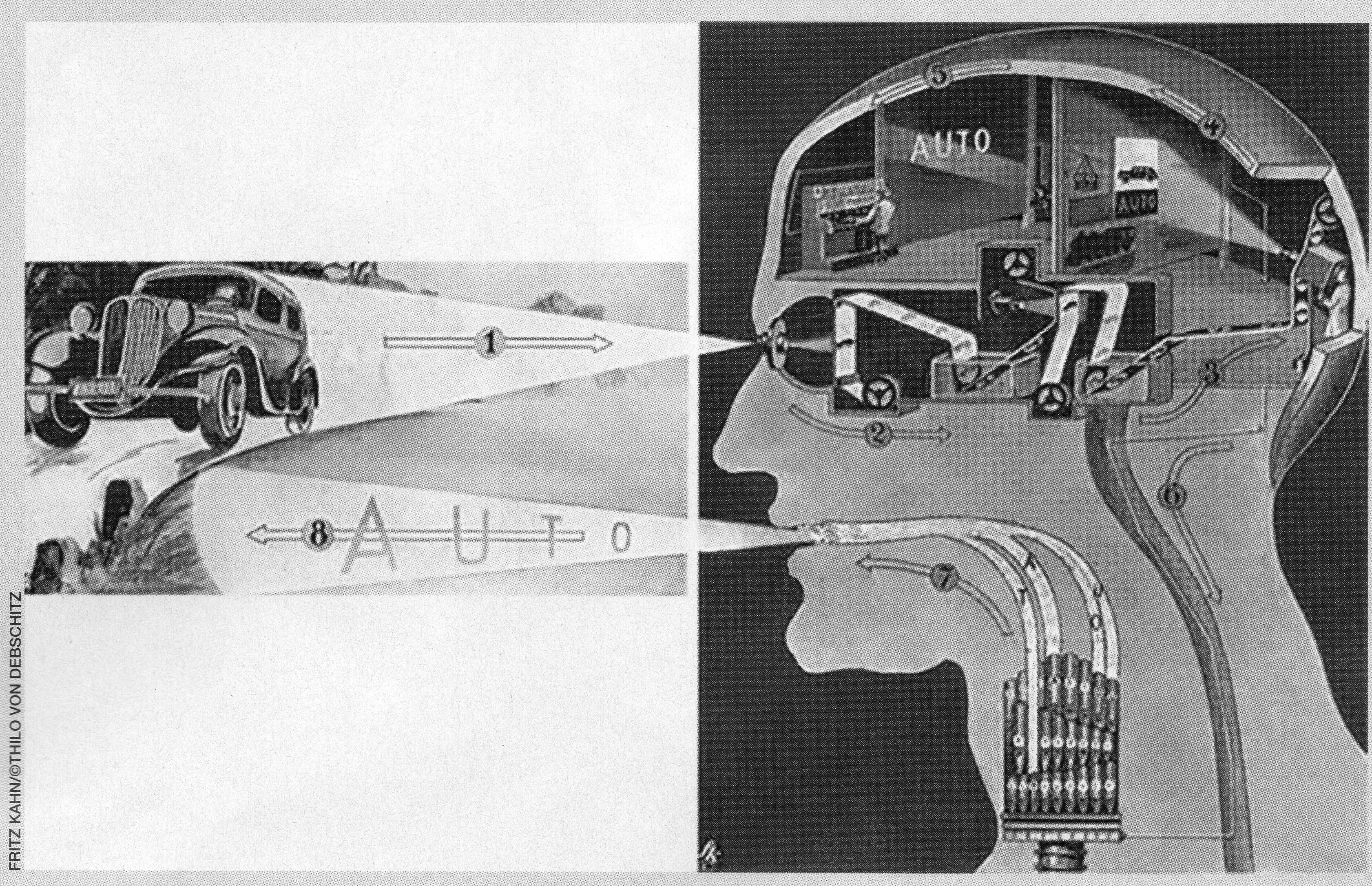

the 19th century, William James (1842-1910) defined

psychology as the science of mental life, attempting to

understand the cognitive, emotional, and

In

the 19th century, William James (1842-1910) defined

psychology as the science of mental life, attempting to

understand the cognitive, emotional, and

motivational processes that underlie human experience,

thought, and action. But even in the earliest days of

scientific psychology, there was a lively debate about how

to go about studying the mind. Accordingly, there arose the

first of several "schools" of psychology,

structuralism and functionalism.

Structuralism and Functionalism

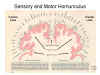

One point of view, known as structuralism, tried to study mind in the abstract. It argued that complex mental states were assembled from elementary sensations, images, and feelings. Structuralists used a technique known as introspection (literally, "looking into" one's own mind) to determine, for example, the elements of the experience of a particular color or taste. The major proponents of structuralism were:

- Wilhelm Wundt (1832-1920), a German physician and physiologist who became interested in philosophical and psychological problems. Wundt wrote a groundbreaking textbook, Foundations of Physiological Psychology (1873-1874), and established the first psychology laboratory in 1875, at the University of Leipzig (the first American laboratory was established by G. Stanley Hall, a student of James's, at Johns Hopkins University in 1883). For that reason, he is often called the founder of scientific psychology (see A.L. Blumenthal, "A Re-Appraisal of Wilhelm Wundt", American Psychologist, 1975). Despite his training in medicine and physiology, Wundt believed that psychology and physiology were complementary disciplines. He thought there was a clear and obvious connection between them -- for example, sensation is mediated by the sense organs -- but the former was not to be reduced to the latter.

- Edward B. Titchener (1867-1927), one of several American students of Wundt. With Kulpe, another student of Wundt's, Titchener defined psychology as the study of the facts of experience as dependent on the experiencing individual -- in contrast to physics, which in their view studied the same facts independent of those who experience them.

- Edwin G. Boring, Titchener's student at Cornell, built his career at Harvard as a historian of pre-scientific "philosophical" and early scientific psychology. A beloved teacher of the introductory psychology course, Boring also hosted "Psychology 1 with E.G. Boring" on National Educational Television, one of the first educational television courses offered on the predecessor to our modern Public Broadcasting Service.

Structuralism was beset by a number of problems, especially the unreliability and other limitations of introspection, but the structuralists did contribute to our understanding of the basic qualities of sensory experience (see Boring's The Physical Basis of Consciousness, 1927).

Another point of view, known as functionalism, was skeptical of the structuralist claim that we can understand mind in the abstract. Based on Charles Darwin's (1809-1882) theory of evolution, which argued that biological forms are adapted to their use, the functionalists focused instead on what the mind does, and how it works. While the structuralists emphasized the analysis of complex mental contents into their constituent elements, the functionalists were more interested in mental operations and their behavioral consequences. Prominent functionalists were:

- William James, the most important American philosopher of the 19th century, who taught the first course on psychology at Harvard. James's seminal textbook, Principles of Psychology (1890), is still widely and profitably read by new generations of psychologists. True to his philosophical position of pragmatism, James placed great emphasis on mind in action, as exemplified by habits and adaptive behavior.

- John Dewey (1859-1952), now best remembered for his theories of "progressive" education, who founded the famous Laboratory School at the University of Chicago.

- James Rowland Angell (1869-1949), who was both Dewey's student (at Michigan) and James's student at Harvard, and who rejoined Dewey after the latter moved to the University of Chicago; later Angell was president of Yale University, where he established the Institute of Human Relations, a pioneering center for the interdisciplinary study of human behavior. In contrast to Titchener, who wanted to keep psychology a "pure" science, Angell argued that basic and applied research should go forward together.

Psychological functionalism is often called "Chicago functionalism", because its intellectual base was at the University of Chicago, where both Dewey and Angell were on the faculty (functionalism also prevailed at Columbia University). It is to be distinguished from the functionalist theories of mind associated with some modern approaches to artificial intelligence (e.g., the work of Daniel Dennett, a philosopher at Tufts University), which describe mental processes in terms of the logical and computational functions that relate sensory inputs to behavioral outputs.

The functionalist point of view can be summarized as follows:

- Operations over content. Whereas structuralism attempted to analyze the contents of the mind into their elementary constituents, functionalism attempted to understand mental operations -- that is, how the mind works. It's this sense of functions as operations that gives functionalism its name.

- Adaptive value of mind. Functionalists assume that the mind evolved to serve a biological purpose -- specifically, to aid the organism's adaptation to its environment. Thus, functionalists are interested in what James called (in the Principles) "the relationship of mind to other things" -- how the mind represents the objects and events in the environment. Functionalism also laid the basis for the application of psychological knowledge to the promotion of human welfare.

- Individual differences. For Wundt and other structuralists, it didn't matter who the observer was: so long as observers were properly trained, they were interchangeable. But the functionalists, with their roots in Darwin's theory of natural selection, were interested in variation.

- Mind in context. From a functionalist point of view, the mind essentially mediates between the environment and the organism. Therefore, the functionalists were concerned with the relations between internal mental states and processes and the states and processes in the internal physical environment (i.e., the organism) on the one hand, and the external social environment (i.e., the real world) on the other.

- Mind in action. The evolutionary function of the mind is to guide action, freeing adaptive behavior from control by involuntary reflexes, taxes, and instincts.

- Mind in body. Because the mind is what the brain does, functionalists assumed that understanding the nervous system, and related bodily systems, would be helpful in understanding the workings of the mind.

In these lectures, we pick up this last point, by examining the biological foundations of our mental lives in the brain and the rest of the nervous system.

For an interactive tour of the brain and behavior, see "The Brain from Top to Bottom", a website developed by Bruno Dubuc of McGill University and the Canadian Institute of Neurosciences, Mental Health, and Addiction.

The "Neuron Doctrine"

Well into the 17th century, following Descartes' arguments for dualism, the mind (or the soul) was usually considered to be composed of an immaterial substance. However, this view began to change with the explosion of knowledge of anatomy and physiology that occurred, largely in England, in the latter decades of that century. Among the principal players in this revolution were the "virtuosi" organized by the physician Thomas Willis (1621-1675) into the Oxford Experimental Philosophy Club (Willis later became Professor of Natural Philosophy at Oxford). Among the members of Willis's "club" were William Harvey (1578-1657), who showed how the heart functioned to circulate blood through the body; Robert Hooke (1635-1703), who identified the cell as the smallest unit in living matter; Robert Boyle (1627-1691), the father of modern chemistry; and Christopher Wren (1632-1723), the architect who rebuilt London's churches after the Great Fire of 1666 (he also illustrated many of the virtuosi's books).

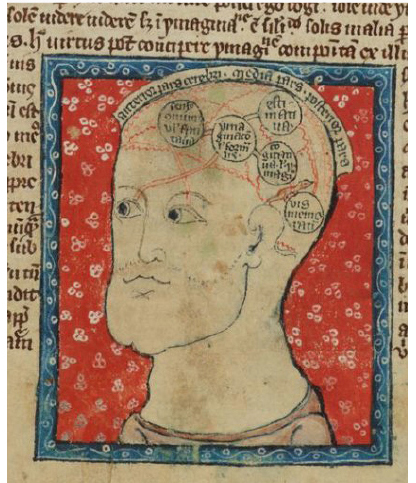

For his part, Willis described the network of blood vessels covering the brain, proposed that various parts of the brain were specialized for particular functions, and concluded that the ventricles of the brain were not functional. Most importantly, he developed the neuron doctrine -- the view that the network of nerves that connect various parts of the brain with each other and with other tissues and organs permitted the brain to control the body (knowing nothing about electricity, Willis thought that this communication was achieved by the transmission of "animal spirits"). Still under the influence of Cartesian dualism, Willis believed that the mind and the brain were separate, though he asserted that the immaterial, rational mind was housed in a material brain that performed most of its functions by mechanical principles.

But how do the nerves connect? Here's where another version of the neuron doctrine comes in. According to the reticular theory, neurons were connected directly to one another, like a wire or a pipe, forming a kind of net (reticulum means "net" in Latin). That was, basically, Willis's view.

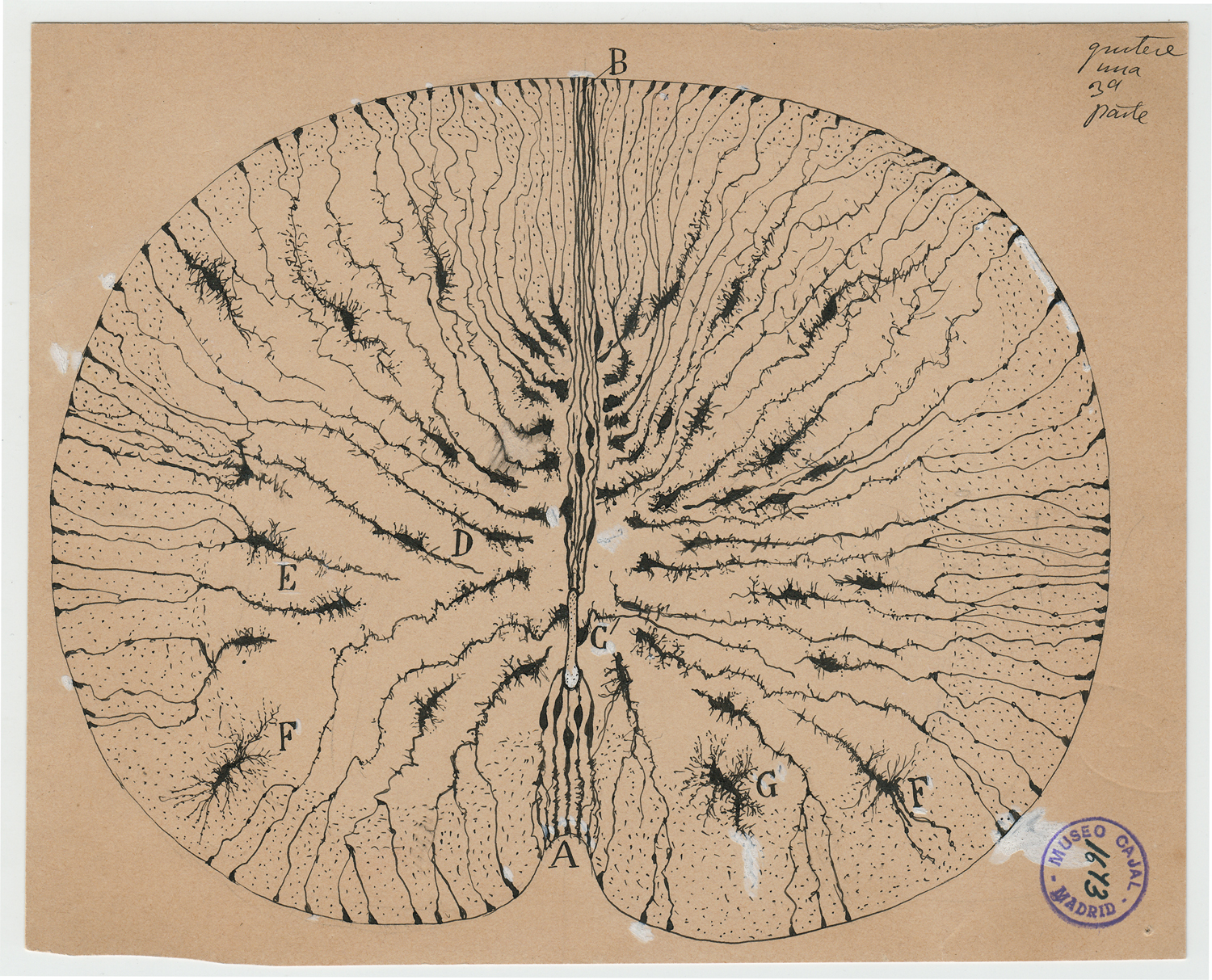

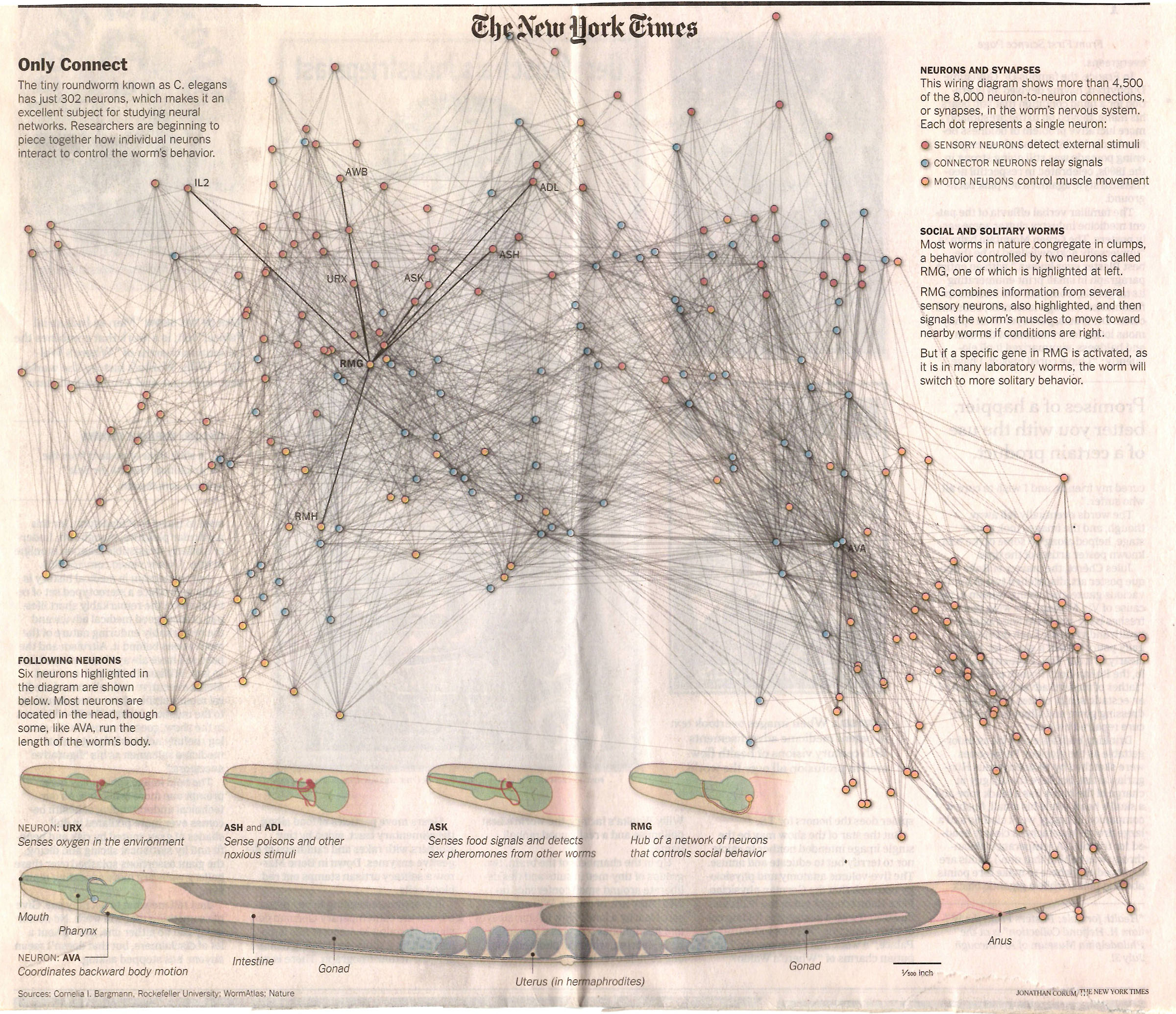

But Santiago Ramon y Cajal, a Spanish neurologist and neuroanatomist, proved by his careful microscopic analyses (and beautiful drawings of what he saw) that neurons were separated by the synaptic gap. In his research, Cajal discovered that the nervous system was not a continuous tangle of fibers, as Willis thought, but rather was composed of individual cells, now called "neurons". For this reason, he is generally regarded as the "father" of neuroscience. Cajal (at the University of Madrid) shared the 1906 Nobel Prize in Physiology or Medicine with Camillo Golgi, a histologist at the University of Pavia, in Italy, who developed a staining method that allowed individual cells to be viewed (and made Cajal's discovery possible). The irony: Golgi supported the reticular theory, and Cajal used Golgi's technique to prove him wrong; but Cajal couldn't have done his work without Golgi's technique, so it's fitting that they shared the Prize.


Actually, though, it turns out that the biology is not quite that simple. At the highest level of organization, biologists classify all animals into five groups. Sponges (Porifera) and Placozoa (small, disc-shaped animals similar to amoeba, but different) don't have nervous systems at all. The other three groups all have nervous systems, but even here there are differences. Ctenophora (comb jellies) and Cnidaria (jellyfish and corals) don't have a central nervous system; instead, they operate with "nerve nets" -- networks of neurons spread out across their bodies. And in ctenophores, at least, the neurons of the nerve net are fused into a continuous network, communicating by direct contact, as the reticular theory holds. Only the fifth group, known as Bilateria, which consists of all the other animal species, has a central nervous system; and their neurons communicate across synapses. For details, see Burkhardt et al., Science, 04/21/2023.

Willis's story is detailed in Soul Made Flesh: The Discovery of the Brain -- and How It Changed the World by Carl Zimmer (2004).

Cajal's story is told in The Brain in Search of Itself: Santiago Ramon y Cajal and the Story of the Neuron by Benjamin Ehrlich (2022), reviewed in "Illuminating the Brain's 'Utter Darkness'" by Alec Wilkinson, New York Review of Books, 02/09/2023.

Ramon y Cajal's drawings are so beautiful that they were the subject of "Beautiful Brain: The Drawings of Santiago Ramon y Cajal", an art exhibition organized by the Weisman Art Museum at the University of Minnesota in 2017-2018. The exhibition catalog was published by Abrams Books, one of the leading publishers of art books. See also "Mysterious Butterflies of the Soul" by Benjamin Ehrlich (excerpted from his book, The Brain in Search of Itself: Santiago Ramon y Cajal and the Story of the Neuron), Scientific American, 04/2022, which reproduces some of Cajal's drawings.

Organization of the Nervous System

Like all biological entities, the nervous system is organized hierarchically, with larger units composed of smaller units.

- At the lowest level, cells are the smallest units that can function independently.

- Tissues are groups of cells of a particular kind.

- Organs are groups of tissues that perform a particular function.

- Systems are groups of organs that perform related functions.

- At the highest level, there is the organism -- a living individual composed of many separate, but mutually interacting systems.

The nervous system is one such system, allowing various organs and tissues to communicate with, and influence, each other. This communication is accomplished by means of electrical discharges.

Other bodily systems include the:


- Endocrine system, consisting of the pituitary, adrenal, and other glands, plus the ovaries and testes.

- Integumentary system, consisting of the skin, hair, nails, etc.

- Skeletal system, consisting of the bones and joints.

- Muscular system, consisting of the muscles and tendons.

- Cardiovascular system, consisting of the heart and blood vessels.

- Lymphatic system, consisting of the lymph nodes, thymus, spleen, and tonsils.

- Respiratory system, consisting of the nose, pharynx and larynx, and lungs.

- Digestive system, consisting of the mouth, stomach, intestines, and anal canal, as well as the teeth, tongue, liver, gallbladder, and pancreas.

- Urinary system, consisting of the kidneys, urinary bladder, and urethra.

- Reproductive system, consisting of the testes and ovaries, internal and external reproductive apparatus, and the mammary glands.

Whereas the traditional systems of the body all consist of tissues and organs, the immune system, consisting of Toll-like receptors, B cells, and T cells, is found at the cell level.

| Hierarchical Level | Examples in the Nervous System |
| --- | --- |
| Cells | Neurons |
| Tissues | Nerves |
| Organs | Brain, brainstem, spinal cord |
| Systems | Nervous system(s), consisting of the nerves, spinal cord, and brain |
| Organism | The living, thinking, behaving person, composed of several overlapping and interconnecting systems, including the nervous system |

For an online tour of the human body, created through CAT and MRI scans, go to the Visible Human Project of the National Library of Medicine: www.nlm.nih.gov/research/visible.

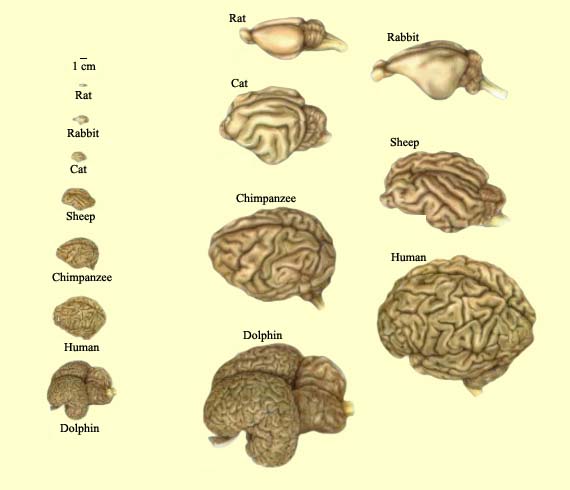

The hierarchical organization of biological structures continues above the level of the individual organism:

- species, a class of individuals having common attributes (like our species, Homo sapiens);

- genus, a group of related species (H. sapiens is part of the genus Homo, along with H. erectus, H. habilis, and H. sapiens neanderthalensis);

- family, a group of related genera (Homo is part of the family Hominidae, along with other early hominids, such as Australopithecus);

- order, a group of related families (Hominidae are part of the order Primates, along with chimpanzees, rhesus monkeys, and other apes and monkeys);

- class, a group of related orders (Primates are part of the class Mammalia, along with cats and dogs);

- phylum (or division), a group of related classes (Mammalia are part of the phylum Chordata, along with all other animals that have a spinal cord, like lizards and birds); and

- kingdom, a group of related phyla (Chordata are part of the "animal" kingdom Animalia, as distinct from the members of the "vegetable" kingdom Plantae).

The Endocrine and Immune Systems

The nervous system is not the only means of communication within the body.

There is also the endocrine system, consisting of the endocrine glands, among which are:


- pituitary gland

- thyroid

- pancreas

- adrenal glands

- ovaries (in females) and testes (in males).

Whereas the nervous system allows various organs to communicate with each other by virtue of electrical impulses, relayed from one neuron to the next by neurotransmitters flowing across synapses, the endocrine system permits communication by means of chemical substances called hormones, secreted by the endocrine glands and carried by the bloodstream to various organs of the body. Communication via the nervous system is relatively quick, while communication via the endocrine system is relatively slow.


One behaviorally important hormone is oxytocin, sometimes called the "love hormone" because of the role it plays in promoting social bonding. In a classic series of studies, Thomas Insel and his colleagues (Insel later became Director of the National Institute of Mental Health) discovered the role of oxytocin in prairie voles -- which, unlike most mammals, form monogamous bonds that last long after mating, so that the couples continue to cohabit and the males assist in raising the young. This bonding is promoted by two hormones released during mating itself: oxytocin in females and vasopressin, which promotes territoriality, in males. Later studies showed that, in addition to their direct effects on behavior, there are receptors for both oxytocin and vasopressin in those areas of the prairie voles' brains that serve as "reward" centers. Stimulation of these centers, in turn, can lead to addiction. And better yet, activation of the reward centers becomes conditioned to the mere presence of the partner. So, the mating pairs quite literally become addicted to each other.


I'll have more to say about the conditioning process in the lectures on Learning, and about addiction in the lecture on Motivation. For now, just a couple of qualifiers:

- Oxytocin and vasopressin bind to receptors in the reward system in prairie voles, but not in other vole species -- which are not monogamous.

- The pair-bonding affects social behavior, what you might call couplehood, but that doesn't mean that both male and female prairie voles aren't capable of mating outside the pair bond. They can and they do, with the result that males can father young with other females, and can also end up raising young that are not their own. The differences have to do with the details of their genetic endowments.

- Not all male prairie voles pair-bond in this manner. But most of them do, and the differences between them and other vole species are particularly striking.

- Parental behavior such as grooming stimulates oxytocin production in young prairie voles, such that infants that are denied these social interactions have difficulty bonding with mates as adults.

- Somewhat inevitably, perhaps, these results have been generalized to humans. There's a self-help book entitled Make Love Like a Prairie Vole: Six Steps to Passionate, Plentiful, and Monogamous Sex, as well as a sort of aphrodisiac spray called "Liquid Trust", which contains synthetic oxytocin. Synthetic oxytocin has also been used experimentally as a treatment for people with autism, in an attempt to stimulate social behavior. But, as Insel himself has noted, "You have to be very careful and not assume that we are very, very large prairie voles" (quoted in "What Can Rodents Tell Us About Why Humans Love?" by Abigail Tucker, Smithsonian Magazine, 02/2014, from which some of this material, and the cute picture of a prairie vole, is drawn). Human pair-bonding is likely more complicated, and more influenced by social factors, than a simple matter of a couple of hormones.

- Oxytocin may be the "love hormone", but it isn't just a "love hormone". It also performs a number of other functions, such as the maintenance and repair of muscle tissue. (There's a lesson here about specialization: the "specialty" of some gene, or neurotransmitter, or hormone may depend a lot on the location at which it's acting.)

A system of internal organs known as the hypothalamic-pituitary-gonadal axis, or HPG axis, is important for the control of reproductive behaviors. Each of the organs named secretes a different hormone, but the three of them work in an integrated fashion, so it makes sense to think of them as constituting a system.

- A portion of the hypothalamus releases gonadotropin-releasing hormone (GnRH).

- GnRH, in turn, stimulates the pituitary gland to release luteinizing hormone (LH) and follicle-stimulating hormone (FSH).

- LH and FSH, in their turn, stimulate the gonads to release the female and male sex hormones, estrogen and testosterone.

- In particular, testosterone levels regulate levels of dominance and aggression, and thus status-seeking or status-maintaining behaviors, especially in males -- which is why you see so many advertisements for drug treatments for "Low-T" syndrome in men.

Another system beginning with the hypothalamus, the hypothalamic-pituitary-adrenal axis (HPA), is important for the stress response -- discussed later in these lectures, when I take up the autonomic nervous system. Again, each of the organs releases a different hormone, and the three of them work in an integrated fashion.

- During stress, a different portion of the hypothalamus secretes vasopressin and corticotropin-releasing hormone (CRH).

- Vasopressin and CRH, in turn, stimulate the secretion of adrenocorticotropic hormone (ACTH) by the pituitary gland.

- ACTH then stimulates the adrenal cortex to produce glucocorticoid hormones such as cortisol.

- These glucocorticoids in turn act back on the hypothalamus and pituitary to suppress the production of CRH and ACTH, thus creating a cycle of negative feedback which modulates the stress response.
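The negative-feedback loop just described can be illustrated with a toy simulation. This is a minimal Python sketch under loudly stated assumptions: the function name `simulate_hpa`, the rate constants, and the arbitrary units are all hypothetical, chosen only to show the qualitative logic of negative feedback rather than to model real hormone levels. For simplicity, vasopressin's contribution is folded into CRH, and cortisol's suppression of CRH and ACTH release is represented by a single inhibition term whose strength is set by `feedback`.

```python
def simulate_hpa(stress: float, steps: int = 200, feedback: float = 1.0) -> list[float]:
    """Toy discrete-time model of the HPA axis (illustrative only, not physiological).

    stress   -- constant drive on the hypothalamus (arbitrary units, assumed)
    feedback -- how strongly cortisol suppresses CRH and ACTH release (assumed)
    Returns the cortisol level at each time step.
    """
    crh = acth = cortisol = 0.0
    clearance = 0.1  # fraction of each hormone cleared per step (assumed value)
    history = []
    for _ in range(steps):
        # Negative feedback: the more cortisol, the smaller the fraction of release allowed.
        inhibition = 1.0 / (1.0 + feedback * cortisol)
        crh += stress * inhibition - clearance * crh       # hypothalamus secretes CRH
        acth += 0.5 * crh * inhibition - clearance * acth  # pituitary secretes ACTH
        cortisol += 0.5 * acth - clearance * cortisol      # adrenal cortex secretes cortisol
        history.append(cortisol)
    return history


if __name__ == "__main__":
    no_feedback = simulate_hpa(stress=1.0, feedback=0.0)
    with_feedback = simulate_hpa(stress=1.0, feedback=1.0)
    print(f"final cortisol without feedback: {no_feedback[-1]:.1f}")
    print(f"final cortisol with feedback:    {with_feedback[-1]:.1f}")
```

Running it once with `feedback=0.0` and once with `feedback=1.0` shows the point of the loop: for the same stress input, cortisol without feedback climbs to a much higher plateau, whereas with feedback it settles at a far lower, stable level.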

You'll see more of how this works later in these lectures, when I discuss how the sympathetic and parasympathetic branches of the autonomic nervous system act in response to stress.

The HPA and HPG axes interact with each other. According to the dual-hormone hypothesis, cortisol, a product of the HPA axis, alters the behavioral effects of testosterone released by the HPG axis (Mehta & Josephs, 2010). In particular, levels of testosterone are correlated with levels of dominance and aggression, but only in individuals who are low in cortisol. For individuals who are high in cortisol, the relationship between testosterone and dominance/aggression disappears, or may even be reversed.
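The dual-hormone hypothesis describes a moderation (interaction) effect. One common way to write such an effect, offered here only as an illustrative sketch and not as the model actually fitted by Mehta and Josephs (2010), is a regression with a product term, where D stands for dominance/aggression, T for testosterone, C for cortisol, and the coefficients and numerical values are hypothetical:

```latex
\begin{align}
D &= \beta_0 + \beta_1 T + \beta_2 C + \beta_3 (T \times C) + \varepsilon \\
\frac{\partial D}{\partial T} &= \beta_1 + \beta_3 C \\
  &= 0.5 - 0.25\,C \qquad (\text{hypothetical } \beta_1 = 0.5,\ \beta_3 = -0.25)
\end{align}
```

The derivative shows why the testosterone effect depends on cortisol: with a positive main effect and a negative interaction term, the slope of D on T is positive when cortisol is low, falls to zero at C = -β1/β3, and turns negative above that point. With the hypothetical values shown, the slope is 0.5 at C = 0, 0 at C = 2, and -0.5 at C = 4.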

The nervous and endocrine systems interact in interesting ways, especially in the operation of the autonomic nervous system.

In the first place, some hormones are also neurotransmitters. For example, adrenalin (epinephrine) and noradrenalin (norepinephrine), neurotransmitters that are important for the autonomic nervous system, are also secreted by the adrenal glands (hence their name). For a history, see Adrenaline by Brian B. Hoffman (2013).

- If a chemical is secreted into the bloodstream, it's a hormone.

- If it's released by the terminal fibers of a neuron, it's a neurotransmitter.

In the second place, neural impulses can stimulate the adrenal glands to release hormones. Activity in a subcortical structure called the hypothalamus also triggers the release of various hormones, often by acting on the pituitary gland. For example,

- Release of a "thyrotropin releasing factor" (TRF) stimulates the pituitary gland to release yet another substance, which acts on the thyroid gland to control the process of thermoregulation -- getting the animal warmer when its body temperature falls too much, or cooling a body that's become overheated.

- A "luteinizing releasing factor" (LRF), also released by the hypothalamus, acts on the pituitary to regulate aspects of reproductive behavior.

- Somatostatin, a "somatotropin release-inhibiting factor" (SRIF), also released by the hypothalamus, acts on the pituitary to inhibit the release of growth hormone, and thus inhibits the growth of the body.

Hormones can affect the structure and function of the nervous system, as we'll see in the lectures on Development, when we discuss gender dimorphism.

Certain hormones are also implicated in emotion and motivation:

- Dopamine plays an important role in the reward systems by which we derive pleasure from eating, sex, and other activities.

- Oxytocin mediates feelings of trust and affection toward others, and also has a calming effect during periods of stress.

- Vasopressin activates the "flight or fight" response to stress.

These interactions are now studied by a new interdisciplinary field called psychoneuroendocrinology.

There is also an immune system, responsible for the recognition and destruction of foreign material (like viruses and bacteria) that enters the body, causing infectious diseases. Like the nervous system, the immune system is organized into subsystems:

- The adaptive immune system, consisting of "B" and "T" cells, which adapt themselves to specific pathogens, and then remain in the body to fight off subsequent attacks by the same pathogens. In a sense, the adaptive immune system is a "memory" that records information about past infections; it is the basis for vaccines that protect us against viral or bacterial diseases like polio.

- The innate immune system includes antimicrobial molecules and various phagocytes that ingest and destroy pathogens. The innate immune system is a nonspecific "rapid response system" for detecting and fighting infectious agents, mediated by "Toll-like receptors" that identify incoming pathogens and initiate the body's reflex-like response to them.

Interactions between the nervous system and the immune system, particularly the adaptive immune system, are commonly thought to be responsible for the well-known association between stress and disease, and are studied by another interdisciplinary field, psychoneuroimmunology.

For a discussion of the relationships between the nervous and immune systems, see "The Seventh Sense" by Jonathan Kipnis (Scientific American, 08/2018). The article's title reflects Kipnis's belief that the immune system acts like a sensory modality, detecting the presence of micro-organisms in the body, much as the visual system is sensitive to light waves and the auditory system is sensitive to sound waves (see the Lecture Supplement on Sensation). Frankly, that seems a stretch, not least because the detection of micro-organisms (which the immune system undoubtedly does) doesn't give rise to any conscious sensory experience like sight or sound. But the rest of the article is a pretty interesting introduction to psychoneuroimmunology.

Neurons

At the lowest level of biological organization, the cell, we have the neuron, which comes in three basic types:


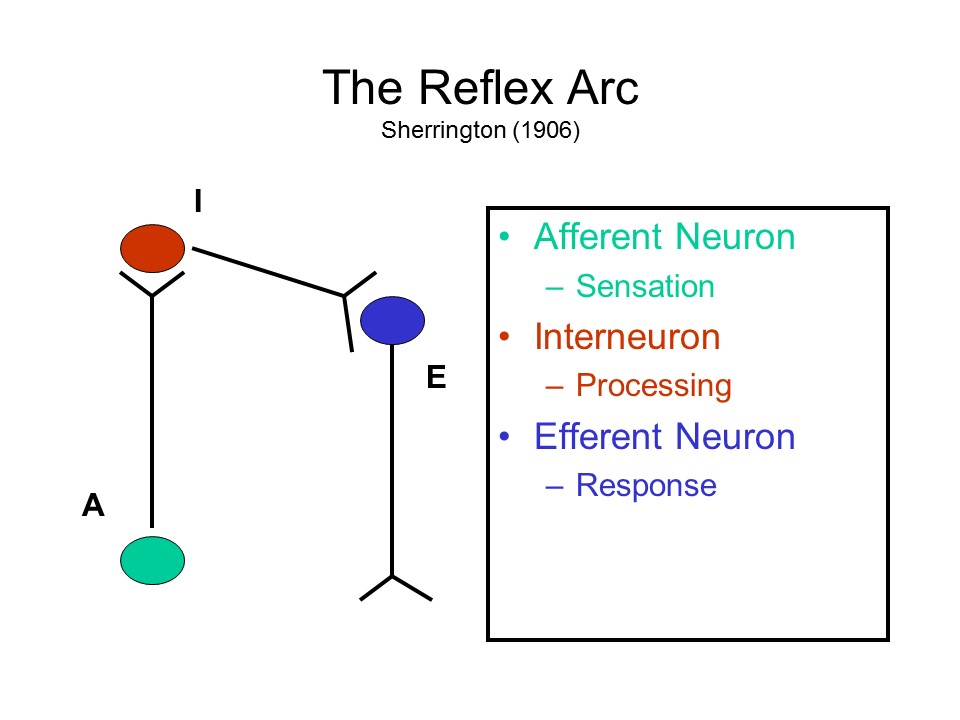

- Afferent neurons carry sensory signals from the receptor organs to the spinal cord and brain.

- Efferent neurons carry motor signals from the spinal cord and brain to the muscles and other internal organs.

- Interneurons connect afferent and efferent neurons.

Note: If you want to reduce confusion between "afferent" and "efferent", think of "affect" as a "feeling", and of something having an "effect" on something else.

The human brain contains about 86-100 billion neurons -- most of them, interestingly, in a structure known as the cerebellum, rather than in the cerebral cortex itself. The upper estimate is the conventional one; the lower estimate comes from Suzana Herculano-Houzel, a Brazilian neuroscientist who developed a new method of counting.

Structure of the Neuron

No matter what its type, every neuron consists of a cell body, with its nucleus, a number of dendrites stemming out from the cell body, and a long axon, ending in a number of terminal fibers.

The axon may be covered with a myelin sheath, and this sheath may be interrupted by breaks, called the Nodes of Ranvier.

An individual neuron may be drawn schematically as follows:

| Cell Body (and Dendrites) | Axon | Terminal Fiber |
| :---: | :---: | :---: |
| O | -------------------------------------- | < |

Each of these elements has a particular function:

- The dendrites (or branches) of a neuron receive stimulation from adjacent neurons (they are, in a sense, the "afferent" portion of the neuron, receiving stimulation from other neurons). Actually, it's not the dendrites themselves that receive stimulation, but rather the dendritic spines that stick out of the dendrites.

- When sufficiently stimulated, the cell body discharges an electrical impulse.

- This impulse is then carried along the axon to the terminal fibers, enabling the neuron to stimulate other adjacent neurons (in a sense, the terminal fibers are the "efferent" portion of the neuron, stimulating other neurons).

- The myelin sheath covers the axons of most afferent and efferent neurons, and plays a role in the regeneration of damaged peripheral nerve tissue.

- Myelinated axons comprise the "white matter" of the central nervous system.

- The "grey matter" consists of cell bodies, which are not covered in myelin.

- The Nodes of Ranvier are interruptions in the myelin sheath. The neural impulse jumps from node to node, which greatly increases the speed with which it is carried down the axon.

In this way, neural impulses are carried from one point to another in the nervous system.

A sequence of these three types of neurons constitutes the reflex arc -- described by Charles Sherrington (Oxford University), a British physiologist who won the Nobel Prize for Physiology or Medicine in 1932 (shared with Edgar D. Adrian of Cambridge). Essentially, the reflex arc processes sensory inputs and generates motor outputs. Although things are actually more complicated than this, conceptually, at least, the reflex arc is the basic building block of behavior. The idea goes back at least as far as the 17th-century French philosopher Rene Descartes, who coined the term reflex. In Descartes's view, energy from a stimulus is picked up by various sensors and transmitted to the brain, where it is reflected "back" in the form of a motor response.
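As a purely conceptual illustration of the "sensory in, motor out" idea, the reflex arc can be sketched as three threshold units that pass a signal along in sequence. This is a toy, not a physiological model. The single shared threshold, the all-or-none outputs, and the names `fires` and `reflex_arc` are hypothetical simplifications introduced here. They are not part of Sherrington's account.

```python
# Toy model of a reflex arc: afferent -> interneuron -> efferent.
# Hypothetical threshold and signal values; these are not physiological units.

THRESHOLD = 1.0  # hypothetical firing threshold shared by every neuron in the toy


def fires(stimulation: float, threshold: float = THRESHOLD) -> bool:
    """All-or-none: a neuron discharges only if stimulation reaches threshold."""
    return stimulation >= threshold


def reflex_arc(stimulus_intensity: float) -> str:
    """Pass a sensory stimulus through three neurons in sequence."""
    # Afferent neuron: carries the sensory signal from the receptor inward.
    afferent = 1.0 if fires(stimulus_intensity) else 0.0
    # Interneuron: connects the afferent neuron to the efferent neuron.
    interneuron = 1.0 if fires(afferent) else 0.0
    # Efferent neuron: carries the motor signal out to the muscle.
    efferent = fires(interneuron)
    return "muscle contracts" if efferent else "no response"


if __name__ == "__main__":
    print(reflex_arc(0.4))  # weak stimulus: "no response"
    print(reflex_arc(2.5))  # strong stimulus: "muscle contracts"
```

A weak stimulus dies out at the afferent stage, while a strong one propagates all the way through to a motor response. Real reflexes involve many neurons at each stage and graded, modulated signals.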


Glia Cells

In addition to neurons, glia cells (also known as neuroglia) comprise another elementary unit of the nervous system. There are roughly a trillion glia cells in the human brain. That's about 10 times the high estimate of the number of neurons, which might be the source of the common assertion that we use only about 10% of our brains. But this is a complete misunderstanding: all of our neurons are actively engaged in mental activity.

Glial cells aid neural functioning in several ways:

- They build the myelin sheath (white matter) around some axons, which provides insulation.

- They guide the migration of axons and dendrites during development, so that individual neurons "connect up" effectively.

- They provide a kind of "packing tissue" for neurons, keeping them in their proper places for synaptic transmission.

- They transfer nutrients from blood vessels to the neurons, and maintain a proper ion balance.

- They remove waste material left over when neurons die, and fill up the vacant space thus created.

Although it was once thought that glia cells are not directly involved in mental processes, recent evidence suggests that, in addition to the support functions outlined above, glia may form an information-processing and communication network of their own, running parallel to the neurons (see "The Other Half of the Brain" by R. Douglas Fields, Scientific American, 04/2004). For example, UCB's Prof. Marian Diamond, famous for her undergraduate neuroanatomy course, discovered that while there was nothing remarkable about Einstein's brain so far as neurons were concerned, certain parts of his cerebral cortex had a remarkably high concentration of glia cells. Diamond interpreted this as reflecting greater metabolic activity in his neurons. Later research showed that Einstein's corpus callosum was bigger than average, possibly allowing for greater connectivity between his left and right hemispheres (Einstein was a famously visual thinker). There were also other anatomical differences, such as four gyri in his frontal lobes instead of the usual three.

For the saga of Einstein's brain, which is an even better tale than the saga of Descartes' head, see "Genius in a Jar" by Brian D. Burrell, Scientific American, 9/2015. See also Finding Einstein’s Brain (2018) by Frederick E. Lepore.

Even if they do not play a direct role in mental processes, glia cells do appear to be implicated in certain forms of brain disease.

- In Alzheimer's disease the patient suffers dementia, a general loss of intellectual functions. This affects some elderly persons (in which case it is sometimes known as "senile dementia"), but it can also affect younger individuals ("presenile dementia"). AD results from the degeneration of nerve cells -- either natural, due to normal aging, or accelerated. In either case, the dead neural tissue is replaced with glia cells, resulting in the characteristic "plaques and tangles" that can be observed on autopsy.

- Similarly, certain brain tumors are caused by an abnormal growth of glia cells, which produce damaging pressure on other brain structures, or prevent normal functioning of neurons.

The Challenge of Alzheimer's Disease

Alzheimer's Disease (AD) is a serious public health problem: in 2003, it was estimated that AD affected some 4.5 million Americans, 10% of the population over 65 -- not to mention their extended families. And because the elderly population is increasing due to the Baby Boom following World War II (and the echoing baby boom produced by the Baby Boomers' children), not to mention increasing immigration, the problem is going to get worse -- straining budgets and frazzling nerves.

Psychopathology

AD was first described in 1906 by Alois Alzheimer, a German psychiatrist and neuropathologist. Alzheimer's Disease is a form of dementia, a set of syndromes all of which involve loss of multiple cognitive functions with preserved clarity of consciousness (i.e., the patient is not delirious). Put bluntly, dementias involve a loss of general intelligence, including abstract thinking, judgment, object recognition, and comprehension, which interferes with work, social activities, and social relationships. Dementia takes a number of different forms, including

- Alzheimer's Disease

- Pick's disease

- Huntington's Disease

- Parkinson's Disease

The chief feature of AD is a progressive loss of memory, including "short-term" as well as "long-term" memory, which is why clinics specializing in the diagnosis and treatment of AD are often referred to as "memory disorders" clinics.

Neuropathology

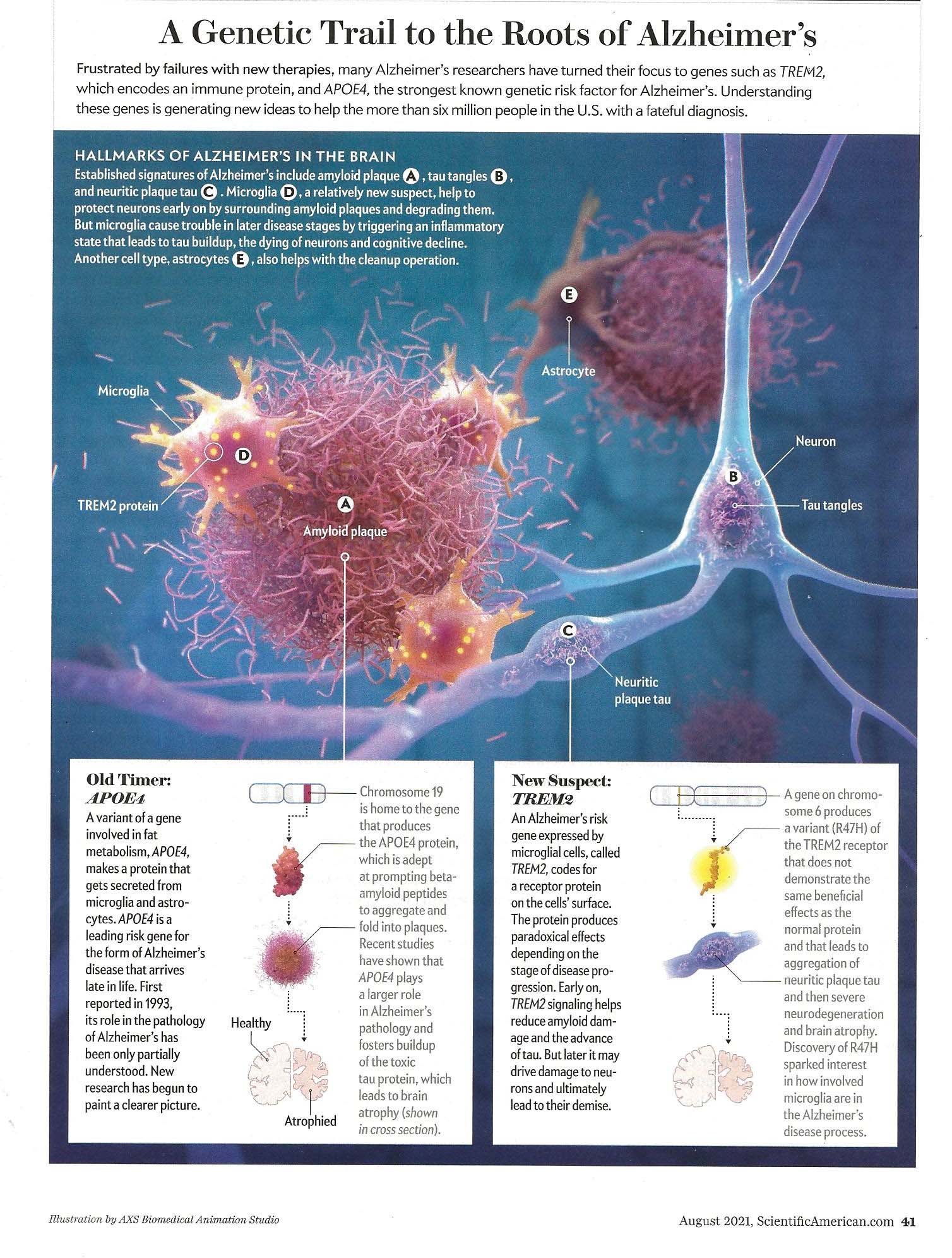

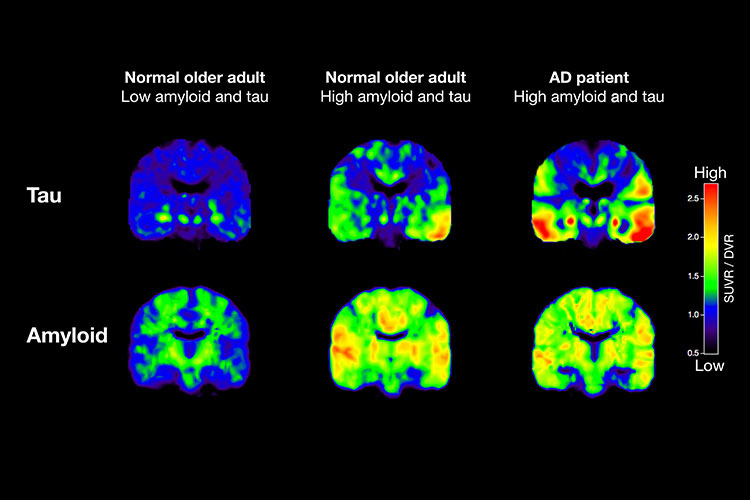

Alzheimer was also the first to describe the neuropathology of AD, based on autopsies of his patients. Alzheimer's disease is a neurodegenerative disease, meaning that it is caused by the progressive loss of brain cells, which naturally entails a loss of synapses (and thus of synaptic connections). This cell death is accompanied by the development of neurofibrillary tangles composed of tau protein and senile plaque composed of amyloid-beta protein (abbreviated Aβ or A beta; the same protein found in glia cells). In fact, prospective studies of individuals at risk for AD (e.g., by virtue of family history) show that an accretion of amyloid can be seen more than 5 years before AD is first diagnosed, and tau buildup and brain shrinkage can be observed more than 1 year before diagnosis. The brain atrophy is widespread, not limited to a single area, resulting in general dementia as opposed to some specific deficit (though, especially in the early stages, memory is hit hardest). The cell death and synaptic loss result in reduced levels of particular neurotransmitters, such as ACh. (Illustration from "A New Understanding of Alzheimer's" by Jason Ulrich and David M. Holtzman, Scientific American 08/2021.)


The most popular theory is that AD is caused by the buildup of amyloid-beta plaque in the cortex, especially the frontal and parietal lobes, and that the tangles are a kind of adventitious consequence. However, it is possible that the actual cause is amyloid-beta that floats freely, rather than amyloid-beta bound up in plaque. In a mouse model, animals with free-floating amyloid-beta, but no plaque, showed the same behavioral deficits as a comparison group that had both. It is possible that Alzheimer's patients produce too much amyloid, more than can be metabolized. Alternatively, they may produce normal amounts but dispose of it more slowly than normal. Either way, amyloid-beta builds up, and eventually impairs proper neuron function.

Other researchers believe that the problem lies with the tau protein, which accumulates in the hippocampus. While beta-amyloid is responsible for the "plaque" element in the "plaques and tangles" that are central to the neuropathology of AD, tau is responsible for the "tangles". These competing groups are known in neuroscientific circles as the "tauists" and the "baptists" (the latter name derived from "beta-amyloid protein").

- Interestingly, tau also builds up in boxers and football players who have received repeated blows to the head, leading to a condition called chronic traumatic encephalopathy (CTE). The severity of CTE is correlated with the number of head injuries the person has suffered, and it can be a particular problem for young children's developing brains. That is a good argument for wearing really good football helmets (and for touch football!), and a good reason not to let young soccer players head the ball too often.

- On the other hand, some elderly persons show considerable buildup of both amyloid-beta and tau in their brains, yet show no signs of serious cognitive impairment.

- Some researchers now focus on a protein known as REST (RE1-Silencing Transcription factor) or NRSF (Neuron-Restrictive Silencer Factor), which is absent in AD patients.

- Other researchers look for the causes of AD in the physical and social environment, such as chemical toxins, and even economic inequality.

Still, most theorists focus on beta-amyloid and tau. One prominent theory is that both are necessary for AD to develop. It is not known, however, whether beta-amyloid promotes the spread of tau, or tau promotes the spread of beta-amyloid.

But where do beta-amyloid and tau come from? Apparently, they are waste products created by brain activity. It turns out that the brain has its own waste-removal system. To make a long story short, a newly discovered glymphatic system employs cerebrospinal fluid, which enters the brain along the arteries, to carry waste products, toxins, and the like away from the brain. Most of this waste-disposal activity occurs during sleep, when the brain is relatively inactive (the glymphatic system may also carry nutrients to the brain in much the same way). Defects in this glymphatic system may contribute to the buildup of tau and beta-amyloid that, apparently, causes AD.

For more details on the glymphatic system, see "Brain Drain" by Maiken Nedergaard and Steven A. Goldman, Scientific American, 03/2016.

Early Diagnosis?

Although it is common to hear that people have been diagnosed with AD, in fact this is only presumed AD, because there are no diagnostic tests that can distinguish AD from other forms of dementia. At present, AD can be definitively diagnosed only at autopsy, where it is possible for a pathologist to visually confirm the presence of senile plaques and neurofibrillary tangles that define the disease in neurological terms. Until the patient dies, a provisional diagnosis of AD can be made based on the patient's performance on certain behavioral tests of mental function (there are other forms of dementia besides AD), age of onset (AD sometimes has an early age of onset, and was formerly called presenile dementia), and information from family members.

CT, MRI, and other brain-imaging techniques are of limited use in diagnosing AD because the plaques and tangles do not show up in them. Somewhat paradoxically, a provisional diagnosis of AD has been made when dementia occurs, especially with an early onset, in the absence of evidence of tissue damage in conventional CT or MRI brain scans.

Another approach is to take repeated brain scans of individuals at risk for AD, or showing some of the behavioral signs of AD, much as mammograms are sometimes used to track breast cancer. It is known that elderly individuals who are healthy lose about 0.2% of their brain volume every year. However, Dr. Nick Fox of the National Hospital for Neurology and Neurosurgery, in London, has reported that individuals who end up with AD lose much greater amounts of brain volume, about 2-3% per year, especially in the hippocampus. Accordingly, it may be possible to detect AD by conducting repeated brain scans of at-risk individuals. However, this approach would be extremely expensive, and it is not clear that these brain scans would discriminate between AD and other forms of dementia.
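The difference between roughly 0.2% and 2-3% annual loss compounds over repeated scans. This can be made concrete with a short calculation. It is a minimal sketch that assumes each rate stays constant and compounds yearly. The five-year follow-up period is a hypothetical choice, not a figure from the text.

```python
# Sketch: compound a constant annual rate of brain-volume loss over several years.
# Assumes the loss rate stays constant and compounds yearly (a simplification).

HEALTHY_RATE = 0.002                    # ~0.2% per year, as reported for healthy elderly
AD_RATE_LOW, AD_RATE_HIGH = 0.02, 0.03  # ~2-3% per year, as reported for eventual AD cases
YEARS = 5                               # hypothetical follow-up period


def remaining_volume(annual_loss: float, years: int) -> float:
    """Fraction of the starting brain volume left after `years` of compounding loss."""
    return (1.0 - annual_loss) ** years


if __name__ == "__main__":
    for label, rate in [
        ("healthy", HEALTHY_RATE),
        ("AD (low estimate)", AD_RATE_LOW),
        ("AD (high estimate)", AD_RATE_HIGH),
    ]:
        print(f"{label}: {remaining_volume(rate, YEARS):.1%} of starting volume after {YEARS} years")
```

With these figures, a healthy brain keeps about 99% of its starting volume over five years. At the rates reported for people who go on to develop AD, it keeps roughly 86-90%. This illustrates why repeated scans of the same person could reveal a difference.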

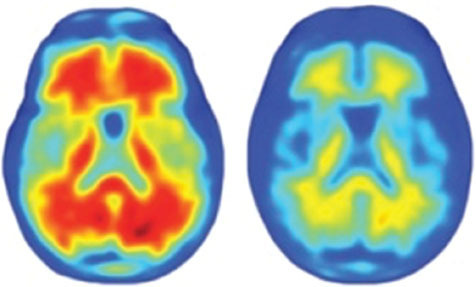

There is another possibility. It is known that the chief component of senile plaques in AD is a protein called amyloid-beta. This protein appears in the brain even before behavioral symptoms appear, and is presumed to cause the actual damage to nerve cells that results in AD. For this reason, it may be possible to diagnose AD by testing for the presence of amyloid-beta in patients' brains. In 2002, Dr. Henry Engler of the University of Uppsala, in Sweden, reported a study in which he injected PIB, a molecule that can cross the blood-brain barrier and attach itself to amyloid-beta, along with a radioactive tag (carbon-11), into the bloodstreams of patients with (presumed) mild AD and healthy controls. Subsequent PET scans showed no trace of PIB in the brains of controls, but large amounts of PIB in the frontal and temporo-parietal lobes of the patients.

This PET brain image shows unbound PIB leaving the brain of a healthy control subject through the ventricles (left panel), but PIB bound to amyloid-beta accumulating in the frontal and temporo-parietal areas of a patient with mild AD (right panel).

According to a news article in Science magazine, Engler's initial report of his research, at the 2002 International Conference of Alzheimer's Disease and Related Disorders, "audibly took the audience's breath away" ("Long-Awaited Technique Spots Alzheimer's Toxin" by Laura Helmuth, Science, 297:752-753, 08/02/02; this article was also the source of the graphic). Interestingly, amyloid-beta also shows up in the lens of the eye, and can cause a rare type of cataract. Whether through PET scans or eye exams, it may someday be possible to definitively diagnose AD while patients are still living. The availability of a biological marker, if that's what amyloid-beta proves to be, will also be of use in tracking the progress of patients who are receiving various types of treatment for AD.

One problem with Engler's technique is that the radioactive isotope, carbon-11, has a very short half-life. It has to be produced on site, and then used very quickly. In 2010, Dr. Daniel Skovronsky employed another isotope, fluorine-18, which is more readily available and lasts longer. Using this preparation, the researchers were able to see the buildup of amyloid plaque (the yellow and red areas in the lower images) in patients with a presumptive diagnosis of Alzheimer's disease (based on standard memory testing), but not in most normal controls. Interestingly, some degree of plaque buildup was seen in those normal subjects who performed relatively poorly on the diagnostic tests ("Promise Seen for Detection of Alzheimer's" by Gina Kolata, New York Times, 06/24/2010). In 2012, the Food and Drug Administration approved a new diagnostic technique based on this research -- the first to permit diagnosis of AD without an autopsy, and promising early identification of patients at risk for AD. This raises the question of whether anyone would want to know if they were getting AD, and also the question of how to prevent insurance companies and other third parties from misusing such information. But the advent of the diagnostic technique itself is a great advance for neurology.
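The practical difference between the two isotopes follows from the standard radioactive decay law: the fraction of tracer remaining after time t is 0.5 raised to the power t divided by the half-life. The half-life values below are standard approximate figures and are not given in the text. The sketch is illustrative only.

```python
# Sketch: radioactive decay, N(t)/N0 = 0.5 ** (t / half_life).
# Half-lives below are standard approximate values, not figures from the text.

C11_HALF_LIFE_MIN = 20.4   # carbon-11, roughly 20 minutes
F18_HALF_LIFE_MIN = 109.8  # fluorine-18, roughly 110 minutes


def fraction_remaining(minutes: float, half_life_min: float) -> float:
    """Fraction of the original radioactive tracer left after `minutes`."""
    return 0.5 ** (minutes / half_life_min)


if __name__ == "__main__":
    for minutes in (30, 60, 120, 240):
        c11 = fraction_remaining(minutes, C11_HALF_LIFE_MIN)
        f18 = fraction_remaining(minutes, F18_HALF_LIFE_MIN)
        print(f"{minutes:>3} min: carbon-11 {c11:6.1%}   fluorine-18 {f18:6.1%}")
```

Two hours after production, under 2% of a carbon-11 batch is left, compared with nearly half of a fluorine-18 batch. This is why a fluorine-18 tracer need not be produced on site.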


One question is whether use of PET imaging for diagnosing AD is cost-effective. In 2003, a diagnostic PET scan cost approximately $1500, and was considered "90% accurate" in diagnosing AD as opposed to other forms of dementia. But standard diagnostic procedures, involving behavioral tests of mental function similar to those used in experimental cognitive psychology, cost approximately $300 and were considered "80 to 90% accurate". The question for health consumers (and specialists in health economics and health policy) is whether a 5-10 percentage point improvement in accuracy is worth a fivefold increase in costs ("Finding Alzheimer's Early" by Laura Johannes, Wall Street Journal, 10/16/03).
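One way to make the cost-effectiveness question concrete is to compute the extra money spent per additional correct diagnosis, using the 2003 figures quoted above. This is a simple incremental cost-effectiveness ratio. It is a sketch under stated assumptions: "accuracy" is treated as the probability that a single patient is diagnosed correctly, and the downstream costs and benefits of a right or wrong diagnosis are ignored. The function name `cost_per_extra_correct` is hypothetical.

```python
# Sketch: incremental cost per additional correct diagnosis (a simple
# cost-effectiveness ratio), using the 2003 figures quoted in the text.
# Assumes "accuracy" = probability that one patient is diagnosed correctly,
# and ignores downstream costs and benefits of a correct or incorrect diagnosis.

PET_COST, PET_ACCURACY = 1500.0, 0.90
STANDARD_COST = 300.0


def cost_per_extra_correct(cost_new: float, acc_new: float,
                           cost_old: float, acc_old: float) -> float:
    """Extra dollars spent per additional patient diagnosed correctly."""
    gain = acc_new - acc_old
    if gain <= 0:
        return float("inf")  # no accuracy gain, so the extra cost buys nothing
    return (cost_new - cost_old) / gain


if __name__ == "__main__":
    print(f"Cost ratio: {PET_COST / STANDARD_COST:.0f}x")
    for standard_accuracy in (0.80, 0.85, 0.90):
        ratio = cost_per_extra_correct(PET_COST, PET_ACCURACY, STANDARD_COST, standard_accuracy)
        print(f"standard workup {standard_accuracy:.0%} accurate -> "
              f"${ratio:,.0f} per extra correct diagnosis")
```

If the standard workup is 80% accurate, each additional correct diagnosis costs about $12,000 in extra scanning. At 85%, it costs about $24,000. If the standard workup is already 90% accurate, the extra spending buys no gain in accuracy at all.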

A related approach is to use PET imaging to track the spread of tau in the brain. Research by UCB Prof. William Jagust suggests that the buildup of beta-amyloid in the brain is a characteristic of normal aging, while the buildup of tau is specifically related to AD.


Link to video describing PET imaging of tau and beta-amyloid by UCB's William Jagust.

Pharmaceutical Treatments, and the Possibility of Prevention

Although advances in understanding brain development make the prospects for recovery of function somewhat better than previously thought, cures for AD and other neurological diseases are a long time away. For all practical purposes, it remains true that brain damage is forever. However, given early diagnosis, it may be possible to administer drugs that will retard the progression of the disease. Among the most popular medicines currently used for treating AD are Aricept, Reminyl, and Exelon: all these drugs effectively increase levels of the neurotransmitter acetylcholine (ACh) by inhibiting the breakdown of ACh.

Another drug, Namenda, has a different mechanism of action. Drugs like Aricept ostensibly replace the missing ACh. This may slow the progress of the disease, but does nothing for the underlying pathology, which is premature brain cell death. A genuine cure would operate on that level, retarding neuronal death. Namenda is intended to accomplish this by acting as a glutamate receptor antagonist (specifically, it blocks the NMDA type of glutamate receptor). Glutamate regulates the amount of calcium entering nerve cells, and too much calcium can damage them. By blocking glutamate's action at these receptors, Namenda in turn reduces the amount of calcium in nerve cells.

In fact, a whole host of quite different drugs have been considered in the prevention and treatment of AD:

- Some inhibit the enzymes that produce amyloid-beta.

- Some clear amyloid-beta that has already begun to build up.

- Some prevent amyloid-beta from aggregating into clumps that damage neurons.

- Some block the production of toxic tau proteins.

- Some enhance the health of neural tissue, in an attempt to protect it against the damaging effects of amyloid-beta and tau.

As of 2012, clinical trials were underway on a novel drug regimen for the prevention of Alzheimer's disease. The subjects are members of a group of extended families in Medellin, Colombia, large numbers of whom harbor a genetic mutation (in the PSEN1 gene) that causes a particular early-onset form of Alzheimer's disease. Other genetic variants, such as the APOE4 allele and variants of the TREM2 gene, increase susceptibility to AD without determining it: not everyone who carries APOE4 will get Alzheimer's, and many people who get Alzheimer's don't carry APOE4. Inherited early-onset forms like the Colombian one account for only a small fraction of AD cases (on the order of 1%), but it is hoped that success with this very narrowly defined group will lead to preventative programs that are more generally applicable.

Another advance, more or less, came in 2021, when the Food and Drug Administration approved a new drug, under the trade name Aduhelm (generic name: aducanumab), for the treatment of Alzheimer's Disease -- the first such approval in almost 20 years ("FDA Approves Alzheimer's Drug Despite Fierce Debate Over Whether It Works" by Pam Belluck and Rebecca Robbins, New York Times, 06/07/2021; also "How an Unproven Alzheimer's Drug Got Approved" by Belluck, Robbins, and Sheila Kaplan, New York Times, 06/21/2021). The drug targets amyloid-beta, which forms the plaques that are the characteristic biological change in AD. And, in fact, it does a good job of reducing amyloid-beta levels in the brain. The image, which shows brain-scan findings before and after treatment (left and right, respectively) for a single patient in the trial, is from "Alzheimer's Drug Approved Despite Murky Results" by Kelly Servick, Science, 06/11/2021.

The only problem is that the drug has little or no effect on the cognitive impairments that are the primary symptoms of AD. As discussed in the lectures on Psychopathology and Psychotherapy, the FDA requires positive results from two independent "Phase 3" experiments in which a new drug is compared with placebo (or the current standard of care). Biogen, the maker of aducanumab, submitted two trials in which the drug markedly reduced amyloid levels in the brain. But one trial showed only a very small effect on cognitive function (under a high dose but not a low dose, and amounting to an improvement of only 0.39 points on an 18-point scale), and the other trial didn't show any effect at all (under either dose). That sounds like a failure to me, but the FDA approved the drug nonetheless, on the grounds that it did have a significant effect on the presumed underlying neuropathology of AD. Further "Phase 4" research on aducanumab is underway, and the FDA might review its approval in the future. But that could take as long as nine (9!) years.

In the meantime, all hell broke loose at the FDA, because the drug was approved even though it failed to meet the FDA's own standards for showing a significant clinical effect, and because a large number of patients suffered swelling of the brain, a serious side-effect. Moreover, although the two clinical trials were conducted with patients suffering only mild impairment, the FDA approved its use for all patients with AD, even those with moderate and severe impairment of functioning. Given the scope of the problems caused by AD, no wonder Biogen's stock price soared on the news. At a sticker price of $56,000 per year, plus the costs of regular MRIs to monitor for brain swelling, you'd think there ought to be more evidence of its effectiveness. In the wake of the drug's approval, three members of the FDA advisory committee that had reviewed the drug (and had voted against it) resigned ("Three FDA Advisers Resign Over Agency's Approval of Alzheimer's Drug", by Pam Belluck and Rebecca Robbins, NYT 06/11/2021). In turn, the FDA defended its decision on the grounds that the drug did significantly affect biomarkers for AD, and so might still prove to have clinical efficacy. But one could argue that, especially for neurological and psychiatric illnesses, the really important effects are on function, subjective well-being, and even survival, rather than on "surrogate endpoints" such as amyloid levels. Moreover, it's possible that "senile plaque" isn't the problem in AD after all. Stay tuned....
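The one positive trial's cognitive effect can be expressed as a share of the scale's full range. This assumes, as the text implies, that the scale spans 18 points from best to worst. The ratio says nothing by itself about whether a given change is clinically meaningful to an individual patient.

```latex
\frac{0.39\ \text{points}}{18\ \text{points}} \approx 0.022 \approx 2.2\%\ \text{of the scale's range}
```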


For more information, see:

- "Alzheimer's: Forestalling the Darkness" by Gary Stix, Scientific American, June 2010.

- "Seeds of Dementia" by Larry C Walker & Mathias Jucker, Scientific American, May 2013.

- "The Vanishing Mind", a series of articles in the New York Times by Gina Kolata and others (2010ff).

The Synapse

Other neural structures are built up from neurons, but neurons themselves are not physically connected; instead, they are separated by a gap, called the synapse -- a term coined by the physiologist Charles Sherrington. The synapse separates the terminal fibers of one neuron (the presynaptic neuron) from the dendrites and cell body of the next (the postsynaptic neuron). Each neuron has a large number of terminal fibers and dendrites: thus, each neuron synapses onto a large number (1,000 or so, on average) of other neurons, producing a rich network of interconnections. Given that the adult human brain contains about 100 billion neurons, each making approximately 1,000 synaptic connections with other neurons, there are roughly 100 trillion synapses -- more synapses in a single brain than there are stars in our Milky Way galaxy.
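The synapse estimate is a simple product of neurons and synapses per neuron. It can be checked against both neuron counts mentioned earlier (86 and 100 billion). The star count used for comparison is an assumption: a commonly cited upper estimate, not a figure from the text.

```python
# Sketch: back-of-the-envelope synapse count, neurons x synapses per neuron.
# MILKY_WAY_STARS_HIGH is an assumption (a commonly cited upper estimate), not from the text.

SYNAPSES_PER_NEURON = 1_000   # "1,000 or so, on average"
MILKY_WAY_STARS_HIGH = 400e9  # assumed upper estimate of stars in the galaxy


def total_synapses(neurons: float, synapses_per_neuron: float) -> float:
    """Approximate number of synapses in a brain."""
    return neurons * synapses_per_neuron


if __name__ == "__main__":
    for neurons in (86e9, 100e9):  # lower (Herculano-Houzel) and conventional estimates
        synapses = total_synapses(neurons, SYNAPSES_PER_NEURON)
        print(f"{neurons / 1e9:.0f} billion neurons -> {synapses / 1e12:.0f} trillion synapses, "
              f"about {synapses / MILKY_WAY_STARS_HIGH:.0f}x the assumed star count")
```

Even with the lower neuron count, the product is on the order of 100 trillion. That is hundreds of times larger than common estimates of the number of stars in the Milky Way (roughly 100-400 billion), so the comparison holds by a wide margin.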

Several neurons, each separated from the next by a synapse, may be drawn schematically as follows:

O----------<O----------<O----------<

The neuron may be thought of as a wire that conducts an electrical charge from the cell body down the axon to the terminal fibers. The electrical discharge of a neuron is based on an electrochemical process.

- Initially, the neuron is in a resting state, or resting potential, in which the cell membrane carries a negative polarization.

- When the neuron is stimulated (e.g., by the discharge of a presynaptic neuron, the action of a sensory receptor, or an electrode implanted in the cell body), ion channels open that allow positively charged sodium ions (Na+) outside the cell to join positively charged potassium ions (K+) already inside.

- The resulting influx of positive charge makes the inside of the neuron less negative, a process known as depolarization.

- If the depolarization is large enough to reach a threshold, it triggers a positive action potential, which begins near the cell body and moves along the axon to the terminal fibers.

At this point the action potential meets the synapse, and the problem is for the action potential to get across the synaptic gap, from the presynaptic neuron to the postsynaptic neuron. (Here's where the "wire" analogy breaks down, because individual neurons aren't connected to each other, the way electrical wires are.)

The precise mechanism by which the neural impulse propagates down the axon from the cell body to the synapse is too complicated to detail here: you'll learn all about this if you take a more advanced course in biological psychology or neuroscience. Put briefly, it's not exactly like an electrical current. Rather, the impulse travels by means of a series of depolarizations involving the exchange of sodium and potassium ions. This process is captured by the Hodgkin-Huxley model, which Alan Hodgkin and Andrew Huxley presented in a classic 1952 paper (Andrew Huxley was a grandson of T.H. Huxley, known as "Darwin's bulldog" for his vigorous defense of evolutionary theory in the 19th century; he was also a half-brother of Aldous Huxley, who wrote Brave New World as well as The Doors of Perception, a report of his experiences with psychedelic drugs that gave The Doors their name). For their discovery, Hodgkin and Huxley shared the 1963 Nobel Prize in Physiology or Medicine with John Eccles.
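For readers who want to see the Hodgkin-Huxley model in action, here is a minimal Python sketch. It assumes a commonly used modern restatement of the squid-axon parameters (resting potential near -65 mV) and simple forward-Euler integration; the function names, the 50 ms run, and the 10 µA/cm² stimulus are illustrative choices, not values taken from the text.

```python
import math

# A commonly used modern restatement of the Hodgkin-Huxley squid-axon
# parameters (resting potential near -65 mV). Units: mV, ms, uA/cm^2, mS/cm^2.
C_M = 1.0                              # membrane capacitance (uF/cm^2)
G_NA, G_K, G_L = 120.0, 36.0, 0.3      # maximal conductances
E_NA, E_K, E_L = 50.0, -77.0, -54.387  # reversal potentials


def _vtrap(x, y):
    """Compute x / (1 - exp(-x / y)), using its limit y when x is ~0."""
    if abs(x) < 1e-7:
        return y
    return x / (1.0 - math.exp(-x / y))


def rates(v):
    """Opening (alpha) and closing (beta) rates, per ms, of gates m, h, n at voltage v."""
    am = 0.1 * _vtrap(v + 40.0, 10.0)
    bm = 4.0 * math.exp(-(v + 65.0) / 18.0)
    ah = 0.07 * math.exp(-(v + 65.0) / 20.0)
    bh = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    an = 0.01 * _vtrap(v + 55.0, 10.0)
    bn = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return am, bm, ah, bh, an, bn


def simulate(i_ext, t_max=50.0, dt=0.01):
    """Forward-Euler integration under a constant injected current i_ext.

    Returns the membrane potential (mV) at every time step.
    """
    v = -65.0
    am, bm, ah, bh, an, bn = rates(v)
    # Start each gate at its steady-state value for the resting potential.
    m, h, n = am / (am + bm), ah / (ah + bh), an / (an + bn)
    trace = []
    for _ in range(round(t_max / dt)):
        am, bm, ah, bh, an, bn = rates(v)
        i_na = G_NA * m**3 * h * (v - E_NA)  # sodium current (inward when negative)
        i_k = G_K * n**4 * (v - E_K)         # potassium current (outward when positive)
        i_l = G_L * (v - E_L)                # small leak current
        v += dt * (i_ext - i_na - i_k - i_l) / C_M
        m += dt * (am * (1.0 - m) - bm * m)
        h += dt * (ah * (1.0 - h) - bh * h)
        n += dt * (an * (1.0 - n) - bn * n)
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of `threshold` mV, i.e. action potentials."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)


if __name__ == "__main__":
    print("at rest:", count_spikes(simulate(i_ext=0.0)), "spikes")
    print("stimulated:", count_spikes(simulate(i_ext=10.0)), "spikes")
```

With no injected current the voltage simply sits near rest; a steady current of this size makes the model fire repeatedly, each spike driven by a brief inrush of sodium followed by a slower outflow of potassium.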

Eccles, for his part, won the Prize for his work on the mechanism of synaptic transmission. Traditionally, neurophysiologists believed that synaptic transmission occurred electrically, by something like a spark. Eccles demonstrated that the mechanism was actually chemical in nature. He and his colleague Bernard Katz also studied acetylcholine, the first neurotransmitter to be identified. Katz got his own Nobel Prize in 1970, for his work on how neurotransmitters are stored in and released from nerve terminals -- which really is too complicated for a course at this level!

But for the record, two other physiologists, Otto Loewi (Graz University, Austria) and Henry Dale (Cambridge), had already received the Prize for earlier work on acetylcholine in the nervous system.

Neurotransmitters

Getting the impulse across the synaptic gap is accomplished by means of synaptic transmission. The arrival of a neural impulse at the terminal fiber of the presynaptic neuron induces the discharge of a chemical known as a neurotransmitter substance. Neurotransmitter flows into the synaptic cleft, and is taken up by the dendrites (technically, the dendritic spines) of the postsynaptic neuron. If a sufficient quantity is taken up, the postsynaptic neuron is depolarized. Another substance clears used neurotransmitter out of the synaptic cleft, permitting the cycle to start all over again. In this way, neural impulses travel from the peripheral sensory receptors to the spinal cord, up the spinal cord to the brain, from one brain structure to another, back down the spinal cord from the brain, and out from the spinal cord to the muscles and glands.

Strictly speaking, the presynaptic and postsynaptic neurons are not completely separated. Specialized cell-adhesion molecules form a physical connection between them, thus stabilizing their connection. But the neural impulse isn't transmitted by these physical connections. The neural impulse passes from one neuron to another chemically, by means of synaptic transmission.

Neurotransmitters are differentiated according to function. Excitatory neurotransmitters depolarize a postsynaptic neuron, while inhibitory neurotransmitters hyperpolarize it, making it more difficult to discharge. Some neurotransmitters have both excitatory and inhibitory effects, depending on the presence of other transmitters. There are also excitatory and inhibitory synapses -- presumably because they release excitatory and inhibitory neurotransmitters; but the point is that there's a difference between the synapse and the neurotransmitter.
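The tug-of-war between excitation and inhibition can be sketched as simple addition: each excitatory input nudges the postsynaptic membrane potential up toward a firing threshold, and each inhibitory input pushes it back down. The resting potential, threshold, and input sizes below are hypothetical round numbers, and real neurons also weight their inputs by timing and by location on the dendrites, which this sketch ignores.

```python
# Hypothetical round numbers for illustration only (mV).
RESTING_MV = -70.0
THRESHOLD_MV = -55.0


def membrane_potential(excitatory, inhibitory):
    """Resting potential plus excitatory inputs minus inhibitory inputs (all in mV)."""
    return RESTING_MV + sum(excitatory) - sum(inhibitory)


def fires(excitatory, inhibitory):
    """True if the summed inputs push the cell to or past threshold."""
    return membrane_potential(excitatory, inhibitory) >= THRESHOLD_MV


# Three excitatory inputs reach threshold on their own (-70 + 16 = -54 mV)...
print(fires([5.0, 5.0, 6.0], []))      # True
# ...but one inhibitory input pulls the cell back below it (-58 mV).
print(fires([5.0, 5.0, 6.0], [4.0]))   # False
```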

- Glutamate is a good example of an "excitatory" neurotransmitter: it is used in over 90% of the synapses in the brain.

- Gamma-aminobutyric acid, or GABA, is a good example of an "inhibitory" neurotransmitter: it is used at more than 90% of the remaining synapses (so that's 99% of synapses, right there).
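Taking the two rounded percentages at face value, the "99%" figure works out as follows (since both are stated as lower bounds, the result is a floor rather than an exact share):

```latex
\underbrace{0.90}_{\text{glutamate}}
  + \underbrace{0.90 \times (1 - 0.90)}_{\text{GABA, among the remaining synapses}}
  = 0.90 + 0.09 = 0.99
```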

So glutamate and GABA are the primary neurotransmitters, operating throughout the nervous system. There are many more neurotransmitters, however, which perform specialized functions.

Acetylcholine (ACh), another prominent "excitatory" neurotransmitter, provides a good example of the ways in which synaptic transmission works. ACh released by the vagus nerve has an inhibitory effect on the heart, slowing its rate; but ACh has an excitatory effect on the voluntary musculature, including the muscles used in breathing. Consider the following toxic effects on the function of excitatory ACh:

- Botulinum toxin, the cause of botulism, prevents the release of excitatory ACh from the terminal fibers of the presynaptic neuron. The cosmetic drug Botox is derived from this toxin, which is where it gets its name.

- Curare prevents the uptake of excitatory ACh by the dendrites of the postsynaptic neuron.

- Nerve gas prevents used ACh from being cleared away from the synapse, so ACh builds up in the synaptic cleft and the postsynaptic neuron is overstimulated until it can no longer respond to new ACh released by the presynaptic neuron.
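The release, uptake, and clearance cycle described under Neurotransmitters, and the way each of the three toxins above knocks out one stage of it, can be mimicked with a deliberately crude toy model. Everything here (the `ToySynapse` class, one-step clearance, the quantities and threshold) is a hypothetical simplification for illustration, not a physiological model.

```python
from dataclasses import dataclass


@dataclass
class ToySynapse:
    """Cartoon of release -> binding -> clearance. All quantities are hypothetical."""
    release_blocked: bool = False    # cf. botulinum toxin: no transmitter released
    binding_blocked: bool = False    # cf. curare: receptors cannot take it up
    clearance_blocked: bool = False  # cf. nerve gas: used transmitter not cleared
    quantum: float = 1.0             # transmitter released per impulse (arbitrary units)
    threshold: float = 0.5           # bound transmitter needed to depolarize the cell
    cleft: float = 0.0               # transmitter currently in the synaptic cleft

    def step(self, impulse: bool) -> bool:
        """Advance one time step; return True if the postsynaptic cell is depolarized."""
        if impulse and not self.release_blocked:
            self.cleft += self.quantum
        bound = 0.0 if self.binding_blocked else self.cleft
        depolarized = bound >= self.threshold
        if not self.clearance_blocked:
            self.cleft = 0.0  # used transmitter is removed before the next step
        return depolarized


def respond(synapse, impulses):
    """Postsynaptic response to a train of presynaptic impulses (True = impulse)."""
    return [synapse.step(impulse) for impulse in impulses]


train = [True, False, True, False]
print(respond(ToySynapse(), train))                        # [True, False, True, False]
print(respond(ToySynapse(release_blocked=True), train))    # [False, False, False, False]
print(respond(ToySynapse(binding_blocked=True), train))    # [False, False, False, False]
print(respond(ToySynapse(clearance_blocked=True), train))  # [True, True, True, True]
```

A healthy toy synapse passes each impulse along and then resets. Blocking release or binding silences it; blocking clearance lets transmitter pile up, so the toy cell stays depolarized instead of tracking individual impulses. The sketch stops there and does not model what prolonged exposure does to real receptors.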

Biological sleep aids (sometimes called hypnotics, although they have nothing to do with hypnosis) provide another, perhaps less threatening, example.

- The first generation of sleep aids consisted of barbiturates, such as sodium pentothal or sodium amytal. Barbiturates bind to receptors for GABA, which, as noted above, is a major inhibitory neurotransmitter; they also block the receptors for glutamate, a major excitatory neurotransmitter. The result is a general suppression of nervous system activity -- which is why barbiturates are often used to induce general anesthesia (in considerably higher doses, they're also used in physician-assisted suicide and capital punishment). The barbiturates are sedatives and muscle relaxants, so it's not surprising that they help people get to sleep. Even at the low doses used to promote sleep, however, barbiturates pose a serious risk of addiction, as well as of overdose leading to death, and so they're not generally used for this purpose anymore.

- The second generation of sleep aids consisted of benzodiazepines, such as diazepam, lorazepam, and midazolam, which are also used to induce anesthesia, because they're also sedative muscle-relaxants. Benzodiazepines also potentiate the effects of GABA by binding to receptors, but in a different way. The barbiturates increase the amount of time that the receptor channel is open, while the benzodiazepines increase the frequency with which the channel opens. The ultimate effect is the same, however, which is an increase in inhibitory action. Benzodiazepines also pose a risk of addiction, but they offer considerably less risk of accidental overdose.

- The third generation of sleep aids are -- get this -- nonbenzodiazepines, so called because they have an entirely different molecular structure than the benzodiazepines. They are also known as the "Z-drugs": zopiclone, zolpidem, and zaleplon. The most familiar example is Ambien (zolpidem), which is by far the most popular sleep aid currently on the market. The Z-drugs have the same mechanism of action as the benzodiazepines: they bind to GABA receptors and keep them open. They pose less risk of overdosing than the barbiturates, and less risk of addiction than the benzodiazepines (though they can increase risk for depression and psychological dependence). Ambien can also induce a blackout-like state, in which the patient engages in various (mostly highly automatized) activities, with no memory of doing so later.

- A fourth generation of sleep aids is currently under development, which takes an entirely different approach. An example is suvorexant. Instead of potentiating inhibitory neurotransmitters like GABA, these drugs work on another neurotransmitter, orexin, which is important in the system that promotes wakefulness. Suvorexant effectively prevents orexin from reaching its receptors. So, instead of suppressing brain activity in general, suvorexant inhibits the activity of a neurotransmitter that keeps us awake. As Ian Parker puts it in an article describing the development and approval process for suvorexant, the drug "ends the dance by turning off the music, whereas a drug like Ambien knocks the dancer senseless" ("The Big Sleep", New Yorker, 12/09/2013). All the benzodiazepine-like drugs, which act on the GABA system, can put the patient to sleep, but suvorexant, which acts on the system that keeps us awake, will maintain sleep as well. And there is little of the post-sleep grogginess that usually comes as a side effect of the other drugs. Suvorexant is the first drug specifically designed as a sleep aid -- the first to be specifically engineered to address the physiology of the sleep-wake cycle. But, as of late 2013, it was still working its way through the FDA approval process.

- Note: Because sleep is such an issue for college students, it's important to understand that the best treatments for various kinds of insomnia are not pharmacological but psychological. A specific form of psychotherapeutic intervention, known as Cognitive-Behavioral Therapy for Insomnia (CBT-I), has been shown to be at least as effective as any medication currently on the market, with none of the adverse side-effects. You can read more about this in the Lecture Supplements on Psychopathology and Psychotherapy.
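The contrast drawn above between barbiturates (longer channel openings) and benzodiazepines (more frequent openings) comes down to one multiplication: over the long run, the share of time a GABA receptor channel spends open is how often it opens times how long each opening lasts. The numbers below are hypothetical, chosen only to show that doubling either factor doubles the channel's open time, and so boosts the inhibitory effect in the same way; real drug effects are more complicated than this.

```python
def open_fraction(openings_per_s, mean_open_ms):
    """Long-run fraction of time a channel is open:
    (openings per second) x (mean duration of each opening, in seconds)."""
    return openings_per_s * mean_open_ms / 1000.0


baseline = open_fraction(openings_per_s=10, mean_open_ms=5)
longer = open_fraction(openings_per_s=10, mean_open_ms=10)   # barbiturate-like: longer openings
frequent = open_fraction(openings_per_s=20, mean_open_ms=5)  # benzodiazepine-like: more openings
print(baseline, longer, frequent)  # 0.05 0.1 0.1
```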

To return to the three ACh toxins described earlier: in each case, the result is the cessation of neural transmission in the nerves supplying the skeletal musculature, including the muscles used in breathing. The person cannot breathe, and will die of suffocation unless he or she is artificially respirated until the toxin is metabolized and washes out of the system. Thus, skeletal paralysis, and death, is the final common pathway uniting the three toxic effects.

Technically, the terms excitatory and inhibitory apply to the receptors on the postsynaptic neuron, rather than to the neurotransmitters themselves -- which is why some neurotransmitters can have both excitatory and inhibitory functions. But, at our level, it is convenient to think of the neurotransmitters themselves as being excitatory, or inhibitory, or both.

In the lock-and-key model of neurotransmitter function, each neurotransmitter has a particular molecular structure, or shape, which fits only certain receptor molecules. The neurotransmitter is like a key, and the receptor is like a lock. Only if the neurotransmitter fits into a particular receptor can it excite or inhibit the postsynaptic neuron. Some drugs used in the treatment of schizophrenia work by binding themselves to certain receptors, taking the place of certain neurotransmitters, and thus effectively jamming the lock in the lock-and-key system, and preventing the neurotransmitter from functioning properly. So-called designer drugs are deliberately structured to take the place of particular neurotransmitters.
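The lock-and-key idea, together with the point that excitation and inhibition belong to the receptor rather than to the transmitter, can be sketched as a small data structure. The `Receptor` class and its `fits` sets are hypothetical simplifications; the pairings follow the examples in this section (ACh excites the voluntary muscles but inhibits the heart, and curare blocks ACh at the muscle).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Receptor:
    """A 'lock' on the target cell. Which molecules fit is a hypothetical simplification."""
    fits: set                       # molecules whose shape fits this lock
    effect: str                     # what turning the lock does: "excitatory" or "inhibitory"
    occupant: Optional[str] = None  # a blocking drug currently jammed in the lock

    def bind(self, molecule: str, activates: bool = True) -> Optional[str]:
        """Offer a molecule to the receptor; return the resulting effect, or None."""
        if molecule not in self.fits or self.occupant is not None:
            return None               # wrong key, or the lock is already jammed
        if not activates:
            self.occupant = molecule  # fits the lock but cannot turn it
            return None
        return self.effect


# The same key (ACh) produces opposite effects in different locks.
muscle = Receptor(fits={"ACh", "curare"}, effect="excitatory")
heart = Receptor(fits={"ACh"}, effect="inhibitory")
print(muscle.bind("ACh"), heart.bind("ACh"))  # excitatory inhibitory
print(muscle.bind("glutamate"))               # None: wrong shape
muscle.bind("curare", activates=False)        # curare jams the muscle's lock
print(muscle.bind("ACh"))                     # None: ACh can no longer get in
```

Modeling the blocking drug as an occupant, rather than as a key with a "negative" effect, mirrors the text's description of drugs that jam the lock and so keep the real key out.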

There are many different kinds of neurotransmitters, each with a particular chemical structure and range of action. You don't have to memorize all these neurotransmitters -- this is a course in