Memory

Learning and perception both presuppose a capacity for memory.

- Learning is a relatively permanent change in behavior resulting from experience, and memory records both the experience and the new behavior.

- Perception combines information extracted from the stimulus environment (features and patterns that provide the stimulus basis for perception) with information retrieved from memory (knowledge and beliefs about the world that provide the cognitive basis for perception).

Perceptual activity, in turn, changes the contents of memory: In a very real sense, memory is a byproduct of perception. The records of perceptual activity, known as memory traces or engrams, influence behavior long after the perceptual stimulus has disappeared.

Thus, in order to understand learning and perception we have to understand how knowledge is encoded, stored, and retrieved in the information-processing system we call the human mind.

Memory consists of mental representations of knowledge, stored in the mind. This knowledge comes in a variety of forms:

- facts (e.g., whether quartz is harder than granite; when Martin Luther nailed 95 theses to the church door; your dormitory telephone number);

- language (e.g., the meaning of the word "obstreperous"; how to indicate that something was done without taking personal responsibility for it; whether the sentence, "The stole girl poor a coat warm" is grammatically correct);

- rules (e.g., how to take the square root of a number; the best route from the Union to Tolman Hall; how to tie a half-hitch knot);

- personal experiences (e.g., what you had for dinner last Friday night; the last time you behaved irresponsibly; the last number you looked up in a telephone directory).

In answering these questions, we must consult our memories. But not all questions are of the same kind, which raises the issue of whether there are different kinds of memory.

The Multistore Model of Memory

One distinction among memories is temporal -- how long the memory lasts. This distinction is based on the intuition that remembering an unfamiliar telephone number that you've just looked up is somehow different from remembering your own telephone number. One memory is permanent; the other is gone almost instantly.

Until recently, it was common to distinguish among three different types of memory -- three different storage structures, which held information temporarily or permanently. This idea is known as the multistore model of memory. The multistore model was originally proposed by Waugh & Norman (1965) and Atkinson & Shiffrin (1968), based on a computer metaphor of the mind -- although its roots go back to William James (1890). All versions of the multistore model posit the existence of a number of different storage structures (hence the name), as well as a set of control processes which move information between them, transforming it along the way.


- Incoming sensory information is initially held in the sensory registers.

- By means of attention, some information is transferred to short-term memory.

- Information is maintained in short-term memory by means of rehearsal.

- If the information in short-term memory receives enough rehearsal, it is copied into long-term memory by means of certain encoding processes.

- Information can be transferred from long-term memory back to short-term memory by means of certain retrieval processes.

- Information in long-term memory is also used to support pattern-recognition processes in the sensory registers.
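The flow of information just described can be sketched as a small program, with each storage structure as a container and each control process as a method. This is only a toy illustration under stated assumptions: the short-term capacity of 7, the rule that the oldest item is displaced, and the threshold of three rehearsals for encoding are hypothetical values chosen for the example, and the use of long-term knowledge in pattern recognition is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class MultistoreMemory:
    """Toy sketch of the multistore model's stores and control processes.

    The capacity of 7 and the 3-rehearsal encoding threshold are
    illustrative assumptions, not parameters of the original model.
    """
    stm_capacity: int = 7
    rehearsals_to_encode: int = 3
    sensory: list = field(default_factory=list)    # sensory registers
    stm: list = field(default_factory=list)        # short-term (primary) memory
    rehearsals: dict = field(default_factory=dict)  # rehearsal count per STM item
    ltm: set = field(default_factory=set)          # long-term (secondary) memory

    def _enter_stm(self, item):
        """Copy an item into STM, displacing the oldest item if STM is full."""
        if item in self.stm:
            return
        if len(self.stm) >= self.stm_capacity:
            displaced = self.stm.pop(0)
            self.rehearsals.pop(displaced, None)
        self.stm.append(item)
        self.rehearsals[item] = 0

    def register(self, stimuli):
        """Sensory registers: a complete copy of the input, held only briefly."""
        self.sensory = list(stimuli)

    def attend(self, selected):
        """Attention: copy the selected items from the registers into STM."""
        for item in self.sensory:
            if item in selected:
                self._enter_stm(item)
        self.sensory = []  # whatever was not attended is lost from the registers

    def rehearse(self):
        """Rehearsal maintains STM items; enough of it encodes them into LTM."""
        for item in self.stm:
            self.rehearsals[item] += 1
            if self.rehearsals[item] >= self.rehearsals_to_encode:
                self.ltm.add(item)

    def retrieve(self, cue):
        """Retrieval copies an item from LTM back into STM; True if it is now in STM."""
        if cue in self.ltm:
            self._enter_stm(cue)
        return cue in self.stm


memory = MultistoreMemory()
memory.register(["cat", "dog", "fox"])
memory.attend({"cat"})
for _ in range(3):
    memory.rehearse()
memory.stm.clear()               # e.g., after a distracting task
print(memory.retrieve("cat"))    # True: recovered from long-term memory
print(memory.retrieve("dog"))    # False: never attended, so never stored
```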

The multistore model of memory quickly became so popular that it became known as the "modal model" of memory, meaning that it was the view most frequently adopted by memory theorists. However, the precise vocabulary of the multistore model varied from theorist to theorist.

- Some theorists talked about sensory registers while others talked about sensory stores or sensory memories.

- Some theorists used terms like short-term store and long-term store instead of short- and long-term memory.

- Other theorists, adopting terms introduced by William James, referred to short-term memory as primary memory and long-term memory as secondary memory. I prefer this usage myself.

- Still others preferred to talk about working memory rather than short-term memory.

Don't get hung up on these terminological differences. That said, there is one substantive difference between the traditional concept of primary memory and the concept of working memory, which we'll discuss later.

The Sensory Registers

The sensory registers contain a complete, veridical representation of sensory input. They are of unlimited capacity, and are able to hold all the information that is presented to them. According to the model, there is one sensory register for each sensory modality. Two of the sensory registers have been given special names: for vision, the icon; for audition, the echo. The sensory registers store information in precategorical form -- that is, the input is not yet processed for meaning. Moreover, information is held in the sensory registers for only a brief period of time, perhaps less than a second. Information is lost from the sensory registers through decay or displacement; it cannot be maintained by any kind of cognitive activity.

The characteristics of the sensory registers were demonstrated in a classic experiment on iconic memory by George Sperling (1960). Sperling presented his subjects with a visual display consisting of a 3x4 array of letters; the array was displayed only very briefly. Then, after a delay of between 0 and 1 second following the offset of the display, they were asked to report the contents of the array.

- In the whole report condition, the subjects were instructed to report all the letters in the array.

- In the partial report condition, they were presented with a tone of low, medium, or high pitch which cued them to report only the letters in the lower, middle, or upper row.

- When subjects were instructed to report the whole array, they didn't do very well -- they were only able to remember 4-5 of the items, on average.

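- But when subjects were cued immediately to report only a portion of the array, they were able to do this almost perfectly. Because the cued row was chosen at random and signaled only at or after the offset of the display, performance on that row could be treated as a sample of what was available from the whole array; on this basis, Sperling estimated that at least 10 of the 12 items in the array were available.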

- But the longer the partial-report cue was delayed, the worse the subjects did. If the partial-report cue was delayed by as little as a second, performance in the partial-report condition dropped to the levels observed in the whole-report condition.

From this, Sperling concluded that the icon contained a veridical representation of the stimulus array, but that this representation decayed very rapidly, within about a second.
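Sperling's estimate rests on a simple piece of arithmetic. Because subjects could not know in advance which row would be cued, the proportion of letters reported from the cued row can be treated as the proportion available from every row. A minimal sketch, in which the function name and the per-row score in the example are hypothetical rather than Sperling's actual data:

```python
def letters_available(rows, cols, mean_correct_in_cued_row):
    """Partial-report estimate of how many letters were available in the icon.

    The cued row is chosen at random, so the proportion correct in that row is
    taken as a sample of the proportion available across the whole array.
    """
    if not 0 <= mean_correct_in_cued_row <= cols:
        raise ValueError("a row cannot yield more letters than it contains")
    proportion_available = mean_correct_in_cued_row / cols
    return proportion_available * rows * cols


# A 3x4 array with a hypothetical score of 10/3 letters per cued row
# corresponds to about 10 of the 12 letters being available.
print(round(letters_available(3, 4, 10 / 3), 2))
```

Note the contrast with whole report: there the subject's output is capped by how many letters can be named before the icon fades, whereas partial report only asks for one row, so it measures availability rather than reporting capacity.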

Similar experiments were done on echoic memory, with similar results, leading to similar conclusions.

In a critique of the concept of iconic memory, Ralph Haber (1983) suggested that it might only be useful for reading a book in a lightning storm! Still, something like the sensory registers must exist, as an almost logically necessary link between sensation and memory. Iconic memory might be especially useful to nonhuman animals, as it would help both predators and prey to catch a glimpse of each other "out of the corner of their eye". And echoic memory might be even more useful as a support for speech perception, where individual sounds go by very fast, and have to be captured to link with other sounds.

Primary (Short-Term) Memory

Information is transferred from the sensory registers to primary (or short-term) memory after it has received some degree of processing -- that is, after it has been subject to feature detection, pattern recognition, and directed attention. Typically, primary memory is thought of as storing an acoustic representation of the information -- that is, what the name or description of an object or event sounds like. Primary memory has a limited capacity: it can contain only about 7 (plus or minus 2) items (though the effective capacity of this store can be increased by "chunking" items together -- the capacity of memory is approximately 7 "chunks", rather than 7 items). Information can be maintained in primary memory indefinitely by means of rehearsal. If it is not rehearsed, the information either decays over time, or (more likely) is displaced by new information.

The capacity of primary memory is often demonstrated by the digit-span test, in which subjects are read a list of digits (or letters), and must repeat them back to the experimenter. Most people do pretty well with lists up to 6 or 7 digits in length, but start to do poorly with lists that are longer than that.
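The logic of the digit-span test can be sketched with an idealized primary memory that holds a fixed number of items and displaces the oldest one whenever a new item arrives. The capacity of 7 and the displace-the-oldest rule are assumptions made for illustration; real performance falls off gradually rather than all at once, and real errors are not confined to the first items in the list.

```python
from collections import deque


def recall(items, capacity=7):
    """Idealized primary memory: a buffer holding at most `capacity` items.

    A deque with maxlen drops its oldest item when a new one is appended to a
    full buffer, which mimics displacement.
    """
    buffer = deque(maxlen=capacity)
    for item in items:
        buffer.append(item)
    return list(buffer)


def digit_span(capacity=7, longest_list=12):
    """Longest list repeated back perfectly, testing lengths 1, 2, 3, ..."""
    span = 0
    for length in range(1, longest_list + 1):
        digits = [str(i % 10) for i in range(length)]
        if recall(digits, capacity) != digits:
            break
        span = length
    return span


print(digit_span())                 # 7
print(recall(list("123456789")))    # ['3', '4', '5', '6', '7', '8', '9']
```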

But the capacity of primary memory can be increased by chunking -- by grouping items together in some meaningful way. The "Alphabet Song" from Hair ("The American Tribal Love-Rock Musical") capitalizes on chunking.

In a famous paper, George Miller (1956) characterized the capacity of short-term memory as "The magical number seven, plus or minus two". That is, we can hold about 7 items in short-term memory at a time; if anything new is to enter short-term memory, something has to go out to make room for it. It was this kind of research, at Bell Laboratories, that fixed the length of telephone numbers at 7 digits -- a 3-digit exchange plus a 4-digit number.

There's a story here. Miller made the capacity of short-term memory famous, but -- as he acknowledged -- it wasn't his discovery. Credit for that goes to John E. Karlin (1918-2013), a psychologist and human-factors researcher who spent his career at Bell Laboratories, the research arm of AT&T. Prior to the 1940s, telephone exchanges typically began with the first two letters of a familiar word, followed by 5 digits -- as in the Glenn Miller tune "Pennsylvania 6-5000" (the actual telephone number of the Pennsylvania Hotel, often claimed as the oldest telephone number in New York City), or the film Butterfield 8 (after an exchange serving the upscale Upper East Side of Manhattan). But the telephone company (there was only one at the time!) worried about running out of phone numbers, and so switched to an all-digit system. This raised the question of how many digits people could hold in memory. Karlin did the study, and determined that the optimal number was 7. (Karlin also determined the optimal layout for the letters and numbers on the rotary, and then the touch-pad telephone -- reversing the conventional placement on a hand calculator or computer keyboard -- but that's another story.)

However, as Miller also noted, the effective capacity of short-term memory can be increased by chunking. If you group the information into chunks, and the chunks into "chunks of chunks", you can actually hold quite a bit of information in short-term memory. For example, when area codes were added to telephone numbers, they were assigned according to geographical area, so that people wouldn't have to remember all 3 digits of the area code as separate items, which would have strained the capacity of short-term memory.
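Chunking can be illustrated by counting units rather than digits. In this sketch, which assumes a capacity of 7 units and uses a made-up telephone number, a 10-digit number exceeds the capacity when each digit is a separate item, but fits easily once it is grouped into an area code, an exchange, and a line number.

```python
CAPACITY = 7  # Miller's "seven, plus or minus two", counted in chunks


def chunk(digits, sizes):
    """Split a digit string into consecutive groups of the given sizes."""
    if sum(sizes) != len(digits):
        raise ValueError("chunk sizes must account for every digit")
    groups, start = [], 0
    for size in sizes:
        groups.append(digits[start:start + size])
        start += size
    return groups


def fits_in_primary_memory(units, capacity=CAPACITY):
    """True if the number of units (items or chunks) is within capacity."""
    return len(units) <= capacity


number = "5105551234"                  # hypothetical 10-digit number
as_digits = list(number)               # ten separate items
as_chunks = chunk(number, [3, 3, 4])   # area code, exchange, line number

print(fits_in_primary_memory(as_digits))  # False: 10 items
print(fits_in_primary_memory(as_chunks))  # True: 3 chunks
print(as_chunks)                          # ['510', '555', '1234']
```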

Secondary (Long-Term) Memory

If it receives enough rehearsal, information may be transferred to secondary (or long-term) memory, the permanent repository of stored knowledge. The capacity of secondary memory is essentially unlimited. Information is not lost from this structure, either through decay or through displacement.

The Serial-Position Curve. Support for a structural distinction between primary and secondary memory is provided by the serial-position curve. Consider a form of memory experiment known as single-trial free recall: the experimenter presents a list of items for a single study trial, and then the subject simply must recall the items that he or she studied. If we plot the probability of recalling each item against its position in the study list, we typically observe a bowed curve: items in the early and late portions of the list are more likely to be recalled than those in the middle.


Here are selected serial-position curves generated by students taking Psychology 1 who completed the "Serial Position" exercise on the ZAPS website. The values for the "Reference" group were generated from all the students, from all classes all over the world, who completed the exercise that year.

The enhanced memorability of items appearing early in the list is called the primacy effect. The enhanced memorability of items appearing late in the list is called the recency effect. The primacy and recency effects are affected by different sorts of variables.

Increasing the interval between adjacent items, and thus increasing the amount of rehearsal each item can receive, increases primacy but has no effect on recency.

Engaging the subject in a distracting task immediately after presentation of the list, and then increasing the length of the retention interval, reduces recency but has no effect on primacy.

Therefore, it may be concluded that the primacy effect reflects retrieval from secondary memory, while the recency effect reflects retrieval from primary memory.
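The dissociation between primacy and recency can be reproduced with a toy rehearsal-buffer model, loosely in the spirit of the multistore model. Everything quantitative here is an assumption for illustration: a buffer of 4 items standing in for primary memory, displacement of the oldest item, rehearsals shared equally among the items in the buffer, and a probability of retrieval from secondary memory equal to 1 - exp(-rehearsals). Items still in the buffer at test are reported perfectly unless a distracting task has emptied it.

```python
import math
from collections import deque


def serial_position_curve(n_items=12, buffer_size=4, rehearsals_per_item=1.0,
                          distractor=False):
    """Toy rehearsal-buffer model of single-trial free recall.

    Illustrative assumptions only: the buffer (primary memory) holds
    `buffer_size` items and a new item displaces the oldest; during each
    presentation, `rehearsals_per_item` rehearsals are shared equally among
    the buffered items; P(recall from secondary memory) = 1 - exp(-rehearsals).
    """
    strength = [0.0] * n_items
    buffer = deque(maxlen=buffer_size)
    for item in range(n_items):
        buffer.append(item)  # a full deque drops its oldest item automatically
        share = rehearsals_per_item / len(buffer)
        for held in buffer:
            strength[held] += share

    curve = []
    for item in range(n_items):
        if item in buffer and not distractor:
            curve.append(1.0)  # still in primary memory: reported at once
        else:
            curve.append(1.0 - math.exp(-strength[item]))  # secondary memory
    return curve


immediate = serial_position_curve()
delayed = serial_position_curve(distractor=True)       # filled retention interval
slower = serial_position_curve(rehearsals_per_item=2.0)  # longer inter-item interval
print([round(p, 2) for p in immediate])
```

In this sketch the early positions of `immediate` are elevated (the first items share the buffer with fewer competitors, so they receive more rehearsal) and the last four positions are at ceiling. The distractor removes the recency effect while leaving the early positions unchanged, and doubling the rehearsals raises the early positions without changing the last four, which is the pattern the conclusion above rests on.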

Neuropsychological evidence also supports a structural distinction between primary (short-term) and secondary (long-term) memory. For example, Patient H.M., who suffered from the amnesic syndrome following the surgical removal of his hippocampus and related structures in the medial portion of his temporal lobes, had normal digit-span performance, but impaired free recall. Apparently, he had normal short-term memory, but impaired long-term memory. In contrast, another patient, known as K.F. (Shallice & Warrington, 1970), who had lesions in his left parieto-occipital area, had grossly impaired digit-span performance, but normal free recall of even 10-item lists. The fact that damage to two different parts of the brain results in different patterns of performance on short- and long-term memory tests supports a structural distinction between the two kinds of memory.

Primary Memory and Working Memory

Nevertheless, most memory theorists no longer think in terms of a structural distinction between primary and secondary memory (and, as indicated by Ralph Haber's comment cited above, there is also a debate about the sensory registers). For example, consider Patient K.F., just cited as a contrast to Patient H.M. K.F. had poor short-term memory but normal long-term memory. But according to the multistore model of memory, short-term memory is the pathway to long-term memory. This implies that you can't have long-term memory if you don't have short-term memory. Yet K.F. had long-term memory, despite the fact that he apparently lacked short-term memory.

If you consider primary and secondary memory to be separate stores, you need a way for primary and secondary memory to interact. Accordingly, Alan Baddeley (1974, 1986, 2000) introduced the notion of working memory, which consists of several elements:

- a phonological loop for auditory rehearsal (like repeating a telephone number over and over);

- a visuo-spatial sketchpad that recycles visual images of a stimulus;

- a central executive that controls conscious information-processing; and

- an episodic buffer that connects the phonological loop and visuo-spatial sketchpad to secondary memory.

While Baddeley's view implies that working memory is a separate store from secondary memory (which is why the episodic buffer is needed to link them), John Anderson has proposed an alternative "unistore" conception, in which working memory is simply that subset of declarative memory which contains activated representations of:

- the organism

- in its current environment,

- its currently active processing goals, and

- pre-existing declarative knowledge activated by perceptual inputs or the operations of various thought processes.

When these four types of representations are linked together, an episodic memory is formed.

Many investigators have adopted Baddeley's multistore view of working memory, but there is also evidence favoring Anderson's unistore view. For the record, I tend to side with Anderson, but my principal focus in these lectures is on secondary memory, so nothing much rides on it.

Another option is to think of memory as consisting of a single, unitary store. The modal model of memory implies that primary (short-term) and secondary (long-term) memory are separate "mental storehouses". The alternative view is that primary (or working) memory consists of those elements of secondary memory that are currently in a state of activation, or currently being used by the cognitive system (Crowder, 1993; Cowan, 1995).
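The unistore alternative can be sketched in a few lines: there is only one store, and "working memory" is simply a query for whichever of its representations are currently active, so nothing is ever copied from one store to another. The activation values, the threshold of 0.5, the decay rate, and the item names are hypothetical numbers and labels chosen for the example; the models of Anderson, Crowder, and Cowan are considerably richer than this.

```python
def working_memory(activation, threshold=0.5):
    """Unistore sketch: working memory is the currently active part of
    long-term memory, not a separate store that items are copied into."""
    return {item for item, level in activation.items() if level >= threshold}


def decay(activation, rate=0.5):
    """Activation fades unless refreshed (the rate is a hypothetical value)."""
    return {item: level * rate for item, level in activation.items()}


def activate(activation, item, boost=1.0):
    """Perception or thought (re)activates a representation in the single store."""
    updated = dict(activation)
    updated[item] = updated.get(item, 0.0) + boost
    return updated


long_term = {"own phone number": 0.1, "number just looked up": 0.9, "current goal": 0.8}
print(sorted(working_memory(long_term)))   # ['current goal', 'number just looked up']
faded = decay(long_term)
print(sorted(working_memory(faded)))       # []
print(sorted(working_memory(activate(faded, "own phone number"))))  # ['own phone number']
```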

These lectures are focused mostly on long-term, secondary memory.

Attention: Linking Perception and Memory

James distinguished between primary (short-term) and secondary (long-term) memory in terms of attention. Primary memory is the memory we have of an object once it has disappeared, but while we are still paying attention to our image of it. Secondary memory is the memory we have of an object once we have stopped paying attention to our image of it. The distinction may be illustrated with memory for telephone numbers:

- When someone you meet gives you their telephone number, and you repeat it to yourself until you can write it down, that's primary memory.

- When you've lost the piece of paper on which you've written that number, but you remember it anyway, that's secondary memory.

When psychologists talk about "secondary" or "long-term" memory, they mean whatever trace remains of a stimulus that has disappeared, after 30 seconds of distraction. So it's clear that attention links perception and memory. But what is attention?

"Every one knows what attention is", William James famously wrote in the Principles of Psychology (1890/1980, pp. 403-404):

It is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought. Focalization, concentration, of consciousness are of its essence. It implies withdrawal from some things in order to deal effectively with others, and is a condition which has a real opposite in the confused, dazed, scatterbrained state which in French is called distraction, and Zerstreutheit in German.

James went on to distinguish between different varieties of attention: to the objects of sensation in the external world, or to thoughts and other products of the mind; immediate, when the object of attention is intrinsically interesting, or derived, where the object is of interest by virtue of its relation to something else; passively drawn, as if by an involuntary reflex, or actively paid, by a deliberate and voluntary act.

James's description has never been bettered, but before World War II there was little scientific literature on the topic. Partly this was due to the influence of the structuralists, like Titchener, who took attention as a given: you can't introspect without paying attention. R.S. Woodworth, inclined more toward functionalism, offered a common-sense theory of how one stimulus was selected for attention over another, and later worked on the problem of the span of apprehension, or the question of how many objects we can perceive in a single glance. But after World War I attention proved too mentalistic a concept for the behaviorists who followed John B. Watson, and the word pretty much dropped out of the vocabulary of psychologists. Accordingly, it took more than half a century before psychologists could begin to figure out how attention really works.

The Attentional Bottleneck

One of the landmark events of the cognitive revolution within psychology was the development of theories of the human mind expressly modeled on the high-speed computer. According to these theories, human thought is characterized as information processing. Schematically, these theories of human information processing can be represented as flowcharts, with boxes representing structures for storing information, and arrows representing the processes which move information from one storage structure to another. Attention is one of these processes, and it determines which information will become conscious and which will not.

One of the first models of this type was proposed by Donald Broadbent, a British psychologist. Information arriving at the sensory receptors is first held in a short-term store, from which it passes through a selective filter into a limited-capacity processor that compares sensory information with information already present in a long-term store. Depending on the results of this comparison, the newly arrived sensory information may itself be deposited in the long-term store, and it may be used to generate some response executed through the body's systems of muscles and glands. The limited-capacity processor is tantamount to consciousness; thus, attention is the pathway to awareness. Preattentive processing is unconscious processing: So how much preattentive processing is there? According to Broadbent, not very much. According to his theory, preattentive processing is limited to a very narrow range of physical properties, such as the spatial location and physical features of the stimulus.

Broadbent based this conclusion on experiments conducted by Colin Cherry on what Cherry called "the cocktail-party phenomenon". When you're at a cocktail party, there are lots of conversations going on, but you can only pay attention to one of these at a time. Attentional selection is accomplished by virtue of spatial and physical processing: you look at the person you're talking to, and if you should happen to look away for a moment (for example, to ask a waiter to refresh your drink), you maintain the conversation by staying focused on the sound of the other person's voice. Of course, the moment you look away, your attention is distracted from the conversation, and you're likely to miss something that's been said. Attention is drawn and focused on physical grounds; analysis of meaning comes later.

Cherry simulated the cocktail-party phenomenon in an experimental paradigm known as dichotic listening or shadowing. In a dichotic listening experiment, the subject is asked to repeat (or shadow) a message presented over one of a pair of earphones (or speakers), while ignoring a competing message presented over the other device. Normal subjects can do this successfully, but their ability to repeat the target passage comes at some expense. While they are able to remember much of what was presented over the attended channel, they pretty much forget whatever was presented over the unattended channel. Moreover, while they generally noticed when the voice on the unattended channel switched from male to female, they failed to notice when it switched from one language to another, or from forwards to backwards speech.

The dichotic listening experiment simultaneously reveals the limited capacity of attention and the basis on which attentional selection occurs. Just as we can only pay attention to one conversation at a time, so we can only process information from one channel at a time. And just as we pay attention to our companions by looking at them, so we discriminate between attended and unattended channels on the basis of their spatial location or other physical features. Physical analysis comes first, preattentively; analysis of meaning comes later, post-attentively. The implication of the filter model is that while certain aspects of physical structure can be (and probably must be) analyzed unconsciously, meaning analysis must be performed consciously.
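The filter model's division of labor can be sketched as follows: selection looks only at a physical property of each channel (which ear it arrives at), the words on the attended channel go on to analysis of meaning, and only physical features, such as the speaker's voice, are registered from the other channel. The `Channel` class, its fields, and the example words are hypothetical illustrations, not part of Broadbent's formulation.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    ear: str      # spatial location: "left" or "right"
    voice: str    # physical feature, e.g. "male" or "female"
    words: list   # content; its meaning is analyzed only after the filter


def filter_model(channels, attended_ear):
    """Broadbent-style early selection (toy sketch).

    The filter examines only a physical property (which ear). Words on the
    attended channel reach the limited-capacity processor for meaning
    analysis; from the other channels, only physical features are kept.
    """
    meaning, physical_only = [], []
    for channel in channels:
        if channel.ear == attended_ear:
            meaning.extend(channel.words)
        else:
            physical_only.append(channel.voice)
    return meaning, physical_only


left = Channel("left", "male", ["the", "cat", "sat"])
right = Channel("right", "female", ["your", "name", "here"])
print(filter_model([left, right], attended_ear="left"))
# (['the', 'cat', 'sat'], ['female'])
```

Because nothing about the content of the unattended channel gets past the filter, this model has no way to notice a meaningful event there, which is exactly the prediction the next section puts to the test.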

A Cocktail-Party Symphony

A movement of Symphony #5 ("Solo", 1999) by the American composer Libby Larsen is subtitled "The cocktail party effect". It was inspired by Cherry's experiments on dichotic listening, and refers to our ability to isolate and pay attention to a single voice among the extraneous noise one encounters at a crowded cocktail party -- or, in this case, a single instrumental line against the background of a full orchestra. "It's a kind of musical 'Where's Waldo?'" says Larsen. "In this case, Waldo is a melody, introduced at the beginning of the movement, from then on hidden amidst the other music."

Attention in the Multistore Model of Memory

Other information-processing theorists, approaching the problem from a different angle, came to much the same conclusions regarding the limitations on preattentive information processing. Thus, while Cherry and Broadbent were analyzing the properties of attention, other investigators, such as Nancy Waugh and Donald Norman, and Richard Atkinson (later President of the University of California) and Richard Shiffrin (they were both professors at Stanford at the time), were studying the properties of the storage structures involved in human memory. According to the multistore model of memory, sensory information first encounters a set of sensory registers, which hold, for a very brief period of time, a complete and veridical representation of the sensory field. In principle, there is one sensory register for each modality of sensation: Neisser called the visual register the icon and the auditory one the echo. The sensory registers hold vast amounts of information, but we are only aware of that subset of stored information which has been attended to and copied into another storage structure, known as primary or short-term memory. With further processing, information held in primary memory can be encoded in a permanent repository of knowledge known as secondary or long-term memory. In the absence of further processing, the contents of primary memory decay or are displaced by new material extracted from the sensory registers or retrieved from secondary memory.


Primary memory, so named by William James, is the seat of consciousness in the multistore model, combining information extracted through sensory processes with prior knowledge retrieved from secondary or long-term memory. Like that of the central processor in Broadbent's model, the capacity of primary memory is severely limited: it was famously characterized by George Miller in terms of "The magical number seven, plus or minus two" items. Of course, as Miller pointed out, the capacity of primary memory can be effectively increased by "chunking", or grouping related items together. Thus, consciousness is limited to about seven "chunks" of information, far less than is available in either the sensory registers or secondary memory. Unattended information held in the sensory registers, or residing in latent form in secondary memory, may be considered preconscious -- available to consciousness, under certain conditions, but not immediately present within its scope.

Atkinson and Shiffrin focused on memory structures, but the implications of their model for attention and consciousness are the same as those of Broadbent's. Attention selects information from the sensory registers for further processing in primary memory, but the information in the sensory registers is represented in "pre-categorical" form. It has been analyzed in terms of certain elementary physical features, such as horizontal or vertical lines, angles and curves, and the like -- a process known as feature detection. In addition, certain patterns or combinations of features have been recognized as familiar -- a process known as pattern recognition. But that is about all. For the most part, the multistore model holds that the analysis of meaning takes place in primary memory, which has a direct connection to the storehouse of knowledge comprising secondary or long-term memory.

Thus, in the multistore model of memory, attention selects sensory information based on its physical and spatial properties -- where it is and what it looks and sounds like. It does not select information based on its meaning -- indeed, it cannot do so, because meaning analyses are performed after attentional selection has taken place, and the information has been copied into primary memory. At least so far as perception is concerned, preattentive processes are limited to physical and spatial analyses, and preconscious representations contain information about the spatial and physical features of objects and events. Like the filter model, then, the classic multistore model of memory permits certain physical analyses to transpire unconsciously, but requires that meaning analyses be performed consciously.

Early vs. Late Selection

But wait: that can't be the case, because attention, and therefore consciousness, is sometimes drawn to objects and events on the basis of their meaning, not just their spatial location or their physical features. At a cocktail party, we do shift our attention if we should happen to hear someone mention our own name -- a shift that would seem to require attentional selection based on meaning, not just sound. As it happens, even in Cherry's experiment, subjects shifted attention in response to the presentation of their own names on about 35% of the trials. Neville Moray confirmed this observation. In his shadowing experiment, the phrase "you may stop now" was presented, without warning, over the unattended channel. Subjects noticed this about 6% of the time, but when the phrase was preceded by their names, the rate went up to 33%.

Anne Treisman (who taught at Berkeley for several years) performed a dichotic listening experiment in which the shadowed message shifted back and forth between the subject's ears. Treisman observed that, on about 30% of trials, her subjects followed the message from one ear to the other. When they realized that they had made a mistake, the subjects shifted their attention back to the original ear. However, the fact that the shift occurred at all indicates that there must be some preattentive processing of the meaning of an event, beyond its spatial and physical structure.

Treisman's observations led her to modify Broadbent's theory. In her proposal, attention is not an absolute filter, but rather merely attenuates processing of the unattended channel. This attenuator can be tuned to demands arising from either the person's goals or the immediate context. Thus, at a cocktail party, if you are being a good guest and behaving yourself properly, you pay most attention to the person who is presently talking to you, with attentional selection controlled by physical features such as the person's location and tone of voice. However, you are also on the alert for people talking about you, so that attentional selection is open to particular semantic features as well. Put more broadly, attention is not determined solely by the physical attributes of a stimulus. Rather, it can be deployed depending on a perceiver's expectancies and goals.
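Treisman's attenuator can be caricatured in a few lines. The sketch below is a toy model built on our own assumptions and is not Treisman's formal proposal. Each channel's signal is multiplied by a gain: full strength for the attended channel, a reduced value for the unattended one. A word reaches awareness if its attenuated strength meets a recognition threshold. Following the cocktail-party example, we assume that a personally significant word (here the listener's own name, a hypothetical "Pat") has a lowered threshold. All constants and names (`UNATTENDED_GAIN`, `THRESHOLDS`, `noticed`) are hypothetical.

```python
# Toy attenuation model; all parameters are hypothetical.
ATTENDED_GAIN = 1.0     # attended channel passes at full strength
UNATTENDED_GAIN = 0.3   # unattended channel is weakened, not blocked
DEFAULT_THRESHOLD = 0.5

# Assumption: personally significant words (here, a listener named "Pat")
# need less input to be recognized than ordinary words.
THRESHOLDS = {"pat": 0.2}


def noticed(word: str, attended: bool, signal: float = 1.0,
            unattended_gain: float = UNATTENDED_GAIN) -> bool:
    """Return True if the word's attenuated strength reaches its threshold."""
    gain = ATTENDED_GAIN if attended else unattended_gain
    threshold = THRESHOLDS.get(word.lower(), DEFAULT_THRESHOLD)
    return signal * gain >= threshold


print(noticed("stop", attended=True))    # True: attended word
print(noticed("stop", attended=False))   # False: ordinary unattended word
print(noticed("Pat", attended=False))    # True: own name on unattended channel
print(noticed("Pat", attended=False, unattended_gain=0.0))  # False: strict filter
```

Setting `unattended_gain=0.0` turns the attenuator back into Broadbent's all-or-none filter. In that case nothing on the unattended channel is noticed, however low its threshold. This deterministic toy gives all-or-none outcomes, whereas the observed effects (such as Moray's 33%) are rates across trials.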

Unlike Broadbent's original model, Treisman's approach was a truly cognitive theory of attention. It departed from a focus on "bottom-up" processing, driven by the physical features of stimulation, and afforded a clear role for "top-down" selection based on semantic attributes. Because attentional selection can be based on meaning, the model implies that preattentive processing is not limited to the analysis of the physical structure and other perceptual attributes of a stimulus. Semantic processing can also occur preattentively. But how much semantic processing can take place?

This question set the stage for a long-running debate between early- and late-selection theories of attention. According to early-selection theories, like Broadbent's original proposal, attentional selection occurs relatively early in the information-processing sequence, before meaning analysis can occur. According to late-selection theories, such as those foreshadowed by Treisman, attentional selection occurs relatively late in information processing, after some semantic analysis has taken place. The question is an important one, because the answer determines the limits of preattentive or preconscious processing.

Late-selection theories of attention abandon the notion of an attentional filter or attenuator entirely. According to the most radical of these, proposed by J. Anthony Deutsch and Diana Deutsch, and by Donald Norman, all inputs are analyzed fully preattentively, and attention is deployed depending on the pertinence of information to ongoing tasks. In this view, attention is required for the selection of a response to stimulation, but not for the selection of inputs to primary memory. For this reason, late-selection theories are also called response-selection theories. They imply that a great deal of semantic processing takes place preattentively, greatly expanding the possibilities for preconscious processing. It should be understood that late-selection theories do not hold that literally every physical stimulus in the person's sensory field is analyzed preattentively for meaning. They simply hold that meaning analysis is preattentive, just as physical analysis is. Whatever gets processed gets processed semantically as well as physically, at least to some extent.

The primary difference between early- and late-selection theories of attention is illustrated in this figure, adapted from Pashler (1998). The left side of the figure represents four stimulus events competing for attention. The early stages of information processing provide physical descriptions of these stimuli, followed by identification and, at late stages of information processing, semantic description, and finally some response. According to early-selection theories, depicted in Panel A, preattentive processing is limited to physical description; all available stimuli are processed at this stage, but identification, semantic description, and response are limited to the single stimulus selected on the basis of the physical descriptions composed at the early stages. According to late-selection theories, depicted in Panel B, all available stimuli are also identified and processed for meaning preattentively; attention and consciousness are required only for response. In some respects, identification is the crux of the difference between early- and late-selection theories: early-selection theories hold that conscious awareness precedes stimulus identification, while late-selection theories hold that identification precedes consciousness.
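The contrast between Panels A and B can be restated as one processing pipeline in which only the position of the selection step differs. This is a schematic sketch of our own, not code from Pashler (1998). The four stimulus names, the stage functions, and the selection rule in `choose` (pick the most intense stimulus) are hypothetical simplifications. Identification and semantic description are collapsed into a single step.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    name: str         # hypothetical label for a stimulus event
    intensity: float  # stands in for any physical feature (loudness, location)


def physical_description(s: Stimulus) -> dict:
    return {"name": s.name, "intensity": s.intensity}


def identify_and_interpret(desc: dict) -> dict:
    # Identification plus semantic description, collapsed into one step.
    return {**desc, "meaning": f"meaning of {desc['name']}"}


def choose(descs: list) -> dict:
    # Assumed selection rule: the most intense stimulus wins.
    return max(descs, key=lambda d: d["intensity"])


def early_selection(stimuli):
    """Panel A: every stimulus gets a physical description; only the selected
    one is identified, interpreted, and responded to."""
    descs = [physical_description(s) for s in stimuli]
    chosen = identify_and_interpret(choose(descs))
    return [chosen["name"]], chosen["name"]


def late_selection(stimuli):
    """Panel B: every stimulus is identified and interpreted preattentively;
    selection only determines which one gets a response."""
    descs = [identify_and_interpret(physical_description(s)) for s in stimuli]
    return [d["name"] for d in descs], choose(descs)["name"]


stimuli = [Stimulus("A", 0.9), Stimulus("B", 0.4), Stimulus("C", 0.2), Stimulus("D", 0.1)]
print(early_selection(stimuli))  # (['A'], 'A')
print(late_selection(stimuli))   # (['A', 'B', 'C', 'D'], 'A')
```

Each function returns the stimuli that were analyzed for meaning and the one that was responded to. In a fuller late-selection model, `choose` could consult the `meaning` field (pertinence to the current task). The early-selection pipeline cannot do that, because no meaning exists yet at the point of selection.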

Filters vs. Resources

The debate between early- and late-selection theories of attention was very vigorous -- and to some, seemingly endless and unproductive. In response, some investigators completely reformulated the problem. In their view, attention was not any kind of filter or attenuator or selector; rather, attention was identified with mental capacity -- with the person's ability to deploy attentional resources in various directions.

The earliest capacity theory was proposed by Daniel Kahneman (who taught at Berkeley for several years), who equated attention with mental effort. The amount of effort we can make in processing is determined to some extent by our level of physiological arousal, but even at optimal arousal levels a person's cognitive resources are limited. Within these limits, however, attention can be freely directed, based on the individual's allocation policy. The allocation policy, in turn, is determined by the individual's enduring dispositions (innate or acquired) as well as by momentary intentions. Thus, at a cocktail party, we may intend to pay attention to the person we're speaking to, but we are also disposed to pick up on someone else's mention of our name. The person's ability to carry out some information-processing function is determined by the resources required for the task. If the tasks at hand are relatively undemanding, he or she can carry out several tasks simultaneously, in parallel, so long as there is no structural interference between them (for example, we cannot utter two sentences at once, because we have only a single vocal apparatus). On the other hand, if the tasks are highly demanding, the person focuses all of his or her attentional resources on one task at a time, in series.
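Kahneman's claims about parallel and serial performance can be stated as a simple feasibility check. A set of tasks can run in parallel if their summed demands fit within the available capacity and no two tasks need the same structure. The sketch below rests on our own assumptions. Capacity follows an inverted U, peaking at an optimal arousal level and always finite; this formula is illustrative and not taken from the text. Each task declares a numeric demand and the structures it uses. All tasks and numbers are hypothetical.

```python
from dataclasses import dataclass, field

MAX_CAPACITY = 1.0     # hypothetical ceiling, reached only at optimal arousal
OPTIMAL_AROUSAL = 0.5  # hypothetical; arousal is scaled 0..1


def capacity(arousal: float) -> float:
    """Assumed inverted-U: capacity peaks at optimal arousal and is always finite."""
    return max(0.0, MAX_CAPACITY - 2 * abs(arousal - OPTIMAL_AROUSAL))


@dataclass
class Task:
    name: str
    demand: float  # share of capacity the task requires
    structures: set = field(default_factory=set)  # e.g. {"voice"}


def can_run_in_parallel(tasks, arousal: float = OPTIMAL_AROUSAL) -> bool:
    """True if the tasks fit within capacity and share no structures."""
    if sum(t.demand for t in tasks) > capacity(arousal):
        return False  # too demanding: the tasks must be done in series
    used = set()
    for t in tasks:
        if used & t.structures:
            return False  # structural interference, e.g. one vocal apparatus
        used |= t.structures
    return True


walk = Task("walk", 0.1, {"legs"})
chat = Task("chat", 0.3, {"voice"})
recite = Task("recite a poem", 0.3, {"voice"})
arithmetic = Task("mental arithmetic", 0.8)

print(can_run_in_parallel([walk, chat]))               # True
print(can_run_in_parallel([chat, recite]))             # False: both need the voice
print(can_run_in_parallel([chat, arithmetic]))         # False: 1.1 exceeds capacity
print(can_run_in_parallel([walk, chat], arousal=0.9))  # False: capacity drops to about 0.2
```

The allocation policy itself (which task gets resources first) is not modeled here. The sketch only captures the two limits the text names: total capacity and structural interference.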

Spotlights and Zoom Lenses

A variant on the capacity model is the spotlight metaphor of attention initially proposed by Michael Posner and later adopted by Broadbent as well. In this view, metaphorically speaking, paying attention to something "throws light on the subject". The attentional spotlight "illuminates" whatever occupies the space it is focused on, permitting the information provided by that object to be processed. Once engaged on a particular portion of the stimulus environment, attention can also be disengaged and shifted elsewhere in response to various kinds of cues -- a process that takes some (small) amount of time. In Posner's theory, engagement, disengagement, and shifting (or movement) constitute elementary components in the attentional system, and someone who suffers from "attention deficit disorder" presumably has a deficit in one or more of these components. Interestingly, neuropsychological and brain-imaging studies indicate that each of these component processes is controlled by a different attentional module, or system, in the brain. According to the spotlight metaphor, shifting attention moves the attentional spotlight from one portion of the sensory field to another. However, the attentional spotlight is not like a real spotlight. In the first place, there is only one of them: it is not possible to illuminate more than one portion of space at a time. Moreover, the attentional spotlight cannot expand or contract, so that, wherever it shines, it illuminates a constant portion of space. Along the same lines, other theorists have offered an analogy between attention and the zoom lens on a camera. There is only one zoom lens, just as there is only one spotlight, but unlike the attentional spotlight, the attentional zoom lens can widen or narrow its focus.

Of course, there is no reason why the scope of the attentional spotlight cannot be changed, just as is possible with a real spotlight, so the two models are not necessarily incompatible in that respect. However, comparing the two metaphors raises the question of what happens to objects that are outside the attentional field. A zoom-lens mechanism would seem to exclude them entirely, so we would not expect any substantial "preattentive" processing to occur.

But even a tightly focused spotlight illuminates portions of space other than that on which it is focused (to demonstrate this for yourself, turn on a flashlight in your garage at night). So, following the metaphor, it should be possible to process information that lies outside the scope of conscious attention. Still, even under the spotlight metaphor, there are limits to preattentive or preconscious processing. A diffuse spot casts less light on any particular portion of space than does a tightly focused one. Accordingly, we would expect there to be limits on the amount of processing that can occur on the fringes of the illuminated area. We appear to be back where we were with the capacity model -- which is appropriate, after all, given that the spotlight and zoom-lens metaphors are just variants on the capacity metaphor. While we might expect relatively complex semantic processing to be possible at the center of attention, processing on the periphery might be limited to only relatively simple, physical attributes.
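The claim that "a diffuse spot casts less light on any particular portion of space" can be made concrete with a toy calculation. We assume, and the text does not state, the following: a fixed amount of attentional resource is spread evenly over the focused region, and a small fraction spills past its edge, fading with distance. Widening the beam then lowers the resource available at any one point. The spill-over rule and all constants (`SPILL`, `FALLOFF`) are hypothetical.

```python
import math

TOTAL_RESOURCE = 1.0  # fixed attentional capacity spread over the beam
SPILL = 0.2           # hypothetical share of the beam's level leaking past its edge
FALLOFF = 1.0         # hypothetical rate at which the spill-over fades with distance


def intensity(distance: float, beam_width: float, spill: float = SPILL) -> float:
    """Processing resource available at `distance` from the center of attention.

    Inside the beam, the fixed resource is spread evenly over its width, so a
    wider beam gives less to each point. Outside the beam, a fraction `spill`
    of that level leaks over and decays exponentially with distance.
    """
    level = TOTAL_RESOURCE / beam_width
    half = beam_width / 2
    if distance <= half:
        return level
    return spill * level * math.exp(-FALLOFF * (distance - half))


print(intensity(0, beam_width=1))           # 1.0: narrow beam, center
print(intensity(0, beam_width=4))           # 0.25: diffuse beam, center
print(intensity(3, beam_width=1))           # small but nonzero: the fringe
print(intensity(3, beam_width=1, spill=0))  # 0.0: zoom-lens reading
```

Setting `spill=0` gives the zoom-lens reading, in which nothing outside the field is processed. Any positive value gives the spotlight reading, in which the fringe receives some processing, but less than the center.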

For the record, the filter, attenuator, and capacity models of attention, including the spotlight and zoom-lens variants on the capacity model, do not exhaust the possibilities for attention theory. These are models of spatial attention -- that is, models that attempt to understand how attention is focused on particular locations in space, so that the objects and events occurring in those locations can be processed. However, there is another class of models, which propose that attention is focused on the objects themselves, rather than on their spatial locations. For example, in Balint's syndrome, patients with bilateral lesions in the posterior parietal and lateral occipital lobes are unable to perceive more than one object at a time, even if these objects are placed at the same location. In other words, they have no difficulty attending to a point in space, but have difficulty attending to objects within that space. It is possible that the attentional system is space-based at one level and object-based at another level, but this does not change our central problem: what is the cognitive fate of unattended objects? To what extent is preattentive processing possible? Can preattentive processing go beyond relatively simple analyses of the physical properties of the stimulus and extend to relatively complex semantic analyses as well?

The Concept of Automaticity

The key to answering this question, at least in the context of Kahneman's capacity model of attention, is the concept of automaticity. In terms of the person's allocation policy, enduring dispositions are applied automatically, while momentary intentions are applied deliberately. Momentary intentions call for mental effort, and they drain cognitive resources. By contrast, automatic processes do not require much mental effort, and so they don't consume many cognitive resources. Put another way, automatic processes are unconscious: they are executed without any conscious intention on our part. And, although the mental contents they generate may be available for conscious introspection, the processes themselves are not: they are performed outside the scope of conscious awareness. Some automatic processes are innate: they come with us into the world, as part of our phylogenetic heritage. Others, however, are acquired through experience and learning. Either way, they are preattentive, or preconscious, because they do not require the deployment of attention, and they do not enter into conscious awareness.

The implications for the scope of unconscious, preattentive processing are clear: if automatic processes are unconscious, then any mental activity can be performed unconsciously, so long as it has been automatized, one way or another. In this way, automaticity expands the scope of unconscious influence. Early-selection theories of attention restricted unconscious processing to relatively primitive analyses of physical features. Capacity theories permit even complex mental processes to be performed unconsciously, so long as they are automatized.

Attentional theorists were quick to pick up on the notion of automaticity, not least, I think, because it skirted the problem of distinguishing empirically between early- and late-selection theories of attention. For example, David LaBerge argued that most complex cognitive and motoric skills cannot be performed consciously, because their cognitive requirements exceed the capacity of human attentional resources. Consider reading: a reader must translate the visual stimulus of a phrase such as

ATTENTION IS THE GATEWAY TO MEMORY

into an internal mental representation of the phrase's meaning. In formal terms, this translation process involves:

- employing feature detectors to analyze the graphemic information on the printed page into elementary lines, angles, curves, intersections, and the like;

- recognizing particular combinations of these features as letters of the alphabet;

- recognizing certain spelling patterns as familiar syllables and morphemes;

- recognizing certain combinations of syllables and morphemes as familiar words; and, above the level of individual words,

- recognizing certain combinations of words as familiar phrases, etc.

If we had to follow this sequence consciously, stroke by stroke, letter by letter, word by word, phrase by phrase, we'd never get any reading done. In fact, of course, skilled readers get a lot of reading done. LaBerge and Samuels argued that this happens because at least some components of the reading process have become automatized -- they just happen without any intention or awareness on our part, freeing cognitive resources for other activities, such as the task of making sense of what we have read. The first process, feature detection, is innately automatic: we are hard-wired to do it. Processes at other levels can be automatized through learning. At early stages of learning, they are effortful and conscious; at later stages, they become effortless and unconscious.

At around the same time, Posner and Snyder used the Stroop effect to illustrate the distinction between automatic and strategic processing. In the basic "Stroop" experiment, subjects are presented with a list of letter strings printed in different colors and asked to name the color in which each string is printed. When the string consists of random letters, the task is fairly easy, as indicated by relatively rapid response latencies. When the letter string is a meaningful word, however, response latency increases. Of special interest are conditions where the words are the names of colors. Although one would think that interference would be reduced if the color word matched the color of the ink in which it was printed (e.g., the word yellow printed in yellow ink), there is still some interference, compared to the non-word control condition. Interference is especially great when the word and its color do not match (e.g., yellow printed in green ink); then the task is very hard. Despite the subjects' conscious intention to name ink colors, and to ignore the words themselves, they cannot help reading the color names, and this interferes with naming of the colors. It just happens automatically. Other early work on automaticity was performed by Schneider and Shiffrin.

The Stroop Effect in Art

Jasper Johns, a prominent contemporary American artist, has often used "psychological" material like Rubin's Vase and Jastrow's Duck-Rabbit in his art. He's also done a variant on the Stroop test. In "False Start" (1959), he splashes paint in various colors across the canvas, and then overlays the colors with color names -- sometimes the name matches the color, sometimes it does not.

These seminal studies laid down the core characteristics of automatic, as opposed to controlled, processes:

- Inevitable evocation: Automatic processes are inevitably engaged by the appearance of specific environmental stimuli, regardless of the person's conscious intentions;

- Incorrigible completion: Once invoked, they proceed inevitably to their conclusion;

- Effortless execution: Whether it is being evoked or running to completion, an automatic process consumes no attentional resources.

- Parallelism: Because they consume no attentional resources, automatic processes do not interfere with other ongoing cognitive processes.

- Automatization: Some processes are innately automatic, or nearly so, while others are automatized only after extensive practice with a task.

- Unconscious: For much the same reason, automatic processes leave no consciously accessible traces of their operation in memory.

Some investigators added other properties to the list, but for most theorists, two features are especially important in defining the concept of automaticity: automatic processes are invoked regardless of the subject's intentions, and their execution consumes no (or very little) cognitive capacity. Note that, in terms of capacity theory, these two fundamental properties are linked. According to Kahneman, voluntary control occurs by virtue of the allocation of cognitive resources to some process. If automatic processes are independent of cognitive resources, then they cannot be controlled by allocating, or denying, cognitive resources to them. Everything about automaticity comes down to a matter of capacity. In capacity theories, attention must be "paid", and "paying" incurs a cost. Automatic processes are attention-free, and incur no costs. Because they don't involve attention, they can't be started deliberately, they can't be stopped deliberately, and they can't be monitored consciously.

Some version of the capacity theory continues to dominate most research on attention, even though recent research has cast doubts on particular details. Similarly, recent research casts doubt on the strong version of automaticity: "automatic" processes aren't quite as automatic as we thought they were, and no matter how much they have been practiced, they still draw on cognitive resources to some extent.

Theories of attention and automaticity continue to evolve in response to new data -- that's the way things go in science. But if attention is what links perception and memory, the memory-based view of automatization links attention to memory as well: effortful processes depend on declarative memory, automatic ones depend on procedural memory. Which brings us full circle.

The best technical introduction to research and theory on attention is, naturally enough, The Psychology of Attention (1998) by Harold Pashler, a professor at UC San Diego. For a more popular treatment, see Focus: The Hidden Driver of Excellence (2013) by Daniel Goleman, a science writer who holds a PhD in psychology.

Memory Proper

We now turn our focus to long-term or secondary memory, or what James called memory proper -- what people ordinarily mean when they talk about memory.

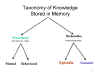

Classification of Knowledge

Adopting the unistore theory of memory does not mean that all memory is the same. There may not be different memory storehouses, but there are certainly different types of knowledge stored in the single structure. The fundamental distinction between types of knowledge is between declarative and procedural knowledge.

Declarative knowledge is factual knowledge concerning the nature of the world, in both its physical and its social aspects. It includes knowledge about what words, numbers, and other symbols mean; what attributes objects possess; what categories they belong to. It includes knowledge of events, and the contexts in which they take place. Declarative knowledge may be represented in propositional format: that is, as sentences containing a subject, verb, and object. For example:

- Blunt means "dull".

- Three is less than four.

- A red octagonal sign means stop.

- Automobiles have wheels.

- Chevrolets are automobiles.

- A hippie touched a debutante.

It is sometimes convenient to distinguish between perception-based and meaning-based representations in declarative memory.

- Perception-based representations often take the form of sensory images, and preserve information about the physical structure of an object or event -- information about its physical details and the spatial relations among its components. A perception-based representation of a car is like a mental "picture" of what a car looks like.

- Meaning-based representations often take the form of verbal descriptions, and preserve information about the meaning and conceptual relations of an object or event. A meaning-based representation of a car is like a dictionary entry that indicates that a car is a type of motor vehicle, often powered by an internal-combustion engine, used for transporting people on roads.

Procedural knowledge consists of the rules and skills used to manipulate and transform declarative knowledge. It includes the rules of mathematical and logical operations; the rules of grammar, inference, and judgment; strategies for forming percepts, and for encoding and retrieving memories; and motor skills. Procedural knowledge may be represented as productions (or, more properly, production systems): statements having an if-then, goal-condition-action format. For example:

- If you want to divide A by B, then count the number of times B can be subtracted from A and still leave a remainder greater than or equal to zero.

- If you want to know how likeable a person is and you already know some of his or her personality traits, then count the number of his or her desirable traits.

- If you want to remember something, then relate it to something you already know.

- If you want to tie your necktie in a "Shelby" knot, and the tie is already draped around your neck, then begin with the tie inside out, wide end under.
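A production system can be sketched as a list of condition-action rules that fire repeatedly against a working memory until no rule matches. The minimal interpreter below implements only the first example above, division by repeated subtraction. The rule representation (a pair of Python functions), the working-memory keys, and the names `run` and `divide` are our own illustrative assumptions. They are not the notation of any particular cognitive architecture.

```python
def run(productions, memory, max_cycles=10_000):
    """Fire the first production whose condition matches, until none match."""
    for _ in range(max_cycles):
        for condition, action in productions:
            if condition(memory):
                action(memory)
                break
        else:
            return memory  # no production matched: processing halts
    raise RuntimeError("production system did not halt")


# IF the goal is to divide A by B and subtracting B leaves a remainder >= 0,
# THEN subtract B and add one to the count.
def can_subtract(m):
    return m["goal"] == "divide" and m["remainder"] - m["B"] >= 0


def subtract(m):
    m["remainder"] -= m["B"]
    m["count"] += 1


# IF the goal is to divide A by B and B can no longer be subtracted,
# THEN the count is the answer and the goal is satisfied.
def cannot_subtract(m):
    return m["goal"] == "divide" and m["remainder"] - m["B"] < 0


def report(m):
    m["quotient"] = m["count"]
    m["goal"] = "done"


DIVISION = [(can_subtract, subtract), (cannot_subtract, report)]


def divide(a: int, b: int) -> int:
    """Whole-number division by repeated subtraction (assumes a >= 0, b > 0)."""
    if a < 0 or b <= 0:
        raise ValueError("sketch assumes a >= 0 and b > 0")
    memory = {"goal": "divide", "B": b, "remainder": a, "count": 0}
    return run(DIVISION, memory)["quotient"]


print(divide(17, 5))  # 3
```

The interpreter never consults a list of steps. The knowledge lies entirely in which rule matches which state of working memory, which is the sense in which procedural knowledge is represented as productions.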

Attention, Automaticity, and Procedural Knowledge

The concept of procedural knowledge offers a different perspective on attention and automaticity. For example, John Anderson has suggested that effortful processes are mediated by declarative memory while automatic processes are mediated by procedural memory.

According to Anderson, skill learning proceeds through three stages.

- At the initial cognitive stage, people memorize a declarative representation of the steps involved. When they want to perform the skill, they retrieve this memory and consciously follow the several steps the way they would follow a recipe.

- At an intermediate associative stage, the person practices the skill and becomes more accurate and efficient in following the algorithm. During this stage, Anderson argues that the mental representation of the skill shifts from declarative to procedural format -- from a list of steps to a production system -- a process known as proceduralization. (For those familiar with the operation of computer programs, proceduralization is analogous to the compilation of a program from the more or less familiar language of a BASIC or FORTRAN program into the machine language of 0s and 1s. In fact, Anderson has sometimes referred to this shift as knowledge compilation.) The availability of production rules eliminates the need to retrieve individual steps from declarative memory, and in fact the compiled procedure eliminates many of these steps altogether.

- When knowledge compilation is complete, the person moves to the final autonomous stage, where performance is controlled by the procedural representation, not the declarative one. Autonomous performance is typically very fast, efficient, and accurate, but for present purposes the important thing is that the person has no awareness of the individual steps as he or she performs them. Consider an experienced driver: he or she is certainly aware of intending to drive the car, and certainly aware that the car is moving forward, but the steps that intervene between this goal and its achievement are simply not available to introspective access. The procedure is unconscious in the strict sense of the word. The loss of conscious access occurs, apparently, because the format in which the knowledge is represented has changed: declarative knowledge is available to conscious awareness, but procedural knowledge is not.
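The computer analogy above can be caricatured in code. In the sketch below, the cognitive stage is an interpreter that retrieves each step of an explicit recipe and notes it in a `trace`. The autonomous stage is a single function built from those steps, which produces the same result without exposing them. Several choices here are illustrative assumptions, not Anderson's formal mechanism: the Celsius-to-Fahrenheit recipe, the `trace` list standing in for introspective access, and function composition standing in for proceduralization. In particular, this sketch does not model the elimination of steps that real knowledge compilation achieves.

```python
from functools import reduce

# Declarative representation: an explicit, inspectable list of steps
# (a hypothetical recipe for converting Celsius to Fahrenheit).
RECIPE = [
    ("multiply by 9", lambda x: x * 9),
    ("divide by 5", lambda x: x / 5),
    ("add 32", lambda x: x + 32),
]


def follow_recipe(recipe, x, trace):
    """Cognitive stage: retrieve each step and apply it, noting it in `trace`."""
    for description, step in recipe:
        trace.append(description)  # each step passes through awareness
        x = step(x)
    return x


def compile_recipe(recipe):
    """Autonomous stage: fold the steps into one opaque procedure."""
    steps = [step for _, step in recipe]  # descriptions are discarded
    return lambda x: reduce(lambda acc, f: f(acc), steps, x)


trace = []
print(follow_recipe(RECIPE, 100, trace), trace)
# 212.0 ['multiply by 9', 'divide by 5', 'add 32']

convert = compile_recipe(RECIPE)
print(convert(100))  # 212.0, with no step descriptions left to inspect
```

`RECIPE` still exists after compilation, much as the declarative structure may persist alongside the procedure, but `convert` never consults it.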

A couple of things need to be said about unconscious procedural knowledge. First, recall that at the associative stage the declarative and procedural representations exist side by side in memory, with control over performance gradually shifting from the former to the latter. This means that at the initial and intermediate stages of skill acquisition, the person may well be aware of what he or she is doing. At the autonomous stage, after proceduralization is complete and performance is being controlled by the new procedural knowledge structure, the corresponding declarative structure may persist, and the person may have introspective access to it. But it is not this declarative structure that is controlling performance -- in that sense, the person's awareness of what he or she is doing is indirect -- like reading a grammar text to find out how one is speaking and understanding English. If access to the declarative knowledge is lost, the person may regain it -- perhaps by slowing the execution of the procedure down, perhaps by memorizing a list of instructions corresponding to the procedure. But again, this awareness is indirect. The procedure itself, the knowledge that is actually controlling performance automatically, remains isolated from consciousness, unavailable to introspection.

Within the domain of declarative knowledge, a further distinction may be drawn between episodic and semantic memory.

Episodic memory is autobiographical memory, and concerns one's personal experiences. In addition to describing specific events, episodic memories also include a description of the episodic context (i.e., time and place) in which the events occurred, as well as a reference to the self as the agent or experiencer of these events; the reference to the self may also include additional information about the person's cognitive, emotional, and motivational state at the time of the event. Thus, a typical episodic memory might take the following form:

| Episodic memory | Component |
|---|---|
| I was happy when I saw | [self] |
| a hippie touch a debutante | [fact] |
| in the park on Saturday. | [context] |

Semantic memory, by contrast, is the mental lexicon, and concerns one's context-free knowledge. Information stored in semantic memory makes no reference to the context in which it was acquired, and no reference to the self as agent or experiencer of events. Knowledge stored in semantic memory is categorical, including information about subset-superset relations, similarity relations, and category-attribute relations. Thus, some typical semantic memories might take the following form:

- I am tall.

- Happy people smile.

- Hippies have long hair.

- Touches can be good or bad.

- Debutantes wear long dresses.

- Parks have trees.

- Saturday follows Friday.

Semantic memory is often portrayed as a network, with nodes representing individual concepts and links representing the semantic or conceptual relations between them. Thus, a node representing car would be linked to other nodes representing vehicle, road, and gasoline.

Episodic memory can be portrayed in the same way, with a node representing an event linked to other nodes representing the spatiotemporal context in which the event occurred, and the relation of the self to the event.
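The network portrayal of both kinds of declarative memory can be sketched as a labeled graph. The node names and relation labels below are illustrative choices built from the car and hippie-debutante examples in the text; they are not drawn from any published network model. Two further choices are simplifying assumptions of this sketch. Semantic nodes carry only conceptual links, while the episodic node is linked to its time, place, and the self. A node is treated as episodic whenever it has time or place links.

```python
from collections import defaultdict

# Each node maps to a list of (relation, other node) links.
network = defaultdict(list)


def link(a, relation, b):
    network[a].append((relation, b))


# Semantic memory: context-free concepts and their relations.
link("car", "is-a", "vehicle")
link("car", "travels-on", "road")
link("car", "runs-on", "gasoline")

# Episodic memory: an event node tied to its context and to the self.
link("event-1", "fact", "hippie touched debutante")
link("event-1", "place", "park")
link("event-1", "time", "Saturday")
link("event-1", "experiencer", "self")
link("event-1", "self-state", "happy")


def neighbors(node, relation=None):
    """Nodes linked to `node`, optionally restricted to one relation."""
    return [b for r, b in network[node] if relation is None or r == relation]


def is_episodic(node):
    """Assumption of this sketch: episodic nodes are those with context links."""
    return any(r in ("time", "place") for r, _ in network[node])


print(neighbors("car"))                            # ['vehicle', 'road', 'gasoline']
print(neighbors("event-1", "place"))               # ['park']
print(is_episodic("car"), is_episodic("event-1"))  # False True
```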

Relations Among the Forms of Memory

The three basic forms of knowledge -- procedural, declarative-semantic, and declarative-episodic -- are related to each other.

Because most knowledge comes from experience, most semantic knowledge starts out with an episodic character, representing one's first encounter with the object or event from which the knowledge is derived. However, as similar episodes accumulate, the contextual features of the individual episodic memories are lost (or, perhaps, blur together), resulting in a fragment of generalized, abstract, semantic memory.
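The idea that contextual features drop out as similar episodes accumulate can be sketched as set intersection. Features present in every episode survive as generalized knowledge, while features that vary from episode to episode (the when and where) do not. Two parts of this are our own simplifying assumptions, offered only to make the idea concrete: treating episodes as feature sets, and treating abstraction as intersection. The dog episodes are invented, and the "blurring" alternative the text mentions is not modeled.

```python
from functools import reduce

# Hypothetical encounters with dogs, each stored as a set of features,
# some about the dog and some about the context of the encounter.
episodes = [
    {"barks", "has fur", "four legs", "in the park", "on Saturday"},
    {"barks", "has fur", "four legs", "at grandma's", "on Tuesday"},
    {"barks", "has fur", "four legs", "wags tail", "in the yard", "at night"},
]


def abstract(episodes):
    """Keep only the features shared by every episode."""
    return reduce(set.intersection, episodes)


print(sorted(abstract(episodes)))      # ['barks', 'four legs', 'has fur']
print(sorted(abstract(episodes[:1])))  # one episode: its context is still attached
```

With only the first encounter, nothing has been abstracted yet, and the "knowledge" is still tied to the park and to Saturday.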

Note, too, that the formation of a meaningful episodic memory depends on the existence of a pre-existing fund of semantic knowledge (about hippies and debutantes, for example).

Most procedural knowledge starts out in declarative form, as a list representing the steps in a skilled activity. Through repeated practice, and overlearning, the form in which this activity is represented changes from declarative to procedural. At this point, the skill is no longer conscious, and is executed automatically whenever the relevant goals and environmental conditions are instantiated. How much practice? Some idea can be found in what is known as the ten-thousand-hour rule.

Finally, memory itself is a skilled activity. Thus, the encoding, storage, and retrieval of declarative memories depend on procedural knowledge -- knowledge of how to use our memories.

Memory and Metamemory

An interesting aspect of memory is metamemory, or our declarative knowledge about our own memory systems.

At one level, metamemory consists of our awareness of the (declarative) contents of our memory systems. This is the kind of knowledge that permits us to know, intuitively, that we do know our home telephone numbers, that we might still know the telephone number of a former lover (after all, you called him or her often enough in the past), that we might recognize the main telephone number of the White House (if for no other reason than that it was mentioned on the "In This White House" episode of the NBC television series The West Wing, 2000, when Ainsley Hayes checks her Caller ID; in any event, it's 202-456-1414), and that we do not know Fidel Castro's telephone number. It is the kind of knowledge that permits us to say, when confronted with a familiar face at a cocktail party, that we don't recall the person's name but would recognize it if we heard it -- what is sometimes known as the feeling of knowing (FOK) experience. These judgments are usually accurate, but in making them we do not have to search the contents of memory.

At another level, metamemory consists of our understanding of the (procedural) principles by which memory operates. This is the kind of knowledge that permits us to know that we can remember four digits but had better write down eleven; that rehearsing an item to ourselves helps us to remember it; and that memory is better over short than long delays. Metamemory of this sort is quite accurate: when asked how they would go about remembering various things, even young children reveal a fairly sophisticated understanding of these principles.

Manifestations of Memory

In class, the lectures focus on declarative, episodic knowledge -- autobiographical memory, for personal experiences that occurred at particular times, and in particular places. Certain aspects of procedural knowledge, and of semantic memory, are covered in the lectures on thought and language.

In the laboratory, episodic memory is often studied by means of the verbal learning paradigm. In this procedure, the subject's task is to memorize one or more lists of familiar words. Consider, for example, an experiment that runs for three days. On the first two days, the subject is presented with two different lists of 25 words each: each list contains five items from each of five conceptual categories (e.g., four-legged animals, women's first names, etc.). The subject is given one study trial per list, and studies each list in a different room. On the third day, the subject's memory is tested: she is asked to remember the contents of each list. Note that the subject is not asked to add new words to her vocabulary -- this would be a semantic memory task. The subject may receive only a single study-test trial; alternatively, trials may be continued until the list is thoroughly memorized.

In any case, each session, each list, and each word constitutes an event: it occurs in a particular place, at a particular time -- the room in which the list is studied, the list in which the word is studied, and the position of the word on the list. Thus, the verbal learning paradigm provides a simple laboratory model for perceiving and remembering events in the real world.

There are many variants on this basic paradigm. For example, the subject may study words or pictures, sentences or paragraphs, slide sequences or film clips; he or she may be asked to remember odors or tastes, or sequences of tones. In any case, each item comprises a unique event that occurs in a particular spatiotemporal context -- an episodic memory.

Memory for such a list may be tested by a number of different methods.

- In the method of free recall, the subject is simply asked to recall the items that were studied -- perhaps all the words together, or one list at a time. No further information is given, and no constraints are imposed on the manner of recall.

- In the method of serial recall, the subject must recall the items in the order in which they were originally presented.

- In the method of cued recall, the subject is offered specific prompts or hints concerning the list items -- for example, the first letter or first syllable of a word; the name of the category to which it belongs; or some closely associated word. These cues are intended to help remind the subject of list items.

- In the method of recognition, the subject is asked to examine a test list consisting of items that actually appeared on the study list (targets or "old" items) and others that did not (lures, foils, or "new" items). The subject is asked to distinguish between targets and lures, and, often, to rate his or her confidence in this judgment.

Notice that all these tests are really variants on cued recall. There's always some cue, even though it may be pretty vague. In serial recall, each item on the list serves as a cue for remembering the one that follows. In recognition, the "cue" is the item itself.
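The difference between free recall, serial recall, and recognition shows up clearly in how each might be scored. This is a minimal Python sketch under stated assumptions: the function names are hypothetical, free recall is scored as the proportion of studied items produced in any order, serial recall uses a strict criterion (credit only for an item in its original position), and recognition is summarized as a hit rate (targets called "old") and a false-alarm rate (lures called "old"). Real studies use a variety of scoring rules; the word lists here are invented for illustration.

```python
def free_recall_score(studied, recalled):
    # Order is irrelevant; intrusions (items never studied) earn no credit.
    return len(set(studied) & set(recalled)) / len(studied)


def serial_recall_score(studied, recalled):
    # Strict positional scoring: credit only items recalled in the same
    # position in which they were presented.
    correct = sum(1 for s, r in zip(studied, recalled) if s == r)
    return correct / len(studied)


def recognition_rates(targets, lures, judged_old):
    # Hits: targets judged "old"; false alarms: lures judged "old".
    judged = set(judged_old)
    hit_rate = len(judged & set(targets)) / len(targets)
    false_alarm_rate = len(judged & set(lures)) / len(lures)
    return hit_rate, false_alarm_rate


# Hypothetical study list and responses.
studied = ["dog", "Mary", "horse", "Susan", "cat"]
recalled = ["dog", "horse", "Mary", "cat", "table"]
lures = ["cow", "Jane", "lion", "Anne", "goat"]

print(free_recall_score(studied, recalled))    # 0.8
print(serial_recall_score(studied, recalled))  # 0.2
print(recognition_rates(studied, lures, ["dog", "cat", "horse", "cow"]))  # (0.6, 0.2)
```

The same responses earn very different scores: four of five items were recalled, but only one was recalled in its original position, which is exactly the extra constraint serial recall imposes.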

Another commonly used verbal-learning procedure is called paired-associate learning -- a technique invented by Mary Whiton Calkins, who did graduate work with William James and became the first female president of the American Psychological Association. Here the items on the list consist of pairs of words instead of single words -- a stimulus term (A), analogous to the conditioned stimulus in classical conditioning, and a response term (B), analogous to the conditioned response (I don't believe these analogies, but the procedure is a holdover from a time when memory was studied as a variant on animal learning). Subjects study the A-B pairs as usual. At the time of test, they are prompted with the stimulus term (A) and asked to remember the response term (B). Again, the subject may receive only a single study-test trial; alternatively, trials may be continued until the list is thoroughly memorized. Paired-associate learning is also a variant on cued recall, where the stimulus term serves as a cue for the recall of the response term.

Now assume that a subject has memorized a list of paired-associates, A-B, and then is retested after some interval of time has passed. Usually, the subject will display some degree of memory failure: either a failure to produce the correct B response, or the production of an incorrect B response, when presented with stimulus A. Under these circumstances, a number of alternative memory tests may be conducted.

In relearning, the subject is asked to learn a second list of paired associates. Some of these are old, but forgotten, A-B pairs. Others are entirely new, and may be designated C-D pairs. It turns out that relearning of A-B pairs proceeds faster than learning of C-D pairs -- a phenomenon known as savings (recall a related discussion, in the lectures on learning, of savings in relearning after extinction -- again, note the parallels between human memory and animal learning).
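Savings is commonly quantified with the classic savings score associated with Ebbinghaus: the reduction in effort needed to relearn material, expressed as a percentage of the effort needed to learn it originally. This is a minimal Python sketch under stated assumptions: effort is measured in trials to criterion (time is also used), the function name `savings_percent` is hypothetical, and all numbers are invented for illustration.

```python
def savings_percent(original_trials, relearning_trials):
    """Savings score in percent: 100 * (original - relearning) / original.

    original_trials: trials needed to reach criterion the first time.
    relearning_trials: trials needed to reach the same criterion again.
    """
    if original_trials <= 0:
        raise ValueError("original_trials must be positive")
    return 100 * (original_trials - relearning_trials) / original_trials


# Hypothetical data: the A-B list first took 10 trials to reach criterion;
# after forgetting, the old A-B pairs are relearned in 4 trials.
print(savings_percent(10, 4))  # 60.0

# Hypothetical comparison in the spirit of the text: new C-D pairs need 9
# trials while old A-B pairs need 4, so C-D learning serves as the baseline.
print(round(savings_percent(9, 4), 1))  # 55.6
```

A score of zero means the earlier learning left no measurable advantage; a positive score means something of the forgotten A-B pairs was still retained in memory.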

The "lie detector" paradigm relies on psychophysiology -- the use of electronic devices to monitor physiological changes -- for example, the electroencephalogram (EEG), for brain activity; the electrocardiogram (EKG), for cardiovascular activity; the electromyogram (EMG), for muscle activity; the electrodermal response (EDR), for changes in the electrical conductance of the skin -- while the subject is engaged in some mental function. If we present a subject with a list of items containing old, but forgotten, A-B pairs, and entirely new C-D pairs, we may well observe a differential pattern of psychophysiological response. Usually, as its name implies, the lie detector is used to detect the willful withholding of information. But it can also be used to detect memory, since the only difference between A-B and C-D pairs is that the former are old while the latter are new.

In the bulk of these lectures we focus on episodic memory, memory for discrete events, as measured by recall or recognition, and ask: What makes the difference between an event that is remembered and one that is forgotten?

Principles of Conscious Recollection

Just as perception depends on memory, so memory depends on perception. Perception changes the contents of the memory system, leaving a trace or engram which persists long after the experience has passed.

Stage Analysis

We can understand the causes of remembering and forgetting in terms of three stages of memory processing:

- In encoding, we create a record of perceptual activity that leaves a representation of an event in memory. This representation is known as the memory trace or engram.

- In storage, a newly encoded trace is retained over time in a latent state.

- In retrieval, a stored memory trace is activated so it can be used in some cognitive task.

Forgetting can involve a failure at any of these stages, alone or in combination. Thus, at least in principle, an event can be forgotten because a trace of that event was never encoded in the first place; or because it was lost from storage; or because it can't be retrieved.

Unfortunately, when we're dealing with autobiographical memory in the real world, we don't always have knowledge of, or control over, these three stages. This is especially true for the encoding stage: because the experimenter was not usually present when the event occurred, he or she cannot check the accuracy of what the subject recalls. If I ask you what you had for dinner last Thursday night, how can I know that you're correct? The verbal-learning experiment, in which memory is tested for material "studied" in the laboratory, allows us to rigorously control the conditions of encoding, storage, and retrieval, so we can determine their effects on memory.

Encoding