Beyond Impressions:

Descriptive and Inferential Statistics

Ernest Rutherford, who made that nasty remark about physics and stamp collecting, also said that "If your experiment needs statistics, you ought to have done a better experiment". But he was wrong about that, just as he was wrong about reductionism. Even physicists use statistics -- most recently in their search for the Higgs boson (also known as "the God particle", which gives mass to matter). The Higgs was first observed in a set of experiments completed in December 2011, but official announcement of its existence had to wait until July 4, 2012, so that they could double the number of observations (to about 800 trillion!), and achieve a confidence level of "five sigma". You'll find out what this means later in this Supplement.

Scientists of all stripes, including physicists and psychologists, use statistics to help determine which observations to take seriously, and which explanations are correct.

"Lies, damn lies, and statistics" -- that's a quote attributed by Mark Twain to Benjamin Disraeli, the 19th-century British prime minister. And it's true that scientists and policymakers are often able to massage statistics to "prove" things that aren't really true. But if you understand just a little statistics, you won't be fooled quite so often. That minimal understanding is the goal of this lecture. If you continue on in Psychology, you'll almost certainly take a whole formal course in methods and statistics, at the end of which you'll understand more!

Scales of Measurement

Quantification, or assigning numbers to observations, is the core of the scientific method. Quantification generates the numerical data that can be analyzed by various statistical techniques. The process of assigning numbers to observations is called scaling.

As late as the end of the 18th century, it was widely believed, thanks mostly to the philosopher Immanuel Kant) that psychology could not be a science because science depends on measurement, and the mind could not be measured. On the other hand, Alfred W. Crosby (in The Measure of Reality: Quantification and Western Society, 1250-1600, 1997) points out that as early as the 14th century Richard Swineshead (just imagine the grief he got in junior high school) and a group of scholars (known as the Schoolmen) at Oxford's Merton College were considering ways in which they might measure "moral" qualities such as certitude, virtue, and grace, as well as physical qualities such as size, motion, and heat. What accounts for the change? Kant, like most other philosophers of his time, was influenced by the argument of Rene Descartes, a 17th-century French philosopher, that mind and body were composed of different substances -- body was composed of a material substance, but mind (or, if you will, soul) was immaterial. Because material substances took up space, they could be measured. Because mind did not take up space, it could not be measured.

Nevertheless, less than half a century after Kant made his pronouncement, Ernst Weber and Gustav Fechner asked their subjects to assign numbers to the intensity of their sensory experiences, and discovered the first psychophysical laws. Shortly thereafter, Franciscus Donders showed how reaction times could measure the speed of mental processes. People realized that they could measure the mind after all, and scientific psychology was off and running.

But what kind of measurement is mental measurement? Robert Crease (in World in the Balance: The Historic Quest for an Absolute System of Measurement, 2011), distinguishes between two quite different types of measurement:

- In ontic measurement, we are measuring the physical properties of things that exist in the world. Measuring the length of a bolt of cloth with a yardstick, or weighing yourself on a bathroom scale, are good examples. There are absolute, universal standards for ontic measurements. For example, a meter is equal to 1/10,000,000 of the distance from the Earth's equator to the North Pole, and kilogram is equal to the mas of 1 liter of water.

- In ontological measurement, we try to measure properties or qualities that do not exist in quite the same way as length and weight do, because they are in some sense invisible. This is where Kant got hung up. Intelligence, or neuroticism, or loudness are hypothetical constructs, "invisible" entities which we invoke to describe or explain something that we can see. Hypothetical constructs exist in physics and chemistry, too. But they are abundant in psychology. And precisely because they are "hypothetical", many of the controversies in psychology revolve around how different investigators define their constructs. We'll see this most clearly when we come to research and theory on the most salient psychological construct of them all -- intelligence.

In a classic paper, S.S. Stevens, the

great 20th-century psychophysicist, identified four

different kinds of measurement scales used in psychology.

In a classic paper, S.S. Stevens, the

great 20th-century psychophysicist, identified four

different kinds of measurement scales used in psychology.

Nominal (or categorical) scales simply use numbers to label categories. For example, if we wish to classify our subjects according to sex, we might use 0 = female and 1 = male (this is the preference of most male researchers; female researchers have other ideas). But it doesn't matter what the numbers are. We could just as easily let 5 = male and 586 = female, because we don't do anything with these numbers except use them as convenient labels.

Ordinal scales use numbers to express relative magnitude. If we ask you to rank political candidates in terms of preference, where 1 = least preferred and 10 = most preferred, a candidate ranked #8 is preferred more than a candidate ranked #6, who is preferred more than a candidate ranked #4. However, there is no implication that #8 is preferred twice as much as #4, or four times as much as #2. All we can say is that one candidate is preferred more (or less) than another. Rank orderings are transitive: if #8 is preferred to #4, and #4 is preferred to #2, then #8 is preferred to #2.

In interval scales, equal differences between scores can be treated as actually equal. Time is a common interval scale in psychological research: 9 seconds is 5 seconds longer than 4 seconds, and 8 seconds is 5 seconds longer than 3 seconds, and the two 5-second differences are equivalent to each other. Interval scales are important because they permit scores to be added and subtracted from each other.

In ratio scales, there is an absolute zero-point against which all other scores can be compared -- which means that scores can be multiplied and divided as well as added and subtracted. Only with ratio scales can we truly say that one score is twice as large, or half as large, as another. Time is on a ratio scale as well as an interval scale -- 8 seconds is twice as long as 4 seconds.While interval scales permit addition and subtraction, ratio scales permit multiplication and division.

Most psychological data is on nominal, ordinal,

or interval scales. For technical reasons that need not

detain us here, ratio scales are pretty rare in psychology.

But this fact does not prevent investigators from speaking

of their data, informally, as if it were on a ratio scale,

and analyzing it accordingly.

"Data Is" or "Data Are"?

In the paragraph just above I wrote that "data is", whereas if you read the psychological literature you will often find psychologists writing "data are". Technically, the word data is the plural of the word datum, but Latin plurals often become English singulars --agenda is another example. The fact is that scientists rarely work with a single datum, or individual piece of information, but rather with a body of data. Accordingly, I generally use data as a mass noun that takes a singular verb, rather than as a count noun that takes the plural.

Alternatively,data can be viewed as a sort of collective noun, like team. In this case, it also takes the singular.

Usage differs across writers, however, and even individual

writers can be inconsistent. This is just one example of

how English is constantly evolving.

For an engaging (and somewhat left-leaning) history of data, see How Data Happened: A History from the Age of Reason to the Age of Algorithms (2023) by Chris Wiggins (an applied mathematician) and Matthew L. Jones (a historian), reviewed by Ben Tarnoff in "Ones and Zeros" (The Nation, 10/30-11/06/2023). The general theme of the book is "How Everything Became Data" -- including people.

"Subjects" or "Participants"?

Another tricky language issue crops up when we refer to the individuals who provide the data analyzed in psychological experiments. In psychophysics, such individuals are typically referred to as "observers"; in survey research, they are often referred to as "informants". But traditionally, they are referred to as "subjects", a term which includes both humans, such as college students, and animals, such as rats and pigeons. However, beginning with in its 4th edition, the Publication Manual of the American Psychological Association -- a widely adopted guide to scientific writing similar to The Chicago Manual of Style in the humanities -- advised authors to "replace the impersonal term subjects with a more descriptive term" like participants (p. 49). Since then, references to research "participants" have proliferated in the literature -- a trend that even drew the notice of the New York Times (The Subject Is... Subjects" by Benedict Carey, 06/05/05).

The Times quoted Gary VandenBos, executive director of publications and communications for the APA, as saying that "'Subjects' implies that these are people who are having things done to them, whereas 'participants' implies that they gave consent". This, of course, is incorrect. Such individuals might be called "objects", but never "subjects"; the term "subject" implies activity, not passivity (think about the subject-object distinction in English grammar).

More important, perhaps, the "participant" rule blithely

ignores the simple fact that there are many "participants"

in psychological experiments, each with their own special

designated role during the social interaction known as

"taking part in an experiment":

- There are the subjects who provide the empirical data collected in an experiment, and

- the experimenters who conduct the experiment itself;

- there are the confederates (Schachter and Singer called them "stooges", which definitely has an unsavory ring to it!) who help create and maintain deception in certain kinds of experiments;

- there are laboratory technicians who operate special equipment (as in brain-imaging studies), and

- perhaps other research assistants as well, such as data coders, who have active contact with the subject, the experimenter, or both.

All these people "participate" in the experiment. To call subjects mere "participants" not only denies recognition of their unique contribution to research, but it also denies proper recognition to the other participants as well.

Only one category of participants provides the data collected in an experiment: the subjects, and that's what "they" should be called whether they are human or nonhuman.

Descriptive Statistics

Probably the most familiar

examples of psychological measurement come in the form of

various psychological tests, such as intelligence tests

(e.g., the Stanford-Binet Intelligence Scale or the Wechsler

Adult Intelligence Scale) and personality questionnaires

(e.g., the Minnesota Multiphasic Personality Inventory or

the California Psychological Inventory). The construction of

these scales is discussed in more detail in the lectures on

Thought and Language

and Personality and Social

Interaction.

Probably the most familiar

examples of psychological measurement come in the form of

various psychological tests, such as intelligence tests

(e.g., the Stanford-Binet Intelligence Scale or the Wechsler

Adult Intelligence Scale) and personality questionnaires

(e.g., the Minnesota Multiphasic Personality Inventory or

the California Psychological Inventory). The construction of

these scales is discussed in more detail in the lectures on

Thought and Language

and Personality and Social

Interaction.

For now, let's assume that a subject has completed one of these personality questionnaires, known as the NEO-Five Factor Inventory, which has scales for measuring extraversion, neuroticism, and three other personality traits (we'll talk about the Big Five factors of personality later). Scores on each of these scales can range from a low of 0 to a high of 48.

Now let's imagine that our subject has scored 20 on both of these tests. What does that mean? Does that mean that the person is as neurotic as he or she is extraverted? We don't really know, because before we can interpret a person's test scores, we have to have information about the distribution of scores on the two tests. Before we can interpret an individual's score, we have to now something about how people in general perform on the test. And that's where statistics come in.

In fact, there are two broad kinds of

statistics: descriptive statistics and inferential

statistics.

Descriptive statistics, as their name implies, help us to describe the data in general terms. And they come in two forms:

- Measures of central tendency, such as the mean, the median, and the mode;

- Measures of variability, such as the variance, the standard deviation, and the standard error of the mean.

We can use descriptive statistics to indicate how people in general perform on some task.

Then there are inferential statistics, which allow us to make inferences about whether any differences we observe between different groups of people, or different conditions of an experiment, are actually big enough,significant enough, to take seriously. the kinds of measures we have for this include:

- the t-test and the analysis of variance;

- the correlation coefficient and multiple regression.

These will be discussed later.

As far as central tendency goes, there are basically three different measures that we use.

- The most popular of these is the mean, or arithmetical average, abbreviated M, which is computed simply by adding up the individual scores and dividing by the number of observations.

- The median is that point which divides the distribution exactly in half: below the median there are 50% of the observations, and 50% of the observations are above the median. We can determine the median simply by rank-ordering the observations and finding the point that divides the distributions in half.

- The mode is simply the most frequent observation. If two different points share the highest frequency, we call the distribution bimodal.

Next, we need to have some way of characterizing the variability of the observations, the variability in the data, or the dispersion of observations around the center. The commonest statistics for this purpose are known as:

- the standard deviation (abbreviated SD);

- the variance, which is simply the square of the SD;

- the standard error of the mean.

For an exam, you should know how to determine the mean, median, and mode of a set of observations. I almost always ask this question on an exam, and it's just a matter of simple arithmetic. But you do not need to know how to calculate measures of variability like the standard deviation and the standard error. Conceptually, however, the standard deviation has to do with the difference between observed scores and the mean. If most of the observations in a distribution huddle close to the mean, the variability will be low. If many observations lie far from the mean, the variability will be high.

The standard deviation, then, is the measure of the dispersion of individual scores around our sample mean. But what if we took repeated samples from the same population. Each time we'd get a slightly different mean and a slightly different standard deviation, because each sample would be slightly different from the others. They wouldn't be identical. The standard error is essentially a measure of the variability among the means of repeated samples drawn from the same population. You can think of ti as the standard deviation of the means calculated from repeated samples, analogous to the standard deviation of the mean calculated from a single sample.

Most psychological measurements follow what is known as the normal (or Gausian) distribution. If you plot the frequency with which various scores occur, you obtain a more-or-less bell-shaped curve that is more-or-less symmetrical around the mean, and in which the men, the median, and the mode are very similar. In a normal distribution, most scores fall very close to the mean, and the further you get from the mean, the fewer scores there are. If you have a perfectly normal distribution, the mean, the median, and the mode are identical, but we really don't see that too much in nature.

The normal distribution follows from what is known as the central limit theorem in probability theory. That's all you have to know about this unless you take a course in probability and statistics. And, for this course, you don't even have to know that! But I have to say it.

One of the interesting features of a normal distribution is that the scatter or dispersion of scores around the mean follows a characteristic pattern known as The Rule of 68, 95, and 99. What this means is that in a large sample:

- approximately 68% of the observations will fall within 1 standard deviation of the mean;

- approximately 95 % will fall within 2 standard deviations;

- and approximately 99% of the observations will fall within 3 standard deviations (actually, 99.7%, but who's counting?).

10,00 Dice Rolls...

A nice visualization of probability and the normal distribution in action comes from Kirkman Amyx, a Bay Area artist whose work, as described on his website, "explores the use of photography as a data visualization tool which can allow for seeing patterns, structure, and meaning through image repetition. These conceptually based projects which utilize a hint of science, data analysis, and the measurement of time, are ultimately visual inquiries and explorations of phenomena."

Consider his

digital painting, "10,000 Dice Rolls CMV", Amyx

writes: "This visual exploration is investigating

the dichotomy between chance and predictably, the theory

of probability, and the law of large numbers. Over a

period of 10 hours 10,000 dice were rolled, and each

outcome photographed in the location of the fall. Digital

compilations and a 6 minute video were made of the 10,000

files to show how a random but repeated event can quickly

produce a predictable pattern. Here you can see that most

of the rolls fell in the center of the space, with very

few along the outer edges. If you measured the distance

between the center of the space and the location where

each dice roll fell, and then calculated the mean and

standard deviation, you would find that roughly 68% of the

rolls would fall within 1 standard deviation of the center

of the space, 95% would fall within 2 SDs, and

99% within 3 SDs. ("CMV" stands for "Cyan,

Magenta, Violet", the three colors in which the dice rolls

were printed.)

Consider his

digital painting, "10,000 Dice Rolls CMV", Amyx

writes: "This visual exploration is investigating

the dichotomy between chance and predictably, the theory

of probability, and the law of large numbers. Over a

period of 10 hours 10,000 dice were rolled, and each

outcome photographed in the location of the fall. Digital

compilations and a 6 minute video were made of the 10,000

files to show how a random but repeated event can quickly

produce a predictable pattern. Here you can see that most

of the rolls fell in the center of the space, with very

few along the outer edges. If you measured the distance

between the center of the space and the location where

each dice roll fell, and then calculated the mean and

standard deviation, you would find that roughly 68% of the

rolls would fall within 1 standard deviation of the center

of the space, 95% would fall within 2 SDs, and

99% within 3 SDs. ("CMV" stands for "Cyan,

Magenta, Violet", the three colors in which the dice rolls

were printed.)

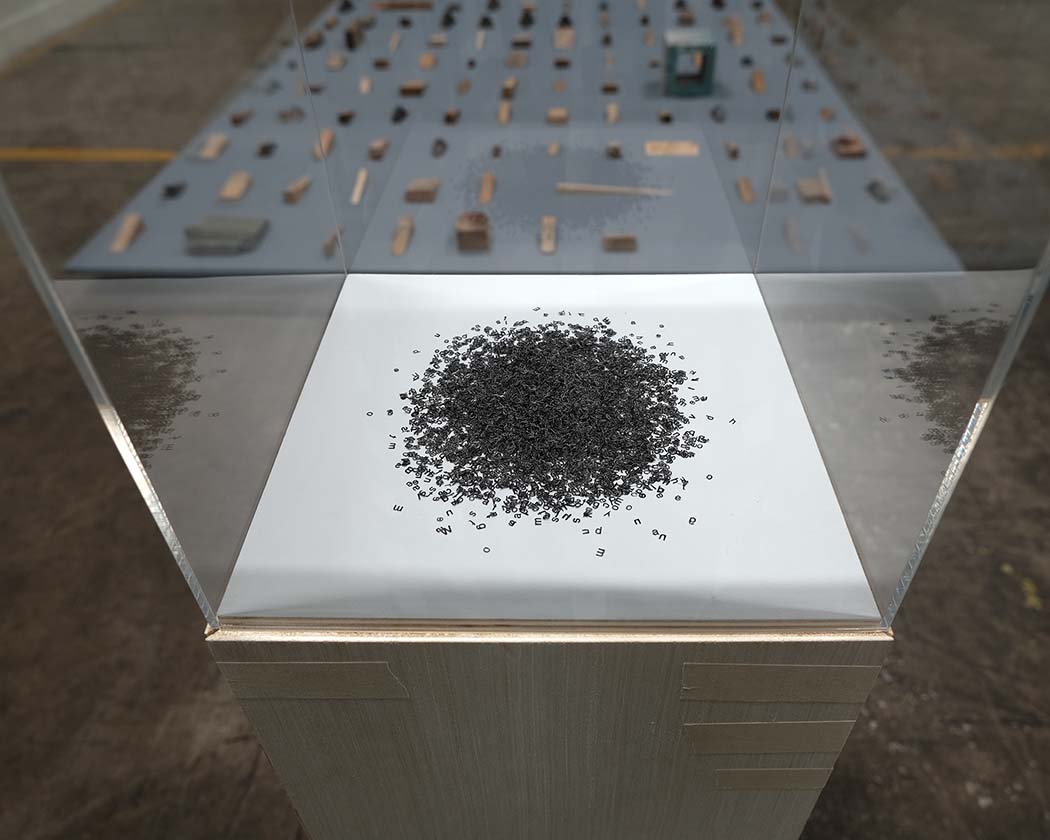

...and

9,519 Characters

A

three-dimensional example of this type is "We Choose the

Moon", an artwork by Eli Blasko, a Tucson-based artist who

works in various media (the artwork, made in 2020, was

displayed in the 2023 Biennial Exhibition at the Tucson

Museum of Art). In order to make the piece, Blasko

took the transcript of a 1962 speech by President John F.

Kennedy) announcing the Apollo moonshot program ("We

choose to go to the moon in this decade and do the other

things, not because they are easy, but because they are

hard..."), cut out each individual letter, and then

dropped all 9,519 cutouts onto a piece of paper. The

resulting mound has a peak at the center, and then spreads

out pretty symmetrically until there are only single

characters at the very circumference. If you took a

cross-section of the mound, it would look a lot like the

normal curve. If you drew concentric rings, you'd

find that about 68% of the cutouts fell within 1 SD

of the center, 95% within 2 SD, and 99% within 3 SD.

Here are some other views of Blasko's artwork (all images

from Blasko's website).

A

three-dimensional example of this type is "We Choose the

Moon", an artwork by Eli Blasko, a Tucson-based artist who

works in various media (the artwork, made in 2020, was

displayed in the 2023 Biennial Exhibition at the Tucson

Museum of Art). In order to make the piece, Blasko

took the transcript of a 1962 speech by President John F.

Kennedy) announcing the Apollo moonshot program ("We

choose to go to the moon in this decade and do the other

things, not because they are easy, but because they are

hard..."), cut out each individual letter, and then

dropped all 9,519 cutouts onto a piece of paper. The

resulting mound has a peak at the center, and then spreads

out pretty symmetrically until there are only single

characters at the very circumference. If you took a

cross-section of the mound, it would look a lot like the

normal curve. If you drew concentric rings, you'd

find that about 68% of the cutouts fell within 1 SD

of the center, 95% within 2 SD, and 99% within 3 SD.

Here are some other views of Blasko's artwork (all images

from Blasko's website).

|

|

|

It's important

to remember that means are only estimates of population

values, given the observations in a sample. If we measured

the entire population we wouldn't have to estimate the mean:

we'd know exactly what it is. But in describing sample

distributions we define a confidence interval

of 2 standard deviations around the mean. So given the mean

score, and a reasonably normal distribution of scores, we

can be 95% confident that the true mean lies somewhere

between 2 standard deviations below and 2 standard

deviations above the mean. Put another way, there is only a

5% chance that the true mean lies outside those limits: p < .05

again!

It's important

to remember that means are only estimates of population

values, given the observations in a sample. If we measured

the entire population we wouldn't have to estimate the mean:

we'd know exactly what it is. But in describing sample

distributions we define a confidence interval

of 2 standard deviations around the mean. So given the mean

score, and a reasonably normal distribution of scores, we

can be 95% confident that the true mean lies somewhere

between 2 standard deviations below and 2 standard

deviations above the mean. Put another way, there is only a

5% chance that the true mean lies outside those limits: p < .05

again!

People whose scores fall outside the confidence interval are sometimes called "outliers", who may differ from the rest of the sample in some way. So that gives us a second rule -- what you might call "The Rule of 2": if there are a lot of subjects with scores more than 2 standard deviations away from the mean, this is unlikely to have occurred merely by chance. If you watch the television news, you'll see that confidence intervals are also reported for other kinds of statistics. So, for example, when a newscaster reports the results of a survey or a political poll, he or she may report that 57% of people prefer one candidate over another, with a confidence interval of 3 percentage points. In that case, we can be 95% certain that the true preference is somewhere between 54% and 60%. The calculation is a little bit different, but the logic of confidence intervals is the same.

An interesting example occurred in an official report of the US Bureau of Labor Statistics for May 2012 (announced in June). Reflecting the slow recovery from the Great Recession that began with the financial crisis of 2008, unemployment was estimated at 8.2% (up a little from the months before); it was also estimated that 69,000 new jobs had been created -- not a great number either. But the margin of error around the job-creation estimate was 100,000. This means that as many as 169,000 jobs might have been created that month -- but also that we might have lost as many as 31,000 jobs! Sure enough, the July report revised the May figure upward to 77,000 new jobs, and the August report revised it still further, to 87,000 new jobs.

Here's another one, of even greater importance. In the case of Atkins v. Virginia (2002), the US Supreme Court held that it was unconstitutional to execute a mentally retarded prisoner, on the grounds that it was cruel and inhuman. However, it left vague the criteria for identifying someone as mentally retarded. After that decision was handed down, Freddie Hall, convicted in Florida for a brutal murder, appealed his death sentence on the grounds that he was mentally retarded (Hall didn't seek an insanity defense: he only wished to prevent his execution for the crime). As we'll discuss later in the lectures on Psychopathology, the conventional diagnosis of mental retardation requires evidence of deficits in both intellectual and adaptive functioning, with both deficits manifest before age 18. As discussed later in the lecture on Thought and Language, the conventional criterion for intellectual disability is an IQ of 70 or less. Given the way that IQ tests are scored, this would put the person at least two standard deviations below the population mean, and most states employ something like that criterion. Hall, unfortunately for him, scored slightly above 70 when he was tested, and Florida law employed a strict cutoff IQ of 70, allowing for no "wiggle-room" (it also uses IQ as the sole criterion for mental retardation). In Hall vs. Florida (2014), Hall's lawyers argued that such a cutoff was too strict, and didn't take into account measurement error. That is to say, while he might have scored 71 or 73 (or, on one occasion, 80) when he was tested, the measurement error of the test was such that there was some chance that his true score was below 70. Put another way, the confidence interval around his scores was such that it was possible that his true score was 70 or below. In a 5-4 decision, the Court agreed, mandating that Florida (and other states that also have a "bright line" IQ cutoff) must take account of both the measurement error of IQ tests and other evidence of maladaptive functioning in determining whether a condemned prisoner is mentally retarded.

In industry, a popular quality-control standard, pioneered by the Motorola Corporation, is known as six sigma. In statistics, the standard deviation is often denoted by the Greek letter sigma, and the six-sigma rule aims to establish product specifications that would limit manufacturing defects to the lower tail of the normal distribution, more than 6 standard deviations ("six sigma") below the mean. Thinking about the "Rule of 68, 95, and 99", and remembering that 32% of observations will fall outside the 1SD limit, half above and half below, that means that a "one sigma" rule would permit 16% manufacturing defects (((100-68)/2 = 16) which is pretty shoddy work); a rule of "two sigma" would permit defects in 2.5% of products ((100-95)/2), and a rule of "three sigma" would permit defects in 0.5% of products((100-99)/2). Most statistical tables stop at 3 SDs, but if you carry the calculation out, in practice the "six sigma" rule sets a limit of 3.4 defects per million, or 0.0000034%). Now,that's quality!

Scrubbing the Test

In New York State, students are required to pass "Regents Examinations" in five areas -- math, science, English, and two in history or some other social science -- in order to graduate from high school. The exams are set by the state Board of Regents (hence the name), and consist of both multiple-choice and open-ended questions. In order to pass a Regents' Exam, the student must get a score of 65 out of 100 (a previous threshold of 55 was deemed too low). Up until 2011, State policy permitted re-scoring tests that were close to the threshold for passing, in order to prevent students from being failed simply because of a grading error. The exams are used both to evaluate student competence in the areas tested, and in the preparation of "report cards" for schools and principals.

I was educated in New York State, and, at least at that time, the Regents Exams were things of beauty. They covered the entire curriculum (algebra and , trigonometry, biology and chemistry, world and US history, Latin and German, etc.; I believe they even had them in music and art). To be sure, they were high-stakes tests. You could not get a "Regents" diploma, making you eligible for college admission, if you did not pass them. If you passed the "Regents" you passed the course, regardless of whether you actually took it (many students took at least one required "Regents" exam in summer school, in order to make room in their schedules for electives). And if you failed the test you failed the course, no matter how well you did throughout the academic year on "local" exams. But nobody complained about "teaching to the test", because everybody -- teachers, principals, students, and parents alike -- understood that the tests fairly represented the curriculum that was supposed to be taught. They had what psychometricians call content validity. (The same principle of content validity underlies the construction of exams in this course.)

![]() However,

in February 2011, a study by the New York Times

found an anomaly in the distribution of scores on five

representative Regents Exams. For the most part, the

distribution of scores resembled the normal bell-shaped

curve -- except that more students scored exactly

65, and fewer students received scores of 61-64, than

would be expected by chance. Apparently, well-meaning

reviewers were "scrubbing the test", mostly when

evaluating the open-ended essay questions, in order to

give failing students just enough extra points to pass

(or, perhaps they were cheating, in order to make their

schools look better than they were.In May of that year,

the New York State Department of Education issued new

regulations policy forbidding re-scoring the exams -- both

the essay and multiple-choice sections.

However,

in February 2011, a study by the New York Times

found an anomaly in the distribution of scores on five

representative Regents Exams. For the most part, the

distribution of scores resembled the normal bell-shaped

curve -- except that more students scored exactly

65, and fewer students received scores of 61-64, than

would be expected by chance. Apparently, well-meaning

reviewers were "scrubbing the test", mostly when

evaluating the open-ended essay questions, in order to

give failing students just enough extra points to pass

(or, perhaps they were cheating, in order to make their

schools look better than they were.In May of that year,

the New York State Department of Education issued new

regulations policy forbidding re-scoring the exams -- both

the essay and multiple-choice sections.

Links to articles by Sharon Otterman in the New York Times describing the study (02/19/2011) and its consequences for New York State education policy (05/2/2011), from which the graphic was taken.

Comparing Scores

The

The  normal distribution offers

us a way of comparing scores on two tests that are scaled

differently. Imagine that we have tests of extraversion and

neuroticism from the NEO-Five Factor Inventory, whose scores

can range from 0-48. A subject scores 20 on the both scales.

Does that mean that the person is as neurotic as s/he is

extraverted? There are several ways to approach this

question. Both require that we have information about the

distribution of scores on the two tests, based on a

representative sample of the population. This information

was provided by the authors of the NEO-FFI, based on the

results of a standardization sample consisting of almost 500

college-age men and almost 500 college-age women. The

distributions of test scores would look something like

these.

normal distribution offers

us a way of comparing scores on two tests that are scaled

differently. Imagine that we have tests of extraversion and

neuroticism from the NEO-Five Factor Inventory, whose scores

can range from 0-48. A subject scores 20 on the both scales.

Does that mean that the person is as neurotic as s/he is

extraverted? There are several ways to approach this

question. Both require that we have information about the

distribution of scores on the two tests, based on a

representative sample of the population. This information

was provided by the authors of the NEO-FFI, based on the

results of a standardization sample consisting of almost 500

college-age men and almost 500 college-age women. The

distributions of test scores would look something like

these.

Here's a side-by-side comparison,

showing the mean, median, and mode of each distribution, and

locating our hypothetical subject who scores 20 on both

scales. Note that the distribution of extraversion is pretty

symmetrical, while the distribution of neuroticism is

asymmetrical. This asymmetry, when it occurs, is called skewness.

In this case, neuroticism shows a marked positive skew

(also called rightward skew), meaning that there

are relatively few high scores in the distribution.

Understand the concept of skewness, but don't get hung up on keeping the different directions straight-- positive vs. negative, left vs. right. I mix them up myself, and would never ask you to distinguish between positive and negative skewness on an exam. In unimodal distributions:

- In positive skewness, the mean is higher than the median.

- In negative skewness, the mean is lower than the median.

In

fact, we have a number of different

ways of putting these two scores on a comparable basis.

- First, we can calculate the subject's scores in terms of percentiles. We order the scores, from lowest to highest, and we determine what percentage of the sample have scores below 20. As it happens, a score of 20 is below the median (50th percentile) for extraversion, but above the median for neuroticism.

- More precisely, a score of 20 on the NEO-FFI Neuroticism scale corresponds to a percentile score of 69;

- and a score

of 20 on the NEO-FFI Extraversion scale corresponds to

a percentile score of 12.

So, we can say that the subject is not very extraverted, but he's somewhat neurotic. More to the point, it seems that he's more neurotic than extraverted.

- Another way to do this is to calculate Z-scores, representing the distance of the subject's scores from the sample mean.

- The NEO-FFI norms show that mean score on the Neuroticism scale is 19.07, with a standard deviation of approximately 7.46 (aggregating data from males and females). So, the subject's Z-score for neuroticism is +0.12.

- The mean

score on the Extraversion scale is 27.69, with a

standard deviation of approximately 5.83. So, the

subject's Z-score for extraversion is -1.32.

So, once again we can say that the subject is more neurotic than he is extraverted.

- A variant on the Z-score is he T-score (where the "T" stands for "True"). This is simply a transformation of the Z=score to a conventional mean of 50 and a standard deviation of 10 == much like IQ scores are transformed to a conventional mean of 100 and a standard deviation of 15. T-scores are often used in the interpretation of personality inventories such as the MMPI, CPI, and various versions of the NEO-PI.

- A score of 20 on the NEO-FFI Neuroticism sub-scale corresponds to a T-score of approximately 52 (averaging across males and females).

- A score of

20 on the NEO-FFI Extraversion sub-scale corresponds

to a T-score of approximately 39..

Again, our subject is more neurotic than average, less extraverted than average, and more neurotic than extraverted.

Now let's see how descriptive and inferential statistics work out in practice.

Testing a Hypothesis

Sometimes it would seem that you wouldn't need statistics to draw inferences -- they seem self-evident. They pass what researchers jokingly call the traumatic interocular test -- meaning that the effect hits you right between the eyes.

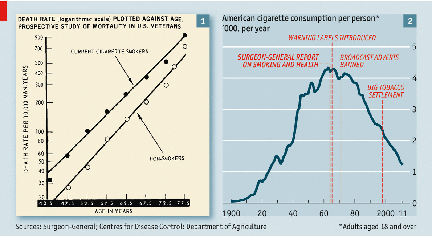

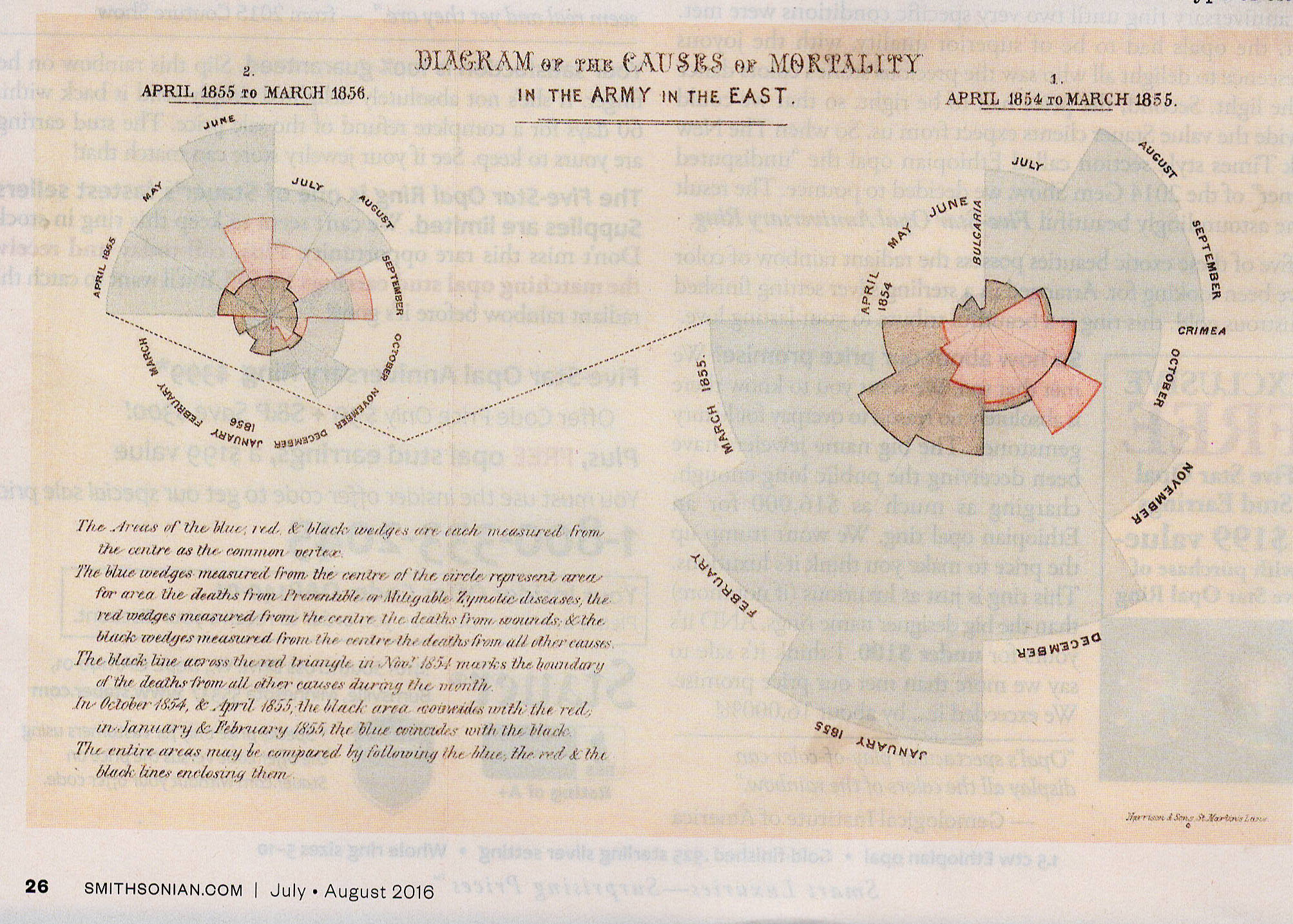

Consider this famous graph from the

1964 Surgeon General's report on "Smoking and Health".

This shows the death rate plotted against age for US service

veterans. The two lines are virtually straight,

showing that the likelihood of dying increases as one gets

older -- big surprise. But the death rate for smokers

at any age is consistently higher than that for non-smokers

-- which would seem to support the Surgeon General's

conclusion that, on average, smokers die younger than

nonsmokers. But is that difference really significant,

or could it be due merely to chance? And how big is

the difference, really? The answers are "yes" and

"big", but the cigarette manufacturers resisted the Surgeon

General's conclusions (and continue to resist them to this

day!). So before we can convince someone that

cigarettes really do harm every system in the body (the

conclusion of the most recent Surgeon General's report,

issued in 2014 on the 50th anniversary of the first one), we

need to perform some additional statistical analyses.

Consider this famous graph from the

1964 Surgeon General's report on "Smoking and Health".

This shows the death rate plotted against age for US service

veterans. The two lines are virtually straight,

showing that the likelihood of dying increases as one gets

older -- big surprise. But the death rate for smokers

at any age is consistently higher than that for non-smokers

-- which would seem to support the Surgeon General's

conclusion that, on average, smokers die younger than

nonsmokers. But is that difference really significant,

or could it be due merely to chance? And how big is

the difference, really? The answers are "yes" and

"big", but the cigarette manufacturers resisted the Surgeon

General's conclusions (and continue to resist them to this

day!). So before we can convince someone that

cigarettes really do harm every system in the body (the

conclusion of the most recent Surgeon General's report,

issued in 2014 on the 50th anniversary of the first one), we

need to perform some additional statistical analyses.

The other graph plots US cigarette consumption per person from 1900 to 2011. Cigarette use increases steadily, but then seems to take a sharp turn downward when the Surgeon General issued his report. But did it really? And how quickly did smoking behavior begin to change? That there's been a change is self-evident, but how much of the change was caused by the report itself, compared to the introduction of warning labels, or the banning of cigarette advertisements on radio and TV. to figure these things out, again, we need additional statistical analyses.

That's what inferential statistics do: enable us not just to describe a pattern of data, but to test specific hypotheses about difference, association, and cause and effect.

The Sternberg Experiment

Consider

a simple but classic psychological experiment by Saul

Sternberg, based on the assumption that mental processes

take time -- in fact, Sternberg's experiment, which deserves

its "classic" status, represented a modern revival of

reaction-time paradigm initiated by Franciscus Donders in

the 19th century.

In Sternberg's experiment, a subject is shown a set of 1 to 7 letters, say C--H--F--M--P--W, that comprise the study set. After memorizing the study set, he or she is presented with a probe item, say --T--, and must decide whether the probe is in the study set. Answering the question, then, requires the subject to search memory and match the probe with the items in the study set. There are two basic hypotheses about this process. One is that memory search is serial, meaning that the subject compares the probe item to each item in the study set, one at a time. The other is that search is parallel, meaning that the probe is compared to all study set items simultaneously.

How to distinguish between the two hypotheses? Given the assumption that mental processes take time, it should take longer to inspect the items in a study set one at a time, than it does to inspect them simultaneously. Or, put another way, if memory search is serial, search time should increase with the size of the study set; if memory search is parallel, it should not.

Search time may be estimated by response latency -- the time it takes the subject to make the correct response, once the probe has been presented. So, in the experiment, Sternberg asked his subjects to memorize a study set; then he presented a probe, and recorded how long it took the subjects to say "Yes" or "No". (Subjects hardly ever make errors in this kind of task.) His two hypotheses were: (1) that response latency would vary as a function of the size of the memory set (under the hypothesis of serial search, more comparisons would take more time); and (2) response latencies for "Yes" responses would be, on average, shorter than for "No" responses (because subjects terminate search as soon as they discover a match for the probe, and only search all the way to the end of the list when the probe is at the very end, or not in the study set at all.

So in the Sternberg-type experiment, a group of subjects, selected at random, are all run through the same procedure. From one trial to another, the size of the study set might be varied from 1, 3, 5, and 7 items; and on half the trials the correct answer is "Yes", indicating that the probe item was somewhere in the study set, while on the other half of the trials the correct answer is "No", meaning that the probe was missing. These two variables, set size and correct response, which are manipulated (or controlled) by the experimenter, are known as the independent variables in the experiment. The point of the study is to determine the effects of these variables on response latency, the experimental outcome measured by the experimenter, and which is known as the dependent variable. In properly designed, well-controlled experiments, changes in the dependent variable are assumed caused by changes in the independent variable.

As it happens, Sternberg found that

response latency varied as a function of the size of the

memory set. It took subjects about 400 milliseconds to

search a set consisting of just one item, about 500

milliseconds to search a set of 3 items, about 600

milliseconds to search a set of 5 items, and about 700

milliseconds to search a set of 7 items.

The Sternberg task has been of great interest to psychologists, because it seems to offer us a view of the mind in operation: we can see how long various mental processes take. Apparently, we search memory one item at a time, in series, and it takes about 50 milliseconds to search each item in the memory set.

Now suppose we were interested in the question of whether age slows down the process of memory search. We know that the elderly have more trouble remembering things than do young adults, and it is a reasonable to suspect that this is because aging slows down the memory search process. Based on the theory that aging slows down mental processes, we derive the hypothesis that older subjects will be slower on the Sternberg task than younger subjects. If we test this hypothesis, and it proves to be correct, that supports the theory. If the hypothesis proves to be incorrect, then it's back to the drawing board.

In order to test the hypothesis, and determine whether our theory is supportable, we recruit two groups of 10 adults, one young and the other elderly, and run them on a version of the Sternberg memory-search task. Of course, any differences observed between young and old subjects might be due to a host of variables besides age per se. Accordingly, we select our subjects carefully so that the two groups are matched as closely as possible in terms of IQ, years of education, socioeconomic status, and physical health.

Note that in this experiment we are not comparing every young person in the world to every old person. That might be nice, but it is simply not possible to do so. Instead, we draw a small sample from the entire population of young people, and another sample from the population of elderly. Of course, it is important that our samples be representative of the populations from which they are drawn -- that is, that the people included in the samples possess the general characteristics of the population as a whole.

Note, too, that age, the independent variable in our little experiment, is not exactly manipulated by the experimenter. We can't take 20 people and randomly assign 10 of the to be young, and the other 10 old -- any more than, if we were interested in gender differences, we could randomly assign some subjects to be male and others to be female! Instead, we have to be satisfied with sampling our subjects based on the pre-existing variable of age. Because we rely on pre-existing group differences, which we treat as if they resulted from an experimental manipulation, our little experiment is, technically, a quasi-experiment. But the logic of experimental inference is the same.

Whether we're randomly assigning subjects to conditions, or selecting subjects based on some pre-existing variable, it is important to eliminate potentially confounding variables. For example, if young people are generally healthier than old people, group differences in physical infirmity might account for differences in reaction time. In some experiments, this problem is solved by means of random assignment: subjects are assigned by chance alone to one experimental group or another. In this way, the experimenter hopes to spread potentially confounding variables evenly between the groups. This might be the case if we wanted to investigate the effects of drugs (such as alcohol) on memory search. We would select a sample of college students, divide them into two groups, have one group take alcohol and the other take a placebo (the independent variable), and test response latency (the dependent variable). Most such experiments shows that alcohol slows response latencies, which is why if you drink you shouldn't drive.

- An experiment that has separate groups of subjects assigned to experimental and control conditions uses a between-subjects design.

- If all subjects are run under both experimental and control conditions, this is called a within-subjects design.

Obviously, people cannot be assigned randomly to groups differing on age, any more than they can be randomly assigned to gender. Accordingly, we employ a stratified sample design in which subjects are divided into levels according to the independent variable -- in this case, age. In order to eliminate the effects of potentially confounding variables, however, we make sure that the subjects are matched on every other variable that could possibly have an effect on the dependent variable.

We conduct a simple version of Sternberg's experiment, involving only a single set size, five items, and observe the following results (mean response latencies calculated for each subject over several trials, measured in milliseconds). Remember, this is fabricated data, for purposes of illustration only.

|

Group |

Pairs of Subjects |

|||||||||

|

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

|

|

Young |

635 |

725 |

630 |

675 |

625 |

640 |

660 |

635 |

645 |

650 |

|

Elderly |

740 |

695 |

730 |

725 |

750 |

745 |

710 |

760 |

735 |

725 |

The reaction times look different, by about 100 milliseconds, according to the Traumatic Interocular Test, but how can we be sure that this difference is something to be taken seriously? Notice that not every subject within each age group performed in precisely the same manner: there are individual differences in performance on the Sternberg task.

- "Individual differences" is another way of referring to between-subject or within-group variance. In particular, some of the elderly subjects performed better than some of the young subjects.

- There is also within-subject variance, reflecting the fact that individual subjects don't perform precisely the same way on each trial.) What is the "true value" of response latency for old and young subjects? You don't see the within-subject variance in this table, because it presents only individual subjects' mean scores. But of course, over trials each subject showed some variability around his or her mean, and that is within-subject variance.

- Within-subject

variance also occurs in within-subject

designs,

where the same subjects

are exposed to all conditions

of the experiment. Of course,

we can't do that in this experiment.

Furthermore, notice that one of the young subjects showed a slower reaction time than one of the old subjects. Given the differences in performance within each group, is it possible that the differences we observe between the two groups are due merely to chance factors, and that if we drew another sample of old and young subjects the difference would disappear, or even reverse? In order to check the reliability of experimental outcomes, psychologists employ statistical tests.

A Digression on Probability

What does it mean to say that an

observation -- such as the difference between two groups --

might be due to chance factors? What it means is that the

difference might not be significant, because it might well

occur simply by chance, and that if we conducted the

experiment again, we might get quite different observations,

and a different difference between the two groups -- again,

just by chance" by the roll of the dice, as it were.

What does it mean to say that an

observation -- such as the difference between two groups --

might be due to chance factors? What it means is that the

difference might not be significant, because it might well

occur simply by chance, and that if we conducted the

experiment again, we might get quite different observations,

and a different difference between the two groups -- again,

just by chance" by the roll of the dice, as it were.

In fact, probability theory has its origins in analyses of games of chance -- dice, cards, and the like -- by Gerolamo Cardano in the 16th century, and -- more famously -- Pierre de Fermat (he of Fermat's Last Theorem fame) and Blaise Pascal (he of Pascal's Conjecture fame) in the 17th century.

Consider

an event that can have a fixed number of outcomes -- like

the roll of a die or a draw from a deck of cards.

Consider

an event that can have a fixed number of outcomes -- like

the roll of a die or a draw from a deck of cards.

- In the case of the die, which has six sides, the probability of any face falling up is 1/6 (assuming that the die isn't "loaded"). So, the likelihood of a singe roll of a die resulting in a "3" is 1/6.

- In the case of the cards, of which there are 52 in a standard deck, the probability of any particular card being drawn is 1/52 (assuming that the deck isn't "stacked"). So, the likelihood of drawing the 4 of Clubs is 1/52.

The probability of an event A can be calculated

as follows:

p(A) = The number of ways in which A can occur / The total number of possible outcomes.

- Thus, from a single roll of a single die, the probability of rolling a 4 is 1/6, because only one face of the die has 4 pips.

The probability that either one or another event will occur is the sum of their individual probabilities.

- Thus, the probability of rolling an even number is 3/6, or 1/2, because there are 3 different faces that contain an even number of pips -- 2, 4, or 6. The probability of each of these outcomes is 1/6, so the probability of any one of these occurring is 1/6 + 1/6 + 1/6 = 3/6 = 1/2.

The probability that both one and another event will occur is the product of their individual probabilities.

- Thus, the probability of rolling an even number on two successive rolls of a die is 9/36 or 1/4, because the probability of rolling an even number on the first time is 3/6, and the probability of rolling an even number the second time is also 3/6.

These calculations refer to independent probabilities, where the probability of one even does not depend on the probability of another. But sometimes probabilities are not independent.

- As noted earlier, the probability of drawing the 4 of Clubs from a deck of cards is 1/52.

But what if you now draw a second card?

- If you replace the first-drawn card in the deck, and reshuffle, the probability of drawing the 4 of Clubs on the second remains 1/52. This is called sampling with replacement.

- But if you do not replace the first-drawn card -- what is called sampling without replacement -- the probability of drawing the 4 of Clubs changes, depending on the first card you drew.

- If the first-drawn card was, in fact, the 4 of Clubs, then -- obviously -- the probability of drawing the 4 of Clubs on the second attempt goes to 0.

- If the first-drawn card was not the 4 of Clubs, then the probability of drawing the 4 of Clubs on the second attempt increases slightly to 1/51.

The law of large numbers states that, the more often you repeat a trial, the more likely it is that the outcomes will reflect chance operations.

- The average

value of a single roll of a die is (1+2+3+4+5+6) =

3.5.

- If you toss a single die 3 times, and get a 6 each time, the average value of the rolls will be (3x6)/3 = 6.

- But if you toss that same die 300 times, the average value will revert to something closer to 3.5.

Dying in a Terrorist Attack

When you're asked what the probability is of one event or another occurring, the answer is the sum of their individual probabilities.

When you're asked what the probability of one event and another occurring, the answer is the product of their individual probabilities

The

American performance artist Laurie Anderson has parlayed

this into a joke. Paraphrasing her routine:

The

American performance artist Laurie Anderson has parlayed

this into a joke. Paraphrasing her routine:

Question: What's the best way to prevent yourself from being killed by a terrorist bomber on an airplane?

Answer:

Carry a bomb onto the plane. The odds of there being one

bomb-carrying passenger on a plane are small, but the odds

of there being two bombs are even smaller!

In fact, the statistician Nate Silver has estimated the probability of dying on an airplane as a result of a terrorist attack as 1 in 25 million ("Crunching the Risk Numbers", Wall Street Journal, 01/08/2011). So if you follow Anderson's logic, the chance of there being two terrorist bombers is 1 in 525 trillion!

Descriptive Statistics

Returning to our

experiment, first we need to have some way of characterizing

the typical performance of young and old subjects on the

Sternberg task. Here, there are three basic statistics that

measure the central tendency of a set of

observations:

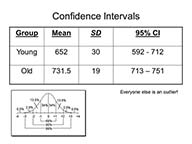

- The mean (M) is the arithmetical average, computed by adding up the numbers and dividing by the number of observations. In this case, the mean response latency for the young subjects is 652 milliseconds (6520/10), while M for the old is approximately 732 milliseconds (7315/10).

- The median is the value below which 50% of the observations are found. It is determined simply by rank-ordering the observations, and finding the point that divides the distribution in half. For the young subjects, the median is 642.5 milliseconds, halfway between 640 and 645; for the old, it is 732.5, halfway between 730 and 735.

- The mode is simply the most frequent observation. For the young subjects, the mode is 635 milliseconds, for the old it is 725.

Notice that, in this case, the mean, median, and mode for each group are similar in value. This is not always so. But in a normal distribution, these three estimates of central tendency will be exactly equal.

Second, we need to have some way of

characterizing the dispersion of

observations around the center, or variability.

The commonest statistics for this purpose are the variance

and the standard deviation (SD).

- In the case of the young subjects, the SD is approximately 30 milliseconds.

- For the old subjects the SD is approximately 19 milliseconds.

The standard deviation is a measure of the dispersion of individual values around the sample mean. But what if we took repeated samples from the same populations of young and old subjects? Each time, we'd get a slightly different mean (and standard deviation), because each sample would be slightly different from the others. The standard error of the mean (SEM) is, essentially, a measure of the variance of means of repeated samples drawn from a population.

- For the old subjects, SEM is approximately 6.

- For the young

subjects, SEM is approximately 9.

Confidence Intervals

Applying the Rule of 68, 95, and 99, we

can infer that approximately 68% of the observations will

fall within 1 standard deviation of the mean; approximately

95% of the scores will fall within 2 standard deviations;

and approximately 99% of observations will fall within 3

standard deviations.

Applying the Rule of 68, 95, and 99, we

can infer that approximately 68% of the observations will

fall within 1 standard deviation of the mean; approximately

95% of the scores will fall within 2 standard deviations;

and approximately 99% of observations will fall within 3

standard deviations.

- Thus, in a large sample of young people, we would expect that 68% of subjects would show response latencies between 622 and 682 milliseconds (652 plus or minus 30), and 95% would show latencies between 592 and 712 milliseconds (632 plus or minus 60).

- Similarly, in a large sample of old people, we would expect that 68% of the subjects would show response latencies between 713 and 751 milliseconds, 95% between 694 and 770 milliseconds.

Put another way, in terms of confidence intervals, Remember that means are only estimates of population values, given the observations in a sample (if we measured the entire population, we wouldn't have to estimate the mean -- we'd know what it is!). In describing sample distributions, we define a confidence interval as 2 standard deviations around the mean. Given the results of our experiment:

- We can be 95% confident that the true mean response latency for the entire population of young subjects is somewhere between 592 and 712 milliseconds.

- And we can be 95% confident that the true mean for the entire population of elderly subjects is somewhere between 713 and 751 milliseconds.

Note that in this instance the confidence intervals do not overlap. This is our first clue that the response latencies for young and old subjects really are different.

The normal distribution permits us to determine the extent to which something might occur by chance. Thus, for the young subjects, a response latency of 725 milliseconds falls more than 2 standard deviations away from the mean. We expect such subjects to be observed less than 5% of the time, by chance: half of these, 2.5%, will fall more than 2 standard deviations below the mean, while the remaining half will fall more than 2 standard deviations above the mean.

- In fact, there was such a subject in our sample of 10 young subjects. Perhaps this was just a random happenstance. Or perhaps this individual is a true outlier who was texting his girlfriend during the experiment!

- The same thing goes for that elderly subject whose mean reaction time was 695 milliseconds. Maybe this was a random occurrence, or maybe this is a person who, in terms of mental speed, has aged really successfully!

Inferential Statistics

The normal

distribution also permits us to determine the significance

of the difference between groups. One statistic commonly

used for this purpose is the t test(sometimes

called "Student's t", after the pseudonymous

author, now known to be W.S. Gossett (1876-1937), who first

published the test), which indicates how likely it is that

difference between two groups occurred by chance alone. You

do not have to know how to calculate a t test. Conceptually,

however, the t test compares the difference

between two group means compared to the standard deviations

around those means.

The normal

distribution also permits us to determine the significance

of the difference between groups. One statistic commonly

used for this purpose is the t test(sometimes

called "Student's t", after the pseudonymous

author, now known to be W.S. Gossett (1876-1937), who first

published the test), which indicates how likely it is that

difference between two groups occurred by chance alone. You

do not have to know how to calculate a t test. Conceptually,

however, the t test compares the difference

between two group means compared to the standard deviations

around those means.

The t test is an inferential statistic: it goes beyond mere description, and allows the investigator to make inferences, or judgments, about the magnitude of a difference, or some other relationship, between two groups or variables. There are several varieties of the t test, all based on the same logic of comparing the difference between two means to their standard error(s).

As a general rule of thumb, if two

group means differ from each other by more than 2 standard

deviations, we consider that this is rather unlikely to have

occurred simply by chance. This heuristic is known as the

rule of two standard deviations.

- In the case of our young and old subjects, note that the mean of the young subjects, 652, is more than 4 standard deviations away from the mean, 732, of the old subjects (732 - 652 = 80, 80/19 = 4.21).

- Similarly, the mean of the old subjects is more than 2 standard deviations away from the mean of the young subjects (80/30 = 2.67). Thus, these two means are so far away from each other that we consider it rather unlikely that the difference is due to chance.

But that's really conservative. A more appropriate indicator is the distance between the means in terms of standard errors.

- The mean value for the old subjects is almost nine standard errors away from the mean of the young subjects (80/9 = 8.89).

- And the mean value for the young subjects is more than 13 standard errors away from the mean of the old subjects (80/6 =13.3).

In fact, in this case, t = 7.12, p < .001, suggesting that a difference this large would occur far less than once in a thousand by chance alone).

The probability attached to any value of t depends on the number of subjects: the more subjects there are, the lower a t is needed to achieve statistical significance.

We can conclude, therefore, that the young probably do have shorter response latencies than the elderly, meaning -- if Sternberg was right that his task measured memory search -- that memory search is, on average, faster for young people than for old.

Note, however, that we can never be absolutely

certain that a difference is real. After all, there

is that one chance out of a thousand. As a rule,

psychologists accept as statistically significant

a finding that would occur by chance in only 5 out of 100

cases -- the "p < .05" that you will see so

often in research reports. But this is just a convenient

standard. In Statistical Methods for Research Workers

(1925), a groundbreaking text on statistics, Ronald Fisher,

the "father" of modern statistics, proposed p<.05,

writing that "It is convenient to take this point as a limit

in judging whether a deviation ought to be significant or not".

To "p<" or Not To "p<"

Because precise p values are hard to calculate

by hand, traditionally investigators estimated them from

published tables. Thus, in the older literature, you will

see p-values reported merely as "less" than .05

(5 chances out of 100), .01 (1/100), .005 (5/1000), and

.001 (1/1000). More recently, the advent of high-speed

computers has made it possible for investigators -- by

which I mean their computers -- to calculate exact

probabilities very easily. Hence, in the newer literature,

you will often see p-values reported as ".0136"

or some-such. In my view, this makes a fetish out of p-values,

and so I prefer to use the older conventions of

statistical significance: < .05, < .01, < .005,

and < .001 (anything less than .001, in my view, is

just gilding the lily).

Types of Errors

To repeat: there is always some probability that a result will occur simply by chance. Statistical significance is always expressed in terms of probabilities, meaning that there is always some probability of making a mistake. In general, we count two different types of errors:

- Type I error refers to the probability of accepting a difference as significant when in fact it is due to chance. In this case, we might conclude that the young and the old differ in search speed, when in fact they do not. This happens when we adopt a criterion for statistical significance that is too liberal (e.g., 10 or 20 times out of 100). Another term for Type I error is false positive.

- Type II error refers to the probability of rejecting a difference as nonsignificant when in fact it is true. In this case, we might conclude that the young and the old do not differ in response latency, when in fact they do. This happens when we adopt a criterion that is too strict (e.g., 1 in 10,000 or 1 in 1,000,000). Another term for Type II error is false negative.

Note that the two types of errors compensate for each other: if you increase the probability of a Type I error, you decrease the likelihood of a Type II error, and vice-versa. The trick is to find an acceptable middle ground.

Note also that it is easy to confuse Type I and Type II errors. Your instructor gets them confused all the time. For that reason, you will never be asked to define Type I and Type II errors as such on a test. However, you will be held responsible for the concepts that these terms represent: False positives and false negatives.

Of course, there is no way to eliminate the

likelihood of error entirely. However, we can increase our

confidence in our experimental results if we perform a replication

of the experiment, and get the same results. Replications

come in two kinds: exact, in which we repeat the original

procedures slightly, or conceptual, in which we vary details

of the procedure. For example, we might have slightly

different criteria for classifying subjects as young or old,

or we might test them with different set sizes. Whether the

replication is exact or conceptual, if our hypothesis is

correct we ought to get a difference between young and old

subjects.

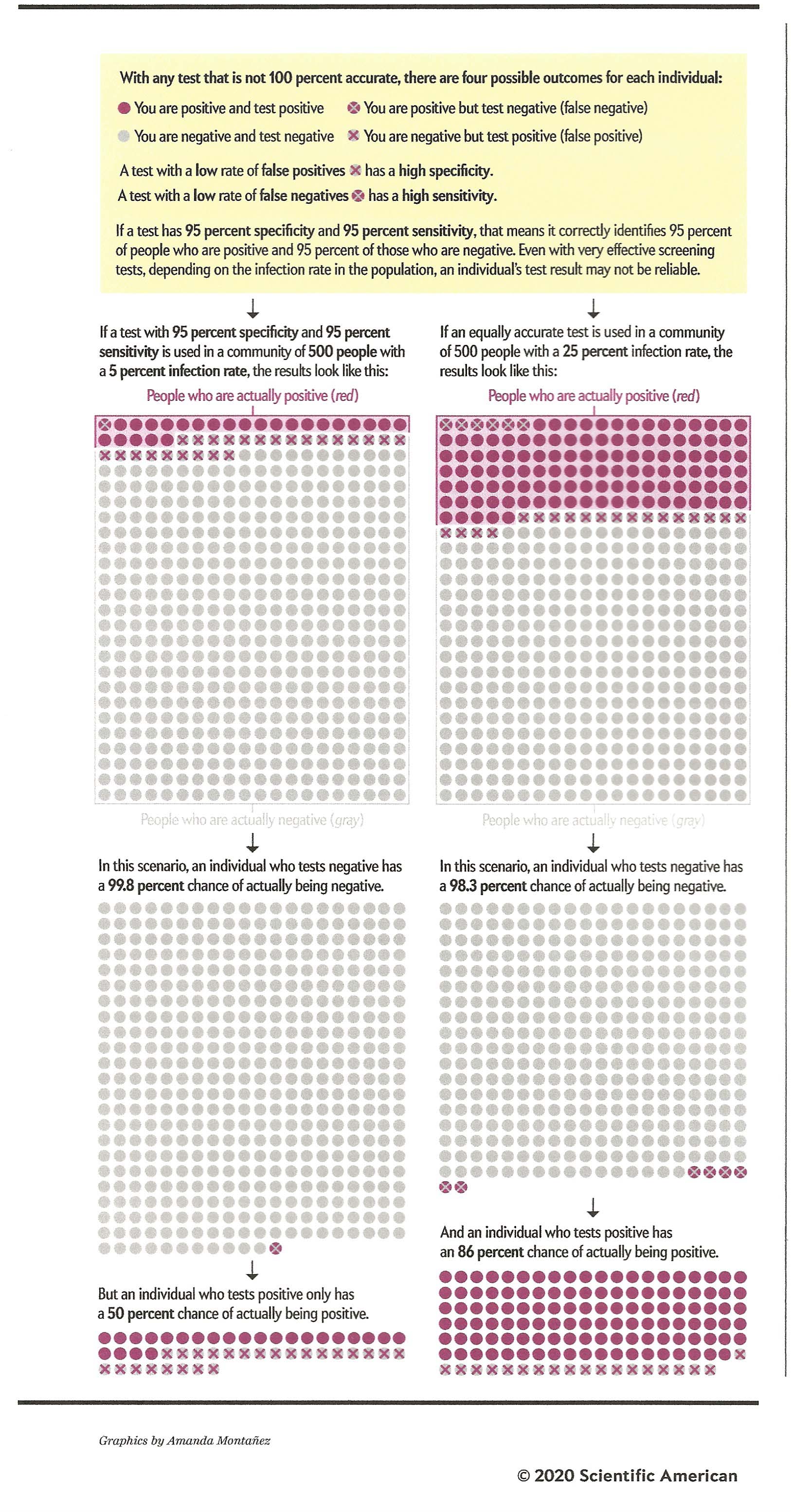

Sensitivity and Specificity in Medical TestsSetting aside the problems of experimental design and statistical inference, the issue of false positives and false negatives comes up all the time in the context of medical testing. Suppose that you're testing for a particular medical condition: a mammogram to detect breast cancer, or occult (hidden) blood in feces that might indicate colorectal cancer -- or, since I'm writing this in July 2020, a test to detect the coronavirus that caused the Covid-19 pandemic. These tests are screening devices, and positive results usually call for further testing to confirm a diagnosis.

There are two standards for the reliability of a medical test:

The "gold

standard" for medical testing is 95% sensitivity and

95% specificity. That is, it will correctly

identify 95% of those who have the disease as well

95% of those who do not have the disease

(Note: there's that "p<.05" again!).

As of July 2020, many of the available tests for

Covid-19 appear not to meet this standard,

generating lots of false-positive and false-negative

results. But -- and

here's the rub -- even if a test did have 95%

sensitivity and specificity, it still might make a

lot of errors, depending on the baserate of

the disease in question. To make a long story

short, those 95% figures really only apply when the

baserate for the disease in question is 50% -- that

is, when half the population actually has the

disease (which, to put it gently, is hardly ever the

case). To see how

this works, consider the following graph, taken from

"False Positive Alarm", an article by Sarah Lewin

Frasier in Scientific American (July 2020 --

an issue focused on the Covid-19 pandemic).

Now

consider the right-hand panels, which show the

outcomes of the same test, 95% sensitivity and 95%

specificity, for a disease whose infection rate in

the population is 25%. In a random sample of

500 people, that means that 125 will have the

disease, and 375 will be healthy. At 95%

sensitivity, the test will correctly identify 119

individuals as positive (95% of 125), and miss only

6; and at 95% specificity, it will correctly

identify 356 people as disease free (95% of 375),

and incorrectly identify only 19 as having the

disease. That means that, of all 138

individuals who received positive test results, 119

(86%) were diagnosed correctly as having the

disease, and only 19 (14%) were diagnosed

incorrectly as being disease-free. That's a much

better ratio. Not shown

in the Scientific American graphic is the

case were the infection rate is 50%. You can

work out the arithmetic for yourself, but under

these circumstances, the test will miss only 12

individuals with the disease (5%), and incorrectly

diagnose only 12 healthy people (another 5%). Of course

for most diseases, the infection rate is going to be

closer to 1% than 50% or even 25%. By way of

comparison, the lifetime prevalence rate for breast

cancer in women is about 12%; for colon cancer,

about 4%. For Covid-19, as of July 2020 about

9% of diagnostic tests were positive for the

coronavirus; but, at that time most people being

tested already show symptoms of coronavirus

infection, such as fever, dry cough, or shortness of

breath; so the actual prevalence rate is probably

lower than that. A little more than 1% of the

population had tested positive for the coronavirus;

but again; this figure was not based on a random

sample and most positive cases were

asymptomatic. Still, under such circumstances,

where the baseline prevalence rate in the population

is closer to 1% than 50%, the probability of getting

a false positive test result is pretty high -- which

is why it's important that the sensitivity and

specificity of a test be as high as possible.

Unfortunately, for Covid-19, we so many different

tests are being used that we don't really know much

about their sensitivity and specificity; nor, if we

get a test, do we have much control over whether we

get one that is highly reliable.

Of course,

a lot depends on the costs associated with making

the two kinds of mistakes. We may be willing

to tolerate a high rate of false positives, if the

test has a low rate of false negatives. We'll

take up this matter again, in the lectures on

"Sensation", when we discuss signal-detection

theory, which takes account of both the

expectations and motivations of the judge using a

test. The same

arithmetic applies to the evaluation of a treatment

for a disease. In this case, the cure rate is

analogous to sensitivity (how many cases does the

treatment actually cure), while the rate of negative

side effects is analogous to specificity (in how

many cases does the treatment do more harm than

good). And, to

return to psychology for just a moment, the same

arithmetic applies to the diagnosis of mental

illnesses. On many college campuses and

elsewhere, it's common to ask students to fill out

questionnaires to screen for illness such as

depression or risk of suicide. Again, these

are just screeners, and it's important to know how

they stand with respect to sensitivity and

specificity. |

Correlation

Another way of addressing the same

question is to calculate the correlation

coefficient (r), also known as

"Pearson's product-moment correlation coefficient". The

correlation coefficient is a measure of the direction and

strength of the relationship between two variables. If a

correlation is positive that means that

the two variables increase (go up) and decrease (go down)

together: high values on one variable are associated with

high values on the other. If a correlation is negative,

as one variable increases in magnitude the other one

decreases, and vice-versa. The strength of a correlation

varies from 0 (zero) to 1. If the correlation is 0, then

there is no relationship between the two variables. If the

correlation is 1, then there is a perfect correspondence

(positive or negative) between the two variables). If the

correlation is in between, then there is some relationship,

but it is not perfect.

Another way of thinking about the correlation coefficient is that it expresses the degree to which we can predict the value of one variable, if we know the value of another variable. If the correlation between Variable X and Variable Y is 1.00, we can predict Y from X with certainty. If the correlation is 0, we cannot predict it at all. If the correlation is between 0 and 1, we can predict with some degree of confidence -- the higher the correlation, the higher the certainty. Such analyses are known as regression analyses, which generate regression equations in which the correlation coefficient plays an important role. In correlational research, the independent variable is usually called the predictor variable; the dependent variable is called the criterion variable.

In fact, Sternberg himself employed

regression analysis in his experiment. In the figure shown

earlier, reaction time (the dependent variable) is regressed

on set size (the independent variable). The resulting

regression equation indicates that each item in the search

set adds about 30 milliseconds to search time

In fact, Sternberg himself employed

regression analysis in his experiment. In the figure shown

earlier, reaction time (the dependent variable) is regressed

on set size (the independent variable). The resulting

regression equation indicates that each item in the search

set adds about 30 milliseconds to search time

Now, if we are right in our hypothesis that search speed is slower in elderly subjects, then we expect a positive correlation between response latency and age: the older the subject is, the longer (i.e., slower) the response latency. You do not have to know how to calculate a correlation coefficient. Conceptually, though, some idea of the correlation coefficient can be gleaned by considering the scatterplot that is formed by pitting one variable against another.

Suppose, instead of classifying our subjects as young and old, we knew their actual ages.Remember, again, that this is fabricated data, for purposes of illustration only.

|

Subject |

1 |

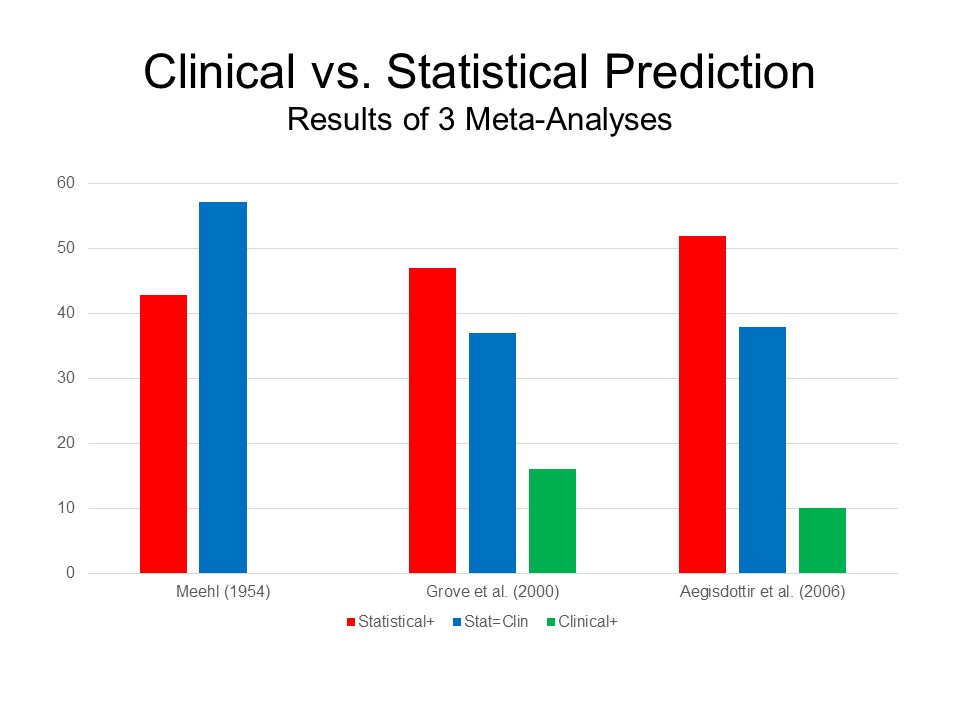

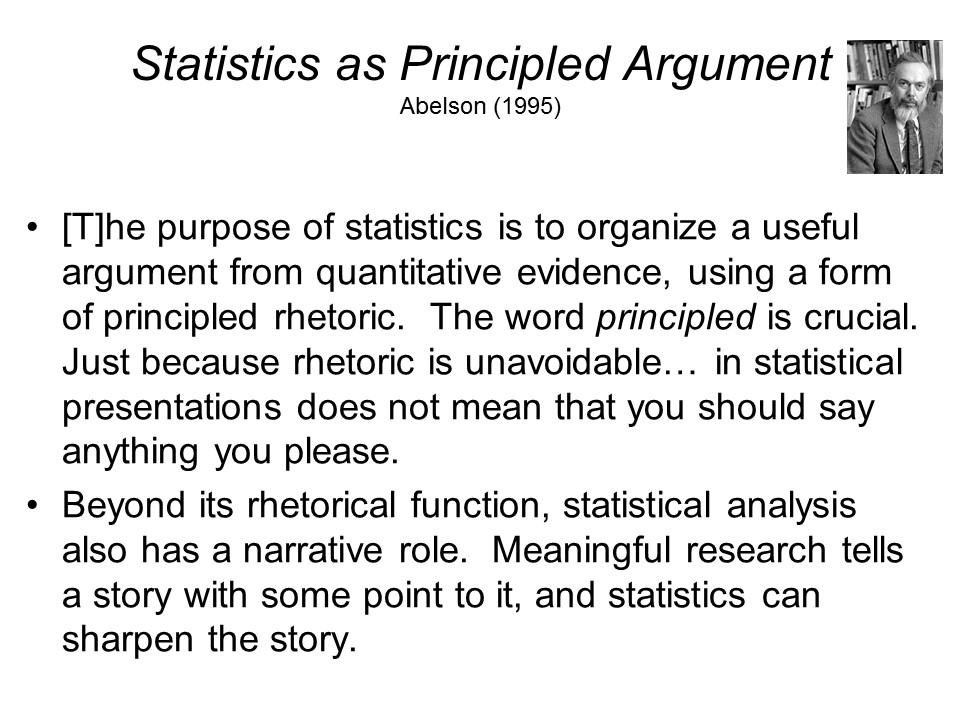

2 |