Sensation

We now understand that learning does not represent merely a "relatively permanent change in behavior resulting from experience". Rather, it is a change in knowledge. Through various learning processes, the organism acquires new knowledge that allows it to predict and control events in the world.

The implication of this cognitive view of learning is that human beings, and other organisms (primates, certainly, and probably other mammals as well, at least) are cognitive beings. Our behavior goes beyond innate, pre-programmed reflexes, taxis, and instincts, to include actions based on our knowledge and understanding of the world.

Intelligent behavior requires that we:

- acquire knowledge about ourselves and the world around us;

- store this knowledge in memory so that it will be available for use later;

- integrate this new information with prior knowledge;

- use knowledge to plan and execute actions that will cope with environmental demands and achieve personal goals; and

- use language as a tool for thinking, problem-solving, and communication with others.

The first step in this process is to form internal, mental representations of ourselves and the world around us. But how do we come to know the world?

Nativism and Empiricism

Modern philosophers and psychologists have debated two broad possible sources of knowledge.

According to the nativism associated with Rene Descartes, a 17th-century French philosopher, mind and body are separate entities (a philosophical position known as dualism). According to Descartes, who was a devout Roman Catholic, the body responds to sensory impressions, which in turn generate animal behavior as reflexes. All animal knowledge is a posteriori, a Latin phrase meaning that it is acquired through sensory experience. But the human mind contains a priori knowledge that exists independent of sensory experience, because it comes from God.

On the other side of the debate was the empiricism espoused by John Locke, a 17th-century English philosopher. Locke argued that all knowledge comes to us by way of sensory experiences, either through sensation, by which we observe external objects, or reflection, by which we observe the workings of our own minds. Locke's empiricism provided the philosophical background for the concept of conditioning, and greatly influenced Pavlov, Thorndike, Watson, and Skinner. Like these later psychologists, Locke and other empiricists argued that associations were formed between sensory stimuli, in which the presence of one object evoked the idea of another, with which it was associated; and that complex ideas were built out of higher-order associations between simple ideas.

Immanuel Kant, an 18th-century German philosopher, provided a synthesis of nativism and empiricism. Like Locke, Kant believed that knowledge was acquired through experience; but he also believed that our experience of the world presumes the prior existence of certain innate mental structures -- ideas such as space, time, and other categories of thought.

This cognitive constitution is presupposed by experience. Consider Locke's view of the association of ideas. Locke adhered to the traditional principle that ideas were associated by virtue of contiguity -- meaning spatial and temporal contiguity. But you can't experience contiguity unless you already have some idea of space and time. Never mind that association by contiguity is the wrong idea about associations. The point is that if you believe that associations are formed by virtue of the spatial and temporal contiguity between events, then you must already possess the ideas of space and time, before you can experience contiguity between them. As argued in previous lectures, modern approaches to learning suppose that associations are based on contingency rather than contiguity. But that just means that the organism must already possess the concepts of correlation and causation before it can experience contingency. Either way, Kant seems to have it right: a posteriori sensory experience presupposes a priori mental structures.

Interestingly, the phrenologists postulated faculties of time, locality (space), and causality in their system -- again, precisely the faculties that would need to be innately specified for learning to occur, under either the traditional S-R view or the modern cognitive view.

The Vocabulary of Sensation and Perception

The tension between empiricism and nativism runs throughout the study of cognition: we have already seen it in sociobiology and evolutionary psychology, and we will see it again in our treatment of language, and again in our treatment of development. But nobody -- not even Descartes -- denies the importance of sensory experience. Therefore, we begin our study of cognition by describing sensation and perception.

- Sensation detects the presence of an object or event in the environment. The function of our sensory apparatus is to answer such questions as "Has there been a change in the environment?"; "Is there something out there?"; and if so, "How strong is it?".

- Perception forms a mental representation of that object or event. The function of our perceptual apparatus is to answer such questions as "What is out there?", "Where is it?", and "What is it doing?".

The boundary between sensation and perception is not entirely clear -- it's more like the boundary between the United States and Canada or Mexico, than the Iron Curtain of the Cold War era.

In sensation, we must first distinguish between the distal stimulus and the proximal stimulus.

- The distal stimulus is the object of perception itself -- the tree, or rock, or ocean, or car that exists in the world outside the mind.

- The proximal stimulus consists of all the physical energies emanating from the distal stimulus, and which fall on the sensory receptor organs associated with our eyes, ears, etc. The proximal stimulus might be a pattern of light waves reflected from the tree, or a pattern of sound waves generated by the motion of its branches and leaves as it sways in the breeze, or some other kind of physical energy.

The first step in sensation is transduction, or the conversion of the proximal stimulus (light waves, sound waves, or whatever) into a neural impulse. This is accomplished by the sensory receptor organ. There are many different receptor organs in the body, roughly corresponding to the various modalities of sensation -- vision, audition, etc.

The neural impulse is then transmitted to the cerebral cortex of the brain (such as the primary visual area of the occipital lobe) via various neural pathways (such as the optic nerve).

Sensation continues with the observer's detection of the stimulus, and analysis of certain fundamental qualities such as intensity.

Somewhere around this point we begin to talk about perception, which ends with the construction of a mental representation -- a mental image, if you will -- of the distal stimulus, including a description of its physical features and an analysis of its meaning and implications.

The problem of perception, then, is simply this:

How do we get from the distal stimulus, through the proximal stimulus,

to the mental representation of the distal stimulus?

The Sensory Modalities

The first step in the process of forming mental representations of the world is sensation -- transforming the physical energies radiating from the stimulus in the world into neural impulses traveling through the nervous system.

How many senses are there -- how many different ways of experiencing, and knowing, the world?

The Greek philosopher Aristotle (384-322 BC), in De Anima, gave the traditional answer of five "special senses": vision, hearing, smell, taste, and touch. (This gives rise to the notion of a "sixth sense", or intuition; this "sixth sense" also plays a role in the pseudoscientific literature on parapsychology).

Even before Aristotle, and far from Greece, Mahavira (599-527, or perhaps 540-468 BCE; image at left is a statue of Mahavira at the Shri Mahavirji temple in Rajasthan, India), the Indian philosopher who founded Jainism, taught that (nearly) everything in the universe is alive, has a soul, and intelligence, and has sensory experiences. But all of matter is classified into five categories, depending on the scope of sensory modalities available to it (this list and the examples come from Great Minds of the Eastern Intellectual Tradition by Grant Hardy, 2011).

- Humans, and other large animals, have all five traditional senses.

- Flies, wasps, and butterflies have only four senses, lacking hearing.

- Ants, fleas, and moths have only three senses, lacking both hearing and sight.

- Worms, leeches, and shellfish have only taste and touch.

- Plants, the soil, the wind, water, and fire have only touch.

It's not clear where Mahavira got these ideas, but their ethical implications were clear to him. In his view, all matter, not just animals but plants and rocks as well, had just a little bit of sentience in them. And because all levels of matter included touch, all matter could suffer -- when an animal is killed for food, when a plant is harvested, when a rock is broken. And because Mahavira subscribed to the Vedic principle of ahimsa, or nonviolence, he worked hard to minimize the pain he caused other creatures. He stopped wearing clothes, because picking cotton or flax to make textiles caused the plants to suffer; and eventually he stopped eating even vegetables, and died of starvation. Modern Jains, even the most orthodox, don't go that far (or they wouldn't have lasted this long!); but they do try to minimize the suffering they cause to others, opting mostly for various forms of vegetarianism and other ascetic practices.

So, whether you're in the Greece of the West or the India of the East, there appear to be five sensory modalities. On the contrary, some authors (e.g., Stoffregen & Bardy in Behavioral & Brain Sciences, 2001) have argued that there are no differences among the various senses, and that there is no basis for assuming that sensory information reaches us via separate "channels". From this point of view, we might just as well think of ourselves as having only a single sense, sensitive to various features in a global array of stimulation.

Still, Aristotle's insight, that we have a number of different sensory modalities, remains by far the more popular view. And for most of the time since Aristotle, authorities considered that his five senses exhausted the list -- so much so that an entire genre of art developed out of the idea.

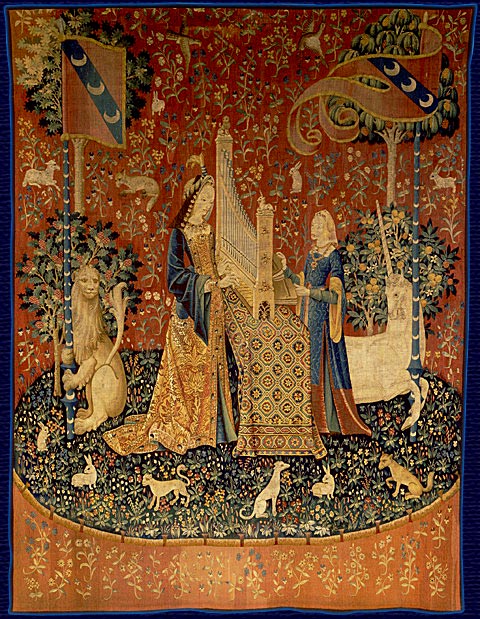

For example, five of the series of six late-medieval (c. 1500) French tapestries known as The Lady and the Unicorn depict the five senses (the sixth tapestry, on the left, is entitled "My Sole Desire"). The tapestries can be viewed at the Musee National du Moyen Age, in Paris -- and they also appear in some of the "Harry Potter" films.

The Lady and the Unicorn (c. 1500), Musee National du Moyen Age, Paris. Panels shown: Touch, Taste, Smell, Hearing, Sight.

In 1617-18, Jan Brueghel the Elder, together with his friend Peter Paul Rubens, initiated the genre in Dutch art with a series of five allegorical paintings depicting The Five Senses. You can view them today at the Museo del Prado in Madrid, Spain.

Brueghel and Rubens: The Five Senses (1617-18), Museo del Prado, Madrid. Panels shown: Sight, Hearing, Smell, Taste, Touch.

The Dutch genre was brought to its apex (arguably) by the teenaged Rembrandt van Rijn -- in fact, these are his earliest known surviving works. One of these, depicting taste, has not been seen in 400 years. But there's hope that it might be recovered; another painting in the series, "The Unconscious Patient", depicting smell, was discovered in a basement and originally put on auction with an asking price of $500-800 (it eventually sold for $87,000!). The four surviving paintings were displayed together for the first time in ages at the Ashmolean Museum in Oxford, under the title "Sensations: Rembrandt's First Paintings". The extant paintings were given allegorical titles:

- "A Pedlar Selling Spectacles (Allegory of Sight)". Yes, that's how "peddler" is spelled by the museum.

- "Three Singers (Allegory of Hearing)".

- "Unconscious Patient (Allegory of Smell)". That's not ether or chloroform, which weren't introduced into surgery until the 19th century. Rather, that's smelling salts, intended to bring the patient back to consciousness.

- The "Allegory of Taste" is the one that's missing, and apparently we don't know what it depicts.

- "Stone Operation (Allegory of Touch)". No, not the removal of kidney stones, but rather a procedure in which quack surgeons removed "stones" from the skull to cure patients of their headaches.

Rembrandt's Sensations Exhibit at the Ashmolean Museum, Oxford, September-November 2016. Panels shown: Sight, Hearing, Smell, (Taste), Touch.

"The Five Senses" was a popular topic for genre painting in the Renaissance and Baroque eras. Here's another example, from Michaelina Wautier (1604-1689), perhaps the most highly regarded woman painter of the Flemish Baroque (though her paintings were often misattributed to her brother Charles). For a fascinating story about Wautier and the rediscovery of her art, see "No Woman Could Have Painted This, They Said. They Were Wrong" by Valeriya Safronova, New York Times, 10/07/2025.

The Five Senses of Michaelina Wautier, at the Museum of Fine Arts, Boston. Panels shown: Sight, Hearing, Smell, Taste, Touch.

Exteroception and Proprioception

On the contrary, I identify nine different sensory modalities, or general domains in which sensation occurs. These modalities may be arranged hierarchically (of course!), beginning with a division (initially proposed by Sherrington in 1906) into two broad categories, exteroception and proprioception. Within each of these categories, there are (of course!) further subcategories.

Exteroception refers to sensations that arise from stimulation of sensory receptors located on or near the surface of the body, and includes three subcategories.

In the distance senses there is no direct contact between the distal stimulus and the sense organ; rather, radiated energy travels a distance between the distal stimulus and the receptor organ. The distance senses include vision (seeing), in which light waves stimulate the rods and cones in the retina of the eye; and audition (hearing), in which sound waves stimulate hair cells on the basilar membrane of the cochlea of the inner ear.

In the chemical senses the distal stimulus makes contact with a receptor organ, initiating a chemical reaction which gives rise to sensory experience. The chemical senses include gustation (tasting), in which chemical molecules in food and drink stimulate taste buds surrounding the papillae of the tongue; and olfaction (smelling), in which airborne chemical molecules stimulate receptors in the olfactory epithelium of the nose.

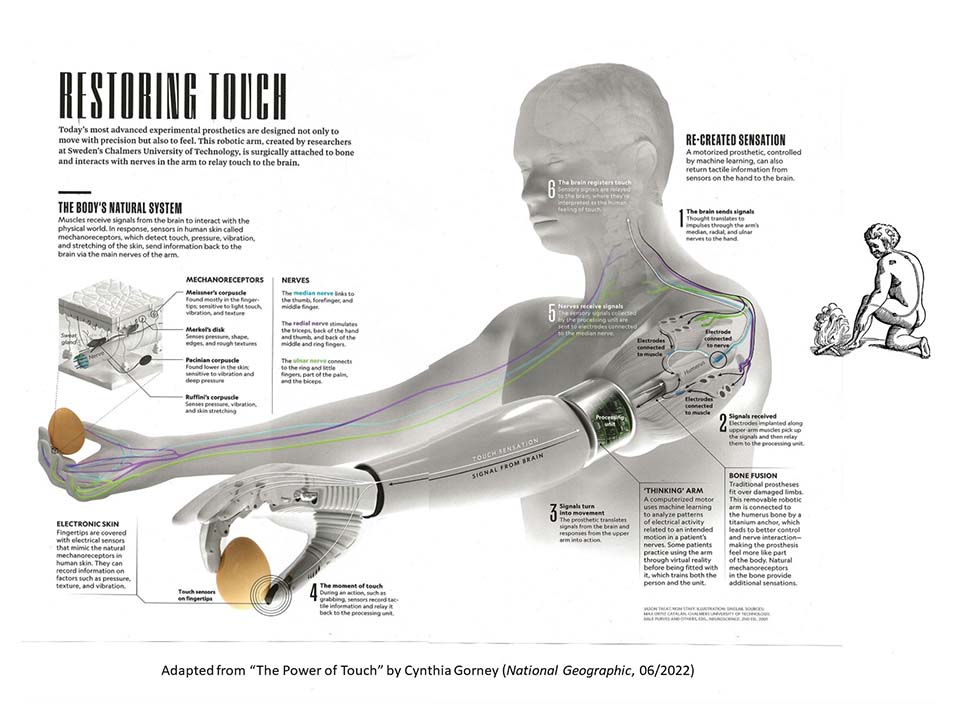

In the skin senses, also known as somesthesis, the distal stimulus makes contact (more or less) with the surface of the skin, stimulating receptors buried underneath. The skin senses include the tactile sense, in which mechanical pressure from an object stimulates receptors buried in the skin, and the thermal sense, in which the temperature differential between the object and the skin (that is, whether the object is relatively warm or cold, compared to the skin itself) stimulates corresponding receptors buried in the skin. There is also a sensation of pain.

Proprioception refers to sensations concerning the position and motion of the body.

In kinesthesis (sensation of the motion of the body), the stretching of the muscles, contracting of the tendons, and movement in the joints stimulate specialized nerve endings located nearby.

In equilibrium, also known as the vestibular sense, gravitational force pulls on crystals suspended in liquid in the semicircular canals and the saccule and utricle of the inner ear; these crystals then fall on hair cells arranged in various orientations. Equilibrium is sometimes classified as a form of exteroception.
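The hierarchy just described can be written down as a small data structure, which also makes the count of nine modalities explicit. This is only an illustrative sketch: the dictionary layout and function names are assumptions made for the example, and placing pain among the skin senses follows the way the text mentions it rather than any formal classification.

```python
# Hierarchy of sensory modalities as described in the text.
# The nesting and the key names are illustrative choices, not a standard.
SENSORY_MODALITIES = {
    "exteroception": {
        "distance senses": ["vision", "audition"],
        "chemical senses": ["gustation", "olfaction"],
        # Pain is counted here because the text mentions it with the skin senses.
        "skin senses": ["tactile", "thermal", "pain"],
    },
    "proprioception": {
        # The text lists these two directly under proprioception,
        # so each forms its own single-member group here.
        "kinesthesis": ["kinesthesis"],
        "equilibrium": ["equilibrium"],
    },
}


def all_modalities(taxonomy=SENSORY_MODALITIES):
    """Flatten the nested taxonomy into a list of modality names."""
    return [
        modality
        for groups in taxonomy.values()
        for members in groups.values()
        for modality in members
    ]


def category_of(modality, taxonomy=SENSORY_MODALITIES):
    """Return the broad category (exteroception or proprioception) of a modality."""
    for category, groups in taxonomy.items():
        for members in groups.values():
            if modality in members:
                return category
    raise KeyError(f"unknown modality: {modality}")


if __name__ == "__main__":
    print(len(all_modalities()))      # number of modalities in the hierarchy
    print(category_of("olfaction"))   # broad category of smell
```

Because the text notes that equilibrium is sometimes classified as a form of exteroception, the placement of that entry should be read as one convention among several.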

Sherrington also identified a category of interoception, which receives stimulation from internal tissues and organs, such as the viscera and the blood vessels. Because interoception does not typically give rise to conscious sensations -- you can't detect changes in blood pressure, for example -- we won't consider this category of sensation further.

- At the same time, there are possible exceptions, where interoception does appear to give rise to conscious sensation -- for example, when you feel hungry or thirsty, chilly or feverish. Hunger has to do with blood-sugar levels, and thirst has to do with cell fluids, and it's not clear that people really have sensory awareness of these physiological parameters.

- But people do feel something when they're hungry or thirsty -- though maybe these sensations arise from contractions in the stomach or dryness in the mouth -- which wouldn't exactly be interoception, at least in Sherrington's terms.

- People with hypoglycemia often claim that they can tell when their blood-sugar levels have gone down. But it's not clear that they're sensing blood-sugar levels directly. Instead, they may be making an inference about their blood-sugar levels based on other symptoms, such as dysphoria or dizziness.

- It's been claimed that difficulties with interoception are risk factors for eating disorders (such as anorexia, bulimia, or binge eating) and body dysmorphic disorder. Certainly, patients with eating disorders engage in eating behaviors (including fasting) that are discordant with their actual internal states (see "Inside the Wrong Body" by Carrie Arnold, Scientific American Mind, May-June 2012). (More about these disorders in the lectures on Psychopathology and Psychotherapy.)

- Interoceptive skill is sometimes measured with the "heartbeat test" developed by Hugo Critchley and his colleagues: scores on this test are highly correlated with laboratory measures of interoceptive awareness.

- Sit in a comfortable chair and take a few deep breaths; start a stopwatch, and count your heartbeats for 1 minute (without taking your pulse!). This is your estimated pulse.

- Then take your pulse by putting your fingers on your wrist or neck and counting for one minute. Then rest for a minute and do it again. Take the average, to get your average pulse.

- Subtract your average pulse from your estimated pulse.

- Divide the absolute value of the difference by your average pulse to get a decimal.

- Subtract this decimal from 1.

- A score of 0.80 or higher indicates "very good" interoceptive skill.

- A score of 0.60 to 0.79 indicates "moderately good" skill.

- A score of 0.59 or less indicates "poor" interoception.

- Cognitive-behavioral therapists often use biofeedback to help people learn how to control their internal physiological states. The whole point of biofeedback is that people can't sense these states directly, and need special monitors to gain this awareness indirectly. (More on biofeedback in the lectures on Psychopathology and Psychotherapy.)

For more on interoception, see "How Do You Feel? Interoception: The Sense of the Physiological condition of the Body" by A.D. Craig in Nature Reviews Neuroscience (2002).
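The scoring arithmetic of the heartbeat test described above can be sketched in a few lines. The function names are hypothetical, and the handling of scores that fall between the stated bands (for example, 0.795) is an assumption: the sketch simply treats 0.80 and 0.60 as cut-offs.

```python
def interoception_score(estimated_pulse, measured_pulses):
    """Score = 1 - |estimated - average| / average, following the steps in the text.

    estimated_pulse: heartbeats counted in one minute without taking the pulse.
    measured_pulses: one-minute pulse counts taken at the wrist or neck.
    """
    if not measured_pulses:
        raise ValueError("at least one measured pulse is required")
    average_pulse = sum(measured_pulses) / len(measured_pulses)
    if average_pulse <= 0:
        raise ValueError("average pulse must be positive")
    return 1 - abs(estimated_pulse - average_pulse) / average_pulse


def interpret(score):
    """Map a score onto the three verbal categories given in the text."""
    if score >= 0.80:
        return "very good"
    if score >= 0.60:
        return "moderately good"
    return "poor"


if __name__ == "__main__":
    # Hypothetical numbers: 60 beats estimated, 70 and 74 beats measured.
    score = interoception_score(60, [70, 74])
    print(round(score, 2), interpret(score))
```

With these made-up numbers the average pulse is 72, the absolute difference is 12, and the score is 1 - 12/72, or about 0.83.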

Notice that many of these senses are variations on two different mechanisms. In vision, gustation, olfaction, and (probably) the temperature sense, the proximal stimulus is a chemical reaction of some kind. In audition, the tactile sense, kinesthesis, and equilibrium, the proximal stimulus is mechanical in nature. It seems likely that, over the course of evolutionary time, these senses progressively differentiated from two primitive sensory modalities, involving photochemical and mechanical stimulation, respectively.

Defining the Sensory Modalities

How do we know how many sensory modalities there are -- that Aristotle was wrong? The organization of the sensory modalities illustrates the basic concept of transduction: the conversion of some physical stimulus energy into a neural impulse. Looking over the sensory modalities, it appears as if each sensory receptor is specifically responsive to a different proximal stimulus energy (which, in turn, radiates from a distal stimulus). In transduction, a particular type of physical energy is converted into a neural impulse, which is carried via a sensory tract through the thalamus (which functions as a kind of sensory relay station) to a particular part of the brain known as a sensory projection area. These four features differentiate among the various modalities of sensation.

Here's how the system works, in simplified form, for each of the nine sensory modalities.

Vision

Thus, in vision, the proximal stimulus consists -- naturally -- of light waves, electromagnetic radiation emitted by or reflected from a distal stimulus. Not all light waves give rise to the experience of seeing, however; in humans, only wavelengths of 380-780 nanometers (nm, or billionths of a meter) are visible. Other wavelengths, such as infrared (greater than 780 nm) and ultraviolet (less than 380 nm) are not visible to the human eye, though they might be visible to other animals.
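As a small illustration of the visible range just described, the following sketch classifies a wavelength using the 380-780 nm limits given in the text. The function name and the three labels are assumptions for the example; it does not distinguish further bands beyond the two non-visible sides the text names.

```python
VISIBLE_MIN_NM = 380  # lower limit of human vision, per the text
VISIBLE_MAX_NM = 780  # upper limit of human vision, per the text


def classify_wavelength(nm):
    """Label a wavelength (in nanometers) relative to the human visible range."""
    if nm <= 0:
        raise ValueError("wavelength must be positive")
    if nm < VISIBLE_MIN_NM:
        return "ultraviolet"  # shorter than visible light
    if nm > VISIBLE_MAX_NM:
        return "infrared"     # longer than visible light
    return "visible"


if __name__ == "__main__":
    for nm in (300, 550, 900):
        print(nm, classify_wavelength(nm))
```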

In any event, light waves pass through the cornea of the eye, and then through the pupil, the size of which is controlled by the iris. Under conditions of dim light, the pupil widens to let more light in; in bright light, the pupil contracts. The light is then focused by the lens of the eye, so that it falls on the retina on the inside back of the eyeball. This is the retinal image. There, light waves impinge on two types of receptor organs: rods and cones. The rods, of which there are about 100 million, are stimulated by different levels of brightness (intensity); they are responsible for black-white vision, and are especially important for seeing at night. The cones, of which there are about 7 million, are stimulated by different wavelengths of light; they are responsible for color vision, and are particularly useful during the day.

A note on myopia (nearsightedness). The lens focuses the image on the retina. Unfortunately, various conditions cause the eye to become slightly elongated, causing the lens to focus the image on the interior space of the eyeball just before the retina -- what is known, in optics, as a refractive error. As a result, the images of distant objects are blurry -- hence the name "nearsightedness". Nearsightedness has become pandemic in the world, an increase that can be attributed, in part, to increases in up-close reading of smartphone screens!

Once transduction has taken place, by a photoelectric process, the neural impulse travels via bipolar cells to ganglion cells and then is carried over the optic nerve (which is the exclusively afferent cranial nerve II). Different fibers of the optic nerve meet and cross at the optic chiasm, a division in the optic nerve which projects each half-field to the opposite hemisphere of the brain. Thus, retinal images from the left visual field of each eye project to the right hemisphere; and retinal images from the right visual field of each eye project to the left hemisphere.

It is this fact which permits the "split brain" experiments which have been so important in teaching us about hemispheric specialization: stimuli presented to the left visual half-field project to the right cerebral hemisphere, and vice-versa. In the intact brain, these images are also transmitted between the hemispheres; but this transmission is impossible when the corpus callosum has been severed. Of course, by strategically moving the eyes, an image projected on the right half-field can also be brought into the left half-field, and vice-versa. For this reason, in split-brain experiments the stimuli are presented very briefly, and disappear before the eyes have a chance to move.
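The routing logic behind the split-brain procedure can be summarized as a simple lookup. This is a deliberately simplified sketch: the function names are hypothetical, and it assumes a stimulus presented so briefly that the eyes cannot move, as the text specifies.

```python
OPPOSITE = {"left": "right", "right": "left"}


def receiving_hemisphere(half_field):
    """Hemisphere that first receives a stimulus shown in a visual half-field."""
    if half_field not in OPPOSITE:
        raise ValueError("half_field must be 'left' or 'right'")
    return OPPOSITE[half_field]


def hemispheres_with_access(half_field, callosum_intact=True):
    """Hemispheres that end up with the image, given the state of the corpus callosum."""
    first = receiving_hemisphere(half_field)
    if callosum_intact:
        # In the intact brain the image is also passed to the other hemisphere.
        return {first, OPPOSITE[first]}
    # With the corpus callosum severed, the image stays where it arrived.
    return {first}


if __name__ == "__main__":
    print(receiving_hemisphere("left"))
    print(hemispheres_with_access("left", callosum_intact=False))
```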

Farther along on their journey to the brain, the visual impulses pass through the lateral geniculate nucleus (or LGN), a bundle of neurons which is part of the thalamus. Each cell in the LGN corresponds to a particular part of the retina; thus, the LGN processes information about the spatial organization of the visual image.

With the exception of olfaction, all afferent impulses pass through the thalamus on the way to their sensory projection areas (olfactory impulses go directly to the limbic area instead). Thus, the thalamus acts as a kind of a sensory relay station, directing impulses representing various modalities to their appropriate cortical projection areas.

From there, the neural impulses continue through to the primary visual cortex, or Area V1 (also known as Brodmann area 17), at the very pole of the occipital lobe. Again, each cell in the visual cortex corresponds to a particular part of the retina -- a feature known as retinotopic organization.

Perhaps the first neuroanatomical drawing ever made was by the Arab physician Ibn al-Haytham, around 1027 CE. It shows the optic nerves leading from the two eyes, meeting at the optic chiasm, and then proceeding to the brain.

Setting the Biological Clock

The human body is subject to a number of biological rhythms, of which the most noticeable is the daily cycle of waking and sleeping. The sleep-wake cycle is known as a circadian rhythm (from the Latin circa, "around" or "about", and dies, "day"; thus, "about a day" or "around the day"). The circadian rhythm produces regular changes in activity levels, hormonal levels, and body temperature throughout the day, and has the function of synchronizing behavior and physiological states to the changing state of the environment. In humans and many other animals, the circadian rhythm is diurnal, meaning we are active during the day; in rats and some other animals, the rhythm is nocturnal, meaning that they are active during the night.

Circadian rhythms are a subset of a larger class of biological rhythms which also includes:

- infradian rhythms, over periods longer than 1 day, such as the 28-day menstrual cycle in human females;

- circannual rhythms, such as the seasonal cycles of hibernation and mating in some nonhuman animals; and

- ultradian rhythms, over periods shorter than 1 day, such as the 90-minute sleep cycle superimposed on our basic circadian rhythm.

The existence of biological clocks was originally inferred by Curt Richter and other investigators from behavioral evidence. In the absence of any light cues, activity levels, hormonal levels, and body temperature vary regularly on a cycle that is about 25 hours in length. In theory, the 24-hour cycle is entrained by the actual pattern of light and dark in the environment.
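To see what a free-running cycle of about 25 hours implies, consider how far the rhythm drifts relative to the 24-hour clock when no light is available to entrain it. The sketch below treats the period as exactly 25 hours, which is an assumption made for arithmetic convenience (the text says only "about 25 hours"); the function names are hypothetical.

```python
DAY_HOURS = 24.0
FREE_RUNNING_PERIOD_HOURS = 25.0  # assumed exact for illustration; the text says "about 25"


def drift_hours(cycles, period_hours=FREE_RUNNING_PERIOD_HOURS):
    """Total drift against the 24-hour clock after a number of free-running cycles."""
    return cycles * (period_hours - DAY_HOURS)


def free_running_wake_time(start_wake_hour, cycles, period_hours=FREE_RUNNING_PERIOD_HOURS):
    """Clock time (0-24) of waking after the given number of cycles without light cues."""
    return (start_wake_hour + drift_hours(cycles, period_hours)) % DAY_HOURS


if __name__ == "__main__":
    # Someone who normally wakes at 7:00, after 5 cycles with no light cues.
    print(free_running_wake_time(7, 5))
```

Under these assumptions the wake time slips one hour later with each cycle, so a person who starts out waking at 7:00 would be waking at noon after five cycles, and would come back around to 7:00 only after 24 cycles.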

More recently, the biological substrate of circadian rhythms has been located in the suprachiasmatic nucleus (SCN) of the hypothalamus, a structure which is located just above the optic chiasm in the brain (the optic chiasm is the place where the optic nerves from the two eyes come together). The SCN receives information about environmental light through a special neural pathway known as the retinohypothalamic pathway, permitting light to serve as a Zeitgeber (from the German Zeit, "time", and geber, "giver"; thus, "timegiver").

But where does the information about light come from? The retina of the eye, obviously. For a long time it was thought that the rods and cones provided this information, as well as information about the rest of the visual world. However, in 2002 David Berson and his associates reported the discovery of a new kind of photoreceptor cell in the retina, existing alongside the rods and the cones employed in vision (Science, 2/8/02). It is these cells, a subset of retinal ganglion cells that appear to contain the photopigment melanopsin, not the rods and cones themselves, that provide information to the SCN.

As discussed below, we know that people who lack certain types of cones suffer from various forms of colorblindness. This new discovery suggests that there might be people who, because they lack these specialized photoreceptor cells, might suffer a kind of timeblindness. Whether any such people actually exist remains to be determined by further research.

Of more immediate practical significance, the existence of these intrinsically photosensitive retinal ganglion cells (ipRGCs) has implications for sleep disorders -- particularly those suffered by people who sleep with their cell phones or tablets by their side, or who go to sleep with the television on. The reason is that while these ipRGCs are sensitive to any strong light (such as the light of the rising sun), they are particularly sensitive to "blue" light -- which is precisely what the "blue" light-emitting diodes in flat screens emit. And to make things worse, the "blue" LEDs emit stronger light than red and green ones do. So as long as these screens are illuminated, they're stimulating those ipRGCs, which send messages to your SCN which say, effectively, "Sun's rising, time to wake up and go to work hunting and gathering". And those screens are on all night, for all too many of us. And so all too many of us don't get the sleep we need. So do yourself a favor, and practice "electronic abstinence". Turn off the TV and keep your cell phone in another room while you sleep. Even better, turn those screens off a couple of hours before you go to bed.

The Eye and Evolution

The eye often figures in arguments against Darwin's theory of evolution by natural selection. As early as 1802, before Darwin had ever published a word -- indeed, before Darwin (1809-1882) was even born -- William Paley, an English clergyman, argued that the human eye was so intricate that it could only have been intentionally designed (in Natural Theology or Evidences of the Existence and Attributes of the Deity):

To buttress his argument, Paley coined the analogy of the divine watchmaker. In our time, Paley's "watchmaker" argument has been revived by Michael Behe, in Darwin's Black Box (1996), in the form of irreducible complexity. Behe's idea is that some biological structures, like the eye, are simply too complex to have evolved incrementally, through natural selection of purely random mutations. If the eye evolved, its evolution must have been directed -- and directed by God. Darwin himself echoed Paley's argument.

Nevertheless, Darwin went on to conclude that the eye, like everything else in the biological world, had developed through the same process of natural selection:

Darwin's reasoning has been vindicated by modern evolutionary biology: precisely the gradations he discussed have been observed in animals which are alive today.

If, that is, the organism has a brain to receive them. Some organisms, like the box jellyfish, don't have anything like a brain, so they're not capable of perception as we defined it earlier. That is, they don't have the wherewithal to generate internal, mental images of the objects in their environment. So you need more than just an eye to see. You've also got to have a brain. Eyes didn't just evolve from simple to complex, either. The eyes of individual species evolved in a manner that supported each species's adaptation to its ecological niche.

And so it goes, all of these species differences produced by evolution, by building gradually on a primitive base.


Audition

In audition, the proximal stimulus consists -- again, naturally -- of sound waves: mechanical vibrations in the air, with frequencies ranging from 20 to 20,000 cycles per second. These waves are funneled by the auricle of the outer ear into the auditory canal, and set the tympanic membrane into sympathetic vibration.

Other species have different ranges of audible frequencies -- for example, bats, which use ultrasonic frequencies to navigate.

These vibrations then pass through the bony structures of the middle ear -- the hammer (or malleus), anvil (incus), and stirrup (stapes) -- to the oval window, thence to the basilar membrane of the cochlea, which vibrates against hair cells in the organ of Corti.

Mechanical stimulation of these hair cells sets up neural impulses which are transmitted over the cochlear branch of the vestibulocochlear nerve (cranial nerve VIII).

The impulses then pass through the cochlear nucleus, then the inferior colliculus, and the medial geniculate nucleus of the thalamus.

The primary auditory projection area, also known as A1, consists of Brodmann areas 41 and 42 in the temporal lobe of the brain, adjacent to the lateral fissure. Different segments of Area 41 are responsive to different frequencies of sound, a principle known as tonotopic organization.

Cochlear Implants for the Deaf

Understanding how transduction is accomplished in the auditory modality helps us to understand some of the most frequent causes of deafness. One major form of deafness is known as conduction deafness, in which there is some impediment (wax buildup, or a problem with the bones) to the transmission of sound waves through to the inner ear. In many cases, conduction deafness can be treated medically or surgically, or alleviated by a hearing aid. Sensory-neural hearing losses arise from damage to the neural structures themselves -- in the cochlea, the auditory nerve, or even the temporal lobe.

One very common cause of sensory-neural deafness is the loss of hair cells in the cochlea (in the most common forms of congenital deafness, where a child is born deaf, the degeneration of hair cells occurs during fetal development). If the hair cells are damaged, no neural impulses can arise to be transmitted along the auditory nerve to the auditory projection area. There is no treatment for this condition at present. However, it is possible to employ cochlear implants that will make up for the loss of hair cells. These are electronic devices which pick up sound waves from the environment, and transduce them into electrical impulses which then directly stimulate the auditory nerve. Assuming that the nerve and the projection areas are not damaged, some degree of hearing can be restored (see below for the discussion of Muller's "Doctrine of Specific Nerve Energies"). It should be understood, however, that at present cochlear implants are of limited utility.

- A normal cochlea contains thousands of nerve cells to be stimulated by the basilar membrane, but at present (2014) the most advanced cochlear implants employ fewer than two dozen electrodes, thus greatly reducing the possibility of pitch discrimination. One early user of cochlear implants has said that they make everyone sound like "R2D2 with laryngitis" (Andrew Solomon, "Defiantly Deaf", New York Times Magazine, 08/28/94).

- There are some risks associated with cochlear implants, and they destroy whatever residual hearing capacity the individual might have (for this reason, the devices are sometimes implanted in only one ear).

- Moreover, unless the devices are implanted in infancy, the person may never acquire spoken language (see the Supplement on Language, below, for a discussion of critical periods in language acquisition).
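Why so few electrodes limit pitch discrimination can be illustrated with a rough sketch. It assumes, purely for illustration, that the audible range of 20 to 20,000 cycles per second is divided into equal-ratio bands, one per electrode; this band layout and the function names are hypothetical and do not describe any actual device.

```python
def channel_edges(n_channels, low_hz=20.0, high_hz=20000.0):
    """Band edges for n_channels equal-ratio (logarithmic) bands
    spanning low_hz to high_hz."""
    ratio = (high_hz / low_hz) ** (1.0 / n_channels)
    return [low_hz * ratio ** i for i in range(n_channels + 1)]


def channel_for(freq_hz, edges):
    """Index of the band (electrode) that a frequency falls into,
    or None if the frequency lies outside the covered range."""
    if freq_hz < edges[0] or freq_hz > edges[-1]:
        return None
    for i in range(len(edges) - 1):
        if freq_hz < edges[i + 1]:
            return i
    return len(edges) - 2  # frequency sits exactly on the top edge


if __name__ == "__main__":
    edges = channel_edges(22)  # "fewer than two dozen" electrodes
    for tone in (440.0, 450.0, 1000.0):
        print(tone, "Hz -> electrode", channel_for(tone, edges))
```

In this sketch, tones of 440 and 450 cycles per second land on the same electrode, so the difference between them is lost before it ever reaches the auditory nerve.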

Until recently, it was thought that these hair cells do not grow further after the fetal period, and if damaged do not regenerate; now there is some evidence from animal experiments that both these assumptions may be false. This new evidence raises the possibility that it may be possible to develop medical treatments that will promote the growth or regeneration of cochlear hair cells in children and adults (though we are a long way from this point now).

Many individuals in the Deaf community (the capital "D" denoting a culture rather than a medical condition) oppose cochlear implants and any other medical or surgical treatments on the ground that they imply that deafness is a disability that can be prevented or cured, rather than a matter of individual differences with which everyone, deaf and hearing, ought to cope. Such arguments have gained force as we move further toward multicultural awareness. Regardless of what position one takes on that issue, however, it is quite clear that deaf individuals fare better when they learn to communicate using American (or some other national) Sign Language, rather than relying on vocalization and lip-reading. Forbidding deaf children to learn sign language, a position advocated by Alexander Graham Bell and others in the 19th century, retards their intellectual development and essentially renders them illiterate. (Unless forcibly prevented from doing so, deaf children who are not taught a sign language will spontaneously develop one.) ASL is a full-fledged language with its own grammar (Signed English, by contrast, is just a translation of written English into signs), and the evidence is that deaf children have the best outcome when they are raised bilingually, acquiring ASL as well as written English.

Ordinary hearing aids simply amplify the sound waves that reach the eardrum. In essence, they function no differently than loudspeakers or earphones. But what if you don't have a functioning eardrum, or the structures of the middle ear are damaged? Under these circumstances, no matter how loud the sound is, the sound wave can't get through to the cochlea to be transduced into a neural impulse. Accordingly, cochlear implants bypass the damaged parts of the ear, and stimulate the auditory nerve directly.

Cochlear implants consist of a microphone and miniature radio transmitter, worn behind the ear much like a standard hearing aid. The microphone sends electronic signals through wires to a speech processor carried in the person's pocket, which converts the sound into a coded signal that the brain can understand. This signal is then sent back to the radio transmitter, which "broadcasts" to a miniature radio receiver implanted in the person's head. The receiver then sends the signals over another wire to an array of electrodes implanted in the cochlea (hence the name of the device), which provides appropriate stimulation to the auditory nerve. Then the auditory nerve completes the job by transmitting neural impulses to the temporal lobe (see Rauschecker & Shannon, "Sending Sound to the Brain", Science, 2/8/02).

Of course, this process depends on the auditory nerve being intact. But, perhaps someday, the same sort of process could be used to send signals directly to the auditory cortex of the temporal lobe. Remember: it doesn't matter how the signal gets there; it only matters where it goes!

For treatment of psychosocial issues surrounding deafness and Deaf culture, see the Solomon article cited earlier; also two books by Harlan Lane: When the Mind Hears and The Mask of Benevolence; also Seeing Voices by Oliver Sacks.

Retinal Implants for the Blind

Analogous retinal implants, intended to restore vision in patients suffering blindness caused by degeneration of the retinal tissue (such as retinitis pigmentosa or macular degeneration), are currently under development. In one such device, an artificial retina consists of a camera mounted on glasses, which sends information to a microcomputer that, in turn, stimulates electrodes implanted in the retina of the eye -- which, in turn, stimulate the rods and cones in the retina. In retinitis pigmentosa, the rods and cones are no longer sensitive to light, but they can convert the electrical impulses from the artificial retina into neural impulses which travel over the optic nerve to the visual centers of the occipital cortex. See Zrenner, "Will Retinal Implants Restore Vision?" (Science, 2/8/02), and "The Bionic Eye" by Jerome Groopman (New Yorker, 09/23/03).

In fact, the prototype of an artificial retina was introduced by UCB's Lawrence Livermore Laboratory in 2010. It used 16 (the "first generation") or 60 (the "second generation") microelectrodes, and allowed patients to distinguish light from dark areas and detect movement. Lawrence Livermore scientists are currently working on a "third generation" implant which would allow some perception of form, and estimate that an implant with only 1,000 microelectrodes would actually permit facial identification. The 60-electrode version was named "Invention of the Year" by Time magazine in 2013.

Vestibular Implants for the Dizzy

A similar idea for vestibular implants is intended to help patients who suffer from vertigo and other disorders of balance. In one proposal, a miniature gyroscope senses head rotation, sending signals to electrodes in the inner ear which connect to the vestibular nerve. For more details, see "Regaining Balance with Bionic Ears" by Charles C. Della Santina (Scientific American, 04/2010).

Sensory Substitution Technologies

Some blind people can profit from retinal implants, some deaf people can profit from cochlear implants, and all of this makes sense given what we know about the organization of the sensory modalities. Somehow, electrical signals representing a stimulus have to get to the relevant sensory projection area in the brain. Ordinarily this is accomplished by the sensory receptors and the sensory nerves, but it is possible, using modern electronic technology, to sidestep these parts of the process.

Another approach to rehabilitation was pioneered by Paul Bach y Rita, a neurologist at the University of Wisconsin (Nature, 1969). Bach y Rita connected a television camera to a matrix of 400 electrodes attached to the back of a blind patient. The camera converted the scene to a low-resolution image (essentially, 400 pixels), which was transmitted to the electrodes in the form of mild electrical shocks. The patient could then "feel" the scene through the tactile receptors in his or her skin. And it worked. Bach y Rita's subjects, all of whom had been blind from birth, learned to decode the pattern of somatosensory stimulation, and thus "see" the scene in front of them.

There are limits on this technology. The equipment was bulky, but more important, the resolution of the scene was limited by what is known as the two-point threshold. If you stimulate two different points on the skin, and gradually bring the two points closer and closer together, there will come a point (sorry) where the two stimuli are indistinguishable, and the two physical points are perceived as only a single point. Although the skin is pretty sensitive, the two-point threshold effectively limits the resolution of the somatosensory "scene". You can make the physical matrix of electrodes smaller and smaller; that's just a matter of technology. But you can't reduce the two-point threshold -- unless you switch targets for the somatosensory stimulation to some other area of the body. In more recent work on a device known as the BrainPort, investigators following in Bach y Rita's footsteps (he died in 2006, but not before establishing an engineering company to develop his device) have applied a miniaturized electrode matrix, embedded in something that looks like a flat lollipop, to the tongue -- a very sensitive piece of skin with a very low two-point threshold. And it works. Similarly, the vOICe, the product of another company, translates the visual scene into a pattern of sound; yet another device, intended for the deaf, translates auditory stimuli into patterns of tactile stimulation, useful for burn patients who have lost tactile sensitivity in their skin.
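How the two-point threshold caps the resolution of a tactile "scene" can be shown with a rough calculation. The sketch below assumes a square patch of skin and a uniform threshold across it; the patch sizes and threshold values in the example are illustrative placeholders, not measurements, and the function name is hypothetical.

```python
def resolvable_points(side_mm, two_point_threshold_mm):
    """Maximum number of separately felt points on a square patch of skin,
    assuming neighboring points must be at least one two-point threshold apart."""
    per_side = int(side_mm // two_point_threshold_mm) + 1
    return per_side * per_side


if __name__ == "__main__":
    # Placeholder values: a large patch with a coarse threshold,
    # and a small patch with a fine threshold.
    print("coarse skin:", resolvable_points(200, 40))
    print("fine skin:  ", resolvable_points(20, 2))
```

With these placeholder numbers, a patch one-tenth as wide supports more distinguishable points, simply because its threshold is so much finer. Packing in more electrodes than this limit adds nothing the skin can tell apart.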

All this technology works because, as Bach y Rita famously put it, "You hear with your brain, not with your ears". Aside from practical applications in rehabilitation technology for

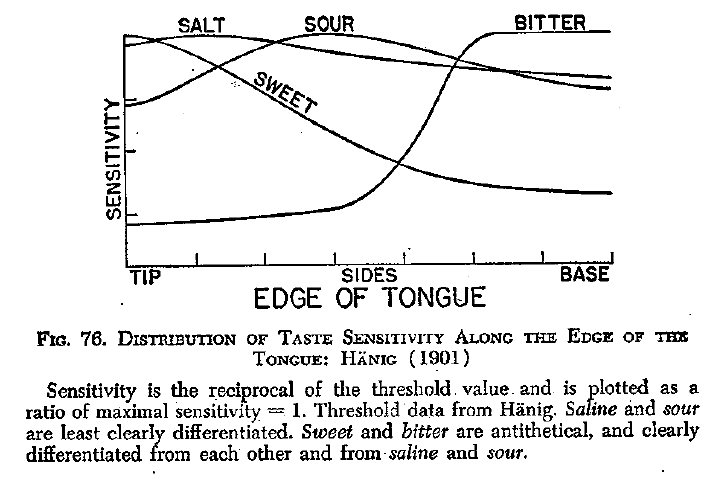

Gustation

Gustation, the sense of taste, begins with certain chemical molecules carried on food and drink (and, for that matter, anything else you put in your mouth), dissolved in saliva (remember Pavlov's Nobel-Prize-winning research on the salivary reflex?).

The dissolved molecules then fall on taste buds in the papillae (bumps) of the tongue, as well as the palate and the throat (and even in the lining of the intestine). Each papilla can contain up to 700 taste buds, and there are about 10,000 taste buds in all. The dissolved molecules then bind to chemical receptors on the taste buds, just like the "lock-and-key" mechanism described for synaptic transmission (see the lectures on Biological Bases of Mind and Behavior; also the lectures on Psychopathology and Psychotherapy, where the lock-and-key mechanism is discussed in the context of psychiatric drugs).

Neural impulses generated by the taste buds (and maybe similar receptors in the palate) are carried over an afferent portion of the glossopharyngeal nerve (cranial nerve IX) as well as other cranial nerves, such as the facial nerve (VII) and the vagus nerve (X).

The primary gustatory area, sometimes designated G1, is located in the anterior insular cortex, located deep inside the lateral fissure that separates the frontal and temporal lobes, and perhaps the frontal portion of the operculum that folds over the lateral fissure -- as well as that portion of somatosensory cortex that receives sensory impulses from the tongue.

Olfaction

Olfaction, the sense of smell, begins with other chemical molecules, this time carried on air, which dissolve in the mucous membrane of the nasal cavity.

Neural impulses are generated when these molecules make contact with receptor cells embedded in the olfactory epithelium.

The resulting neural impulses are carried over the olfactory nerve (I), which begins in the olfactory bulb buried underneath the frontal lobe of the cerebral cortex. Of all the sensory systems, only the olfactory system does not use the thalamus as a sensory relay station.

The primary olfactory cortex, or O1, lies in the limbic system, and includes the prepyriform cortex and periamygdaloid cortex.

The standard textbook story is that human olfaction is pretty poor, especially when compared to other species. Paul Broca (he of Broca's aphasia) is responsible for starting this rumor, based on the observation that the human olfactory bulbs (we have two of them, one for each hemisphere) are relatively small, compared to the rest of the brain; Sigmund Freud (he of psychoanalysis) promoted the idea as well. In fact, the proportion of neurons in the olfactory bulb, compared to the rest of the brain, is pretty constant across a wide range of mammalian species. But what really matters is relative sensory acuity, and there, it turns out, humans don't do so badly: we're worse than some animals with some odorants, but better than most with others. A paper by Bushdid et al. (Science, 2014) estimates that humans can discriminate at least 1 trillion different odors; this compares favorably with estimates for color (2.3 to 7.5 million) and tones (300-350 thousand). For a review and refutation of ideas about human "microsmaty" (tiny smell), see "Poor Human Olfaction is a 19th-Century Myth" by John P. McGann (Science, 2017).

A special case of olfaction is the activity of pheromones, chemical signals that serve as a medium of communication, especially in nonhuman animals (which is one reason why your dog or cat spends so much time marking its territory, and sniffing out the marks of other animals that may have violated its space). Pheromones may play a role in human behavior, as well.

- A paper by Martha McClintock (Nature, 1971) reported that roommates and close friends at Wellesley College, then as now an all-women's residential college, tended to synchronize their menstrual cycles (she obtained the same effect among cloistered Roman Catholic nuns).

- A paper by Noam Sobel (Science, 2011) reported that men show diminished sexual arousal and desire when exposed to "emotional tears" produced by women crying in response to sad stimuli.

Both effects are presumably mediated by pheromones carried either in menstrual blood or tears. These are chemical substances, carried over the air and into the nose, so technically they count as olfactory stimuli. But the interesting thing is that pheromones themselves do not give rise to conscious sensations. Still, they affect the behavior of others who are exposed to them.

Although the indirect evidence for pheromones is pretty convincing, direct evidence has been harder to come by. A great deal of research has focused on two candidates: androstadienone (AND), derived from male sex hormones, and estratetraenol (EST), derived from female sex hormones. Both are found in sweat, and both have been turned into so-called "pheromone perfumes" marketed as aphrodisiacs. Recently, however, a placebo-controlled, double-blind experiment found no effects of either substance on the classification of androgynous faces (e.g., sexually aroused heterosexual men should have classified them as female, sexually aroused heterosexual women should have classified them as male), or on ratings of other photographs for sexual attractiveness (Hare et al., Royal Society Open Science, 2017).

For more information on pheromones, see:

- "The Scent of Your Thoughts" by Deborah Blum (Scientific American, 10/2011);

- "50 Years of Pheromones" by Tristram D. Wyatt (Nature, 2009, 457, 262-263).

For a contrary view, see:

- The Great Pheromone Myth by Richard E. Doty and Christopher Hawkes (2010).

Flavor: Interactions Among Smell, Taste, Touch, Vision, and Sound

Gustation and olfaction, although mediated by different neural structures, interact with each other:

- A head cold clogs the nasal passages, preventing the person from smelling properly; but food is also tasteless.

- Cigarette smoke clogs the taste pores, but perfume is also odorless to cigarette smokers.

- In a famous demonstration, blindfolded tasters have difficulty identifying the taste of a strawberry if they cannot also smell it.

Taste also interacts with the tactile sense: what is known as "mouthfeel" -- the texture of food, whether it is crunchy or creamy, for example -- will also affect how it tastes.

- Pringles potato chips, which are manufactured so that each one is precisely identical to all the others, seem crisper and fresher if the sound of their "crunch" is amplified through earphones (Spence & Zampini, J. Sensory Studies, 2004).

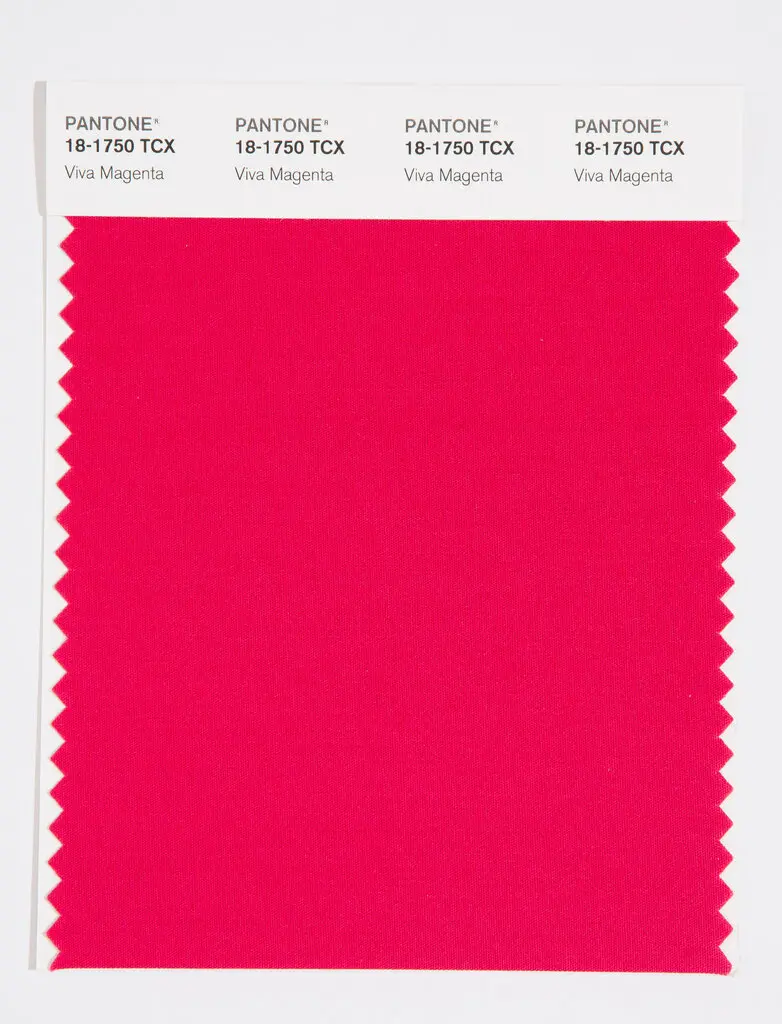

Taste also interacts with color: a strawberry dessert tastes much sweeter when served in a white container, compared to a black one.

And it even interacts with shape.

And sound: wine gets higher ratings when subjects hear the sound of a cork popping, compared to the unscrewing of a screwcap.

The "Pringles" study won the 2008 Ig Nobel Prize for Nutrition, but all of these crossmodal effects reflect a basic principle of sensory integration: the sensory modalities do not operate independently of each other. Rather, the activity of different sensory systems is integrated to create a coherent, unified sensory experience.

All of these stimulus modalities come together to produce flavor: the flavor of any food is given by a combination of taste, smell, and touch. This is easily demonstrated by tasting a strawberry (without, of course, knowing that it is a strawberry -- otherwise you're liable to commit the stimulus error!) while holding your nose. You will taste the sweet of the fruit, but you will not taste the flavor of strawberry until you breathe again. Similarly, irritants like carbonation, mint, and pepper contribute to flavor through the tactile modality. What pepper contributes to the taste of food is -- not to put too fine a point on it -- tactile pain.

Actually, most of flavor comes from the nose, not the tongue. When we chew and swallow our food, airborne molecules from the food are forced up into the nose through the retronasal pathway, where they bind with odor receptors. Amazingly, the brain distinguishes between ordinary odors, entering the nose through the nostrils in what is known as orthonasal olfaction, and those which enter the nose from behind, in what is known as retronasal olfaction. But, also amazingly, the same receptors are involved in both kinds of olfaction. Instead, the brain keeps track of whether we're sniffing something (orthonasal olfaction) or chewing and swallowing (retronasal olfaction). How? Possibly, by distinguishing whether receptors in the olfactory epithelium are being stimulated at the same time as the taste buds are.

- Taste is the sensory experience that arises from stimulation of our taste buds.

- Smell is the sensory experience that arises from orthonasal stimulation through the nostrils.

- Flavor is the sensory experience that arises from retronasal stimulation through the retronasal pathway.

For more on flavor, see Flavor: The Science of Our Most Neglected Sense by Bob Holmes (2017), a prominent science writer.

The Tactile Sense

The proximal stimulus for touch is mechanical pressure, which causes deformation of the skin or hair shafts. The tactile sense is also sensitive to vibration and electrical stimulation. This stimulus activates a number of mechanoreceptors embedded in the skin, including:

- free nerve endings buried in the epidermis of the skin;

- "perifollicular" or "basket" endings wrapped around the base of hair follicles;

- Merkel's disks, which are sensitive to pressure and rough texture;

- Meissner's corpuscles, which are sensitive to light touch, vibration, and stimulus texture;

- Pacinian corpuscles, which are sensitive to vibration and deep pressure; and

- Ruffini's corpuscles, which are sensitive to pressure, vibration, and stretching of the skin.

Neural impulses arising from these receptor organs are then carried over the afferent tracts of the spinal nerves, and up the afferent tract of the spinal cord, as well as over some of the cranial nerves (e.g., the trigeminal nerve (V) and the facial nerve (VII)).

Finally, these impulses arrive at the somatosensory projection area of the parietal lobe (Brodmann areas 1, 2, and 3), and are distributed to the appropriate region of the somatosensory homunculus.

Updating Descartes

Anyway, as discussed in the "Biological Bases" lectures, Descartes's notion of the reflex, and this and similar diagrams, inspired Sherrington to coin the term reflex arc: the simplest biological unit of behavior consists of an afferent (sensory) neuron connected to an interneuron (in the brain or spinal cord) connected to an efferent (motor) neuron -- an elementary connection between environmental stimulus and motor response. As also discussed in the "Biological Bases" lectures, the actual situation is a little bit more complicated -- as depicted in the main figure.


The Thermal Sense

The stimulus for temperature, the sense of warmth and cold, is a temperature differential between the stimulus and the skin itself. Note that "cold" and "warm" are not the effective proximal stimuli: a stimulus is felt to be "cold" only if its temperature is lower than that of the skin with which it makes contact. Same goes for "warm".
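The point that the effective stimulus is a differential, not an absolute temperature, can be put in a few lines. This is a minimal sketch of the rule just stated; the function name, the Celsius units, and the example temperatures are assumptions for illustration, and nothing here models the receptors themselves.

```python
def thermal_sensation(stimulus_c, skin_c):
    """Classify a stimulus by its temperature relative to the skin it touches."""
    differential = stimulus_c - skin_c
    if differential > 0:
        return "warm"
    if differential < 0:
        return "cold"
    return "neutral"


if __name__ == "__main__":
    # The same 25-degree stimulus, applied to warmer and to cooler skin.
    print(thermal_sensation(25, 33))
    print(thermal_sensation(25, 20))
```

The same physical stimulus comes out "cold" against warmer skin and "warm" against cooler skin, which is exactly what the differential account predicts.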

Positive and negative differentials stimulate neural activity in the Krause end-bulbs (for relatively cold stimuli) and Ruffini end-organs (for relatively warm stimuli). Simultaneous stimulation of both receptors generates the sensation of hot as opposed to warmth. The free nerve endings are also sensitive to temperature differentials.

Neural impulses are then carried over the afferent tracts of the spinal nerves, and up the afferent tract of the spinal cord as well as over some of the cranial nerves (as noted above).

And these impulses, too, finally arrive at the somatosensory projection area of the parietal lobe, and are distributed to the appropriate region of the somatosensory homunculus.

Cutaneous Pain (Nociception)

Cutaneous pain arises from inflammation resulting from injury to or destruction of cutaneous tissue (otherwise known as the skin).

There are free nerve endings in the epidermis of the skin that are sensitive to pain; but they are also sensitive to touch and movement.

- Fast pain -- sharp or prickling, with a rapid onset and rapid offset -- appears to be mediated by A-delta fibers in the spinal (and some cranial) nerves.

- Slow pain -- aching, throbbing, or burning, with a slow onset and slow offset -- appears to be mediated by C-fibers in the same nerves.

Fast pain sensations then travel up the spinal cord along the neospinothalamic tract, while slow pain sensations then travel up the spinal cord along the paleospinothalamic tract.

Fast pain impulses pass through the thalamus to the somatosensory cortex, while their slow pain counterparts terminate in the brainstem.

Pain sensations usually result from injury to some tissue, like the skin. But chronic pain can persist even after the injury has healed. In many cases of chronic pain, neurons in the "pain pathway" become abnormally excitable, and discharge impulses toward the brain even though they're not receiving any input from nociceptors.

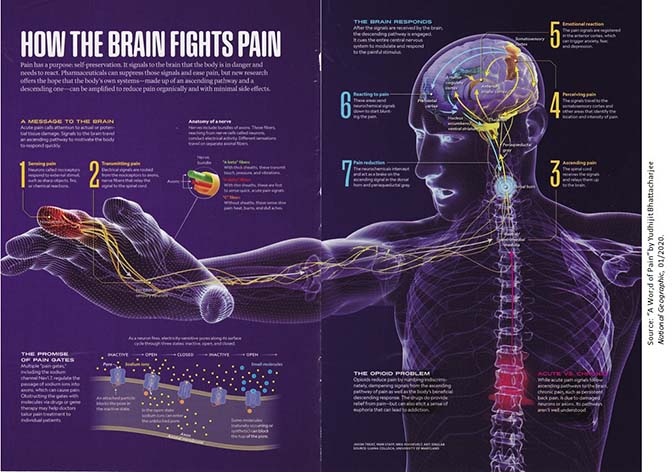

Here's a good diagram showing our best understanding of the physiology of pain (from "A World of Pain", by Yudhijit Bhattacharjee, National Geographic, 01/2020). In this approach, pain sensations arise in tactile receptors that are especially sensitive to points or sharp edges, heat, and the like. In a "bottom-up" process, these signals are carried over afferent neurons which contain both A-delta and C fibers to ascending tracts in the spinal cord, through the thalamus, and then to the somatosensory cortex, which identifies the location and intensity of the pain. The thalamus (which, remember, serves as a sensory relay station) also sends the signals to other brain centers, such as the anterior cingulate cortex and anterior insular cortex, which mediate the individual's emotional response to the pain.


The same diagram shows the gate-control mechanism for pain control. Following the onset of pain, a "top-down" process is initiated in the prefrontal cortex to modulate the pain. These descending signals contact the ascending pain signals in the periaqueductal gray of the midbrain and the dorsal horn of the spinal cord, effectively reducing the intensity of the ascending pain signals.

A Mechanism for Acupuncture?

The distinction between slow and fast pain forms the basis for a prominent theory of how acupuncture works. The theory is that fast and slow pain are in an antagonistic relationship to each other -- meaning that one can counteract, or inhibit, the other. According to the theory, slow pain signals, stimulated by acupuncture needles and processed at the level of the brain stem, prevent fast pain signals from getting past the brain stem, and through the thalamus, to the somatosensory projection area. It's a pretty good theory.

Usually, we think of pain as a skin sense, but pain is more complicated than this. Almost any stimulus will produce pain if it is intense enough: sound, light, heat, or cold are good examples (it is not clear if intense tastes and odors are actually painful, no matter how unpleasant they might be). Moreover, pain is also produced by muscle fatigue, and by the introduction of salt and other chemicals into wounds and other openings in the skin. So setting aside cutaneous pain, in some sense there is no unique proximal stimulus for pain. And because pain can be experienced in (almost) all sensory modalities, in a sense there are no unique receptor organs for pain, either -- and no specific sensory tract, and no specific projection area.

The situation is complicated further by the fact that, regardless of origin, pain appears to have two broad components:

- Sensory pain, which provides information about the locus and extent of injury. Sensory pain activates the somatosensory cortex, which represents the location of the pain on the somatosensory homunculus.

- Suffering, which has more to do with the meaning of the pain. Suffering activates the anterior cingulate gyrus in the frontal lobe of the cerebral cortex.

In the final analysis, the experience of pain is substantially determined by the meaning of the stimulus. In doctors' offices, people flinch when there isn't much pain. The responses of injured children differ markedly depending on whether an audience is present. Placebos provide genuine relief of pain. Soldiers experienced different levels of pain from objectively similar wounds in World War II, Korea, and Vietnam. And many religious rituals in tribal cultures (e.g., fertility, puberty) would appear objectively painful, but elicit no signs of pain from the participants.

The bottom line is that pain is often more a problem of perception than of sensation. The experience of pain depends not so much on the transduction of stimulus energies as on higher mental processes involving perception, memory, and thought.

Review of the Skin Senses

To review how the skin senses

work: mechanical pressure, vibration, or tissue

inflammation stimulates specialized sensory receptors in

the skin. This generates neural impulses which travel over

the afferent branch of the spinal nerves to the spinal

cord. There they may generate an involuntary spinal reflex

in response to the stimulus. Then the sensory impulses

travel up the ascending tract of the spinal cord to the

somatosensory projection area in the parietal lobe. There

they might generate a voluntary response to the stimulus.

Kinesthesis

The sense of movement and

position is generated by activity in the skeletal

musculature -- the contraction of tendons,

and stretching of muscles (and of the

skin), and the movement of joints.

These activities

stimulate nerve endings that are embedded in these body

parts, including

- neuromuscular spindles embedded in muscles;

- neurotendinous organs, also known as Golgi tendon organs, embedded, naturally enough, in the tendons;

- free nerve endings in the joints between bones.

These impulses, too, are carried over the afferent tracts of the spinal nerves and up the afferent tract of the spinal cord, as well as over some of the cranial nerves, and finally reach the somatosensory projection area of the parietal lobe.

Equilibrium

Equilibrium, the sense of balance,

begins with gravitational force which

pulls on otoliths, tiny crystals suspended

in the semicircular canals, and the

saccule and utricle of the vestibular

sac, both structures located in the inner ear.

The semicircular canals are arranged at approximately right

angles to each other, and detect rotation of the head.

The saccule and utricle detect the position of the head

with respect to gravity.

These structures contain

hair cells, which are stimulated by the

crystals, generating neural impulses in much the same manner

as the cochlea does for audition.

- Rotary motion of the head will cause the otoliths to fall on different hair cells in the semicircular canals, thus signaling a change in the organism's orientation with respect to gravity.

- Linear motion of the head in a forward direction causes the otoliths to fall on hair cells in the posterior portion of the vestibular sac, while motion in a backward direction stimulates hair cells in the anterior portion.

The neural impulses are then carried over the vestibular branch of the vestibulocochlear nerve (VIII).

Unlike kinesthesis and the skin

senses, neural impulses relating to equilibrium and change

of motion carried over the vestibular division of cranial

nerve VIII project to the cerebellum.

Sensory Mechanisms and the Nobel Prize

On several occasions, research on sensory physiology has resulted in the Nobel Prize in Physiology or Medicine for the scientists involved. Most of these recipients wouldn't identify themselves as psychologists, but their work reveals the biological bases of psychological processes.

In 1911, Allvar Gullstrand of the University of Uppsala won for mathematical work calculating exactly how light passes through the lens to cast an image on the retina -- a much more complicated process than you might imagine.

In 1914, Robert Bárány at the University of Vienna received the prize for work showing how structures of the inner ear work to mediate our sense of balance.

In 1967, the Prize was shared by Ragnar Granit (Karolinska Institute, Sweden), Haldan Keffer Hartline (Rockefeller U.), and George Wald (Harvard) for their studies of visual mechanisms. Granit showed that there were three types of cones, each sensitive to a different band of wavelengths. Hartline studied the interactions between adjacent nerve cells, underlying contrast. Wald showed how light, stimulating rhodopsin, is transformed into electrical impulses.

In 2004, Richard Axel (Columbia) and Linda Buck (Fred Hutchinson Cancer Center, Seattle) won for their collaborative work demonstrating that different olfactory receptors are responsive to specific odorants.

Research on the skin senses proved somewhat difficult, because there are no sensory receptors, like the rods and cones in the retina, that can be clearly identified for study. Still:

The Doctrine of Specific Nerve Energies

To repeat, in the

abstract, each modality of sensation is characterized by

four factors:

- the proximal stimulus (or type of physical energy falling on a receptor organ);

- the receptor organ which is stimulated (ordinarily, prepared to respond to a particular proximal stimulus);

- the sensory tract leading from the sensory surfaces to the brain, including the afferent tract of the spinal nerves and the afferent tract of the spinal cord, as well as certain cranial nerves; and

- the sensory projection area in the cortex, a particular area of the brain which is the final destination of afferent impulses arising from the sensory receptors and transmitted along the sensory tracts.

At first glance, it

seems that each sensory receptor organ is specific to a

different kind of proximal stimulus -- which would mean that

the various sensory modalities are actually defined by

particular kinds of proximal stimuli.

- In vision, electromagnetic radiation falls on the rods and cones in the retina.

- In audition, mechanical vibrations in air stimulate hair cells in the basilar membrane of the cochlea in the inner ear.

- In gustation, chemical molecules stimulate the taste buds surrounding the papillae of the tongue.

- In olfaction, airborne chemical molecules stimulate receptors in the olfactory epithelium.

- In touch, mechanical pressure stimulates a number of specialized receptors, such as "basket" endings, Merkel's disks, and Meissner's and Pacini's corpuscles, buried in the skin.

- In temperature, a heat differential stimulates the Krause end-bulbs and the Ruffini end-organs.

- In pain, tissue inflammation stimulates A-delta and C fibers.

- In kinesthesis, movements of the skeletal musculature stimulate specialized nerve endings, such as the neuromuscular spindles and the neurotendinous organs.

- And in equilibrium, gravitational force and acceleration act on crystals that stimulate hair cells.

This would be a great, and simple, system. Unfortunately, it turns out that the modality of sensation is not uniquely associated with any particular proximal stimulus or receptor organ.

For example, other stimuli can lead to a particular sensation. If you close your eyes and press your finger lightly on your eyelid, you will experience the sensation of touch stemming from pressure that deforms the surface of the skin. But you will also experience the sensation of vision, in terms of various lights and colors. Thus, a particular proximal stimulus (pressure) somehow stimulates the receptors for vision as well as those for touch. Similarly, if you box someone's ears (Please don't do this, just take my word for it!) the victim will experience touch (to put it mildly), but also a ringing of the ears.

Electrical stimulation of various sensory receptors can also give rise to sensations -- which is how cochlear implants (and, soon, retinal implants) work.

Proof that the modality of sensation can't be defined in terms of either the proximal stimulus or the sensory receptor comes from electrical stimulation of the afferent nerves leading from the receptor organ to the central nervous system, or of the sensory projection areas in the cerebral cortex. Either case produces sensory experience in some modality, vision or audition or whatever, even though there has been no proximal stimulus in the usual sense nor any activity in any sensory receptor per se.

Considerations

such as these led the German physiologist Johannes Muller,

in 1826, to formulate the Doctrine of Specific

Nerve Energies.

The same cause, such as electricity, can simultaneously affect all sensory organs, since they are all sensitive to it; and yet, every sensory nerve reacts to it differently; one nerve perceives it as light, another hears its sound, another one smells it; another tastes the electricity, and another one feels it as pain and shock. One nerve perceives a luminous picture through mechanical irritation, another one hears it as buzzing, another one senses it as pain. . . He who feels compelled to consider the consequences of these facts cannot but realize that the specific sensibility of nerves for certain impressions is not enough, since all nerves are sensitive to the same cause but react to the same cause in different ways. . . (S)ensation is not the conduction of a quality or state of external bodies to consciousness, but the conduction of a quality or state of our nerves to consciousness, excited by an external cause.

According to Muller, the modality of sensation is not determined by the proximal stimulus alone, but rather by the stimulation of particular nerves. These nerves are ordinarily responsive to some specific proximal stimulus, but they can also respond to others. You can have a sensory experience without a proximal stimulus, so long as some particular part of the nervous system is activated.
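
Muller's point can be sketched in a few lines of code. This is a minimal illustration, not a model of the nervous system: the table `NERVE_MODALITY`, the function `experienced_modality`, and the nerve and stimulus labels are hypothetical names chosen here. The only thing the sketch is meant to show is that the reported modality depends on which nerve is activated, while the kind of stimulus is ignored.

```python
# Hypothetical lookup table (an assumption for illustration):
# the modality of sensation reported by each afferent nerve.
NERVE_MODALITY = {
    "optic": "vision",
    "auditory": "audition",
    "olfactory": "olfaction",
}


def experienced_modality(nerve: str, stimulus: str) -> str:
    """Return the modality of sensation produced by activating a nerve.

    The stimulus argument is accepted but deliberately unused: on this
    view, what matters is which nerve fires, not what made it fire.
    """
    return NERVE_MODALITY[nerve]


# Light, pressure on the eyelid, and electricity all yield the same modality
# when they activate the same nerve.
for stimulus in ("light", "pressure", "electricity"):
    print(stimulus, "->", experienced_modality("optic", stimulus))
```

The same stimulus passed to a different nerve, for example `experienced_modality("auditory", "electricity")`, returns a different modality, which mirrors the passage quoted above.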

Muller

had the right idea, but the Doctrine of Specific Nerve

Energies must be restated in light of subsequent evidence.