Language and Communication

Once we have engaged in some cognitive activity -- perceived an object or remembered an event, induced a concept, deduced category membership, rendered a judgment, made an inference, solved a problem -- we may use our capacity for language to communicate what we have done to someone else. But language is not just a tool for communication. It is also a tool for thought.

Communication Between Nonhuman Animals

Many animal species have the ability to communicate with other members of the same species.

The pheromones discussed in the lectures on Sensation also count as vehicles for communication between nonhuman animals -- and, perhaps, between humans as well. Pheromones appear to communicate aggression, sexual interest, and the like. Of course the odor "landscape" for humans, especially, is complicated by the existence of "exogenous" odors, such as cigarette smoke and other air pollutants, soap, deodorants, and perfumes, which can obscure the messages from relatively weak "endogenous" odors.

But nonverbal communication goes beyond pheromones, and includes a wide variety of "instinctual" behaviors discussed in the lectures on Learning.

- Animals may exchange signals during the mating process -- as in the male stickleback's zig-zag dance, or the female stickleback's head-up receptive posture.

- Or they may communicate threats to intruders on their territory -- as in the head-down threat posture of the male stickleback.

- There are displays of submission (wolves, when fighting, will expose their throats), and alarm at the sight of a predator (distress calls in birds subject to predation).

- Animals display signals of their intention: birds will raise and lower their tails before takeoff, but this activity serves no aerodynamic purpose.

- There are signals of conflict: the phoebe makes a particular call just before a change in movement.

- And signals of the location of food: bees perform a "waggle dance" that indicates the position of food relative to the sun and their hive.

The Waggle-Dance of the Honeybee

The waggle dance has been described as the most abstract and complex form of nonhuman communication. It is performed by worker honeybees (sterile females) who have been foraging for food. After returning to the hive, the foragers waggle their bodies as they move along the surface of the honeycomb; then they circle to the right, returning to their starting point, and start the waggle all over again; then they circle to the left, return to their starting point, and the process continues, so that the pattern forms a "Figure 8".

- The angle of the waggle, from vertical, is equal to the angle between the lines connecting the hive to the sun and the hive to the food source. If the food source is at a 45° angle from the sun, then the waggle line will be 45° away from vertical.

- The distance of the waggle -- essentially, the number of waggles performed by the bee -- indicates the distance of the food source from the hive. The more waggles, the farther away the food.

- Different subspecies have different "dialects": for German honeybees, each waggle corresponds to about 50 meters of distance; for Italian honeybees, each waggle corresponds to only about 20 meters. But unlike the dialects of human language, these dialects are innate, carried on the genes. German honeybee larvae, raised in Italian honeybee nests, still dance "German" -- causing all sorts of confusion when they communicate to their Italian hive-mates!
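The encoding described in the list above can be sketched as a small decoder. This is only an illustration: the function and field names are hypothetical, the per-waggle distances are the approximate figures quoted above, and the convention of adding the dance angle to the sun's compass azimuth (in degrees, clockwise) is an assumption made for the sake of the example.

```python
from dataclasses import dataclass

# Approximate meters signalled per waggle, taken from the text above.
METERS_PER_WAGGLE = {"german": 50, "italian": 20}


@dataclass
class ForagingVector:
    bearing_deg: float  # compass bearing of the food source, in degrees
    distance_m: float   # distance of the food source from the hive, in meters


def decode_waggle(angle_from_vertical_deg, waggles, sun_azimuth_deg, dialect):
    """Turn one dance into a direction and a distance (illustrative only)."""
    # The angle of the waggle run from vertical stands for the angle
    # between the sun and the food source, as seen from the hive.
    # Assumption: angles are measured clockwise from the sun's azimuth.
    bearing = (sun_azimuth_deg + angle_from_vertical_deg) % 360
    distance = waggles * METERS_PER_WAGGLE[dialect]
    return ForagingVector(bearing, distance)


# The same dance, read by a German hive and by an Italian hive.
german = decode_waggle(45, 10, sun_azimuth_deg=180, dialect="german")
italian = decode_waggle(45, 10, sun_azimuth_deg=180, dialect="italian")
print(german)
print(italian)
```

Both readings agree on the direction, but the same ten waggles point to food 500 meters away in one dialect and 200 meters away in the other, which is the sort of confusion that arises when a "German" dancer performs for Italian hive-mates.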

For his discovery of the waggle-dance of the honeybee, Karl von Frisch shared the Nobel Prize in Physiology or Medicine with Niko Tinbergen and Konrad Lorenz.

The waggle dance is extensively discussed by James Gould & Carol Grant Gould in The Honeybee (1988) and The Animal Mind (1999).

These are all social displays: instinct-like innate expressive movements, that do not have to be learned, and that are performed in narrowly defined situations. Other species have more complicated communication systems, that seem to be acquired through learning.

The classic case here is bird song. Male birds sing, but females do not. Each species has a characteristic song, which is used to identify potential mates. Within each species, there are also territorial "dialects", variations on the basic song that seem to help preserve the gene pool in a particular area.

Studies of sparrows indicate that bird songs seem to be learned through exposure to other males, but this is not the usual form of learning. In the natural setting, all young males acquire the dialect sung by their parents. In the laboratory, where the young from one territory are exposed to the dialect from another territory, they will learn whatever dialect they are exposed to. However, sparrows cannot learn the songs of other species. There appears to be a critical period for song-learning, similar to that seen in imprinting in ducks and geese (remember?), between about 1 and 7 weeks of age. If a young sparrow is exposed to its song before it is seven days old, or after it is 50 days old, it will sing only a crude approximation of its song. Interestingly, practice is not important. After exposure, the young male will remain silent for several months. Then, it will sing its song perfectly, the very first time.

There are analogous effects in the female sparrow. As noted, females don't sing; but they do respond to the song of their parents. Exposure of young females under the same conditions as described above yields effects on response to songs analogous to those seen in males. Moreover, females show the effects of administration of male hormones. If given a dose of testosterone, the female will sing the song to which she was exposed when young.

Apparently, the bird inherits, as part of its innate biological endowment, a crude template of its species' song. This template is then refined through experience -- through exposure to whatever dialect of the song is sung by its parents. By virtue of this exposure the bird then produces a detailed version of its own song. This is an instance of learning: a relatively permanent change in behavior that occurs as a result of experience. But notice that this learning does not occur through reinforcement: there is no practice, and no regime of rewards and punishments.

Bird-song learning is innate, but that doesn't mean that the songs themselves are invariant. A study led by Elizabeth Derryberry at the University of Tennessee, compared the songs of white-crowned sparrows before and after the "lockdown" imposed in California in 2020 as a response to the Covid-19 pandemic. One of the results of the lockdown was a significant diminution in ambient noise in the environment -- not least because there were fewer cars on the road, fewer planes flying overhead, fewer people talking and fewer dogs barking. For a little while, urban environments sounded more like rural environments (some of the data for this study was collected along the Bay Trail in Richmond, a city just north of UCB). As a result, Derryberry and her colleagues were able to show that the birds sang at lower volume levels -- apparently because they no longer had to compete with the noise around them. Derryberry et al. conclude that "These findings illustrate that behavioral traits can change rapidly in response to newly favorable conditions, indicating an inherent resilience to long-standing anthropogenic pressures such as noise pollution". True, but we shouldn't push it: remember those baby sea turtles threatened by evolutionary traps caused by man-made lighting, as discussed in the lectures on Learning.

Whale song has some of the same qualities as bird song, but we know very little about the conditions under which it is learned and performed.

Linguistic Communication Between Humans

Bird song shows some parallels to human speech. Every normal child learns to speak his or her native language. This learning occurs naturally, without reinforcement, even if the child is raised in an extremely impoverished linguistic environment. There is also a critical period for development.

- If children are linguistically isolated until puberty, they show very poor language skills thereafter.

- This is also true for deaf children. If they are exposed to sign language from an early age, they will learn to use it fluently, just as normal-hearing children learn a spoken language.

- But if exposure is delayed, they will have difficulty becoming fluent signers.

- Deaf children of deaf parents (who can sign) show no delays in language acquisition.

- But deaf children of hearing parents, who ordinarily do not use sign language, may be delayed or impaired if they are not exposed to sign language elsewhere, such as preschool.

- Children who receive cochlear implants also show no problems in oral language acquisition.

- There is some controversy within the Deaf community (who use an upper-case "D" to signify that they consider themselves part of a particular culture) about cochlear implants. Some object to implants because it suggests that deafness is a handicap, instead of a "difference", and because they signify accommodation to the "hearing" culture. Others believe that implants give deaf people the maximum flexibility in their interactions with other people, deaf and hearing.

- One approach that is definitely sub-optimal is a reliance on lip-reading and speech alone. Both these skills are extremely difficult for deaf people to acquire, and the time spent in the learning process effectively hampers the learning of other, more optimal skills, resulting in relatively poor linguistic ability.

- That doesn't mean that deaf people shouldn't be taught to lip-read and speak. Deaf people should be able to take advantage of all available communication resources. But it does mean that exclusive reliance on lip-reading and speech is not in the best interests of deaf people.

- For more on this controversy, see:

- When the Mind Hears: A History of the Deaf by Harlan Lane (1984).

- Deaf in America: Voices from a Culture by Carol Padden and Tom Humphries (1988).

- I Can Hear You Whisper: An Intimate Journey Through the Science of Sound and Language by Lydia Denworth (2014).

- If children learn a second language after puberty, that language is spoken with an accent.

- Recovery from aphasia is more likely in children than in adults.

But in the final analysis, human language is something else.

People can learn a second language, and, especially if they start early enough, they can learn it pretty well. And the same goes for a third, as many Europeans will attest. But how many languages can one individual master? If the critical period lasts as long as 17 years, there's more opportunity than if it lasts only until age 12, but still, you've got to practice to really master a language, and there are only so many hours in a day. Still, people who speak multiple languages are known as polyglots, and people who can speak more than 11 languages are known as hyperpolyglots. They don't just have a couple of words or phrases at their command; they are able to converse at length with native speakers. Their linguistic abilities are so extreme that there is speculation that there might be a biological difference -- something in the brain -- between them and mere mortals, but no such difference has been found so far. On the other hand, there's practice. Alexander Arguelles, a well-known hyperpolyglot, reckons that he spends up to 40% of his waking hours on language study and practice. For more on hyperpolyglotism, including Arguelles, see "Maltese for Beginners" by Judith Thurman (New Yorker, 09/03/2018).

The Reading Wars

Learning oral language, permitting speaking and listening, occurs effortlessly in all normal humans. Learning a written language, permitting reading and writing, is more problematic. Apparently, the brain evolved for speaking but not for writing -- not surprisingly, given that written language was invented only about 5000 years ago -- not long enough for the brain to have evolved a module for written language. Spoken language is acquired simply by being exposed to it; written language has to be deliberately and effortfully taught and learned. But how to teach reading -- ah, that's a matter of some controversy -- so controversial, in fact, that the debate has been called "The Reading Wars".

Whole-language instruction became very popular, not least because it is fun for both students and teachers. It's epitomized by the "Dick and Jane" readers that were so popular in schools. The only problem is that, for most students, the whole-word method is not the best way to teach reading -- a point made vigorously by Rudolf Flesch in his 1955 book, Why Johnny Can't Read. Students taught by the whole-word method can read simple, familiar texts, but they have difficulty decoding unfamiliar, more complex material. Let me be clear: the whole-word method isn't wholly, or even largely, responsible for the poor reading skills of the typical American elementary school pupil. Poverty and poor funding are much more important culprits. But all the scientific evidence points to some variant of phonics as generally the best method of teaching reading -- not least because it makes whole-word instruction even easier (for the record, I went through the whole "Dick and Jane" series -- but not before I was drilled in phonics!). For coverage of this research, see Language at the Speed of Sight: How We Read, Why So Many Can't, and What Can Be Done About It (2017) by Mark Seidenberg, a distinguished psycholinguist and cognitive neuroscientist at the University of Wisconsin.

Language is qualitatively different from even the most complex communication system of nonhuman species. Human language is differentiated from other communication systems by five properties.

- Meaning: Language expresses ideas. This is sometimes called semanticity, referring to abstract, symbolic representations -- words -- that mean the same thing to all speakers of a language.

- Reference: Language refers to the real or imagined world of objects and events. Related to this is the property of displacement, meaning that language can refer to events in the past or the future as well as in the immediate present.

- Interpersonal: Language is used not just as a tool for thought, but also to communicate one's ideas to someone else -- to change their opinions, or to accomplish one's goals.

- Structure: Linguistic utterances are organized according to the rules of grammar. Prescriptive grammar is a set of authoritative recipes: how language ought to be. Descriptive grammar is a set of descriptions of natural speech: how language is used. The rules of grammar are finite in number. But they permit the generation of an infinite number of different, meaningful sentences.

- Creativity: People can generate and understand novel utterances, that have never been heard or spoken by them (or anyone else) ever before. This is also known as productivity. For example, it's been estimated that there are 1 nonillion (or 1 trillion quintillion) meaningful 20-word sentences in English.

That's 1,000,000,000,000,000,000,000,000,000,000, or 10^30 sentences.

Each of us can understand each of these effortlessly (provided we know what the words mean). But there are only 3 billion seconds in a century. Therefore, there is simply not enough time for us to have learned the meaning of each of these sentences by exposure, even at the rate of 1/sec for an entire lifetime.
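The arithmetic behind this argument is easy to check. The short sketch below uses only the two figures quoted above (10^30 sentences, one sentence per second); the variable names are just for illustration.

```python
SENTENCES = 10**30                            # estimate quoted above
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60
seconds_per_century = 100 * SECONDS_PER_YEAR  # a little over 3 billion

# Hearing one sentence per second, nonstop, for a full century:
fraction_heard = seconds_per_century / SENTENCES
centuries_needed = SENTENCES / seconds_per_century

print(f"{seconds_per_century:.2e} seconds in a century")
print(f"{fraction_heard:.1e} of all 20-word sentences heard in that time")
print(f"{centuries_needed:.1e} centuries needed to hear them all")
```

Even a listener who never slept would get through only a vanishingly small fraction of the possible sentences, so comprehension cannot rest on having heard each sentence before.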

These properties combine to make human language unique in nature. Social displays are stereotyped and obligatory; speech is creative and optional. Birds are known to improvise on their songs, but the basic song remains unchanged.

Some primates can be trained to perform some linguistic tasks. The chimpanzees Washoe (who was trained first by Allen and Beatrix Gardner at the University of Nevada, Reno -- located in Washoe County, Nevada -- and later by Deborah and Roger Fouts at Central Washington University, and who died in 2007 at the age of 42) and Nim Chimpsky (a pun by his trainer, Herbert Terrace of Columbia University, on the name of Noam Chomsky, the godfather of modern linguistics), and the gorillas Koko and Michael (trained by Penny Patterson, a former graduate student at Stanford), have learned some aspects of American Sign Language (chimpanzees lack the proper vocal apparatus for anything resembling human speech); and the chimpanzee Sarah (trained by David Premack, at the University of Pennsylvania) has learned to string tokens together in order to form elementary "sentences". Kanzi, a bonobo studied by Sue Savage-Rumbaugh (originally at Georgia State University and later at the Iowa Primate Learning Sanctuary), learned to communicate with humans using a keyboard of 300 "lexigrams" representing various concepts in "Yerkish", an artificial symbol system named after the Yerkes Primate Research Center, where he was born (he also learned to comprehend some spoken English).

And not just primates. Alex, an African grey parrot trained by Irene Pepperberg, acquired over 100 words in the course of his 30-year lifespan. Like a preschooler, he learned his colors and basic shapes, and learned to count a little and to categorize objects. Alex, whose name was derived from Pepperberg's "Avian Learning Experiment", actually got an obituary in the New York Times ("Alex, a Parrot Who Had a Way with Words, Dies" by Benedict Carey, 09/10/2007, from which the picture is drawn; see also "The Communicators" by Charles Siebert, an appreciation of Alex and Washoe, who died the same year as Alex, New York Times Magazine, 12/30/2007).

However, the training of these animals is very arduous, requiring lots of time, effort and reinforcement. And there are big individual differences among chimps and gorillas in their ability to learn language. No primate, even under ideal conditions, has shown the language ability that a normal two-year-old human child acquires effortlessly under the most impoverished conditions. The general consensus is that chimpanzees can acquire some aspects of language semantics, learning the meanings of symbolic "words", but have little or no capacity for linguistic syntax, or the ability to string words together into meaningful utterances. This suggests that human language is indeed a unique ability in nature.

Nim Chimpsky was raised like a human infant in an apartment near Columbia University, and when Terrace's program of research ended, Nim retired to a primate field station at the University of Kansas. He is the subject of a biography by Elizabeth Hess, Nim Chimpsky, the Chimp Who Would Be Human (2008), and a documentary film, Project Nim, directed by James Marsh (2011), based on Hess's book. For Terrace's own take on Nim, see his book, Why Chimpanzees Can't Learn Language and Only Humans Can (2019; see review by David P. Barash in "Aping Our Behavior", Wall Street Journal, 12/14/2019). Terrace argues that while chimps and other animals can use "words" (and other symbols) imperatively (so, for example, the word food means something like Give me food), they don't use them declaratively (i.e., to name things in conversation, as when we say to someone, I think this food is too spicy). Animals might be able to learn the correspondence between words (and other symbols) and their meanings -- semantics, in other words -- but they don't have syntax, so they don't have the ability to combine symbols to express new ideas. He also argues that while they might be able to acquire concrete concepts, such as food, they don't have the ability to acquire abstract concepts such as justice. Barash, an evolutionary psychologist, isn't sure that chimps and other creatures are utterly lacking in linguistic ability -- he thinks that there might well be some chimp(s), out there somewhere, who might have it. We just may not have encountered them yet.

Koko was born in the San Francisco Zoo on July 4, 1971, and died at the Gorilla Foundation, the home established for her in the Bay Area by her trainer, Penny Patterson, on June 19, 2018 (see her obituary in the New York Times, 06/21/2018). There's no doubt that Koko was able to use signs to communicate with Patterson and others, though a fierce debate raged over the precise extent of her linguistic abilities. Koko had semantics, to a degree, using arbitrary signs to convey meaning, but it's not clear that she had syntax, which is what allows language to express an infinite variety of meanings, and which distinguishes language from other forms of communication.

Sarah was also retired from research, and spent her last 13 years in the company of other chimpanzees at Chimp Haven. She died in 2019, and got a great obituary in the New York Times ("The World's Smartest Chimpanzee Has Died", by Lori Gruen, 08/10/2019). I have more to say about Sarah in my lectures on the origins of consciousness and the development of social cognition.

Kanzi is, in some respects, a sadder story. Savage-Rumbaugh raised Kanzi in an intact family unit consisting of his mother, Matata, and his sister, Panbanisha, and eventually other relatives. Exposed to the keyboard as an infant, he quickly learned to use it to communicate with the human researchers by matching Yerkish lexigrams to spoken English words directed at various objects and actions. Savage-Rumbaugh claimed that, in one test, he demonstrated understanding of 72% of 660 novel sentences such as "Go get the ball that's outside". Panbanisha also learned to communicate via the lexigrams. Interestingly, Matata, who started the project as an adult, was much less successful in learning Yerkish. Savage-Rumbaugh also suggested that the bonobos acquired a form of speech, with distinct vocalizations specific to certain Yerkish words. Eventually, conflict developed among the staff: Savage-Rumbaugh and some other staff began to consider the lab environment as a kind of "Pan/Homo" hybrid culture ("Pan" being the label for the genus containing chimpanzees and bonobos), and wanted to continue to explore issues pertaining to language and culture; others wanted to "put the bonobo back in the bonobos", as it were -- instead of forcing them to live in something like human culture. There were management and funding problems as well, and cross-complaints alleging mistreatment of the animals. But the overriding issue has to do with the ethics of treating bonobos as if they were human -- as opposed to letting them live something like their normal lives. The research center still operates, and Kanzi and his family still live there, but Savage-Rumbaugh effectively lost her position (as of July 2020 she was still trying to regain it). Instead of her work on Pan/Homo "hybrid culture", most research involves more traditional procedures for the study of primate cognition -- although there's still considerable use of the Yerkish keyboards. For more detail, see "The Divide" by Lindsay Stern, Smithsonian magazine, 07-08/2020, from which these illustrations are taken.

For an interesting perspective on animal language and human-animal communication, see "Buzz Buzz Buzz" by Michelle Nijhuis (New York Review of Books, 08/20/2020), reviewing books on interspecies communication by the Dutch philosopher Eva Meijer. In books such as Animal Languages and When Animals Speak: Toward an Interspecies Democracy, Meijer argues that instead of trying to teach animals English (or ASL, or whatever), we should try to learn their languages -- how they "speak" to us through the sounds and gestures they make. She further argues that, because animals are able to communicate their desires to us, they are "political actors" who deserve to be recognized by our political system -- as individuals with both "negative rights" (e.g., not to be confined, tortured, or killed) and "positive rights" (e.g., to preserved and protected habitats).

Hierarchical Organization of Language

Language is organized hierarchically, into a number of different levels of analysis.

At the lowest level, the phoneme is the smallest unit of speech. Similarly, written language has an elementary graphemic level consisting of the letters of the alphabet.

At the next level, the morpheme is the smallest unit of speech that carries meaning. In English, there are about 50,000 of these: roots, stems, prefixes, and suffixes. There are two classes of morphemes: open-class morphemes consist of nouns, verbs, adjectives, and adverbs (so called because new members can be added to the class just by inventing new words, like "radar" and "snarf"); closed-class morphemes consist of articles, connectives, prepositions, prefixes, and suffixes. Phonemes are combined into morphemes by phonological rules that specify which combinations are "legal" in a particular language.

At the next level, the word consists of one or more morphemes -- a root or stem, plus (perhaps) a prefix or suffix. In English, there are about 200,000 of these. Morphemes are combined into words according to the same phonological rules. Knowledge of the meanings of individual words is stored in the mental lexicon.

At the next level, phrases and sentences consist of strings of one or more words that express a meaningful proposition. In English, or any other language, the number of possible phrases and sentences is essentially infinite. Again, there are two classes of these.

- Language basics are simple sentences consisting of open-class morphemes, such as "Mommy go store".

- Language elaborations are complex sentences including closed-class morphemes, such as "My mommy goes to the store".

At each level of the hierarchy, combinations of lower-level elements are governed by rules. Grammatical rules govern how words can be strung together. Just a finite number of phonetic elements (phonemes) can be combined into a larger but finite number of morphemes and words, which in turn can be combined by a finite set of grammatical rules into an infinite number of propositions. These grammatical rules, known as syntax, make possible the creativity of human language. But the rules of syntax aren't the only rules of language:

- Phonological rules specify which speech sounds can go together, and graphemic rules specify which letters can appear together.

- Orthographic rules specify how various speech sounds are represented visually.

- Morphological rules specify how morphemes are combined to convey meaningful information.
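The way a finite set of rules yields an unbounded set of sentences can be illustrated with a toy grammar. The sketch below is not a model of English: the two-noun, two-verb vocabulary and the two rules are invented for the example. What matters is that one rule is recursive (a noun phrase can contain another noun phrase), so each additional level of embedding multiplies the number of distinct sentences.

```python
from itertools import product

# A deliberately tiny, made-up vocabulary.
NOUNS = ["dog", "cat"]
VERBS = ["saw", "chased"]


def noun_phrases(depth):
    """NP -> 'the' N  |  'the' N 'that' V NP   (the second rule is recursive)."""
    phrases = [f"the {n}" for n in NOUNS]
    if depth > 0:
        phrases += [
            f"the {n} that {v} {np}"
            for n, v, np in product(NOUNS, VERBS, noun_phrases(depth - 1))
        ]
    return phrases


def sentences(depth):
    """S -> NP V NP, with noun phrases embedded up to `depth` levels deep."""
    nps = noun_phrases(depth)
    return [f"{subj} {v} {obj}" for subj, v, obj in product(nps, VERBS, nps)]


for depth in range(4):
    print(depth, len(sentences(depth)))
```

With no embedding the grammar produces only 8 sentences; allowing a single level of embedding ("the dog that chased the cat saw the dog") already produces 200, and the count keeps growing with every further level. Since nothing in the rules limits the depth, the set of sentences has no upper bound, even though the vocabulary and the rules themselves are finite.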

Phonology

Each language has its own set of phonemes -- in English, there are about 40.

Other languages have other sets of phonemes.

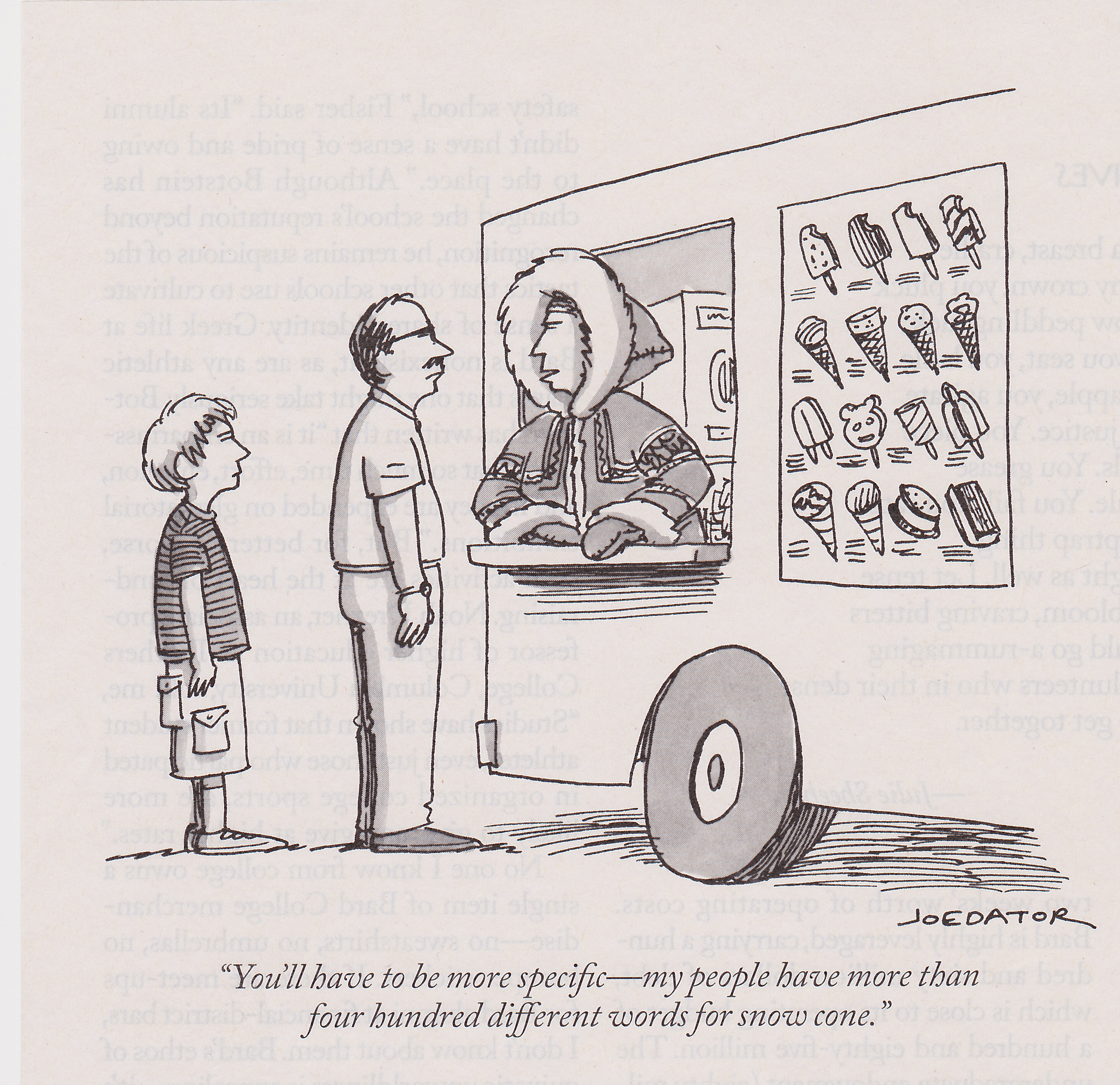

- For example, Hawaiian has only 12 phonemes: a, e, i, o, u, and h, k, l, m, n, p, and w. So there are fewer speech sounds to make up Hawaiian words than, for example, English words. Which is why so many Hawaiian words are long -- like humuhumunukunukuapua'a, which refers to a kind of fish. With so few letters, and correspondingly few phonemes, it takes longer strings to make unique words.

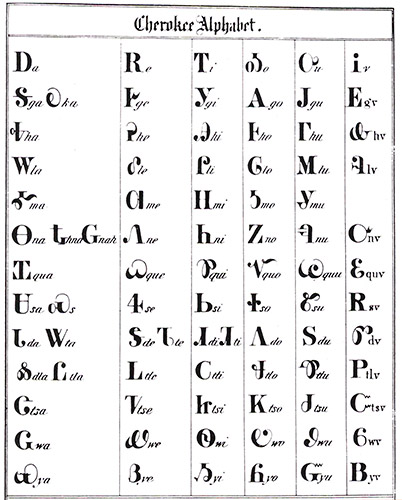

- By contrast, Cherokee, a Native American language, has some 85 separate speech sounds. When Sequoyah invented a script for Cherokee, in the early 19th century, he adopted letters from the Roman, Cyrillic (Russian), and Greek alphabets, and Arabic numerals, but these letters, in Cherokee, do not correspond to their sounds in these languages.

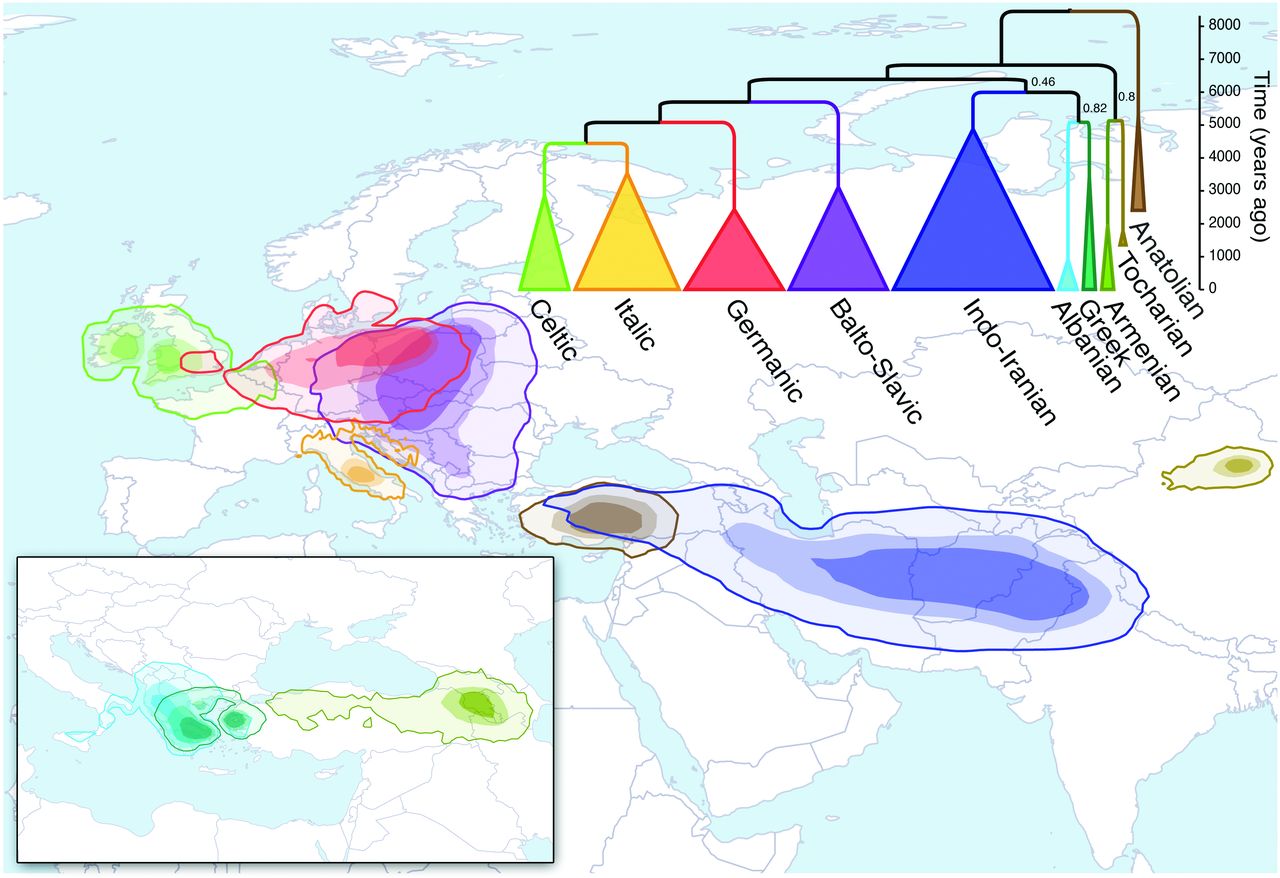

- Hungarian (or Magyar), a Finno-Ugric language, has a number of phonemes that do not appear in English or other Indo-European languages, such as ' (pronounced as in bone, though longer).
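The link between the size of a phoneme inventory and the length of words, noted above for Hawaiian, comes down to simple counting. The sketch below makes a strong simplifying assumption that no real language satisfies, namely that every string of phonemes is a legal word; the helper name and the 200,000-word target (the rough size of the English vocabulary mentioned earlier) are used only for illustration.

```python
def min_length(inventory_size, words_needed):
    """Shortest string length n such that inventory_size**n >= words_needed."""
    n = 1
    while inventory_size ** n < words_needed:
        n += 1
    return n


WORDS = 200_000  # rough vocabulary size, used only as a target

# Inventory sizes as given in the text above.
for language, phonemes in [("Hawaiian", 12), ("English", 40)]:
    print(language, phonemes, min_length(phonemes, WORDS))
```

Under these assumptions a 12-phoneme language needs strings at least 5 phonemes long to distinguish 200,000 words, while a 40-phoneme language can manage with 4. Phonological rules exclude many combinations in practice, so real words run longer than these lower bounds, but the direction of the effect is the same.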

Ranjit Bhatnagar, a sound artist, has invented the Speak-and-Play, an electronic keyboard that produces digitized samples of his voice producing the 40 English phonemes (in principle, it could be programmed to use other voices, and other sets of phonemes). It was introduced by Margaret Leng Tan, an avant-garde virtuoso of the toy piano, at the UnCaged Toy Piano Festival (New York Times, 12/14/2013).

The Alphabet

The language that humans are wired by evolution to produce and understand is spoken language. By contrast, written language is a relatively recent cultural invention, and some languages (those of preliterate cultures) don't have a written form (except perhaps one jury-rigged by anthropologists and missionaries). All normal children learn to understand their native tongue effortlessly, but some of these same individuals have difficulty learning to read. Oral language is hard-wired into us; we learn to read with difficulty. The source of this difficulty is illustrated by the "Reading Wars" which dominate American elementary education. How best to get children to convert letter strings into words, and word strings into sentences? We start with the alphabet.

Essentially, the alphabet is a way of expressing the phonemes of a language in written symbols (some languages, like Chinese and Japanese, have written forms, but not alphabets). The earliest known alphabet emerged in Egypt about 2000 BCE, as some Semitic-speaking group parlayed a small set of hieroglyphics into a set of symbols representing the speech sounds of their language. The Phoenician alphabet, source of the Roman letters familiar in a large number of written languages, emerged about 1000 BCE.

Like the rest of language, alphabets

continually evolve. Written language apparently began

with logograms, like the characters in Chinese or Japanese,

where symbols stand for whole words. Then it shifted

to logosyllabaries, in which symbols stand for syllables,

not whole words. And finally to the alphabet, in which

individual letters correspond to single units of

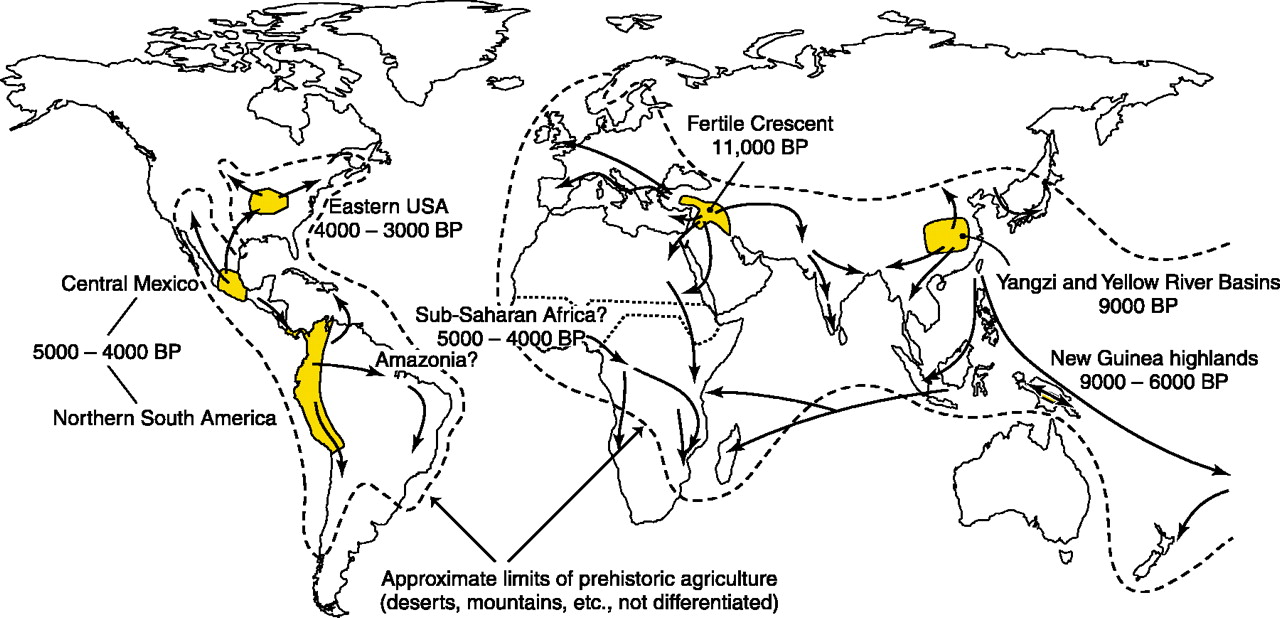

sound. Writing, in whatever form, apparently emerged

independently in four or five different geographical areas:

- Mesopotamian cuneiform, around 3000 BCE.

- Egyptian hieroglyphics, also around 3000 BCE -- because the two systems don't have anything in common, the conclusion is that they arose independently -- although the idea of writing may have been transmitted from one culture to the other.

- Chinese writing, dating to about 1500 BCE. Again, Chinese characters are very different from cuneiform or hieroglyphs.

- Mayan glyphs, first appearing in Mesoamerica roughly 1500 years before European contact.

- A written script, Rongorongo, was used by the inhabitants of Easter Island (Rapa Nui) at the time of first contact with Europeans in 1722. This counts as a possible independent invention, because there was no written language in use in the Polynesian islands from which Rapa Nui's settlers migrated. Unfortunately, European settlers destroyed most of the written Rongorongo artifacts, and the only inhabitants who could read or write the language were taken into slavery in the 19th century. So there's just not much material to compare to other writing systems.

Writing systems come in four basic forms:

- The familiar alphabet, usually consisting of two or three dozen signs that represent consonants (C) and vowels (V).

- A syllabary, whose signs represent various CV combinations known as syllables. Cherokee is a good example, with some 85 signs representing all the syllables found in the spoken language, like qua, tai, and tse.

- Logographic systems, like Chinese, where the signs (logographs) represent whole words, or at least morphemes, rather than phonemes or syllables.

- Hybrid systems, which combine an alphabet with logograms. Examples are Egyptian hieroglyphs and the characters of written Japanese (which look like Chinese to the untutored person, but actually are quite different).

There's a special challenge in deciphering ancient writing systems in "extinct" languages that are no longer spoken by anyone. The classic example is Egyptian hieroglyphics, which were translated (by Jean-Francois Champollion, a French linguist) only by virtue of the Rosetta Stone, uncovered during the Napoleonic Wars, which is inscribed with the same text in hieroglyphics and in two other scripts, which were already known. For more on the process of deciphering extinct languages, see "Decoding the Lost Scripts of the Ancient World" by Joshua Hammer, National Geographic, 01/2026.

Some interesting facts about the alphabet:

- In English, the letters U (derived from the Latin V) and J (derived from the Latin I) are of relatively recent origin.

- Cherokee was the first Native American language to be given a written form, and Sequoyah's work is the only instance whereby an entire system of writing was invented by a single individual.

- The Cyrillic alphabet, used in Russian and other Slavonic languages, comes close: it is named for Sts. Cyril and Methodius, 9th-century Christian missionaries in Bulgaria who invented the script.

- The pinyin ("spelling sounds") system for

Mandarin Chinese also comes close. Traditional

Mandarin is written in the form of ideographs,

picture-like characters which represent words, but which

give no indication of how the words are pronounced.

As a result, learning Mandarin entails memorizing

thousands of individual characters -- both their meaning

and their pronunciation. Beginning in the 1950s,

following earlier less-successful attempts at

Romanization, Zhou Youguang, a Chinese linguist, and

others developed pinyin, which represents

pronunciation with the Roman alphabet, plus some

diacritical marks to represent tones (which also carry

meaning in Mandarin). The pinyin system was

officially adopted by China in 1958, and by Taiwan in

2009.

- Zhou himself led an interesting life: like many other

Chinese intellectuals, he was sent to a labor camp

during the Cultural Revolution; he lived to 111 (see

"Zhou Youguang, Who Made Writing Chinese as Simple as

ABC" his obituary by Margalit Fox, New York Times,

01/14/2017).

- A similar process of romanization is the romaji ("roman letters") system, originally developed in the 16th century by Yajiro, a Japanese convert to Christianity, and which gained popularity beginning during the Meiji era of the 19-20th centuries, and especially after World War II.

- And also the hangul system in Korean, introduced in the 15th century by scholars in the court of Sejong the Great.

For histories of the English alphabet, see:

- Alphabet Abecedarium by Richard A. Firmage (1993);

- Language Visible: Unraveling the Mystery of the Alphabet from A to Z by David Sacks (2004);

- The Story of Writing: Alphabets, Hieroglyphs and Pictograms (2007);

- The Greatest Invention: A History of the World in Nine Mysterious Scripts (2022) by Silvia Ferrara, reviewed in "Alphabet Politics" by Josephine Quinn (New York Review of Books 01/19/2023);

- Inventing the Alphabet: The Origins of Letters from Antiquity to the Present (2022), also reviewed by Quinn.

- Not to mention the suitably titled Writing and Script: A Very Short Introduction (2009) by Andrew Robinson.

- And, in case you were wondering, Why Q Needs U (2025) by Danny Bate: 26 chapters, one for each letter in the alphabet, along with a compact history of writing from the time of the ancient Egyptians (reviewed in The Economist, 11/06/2025).

For an overview of writing in general, see:

- Writing from Invention to Decipherment (2024), ed. by Silvia Ferrara, Barbara Montecchi, and Miguel Valério.

Also, two striking hour-long documentaries (directed by David Singleton) which aired as part of the Nova science series on PBS in 2020. These are absolutely fascinating, and well worth your time to watch (links below).

- A to Z: The First Alphabet

discusses how writing emerged c. 3100 BCE, roughly simultaneously in the cuneiform system of Mesopotamia and the hieroglyphics of Egypt (the earliest Chinese characters have been dated to c. 1200 BCE), and how these systems were transformed into alphabets using the rebus principle. The rebus principle is, simply, that the sounds associated with pictograms can be combined to represent the sound of an unrelated, nonpictographic word. In the example on the right, an image of a pen touching a knee is the rebus for penny (get it?). The story is that various characters were transformed into symbols representing the sounds associated with them. Watch the film to see how this got us to the alphabet.

- A to Z: How Writing Changed the World traces how the letters of the Latin alphabet were ideally shaped to serve as the basis for the moveable type invented by Johannes Gutenberg in the mid-15th century, thus promoting both the expansion of literacy and the scientific revolution. Today, languages like English, Russian, Greek, and Arabic all have their unique alphabets, as discussed in the lectures on Perception (and Chinese has the "pinyin" system). But in the 17th century, the German philosopher (and co-inventor of calculus) Gottfried Leibniz envisioned a universal alphabet common to all the world's languages: we're not there yet.

The infant's speech apparatus can produce all the phonemes in

all known natural languages -- this is what comes out when

babies babble. However, during the babbling period the infant

gradually narrows its repertoire of phonemes to those of its

native language -- that is, the language(s) to which the

infant is exposed. This results in an accent when the person

learns to speak a new language as an older child or an adult.

Long before they can produce any words,

even very young infants can detect speech sounds in the

auditory stream. Studies employing EEG showed that monolingual

infants -- that is, infants raised in a household in which

only one language is spoken -- respond differentially to

speech sounds, compared to other kinds of sounds, as early as

6 months of age -- regardless of whether these phonemes are

from their "home" language or another language, to which they

haven't been exposed. By the age of 12 months, however, these

monolingual infants clearly distinguish between their "native"

phonemes and the "foreign" ones -- a phenomenon known as perceptual

narrowing. The story was different, however, for infants

raised in "bilingual" homes, in which they were exposed to two

different languages. Like their monolingual counterparts,

these infants did not discriminate between the two languages

at 6 months of age; but by 12 months, they were clearly

discriminating between the two sets of phonemes.

Somewhat in the manner of imprinting in

geese (described in the lectures on Learning) there is a

critical period for the learning of phonology, beginning about

6 months of age. However, this does not occur in a

vacuum. It must seem obvious that the child has to hear

people speaking. But, in fact, exposure to speech,

lots of speech, is critical for language development. The more

the merrier. There may be an innate Language Acquisition

Device, as Chomsky has proposed; but language acquisition also

depends on exposure to language, and the social interaction

that comes with it. Nature and nurture, as always, work

together.

This perceptual learning of speech sounds occurs even in the womb. Newborn infants prefer speech sounds and rhythms drawn from the language to which they were exposed during fetal development -- and this is true of bilingual as well as monolingual infants.

The perceptual learning of speech

sounds is a form of statistical learning, as discussed

in the lectures on Learning.

That is, as they are exposed to language, infants naturally

pick up, without benefit of reinforcement, the statistical

regularities in what they hear. From this experience they

acquire knowledge about the regularities of speech sounds, how

phonemes are assembled into morphemes, how morphemes are

assembled into words, and how words are assembled into phrases

and sentences.
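
One way to make "statistical regularities" concrete is the transitional probability between adjacent syllables: how often syllable B follows syllable A, out of all the times A is followed by anything. The sketch below is only an illustration, not a description of how any study discussed here was analyzed; the three made-up "words" (bidaku, padoti, golabu), their ordering, and the function name are assumptions introduced for the example. Syllable pairs inside a word always occur together, while pairs that straddle a word boundary are less predictable, which is the kind of cue a learner could use to find word boundaries in a continuous stream.

```python
from collections import Counter

def transitional_probabilities(syllables):
    """Return P(next = b | current = a) for every adjacent pair (a, b)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    # Count each syllable only when something follows it.
    first_counts = Counter(syllables[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}

# Hypothetical three-syllable "words" (an assumption for illustration).
WORDS = {
    "A": ["bi", "da", "ku"],
    "B": ["pa", "do", "ti"],
    "C": ["go", "la", "bu"],
}

# A continuous stream with no pauses between words.
ORDER = "ABCBACA"
stream = [syllable for word in ORDER for syllable in WORDS[word]]

tp = transitional_probabilities(stream)

within_word = tp[("bi", "da")]     # "bi" is always followed by "da"
across_boundary = tp[("ku", "pa")]  # "ku" is followed by different syllables

print(f"within word:     {within_word:.2f}")
print(f"across boundary: {across_boundary:.2f}")
```

Dips in transitional probability mark likely word boundaries, and nothing in the computation requires reward or correction: the counts accumulate from exposure alone.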

To take a classic example, consider the

findings of a study by Patricia Kuhl:

- At the age of 6-8 months, American, Taiwanese, and Japanese infants do not reliably differentiate between the syllables ra and la and qi and xi.

- The syllables ra and la are common in English, but not in Japanese or Cantonese.

- (The Japanese syllable ra sounds to English ears like a blend of ra and la.)

- However, at the age of 10-12 months, American and Taiwanese infants clearly distinguish between ra and la, while the Japanese do not.

- And the Taiwanese infants clearly distinguish between qi

and xi, while the American infants do not.

Does this mean that Skinner was right and Chomsky wrong -- that language is not special after all, and that language acquisition is simply a variant on classical conditioning -- albeit without reinforcement? Not exactly. In the first place, Skinner was completely wrong about the necessity of reinforcement. As we have known since the time of Tolman and Harlow, learning -- acquiring knowledge of the world through experience -- just happens, whether we're trying to learn or not, and whether we're rewarded for learning or not. Statistical learning is a powerful learning mechanism, and it is the process by which children acquire most of their knowledge of language -- just as it is the process by which they acquire most of their knowledge about the world, through simple observation. But there's also a role for something like an innate Language Acquisition Device. It turns out that all of the natural languages of the world share a relatively small number of universal patterns. Artificial languages that do not share these patterns are difficult to learn, whereas those that share the "universals" of natural languages are learned readily by both children and adults. So, it seems as if we come prepared -- remember preparedness, from the Learning lectures? -- to learn some kinds of patterns and rules easily. That element of preparedness, wired into the human genome and brain by evolution, is the Language Acquisition Device.

The classic example of observational

rule-learning in language is the past tense of verbs in

English, discussed at length by the psychologist Steven Pinker

(Words and Rules: The Ingredients of Language, 2000 --

a truly wonderful book that should be read by everyone

interested in language; but first read Pinker's earlier and

more accessible book, The Language Instinct: How the Mind

Creates Language, 1994).

- The rule, of course, is to add the suffix /-d/

(or /-ed/) to the stem, yielding joke-joked

or play-played.

- Of course there are exceptions, such as sing-sang

or buy-bought, and these have to be memorized.

- When young children learn English, they typically will overgeneralize the rule, yielding conversations like the following (reported by Pinker):

- Child: My teacher holded the baby rabbits and we patted them.

- Adult: Did you say your teacher held the baby rabbits?

- Child: Yes.

- Adult: Did you say she held them tightly?

- Child: No, she holded them loosely.

- A similar overgeneralization occurs when children learn the plurals of nouns, which are usually -- but not always -- formed by adding /-s/.

What's interesting about all this is

that the child has never heard an adult say "holded".

Never. Nor has the child ever been reinforced for saying

"holded". Never. If anything, she's been

corrected. The child gets "holded" not by imitating

adults, much less by virtue of being reinforced. The

child gets "holded" because she has abstracted a rule

from hearing adults, and then generalized it to new words.
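
The logic of overgeneralization can be sketched in a few lines, in the spirit of the "words and rules" idea: memorized irregular forms are looked up first, and the regular rule applies to everything else. This is a toy illustration rather than Pinker's actual model; the function names and the contents of the two lexicons are assumptions made for the example. The "child" differs from the "adult" only in not yet having a stored entry for held.

```python
def regular_past(stem):
    """Apply the regular rule: add -d after a final e, otherwise -ed."""
    return stem + "d" if stem.endswith("e") else stem + "ed"

def past_tense(stem, memorized):
    """Use a memorized irregular form if one exists; otherwise apply the rule."""
    if stem in memorized:
        return memorized[stem]
    return regular_past(stem)

# Hypothetical lexicons: the adult has memorized all three exceptions,
# the child has memorized only one of them so far.
ADULT_IRREGULARS = {"sing": "sang", "buy": "bought", "hold": "held"}
CHILD_IRREGULARS = {"sing": "sang"}

for stem in ["joke", "play", "sing", "hold"]:
    print(stem,
          "| adult:", past_tense(stem, ADULT_IRREGULARS),
          "| child:", past_tense(stem, CHILD_IRREGULARS))
```

The child's holded falls out of the rule itself, with no need for the form ever to have been heard or rewarded.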

Sound and Meaning

Words are made of phonemes, which indicate how the word is to be pronounced. But what's the relationship between the sounds of words and their meanings? The debate goes back at least as far as Plato's Cratylus. In that dialog, Hermogenes argues that there is an arbitrary relationship between a word and its meaning; Cratylus disagrees, and Socrates comes down somewhere in the middle. And 2500 years later, modern linguistics stands with Socrates. Words are symbolic representations of objects, events, and ideas, which don't typically resemble -- in appearance or pronunciation -- the things they represent. But there are exceptions:

- Wolfgang Kohler, one of the founders of the Gestalt

movement in psychology, showed subjects a pointed, star-like

shape and a rounded, cloud-like shape, and asked them which

would be called takete and which baluba,

both nonsense words in the subjects' native Spanish.

They overwhelmingly associated the pointed object with takete,

and the rounded one with baluba.

- Ramachandran and Hubbard (2001) repeated the experiment

with native English speakers, using the nonsense words bouba

and kiki, and got the same results.

R&H argued that this bouba/kiki effect, as they

dubbed it, represents a kind of linguistic synesthesia, in

which the sound of a word, or perhaps its physical appearance

is associated with its meaning. A word like bouba,

with its soft vowels and consonants, is associated with soft

things, while a word like kiki, with its hard vowels

and consonants, is associated with sharp things.

Whistle While You Speak

We ordinarily think of spoken language in terms of

words strung into phrases and sentences, but that's

not necessarily the case. In the sign

languages used by deaf people, for example,

meaning is conveyed by gestures instead of spoken

words. Another example is whistling language, such

as Silbo Gomero used on La Gomera, an island

of about 22,000 people in the Canary Islands

archipelago (see "'Special and Beautiful': Whistled

Language Echoes Around This Island" by Raphael Minder,

New York Times, 02/19/2021). Accounts of

the language go back as far as 15th-century Spanish

explorers, and after conquest by Spain the language

was adapted to Spanish. According to Minder,

"Silbo Gomero... substitutes whistled sounds that vary

by pitch and length for written letters [by which he

really means phonemes or words]. Unfortunately

there are fewer whistles than there are letters in the

Spanish alphabet, so a sound can have multiple

meanings, causing misunderstandings" which have to be

resolved by reference to context [not unlike more

familiar spoken languages, as discussed below].

In 2009, Silbo Gomero was added to UNESCO's list of

"Intangible Cultural Heritage of Humanity", which

noted that it is "the only whistled language in the

world that is fully developed and practiced by a large

community" (other islands in the archipelago, such as

El Hierro, have their own whistled languages).

It is now taught as an obligatory part of the school

curriculum on La Gomera. Minder suggests that

whistling languages evolved because of the unique

geography of the Canary Islands, whose mountains,

plateaus, and ravines make traveling by foot

difficult. Whistles carry much farther than

speech, facilitating communication across long

distances.

Syntax

Phonology has to do with the sound of

speech. Syntax has to do with the grammatical rules by which

words and phrases are strung together to create meaningful

utterances. These grammatical rules should not be confused

with the ones you learned in elementary school. Normal

children have all the grammar they need long before they enter

into formal schooling -- they pick it up from hearing other

people speak, and especially from being spoken to.

Moreover, much of this grammatical knowledge is itself

unconscious, in the sense that children (and adults) are not

consciously aware of the rules they're following when they

speak and listen, write and read. They gain explicit

knowledge of some of these rules later, during formal

instruction. It's in school that you learn the rules for

sentence agreement: that the nouns, verbs, and

adjectives in a sentence must agree in number: we say The

horse is strong, but The horses are strong.

When you make a mistake, your teacher corrects you until you

get it right.

But there are other grammatical rules

which you're not expressly taught, and which you just

know intuitively. A classic example, adapted from Joseph

Danks and Sam Glucksberg (1971), is the set of rules for ordering

adjectives: every native speaker of English agrees that it is

correct to say big red Swiss tables but not to say Swiss

red big tables -- it just sounds wrong. But no

schoolchild is ever taught this rule, and if you asked people

to tell you what the rule is, they couldn't say. And, in

fact, professional linguists have debated over the precise

nature of the rule for years. One proposal, from the British

linguist Mark Forsyth (in The Elements of Eloquence,

2013), is that adjectives should be ordered as follows:

opinion, size, age, shape, color, origin, material, and

purpose, as in This is a lovely little old rectangular green

French silver whittling knife. For present

purposes, the actual rule doesn't matter: what matters is that

there is such a rule, everyone follows it intuitively,

but nobody (except maybe professional linguists) can tell you

what the rule is.
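
Forsyth's proposed ordering is easy to state as a procedure: give each adjective a category, then sort by the category's position in the list. The sketch below is an illustration only; the small category table is an assumption (a real speaker has nothing like an explicit lookup table), and the function name is hypothetical.

```python
# Forsyth's proposed order of adjective categories, as listed above.
CATEGORY_ORDER = [
    "opinion", "size", "age", "shape", "color", "origin", "material", "purpose",
]

# Hypothetical category assignments for the adjectives in the examples.
CATEGORY = {
    "lovely": "opinion",
    "little": "size",
    "big": "size",
    "old": "age",
    "rectangular": "shape",
    "green": "color",
    "red": "color",
    "French": "origin",
    "Swiss": "origin",
    "silver": "material",
    "whittling": "purpose",
}

def order_adjectives(adjectives):
    """Sort adjectives by the rank of their category."""
    return sorted(adjectives, key=lambda adj: CATEGORY_ORDER.index(CATEGORY[adj]))

print(" ".join(order_adjectives(["Swiss", "red", "big"])), "tables")
```

The point of the example is the contrast: the rule takes a few lines to write down once someone has worked it out, yet speakers apply it fluently without ever having seen it written down.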

There are other rules as well, governing phonology and morphology. For example, the suffix -s that marks plural English words is pronounced differently, like zzz or ess, depending on the previous consonant. But nobody ever taught anybody that rule, either; native speakers of English employ it reliably by age 3 -- that is, before any elementary school teacher has taught them anything about English. And if I asked you why you say fades with the -zzz sound but gaffes with the -s sound, you couldn't tell me, because it depends on whether the preceding consonant is "voiced" or "unvoiced", and you probably don't know what that means (unless you've taken a linguistics class!).
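
The voicing rule can be written out as a tiny decision procedure. This is a simplified sketch: the function name and the informal sound labels are assumptions, the input is the final sound of the stem rather than its spelling, and the sketch deliberately ignores stems ending in hissing sounds (as in bus or judge), which take a third form of the suffix.

```python
# Unvoiced (voiceless) consonant sounds, written informally.
UNVOICED = {"p", "t", "k", "f", "th"}

def plural_suffix_sound(final_sound):
    """Return 's' after an unvoiced final sound, 'z' after a voiced one."""
    return "s" if final_sound in UNVOICED else "z"

# Final sounds (not final letters) of a few stems.
examples = {"fade": "d", "gaffe": "f", "cat": "t", "dog": "g"}

for stem, final_sound in examples.items():
    print(stem, "->", plural_suffix_sound(final_sound))
```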

Phrase (Surface) Structure

Phrase structure rules govern the surface

structure of language -- the utterance as it is expressed by

the speaker, and heard by the listener. Phrase structure

grammar is represented by rewrite rules of the following form:

Noun ==> man, woman, horse, dog, etc.

Verb ==> saw, heard, hit, etc.

Article ==> a, an, the

Adjective ==> happy, sad, fat, timid, etc.

Noun Phrase ==> Art + Adj + N

Verb Phrase ==> V + NP

Sentence ==> NP + VP

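These last three rules can generate thousands of different sentences that are legal under the grammar, just from the 14 words specified in the first four rules: there are 3 x 4 x 4 = 48 possible noun phrases, and each sentence pairs one of them with any of 3 verbs and any of 48 noun phrases, for 48 x 3 x 48 = 6,912 sentences (try it out!). All these sentences have the following form: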

The 1st noun phrase verbed the 2nd noun phrase.

Now if you recall that there are roughly 200,000 words in English, and more being invented every day, you will understand what we mean when we say that an infinite number of sentences can be generated from a finite number of words.
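
To "try it out", the rewrite rules can be expanded mechanically. The sketch below is a minimal illustration that takes the word lists literally (ignoring each "etc.", and ignoring the distinction between a and an), so the exact total depends on those assumptions; the dictionary layout and the function name are choices made for the example.

```python
from itertools import product

# Terminal rules: each category rewrites to one of these words.
LEXICON = {
    "N": ["man", "woman", "horse", "dog"],
    "V": ["saw", "heard", "hit"],
    "Art": ["a", "an", "the"],
    "Adj": ["happy", "sad", "fat", "timid"],
}

# Non-terminal rules: each category rewrites to a sequence of categories.
RULES = {
    "NP": ["Art", "Adj", "N"],
    "VP": ["V", "NP"],
    "S": ["NP", "VP"],
}

def expand(symbol):
    """Return every word sequence that a symbol can be rewritten as."""
    if symbol in LEXICON:
        return [[word] for word in LEXICON[symbol]]
    parts = [expand(child) for child in RULES[symbol]]
    # Combine one expansion of each child, in order.
    return [sum(combo, []) for combo in product(*parts)]

sentences = [" ".join(words) for words in expand("S")]

print(len(expand("NP")), "noun phrases")
print(len(sentences), "sentences")
print(sentences[0])
```

Adding a single noun to the lexicon adds hundreds of sentences, and a rule that lets a phrase contain another phrase of the same kind removes the upper limit altogether, which is the sense in which a finite vocabulary supports an unbounded set of sentences.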

Phrase

structure rules look like those you learned in 7th-grade

English. But they also have psychological reality. Consider

the famous poem by Lewis Carroll, Jabberwocky, from Through

the Looking-Glass and What Alice Found There

(1871), the sequel to Alice in Wonderland (1865):

'Twas brillig, and the slithy toves
Did gyre and gimble in the wabe:
All mimsy were the borogoves,
And the mome raths outgrabe.

"Beware the Jabberwock, my son!
The jaws that bite, the claws that catch!
Beware the Jubjub bird, and shun
The frumious Bandersnatch!"

He took his vorpal sword in hand:
Long time the manxome foe he sought --
So rested he by the Tumtum tree
And stood awhile in thought.

And, as in uffish thought he stood,
The Jabberwock, with eyes of flame,
Came whiffling through the tulgey wood,
And burbled as it came!

One, two! One, two! And through and through
The vorpal blade went snicker-snack!
He left it dead, and with its head
He went galumphing back.

"And hast thou slain the Jabberwock?
Come to my arms, my beamish boy!
O frabjous day! Callooh! Callay!"
He chortled in his joy.

'Twas brillig, and the slithy toves
Did gyre and gimble in the wabe:
All mimsy were the borogoves,
And the mome raths outgrabe.

We don't know what the poem means,

exactly, because so many of the "words" are unfamiliar. But we

do know something of the meaning, simply because the structure

of the poem follows the rules of English grammar. For example,

we know that the toves, whatever they are, were slithy,

whatever that is; and that they were gyring and gimbling,

whatever those actions are, in the wabe, whatever that is;

that the borogoves were mimsy; and the mome raths were

outgrabe.

Actually, it's perfectly clear what Jabberwocky means, at least to Humpty-Dumpty

"Jabberwocky" has been subjected to endless exegeses by linguists. See also The Annotated Alice by Martin Gardner, which contains references to much of this scholarly material. You can read a summary in "The Frabjous Delights of Seriously Silly Poetry" by Willard Spiegelman (Wall Street Journal, 04/04/2020). Spiegelman reminds us that some of the "nonsense" words coined by Carroll, such as galumphing and chortled, have entered the standard English dictionary. As for the Jabberwock itself, it's a creature of Carroll's imagination, and probably best left to the reader's imagination, as well. Conjure up your own image, and then check out the classic illustration by John Tenniel. Or, better yet, buy a copy of the book, with all of Tenniel's delightful illustrations, to share with a child (or adult) you know. And while you're at it, get a copy of Gardner's Annotated Alice for your coffee table.

In fact, over subsequent years some of Carroll's neologisms actually made it into the English dictionary. Christopher Myers (2007) has produced an illustrated version of Jabberwocky intended to introduce the poem to children. But in his book, the Jabberwock is turned into a kind of monstrous basketball player. That may be fine for getting kids to read the book, but the real beauty of the poem is in its parody of classic English poetry, and in its demonstration of just how much meaning is contained in the syntax alone.

The

psychological reality of phrase-structure grammar is also

indicated by experiments on memory for strings of pseudowords.

For example, in an experiment by Epstein subjects were asked

to memorize strings of letters such as the following:

THE YIG WUR VUM RIX HUM IN JAG MIV.

Another group was asked to memorize versions of the strings that had been altered with prefixes and suffixes, so that they mapped onto phrase structure grammar:

THE YIGS WUR VUMLY RIXING HUM IN JAGEST MIV.

Strings of the second type were easier to memorize compared to those of the first type, even though they contained more letters. The reason is that our knowledge of phrase structure grammar provides a scheme for organizing the strings into chunks resembling the parts of speech. This organization supports better memory (remember the organization principle?).

As

a further demonstration, consider an experiment by Fodor and

Bever (1965) on signal detection. In this study, subjects

heard a click superimposed on a spoken sentence, and they were

asked to indicate where, in the sentence, the click occurred.

If the click was located at the boundary between a noun phrase

and a verb phrase, it was accurately located. But if the click

was presented within a noun phrase or a verb phrase, it was

usually displaced to the boundary between them. This indicates

that noun phrases and verb phrases are perceived as units.

Deep Structure

Phrase structure grammar is important, but it is not all there is to syntax. For example, at first glance, sentences with similar surface structures seem similar in meaning:

JOHN SAW SALLY.

JOHN HEARD SALLY.

However, similar surface structures don't guarantee similarity of meaning. Consider the following sentences:

JOHN IS EASY TO PLEASE.

JOHN IS EAGER TO PLEASE.

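The difference in meaning shows up when we rephrase them so that John is the object of please. The first paraphrase works, because John is the one being pleased; the second does not, because John is the one doing the pleasing: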

IT IS EASY TO PLEASE JOHN.

IT IS EAGER TO PLEASE JOHN.

Moreover, sentences with different surface structures may have similar meaning:

JOHN SAW SALLY.

SALLY WAS SEEN BY JOHN.

IT WAS JOHN WHO SAW SALLY.

IT WAS SALLY WHO WAS SEEN BY JOHN, WASN'T IT?

So clearly something else is needed besides surface structure grammar.

This "something

else", according to the linguist Noam Chomsky, is

transformational grammar, a set of rules that can generate

many equivalent surface structures, and also uncover the kernel of

meaning common to many different surface structures. This

"kernel of meaning" is what is known as the deep structure of

the sentence. We get from deep structure to surface structure

by means of transformational rules. These rules can produce

many equivalent surface structures from a single deep

structure.

The deep structure of a sentence can be represented by its basic propositional meaning representing the basic thought underlying the utterance:

Prop ==> NP + VP

The surface structure of a sentence consists of a proposition and an attitude:

Att ==> assertion, denial, question, focus on object, etc.

S ==> Att + Prop

Thus:

Proposition: THE BOY HIT THE BALL.

Assertion: THE BOY HIT THE BALL.

Denial: THE BOY DID NOT HIT THE BALL.

Question: DID THE BOY HIT THE BALL?

Focus on Object: THE BALL WAS HIT BY THE BOY.

Combination: THE BALL WAS NOT HIT BY THE BOY, WAS IT?
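
The idea that one proposition yields many surface structures can be mimicked with a few string templates. This is a toy sketch, not Chomsky's formalism: each "transformation" here is just a hand-written template, the verb forms are supplied by hand rather than derived, the tag "was it?" is hard-coded, and all names are assumptions introduced for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Proposition:
    """The kernel of meaning: who did what to what."""
    agent: str
    patient: str
    base: str        # base form of the verb, e.g. "hit"
    past: str        # past tense, e.g. "hit"
    participle: str  # past participle, e.g. "hit"

def assertion(p):
    return f"{p.agent} {p.past} {p.patient}."

def denial(p):
    return f"{p.agent} did not {p.base} {p.patient}."

def question(p):
    return f"did {p.agent} {p.base} {p.patient}?"

def focus_on_object(p):
    return f"{p.patient} was {p.participle} by {p.agent}."

def combination(p):
    # Passive + negative + tag question; the tag is fixed for simplicity.
    return f"{p.patient} was not {p.participle} by {p.agent}, was it?"

TRANSFORMATIONS = {
    "assertion": assertion,
    "denial": denial,
    "question": question,
    "focus on object": focus_on_object,
    "combination": combination,
}

def surface_forms(p):
    """Apply every attitude to the same proposition."""
    return {name: rule(p).upper() for name, rule in TRANSFORMATIONS.items()}

kernel = Proposition(agent="the boy", patient="the ball",
                     base="hit", past="hit", participle="hit")
forms = surface_forms(kernel)

for name, sentence in forms.items():
    print(f"{name}: {sentence}")
```

Every output is built from the same `kernel` object, which is the programming analogue of saying that the sentences differ in surface structure but share a deep structure.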

Something like Deep Structure, and transformational grammar, is logically necessary in order to understand language. But, as empirical scientists, we also need evidence of its psychological reality. In this regard, some evidence is provided by the utterances of novices in a language, such as infants and immigrants. These utterances tend to mimic the hypothesized deep structure:

I NO GO SLEEP.

WHY MOMMY HIT BILLY?

Further evidence is provided by studies of memory for paraphrase. In such experiments, subjects study a sentence, and then are asked to recognize studied sentences from a set of targets and lures.

HE SENT A LETTER TO GALILEO.

Interest in the experiment comes from recognition errors (false alarms) made in response to alternative phrasings. Phrasings that represent a different proposition, but the same attitude, are correctly rejected:

GALILEO SENT A LETTER ABOUT IT TO HIM.

But phrasings that represent the same proposition, albeit with a different attitude, tend to be falsely recognized:

A LETTER ABOUT IT WAS SENT TO GALILEO BY HIM.

This kind of evidence indicates that memory encodes the "gist" of a sentence, its kernel of meaning, rather than the details of its surface structure.

Further evidence is provided by a study of meaning verification. Subjects study a standard sentence:

THE BOY HIT THE BALL.

Then they are presented with test sentences, and asked to indicate which have the same meaning as the standard. Sentences that involve only a single transformation are correctly verified very quickly:

HAS THE BOY HIT THE BALL?

However, sentences that involve two transformations take more time:

WAS THE BALL HIT BY THE BOY?

This indicates that, in understanding the sentence, the language processor strips away the surface structure to unpack the deeper kernel of meaning below -- and this unpacking takes time.

In an

early, now-classic experiment, Savin & Perchonock (1965)

presented subjects with sentences followed by a string of

eight unrelated words (that is, words that were unrelated to

the sentence). They were then asked to repeat the sentence

verbatim, and to recall as many of the words as possible. The

sentences represented either a kernel of meaning, such as

The boy hit the ball

or one or more transformations, such as

| Passive | Negative | Negative + Passive |
| --- | --- | --- |
| The ball was hit by the boy | The boy did not hit the ball | The ball was not hit by the boy |

The

finding was that subjects remembered the most words when

presented with the simple sentence representing the kernel of

meaning. When they had to process a single transformation,

they remembered fewer words. And when they had to process two

or more transformations, they remembered even fewer words.

Apparently, processing the transformations took up space in

what we would now call working memory, leaving less

capacity to hold the words.

Universal Grammar

Chomsky has proposed that the human

ability to acquire and understand any natural language is

based on the fact that some grammatical knowledge is part of

our innate biological equipment. Not that we are born knowing

English or Chinese, obviously. A child born of

English-speaking parents, but raised from birth in a

Chinese-speaking household, will speak fluent Chinese, without

even an English accent. And a child born of Chinese-speaking

parents, but raised from birth in an English-speaking

household, will speak fluent English without any trace of

Chinese accent. For Chomsky, language acquisition occurs as

effortlessly as it does because humans are born with a special

brain module or system -- he sometimes calls it a "Language

Acquisition Device" or LAD -- that permits us to learn any

language to which we are exposed. At the core of the LAD is a

body of linguistic knowledge that Chomsky calls "Universal

Grammar" or UG -- a set of linguistic rules or principles that

apply to all languages, spoken by anyone, anywhere. UG and the

LAD come with us into the world as our basic mental equipment,

just like we are born with lungs and hearts and eyes and ears.

They are part of the biological stuff that makes us human, and

which separates us from other species, such as chimpanzees,

which for all intents and purposes lack the human capacity for

language.

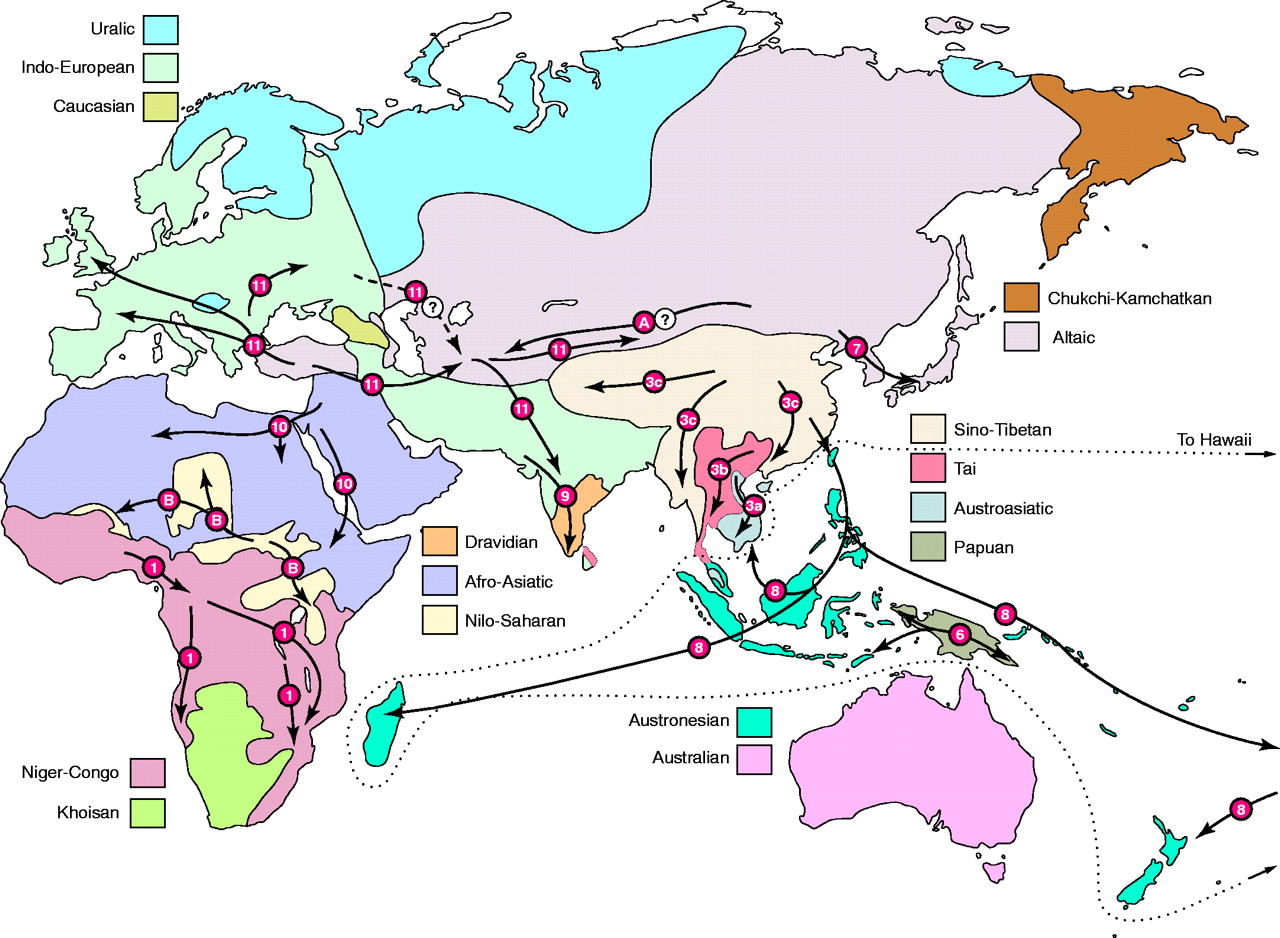

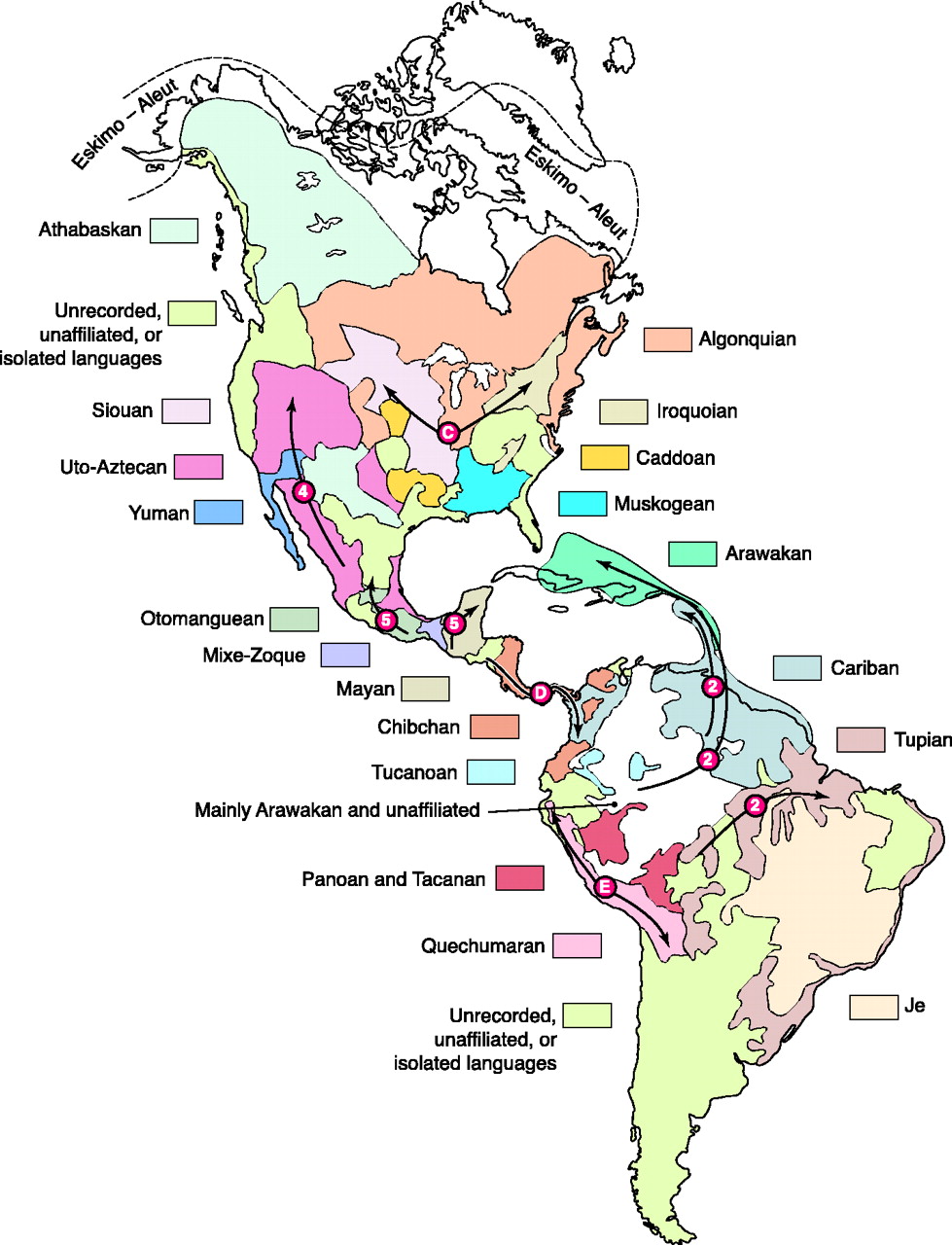

According to Chomsky, all natural languages -- whether English or Chinese or Hindi-Urdu or Swahili or whatever -- have the principles of UG in common. What makes them different is that each language has a different package of parameters. It's a little like buying a car: every car has four wheels and an internal-combustion engine, but some cars have leather seats and other cars are convertibles. You get the basic equipment whatever car you buy; it's only the options that are different. But the options are linked together: if you buy a standard-shift car, it comes with a clutch and a different kind of gearshift, as well as a gauge to indicate the engine's RPMs. So it seems to be with language: if, for example, a language has the rule that verbs must precede objects (John shoveled the snow), it will also place relative clauses after the nouns that they modify (John shoveled the snow, which was three feet deep). Not all languages have either feature (there are languages that say, in essence, John the three-foot deep snow did shovel), but a language like English, which has one feature, apparently also must have the other.

Of course, the words are different, too. In English we say "man" where the Spanish say "hombre". But Chomsky is not talking about words. He's talking about syntax: the fundamental structure of the grammatical knowledge that allows us to put words together into meaningful sentences.

Chomsky's theory is widely accepted, though mostly on grounds of plausibility: there has to be something like UG, and an innate LAD, to enable people to learn their native language as easily and as well as they do. But gathering empirical evidence for the theory has proved difficult, because it requires investigators to have an intimate knowledge of several languages that are very different from each other. Still, there has been some interesting progress toward empirical tests of Chomsky's theory.

For example, Mark Baker, a linguist who studied under Chomsky at MIT, has shown that the world's languages differ from each other in one or more of a relatively small set of parameters. Baker claims to have identified more than a dozen of these already, and he thinks there may be as many as 30.

- For example, some languages are polysynthetic, which means that they can express in one (usually long) word a meaning that another language would take many words to convey. Mohawk, a Native American language, is polysynthetic, as is Mayali, an aboriginal language spoken in northern Australia. English, by contrast, is not; nor are most other known languages.

- As another example, languages differ in terms of head direction, which dictates whether modifiers are added before or after the phrases they modify. For example, in English, we would say

"I will put the book on the table".

Welsh, spoken in the British Isles, and Khmer, spoken in Cambodia, also put modifiers in the front of phrases. By contrast, Lakota, another Native American language, Japanese, Turkish, and Greenlandic (an "Eskimo" language) put the modifiers at the end. In Lakota, you would say something like

"I table the on book the put will".

Different languages make different "choices" among these parameters. Presumably, when a child learns his or her native language, he or she starts out with UG, and then fits what he or she hears into the scheme. It's as if an infant raised in an English-speaking household says, "OK! I got it! This is not a polysynthetic language, and it's got forward head-direction." None of this goes on consciously, of course, and you don't actually learn about these parameters unless you take a college course in comparative linguistics. But there does seem to be a universal grammar underlying all languages, and we seem to know it innately. And whatever differences there are between languages, somehow we have to grasp them very quickly in order for us to become fluent speakers as early as we do. So, it makes sense that, in addition to UG, the various parametric "options" are also laid out in our brains, ready to categorize the language we're exposed to from birth.
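
The head-direction contrast described above can be pictured as a single switch that applies to every phrase in a sentence. The following Python sketch is only a toy illustration, not Baker's analysis: the nested-tuple phrase structure and the names `linearize`, `predicate`, and `sentence` are assumptions made up for this example. The subject is held in place, and each phrase is spelled out either in the order given or in mirror-image order.

```python
# Toy illustration of a word-order "switch". The phrase structure below
# is a simplification invented for this example, not a real grammar.

def linearize(phrase, mirror=False):
    """Flatten a nested phrase into a list of words.

    A phrase is either a single word (str) or a tuple of sub-phrases.
    With mirror=True, the parts of every phrase are emitted in reverse order.
    """
    if isinstance(phrase, str):
        return [phrase]
    parts = reversed(phrase) if mirror else phrase
    words = []
    for part in parts:
        words.extend(linearize(part, mirror))
    return words

# "will put the book on the table", grouped into nested phrases
predicate = ("will", ("put", ("the", "book"), ("on", ("the", "table"))))

def sentence(subject, predicate, mirror=False):
    # The subject stays first; only the predicate's internal order flips.
    return " ".join([subject] + linearize(predicate, mirror))

print(sentence("I", predicate))               # I will put the book on the table
print(sentence("I", predicate, mirror=True))  # I table the on book the put will
```

The point of the sketch is that one setting, applied consistently, is enough to turn the English order into the Lakota-style order given earlier; the child's task, on this account, is to work out which way the switch is set.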

Critique of Universal Grammar

On the other hand, work by Michael Dunn suggests that linkage between various aspects of language grammar isn't as tight as Chomsky assumes it is. His survey indicates that the world's languages contain a lot more random linkages among these features, suggesting that flipping the switch for verb-object order, for example, doesn't also control noun-relative clause order.

Moreover, Daniel Everett, a linguist who did Christian missionary work in the Amazon (his memoir of that time is entitled Don't Sleep, There Are Snakes), has claimed that one particular tribe, the Piraha, speak a language whose structure is inconsistent with Chomsky's theory of UG. Chomsky (and others) have claimed that recursion is the essential property of human language, distinguishing it from all other modes of communication (like birdsong), and the key to its creativity. That is, we can embed clauses within sentences to make indefinitely long sentences -- and, more important, sentences which have never been uttered before. What nonhuman animals lack, even chimpanzees, is the ability to combine meaningful sounds (what would be our phonemes and words) into new sounds that have a different meaning.

So, beginning with

John gave a book to Lucy

we can simply keep adding clauses:

- John, who is a good husband, gave a book to Lucy.

- John, who is a good husband, gave a book to Lucy, who loves reading.

- John, who is a good husband, gave a book, which he wanted to read himself, to Lucy, who loves reading.

And so on: you get the idea.
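
The clause-adding pattern above is what programmers would also call recursion: a rule that can apply to its own output. As a rough illustration only (the tuple representation, the function name `render`, and the extra clauses are invented for this sketch and are not taken from any linguistic theory), a noun phrase can be defined so that it may contain a clause that itself contains another noun phrase:

```python
# Toy sketch of recursion in sentence building. A noun phrase is a pair:
# (head noun, clause), where clause is None or (linking words, noun phrase).

def render(noun_phrase):
    """Spell out a noun phrase, including any clauses nested inside it."""
    head, clause = noun_phrase
    if clause is None:
        return head
    link, inner_noun_phrase = clause
    # render() calls itself here: this is the recursive step.
    return f"{head}, {link} {render(inner_noun_phrase)}"

plain = ("Lucy", None)
one_clause = ("Lucy", ("who loves", ("books", None)))
two_clauses = ("Lucy", ("who loves", ("books", ("which describe", ("languages", None)))))

for receiver in (plain, one_clause, two_clauses):
    print("John gave a book to " + render(receiver) + ".")
# John gave a book to Lucy.
# John gave a book to Lucy, who loves books.
# John gave a book to Lucy, who loves books, which describe languages.
```

Because `render` calls itself, nothing in the rule limits how many clauses can be stacked up; the only practical limit is the patience of the speaker and the listener.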

Anyway, Everett claims that the Piraha language doesn't have the property of recursion -- nor, for that matter, does it have number terms or color words. Everett concludes that language, far from depending on a uniquely human faculty like Chomsky's Language Acquisition Device, is a product of general intelligence. It is an invention, not unlike the bow and arrow, which can arise independently in lots of different cultures, but whose particulars are shaped by the culture that produced it. So, far from being universal, particular languages are shaped by particular cultural needs. The Piraha, whose culture is focused completely on the concrete here-and-now, just don't need recursion, so their language doesn't have it. But if language is shaped by culture, then it isn't universal in the way Chomsky says it is.

You can't imagine how controversial this claim is -- so much so that no fewer than three independent research teams have visited the Piraha to figure out how their language works. It doesn't help that Everett has been reluctant to release the data he collected in the field. However, an independent corpus of 1,000 Piraha sentences, collected by another missionary linguist, appears to show no evidence of recursion. But even if that's true, it doesn't show that the Piraha can't generate or understand recursive sentences. It would just show that they don't routinely do it.

Everett has expanded his argument in Language: The Cultural Tool (2012). Everett and other Chomsky critics argue that language learning, far from depending on the existence of a separate Language Acquisition Device, is just a special instance of learning in general. To be sure, spoken language (as opposed to sign language) depends on a distinctly human vocal apparatus, but language itself is "just" a complex function mediated by a very complex brain. In Everett's formula:

Language = Cognition + Culture + Communication.

Language isn't an innate human faculty, on this view. Rather, it's a tool that can be used by a culture or not. And when it's used by a culture, it's shaped by the culture in which it's used. We'll see this idea crop up again later, when we consider the Sapir-Whorf hypothesis that language determines thought. What people talk about (semantics), and how they talk about it (syntax), are both determined by culture, including cultural values.

Everett expanded his argument with Chomsky in How Language Began: The Story of Humanity's Greatest Invention (2018). The subtitle tells it all: Language isn't something that evolved through the accidents of natural selection; language is something that humans invented for purposes of exchanging information. For that reason, there will be cultural differences among languages -- like the language of the Piraha, which doesn't involve recursion, and doesn't have color or number terms, because the Piraha don't see the need for them. But beyond this debate, there lies a basic difference about the function of language: Chomsky thinks that language is primarily a tool for thinking, while Everett thinks that language is primarily a tool for communication. As Everett puts it:

Language did not fully begin when the first hominid uttered the first word or sentence. It began in earnest only with the first conversation, which is both the source and the goal of language.

A variant on Everett's critique has been promoted by Michael Tomasello (2003) in his usage-based theory of language acquisition (for a brief summary, see "Language in a New Key" by Paul Ibbotson and Michael Tomasello, Scientific American, 11/2016). Essentially, Tomasello denies any need to postulate anything like LAD or UG, and instead argues that language is a product of a general-purpose learning system, coupled with other general-purpose cognitive abilities, such as the ability to classify objects and make judgments of similarity.

Tomasello begins by outlining some empirical problems with Chomsky's theory (which, as I've noted, has changed considerably since he first announced it in the 1950s).

- Some aboriginal Australian languages don't package words neatly into separate noun phrases and verb phrases, "outliers" which suggested that UG was not universal after all.

- Some "ergative" languages, such as Basque, have grammatical features that are not found in "accusative" languages, such as Spanish, French, or Portuguese. For example, where an "accusative" language would say The boy kicked the ball, an "ergative" language would say something like The ball the boy kicked.

- To be fair, though, it was findings such as these that led Chomsky and his colleagues to develop the "Principles and Parameters" version of the theory described above.

- And of course, there are languages such as Piraha which don't seem to make use of recursion, which Chomsky believes lies at the heart of language.

Now, in response to such findings, one might simply make adjustments to the theory, and in fact that's what Chomsky has done. But Tomasello believes that enough challenging evidence has accumulated that Chomsky's theory should be thrown out entirely, and something new substituted -- or, in Tomasello's case, something old: statistical learning, of the sort discussed earlier in the context of phonology. The general idea is that through this general learning mechanism children pick up on the regularities of whatever language they're exposed to. If this sounds a bit like B.F. Skinner's approach to language -- well, it is, except that it depends on observational learning rather than reinforcement. In that sense, the usage-based theory is more "cognitive" and less "behavioristic". This general statistical-learning process is augmented by other general processes, such as attention, memory, categorization, and the ability to understand other people's intentions (which is discussed in the Lectures on "Psychological Development").

The point of this is not that Chomsky is right and Everett wrong, or vice-versa (though, frankly, I'm rooting for Chomsky). Chomsky's theories about human language have dominated linguistics, psychology, and cognitive science for more than 50 years (since his earliest publications, in the 1950s), and he attracts allies and foes in equal number. For my money, Chomsky's basic view of language as a unique human component of our cognitive apparatus is probably more right than wrong, but I'm not enough of a linguist -- I'm not any kind of linguist at all -- to engage in a detailed critique of his theoretical views. Nor is there any need for such a critique at this level of the course. All I ask is that you appreciate Chomsky's approach to language. If you get really interested in language, you'll have plenty of opportunity to delve deeper into its mysteries in more advanced courses.

For an entertaining introduction to Chomsky's thought, both psychological and political, see "Is the Man Who Is Tall Happy?", a documentary film by Michel Gondry (2013). The title is taken from a sentence that Chomsky often uses to illustrate his ideas.

See also the Noam Chomsky website at www.chomsky.info.

For more details on Baker's work, see his book, The Atoms of Language: The Mind's Hidden Rules of Grammar (2001).

Everett's work, and the controversy surrounding it, is presented in his book, Language: The Cultural Tool (2012), and in "The Grammar of Happiness", a documentary film scheduled to be shown on the Smithsonian Channel in 2012.

The Everett-Chomsky debate has made it into popular culture. Tom Wolfe, the pioneer of the "New Journalism", has done for language (and the theory of evolution) what he did earlier for modern art (in the