Introduction

| For an essay on developments in psychology from 1967 (when I took introductory psychology as a college sophomore) to 2017 (when I taught my last intro class and retired from the University), see "50 Years of Psychology: A Personal Look Back at 'Intro'". |

William James

(1842-1910), the great Harvard philosopher and leader of early

American psychology, famously defined psychology as "the science

of mental life" (in his Principles of Psychology,

1890). The goal of psychology as a science is to describe and

explain states of mind -- or, as we would now put it, the mental

structures and processes that underlie human experience,

thought, and action. Quite simply, the task of psychology is to

understand how our minds work, just as the job of physics is to

explain how the physical world works, the job of biology is

to explain how living things work, the job of sociology is to

explain how societies work, and the job of political science is

to explain how political institutions work.

But what exactly does the mind do?

In the 19th century, a group of

theorists known as the phrenologists, led by Franz

Joseph Gall (1758-1828) and Johann Gaspar Spurzheim (1776-1832),

attempted to classify all the things the mind did, and relate

each activity to a particular part of the brain. Gall came up

with a list of 27 "mental and moral faculties", and Spurzheim

added 10 more. The phrenologists distinguished between various

kinds of love (physical love, parental love, friendliness),

different aspects of perception (form, location, color, tune,

and time), different kinds of thinking (comparing and causal

reasoning), and various traits and attitudes (wit,

secretiveness, benevolence, and conscientiousness). That's a lot

of mental functions, and even so the phrenologists got into

debates over precisely how many there were. (We'll talk more

about the phrenologists in the lectures on the Biological Bases of Mind and

Behavior.)

But

even before the phrenologists arrived on the scene, a succinct

answer was provided by the German philosopher Immanuel Kant

(1724-1804), in his famous (and pretty much unreadable) Critique

of Judgment (1790):

"There are three absolutely irreducible faculties of mind: knowledge, feeling, and desire".

In other words, mental states consist of the thoughts, feelings, and desires that pass through our minds when we're conscious. It is by virtue of our minds that we acquire and use knowledge, and experience emotions, moods, needs, and wants -- how we know things, feel what we feel, and desire what we desire. By saying that these states were "irreducible", Kant meant simply that thoughts, feelings, and desires were in some sense independent of each other, and one was not derivative of one or more of the others (this is a controversial point, and not all psychologists accept it, as we'll see in our discussions of emotion and motivation).

In the 20th century, the American

psychologist Ernest R. (Jack) Hilgard (1904-2001) recast Kant's

three "faculties" as the "Trilogy of Mind" (1980):

- cognition, having to do with knowledge and beliefs (perceiving, remembering, thinking, learning, problem-solving, communicating, and the like);

- emotion, having to do with affect, moods, and feelings (of pleasure, pain, and the like); and

- motivation, having to do with drives, needs, desires, purposes, and goals (tendencies to approach and avoid, and the like).

So, the scope of psychology is very broad, including:

- questions about basic processes, or how the mind works;

- the origins of mind, in terms of the evolution of the species and the development of the individual;

- individual differences in the organization of mental life;

- pathology, the disorders of mind that characterize various mental illnesses;

- applications of psychological knowledge in education (enhancing teaching and learning), psychotherapy (prevention and treatment of mental illness), the workplace (enhancing worker productivity and managerial effectiveness), and the like.

Why do we care about these mental states? At the very least, we care about them because thoughts, feelings, and desires are things we experience, and we want to know more about them -- just as we want to know more about the stars in the skies, the earth beneath our feet, and the other creatures that live here with us.

But more than idle curiosity is at stake here. We want to understand how our minds work because we believe that mental states play a causal role in our behavior. The underlying philosophical rationale for scientific psychology is the Doctrine of Mental Causation, which states, in formal terms, that

Mental states are to action as cause to effect.

In other words, what we do depends

on what we think and feel and want. Understanding how our

minds work, why we think and feel and want as we do, then, is

essential to understanding why we do what we do.

Psychology is the science of mental life, but it's also one of a

number of behavioral sciences like anthropology or economics,

political science or sociology. All the behavioral

sciences are concerned with understanding the behavior of human

beings and other animals -- how individuals interact with the

physical world and with each other. But each of these

behavioral sciences has its own preferred way to explain why we

behave the way we do.

For example, consider a rather dramatic behavioral observation: that some person, X, has committed suicide. The question naturally arises: "Why did he kill himself?". Psychology offers three general types of answers for consideration:

- a cognitive explanation, e.g., "X killed himself because he believed he was worthless";

- an emotional explanation, e.g., "X killed himself because he felt worthless"; or

- a motivational explanation, e.g., "X killed himself because he no longer wanted to live".

A psychological explanation of behavior explains an individual's action by invoking his or her mental states. And psychology, as a science, seeks to understand the nature of these cognitive, emotional, and motivational states: how they relate to each other, how they relate to what is going on in the brain and the rest of the body, and how they relate to the individual's behavior.

Not everyone agrees with this idea, by the way. Some philosophers (and some psychologists, too) dismiss mental causation as mere folk psychology -- a set of naive, traditional ideas about the mind and behavior, unsupported by scientific evidence, that are doomed to be replaced by a more sophisticated, truly scientific view.

- According to the most radical of these views, our mental states are epiphenomenal, and play no causal role in behavior. From this point of view, folk psychology is something to be abandoned as science progresses, just as we have abandoned concepts like phlogiston and ether.

- According to another view, known as reductionism (see below), psychological theories are only halfway points in the explanation of behavior, and truly scientific explanations are found at the biological level of neural interactions, or even at the level of physics and chemistry.

The Domain of Psychology

Psychology is a behavioral science, one of a number of disciplines (including anthropology, biology, economics, history, political science, and sociology) that seek to understand how individuals (whether humans or nonhuman animals) interact with each other and the world in which they live. Each of these sciences has a particular scope, and a particular set of concepts by which it seeks to explain behavior. As described above, psychology explains behavior in terms of the individual's mental state -- the organism's thoughts, feelings, and desires.

Herbert Gintis, an economist, has suggested that all the behavioral sciences are united around the following principles:

- As a species, humans have certain universal characteristics which are the product of their evolutionary history.

- Genetic changes form the basis for cultural evolution.

- Cultural transmission is generally conformist.

- Human preferences are socially programmable, by which individuals internalize norms.

- Human interactions are strategic in nature.

- The brain is a decision-making organ.

- Individuals generally act rationally.

This is a good start, though

we can quibble about some of the points. Three things,

however, are for sure:

- The mind is a decision-making organ.

- The mind is what the brain does.

- Human action, including social interaction, is determined by these decisions.

Psychology as a Social Science

Psychology is also a social

science, because humans are social beings as well as

intelligent beings. We live in groups, rather than in isolation,

and we cooperate and compete and make exchanges with each other

on a daily basis. Our individual mental lives take place in this

social context, and so we have to understand it in order to

understand what we think, and what we do. Accordingly,

psychology must be concerned with a number of problems:

- Social Interaction: how individuals relate to each other in the ordinary course of everyday living.

- Social Influence: how other people, as individuals and in groups, influence the individual person's experience, thought, and action; and, reciprocally, how individuals influence the groups of which they are part.

- Social Cognition: in general, social psychologists take the position that important social interactions are cognitively mediated: that the way we behave with each other is determined by our knowledge and beliefs about ourselves, others, and the situations in which we encounter them. Accordingly, psychology is interested in how we form impressions and make judgments concerning ourselves, others, and social situations, and how we encode and retain social knowledge in memory. The repertoire of knowledge and skills that we use to navigate the social world is sometimes called social intelligence.

- Cultural Psychology: psychologists are also interested in the impact of sociocultural factors on individual cognitive processes -- e.g., do people in different cultures think differently?

- Emotion and Motivation: for largely historical reasons, the study of emotion and motivation has largely been the province of social psychologists (although this situation is changing, and emotion and motivation are emerging as independent sub-disciplines within psychology, much as cognition is now).

- Personality: psychologists are also interested in

individual differences in mental functioning, the origins of

these differences, and their expression in social behavior.

"No man is an island", as the English poet John Donne put

it. These individual differences are usually manifested in

social situations, or at least become apparent in social

comparisons.

In general, while cognitive

psychology studies mind in the abstract, social psychology

studies mind in action, or mind in social

relations -- how beliefs, attitudes, and other mental

states translate into interpersonal and inter-group behavior.

Psychology as a Biological Science

The brain is the

physical basis of the mind, and so psychology must also be a biological

science. The attempt to understand how the mind works

naturally leads to questions concerning the biological basis of

mental life -- the anatomical structures and physiological

processes involved in cognition, emotion, and motivation.

Accordingly, psychologists are interested in a number of

biological topics, including:

- Neuroanatomy: the structure and organization of the nervous system.

- Neurophysiology: the neural processes involved when we perceive an object, shift our attention, remember something, speak, listen, write, or read to another, feel sad or happy, hungry or thirsty, dream, and hallucinate.

- Molecular and Cellular Biology: how neurons work, and how they communicate with each other. In particular, because memory is recorded in connections among neurons, psychologists are interested in the molecular and cellular changes that accompany learning, remembering, and forgetting.

- Endocrinology: the nervous system is one way that

various parts of the body communicate with each other; the

endocrine system is another. In particular, psychologists

are interested in how hormonal changes affect mental

functioning, and how mental processes, operating through the

nervous system, might affect the body's hormonal environment

-- a topic known as psychoneuroendocrinology.

- Immunology: It is also widely held that stress and other emotional states, again operating through the nervous system, can affect the body's vulnerability and response to disease -- a topic known as psychoneuroimmunology.

- Genetics and Evolutionary Biology: the field of behavior genetics is concerned with hereditary influences on mental and behavioral processes, while the field known as evolutionary psychology, a successor to sociobiology, is concerned with the evolutionary origins of human experience, thought, and action.

Interestingly, modern biology

has an expressly social aspect, ecology, which

is concerned with the study of interacting groups of

organisms, such as species, each of which occupies its own

environmental niche. Ecology is concerned with the organism's

adaptation to its environment, and its relations with other

organisms. For humans, mental processes play a crucial role in

adaptation and interaction. For this reason, psychology may be

viewed as part of an interdisciplinary field known as human

ecology.

Psychology as a Physical Science

Because mental life

is a result of the activity of the brain, and the brain in turn

is an electrochemical system, psychology is also in some sense a

physical science.

- In fact, some of the earliest scientific psychologists

were interested in psychophysics, which

traces the relations between the physical properties of

stimuli and the psychological properties of the

sensory-perceptual experiences to which they give rise.

- More generally, knowing how the nervous system works as a

physical system can help us to understand how the mind

works. Consider, for example, the importance of optics, a

branch of physics, for understanding our sense of vision.

- More recently, advances in biophysics have led to the development of brain-imaging technologies, such as PET (positron-emission tomography), MRI (magnetic resonance imaging), and MEG (magnetoencephalography), which allow us to see the brain at work while subjects perform various mental tasks.

Levels of Explanation

The idea of psychology as a physical science, at least in part, raises the question of reductionism -- the idea that the principles of psychology can be reduced to principles of physical science. Such a reduction would allow us to eliminate reference to such "folk-psychological" terms as belief, attitude, and the like, and explain behavior solely in terms of neural functions. The question is whether knowing the physical state of a person's brain will allow us to predict his or her thoughts, feelings, desires, and actions.

The

doctrine of reductionism was succinctly stated in a quotation

attributed to Ernest Rutherford (1871-1937), a British physicist

who won the 1908 Nobel Prize (ironically, in chemistry!) for his

work on radiation:

There is only one science, and it is physics; all the rest is stamp collecting.

Reductionism is particularly

favored among physicists and psychologists and philosophers who

might be diagnosed as suffering from "physics envy", and it

remains a topic of considerable debate among psychologists and

philosophers.

Here's a nice example of biological reductionism. Zietsch et al. (2015) reported a genetic study of marital infidelity -- what the biologists euphemistically call "extra-pair mating". In a group of more than 7,000 twins and siblings, they calculated that about 53% of the variability in infidelity was due to genetic factors (62% for men, 40% for women), and the rest due to environmental factors. Moreover, genetic typing of a subsample of the subjects indicated that infidelity was associated with the presence of any of five variants (technically, single nucleotide polymorphisms) of a particular gene, known as AVPR1A. Now, Zietsch et al. were very careful not to be reductionistic. They pointed out that genetic and environmental variance were split about 50-50 (when the two sexes are combined). And they also pointed out that, while genes might contribute to the motive to be unfaithful, the environment provides the opportunity to do so. Nevertheless, some commentators immediately jumped to the conclusion that, when it comes to marital infidelity, "our genes make us do it". For example, writing in the New York Times, Richard Friedman, a prominent psychiatrist, wrote the following ("Infidelity Lurks in Your Genes", New York Times, 05/24/2015):

We are accustomed to thinking of sexual infidelity as a symptom of an unhappy relationship, a moral flaw or a sign of deteriorating social values.... But... it turns out that genes, gene expression and hormones matter a lot.... We have long known that men have a genetic, evolutionary impulse to cheat, because that increases the odds of having more of their offspring in the world. But now there is intriguing new research showing that some women, too, are biologically inclined to wander, although not for clear evolutionary benefits. Women who carry certain variants of the vasopressin receptor gene are much more likely to engage in "extra pair bonding," the scientific euphemism for sexual infidelity.... For some, there is little innate temptation to cheat; for others, sexual monogamy is an uphill battle against their own biology.

In the final analysis, however, psychology links three different levels of the explanation of behavior: the psychological level, emphasizing the individual's mental states; the sociocultural level, emphasizing social structures and processes external to the individual; and the biophysical level, emphasizing physicochemical structures and processes. The psychological level of explanation has links "up" to the sociocultural level as well as links "down" to the biophysical level, but psychologists must favor explanations of behavior that refer to the individual's mental states.

To return to the example of suicide:

- At the psychological level of analysis, psychologists explain behavior in terms of beliefs, feelings, and desires, as described earlier.

At the sociocultural level of analysis, however, it may be that suicidal behavior can be influenced by social and cultural forces.

- For example, in World War II, Japanese kamikaze pilots obeyed the orders of their superior officers.

- And the members of the People's Temple in Jonestown, Guyana (originally based in Oakland) obeyed the orders of Jim Jones, their cult leader, to "drink the Kool-Aid", leading to the mass suicide known as the "Jonestown massacre".

- Or, for that matter, the perpetrators of the terror attacks of September 11, 2001, ostensibly done in the name of Islam.

At the biophysical level of analysis, suicidal behavior may be influenced by genetic predispositions (if, for example, suicide ran in families), or by certain neurotransmitters or hormones.

- For example, identical twins are more alike in their

suicidal tendencies than are fraternal twins, suggesting

that there is a genetic (as well as an environmental)

predisposition to suicide (there is also an environmental

component to suicide, as we'll discuss later in the

lectures on Psychological

Development).

- Suicide also seems to be related to a disorder in the serotonin system in the prefrontal cortex of the brain (see "Why? The Neuroscience of Suicide" by Carol Ezzell, Scientific American, 02/03).

Suicide is very complex. For an up-to-date overview of the literature, see Suicidal: Why We Kill Ourselves (2018) by Jesse Bering. And if you're having suicidal thoughts, call the National Suicide Prevention Lifeline at 1-800-273-8255.

Each of these levels of analysis is appropriate, and is favored

by particular disciplines (sociologists and anthropologists

prefer the sociocultural level of analysis, while geneticists

and neuroscientists prefer the biophysical level). A biophysical

analysis is not superior to a psychological or a sociocultural

analysis; the levels are just different. No level is

more legitimate than any other, and none carries any special

privilege.

However, the three levels of analysis are almost certainly interrelated. For example, a discipline known as sociobiology (a term coined by the evolutionary biologist E.O. Wilson) attempts to account for various sociocultural phenomena directly in terms of biological processes such as evolution by natural selection.

From a psychological point

of view, however, the processes studied at the sociocultural and

biophysical levels of analysis generally find their pathways to

the individual person's behavior through the psychological

level. For example, a person's suicidal behavior may reflect his

belief in the authority of a charismatic leader or the

righteousness of a cause (Japanese kamikaze pilots

during World War II, the residents of Jonestown, Guyana, the

suicide bombers of the Middle East, or the terrorists of

September 11, 2001 come to mind). Alternatively, his feelings

of depression may be the product of genetic predisposition, or

engendered by certain hormonal changes.

When all is said and done,

however, psychology, as the science of mental life, includes all

three levels of analysis, and tries to link them together by

asking the following sorts of questions:

- How does the mind work?

- What is the relationship between mind and body?

- What is the relationship between the individual and society?

Psychological explanations of

behavior are always at the level of the individual's mental

state, but other levels can also be of interest for what they

tell us about the mind -- the sociocultural matrix in which the

individual resides, and the biological bases of mental life.

The Philosophical Backdrop for Modern Psychology

The questions about mind addressed by scientific psychology have their origins in age-old philosophical questions. These have been summarized as follows by the philosopher Thomas Nagel:

- How do we know the world?

- How do we know the contents of another person's mind?

- What is the relation between mind and body?

- How do words acquire meaning?

- Do we have free will?

- What is good?

- What is just?

- What happens when we die?

- What is the meaning of life?

The same

sorts of questions were posed in another way by the artist Paul

Gauguin (1848-1903), in the title of one of his famous "Tahitian"

paintings: Where do we come from? What are we? Where are we

going? (1897) Note the baby on the right-hand side of the

painting, with the elderly woman on the left.

Historically, psychology is an offshoot

of philosophy, addressing these philosophical questions about

the mind with the tools of empirical science. (Psychology is an

offshoot of physiology as well, as some of the earliest

scientific psychologists worked on problems of sensation; but

that is another story.)

A Comic-Book History of the Philosophy of Mind

It should be understood that there was a time when nobody, not even philosophers, was asking questions about the nature of the mind. Before the 9th century BCE, questions about mental life weren't discussed by philosophers, at least in the West. Julian Jaynes, in his very provocative book, The Origins of Consciousness in the Breakdown of the Bicameral Mind (1976), notes that there are very few references to consciousness or mental life in early literature (e.g., the Iliad and the Odyssey). To the extent that characters in this ancient literature had thoughts, feelings, and desires, these were not mental acts of the person, but rather things put into his or her mind by the gods.

Around the 6th century BCE, things began to

change. Characteristic of the philosophical tradition of the

"Golden Age" of Greece (Socrates, c. 469-399 BCE; Plato, c.

427-347 BCE; Aristotle, 384-322 BCE) was the recognition that

people's thoughts, feelings, and desires were their own. This is

the origin of consciousness to which Jaynes refers -- the point

at which people became aware of the contents of their own minds

as such, and also aware of their ability to control these

contents themselves. Similar things were happening in Eastern

philosophy, by the way: for example, Buddha taught that ideas

came from sensations, while Confucius taught that individuals

could think for themselves. These were novel, revolutionary

ideas at the time.

This philosophical tradition

developed further in the West -- first in the hands of certain

philosophers of the medieval Christian Church (e.g., Augustine

of Hippo, 354-430; Thomas Aquinas, 1225-1274), and later by the

philosophers associated with the Enlightenment of 17th and 18th

century Europe. The Enlightenment philosophers were particularly

interested in the debate over the relation between knowledge and

experience. On this question, there emerged two fundamental

points of view which still divide philosophers, and

psychologists, today.

- The rationalist tradition, also known as nativism, promoted by Rene Descartes (1596-1650) and Kant, taught that at least some knowledge was independent of experience.

- By contrast, the empiricist tradition associated with John Locke (1632-1704), David Hume (1711-1776), and George Berkeley (1685-1753) taught that all knowledge comes through experience.

For highly readable introductions to Enlightenment philosophy, see:

- The Story of Philosophy: The Lives and Opinions of the Greater Philosophers by Will Durant (1st Ed., 1926; 2nd Ed., 1962). This is the classic one-volume history of philosophy -- and you've gotta love the subtitle.

- The Dream of Enlightenment: The Rise of Modern Philosophy (2016) by Anthony Gottlieb, the second volume in his history of philosophy (the first volume, The Dream of Reason, covers the period from the ancient Greeks to the Renaissance).

These and other Enlightenment philosophers posed questions that psychologists now try to settle scientifically, and we will talk about them from time to time in this course. But the Enlightenment philosophers were not ready to pursue a science of mental life. In large part, we owe this reluctance to the doctrine of dualism proposed by Descartes at the very beginning of the Enlightenment period. Descartes held that mind and body were different substances. For example, in contrast to the body, the mind occupied no space. Thus, the argument went, the mind can't be measured; and because science depends on measurement, a science of the mind was impossible.

This position was undercut in the 19th

century, with the development of classical psychophysics.

Ernst Weber (1840) and Gustav Fechner (1860) measured the

relations between physical stimuli and the corresponding sensory

experience -- demonstrating, in the process, that sensory

experience was not only measurable but lawful. At roughly the

same time, Hermann von Helmholtz (1856-1866) was conducting

experimental investigations into the mechanisms of spatial

perception.
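As a brief illustration of what "lawful" means here, the relations usually credited to Weber and Fechner can be written as follows. The symbols are conventional textbook notation rather than anything taken from the text above: I is stimulus intensity, ΔI is the just-noticeable difference, I_0 is the absolute threshold, S is the magnitude of sensation, and k and c are constants that depend on the sensory modality.

```latex
% Weber's law: the just-noticeable difference is a constant fraction of the baseline intensity
\frac{\Delta I}{I} = k

% Fechner's law: sensation grows with the logarithm of intensity relative to the absolute threshold
S = c \, \log\!\left(\frac{I}{I_0}\right)
```

On this account, equal ratios of physical intensity correspond to equal steps of sensation, which is the sense in which sensory experience could be measured.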

In addition, Franciscus Donders (1868) showed that mental processing could be measured in terms of the time it takes a person to perform a mental operation -- introducing the reaction time or response latency methodology which forms such an important part of modern scientific psychology.
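The logic usually attributed to Donders can be sketched as simple arithmetic: if one task requires everything another task does plus one additional mental operation, the difference in mean reaction time estimates how long that operation takes. The sketch below is only an illustration of this subtraction idea; the reaction times are made-up values, and the names `simple_rt_ms`, `choice_rt_ms`, and `subtractive_estimate` are hypothetical.

```python
from statistics import mean

# Hypothetical reaction times in milliseconds (illustrative values, not Donders' data).
simple_rt_ms = [200, 210, 190, 205, 195]  # task A: respond as soon as any stimulus appears
choice_rt_ms = [290, 310, 300, 295, 305]  # task B: identify the stimulus, then choose a response


def subtractive_estimate(with_stage, without_stage):
    """Estimate the duration of the extra mental stage as the difference in mean RT."""
    return mean(with_stage) - mean(without_stage)


extra_stage_ms = subtractive_estimate(choice_rt_ms, simple_rt_ms)
print(f"Estimated duration of the added mental operation: {extra_stage_ms:.0f} ms")
```

The estimate is only as good as the assumption that the two tasks differ by exactly one stage and are otherwise identical, an assumption that has to be argued for each pair of tasks.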

Similar methods were eventually applied to the so-called "higher" mental processes -- including memory, thought, and any other mental function that went beyond immediate sensory-perceptual experience. For example, Hermann Ebbinghaus (1885) invented the nonsense syllable (strings of letters consisting of a consonant, a vowel, and a consonant, such as TUL, that are pronounceable but meaningless) in order to study the formation of associations between ideas -- and, in the process, showed quantitatively how memory was related to experience. At about the same time, Mary Whiton Calkins (1896) invented the paired associate technique, consisting of one nonsense syllable paired with another, to the same purpose. Ivan Pavlov (1898), a physiologist working in Russia, and Edward L. Thorndike (1898), a psychologist working in the United States, pioneered the study of learning in animals. In this way, in the latter quarter of the 19th century, a science of the mind began to emerge.

In the 20th century, psychology broke away from both philosophy and physiology, and established itself as an independent science. For most of this century, psychological and philosophical analyses of mind proceeded somewhat independently. This was partly due to the so-called "behaviorist revolution" in psychology, which began in the late teens and 1920s. The behaviorists, as their very name implies, studiously avoided any reference to mental states (because they can't be publicly observed), and confined their analyses to tracing the relations between observable physical stimuli, and observable behavioral responses.

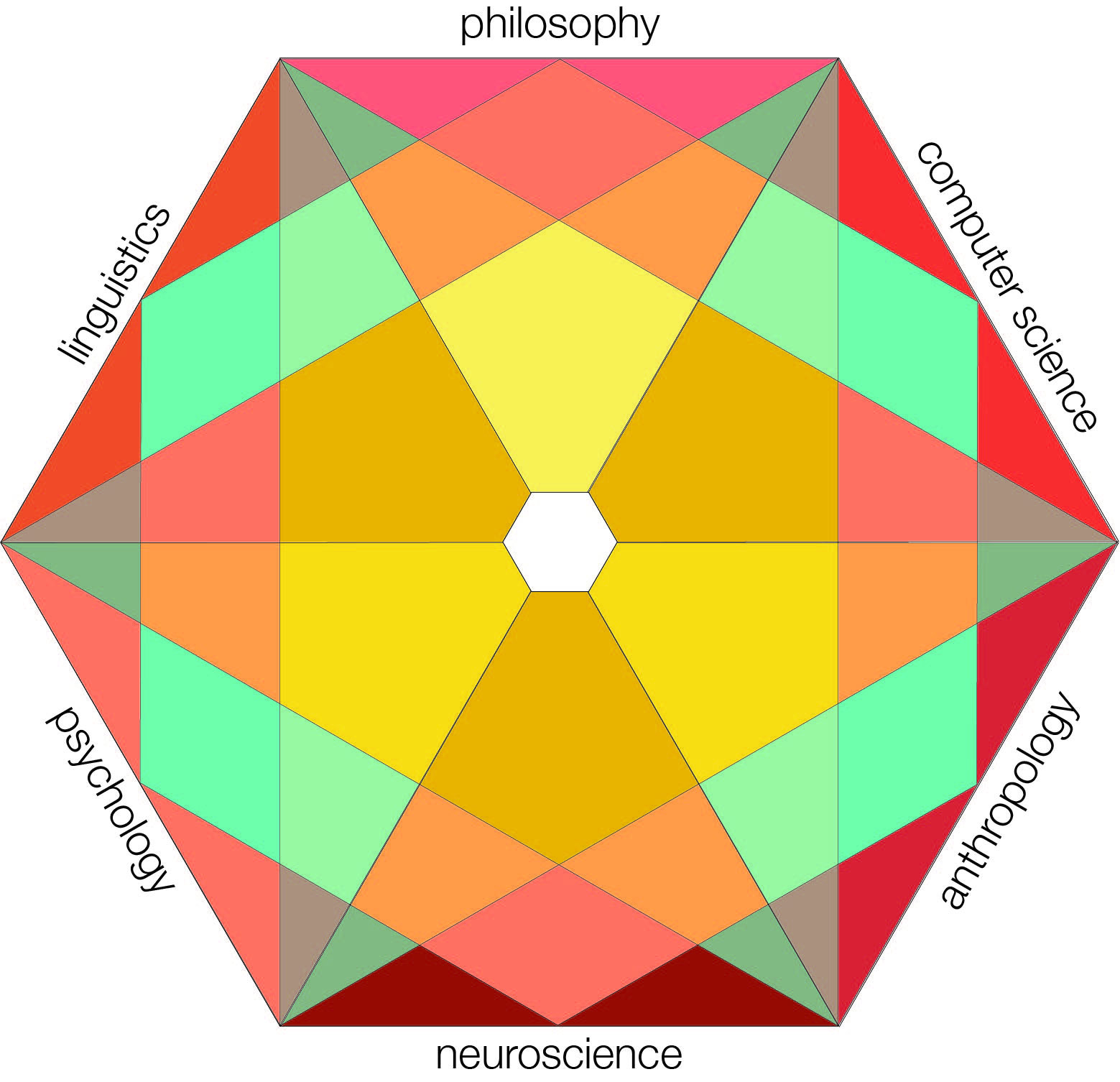

But questions about mental life didn't go away. They returned to the forefront in the 1950s and 1960s, when the so-called "cognitive revolution" reinstated mind as a -- some would say the -- proper subject matter for psychology. Now, psychologists and philosophers work together, along with linguists, computer scientists, neurologists, and anthropologists, in the interdisciplinary field known as cognitive science.

The Relation of Psychology to Philosophy

Modern philosophy can be divided into four main branches. Logic is concerned with the formal principles of reasoning, and the criteria by which judgments and inferences are to be held valid. Aesthetics has to do with the nature of beauty and art. Ethics has to do with questions of good and evil, moral duty, and obligation. Metaphysics has to do with the fundamental nature of being and reality.

Metaphysics, in turn, has three main branches: ontology, which is concerned with the nature of existence; cosmology, which is concerned with the nature of the universe; and epistemology, which is concerned with the nature of knowledge.

Each of these branches of philosophy has its psychological counterpart.

- Obviously, logic and epistemology are closely related to psychology as a cognitive science, because both are concerned with the nature of knowledge, and how knowledge is acquired, represented, and used.

- Ethics relates more to psychology as a social science, because both are concerned with the relations between individuals and groups; cognitive psychologists also study the processes by which we make judgments about what is good and what is just.

- Aesthetics also has a cognitive component to it, because beauty is something which is perceived; but also a social component, as the standards for beauty and artistic character vary from time to time and place to place.

In addressing questions of mind (or, for that matter, any other question), philosophers use three main methods: introspection, or the detailed inquiry into their own experiences and beliefs; linguistic analysis, or the meanings of words and the ways in which they are used in language; and logical argument, based on principles developed in the study of rational judgment.

There is much to be gained by such efforts. However, even philosophers realize that they are not necessarily adequate to the task of understanding and explanation. In the first place, introspection can be biased by inaccuracies and distortion in perception and memory. Moreover, introspection can only reveal what is consciously accessible, not what might be going on outside of phenomenal awareness. Moreover, there is a difference between the principles that might be postulated on the basis of philosophical principle and those that actually govern human experience, thought, and action. For example, philosophers sometimes assume that people follow the principles of normative rationality when making judgments. That is, they engage in careful calculation of probabilities, according to mathematical logic. However, people are generally poor judges of the probabilities of events. To take another example, philosophers sometimes assume that people follow a principle of utilitarianism in their behavior, maximizing pleasure and minimizing pain; but this tendency is reversed in individuals who are depressed or masochistic.

Like the universe as a whole, the mind cannot be understood by thought and reason alone. Just as astronomers ask empirical questions about cosmology with the tools of their science, so do psychologists ask empirical questions about the mind. The characteristic features of the scientific method in psychology are the systematic observation of events under controlled conditions; observations are collected in formal experiments which test explicit hypotheses derived from some theory; observations derived from these experiments are expressed in quantitative terms, which are then subjected to statistical tests to determine the validity of the investigator's inferences. For more details, see the corresponding Lecture Supplement, "Beyond Impressions: Descriptive and Inferential Statistics".

The Essence of Science

The essential methods of science, whether it's a science like physics or a science like psychology (or, for that matter, a science like sociology) began to emerge about 1000 years ago. In fact, one writer has called the scientific method "The Best Idea of the Millennium" ("Eyes Wide Open" by Richard Powers, 04/18/99). Here's a capsule history of the development of the scientific method, partly inspired by Powers' article.

For a more thorough analysis of the scientific method, see The Scientific Method: An Evolution of Thinking from Darwin to Dewey by Henry M. Cowles (2020). The essence of the scientific method is often presented as a sequence of five steps: observation, hypothesis, prediction, experiment, confirmation. But as Jessica Riskin, a historian of science at Stanford University, points out in her review of Cowles's book, you could make a reasonable case for almost any of the 120 permutations of these five steps ("Just Use Your Thinking Pump", New York Review of Books, 07/02/2020). Although the scientific method can be traced back to Bacon and Newton in the 17th century, the term "scientist" itself is very young, having been introduced only in 1834 by William Whewell -- and it didn't catch on until much later in the 19th century (the British Association for the Advancement of Science was founded only in 1831). Before that, people like Newton and Galileo, and even Darwin, thought of themselves as natural philosophers, and if British natural philosophers were really famous they were members of The Royal Society for Improving Natural Knowledge, which received its royal warrant in 1663. Cowles concludes that "The rise of 'the scientific method' was less a success than a tragedy", because its "monopolistic claim on transcendent truth" (Riskin's words) set science apart from the humanities.

The Shift from Authority and Intuition to Observation

The Enlightenment of the 17th and 18th centuries gave rise to a Scientific Revolution in which divine revelation and arguments from authority and intuition were supplanted by the search for universal principles through systematic observation, controlled experimentation, and the mathematization of universal laws (think of Galileo and Newton).

Islam and the Scientific Revolution

Abu-Ali Al-Hasan Ibn al-Haythem (known in the West as Alhazen), an Islamic scholar born c. 965 AD in Basra (then a city in Persia, now a city in Iraq) and died c. 1040 in Cairo, Egypt, resolved a dispute that was itself almost a century old about the nature of vision. Euclid, Ptolemy, and other ancient authorities had argued from reason that light radiated from the eye, and fell on objects, allowing them to be seen. Aristotle, in contrast, argued that light was radiated by objects and fell on the eye. Both had perfectly reasonable arguments favoring their views, but nobody adduced any evidence for them (Bertrand Russell somewhere noted that Aristotle held the view that women had fewer teeth than men; although he was married twice, it apparently never occurred to him to look in either wife's mouth to see if this was true). Alhazen performed the following simple experiment (among others): he asked observers simply to look at the sun, which burned their eyes (so don't try this at home!). This simple experiment demonstrated conclusively that light was radiated (emitted or reflected) by objects, not by the eyes. Alhazen's treatise on optics was translated into Latin in the 13th century, as part of the revival of interest in ancient learning known as scholasticism. European appreciation of his work on the geometry of vision led eventually to the invention of techniques for visual perspective in painting, pioneered by the Italian artist Giotto (1267-1337). In recognition of his achievements in mathematics and physics, a crater on the Moon is named in his honor. But for our purposes Alhazen's most important contribution was his appeal to empirical evidence, rather than to authority, abstract theory, or rational argument, to settle disputes about the nature of the world.

Controlled Experiment

Roger Bacon (1214-1294), an English scholastic philosopher, read Alhazen's work in translation, and developed his point of view into a full-blown philosophy of science based on empirical evidence derived from controlled experiments, in which variables are manipulated one at a time to see which have an effect on some outcome. Aristotle had argued against such experiments, on the ground that the world must be understood as a whole, not in terms of particulars. In his Opus Majus (1267), Bacon discussed four causes of ignorance:

- appeals to incompetent authority;

- influence of custom;

- opinions of the ignorant crowd;

- displays of wisdom that cover up what we don't know.

Vaccines and Autism: A Case Study in Bad Science

McCarthy's alarm, in turn, was stimulated by a 1998 paper by Wakefield et al. published in Lancet, a leading British medical journal. They reported 12 children "with a history of normal development followed by a loss of acquired skills, including language", and various gastrointestinal problems. Nine of the children were subsequently diagnosed with autism, one as psychotic, and two with encephalitis. All had received the measles-mumps-rubella (MMR) vaccine. On the basis of these cases, Wakefield et al. suggested that "there is a causal link between measles, mumps, and rubella vaccine" and autism.

The first thing to say is that subsequent research, with a larger group of subjects, has shown no association between the MMR vaccine and autism (or any other neurodevelopmental disorder). None. Nil. Nada. Zip. Zilch. The definitive study was reported by Jain et al. (JAMA, 2015), based on a database of 34 million people enrolled in American health insurance plans. They classified 95,727 children as to whether they received the MMR vaccine or not, how many doses of the vaccine they received, and whether or not they had an older sibling with autism, and then looked at the incidence of autism. Having received the MMR vaccine had no effect on the risk of autism -- even among children with an older sibling who had the diagnosis, who therefore were at higher risk for autism to begin with. There's just nothing there.

That's how science is supposed to go, as we refine our understanding. All well and good, but (and it's a big "but") the Wakefield study should never have been published in the first place. Why? Because it lacked even elementary controls.

If we want to say that the MMR vaccine causes autism, we need to take one group of children who got the MMR vaccine, and another group -- a control group -- who didn't, and compare their rates of autism. That's the sort of thing that was done in the followup studies, to disprove the idea that there is any association between the vaccine and the illness. None. Nil. Nada. Zip. Zilch. Wakefield et al. didn't even try to evaluate a control group. They just found 12 kids with a particular syndrome, noted that they had all received the MMR vaccine -- not too surprising, if you think about it, since almost every child in the US and the UK receives the vaccine -- and then concluded -- not just erroneously, but illegitimately -- that there might be a causal link between the one and the other.
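The "not too surprising" point can be made concrete with a little arithmetic. The sketch below uses purely hypothetical vaccination coverage figures (90% and 95%, chosen for illustration and not taken from Wakefield et al. or from any followup study) and a hypothetical helper, `prob_all_vaccinated`. It asks how likely it is that 12 children, selected for reasons that have nothing to do with the vaccine, would all turn out to be vaccinated anyway.

```python
def prob_all_vaccinated(coverage: float, n_cases: int) -> float:
    """Probability that every one of n_cases children is vaccinated,
    assuming vaccination status is independent of how the cases were selected."""
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must be between 0 and 1")
    return coverage ** n_cases


# Hypothetical coverage rates, for illustration only.
for coverage in (0.90, 0.95):
    p = prob_all_vaccinated(coverage, n_cases=12)
    print(f"coverage = {coverage:.2f}   P(all 12 vaccinated) = {p:.2f}")
```

Under the assumed 95% coverage, the chance that all 12 children were vaccinated is a little better than one in two even if the vaccine has nothing whatever to do with the syndrome. A case series of this kind therefore carries no information about causation; only a comparison of rates in vaccinated and unvaccinated groups can do that.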

In the aftermath of the Wakefield publication, enough problems were found with the study (long story, and irrelevant to the point I'm making here) to warrant its retraction -- a relatively rare event in science. Lancet retracted the paper, and the editor of the BMJ, another leading British medical journal, declared the study fraudulent, not just wrong; 10 of Wakefield's 12 co-authors disavowed the paper; Wakefield himself lost both his academic affiliation and his license to practice medicine. So, science corrected itself.

But the point here is that the absence of even an elementary comparison group -- never mind the absence of a representative sample of vaccinated children -- means that there never was any valid scientific evidence for an association between MMR and autism. Which means, in turn, that the study should never have been published in the first place.

Why the Wakefield study was published, given these elementary considerations, is something of a puzzle, and the fact that it was ever published in the first place is something of a scandal. There were clearly major failures in editorial handling of the manuscript. But the bottom line is this: you can't make claims that X causes Y, or even that X is associated with Y, without a proper control or comparison group.

Parsimony

William of Ockham (1290-1349), another English scholastic, promoted another scientific principle, which has come to be known as the Law of Parsimony, or "Ockham's Razor". Given a number of different explanations for a particular outcome, Ockham argued, the simplest explanation is to be preferred over more complicated ones. Ockham fully realized that the universe might be very complex; what he argued against was unnecessary theoretical complication.

"Experimental Philosophy"

With the principles of empirical observation, controlled experiment, and parsimony in hand, the stage was set for the revolution in "experimental philosophy" announced by Francis Bacon (1561-1626; apparently no relation to Roger), another English philosopher. In summing up the scientific method, Bacon sought to banish the four "idols of the mind":

- the idols of the tribe, or perceptual illusions;

- the idols of the cave, or personal biases;

- the idols of the marketplace, or linguistic confusions;

- the idols of the theater, or philosophical dogma.

The new science was exemplified by Galileo Galilei (1564-1642), who used the telescope to confirm Copernicus' theory that the Earth revolved around the Sun; William Gilbert (1540-1603), who discovered magnetism; Johannes Kepler (1571-1630), who discovered that the planets travel in elliptical, not circular, paths; and William Harvey (1578-1657), who discovered the circulation of the blood.

Utility of Knowledge

These facts revealed by the new experimental philosophy were all very interesting, if at the time they were not particularly useful. But Francis Bacon also promoted the notion of practical knowledge -- the use of scientific knowledge to improve the condition of mankind. From Bacon we get the idea that "knowledge is power" -- an idea that lies at the heart of our post-industrial "knowledge economy" based on information and information services. But we wouldn't get there for a long time (first we had to have an industrial revolution).

Induction

Francis Bacon also held the view that science progresses by the steady accumulation of empirical facts derived from empirical observation. In this inductive method, facts come first, and theory comes later, induced from the facts, and explaining the facts. To have a theory first smacked of the argument from authority or intuition that both Bacons, Roger and Francis, fought against. Facts, impartially collected, would speak for themselves. Once a theory emerged from the data, however, it was never taken as the last word. It was always subject to continued verification by new evidence, which would confirm or deny it.

The Hypothetico-Deductive Method

The Baconian (and Newtonian) emphasis on observation and induction lasted through the 19th century, and well into the 20th. For example, Charles Darwin (1809-1882) induced the theory of evolution by natural selection from observations of the geographical distribution of finch beaks and tortoise shells. But in the 20th century, Karl Popper (1902-1994) convincingly argued that induction was insufficient to guarantee true knowledge. To use his example, if all you observe are white swans, you might well conclude that all swans are white, but this conclusion would be disproved by the observation of even a single black swan (like the ones my wife photographed in Melbourne, Australia). He also pointed out that Einstein's theory of relativity had shown that Newtonian physics, which had been regarded as definitive since the 17th century, was not right. Accordingly, Popper argued that science should employ a strategy of falsification, seeking evidence that would disprove a hypothesis. Scientific theories generate specific hypotheses, which are tested against data generated by experiments designed to show that those hypotheses are false. Popper described this method as conjecture followed by refutation. The best hypothesis is the one left standing after all the alternatives have been disconfirmed. For Popper, the difference between a genuine science, like physics, and a pseudoscience, like Marxian economics or Freudian psychoanalysis, is that the propositions of physics can be falsified, while those of psychoanalysis cannot. Popper's arguments for falsification rather than verification won the day, and the logic -- or at least the rhetoric -- of falsification is part of the everyday rhetoric of science.

Popper applied this methodology even to politics, in his book The Open Society. For Popper, as Colin McGinn put it, science was the model for civilization ("Looking for a Black Swan", New York Review of Books, 11/21/02). Given the evidence of his posthumous collection of essays, All Life Is Problem Solving, it was also the model for life. For Popper, even elementary instances of learning consisted in the generation of a hypothesis, in the form of an expectation, followed by an empirical test of that hypothesis, until the organism settles on a hypothesis that cannot be falsified. Similarly, in politics, policymakers must be open to feedback and criticism from those -- the voters -- who are affected by their policies.

Lately, the proliferation of the Internet and social networking has led to the rise of a new movement in the social sciences known as "Big Data", involving data sets containing thousands of variables from millions of subjects. These data sets are then analyzed, mostly by variants of the correlation coefficient, to reveal whatever relationships may exist in the data. For the most part, the analysis of Big Data is entirely inductive. That is to say, the analysts do not approach the data with any specific hypothesis in mind, but rather, seek only to explore it to identify any relationships that exist. As with any exploratory data analysis, the results should not be taken at face value: if the criterion for significance is set at p < .05, then about 5% of the relationships tested will be "significant" just by chance, even if no real relationships exist at all. Accordingly, some way has to be found to replicate the analyses -- perhaps by splitting the sample in half and determining whether any relationships observed in one half are also observed in the other half, a procedure known as double cross-validation.
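To see why replication matters here, consider a small simulation in which there is nothing to find. The sketch below is only an illustration: the sample sizes, the variable names, and the use of the Fisher z approximation to obtain p-values are all assumptions made for this example, not a description of how any particular Big Data analysis is done. It generates an outcome and 200 predictors that are pure random noise, tests every predictor in each half of the sample, and then keeps only the relationships that turn up in both halves.

```python
import math
import random
from statistics import NormalDist


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def p_value(r, n):
    """Approximate two-sided p-value for the hypothesis of zero correlation,
    using the Fisher z transformation (an approximation chosen for simplicity)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def significant_predictors(predictors, outcome, alpha=0.05):
    """Indices of predictors whose correlation with the outcome has p < alpha."""
    n = len(outcome)
    return {j for j, column in enumerate(predictors)
            if p_value(pearson_r(column, outcome), n) < alpha}


# Hypothetical data set: every variable is independent random noise,
# so any "significant" relationship is a false positive by construction.
rng = random.Random(0)
n_subjects, n_variables = 400, 200
outcome = [rng.gauss(0, 1) for _ in range(n_subjects)]
predictors = [[rng.gauss(0, 1) for _ in range(n_subjects)]
              for _ in range(n_variables)]

# Split the sample in half and run the same exploratory analysis on each half.
half = n_subjects // 2
hits_a = significant_predictors([c[:half] for c in predictors], outcome[:half])
hits_b = significant_predictors([c[half:] for c in predictors], outcome[half:])
replicated = hits_a & hits_b  # relationships observed in both halves

print("significant in first half: ", len(hits_a))
print("significant in second half:", len(hits_b))
print("significant in both halves:", len(replicated))
```

With 200 null relationships and a .05 criterion, each half should yield roughly 10 spurious "findings", but the chance that any given one of them shows up in both halves is only about .05 x .05, so very few, if any, should survive the cross-validation.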

The hazards of a reliance on Big Data were nicely illustrated by an article by Seth Stephens-Davidowitz, an economist, who analyzed the Google database for searches related to depression ("Dr. Google Will See You Now", New York Times, 08/11/2013). He uncovered a number of potentially meaningful patterns. For example, he found that searches increase in frequency during the winter and decrease during the summer, and suggested that people prone to depression should consider moving someplace warm. Cute, but in fact, epidemiological data show that the incidence of depression in Minnesota and Wisconsin is actually lower than that in Alabama and Mississippi. Moreover, the result obscures a more important finding, of seasonal affective disorder, a form of depression already well known to psychiatrists. "Big Data" is, essentially, correlational in nature, and correlation is not causation. Warmth doesn't protect against depression: sunlight does; and it only protects against some forms of depression. But we'd never know this from an analysis of Google searches; we only know this from careful epidemiological research that tests specific hypotheses.

Science and Democracy

Robert Lawrence Kuhn, host of Closer to Truth: Science, Meaning, and the Future, a PBS television series on science, has argued that "scientific literacy energizes democracy", by fostering a stance of critical thinking, including demanding both evidence for claims made by authorities and political elites, and logical reasoning from evidence to conclusions. "Critical thinking is the essence of the scientific method. Knowing the difference between assumption and deduction, and between presumption and proof, can alter one's outlook and transform an electorate." Moreover, Kuhn argues, scientific thinking fosters diversity through "the capacity to respect pluralistic political positions -- the essence of democracy -- since members can understand that no position, not even their own, can be 'proved' to be the correct one with anywhere near absolute certainty" ("Science as Democratizer", American Scientist, 09-10/03).

As Dennis Overbye has written ("Elevating Science, Elevating Democracy", New York Times, 01/27/2009):

The knock on science from its cultural and religious critics is that it is arrogant and materialistic.... Worse, not only does it not provide any values of its own, say its detractors, it also undermines the ones we already have, devaluing anything it can't measure, reducing sunsets to wavelengths and romance to jiggly hormones. It destroys myths and robs the universe of its magic and mystery.

So the story goes.

But this is balderdash. Science is not a monument to received Truth but something that people do to look for truth. That endeavor, which has transformed the world in the last few centuries, does indeed teach values. Those values, among others, are honesty, doubt, respect for evidence, openness, accountability and tolerance and indeed hunger for opposing points of view. These are the unabashedly pragmatic working principles that guide the buzzing, testing, poking, probing, argumentative, gossiping, gadgety, joking, dreaming and tendentious cloud of activity... that is slowly and thoroughly penetrating every nook and cranny of the world....

It is no coincidence that these are the same qualities that make for democracy and that they arose as a collective behavior about the same time that parliamentary democracies were appearing. If there is anything democracy requires and thrives on, it is the willingness to embrace debate and respect one another and the freedom to shun received wisdom. Science and democracy have always been twins.

In 2017, with an American president who routinely lies, misstates facts, and prevaricates, and authorities in some political quarters speaking of "personal truth" and "alternative facts", Time magazine ran a cover story asking whether truth itself is dead (04/03/2017). Not necessarily. As Frank Wilczek (a Nobel-laureate theoretical physicist) put it: "science plays a vital role in defining the boundaries of rational discourse" because it is the best way we have of determining what is true and what isn't ("No, Truth Isn't Dead", Wall Street Journal, 06/24/2017). Wilczek goes on to state that:

In science, the meaning of "truth" is more substantial, and less susceptible to cynical manipulation. Empirical facts -- that is, experienced events -- are the gold standard of truth.... Science builds on experience, using logic and... working assumptions [like Newton's laws of motion and gravity].... Yet a hallmark of science is that we spell out and continually check our assumptions -- and, if necessary, modify them. That discipline keeps science honest, reliable and on-track.... "Truth", in the context of morality, law, and politics, is a very different concept from logical or scientific truth. In those domains, science, with its cautious attitude, can't provide all the answers. Different groups of people make different assumptions, which will sometimes lead to very different conclusions. But when any version of "truth" contradicts scientific truth (let alone empirical truth), sensible people must reject it.

The Cycle of Theory and Evidence

Verification and falsification are both forms of empiricism, but Popper reversed the traditional Baconian position with respect to the relation between theory and evidence. For Bacon, evidence came first, in the form of observations, and theory came later, by induction. For Popper, however, theory comes first: in this way, he is the culmination of a tradition of rationalism that goes back to Rene Descartes, a 17th-century French philosopher, who attempted to derive true knowledge from reason alone (and thus initiated modern philosophy). The scientist first confronts a problem, such as why the earth appears to revolve around the sun or why apples fall off trees. Then, he or she offers a number of theories, or at least hypotheses, as to why that might be the case. Then, he or she proceeds to test these hypotheses, attempting to disconfirm them, and retains as true the one hypothesis that fails to be disconfirmed. "Truth", however, is always provisional: some future observation may disconfirm even this theory. In practice, however, the relation between theory and hypothesis is more complex than this.

Where do Popper's "problems" come from? They exist in the form of puzzling observations that demand some sort of explanation. A lot of scientific research consists in an investigator publishing an observation, or conducting an experiment that is less intended to test a hypothesis than it is to "poke nature and see what happens". From such observational studies, the investigator may then suggest a theory, or at least a hypothesis (see below). He or she may also perform a verificationist test that indicates that the theory/hypothesis is provisionally true, or at least should be taken seriously by others. Later, another scientist may propose a new theory, and perform a falsificationist test that pits one theory against another. But nobody goes to the trouble of testing every conceivable hypothesis against data from an experiment specifically designed to reject that hypothesis. However much scientists embrace the Popperian logic of falsificationism at the level of rhetoric, actual scientific work involves both induction and deduction, both verification and falsification.

A reliance on empirical evidence is the defining feature of science -- more so than controlled experimentation (evolutionary biologists and cosmologists don't do it), more so than mathematics (Darwin and Mendel didn't use any beyond arithmetic). In The Knowledge Machine (2020), Michael Strevens asserts the iron rule of explanation: no matter how appealing a theory might be on other grounds, scientific theories are validated only by empirical explanation. Scientists may come up with their hypotheses in a variety of ways, including intuitive appeal or mathematical elegance, but when it comes to testing a hypothesis, the only thing that counts is the data.

By the way, about that word theory. A "theory" is often misinterpreted as mere speculation, as when creationists or proponents of "intelligent design" criticize the theory of evolution as "just a theory". But in science, a theory is an overarching explanatory framework that accounts for a large set of empirical observations, and goes beyond observable facts to make plausible inferences about facts that have not yet been observed. These inferences are hypotheses, and experiments are conducted, one way or another, to reveal new facts that will determine whether these hypotheses are correct. Evolution by natural selection is a theory. So is general relativity. Theories are never tested whole. Rather, theories generate specific hypotheses, which are then tested against empirical data.

Publication

Research unreported is research undone. It is not until scientific findings are made public, so that other investigators can confirm them, and incorporate them into their theories, or refute them (or at least set limits on them), that research is completed. In order to facilitate publication of scientific research, the first scientific journals were founded in the late 17th century -- the Journal des Savants in France (1665), and the Philosophical Transactions of the Royal Society, in England (1665). The first scientific journals devoted to psychology appeared only in the late 19th century:

- Mind (1876), founded by Alexander Bain, published mostly philosophical analyses of mental life, but did publish some psychological work -- notably William James' famous essay on "What is an Emotion?".

- Philosophische Studien (Philosophical Studies, 1881), founded by Wilhelm Wundt primarily to publish studies from his own laboratory (other departments of psychology, including Wisconsin and Berkeley, also sponsored such "house organs").

- American Journal of Psychology (1887), founded by G. Stanley Hall as part of a group of pioneering American scientific journals sponsored by Johns Hopkins University, and intended as an expressly psychological counterpart to Mind.

- Zeitschrift für Psychologie und Physiologie der Sinnesorgane (Journal for the Psychology and Physiology of the Sense Organs, 1890), founded by Hermann Ebbinghaus to publish a wider variety of studies than those produced by Wundt's laboratories. In the 19th century, scientific psychology was often referred to as "physiological" psychology, to distinguish it from merely "philosophical" work. Later, the references to "physiology" and the "sense organs" were dropped and the journal was renamed simply the Zeitschrift für Psychologie.

- Psychological Review (1894), founded by James Mark Baldwin and James McKeen Cattell, and now the "jewel in the crown" of the American Psychological Association's publication program.

Transparency

Publication is an important aspect of the transparency of the scientific method. When scientists report their empirical findings, they also describe the method by which those findings were obtained, so that other scientists can repeat what they have done and confirm or disconfirm their observations. This allows scientists to build on each other's work, so that science is a cumulative enterprise. But this also allows others to critique the experimenter's method, and find flaws that, when corrected, might yield different and more valid results. We do not have to have "been there" when the experiment was done. We can understand, and critique, the experiment solely on the basis of the experimenter's report of his method.

Replication

Scientists confirm or disconfirm each other's findings through replication -- that is, one scientist does what another scientist says he did, and sees if she gets the same result. Actually, there are two kinds of replication:

- direct replication, in which the initial study's methods are repeated as precisely as possible;

- conceptual replication, which departs from the original procedure in various ways, to test a hypothesis that is conceptually related to the original.

Even direct replications are never precisely identical to the original. An experiment conducted on subjects recruited from Stanford isn't exactly the same as the original conducted on subjects recruited from Berkeley; and one conducted in 2012 may employ subjects who are quite different from those in an original conducted in 1962 -- even if they are all college students. So "replication" is a matter of degree. What is really important is that the replication be faithful to the spirit of the original, and follow the letter of the original as closely as possible.

In the 2010s, psychology and other fields began to face a kind of replication crisis -- which is to say, a number of highly visible experiments failed to be replicated, casting doubt not just on the original studies and the researchers who conducted them, but also on the field in general (Pashler & Wagenmakers, 2012).

- In 2014, the Many Labs (ML) project attempted to replicate 13 effects reported in social psychology journals, 11 of which were successfully replicated (Klein et al., Social Psychology, 2014). Two effects, which in fact had aroused controversy when first published, failed to replicate.

- In 2015, the Open Science Collaboration (OSC; Science, 2015) reported the results of 100 attempts to replicate effects reported in 2008 in three prominent psychology journals, representing a wider sample of psychological research. Only 47% of the replications were deemed successful, in that they yielded statistically significant effects similar to the originals.

At first glance, the outcome of the OSC study would seem to impeach the validity of much psychological research, if not psychology in general, but this would be misleading (as noted by Gilbert et al., Science, 2016). For example, some of the "replications" weren't true replications, in that they departed significantly from the procedures of the original study. One study of white Americans' stereotypes concerning African-Americans was "replicated" in Italy. And a study which asked Israelis to imagine the consequences of military service was "replicated" by asking Americans to imagine the consequences of a honeymoon.

These procedural infidelities occurred in some of the ML "replications" as well. In fact, replications whose procedures were endorsed by the authors of the original study succeeded 60% of the time, compared to 15% of those whose procedures were not endorsed.

Still, 60% isn't a great hit rate, either, and it suggests that psychology does face a kind of replication crisis.

Social Science

From roughly the time of Roger Bacon onward, science has been concerned with understanding the natural world of physics and biology. Under the influence of Descartes' distinction between mind and body, the 18th-century German philosopher Immanuel Kant asserted that there could never be a science of the mind, because the mind was not a physical entity that could be measured. It was not until the mid-19th century that Weber, Fechner, Helmholtz, and other psychophysicists showed that "lower" mental processes such as sensation and perception could be subject to scientific analysis, and only in the late 19th century that Ebbinghaus and others showed that the same was true for "higher" mental processes such as memory. Also in the mid-19th century, Auguste Comte (1798-1857), a French philosopher, showed that science could reveal the workings of social groups as well as the workings of the individual mind. Based as they are on controlled observation and the testing of hypotheses derived from theory, the "social" sciences of sociology, anthropology, economics, and political science are every bit as much sciences as are the "natural" sciences like physics, chemistry, and biology.

Some philosophers of science have claimed that social science can't be real science because it isn't predictive; it can only explain things that have already happened, in a post-hoc manner. On this view, only the natural sciences are real sciences, because they entail testing predictions in experiments. This claim is bogus.

- In the first place, there are plenty of natural sciences that explain, but cannot predict. Seismology and meteorology are the classic examples: earth scientists can't predict earthquakes or tornadoes, but they have a very good understanding of how they happen.

- And while it's true that we can't manipulate the features of whole societies in order to do experiments, we can gain predictive knowledge by conducting smaller-scale experiments, as social psychologists (and some sociologists) do. Lots of economists -- just not Alan Greenspan, the Chairman of the Federal Reserve, and his company of neoclassical True Believers -- predicted the financial crisis of 2008.

The real difference between the natural and social sciences is that the phenomena of natural science are observer-independent. Galaxies, tectonic shifts, and hurricanes exist regardless of whether there is anyone to observe them. But the phenomena of social science are different, because they are observer-dependent: they are the product of human cognitive activity -- of consciousness, if you will. They don't exist unless there is an observer present to experience -- and construct -- them. Mountains and molecules have features that are intrinsic to their physics. But marriage and money depend for their existence on human attitudes: they are what they are only by virtue of agreement between conscious beings.

In this respect, economics may be an intermediate case. When Adam Smith, in The Wealth of Nations (1776), referred to the "invisible hand", he seemed to imply that the laws of economics -- of supply and demand and so forth -- are observer-independent phenomena that occur regardless of human cognitive activity. But many of the facts of economics are observer-dependent. Like currency: a dollar bill is worth a dollar only because someone says it is; as a piece of paper, it has no intrinsic, observer-independent value. And markets: you can have a capitalist economy, or a socialist economy, or a mixed economy, etc.; one economic system may be better than another, but what economy you have depends on someone's choice. Capitalism isn't in physics.

For a provocative discussion of the status of economics as a science -- a discussion that has implications for psychology -- see:

- "What Is Economics Good For?" by Alex Rosenberg and Tyler Curtain (New York Times, 08/25/2013);

- "Economics as Science" by Eric S. Maskin (New York Times, 08/29/2013);

- and the dialogue stirred by Maskin's letter (New York Times, 09/01/2013).

And for that matter, psychology may also be an intermediate case, because it is both a biological science, interested in the relations between mental states and brain activity, and a social science, interested in the relations between mental structures and processes and social structures and processes. (Psychology is primarily a social science, though, because it is fundamentally concerned with the individual's mental life: his or her knowledge and beliefs, feelings and desires.)

- As a natural science, psychology is concerned with discovering universal laws like the psychophysical laws, or George Miller's "magical number seven, plus or minus two". But even these laws are observer-dependent, because they wouldn't be true in the absence of an observer.

- As a social science, psychology is concerned with how the thoughts, feelings, and desires of the individual relate to what is going on in the world around him -- a world that is very much a social world created by human minds.

In the final analysis, however, both economics and psychology are social sciences, because the phenomena they study are essentially human constructions, and their processes can be altered by the very predictions that social scientists make.

- The financial crisis of 2008 might have been averted if policymakers had listened to dissident economists, and enforced stricter regulation on the financial-services industry.

- If I predict, based on your prior test scores, that you'll get a poor grade in Psychology 1, you might study harder just to prove me wrong.

Both cases differ from the natural sciences. In seismology and meteorology, predicting an earthquake doesn't make it happen; and if a hurricane is predicted, nothing can be done to prevent its formation. That's because earthquakes and hurricanes are observer-independent phenomena. Financial crises, and individual choices, aren't.

Psychology (on its "social" side) and the other social sciences are sometimes characterized as "soft science", in contrast to allegedly "hard" sciences like physics and chemistry. But that's a misnomer. As Theodore Lowi, a prominent political scientist, once remarked, "political science is a harder science than the so-called hard sciences because we confront an unnatural universe that requires judgment and evaluation". The same is true for psychology as a social science.

Big Science

Well into the 20th century,

most science was done by individual investigators, often

independently wealthy or supported by wealthy patrons. Galileo

essentially worked alone; so did Darwin and the Curies. But in

the 1930s and 1940s, and especially with the Manhattan Project

to build the atomic bomb during World War II, all that changed:

now much science is done by large teams of investigators