Learning

Having discussed the biological basis of mind and behavior in the brain and the rest of the nervous system, we're now in a position to discuss what it is that the mind does. To be brief, the purpose of the mind is to know the world: to form internal, mental representations of the external world, and to plan and execute responses to the objects and events we encounter there.

In terms of human information processing, the mind performs a sequence of activities:

- picking up information through sensory and perceptual processes;

- storing this information in memory;

- transforming it through thought;

- communicating it through language; and

- executing relevant actions through the skeletal musculature.

Traditionally, this activity was described in terms of the formation of associations of three types:

- between environmental events;

- between environmental events and the organism's responses to them; and

- between the organism's own actions and their effects on the environment.

Reflexes, Taxes, and Instincts

Some of these associations are innate or inborn, part of the organism's native biological endowment.

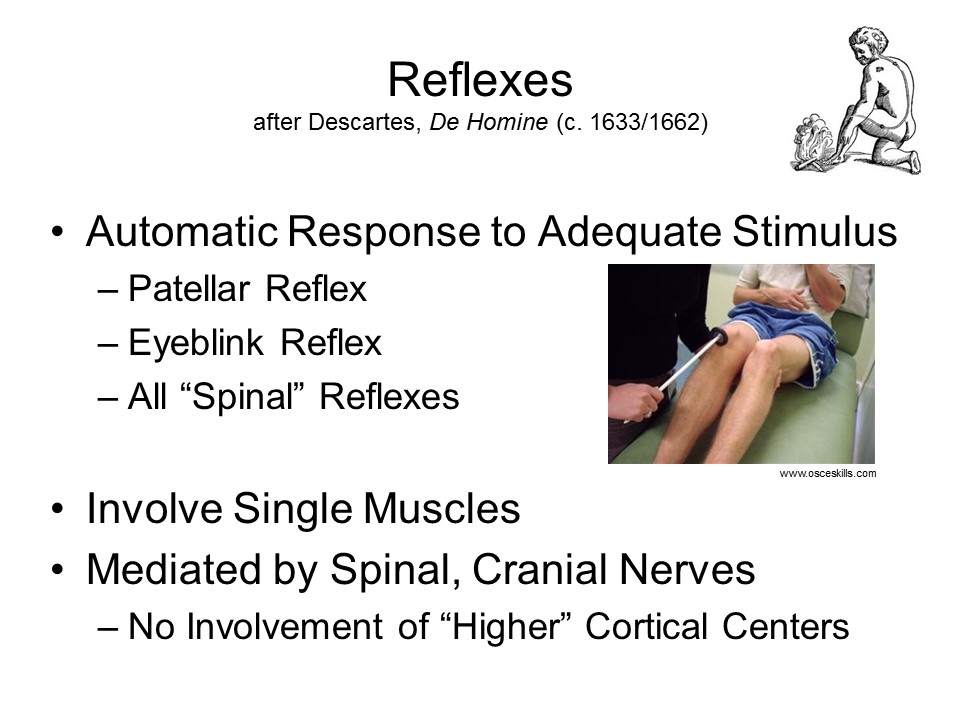

Reflexes

The reflex is the simplest possible connection between an environmental stimulus and an organismic response. Examples are:

- the patellar reflex, the "knee-jerk" reflex commonly tested during routine physical examinations;

- the eyeblink reflex, where the eye closes in response to a puff of air striking the cornea;

- the other spinal reflexes, such as those that are preserved in cases of paraplegia.

Reflexes are automatic, in that they occur inevitably in response to an adequate stimulus, and occur the first time that stimulus is presented. They do not require the involvement of the higher centers in the nervous system: they persist even when the spinal cord is severed from the brain. Most reflexes are fairly simple, but even fairly complicated activities can be reflexive in nature.

The 19th-century French physiologist Flourens conducted a series of classic studies of reflexes in the decorticate pigeon. He removed both lobes of the cerebral cortex in the bird, and then attempted to determine which patterns of behavior remained in the repertoire. Certain behaviors were preserved:

- the animal righted itself when its equilibrium was disturbed;

- it walked when it was pushed, and flew when thrown into the air;

- it would swallow when water was introduced into its beak;

- and when irritated, it would move away from the stimulus.

However, other behaviors disappeared:

- it did not flee from the irritation;

- it did not avoid obstacles placed in its path;

- it showed no voluntary action (i.e., behavior in the absence of any apparent stimulus);

- and it showed no signs of emotionality.

Thus, Flourens characterized the decorticate pigeon as a reflex machine that merely reacted to external stimulation by means of reflexes, and displayed no spontaneous or self-initiated behavior.

Human beings also come "prewired" with a repertoire of reflexes: automatic responses to stimulation that appear soon after birth, before the infant has had any opportunity for learning.

Some of these are reflexes of approach, which are elicited by weak stimuli and have the effect of increasing contact with the stimulus.

- For example, if the sole of the foot is touched, the response will be "plantarflexion": the toes will stretch and turn downward.

Other stimulus-response patterns are reflexes of avoidance, which are elicited by intense or noxious stimuli, and have the effect of decreasing contact with the stimulus.

- If the palms or soles are scratched, pinched, or pricked, there will be spreading of the fingers or toes, and withdrawal of the hands or feet (in the case of the feet, the toes will also show "dorsiflexion", or turning upward -- the "Babinski reflex").

A very interesting set of behaviors is the stepping reflex. Infants appear to "learn to walk", but this appearance is deceiving. If the infant's body is supported, and it is moved forward along a flat surface, it will show synchronized stepping. If its toes strike the riser of a set of stairs, it will lift its feet. Neonates don't need to learn to walk: they can't walk yet simply because their skeletal musculature has not matured enough to support their weight.

Despite the large repertoire of reflexes, infants do not show much initiation of directed activity. The behaviors of the young infant are pretty much confined to reflexes, which are gradually replaced with voluntary action.

Reflexes are an important part of the organism's behavioral repertoire, but they have their limitations.

- They permit only a small number of responses to be elicited by stimulation.

- They do not permit action to be controlled by internal goals or intentions.

With subsequent development, reflexes tend to disappear. But they are not abolished entirely: the knee-jerk and rooting reflexes can be elicited in adults; and adult paraplegics display a full repertoire of reflexes. However, adult behavior is dominated by voluntary action, and reflexes slip into the background.

Reflexes involve relatively small portions of the nervous system. In principle, the reflex arc requires only three neurons -- though in practice, spinal reflexes involve entire afferent and efferent nerves, as well as the spinal cord. Other innate stimulus-response connections consist of more complicated action sequences that involve larger portions of the nervous system and of the skeletal musculature.

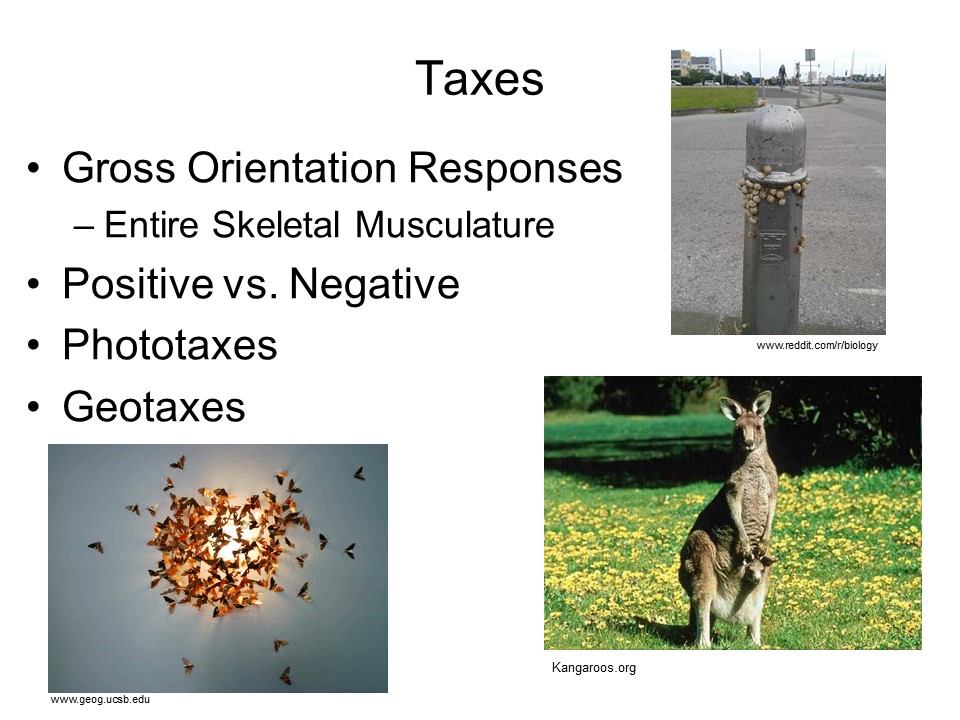

Taxes

A taxis (plural, taxes) is a gross orientation response: after presentation of a stimulus, the whole organism turns and moves. Taxes come in two forms:

- In positive taxes, the organism moves toward the stimulus. A common example is a moth flying into a candle.

- In negative taxes, the organism moves away from the stimulus. A common example is a cockroach scurrying out of the light (actually, it is responding not to the light but to the slight breezes created by movement, such as a human entering a room to turn on the light).

Phototaxes involve responses to light; geotaxes involve responses to gravity (these can be observed in worms and ants as they move up and down inclines).

There are actually lots of other taxes, which can be observed mostly at the cellular level:

- Chemotaxes, or responses to the presence of certain chemicals in the environment;

- Thermotaxes, or responses to warmth or cold;

- Rheotaxes, or responses to the movement of fluids;

- Magnetotaxes, or responses to magnetic fields; and

- Electrotaxes, or responses to electrical fields.

Taxes are not simple reflexes, because they involve the entire skeletal musculature of the organism. But they are still innate, and involuntary.

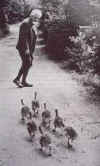

Taxes and Reflexes in the Neonate Kangaroo

The behavior of the newborn kangaroo illustrates an effective combination of reflexes and taxes. The kangaroo, like all marsupials (e.g., the opossum), has no placenta. The female gives birth after one month of gestation, and carries the developing fetus in a pouch. But how does the fetus get into the pouch?

Immediately after birth, the newborn climbs up the mother's abdomen -- perhaps by virtue of a negative geotaxis. If it reaches the opening of the pouch, it reverses its behavior and climbs in -- maybe a positive geotaxis. If it does not encounter the opening of the pouch, it will continue climbing until it reaches the top, stop -- or maybe fall off -- and eventually die. The mother kangaroo has no way of helping the infant -- the appropriate behaviors simply aren't in her instinctual repertoire, and (a point I'll expand on later) she has no opportunity to learn them through trial and error.

Once in the pouch, if the neonate encounters a nipple, it will attach to it and begin to nurse -- probably a variant of the rooting reflex. If not, it will simply stop at the bottom of the pouch and eventually die.

Assuming that all goes well, the baby kangaroo emerges from the pouch after about six more months of development.

Note that the neonate gets in the pouch by its own automatic actions, with no assistance from its mother. The behavior is entirely under stimulus control, and if it fails to contact the appropriate stimulus it will simply die.

Instincts

Other innate behaviors involve more complicated action sequences, and more specific, discriminating responses. These are known as instincts or fixed action patterns. Instincts have several important properties. As a rule, they are:

- complex, stereotyped patterns of action,

- rigidly organized,

- innate,

- unmodified by learning,

- species-specific (i.e., some species show them but others do not); and

- universal within the species (i.e., every member of the species shows the behavior under appropriate conditions).

Instincts are studied by ethology, a branch of behavioral biology devoted to understanding animal behavior in natural environments, viewed from an evolutionary perspective. As a biological discipline, ethology asks four questions about behavior -- all of them variants on "Why does an animal behave the way it does?"

- Causation: What are the mechanisms by which the behavior works?

- Function: What is the survival value of the behavior?

- Ontogeny: How does the behavior arise in the life of the individual?

- Phylogeny: How did the behavior arise in the evolution of the species?

Note, however, that ethology focuses on behavior -- and, in particular, on natural behavior. Ethologists analyze animal behavior in its ecological and evolutionary context; they do experiments, but their experiments are performed under field conditions (or something very closely resembling them), not in the sterile confines of the laboratory. Ethologists are not really psychologists, because they are interested only in behavior, not in mind per se. Nevertheless, psychology is a big tent, and many ethologists have found their disciplinary home in departments of psychology, as well as in departments of biology (especially integrative biology, as opposed to molecular and cellular biology).

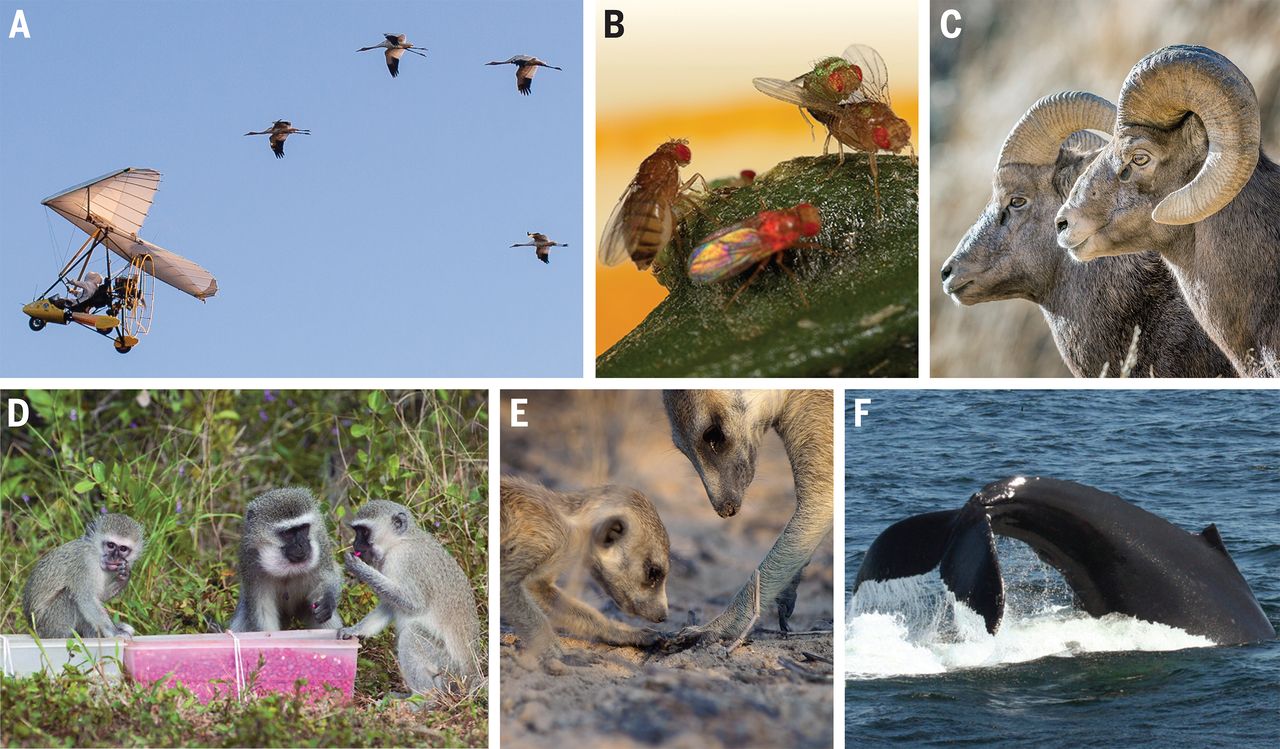

An observation by Konrad Lorenz illustrates the role that evolutionary thinking played in the ethologists' analysis of behavior ("The Evolution of Behavior", Scientific American, December 1958):

[I]s it not possible that beneath all the variations of individual behavior there lies an inner structure of inherited behavior which characterizes all the members of a given species, genus or larger taxonomic group -- just as the skeleton of a primordial ancestor characterizes the form and structure of all mammals today?

Yes, it is possible! Let me give an example which, while seemingly trivial, has a bearing on this question. Anyone who has watched a dog scratch its jaw or a bird preen its head feathers can attest to the fact that they do so in the same way. The dog props itself on the tripod formed by its haunches and two forelegs and reaches a hind leg forward in front of its shoulder. Now the odd fact is that most birds (as well as virtually all mammals and reptiles) scratch with precisely the same motion! A bird also scratches with a hind limb (that is, its claw), and in doing so it lowers its wing and reaches its claw forward in front of its shoulder.

One might think that it would be simpler for the bird to move its claw directly to its head without moving its wing, which lies folded out of the way on its back. I do not see how to explain this clumsy action unless we admit that it is inborn. Before the bird can scratch, it must reconstruct the old spatial relationship of the limbs of the four-legged common ancestor which it shares with mammals.

A Nobel Prize for Ethology

Three important ethologists, Konrad Lorenz, Nikolaas (Niko) Tinbergen, and Karl von Frisch, won the 1973 Nobel Prize in Physiology or Medicine for their pioneering research on instincts (four years earlier, in 1969, Tinbergen's brother Jan had shared the first Nobel Prize in Economics for his pioneering research on econometrics). For an intellectual biography of Tinbergen, see Niko's Nature: A Life of Niko Tinbergen and His Science of Animal Behaviour by H. Kruuk (2003).

The concept of instinct is well illustrated in Konrad Lorenz's research on imprinting in newly hatched ducks and geese. Once out of the egg, the hatchling follows the first moving object it sees. This is usually the mother, but the hatchling will also follow a wooden decoy, a block of wood on wheels, or even a human -- provided that it is the first moving object that the bird sees. The emphasis on the "first" moving object is somewhat overstated, because there is a critical period for imprinting: the imprinted object must be present soon after hatching; if exposure to a moving object is delayed for several hours or days, imprinting may not occur at all. If imprinting occurs, the imprinted object will be followed even under adverse circumstances, over or around barriers, etc. When the imprinted object is removed from the bird's field of vision, the bird will emit a distress call. If imprinting has occurred to an unusual object, that object will be preferred to the bird's actual parent, or to any other conspecific animal.

Link to a film of Lorenz demonstrating imprinting.

Link to "Mallards on a Mission", a more recent YouTube video showing the strength of the imprinting response.

The power and perils of imprinting are vividly illustrated by an incident that occurred in Spokane, Washington, in 2009. George Armstrong, a banker, had been watching a female duck nesting on a ledge outside his office window. In the usual course of events, the ducklings would hatch, imprint on their mother, and then follow her as she led them to water. But they're on a ledge! And they can't fly yet! The mother duck knew nothing of this. She's built to wait until her eggs have hatched, and then go to water; and ducklings are built to follow her. The mother jumped off the ledge and -- she's built for that, too -- flew down to the street. The chicks were stranded. Armstrong went out on the street, stood below the ledge, and caught each of the ducklings as they stepped off the ledge, instinctively following their mother (actually, he had to collect a couple from the ledge). Then he served as a crossing guard while the mother collected her young and led them to water. The power of imprinting is that the ducklings will follow their mother -- or Konrad Lorenz -- everywhere. The peril of imprinting is that the behavior has been selected for a particular environmental niche -- in the case of ducks, the grassy area near water where they usually nest; if that environment changes, for whatever reason, the instinctive behavior may be very maladaptive.

Link to a video of Armstrong catching the ducks (sorry about the ad).

There are actually two kinds of imprinting. What we've been discussing is filial imprinting, which concerns the relationship between adult and youngster. There is also sexual imprinting, which concerns the relations between males and females. At the International Crane Foundation, in Baraboo, Wisconsin, there once lived a female whooping crane named Tex (now deceased), who imprinted on the Foundation's co-founder, George Archibald. In order to get Tex to breed, Archibald had to perform an imitation of the crane mating dance!

Imprinting is extremely indiscriminate: basically, the bird imprints on the first object that moves within the critical period. However, other instincts are much more discriminating.

Another good example of an instinct is the alarm reaction in some birds subject to predation by other birds (studied by Tinbergen). If an object passes overhead, the birds will emit a distress call and attempt to escape. However, these birds do not show alarm to just any stimulus: it must have a birdlike appearance; moreover, birdlike figures with short (hawk-like) necks elicit alarm, while those with long (goose-like) necks do not (the length and shape of the tail and wings are largely irrelevant).

Imprinting and the alarm reaction involve, basically, only one organism. Other instincts involve the coordinated activities of two (or more) species members.

A good example is food-begging in herring gulls (studied by Tinbergen). Hatchling birds don't forage for their own food, but must be fed a predigested diet by their parents. But the parents do not do this of their own accord. Rather, the chick must peck at the parent's bill: the parent then regurgitates food and presents it to the chick; the chick then grasps the food and swallows it. But the chick will not peck at just any bird's bill. Rather, the bill must have a patch of contrasting color on the lower mandible. The precise colors involved do not matter much, so long as the contrast is salient. Food-begging exemplifies the coordination of instinctive behaviors: the patch is the releasing stimulus for the hatchling to peck; and the peck is the releasing stimulus for the parent to present food.

An excellent example of a complex, coordinated sequence of instinctual behaviors is provided by the "zig-zag" dance, part of the mating ritual of the stickleback fish (Tinbergen).

A male stickleback, when it is ready to mate, develops a red coloration on its belly.

It then establishes its territory by fighting off other sticklebacks. But he fights only sticklebacks, not other species of fish; and only males; and only males who display red bellies and enter his territory in the head-down "threat posture" (other colorations indicate that the other male is not ready to mate; other postures indicate that the other male is only passing through the territory; in either case, there is no territorial fighting).

Experiments by Tinbergen, employing "dummy" models of fish, show that it actually doesn't matter much whether the other fish looks like a stickleback, so long as it has a red-colored belly. Sticklebacks without red bellies may enter this fish's territory, because they don't constitute threats.

Other experiments, in which fish were enclosed in capsules to control their orientation, show that a male who elicits aggression when it enters a territory with its head down will not elicit aggression if it enters the territory with its head level -- perhaps indicating that it is just "passing through".

After the territory has been cleared of threatening males, the male builds a nest out of weeds.

Then he entices a female into the nest -- but only a female stickleback who enters his territory with a swollen abdomen, and in the head-up "receptive posture".

The female enters the nest only if the male displays a red belly, and performs a "zig-zag" dance.

Once in the nest, the female spawns eggs -- but only if she is stimulated at her hind quarters.

Once the eggs are laid, the female leaves the nest and the territory.

The male fertilizes the eggs, fans them to maintain an adequate oxygen supply around them, and cares for the young after hatching (until they're ready to go off to school).

When the young are hatched, the red belly fades, and the male no longer provokes other males or attracts females -- until the next mating cycle starts.

Notice the serial organization of this pattern of stickleback behaviors. It is as if each act is the releasing stimulus for the next one. There is no flexibility in this sequence: once initiated, it does not stop, provided that the appropriate releasing stimulus is present. If any element in the sequence is left out, the entire sequence will stop abruptly. All three parties go through this pattern of behaviors, even if one of them doesn't remotely resemble a stickleback. For example, a female ready to mate will enter the nest if she sees a tongue depressor, painted red on one half, perform the zig-zag dance!

Tinbergen observed a similar pattern in the mating behavior of the black-headed gull ("The Courtship of Animals", Scientific American, November 1954).

An unmated male settles on a mating territory. He reacts to any other gull that happens to come nearby by uttering a "long call" and adopting an oblique posture. This will scare away a male, but it attracts females, and sooner or later one alights near him. Once she has alighted, both he and she suddenly adopt the "forward posture". Sometimes they may perform a movement known as "choking". Finally, after one or a few seconds, the birds almost simultaneously adopt the "upright posture" and jerk their heads away from each other. Now most of these movements also take place in purely hostile clashes between neighboring males. They may utter the long call, adopt the forward posture and go through the choking and the upright posture.

The final gesture in the courtship sequence -- the partners' turning of their heads away from each other, or "head-flagging" -- is different from the others: it is not a threat posture. Sometimes during a fight between two birds we see the same head-flagging by a bird which is obviously losing the battle but for some reason cannot get away, either because it is cornered or because some other tendency makes it want to stay. This head-flagging has a peculiar effect on the attacker: as soon as the attacked bird turns its head away the attacker stops its assault or at least tones it down considerably. Head-flagging stops the attack because it is an "appeasement movement" -- as if the victim were "turning the other cheek". We are therefore led to conclude that in their courtship these gulls begin by threatening each other and end by appeasing each other with a soothing gesture.

Sounds a little like Shakespeare's The Taming of the Shrew. Or any of a number of screwball comedies from the 1930s.

For a good treatment of instinctual behavior, see N. Tinbergen, The Study of Instinct (1969).

For a positive treatment of sociobiology, see E.O. Wilson, Sociobiology: The New Synthesis (1975).

For extensions of sociobiology to psychology, see The Adapted Mind: Evolutionary Psychology and the Generation of Culture edited by Jerome H. Barkow, Leda Cosmides, and John Tooby (1992), and Evolutionary Psychology: The New Science of the Mind (1999) by David M. Buss.

Instincts in Humans?

Taxes and instincts are important elements in behavior, especially of invertebrates, birds, and reptiles. Some psychologists and behavioral biologists argue that much human behavior is also instinctual in nature. One of the first to make this argument was McDougall, who argued that human behavior was rooted in instinctual behaviors related to biological motives. One of his examples, which is offered here without comment (except to note that similar descriptions could be made of the behavior of men), is reminiscent (at least in tone) of what Tinbergen discovered in sticklebacks:

The flirting girl first smiles at the person to whom the flirt is directed and lifts her eyebrows with a quick, jerky movement upward so that the eye slit is briefly enlarged. Flirting men show the same movement of the eyebrows. After this initial, obvious, turning toward the person, in the flirt there follows a turning away. The head is turned to the side, sometimes bent toward the ground, the gaze is lowered, and the eyelids are dropped. Frequently, but not always, the girl may cover her face with a hand and she may laugh or smile in embarrassment. She continues to look at the partner out of the corners of her eyes and sometimes vacillates between looking at, and looking away.

Among modern biological and social scientists, this point of view is expressed most strongly by the practitioners of sociobiology, especially E.O. Wilson, who argue that much human social behavior is instinctive, and part of our genetic endowment. More recently, similar ideas have been expressed by proponents of evolutionary psychology such as Leda Cosmides, John Tooby, and David Buss. At their most strident, evolutionary psychologists claim that our patterns of experience, thought, and action evolved in an environment of evolutionary adaptedness (EEA) -- roughly the African savanna of the Pleistocene epoch, where Homo sapiens first emerged about 300,000 years ago -- and have changed little since then. Although this assertion is debatable, to say the least, the literature on instincts makes it clear that evolution shapes behavior as well as body morphology. Many species possess innate behavior patterns that were shaped by evolution, permitting them to adapt to a particular environmental niche. Given the basic principle of the continuity of species, it is a mistake to think that humans are entirely immune from such influences -- although humans have other characteristics that largely free us from evolutionary constraints. For a discussion of evolutionary psychology, see the lectures on Psychological Development.

Meanings of "Instinct"

The concept of instinct has had a difficult history in psychology, in part because early usages of the term were somewhat circular: some theorists seemed to invoke instincts to explain some behavior, and then to use that same behavior to define the instinct. But, in the restricted sense of a complex, discriminative, innate response to some environmental stimulus, the term has retained some usefulness. For example, the psychologist Steven Pinker has referred to language as a human instinct.

Nevertheless, the term "instinct" has evolved a number of different meanings, as outlined by the behavioral biologist Patrick Bateson (Science, 2002):

- present at birth (or at a particular stage of development);

- not learned;

- developed before it can be used;

- unchanged once developed;

- shared by all members of the species (at least those of the same sex and age);

- organized into a distinct behavioral system (e.g., foraging);

- served by a distinct neural (brain) module;

- adapted during evolution;

- differentiated across individuals due to their possession of different genes.

Bateson correctly notes that one meaning of the term does not necessarily imply the others. Taken together, however, the various meanings capture the essence of what is meant by the term "instinct".

From Instinct to Learning

Innate response tendencies such as food-begging can be very powerful behavioral mechanisms, especially for invertebrates and non-mammalian vertebrate species. In their natural environment, some species seem to live completely by virtue of reflex, taxis, and instinct.

Limitations on Innate Behaviors

But at the same time, these innate behavioral mechanisms are extremely limited. They have been shaped by evolution to enable the species to fit a particular environmental niche, which is fine so long as the niche doesn't change. When the environment does change, evolution requires an extremely long time to change behavior (or body morphology, for that matter) accordingly -- much longer than the lifetime of any individual species member.

Consider, for example, the behavior of newborn sea turtles. Female turtles lay their eggs on the beach above the tide line, and these eggs hatch at night in the absence of the parents. As soon as they have hatched, the hatchlings begin walking toward the water (what you might call a "positive aquataxis"): when they reach it, they begin to swim (another innate behavior), and live independently. However, the young turtles are not really walking toward the water: they are walking toward the reflection of the moon on the water (thus, a positive phototaxis). This hatching behavior evolved millions of years ago. Since then, however, the beaches where the turtles hatch have become crowded with hotels, marinas, oil refineries, and other light sources. Accordingly, these days, the hatchling turtles will also move toward these light sources, and die before they ever reach water. The animals' behavior evolved when the only light in the environment came from the sun and the moon, and they just don't know any better. In order to prevent a disaster, beach-side hotels and oil refineries now take steps to employ different kinds of light, or to block their lights entirely.

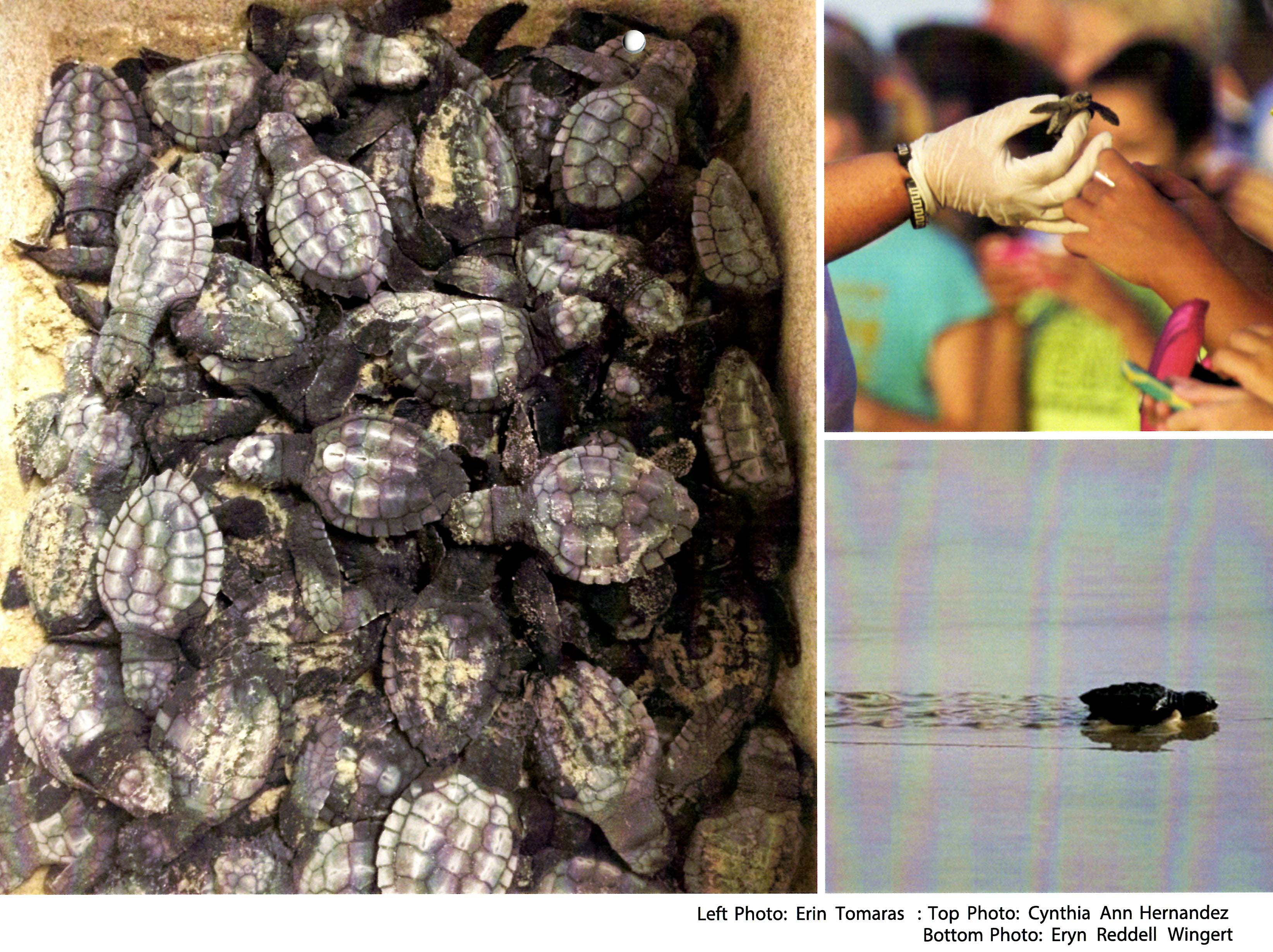

Hatching Behavior in Ridley's Sea Turtles

Here's how hatching works in Ridley sea turtles. Images from the 2014 calendar of Sea Turtle Inc., an organization devoted to education and recovery efforts.

- Female sea turtle lays eggs above the high-water line.

- Here's a clutch of newly hatched sea turtles, before they make their run for the water.

- And here they are, headed toward the water -- or, more properly, the light.

- Here's a close-up view.

- Notice that they're not distracted by the presence of people (in this case, onlookers at a release of hatchlings fostered by the organization). This is pure instinct. All they care about is the reflected light. And they don't even "care" about that: it's just a mindless, innate behavioral response.

|

Now perhaps there is some subtle difference (like polarization) between moonlight and electrical light. If so, individual animals who can make this distinction, moving toward one and not the other, will survive, reproduce, and, over time, generate more individuals who can make this distinction. But again, this takes time -- assuming that any individual can make the distinction in the first place. And even so, each individual gets only one chance. If it makes the right "choice", this behavioral tendency will be passed on to successive generations, and the species may eventually come to distinguish between "good" and "bad" light -- provided that the species doesn't go extinct first. But that just illustrates the point that evolved behavior patterns take a very long time to change.

In June 2011, a group of diamondback terrapins caused the temporary shutdown of Runway 4 Left at New York's Kennedy International Airport. And it's happened before. The runway crosses a path that the turtles take from Jamaica Bay on one side to lay their eggs on the sandy beach on the other side. Usually, in egg-laying season, the runway is not in frequent use, due to prevailing winds. But that day was an exception, and the turtles brought takeoffs and landings to a halt for about an hour until they could be moved to their destination (we don't know what happened when they tried to get back in the water). It's another example of the difficulty that animals have in adjusting evolved patterns of behavior to rapidly changing environmental circumstances. (See "Delays at JFK? This Time, Blame the Turtles" by Andy Newman, New York Times, 06/30/2011.)

Here's another example: seabirds, like albatrosses, feed their young through the same sort of instinctual food-begging shown by herring gulls. Adult albatrosses forage over open water, dive to catch fish swimming near the surface, and then regurgitate the fish into the mouths of their young. But it's not only fish that are near the surface. There's a lot of garbage in the ocean, as well. The birds don't know the difference -- they're operating solely on reflex. That garbage is of relatively recent vintage, so there hasn't been enough time -- assuming it were even possible -- for the birds to evolve a distinction between fish and garbage. The result is that adult albatrosses pick up garbage and regurgitate it into the bills of their chicks, who promptly die of starvation -- such as this albatross chick photographed on Midway Atoll in the Pacific.

And here's yet another example, a little closer to home. Wind farms like the one in Altamont Pass produce a large amount of electrical energy for California, reducing both carbon emissions from coal-fired plants and our dependence on Middle East oil. But they also create a hazard for birds, especially raptors, which like to forage for small mammals over open areas. To make matters worse, wind farms are built where there is strong, steady wind, and therefore often on migratory flight paths. The result is that a large number of raptors and other birds are killed every year because they run into the blades of the windmills.

In general, we can identify several limitations on innate response patterns:

- The releasing stimulus must be physically present in the current environment. There is no way for the animal to respond to an image or idea or memory of a releasing stimulus.

- Instincts and similar fixed action patterns only permit responses to be elicited by external stimuli; they do not permit action to be directed by internal goals.

- Because the response patterns are built in over evolutionary time, the organism cannot respond flexibly to new stimuli, or quickly generate new behaviors in response to old or new stimuli.

Thus the problem: everyday life requires many organisms to go beyond simple, innate patterns of behavior, and acquire new responses to new stimuli in their environment.

Evolutionary Traps

Ecologists and evolutionary biologists are becoming increasingly aware of the problems caused by rapid environmental change. The United Nations Summit on Sustainable Development, held in Johannesburg, South Africa, in 2002, drew international attention to the fact that "nature", far from being "natural", has in fact been remade by human hands. According to Andrew C. Revkin, "People have significantly altered the atmosphere, and are the dominant influence on ecosystems and natural selection" (see his article "Forget Nature. Even Eden is Engineered", and other articles in a special section on "Managing Planet Earth", New York Times, 08/20/02). Even in the early part of the 20th century, Revkin notes, the geochemist Vladimir I. Vernadsky had suggested that "people had become a geological force, shaping the planet's future just as rivers and earthquakes had shaped its past". Now in the 21st century, with the growth of megacities, the increase in population, and the disappearance of the forests, to name just a few trends, we are beginning to recognize, and deal with, the impact of human activity on the environment.

The human impact on the environment doesn't just affect the conditions of human existence. Nature is a system, and what we do affects animal and plant life as well, and sometimes in non-obvious ways.

In a recent paper in Trends in Ecology & Evolution (10/02), Paul W. Sherman and his colleagues, Martin A. Schlaepfer and Michael C. Runge, detail a number of "evolutionary traps", mostly caused by human activity that alters the natural environment -- activity that goes beyond the simple destruction of habitat, which would be bad enough. More subtle changes alter the environment in such a way that a species' evolved patterns of behavior are no longer adaptive, reducing the chances of individual survival and reproduction, and eventually leading to the decline and extinction of the species as a whole. As Sherman puts it, "Evolved behaviors are there for adaptive reasons. If we [disrupt] the normal environment, we can drive a population right to extinction" ("Trapped by Evolution" by Lila Guterman, Chronicle of Higher Education, 10/18/02).

The concept of an evolutionary trap is a variant on the more established notion of an ecological trap, in which animals are misled, through human environmental change, to live in less-than-optimal habitats, even though more suitable habitats are available to them. For example, Florida's manatees have progressively moved north, attracted by the warm water discharged by power plants; but when a plant shuts down for maintenance, the water cools to the point that they can no longer survive in it.

Some examples of evolutionary traps:

- The male buprestid beetle (Julodimorpha bakewelli) of Australia recognizes the female of its species as a brown, shiny object with small bumps on its surface. However, this is also what some Australian beer bottles look like. Accordingly, males will frequently be found attempting to mate with beer bottles, instead of with more appropriate partners. The solution is to get Australians not to litter.

- American wood ducks, Aix sponsa, build nests in the cavities of dead trees. When wildlife managers constructed nesting boxes for them, in an attempt to help them cope with habitat loss, the population actually declined. The reason is that female wood ducks had adapted to the loss of natural nesting places by following each other to the few sites that were still available. When the artificial nesting boxes appeared, they all gravitated to the same ones, and laid too many eggs in individual boxes to incubate properly. The solution was to hide the boxes in the woods, increasing the likelihood that individual ducks would find their own nesting sites.

- Male Cuban tree frogs, Osteopilus septentrionalis, attempt to mate with females that are actually roadkill (at least they don't move!). Not only does this increase the chance that the males themselves will be run over by cars and trucks, but of course the exercise yields no offspring.

- Due to global warming, yellow-bellied marmots, Marmota flaviventris, come out of hibernation too early in the season for food to be available, and so many starve.

- Insects are famously attracted to light, and this positive phototaxis includes artificial light at night (ALAN). Entomologists and ecologists have become increasingly concerned about the effect of ALAN on insect populations, because ALAN threatens the survival of whole species, with effects that may be felt throughout entire ecosystems.

- Mayflies provide a startling case in point. Mayfly larvae develop in the water; once they emerge as adults, females live only a few minutes (males live for a day or two), during which time they mate and lay eggs back on the water. But those that live near bridges are attracted to the lights, missing the opportunity to mate closer to the water surface; and many of those that do mate drop their eggs on the reflective pavement instead of in the water. The result is fewer mayflies in the next generation. And since mayflies help control algae and serve as fish food, the effects of this interruption of the mating cycle are felt throughout their ecosystem. Similar effects of ALAN are affecting other insect species: it's been estimated that ALAN has reduced insect populations by 80% in some areas, and that because of ALAN, as many as 40% of insect species may be headed for extinction. (For more information, see "Fatal Attraction to Light at Night Pummels Insects" by Elizabeth Pennisi, Science, 05/07/2021.)

Learning Defined

In vertebrates, and especially mammalian species, everyday action goes beyond such innate behavior patterns. These organisms can also acquire new patterns of behavior through learning.

Psychologists define learning as: a relatively permanent change in behavior that occurs as a result of experience.

This definition excludes changes in behavior that occur as a result of insult, injury, or disease, the ingestion of drugs, or maturation. Learning permits individual organisms, not just entire species, to acquire new responses to new circumstances, and thereby to add behaviors to the repertoire created by evolution. In addition, social learning permits one individual species member to share learning with others of the same species (this is one definition of culture). The pace of social learning far outstrips that of evolution, so that learning provides a mechanism for new behavioral responses to spread quickly and widely through a population. Although all species are capable of learning, at least to some degree, learning is especially important in the natural lives of vertebrate species, and especially in mammalian vertebrates. Like us. And, it turns out, most human learning is social learning: we learn from each other's experiences, and we have even developed institutions, like libraries and schools, that enable us to share our knowledge with each other.

Classical Conditioning

One important form of learning, classical conditioning, was accidentally discovered by Ivan P. Pavlov, a Russian physiologist who was studying the physiology of the digestive system in dogs (work for which he won the Nobel Prize in Physiology or Medicine in 1904). Pavlov's method was to introduce dry meat powder into the dog's mouth, and then measure the salivary reflex, which occurs as the first step in the digestive process. Initially, Pavlov's dogs salivated only when the meat powder was actually in their mouths. But soon they began to salivate before the powder was presented to them -- just the sight of the powder, or the sight of the experimenter, or even the sound of the experimenter walking down the hallway, was enough to get the dogs to salivate. In some sense, this premature salivation was a nuisance. But Pavlov had the insight that the dogs were salivating to events that were somehow associated with the presentation of the food. Thus, Pavlov moved away from physiology and initiated the deliberate study of the psychic reflex -- not, as the term might suggest, something out of the world of parapsychology, but rather a situation where the idea of the stimulus evokes a reflexive response. Pavlov called these responses conditioned (or conditional) reflexes.

In honor of Pavlov's discovery, this form of learning is now called "classical" conditioning -- the term was coined by E.R. Hilgard and D.G. Marquis in their foundational text, Conditioning and Learning (1940). A classical conditioning experiment involves the repeated pairing of two stimuli, such as a bell and food powder. One of these stimuli naturally elicits some reflex, while the other one doesn't. With repeated pairings, the previously neutral stimulus gradually acquires the power to evoke the reflex. Thus, classical conditioning is a means of forming new associations between events (such as the ringing of a bell and the presentation of meat powder) in the environment.

The apparatus for Pavlov's experiments included a special harness to restrict the dog's movement, a tube (or fistula) placed in its mouth to collect saliva, a mechanical device for introducing meat powder to its mouth, and some kind of signal such as a bell.

Some writers have questioned whether Pavlov actually used a bell, as the myth has it. Pavlov was actually unclear on this detail in his own writing, and he probably used a buzzer, or a metronome, more often than he used a bell. And the bell was more like a doorbell than a handbell. He also used a harmonium to present different musical notes, and even electrical shock. A major problem is that the Russian words that Pavlov used to label his "bell" don't translate unambiguously into English. For an excellent biography which puts Pavlov's work in its social and scientific context, see Ivan Pavlov: A Russian Life in Science by Daniel P. Todes (2014).

- In Phase 1 of the conditioning procedure, Pavlov presented the sound (or whatever) and the food separately. The dog would salivate to the food but make no response to the bell.

- In Phase 2, Pavlov presented the sound immediately followed by food. The dog would still salivate to the food; but after several trials, it would begin to salivate to the sound as well.

- In Phase 3, Pavlov presented the sound alone, no longer followed by food. After several more trials, the conditioned salivary response would eventually disappear.

The Sad Case of Edwin B. Twitmeyer

Actually, Pavlov wasn't alone in discovering classical conditioning. He had a co-discoverer in Edwin B. Twitmeyer (1873-1943), who reported on "Knee Jerks Without Stimulation of the Patellar Tendon" in his doctoral dissertation, completed in 1902 at the University of Pennsylvania (yay!), and at the 1904 meeting of the American Psychological Association, held in Philadelphia (Pavlov first reported his discovery at the 1903 International Medical Congress, in Madrid).

Just as Pavlov's discovery of the conditioned salivary reflex was accidental, so was Twitmeyer's. His dissertation was actually concerned with variability in the patellar reflex -- which, as an innate reflex, was supposed to be invariant. It wasn't. The patellar reflex is elicited, as everyone who has had a physical exam knows, by striking the patellar tendon (just below the kneecap) with a rubber hammer. Twitmeyer used a bell to warn his subjects that the hammer-blow was coming, and after about 150 pairings of bell and hammer, one of his subjects gave an involuntary knee-jerk response after hearing the bell, but before the hammer struck. Twitmeyer subsequently confirmed this observation in the remainder of his subjects.

Although Twitmeyer's dissertation was privately published, and thus not widely accessible, an abstract of his 1904 APA talk was published in the widely read Psychological Bulletin for 1905. The APA talk itself got a chilly reception: after Twitmeyer concluded his presentation, William James, who was chairing the session, adjourned the meeting for lunch, effectively precluding any substantive discussion. Twitmeyer himself never followed up on his discovery. Having already been appointed to the psychology faculty at Penn after receiving his bachelor's degree from Lafayette College in 1896, he rose to the rank of full Professor in 1914, and served as Director of Penn's Psychological Laboratory and Clinic. Twitmeyer identified himself as a clinical psychologist, and most of his subsequent research concerned speech disorders, especially in children.

The Basic Vocabulary of Classical Conditioning

The procedure just described illustrates the basic vocabulary of classical conditioning:

- The unconditioned stimulus (or US) is a stimulus (like the presentation of meat powder) that reliably evokes a reflexive response (like salivation).

- The unconditioned response (or UR) is the innate reflexive response that is reliably evoked by an unconditioned stimulus.

- The conditioned stimulus (or CS) is a stimulus (such as the ringing of a bell) that does not itself reliably evoke any particular reflexive response. In classical conditioning, the CS is paired with the US.

- The conditioned response (or CR) is the response that comes to be evoked by a previously neutral conditioned stimulus (CS), after many pairings between the CS and the US. The CR generally resembles the UR.

As with the "bell-buzzer" debate, Pavlov didn't actually call his stimuli and responses "conditioned" and "unconditioned". Rather, the term he used in Russian translates better as "conditional" and "unconditional". And as we'll see later, the suffix "-al" is actually more appropriate. To make a long story short, the conditioned response is conditional on presentation of the conditioned stimulus. But Pavlov's first translators used the terms "conditioned" and "unconditioned", which frankly sound better in English, and it's too late to change our vocabulary now.

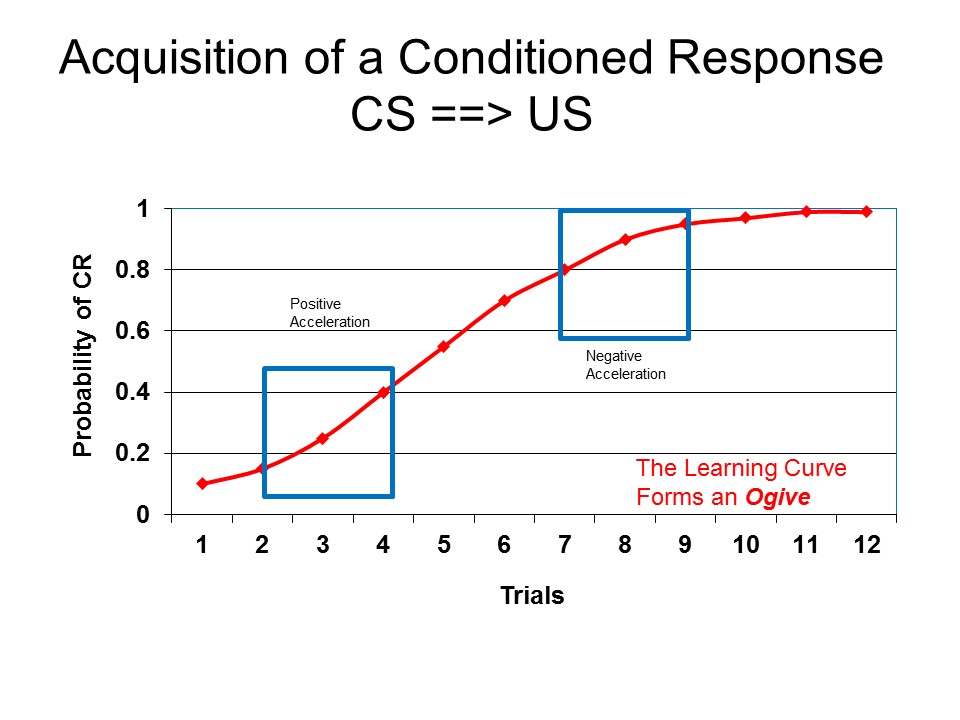

The process by which a conditioned stimulus acquires the power to evoke a conditioned response is known as acquisition. In traditional accounts of conditioning, acquisition of the CR occurs by virtue of the reinforcement of the CS by the subsequent US. The strength of the CR is measured in various ways:

- The magnitude of the CR (such as the number of drops of saliva or its liquid volume). The magnitude of the CR is typically limited by the magnitude of the UR.

- The probability that the CR will occur at all (e.g., the likelihood that any amount of salivation will follow presentation of the bell).
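The two measures need not move together, so it can help to see them computed side by side. The Python sketch below is only an illustration: the trial data are invented, and the function names (cr_magnitude, cr_probability) are hypothetical. It assumes that magnitude is averaged over every CS presentation, zeros included; averaging only over the trials on which a CR occurred would be an equally defensible convention.

```python
from statistics import mean


def cr_magnitude(drops_per_trial: list[int]) -> float:
    """Mean CR size (e.g., drops of saliva) over all CS presentations, zeros included."""
    return mean(drops_per_trial)


def cr_probability(drops_per_trial: list[int]) -> float:
    """Proportion of CS presentations followed by any CR at all."""
    responded = sum(1 for drops in drops_per_trial if drops > 0)
    return responded / len(drops_per_trial)


# Hypothetical block of eight test trials: drops of saliva after the bell on each trial.
block = [0, 2, 0, 5, 3, 0, 4, 6]
print(cr_magnitude(block))    # 2.5
print(cr_probability(block))  # 0.625
```

In this invented block, a CR appears on only five of the eight trials (probability 0.625), while the mean magnitude across all eight trials is 2.5 drops.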

On the initial acquisition trial, when the CS and the US are paired for the very first time, there is only an unconditioned response to the US; there is no conditioned response to the CS.

On later trials, we begin to observe a response that resembles the UR, occurring after presentation of the CS but before presentation of the US. This is the first appearance of the CR.

Even later, we may observe the CR immediately after the presentation of the CS, well before the presentation of the US.

The characteristic "sigmoidal" or S-shaped curve portraying the acquisition of the CR is an ogive, in which there is a slow increase in response strength on the initial trials, followed by a rapid increase in middle trials, and a further slow increase in the last trials, ending in a plateau.

- The learning curve is commonly characterized as negatively accelerated, and that's true so far as the middle and latter portions of the learning curve are concerned.

- But the very early portions are more accurately characterized as positively accelerated.

If you look across a number of different textbooks, you will see two different versions of the "classic" learning curve. The general form of the learning curve, illustrated above, is presented again on the left-hand side of this image. Conditioning begins slowly, then speeds up (positive acceleration), then slows down (negative acceleration), and finally plateaus. We obtain this curve when the organism is naive (has had no prior learning experience), or when the behavior being learned is relatively complex. With simple behaviors, or with organisms that have had some prior learning experience, as in the right-hand portion of the image, we see only the negatively accelerated portion of the learning curve: the conditioned response gains strength rapidly at first, and then slows down. It's as if the organism already knows about learning, or doesn't have much to learn.
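The difference between the two curve shapes shows up most clearly in the trial-to-trial gains in response strength. The Python sketch below is a minimal illustration under stated assumptions: a logistic function stands in for the ogive, and a linear-operator rule (each reinforced trial closes a fixed fraction of the remaining gap to the asymptote, the form used in later mathematical models such as Bush-Mosteller) stands in for the negatively accelerated curve. The function names and all parameter values are hypothetical.

```python
import math


def ogive(trial: int, midpoint: float = 10.0, slope: float = 0.5, asymptote: float = 1.0) -> float:
    """S-shaped (logistic) curve: slow start, rapid middle, plateau."""
    return asymptote / (1.0 + math.exp(-slope * (trial - midpoint)))


def negatively_accelerated(n_trials: int, rate: float = 0.3, asymptote: float = 1.0) -> list[float]:
    """Each reinforced trial closes a fixed fraction of the remaining gap to the asymptote."""
    strength, curve = 0.0, []
    for _ in range(n_trials):
        strength += rate * (asymptote - strength)
        curve.append(strength)
    return curve


def gains(curve: list[float]) -> list[float]:
    """Trial-to-trial increments in response strength."""
    return [later - earlier for earlier, later in zip(curve, curve[1:])]


naive = [ogive(t) for t in range(1, 21)]   # e.g., a naive organism
experienced = negatively_accelerated(20)   # e.g., an experienced organism

# Ogive: increments grow up to the midpoint (positive acceleration), then shrink.
print([round(g, 3) for g in gains(naive)])
# Negatively accelerated: increments shrink from the very first trial.
print([round(g, 3) for g in gains(experienced)])
```

Under these assumptions, the ogive's gains rise until about trial 10 and then fall, while the linear-operator curve's gains fall from the first trial onward; both curves approach, but never quite reach, the plateau.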

Actually, learning can occur even before a CS is paired with a US. When a novel stimulus (NS), such as Pavlov's bell, is presented for the very first time, the organism will show a reflexive orienting response (OR) -- perhaps a startle response -- to that stimulus. But if that stimulus is presented repeatedly, all by itself, the magnitude of the OR will progressively diminish. This is known as habituation. It counts as learning because there is a change in behavior -- in this case, a change in the OR -- that occurs as a result of experience.

Habituation is the very simplest form of learning, and has been observed in animals as simple as protozoa (Penard, 1947) -- and since protozoa are one-celled creatures, you can't get any simpler than that!

- Habituation is an example of nonassociative learning, because the organism is not acquiring an association between CS and US -- for the simple reason that there is no US. Actually, technically speaking, there isn't a CS, either. It's just a stimulus.

- Classical conditioning "proper" is classified as associative learning, because the organism does form an association between the CS and US (or, according to some theories, between the CS and the UR).

- But the label "nonassociative" is something of a misnomer. When psychologists were first studying learning, they believed that organisms acquired associations between environmental stimuli and behavioral responses. In habituation, though, there's just a stimulus, and there's no response -- at least, once habituation has occurred. But that's not the right way to think about it. Later, when considering the research of Leo Kamin, we'll revisit habituation and see that, if anything, habituation entails acquiring an association after all -- between a stimulus and nothing.

If the NS is now paired with a US, so that the NS becomes a CS, conditioning will occur. However, the CR will be acquired at a slower rate than if there had been no prior habituation trials. This phenomenon is known as latent inhibition (Lubow & Moore, 1959).

Extinction is the process by which the CS loses the power to evoke the CR. Extinction occurs by virtue of unreinforced presentations of the CS -- that is, presentation of the CS alone, without subsequent presentation of the US. When the CS is no longer paired with the US, the CR loses strength relatively rapidly.

On the first extinction trial, there is a strong CR: after all, the organism does not yet "know" that the US has been omitted.

On later trials, the magnitude of the CR falls off, until it disappears entirely.

On extinction trials, the CR loses strength relatively rapidly. But it is not lost entirely, and it is possible to demonstrate that the CR is still present, in a sense, even after it seems to have disappeared.

Habituation can be thought of as a special case of extinction, in that the organism learns not to respond to the NS.

Spontaneous recovery is the unreinforced revival of the conditioned response. If, after extinction has been completed, we allow the animal a period of inactivity, unreinforced presentation of the CS will evoke a CR. This CR will be smaller in magnitude than that observed at the end of the acquisition phase, but CR strength will increase with the length of the "rest" interval.

If we continue with unreinforced presentations of the CS, the spontaneously recovered CR will diminish in strength -- it is extinction all over again.

If we continue with new reinforced presentations of the CS, the CR will grow in strength. The reacquisition of a previously extinguished CR is typically faster than its original acquisition, a difference known as savings in relearning.

During extinction, formal extinction trials can continue after the CR has disappeared, a situation known as extinction below zero. Of course, there is no further visible effect on the CR -- it is already at zero strength. However, extinction below zero has two palpable consequences: spontaneous recovery is reduced (though not eliminated), and reacquisition is slower (but still possible).

Spontaneous recovery, savings in relearning, and extinction below zero have important implications for our understanding of the nature of extinction. Extinction is not the passive loss of the CR: the organism does not "forget" the original association between CS and US, and extinction does not return the organism to the state it was in before conditioning occurred. Spontaneous recovery and savings in relearning are expressions of memory, and they show clearly that the association between CS and US has been retained, even though it is not always expressed in a CR. Rather, it seems clear that the CR is retained but actively suppressed. Extinction does not result in a loss of the CR, but rather imposes an inhibition on the CR. The strength of the inhibition grows with trials, producing the phenomenon of extinction below zero. The inhibition also dissipates over time, producing spontaneous recovery. Thus, reacquisition isn't really relearning. Rather, it is a sort of disinhibition. Both acquisition and extinction, learning and unlearning, are active processes by which the organism learns the circumstances under which the CS and the US are linked.
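The inhibition account above can be made concrete with a toy model. The Python sketch below is not Pavlov's theory or any published model; it simply encodes the verbal argument: acquisition builds an excitatory association that extinction never erases, each unreinforced trial adds inhibition (which keeps growing after the CR has disappeared), rest lets inhibition dissipate, and renewed reinforcement lifts it. The class and function names (ToyConditioning, trials_to_criterion, after_extinction) and every parameter value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToyConditioning:
    """Hypothetical sketch: a retained excitatory association plus extinction inhibition."""

    excitation: float = 0.0       # CS-US association built by reinforced trials
    inhibition: float = 0.0       # suppression imposed by unreinforced trials
    learn_rate: float = 0.3       # assumed: share of the gap to 1.0 closed per reinforced trial
    extinction_step: float = 0.2  # assumed: inhibition added per unreinforced trial
    rest_decay: float = 0.5       # assumed: share of inhibition that dissipates per rest period
    disinhibition: float = 0.5    # assumed: share of inhibition lifted per reinforced trial

    def cr(self) -> float:
        # The observable CR is excitation net of inhibition; it cannot go below zero.
        return max(0.0, self.excitation - self.inhibition)

    def reinforced(self) -> None:
        # CS followed by US: excitation grows toward 1.0 and existing inhibition is lifted.
        self.excitation += self.learn_rate * (1.0 - self.excitation)
        self.inhibition *= 1.0 - self.disinhibition

    def unreinforced(self) -> None:
        # CS alone: only inhibition changes, and it keeps growing after the CR reaches
        # zero (extinction below zero). Excitation is untouched: nothing is "forgotten".
        self.inhibition += self.extinction_step

    def rest(self, periods: int) -> None:
        # Inhibition dissipates over time, so a longer rest lets more of the CR return.
        self.inhibition *= (1.0 - self.rest_decay) ** periods


def trials_to_criterion(model: ToyConditioning, criterion: float = 0.8, limit: int = 100) -> int:
    """Count reinforced trials until the CR reaches the criterion."""
    for trial in range(1, limit + 1):
        model.reinforced()
        if model.cr() >= criterion:
            return trial
    raise ValueError("criterion not reached")


def after_extinction(extra_trials: int, rest_periods: int) -> ToyConditioning:
    """10 acquisition trials, 5 extinction trials (enough to zero the CR here), extras, rest."""
    model = ToyConditioning()
    for _ in range(10):
        model.reinforced()
    for _ in range(5 + extra_trials):
        model.unreinforced()
    model.rest(rest_periods)
    return model


print(after_extinction(0, 0).cr())                  # 0.0: the CR has been extinguished
print(round(after_extinction(0, 1).cr(), 3))        # spontaneous recovery after a short rest
print(round(after_extinction(0, 2).cr(), 3))        # more recovery after a longer rest
print(round(after_extinction(5, 2).cr(), 3))        # extinction below zero: less recovery
print(trials_to_criterion(ToyConditioning()))       # original acquisition
print(trials_to_criterion(after_extinction(0, 0)))  # reacquisition shows savings
print(trials_to_criterion(after_extinction(5, 0)))  # slower after extinction below zero
```

With these assumed parameters the sketch reproduces the qualitative pattern described in the text: the extinguished CR returns after rest, and returns more after a longer rest; extra extinction trials reduce, but do not eliminate, that recovery; and reacquisition takes fewer trials than original acquisition, though more after extinction below zero.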

Other major phenomena of classical conditioning can be observed once the conditioned response has been established. For example, the organism may show generalization of the CR to new test stimuli, other than the original CS, even though there have been no acquisition trials on which these new stimuli were paired with the US. The extent to which generalization occurs is a function of the similarity between the test stimulus and the original CS.

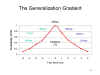

The generalization gradient is obtained by arranging test stimuli in order along some physical dimension (such as the frequency of an auditory stimulus) and measuring the CR to each. The more closely the test stimulus resembles the original CS, the greater the CR will be. The generalization gradient provides one check on generalization: having been conditioned to respond to one stimulus, the organism will not respond to any and all stimuli. Response is greatest to test stimuli that most closely resemble the original CS. In 1987, Roger Shepard, a cognitive psychologist at Stanford, proposed a universal law of generalization (ULG), and claimed that this constituted the first universal law -- like Newton's laws of physics -- in psychology. According to the ULG, the likelihood of generalization decreases as an exponential function of the distance between two stimuli in psychological space. If not precisely universal, the ULG is nearly so: it has been found to apply across a large number of conceptual domains and sensory modalities, and to hold for a variety of species.
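Shepard's law can be written as g(d) = e^(-d/s), where d is the distance between the test stimulus and the original CS in psychological space and s sets how quickly generalization falls off. The Python sketch below simply evaluates that function. The name `generalization`, the default scale of 1.0, and the sample distances are illustrative assumptions; how the psychological distances are obtained in the first place is outside the scope of this sketch.

```python
import math


def generalization(distance: float, scale: float = 1.0) -> float:
    """Exponential generalization gradient: g(d) = exp(-d / scale).

    `distance` is the (assumed) distance between test stimulus and original CS
    in psychological space; `scale` is a hypothetical free parameter.
    """
    if distance < 0:
        raise ValueError("distance must be non-negative")
    return math.exp(-distance / scale)


# Evenly spaced psychological distances from the original CS (illustrative values).
distances = [0.0, 0.5, 1.0, 1.5, 2.0]
gradient = [generalization(d) for d in distances]

# Ratio of each value to the one before it: constant for an exponential gradient.
ratios = [later / earlier for earlier, later in zip(gradient, gradient[1:])]

for d, g in zip(distances, gradient):
    print(f"distance {d:.1f}: generalization {g:.3f}")
```

What distinguishes an exponential gradient is visible in `ratios`: every equal step in distance multiplies the expected generalization by the same factor (here e^(-0.5), about 0.61), rather than subtracting a fixed amount.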


Generalization, Frequency, and Musical Pitch

In discussing generalization of response among stimuli, it is easiest to use the example of the frequency of tones, because differences in frequency -- whether a tone is high or low -- are easy to appreciate. And the example is accurate so far as it goes. If you condition an animal to a tone CS of 250 cycles per second (cps; also known as hertz, abbreviated Hz, after the physicist Heinrich Rudolf Hertz, 1857-1894), it will emit a stronger conditioned response to a tone of 300 Hz than to one of 350 Hz -- because a tone of 300 Hz more closely resembles a tone of 250 Hz than does a tone of 350 Hz.

With humans, though, things can get a little more complicated, because musical pitch is also related to the frequency of tones, but similarity among pitches is not just a matter of relative frequency. Thus, when tones are presented in the context of the diatonic scale familiar in Western music, the generalization gradient may be distorted by the vicissitudes of pitch similarities.


- Tones that are an octave apart, such as Middle C and third-space C on the treble clef, are perceived as more similar than any other pair of tones.

- Tones that are a perfect fifth apart, such as Middle C and second-line G on the treble clef, are also perceived as highly similar.

- And tones that are a major third apart, such as Middle C and first-line E on the treble clef, are also perceived as similar, though not as similar as those separated by an octave or a perfect fifth.

Consider an experiment in which a subject is initially conditioned to respond to a tone of 262 Hz, roughly corresponding to Middle C. Such a subject may well show larger conditioned responses to tones of 524 Hz (roughly third-space C), 392 Hz (second-line G), and 330 Hz (first-line E) than to either the B-flat just below Middle C (233 Hz) or the D just above it (294 Hz), even though the former tones are more distant from the original CS, in terms of frequency, than the latter.

However, this may only occur if we establish a musical context for the tones in the first place -- for example, by embedding the C in the other pitches of the diatonic scale, or by beginning the experiment by playing a tune in the key of C major. There are some experiments to be done here (hint, hint).
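The arithmetic behind this example can be checked with a short Python sketch. It assumes standard equal-tempered tuning with the A above Middle C at 440 Hz, which puts Middle C at about 261.63 Hz; the figures in the text are rounded versions of these values. The names `MIDDLE_C`, `equal_tempered`, and `test_tones` are illustrative. For each test tone the sketch prints the raw frequency distance from the CS and the frequency ratio: the octave, fifth, and major third all lie farther from Middle C in hertz than the neighboring B-flat and D do, while their ratios sit close to 2:1, 3:2, and 5:4.

```python
MIDDLE_C = 261.63  # Hz; equal-tempered C4, assuming A4 = 440 Hz


def equal_tempered(semitones: int) -> float:
    """Frequency of the tone `semitones` half-steps above (negative: below) Middle C."""
    return MIDDLE_C * 2 ** (semitones / 12)


# Test tones from the example, as half-step offsets from Middle C.
test_tones = {
    "third-space C (octave)": 12,
    "second-line G (fifth)": 7,
    "first-line E (major third)": 4,
    "B-flat below Middle C": -2,
    "D above Middle C": 2,
}

freqs = {name: equal_tempered(steps) for name, steps in test_tones.items()}

for name, freq in freqs.items():
    distance = abs(freq - MIDDLE_C)  # raw frequency distance from the CS
    ratio = freq / MIDDLE_C          # frequency ratio relative to the CS
    print(f"{name:28} {freq:7.1f} Hz   distance {distance:6.1f} Hz   ratio {ratio:.3f}")
```

A gradient based purely on frequency distance would therefore predict the largest CRs among these test tones to B-flat and D; the musical-similarity account predicts larger CRs to the octave, fifth, and third.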

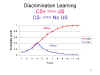

Discrimination provides a further check on generalization. Consider an experiment in which we present two previously neutral stimuli: one, the CS+, is always reinforced by the unconditioned stimulus; the other, the CS-, is never reinforced. As conditioning proceeds, the CS+ will come to elicit the CR, but the CS- will not acquire this power. If the CS+ and CS- are close to each other on the generalization gradient, both will initially elicit a conditioned response. But as conditioning proceeds, the CR to the CS+ will grow in strength, while the CR to the CS- will extinguish. In the end, the CR is elicited only by the CS that is actually associated with the US.
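The course of a discrimination experiment can be illustrated with a toy simulation. This is an assumption-laden sketch, not a model from the text: it uses a simple error-correction rule (each trial nudges the response toward 1.0 if the US follows and toward 0.0 if it does not), and it represents generalization by letting the response to each stimulus include a fraction, `similarity`, of the other stimulus's associative strength. The function name `discrimination` and all parameter values are hypothetical. The strength of the CS- is allowed to become negative, which stands in for the inhibition it acquires.

```python
def discrimination(pairs: int = 200, rate: float = 0.2, similarity: float = 0.6):
    """Alternate CS+ (reinforced) and CS- (never reinforced) trials.

    Returns (final CR to CS+, list of the CR elicited on each CS- trial).
    """
    v_plus, v_minus = 0.0, 0.0  # associative strengths; v_minus may go negative
    cr_minus_history = []
    for _ in range(pairs):
        # CS+ trial: response includes generalization from the CS-; the US sets the target at 1.0.
        cr_plus = v_plus + similarity * v_minus
        v_plus += rate * (1.0 - cr_plus)
        # CS- trial: response includes generalization from the CS+; no US, so the target is 0.0.
        cr_minus = v_minus + similarity * v_plus
        cr_minus_history.append(cr_minus)
        v_minus += rate * (0.0 - cr_minus)
    final_cr_plus = v_plus + similarity * v_minus
    return final_cr_plus, cr_minus_history


final_plus, history = discrimination()
print(f"CS- response: first {history[0]:.2f}, peak {max(history):.2f}, last {history[-1]:.3f}")
print(f"final CS+ response: {final_plus:.3f}")
```

With these settings, the CR recorded on CS- trials first rises (generalization from the strengthening CS+) and then falls back toward zero (discrimination), while the CR to the CS+ settles near its maximum of 1.0.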


New stimuli can also come to elicit a conditioned response even if they are very dissimilar to the original conditioned stimulus. Consider the phenomenon known as sensory preconditioning, which occurs before any acquisition trials in which a CS is paired with a US.


- In Phase 1 of a sensory preconditioning experiment, two neutral stimuli, CS1 and CS2, are initially presented together, without any reinforcing US. Neither of these CSs elicits any particular reflexive response. Because no US is involved, there will be no evidence of any CR being formed.

- In Phase 2, the CS2 is reinforced by pairing it with a US, until a CR appears.

- In Phase 3, we test CS1. If we have done the experiment right, the CR will also appear in response to CS1, even though CS1 has never been paired with the US.

Something similar happens in higher-order conditioning, except that the first two phases are reversed, so that higher-order conditioning occurs after acquisition trials in which a CS is paired with a US.


- In Phase 1 of a higher-order conditioning experiment, a neutral stimulus, CS1, is paired with a reinforcing US, just as in the standard classical conditioning paradigm, until the usual CR appears.

- In Phase 2, CS1 is preceded by another neutral stimulus, CS2, without any reinforcing presentation of any US.

- In Phase 3, we test CS2. Again, if we have done the experiment right, the CR will also appear in response to CS2 -- even though, like CS1 in sensory preconditioning, CS2 has never been paired with the US.
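The logic shared by sensory preconditioning and higher-order conditioning can be sketched as a simple chaining rule: a stimulus elicits the CR if it has been paired with the US directly or, at a discount, if it has been paired with another CS that does. This rule, the `discount` value of 0.5, and the names `cr` and `run_phases` are hypothetical simplifications for illustration. In particular, the sketch ignores the order of the phases and anything else that might happen to CS1 when it is presented without the US in Phase 2 of higher-order conditioning, so it captures only which stimulus ends up eliciting a CR, not how strongly.

```python
from collections import defaultdict


def cr(stimulus, us_links, cs_links, discount=0.5, visited=frozenset()):
    """Hypothetical chaining rule: direct US pairing gives a full CR; pairing with
    another CS passes on a discounted share of that CS's own CR."""
    if stimulus in visited:
        return 0.0  # avoid walking around a CS-CS loop forever
    visited = visited | {stimulus}
    direct = us_links.get(stimulus, 0.0)
    chained = [discount * cr(other, us_links, cs_links, discount, visited)
               for other in cs_links.get(stimulus, ())]
    return max([direct, *chained])


def run_phases(phases):
    """Apply each training event in turn, then test CS1 and CS2."""
    us_links = {}
    cs_links = defaultdict(set)
    for kind, first, second in phases:
        if kind == "cs-us":      # CS reinforced by the US
            us_links[first] = 1.0
        elif kind == "cs-cs":    # two stimuli presented together, no US
            cs_links[first].add(second)
            cs_links[second].add(first)
    return {s: cr(s, us_links, cs_links) for s in ("CS1", "CS2")}


sensory_preconditioning = run_phases([
    ("cs-cs", "CS1", "CS2"),  # Phase 1: CS1 and CS2 together, no US
    ("cs-us", "CS2", "US"),   # Phase 2: CS2 paired with the US
])
higher_order = run_phases([
    ("cs-us", "CS1", "US"),   # Phase 1: CS1 paired with the US
    ("cs-cs", "CS2", "CS1"),  # Phase 2: CS2 precedes CS1, no US
])
print(sensory_preconditioning)  # {'CS1': 0.5, 'CS2': 1.0}
print(higher_order)             # {'CS1': 1.0, 'CS2': 0.5}
```

In both designs, the stimulus that was never paired with the US still elicits a (weaker) CR, carried over from its partner.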

The Scope of Classical Conditioning

By means of acquisition, extinction, generalization, discrimination, sensory preconditioning, and higher-order conditioning, stimuli come to evoke and inhibit reflexive behavior even though they may not have been directly associated with an unconditioned stimulus. By means of classical conditioning processes in general, reflexive responses come under the control of environmental events other than the ones with which they are innately associated.

The phenomena of classical conditioning are ubiquitous in nature, occurring in organisms as simple as the sea mollusk and as complicated as the adult human being.

- Aplysia, a marine mollusk also known as the sea hare, has only about 10,000 neurons in its entire nervous system, compared to 86 billion or more in the human nervous system; it doesn't have a brain as such, but its neurons are organized into nine ganglia (remember that the brain can be thought of as one huge ganglion). Nevertheless, the animal can display habituation and acquire simple conditioned responses. Prof. Eric Kandel of Columbia University shared the 2000 Nobel Prize in Physiology or Medicine for his work with Aplysia examining synaptic changes during learning (see Kandel, Science, 2001).

- An even simpler organism, the Caribbean box jellyfish (Tripedalia cystophora), has only about 1,000 neurons distributed across its tentacles (roughly speaking), but no central nervous system as such. Nevertheless, the animal can learn to avoid obstacles as it moves around its environment, forming associations between visual and tactile stimuli (Bielecki et al., Current Biology, 2023).

Pavlov himself thought that all learning entailed classical conditioning, but this position is too extreme. Still, classical conditioning is important because, in a very real sense,

The laws of classical conditioning are the laws of emotional life.

Classical conditioning underlies many of our emotional responses to events -- our fears and aversions, our joys and our preferences.

Instrumental Conditioning

At roughly the same time as Pavlov was beginning to study classical conditioning, E.L. Thorndike, an American psychologist at Columbia University, was beginning to study yet another form of learning -- what has come to be known as instrumental conditioning. Beginning in 1898, Thorndike reported on a series of studies of cats in "puzzle boxes". The animals were confined in cages whose doors were rigged to a latch which could be operated from inside the cage. The animal's initial response to this situation was agitation -- particularly if it was hungry and a bowl of food was placed outside the cage. Eventually, though, it would accidentally trip the latch, open the door, and escape -- at which point it would be captured and placed back in the cage to begin another trial.



Over successive trials, Thorndike observed that the latency of the escape response progressively diminished. Apparently, the animals were learning how to open the door -- a learning which