For background on sensation and perception, see the General Psychology Lecture Supplements on Sensation and Perception.

Social cognition is the study of the acquisition, representation, and use of social knowledge -- in general terms, it is the study of social intelligence.

A comprehensive theory of social cognition must contain several elements (Hastie & Carlston, 1980; Kihlstrom & Hastie, 1987):

The first two of these facets have to do with perception and attention -- the processes by which knowledge is acquired.

Or are they? Actually, a prior question is: Where does social knowledge come from?

When asked about knowledge in general, psychology and cognitive science offer two broad answers -- and a third, correct answer.

And so it is with social cognition: it, too, begins with social perception:

We can begin by distinguishing between sensation and perception.

Sensation has to do with the detection of stimuli in the environment -- in the world outside the mind, including the world beneath the skin. To make a long story short: Sensory processes:

For example, the rods and cones in the retina of the eye convert light waves emitted by an object into neural impulses that flow over the optic nerve to the occipital lobe.

Perception gives us knowledge of the sources of our sensations -- of the objects in the environment, and of the states of our bodies: What objects are in the world outside the mind, where they are located, what they are doing, and what we can do with them. Put another way, perception assigns meaning to sensory events.

In many respects, sensation is not an intelligent act.

By contrast, perception is the quintessential act of the intelligent mind. Perception goes beyond the mere pickup of sensory information, and involves the creation of a mental representation of the object or event that gives rise to sensory experience. In order to form these mental representations, the perceiver (in the lovely phrase of Jerome Bruner, a pioneering cognitive psychologist) "goes beyond the information given" by the stimulus, combining information extracted from the current stimulus with pre-existing knowledge stored in memory, employing processes of judgment and inference.

In cognitive psychology, there are basically two views of perception.

By any standard, the constructivist view dominates research and theory on perception. But, as we will see, the ecological view also finds its proponents.

The study of social perception begins with an analogy between social and nonsocial objects. The study of social perception assumes that any person is an object who has an existence independent of the mind of the perceiver. Accordingly, the perceiver's job is to extract information from the stimulus array to form an internal, mental representation of the external object of regard.

The term person perception was introduced by Bruner and Tagiuri (Handbook of Social Psychology, 1954) to reflect the status of persons as objects of knowledge. As with any other aspect of perception, they argued that a number of factors influence perceptual organization:

Jerome Bruner and the "New Look" in Perception

Bruner was a pioneering cognitive psychologist and cognitive scientist. Among his notable accomplishments was the introduction of what he called a "New Look" in perception, which sought to redirect perception research from an analysis of stimulus features to an analysis of the perceiver's internal mental states. Although perception is obviously a field of cognitive psychology, to some extent Bruner's New Look was influenced by psychoanalysis, as when he argued that emotional and motivational processes interact with cognitive processes -- so that, in some sense, our feelings and desires affect what we see.

Our impressions of other people are typically represented linguistically, often as trait adjectives. Consider a survey by the Washington Post, which asked respondents to describe in three words the various candidates for the Democratic and Republican presidential nominations. The most frequent responses, arranged as "tag clouds" in which the font size represents the frequency with which the word was used, looked like these:

Here's a similar survey, conducted over Facebook by the Daily Beast, a journalism website (with, perhaps, a somewhat liberal bent), following the February 2012 Republican presidential debates. During the debate, CNN correspondent John King had asked each of the candidates to describe themselves in one word. The Daily Beast polled subscribers to its Facebook page with the same question, resulting in the following word clouds.

For good measure, they also asked their Facebook subscribers to describe Barack Obama, who was unopposed for the Democratic nomination.

In 2015, in the run-up to the 2016 election, YouGov.com, a global online community that promotes citizen participation in government, conducted a similar survey in which visitors to the organization's website were asked to characterize some of the leading presidential candidates in one word (at the time, there was some indication that Mitt Romney would join the race). Separate word clouds were constructed from the responses of people who liked and disliked each candidate.

Often, the stimulus information for person perception also comes in verbal form, as a list of traits and other descriptors. This is certainly the case with the self-descriptions that appear in "personals" ads in newspapers and magazines. But it is also true when we describe other people. Consider this passage from the Autobiography of Mark Twain, in which the author describes the countess who owned the villa in Florence where he and his family stayed in 1904:

"excitable, malicious, malignant, vengeful, unforgiving, selfish, stingy, avaricious, coarse, vulgar, profane, obscene, a furious blusterer on the outside and at heart a coward."

Or, this description of Osama Bin Laden, which the former chief of the CIA's "Bin Laden Issues Station" has endorsed as a "reasonable biographical sketch" of the man.


When Fiske and Cox (1979) coded people's open-ended descriptions of other people, they identified six major categories:

Because verbal lists of traits are easy to compose and present to subjects, many studies of person perception begin with traits, and proceed from there. This is reasonable, because so much of our social knowledge is encoded and transmitted via language.

Actually, the study of person perception began before 1954, with the work of Solomon Asch (1946). Like Lewin (about whom you've already heard), and Fritz Heider (about whom you'll hear a lot in the future), Asch was a German refugee from Hitler's Europe. And like them, he was heavily influenced by European Gestalt psychology. Much of Asch's early work was on aspects of nonsocial perception, but he brought the Gestalt perspective to bear on problems of social psychology in his classic textbook, Social Psychology (1952), which was the first social psychology text to be written with a unifying cognitive theme running throughout.

Asch (1946) set out the problem of social perception as follows:

[O]rdinarily our view of a person is highly unified. Experience confronts us with a host of actions in others, following each other in relatively unordered succession. In contrast to this unceasing movement and change in our observations we emerge with a product of considerable order and stability.

Although he possesses many tendencies, capacities, and interests, we form a view of one person, a view that embraces his entire being or as much of it as is accessible to us. We bring his many-sided, complex aspects into some definite relations....

How do we organize the various data of observation into a single, relatively unified impression?

How do our impressions change with time and further experiences with the person?

What effects in impressions do other psychological processes, such as needs, expectations, and established interpersonal relations, have?

In addressing these questions, Asch set out two competing theories:

Obviously, as a Gestalt psychologist, Asch had a pre-theoretical preference for the latter theory.

In order to study the process of person perception, Asch (1946) invented the impression-formation paradigm. He presented subjects with a trait ensemble, or a list of traits ostensibly describing a person (the target) -- varying the content of the ensemble, the order in which traits were listed, and other factors. The subjects were asked to study the trait ensemble, and then to report their impression of the target in free descriptions, adjective checklists, or rating scales.

Asch's first experiment compared the impressions engendered by two slightly different trait ensembles. Subjects were presented with one of two trait lists, which were identical except that target A was described as warm while target B was described as cold.


After studying the trait ensemble, the subjects reported their impressions in terms of a list of 18 traits, presented as bipolar pairs such as generous-ungenerous.


The two ensembles generated two quite different impressions, with A perceived in much more positive terms than B. There were significant differences between the two impressions on 10 of the 18 traits in subjects' response sets. A later experiment, varying only intelligent-unintelligent, yielded similar results.

But when the experiment was repeated (Experiment 3), with the words polite and blunt substituted for warm and cold, there were relatively few differences between the two impressions.


From these and related results, Asch concluded that traits like warm-cold and intelligent-unintelligent were central to impression formation, while traits like polite-blunt were not. In Asch's view, central traits are qualities that, when changed, affect the entire impression of the person. Other traits are more peripheral, in that they make little difference.

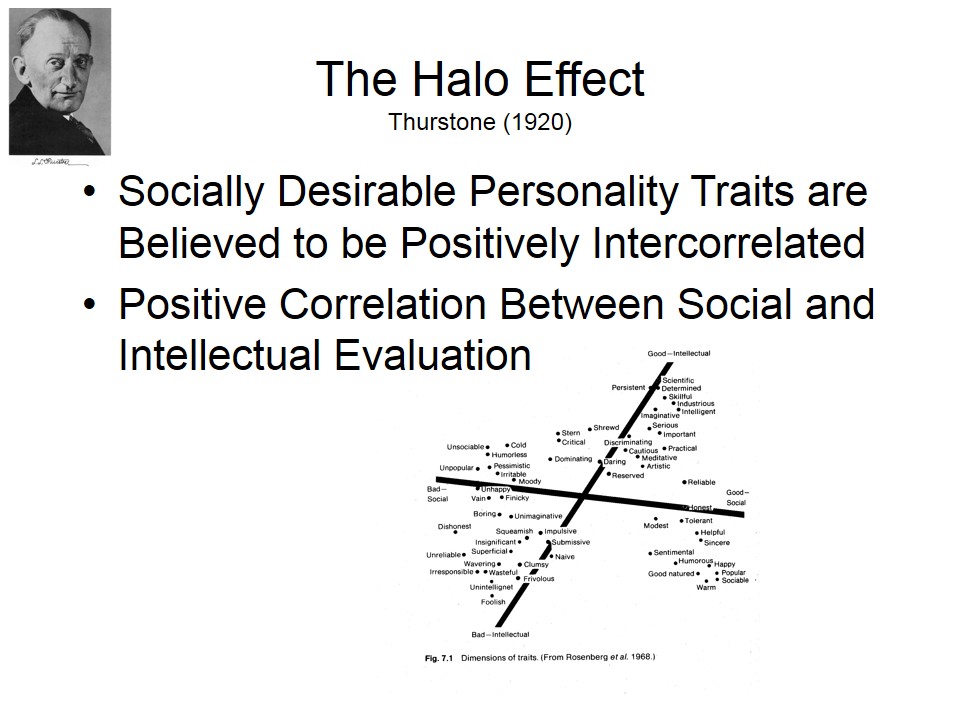

Although being described as warm rather than cold led the target to be described in highly positive terms, Asch distinguished the effect of central traits from the halo effect described by Thorndike, by which targets described with one positive trait tend to be ascribed other positive traits as well. Being described as warm rather than cold does not lead to an undifferentiated positive impression; the warm-cold effect is more differentiated than that.

As proof, Asch pointed out that warm-cold is not always central to an impression. In his Experiment 2, where warm and cold were embedded in a different trait ensemble, there were few differences between the resulting impressions. If anything, the person was perceived as somewhat dependent, rather than in the glowingly positive terms that emerged from Experiment 1.


Consistent with Gestalt views of perception, the effect of one piece of information (whether the person is warm or cold) depends on the entire field in which that information is embedded. To explain why traits are sometimes central and other times peripheral, Asch offered the change of meaning hypothesis, which holds that the total environmental surround changes the meaning of the individual elements that comprise it. Remember, for Gestalt psychologists, the distinction between figure and ground is blurry, because both figure and ground are integrated into a single unified perception. Perception of the figure affects perception of the background, and perception of the background affects perception of the figure.

In addition to studying the semantic relations among stimulus elements, Asch also studied their temporal relations. After all, he argued, impression-formation is extended over time: as we gradually accumulate knowledge about a person, our impression of that person may change. In his Experiment 6, Asch presented subjects with two identical trait ensembles, except that intelligent was the first trait listed for target A, and the last trait listed for target B. The two impressions differed markedly, revealing an order effect in impression formation.

In order to explain order effects, Asch held that the initial terms in the trait ensemble set up a "direction" that influences the interpretation of the later ones. The first term sets up a vague but directed impression, to which later characteristics are related, resulting in a stable view of the person -- just as our perception of a moving object remains stable, even though our perspective on it may change over time.

Taken together, Asch's studies illustrate principles of person perception that are familiar from the Gestalt view of perception in general. The whole percept is greater than the sum of its stimulus parts, because the elements interact with each other; just as the perception of the individual stimulus elements influences perception of the entire stimulus array, so the perception of the entire stimulus array influences the perception of the individual stimulus elements.

Asch's 1946 experiments set the agenda for the next 20 to 30 years of research on person perception and impression formation, which basically sought clarification on questions originally posed by Asch himself:

Interestingly, however, a recent large-scale study failed to replicate one of Asch's findings: the "primacy of warmth" effect, by which warm-cold is not only a central trait, but more important to impression-formation than the other big central trait, intelligent-unintelligent. Nauts et al. (2014) carefully repeated Asch's (1946) procedures (for his Studies I, II, and IV), in a sample of 1140 subjects run online via Mechanical Turk.

So, just to be clear, Nauts et al. confirmed that warm-cold and intelligent-unintelligent are central to impressions of personality; but they failed to find, as Asch claimed, that warm-cold was more important than intelligent-unintelligent.

- Despite the impression given by their title, Nauts et al. actually replicated the primary findings of Asch's study.

- Content analysis of the subjects' open-ended descriptions of the targets (a quantitative procedure that was not available to Asch in 1946) showed that subjects used the terms warm, cold, and intelligent more often than any other trait term. This is evidence for the importance of these dimensions to impression-formation.

- In addition, subjects who saw warm (cold) in the trait ensemble described the targets in more (less) positive terms, compared to those who saw polite (blunt).

- So, how could Nauts et al. claim a failure to replicate Asch?

- It turned out that warm-cold was not used more often than intelligent-unintelligent in subjects' impressions, contradicting the "primacy of warmth" hypothesis. In fact, intelligent-unintelligent appeared more often in the impressions than warm-cold.

- When subjects were asked to rank how important the various items in the trait ensemble were to their overall impressions, they ranked intelligent-unintelligent higher than warm-cold.

Asch's distinction between a central and a peripheral trait was made on a purely empirical basis: He discovered that some traits, such as warm-cold and intelligent-unintelligent, exerted a disproportionate effect on impressions of personality, while others, such as polite-blunt, did not. But although he could predict the effects of central traits on impressions, he had no theory that would enable him to predict which traits would be central, and which peripheral.

So what makes a trait central as opposed to peripheral? Julius Wishner (1960) offered a plausible answer. He administered a 53-item adjective checklist, derived from the checklists that Asch had used, asking his subjects to describe their acquaintances (often, their teacher in introductory psychology). Using the power of high-speed computers that simply were not available to Asch in 1946 (and which, frankly, are dwarfed by the computational power of the simplest laptop or even palmtop computer today), Wishner calculated the correlations between each trait and every other trait in the list. Examining the matrix of trait intercorrelations, Wishner observed that traits such as warm-cold and intelligent-unintelligent, which Asch had identified as central, had significant correlations with many other traits (e.g., mean rs = .62 and .56, respectively); by contrast, peripheral traits such as polite-blunt had relatively few correlations (e.g., mean r = .43).

The upshot of Wishner's study is that central traits carry more information than peripheral traits, in that they have more implications for unobserved features of the person. By virtue of their high intercorrelations with other traits, knowing that a person is warm or intelligent tells us a great deal about the person, while knowing that a person is polite does not. In the same way, a change in one central trait, from warm to cold or from intelligent to unintelligent, implies changes in many other traits as well, while a change from polite to blunt does not.

Wishner's findings also explained why a trait like warm-cold was not always central: it depends on the precise list of traits on which subjects make their ratings. Any trait from the stimulus ensemble will function as a central trait, so long as it is highly correlated with many of the traits on the response list.
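Wishner's idea can be sketched in a few lines of code. The example below is only an illustration, not his actual procedure or data: the ratings are invented, the trait names are a tiny hypothetical subset, and the helper names `pearson` and `centrality` are assumptions introduced here. It scores a stimulus trait by its mean absolute correlation with the traits on a response list.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def centrality(stimulus_trait, response_traits, ratings):
    """Mean absolute correlation of one stimulus trait with the response list."""
    rs = [abs(pearson(ratings[stimulus_trait], ratings[t]))
          for t in response_traits]
    return sum(rs) / len(rs)

# Invented ratings of six acquaintances on 1-7 scales (hypothetical data).
ratings = {
    "warm":     [7, 6, 5, 3, 2, 1],
    "polite":   [4, 6, 3, 5, 4, 5],
    "generous": [6, 7, 5, 3, 2, 2],
    "happy":    [7, 5, 6, 2, 3, 1],
    "sociable": [6, 6, 4, 3, 1, 2],
}
response_list = ["generous", "happy", "sociable"]

warm_c = centrality("warm", response_list, ratings)      # high in this toy data
polite_c = centrality("polite", response_list, ratings)  # low in this toy data
print(f"warm: {warm_c:.2f}  polite: {polite_c:.2f}")
```

Swapping in a different response list changes the scores, which is the point of Wishner's argument: in this sketch, centrality belongs to the pairing of stimulus trait and response list, not to the trait alone.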

Wishner's solution to the problem of central traits made his paper a classic in the person-perception literature, but it is not completely satisfactory. For example, it might be nice if "centrality" was a property of the trait itself, and did not depend on the context provided by the response set (though, frankly, Asch, as a Gestalt psychologist, might not think this was so desirable!). Are there any traits that are inherently central?

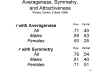

Seymour Rosenberg (1968), making use of even more computational power than had been available to Wishner, factor-analyzed the intercorrelations among a large number of trait terms, yielding a hierarchical structure consisting of subordinate traits, primary traits, secondary and even tertiary traits. He discovered that Asch's central traits tended to load highly on two very broad superordinate factors of personality ratings representing two dimensions:

Interestingly, these two "superfactors" are not entirely independent of each other: people who tend to be described in positive social terms also tend to be described in positive intellectual terms. There is a "super-duper" factor of evaluation which runs through the entire matrix of personality traits, and gives rise to Thorndike's halo effect.

Pulling all of this material together, we can conclude that central traits have two properties:

Talking with Strangers

Gladwell also discusses other, more prosaic examples of egregious misunderstanding, which he explains with two basic principles, both drawn from social-science research.

Gladwell probably makes too much of two relatively small principles (he made similar mistakes in Blink and The Tipping Point), but his book only underscores the importance of understanding both how we perceive other people, and how accurate, or inaccurate, those perceptions can be.

While a great deal of personality research has been devoted to determining the hierarchical structure of personality traits (e.g., the Big Five structure of neuroticism, extraversion, agreeableness, conscientiousness, and openness to experience), it seems likely that laypeople possess some intuitive knowledge of the structure of personality as well. In fact, Asch's concept of the central trait assumes that laypeople possess some intuitive knowledge about the relations among personality traits. If they did not, all traits would be created equal, none more central, or more peripheral, to impression formation than any other.

The term implicit personality theory (IPT) was coined by Bruner and Tagiuri (1954 -- they were busy that year) to refer to "the naive, implicit theories of personality that people work with when they form impressions of others". Bruner and Tagiuri understood that person perception entailed "going beyond the information given" by combining information extracted from the stimulus with information supplied by pre-existing knowledge. In other words, in the course of person perception the person must make use of knowledge that he or she possesses about the relations among various aspects of personality.


People's implicit theories of personality may be quite different from the formal theories of personality researchers. In fact, compared against formal theories resulting from methodologically rigorous research, they may even be wrong. But right or wrong, they are used in the course of person perception.

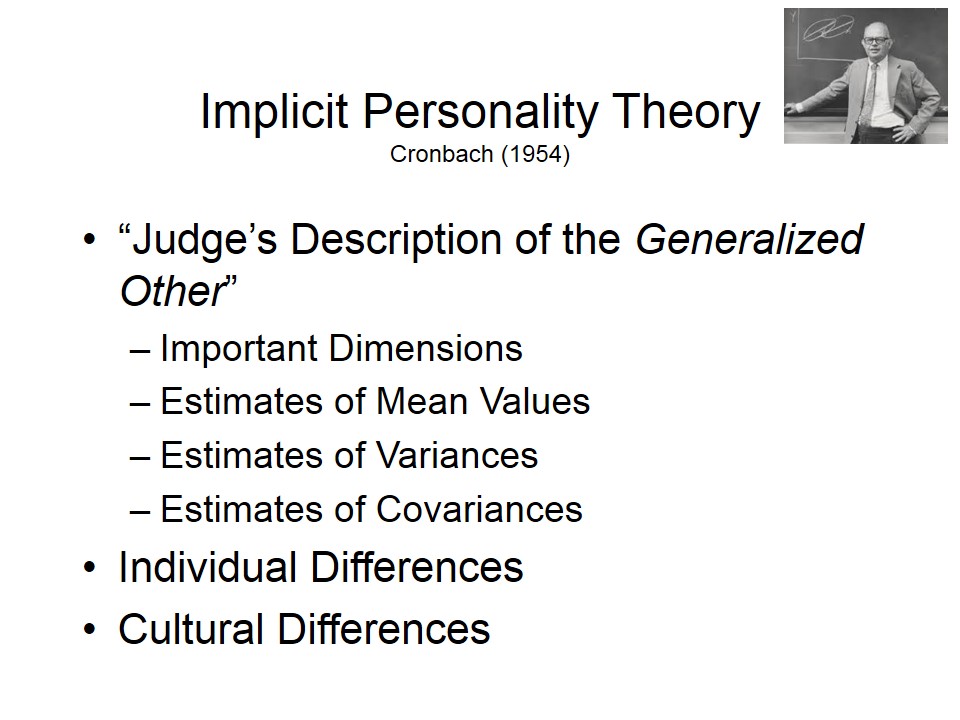

The domain of IPT was further explicated by L.J. Cronbach in his contribution to a 1954 book on person perception edited by Bruner and Tagiuri (it was a very good year for person perception). Cronbach discussed IPT in the context of personality ratings made by judges in traditional trait-oriented personality research, and suggested that in addition to information derived from the judge's observations of the target, the ratings will be influenced by the "Judge's description of the generalized Other" -- that is, by the judge's beliefs about what people are like in general. In Cronbach's view, IPT consists of several elements:


Cronbach believed that IPT was widely shared within a culture, but he acknowledged that there might also be individual differences in IPT. For example, some people might assume that most people are friendly and well-meaning, and that becomes the "default option" when they make judgments about some specific person; but other people might assume that most people are hostile and aggressive. In addition, he suggested that there may be cultural differences in IPT. For example, within Western culture, IPT seems to be centered on clusters of traits, or stable individual differences in behavioral dispositions; but other cultures might have more "situationist" or "interactionist" views of personality.

Following Cronbach, an expanded concept of implicit personality theory might look like this:

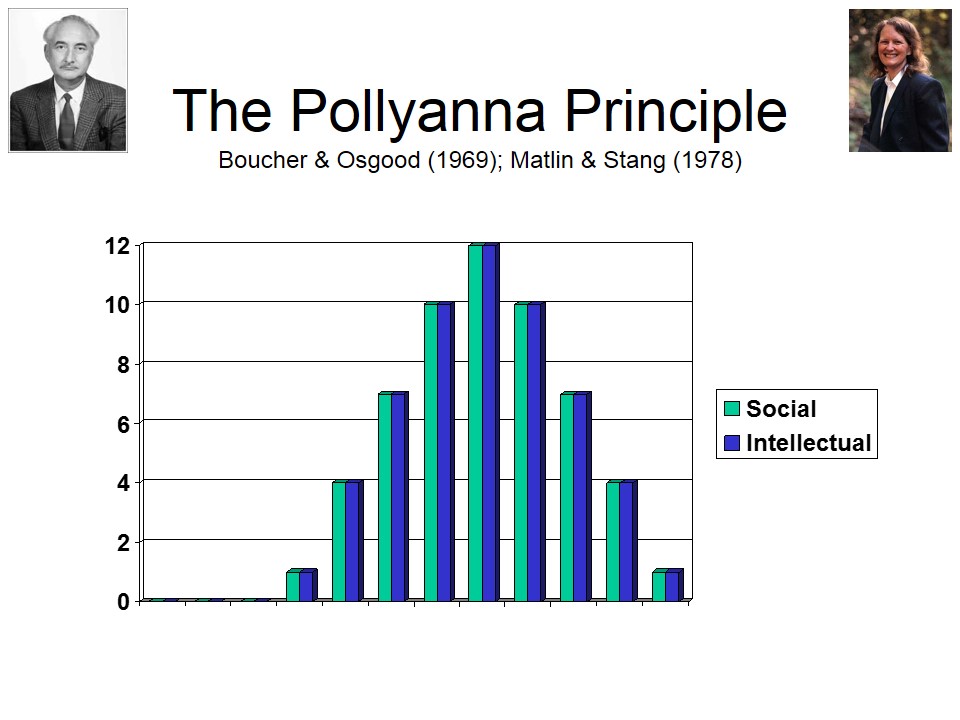

For example, people might think that Rosenberg's traits of social and intellectual "good-bad" are normally distributed, with most of the population falling right about the midpoint.


Osgood's "Pollyanna Principle" reflects the assumption that the distribution of positive traits in the population is skewed toward the positive end of the continuum.


Or, people might believe that there is a bimodal distribution of social and intellectual goodness, with most people tending toward "good" but a substantial minority tending toward "bad".


Thorndike's "halo effect" reflects the assumption that socially desirable traits, whether social or intellectual, are positively correlated with each other, as in the two-dimensional structure uncovered by Rosenberg.


Note for statistics mavens: the correlations between variables can be represented graphically by vectors, with the angles between vectors reflecting the correlation between them such that r is equal to the cosine of the angle at which the vectors meet (this is how factor analysis can be done geometrically). Thus, two variables that are uncorrelated with each other (r = 0.0) are represented by two vectors that meet at right angles (cos 90° = 0); two variables that are perfectly correlated (r = 1.0) are represented by two vectors that overlap completely (cos 0° = 1.0); and two variables that are highly but not perfectly correlated, say .60 < r < .65, are represented by vectors that meet at an angle of about 51° (cos 51° ≈ 0.63).
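The cosine relation in the note above is easy to check numerically. The following is a minimal sketch using only Python's standard `math` module; the function names `angle_from_r` and `r_from_angle` are introduced here purely for illustration.

```python
import math

def angle_from_r(r):
    """Angle (in degrees) between two variable vectors whose correlation is r."""
    return math.degrees(math.acos(r))

def r_from_angle(degrees):
    """Correlation implied by two vectors meeting at the given angle."""
    return math.cos(math.radians(degrees))

# Uncorrelated variables meet at right angles; perfectly correlated ones overlap.
print(angle_from_r(0.0))   # right angle
print(angle_from_r(1.0))   # complete overlap
# Correlations between .60 and .65 correspond to angles of roughly 49 to 53 degrees.
print(angle_from_r(0.60), angle_from_r(0.65))
```

Note that a 45° angle corresponds to a correlation of about .71, so correlations in the low .60s imply vectors that are somewhat further apart than that.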

Implicit theories of personality have been studied through the application of multivariate statistical methods, such as factor analysis, multidimensional scaling, and cluster analysis to various types of data:

But because these techniques are very time-consuming, developments in implicit personality theory had to await the proper technology, particularly the availability of cheap, high-speed computational power. In the 1960s, as appropriate computational facilities became widely available, two competing models of implicit personality theory began to emerge.

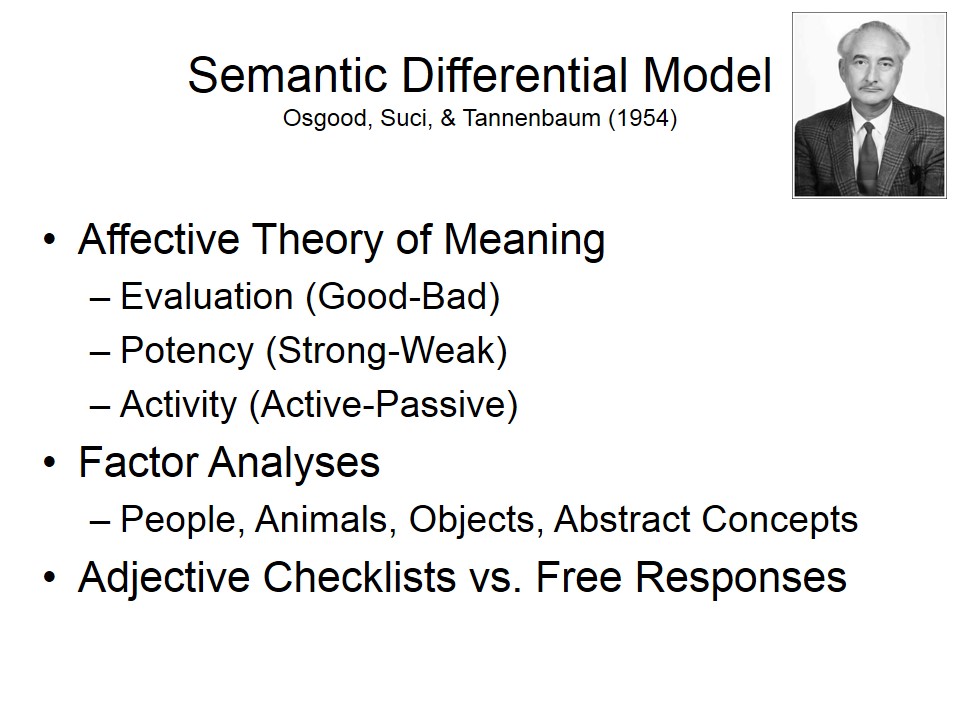

The first of these was a semantic differential model of IPT based on Charles Osgood's tridimensional theory of meaning (e.g., The Measurement of Meaning by Osgood, Suci, & Tannenbaum, 1957). According to Osgood, the meaning of any word can be represented as a point in a multi-dimensional space defined by three vectors:


In this EPA scheme, closely related words are represented by points that lie very close to each other in this space. Osgood's method was to have subjects rate objects and words on a set of bipolar adjective dimensions. When these ratings were factor analyzed, the three dimensions of evaluation, potency, and activity came out regardless of the domain from which the objects and words were sampled -- people, animals, inanimate objects, even abstract concepts. If these three dimensions are the fundamental dimensions of meaning, they are likely candidates for implicit personality theory -- the cognitive framework for giving meaning to people and their behaviors -- as well.
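To make the geometry concrete, here is a small sketch in which words are points in an evaluation-potency-activity space and relatedness is read off as Euclidean distance. The coordinates are invented for illustration (they are not Osgood's published values), and the names `epa` and `epa_distance` are assumptions of this example.

```python
import math

# Hypothetical (evaluation, potency, activity) coordinates on -3..+3 scales.
# These numbers are made up for illustration only.
epa = {
    "hero":     (2.5, 2.0, 1.5),
    "champion": (2.3, 2.2, 1.8),
    "villain":  (-2.5, 1.5, 1.0),
}

def epa_distance(word_a, word_b):
    """Euclidean distance between two words in the three-dimensional EPA space."""
    return math.dist(epa[word_a], epa[word_b])

near = epa_distance("hero", "champion")  # similar on all three dimensions
far = epa_distance("hero", "villain")    # similar potency/activity, opposite evaluation
print(f"hero-champion: {near:.2f}  hero-villain: {far:.2f}")
```

In this toy space, "hero" and "villain" are far apart almost entirely because of the evaluation coordinate, which anticipates the argument that evaluation does most of the work.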

The principal problem with the semantic differential model is that the best evidence for the three factors came from studies employing adjective checklists, where the adjectives on the list were deliberately chosen to represent evaluation, potency, and activity. Accordingly, it seems possible that evaluation, potency, and activity came out of analyses of adjective ratings because, however unintentionally, they were built into these ratings to begin with. Interestingly, Osgood's three dimensions were not clearly obtained from free-response data which was not constrained by the experimenter's choices. Left to their own devices, without the experimenter's constraints, subjects' cognitive structures look somewhat different from Osgood's scheme.

For example, Rosenberg and Sedlak (1972) asked subjects to provide free descriptions of 10 people each. These investigators then selected the 80 traits that occurred most frequently in these descriptions, and then submitted the 80x80 matrix of trait co-occurrences to a technique of multivariate analysis called multidimensional scaling (why only 80 traits? An 80x80 matrix, generating more than 3,000 unique correlation coefficients, exhausted the computational power available at the time). They found that Osgood's evaluation and potency factors were highly correlated (r = .97): people who were perceived as good were also perceived as strong. Osgood's activity factor was quite weak in the data, but was also positively correlated with the evaluation and potency factors (r = .57). Accordingly, Rosenberg & Sedlak concluded that the evaluation dimension dominated people's implicit theories of personality.
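The bookkeeping behind a trait co-occurrence matrix can be sketched as follows. This is a simplified illustration, not Rosenberg and Sedlak's actual scoring procedure: the descriptions are invented, and the helper names `unique_pairs` and `cooccurrence` are assumptions of this example. It counts how often two traits appear in the same description, and shows why an 80-trait list yields more than 3,000 unique pairs.

```python
from collections import Counter
from itertools import combinations

def unique_pairs(n_traits):
    """Number of distinct trait pairs in an n x n symmetric matrix (off-diagonal)."""
    return n_traits * (n_traits - 1) // 2

def cooccurrence(descriptions):
    """Count how often each pair of traits appears in the same description."""
    counts = Counter()
    for traits in descriptions:
        # Sort so each pair is stored under a single, alphabetized key.
        for a, b in combinations(sorted(set(traits)), 2):
            counts[(a, b)] += 1
    return counts

# Invented free descriptions of four target persons (hypothetical data).
descriptions = [
    ["kind", "smart", "funny"],
    ["kind", "funny"],
    ["smart", "lazy"],
    ["kind", "smart"],
]
pairs = cooccurrence(descriptions)

print(unique_pairs(80))          # size of the problem for an 80-trait list
print(pairs[("funny", "kind")])  # co-occurrence count for one pair
```

A matrix of counts like this (or of correlations derived from it) is the kind of input that multidimensional scaling takes.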

Based on free-description data such as this, Rosenberg proposed an alternative evaluation model of IPT. He argued that evaluation was the only perceptual dimension common to all individuals, and that any additional dimensionality came from correlated content areas such as social and intellectual evaluation. The figure graphically represents the loadings on the two dimensions of a representative set of trait adjectives. Note that Asch's central traits, warm-cold and intelligent-unintelligent, lie fairly close to the axes that define the two-dimensional space.

Kim and Rosenberg (1980) offered a direct test of the two models. In the Rosenberg and Sedlak (1972) study, and other studies of implicit personality theory, individual subjects rated only a single person, and then the subjects' responses were aggregated, so that the resulting IPT structure reflected the average "Judge's description of the generalized Other". But averaging may obscure the structures that exist in individual Judges' minds. It is entirely possible that individual judges have something like the Osgood structure in their heads, but when their responses are aggregated, only evaluation remains. Accordingly, Kim and Rosenberg decided to compare the adequacy of the two models at the individual level (again, this is the kind of analysis that can only be done when computing resources are cheap). Because multivariate analysis requires multiple responses, they had subjects describe themselves and 35 other people that they knew well; and they collected both free descriptions and ratings on an adjective checklist. Multidimensional scaling of the individual subject data revealed that something resembling Osgood's three-dimensional EPA structure appeared in only 8 of the 20 subjects studied, and in 3 of these 8, the potency and activity dimensions were not independent of evaluation. More important, the evaluation dimension emerged from every individual subject's data set.

Kim and

Rosenberg concluded that Osgood's EPA structure was an

artifact of aggregation across subjects. All subjects use

evaluation as a dimension for person perception; some use

potency, some use activity, and some use both, and these

dimensions are strong enough to create the appearance of a major

dimension when data is aggregated across subjects. Potency

and activity are positively correlated for some subjects, and

negatively correlated for others; these tendencies balance out,

and give the appearance that potency and activity are

independent of each other and of evaluation. But this is

an illusion produced by data aggregation. In their view,

only the evaluation dimension is genuine; the potency and

activity dimensions are largely artifacts of method.

Fiske and her colleagues (Trends in Cognitive Sciences, 2007) have drawn new attention to Rosenberg's work by claiming that warmth and competence are universals in social cognition, which exert a powerful influence on how we interact with other people. In particular, Fiske has argued that various combinations of warmth and competence characterize various out-group stereotypes. This is true in both "individualist" and "collectivist" (or "independent" and "interdependent") societies. Fiske has even argued that there is a specific module in the brain, located in the medial prefrontal cortex (MPFC), which constitutes a "social evaluation area". In any event, she and her colleagues have argued that assessments of warmth and competence are made automatically and unconsciously -- even though they may not necessarily be accurate.

Implicit theories of personality are like formal

scientific theories, except that they are "naive" and

"implicit". Recently, scientific research on personality

has focused on a five-factor model of personality structure

originally proposed by Norman (1963). In his research,

Norman examined subjects' ratings of other people on a

representative set of trait adjectives. Factor analysis

reliably revealed five dimensions of personality:

Goldberg

(1981) proposed that the Big Five comprised a universally

applicable structure of personality. By universally

applicable Goldberg meant that it could be used to assess

individual differences in personality under any circumstances:

Goldberg noted that the Big Five are so ubiquitous that they have been encoded in language, as familiar trait adjectives like extraverted and cultured. Of course, if ordinary "laypeople" (not just trained scientists) notice these dimensions enough to evolve words for them, the Big Five structure may exist in people's minds as well as their behavior. That is, the Big Five may well serve as the structural basis for people's implicit theories of personality, as well as a formal theory of personality structure.

Along

these lines, I have often thought of the Big Five as The Big

Five Blind Date Questions -- representing the kind of

information that we want to know about someone that we're

meeting for the first time, and will be spending some

significant time with:

If these are indeed

the kinds of questions we ask about people, then it seems like the

Big Five -- and not just a single dimension of evaluation --

resides in our heads as an implicit theory of personality.

As it happens, we can fit The Big Five into a hierarchical

structure of implicit personality theory. And in fact, there is some empirical evidence that The Big Five -- whatever its status as a scientific theory of personality -- serves as an implicit theory of personality as well.

The evidence comes from a

provocative study by Passini and Norman (1966), who asked

subjects to use Norman's adjective rating scales to rate total

strangers -- people they had never met before, and with

whom they were not permitted to interact during the ratings

session. The subjects were simply asked to rate others as

they "imagined" them to be. Nevertheless, factor analysis

yielded The Big Five, just as had earlier factor analyses of

ratings of people the subjects had known well. Note that

the Passini and Norman study violates the traditional assumption

of personality assessment: that there is some degree of

isomorphism between personality ratings and the targets' actual

behavior. In this case, the judges had no knowledge of the

targets' behavior. The Big Five structure that emerged

from their ratings was not in their targets' behavior -- simply

because they had no knowledge of their targets' behavior; but it

certainly existed in the judges' heads, as a "description of the

generalized Other".

Based on this evidence, it may be that the Big Five provides a somewhat more differentiated implicit theory of personality than the two-dimensional evaluation model promoted by Rosenberg and Sedlak. If so, we would have another answer to the question of what makes a central trait central. Just as R&S argued that central traits loaded highly on the two dimensions of evaluation, perhaps central traits load highly on one or the other of the Big Five dimensions of personality. Certainly that's true for warm-cold, which loads highly on extraversion, and intelligent-unintelligent, which loads highly on openness.

By now there have been many studies similar to that of Passini and Norman, all with similar results: every factor structure derived from empirical observations has been replicated by judgments of conceptual similarity. Thus, we do seem to carry around in our heads an intuitive notion concerning the structure of personality -- the co-occurrences among certain behaviors, the covariances among certain traits, the notion that certain things go together, and other things contradict each other. This conceptual structure -- this implicit personality theory -- is thus cognitively available to influence people's experience, thought, and action in the social world.

The

existence of implicit personality theory is interesting, but in

some sense it is also troublesome, because it raises a difficult

question that has long bedeviled theorists of perception in

general -- the question of realism vs. idealism:

But this kind of evidence is problematic. In principle, factor analysis should be applied to objective observations. But for pragmatic reasons, this is generally impossible in the domain of personality research, simply because it is very difficult to perform the systematic observations of behavior that are required for this purpose. Because we have no direct measurements of personality traits, factor analysis is generally applied to rating data -- subjective impressions of behavior and traits; judgments that rely heavily on memory.

The problem is the reconstructive nature of memory retrieval. Memory for the past is contaminated by expectations and inferences. When factor analysis is applied to memory-based ratings, therefore, we cannot be sure what the factor matrix represents: the structure residing in the personalities of the targets, or the structure residing in the minds of the raters.

The fact is, we know

from studies like Passini and Norman's that the structure of

personality -- and, specifically, the Big Five structure that is

so popular -- resides in the minds of raters.  Therefore,

it is possible that the structure of personality is to some

degree illusory -- in a manner somewhat resembling the Moon

illusion familiar to perception researchers. The moon

looks larger on the horizon than at zenith, even though it

isn't, because of "unconscious inferences" made by perceivers

that take account of distance cues in estimating size.

Perhaps personality raters make similar sorts of unconscious

inferences in rating other people's personalities (or their

own). Note that the existence of a moon illusion doesn't

imply that there is no moon. It simply means that the moon

isn't as big as it looks.

Similarly, in the realm of person perception, our expectations and beliefs can distort our person perceptions, and thus our person memories; in particular, our expectations and beliefs about the coherence of personality can magnify our perception of that coherence.

Research has already established that the structure of personality exists in the mind of the observer. The important question is whether it also has an independent existence in the world outside the mind. As in the moon illusion, we usually take a modified realistic view of perception -- that our perceptions are fairly isomorphic with the world. Accordingly, we may assume that our beliefs about personality are to some extent isomorphic with the actual structure of personality. But are they really?

The

controversy about the nature of implicit personality theory is

reflected in two competing hypotheses:

Thus, we

can test the accurate reflection hypothesis against the

systematic distortion hypothesis by comparing the structures

derived from three types of data:

One such

experiment, by Shweder and D'Andrade (1980), employed 11

categories of interpersonal behavior as target items: these were

behaviors such as advising, informing, and suggesting.

Shweder and D'Andrade

then constructed correlation matrices representing the structural

relations among all 11 behaviors. They then performed 7

different tests of the correspondence between the matrices.

For example, examining the correlations between parallel cells of the matrices, they observed the following pattern of correlations:

Aggregating

the results across the 7 different tests, they observed the

following pattern of correlations:

Systematic Distortion or Accurate Reflection?

Although the Shweder & D'Andrade study seems quite compelling, it has come under criticism from advocates of the accurate reflection hypothesis. In particular, UCB's own Prof. Jack Block has been an ardent defender of the notion that memory-based ratings, and implicit theories of personality, are accurate reflections of external reality. See, in particular, an exchange between Shweder and D'Andrade and Block, Weiss, and Thorne that appeared in the Journal of Personality & Social Psychology for 1979.

To be honest, the systematic distortion hypothesis is somewhat paradoxical, because it seems to refute the realist assumption that there is a high degree of isomorphism between the structure of external reality and our internal mental representations of it. Where does implicit personality theory come from, if not from the world outside? According to the ecological perspective on semantics, "the meanings of words are in the world" (a quote from Ulric Neisser): our cognitive apparatus picks up the structure of the world, and so our mental representations are faithful to that structure. But they apparently aren't, at least in the case of person perception, where it is very clear that our cognitive structures depart radically from the real world that they attempt to represent.

So where else might implicit personality theory come from? How do our behaviors and traits become schematized, organized, and clustered into coherent knowledge structures?

D'Andrade

and Shweder have suggested a number of possibilities:

Regardless of the ontological status of implicit personality theory, Asch's initial question remains on point: How do we integrate information acquired in the course of person perception into a unitary impression of the person along some dimension? Asch (1946) considered two possibilities: either we simply sum up a list of a person's individual features to create a unitary impression, or the unitary impression is some kind of configural gestalt. Asch clearly preferred the gestalt view to the additive view, a preference that integrated social with nonsocial perception, but his impression-formation paradigm has permitted later investigators to consider simpler alternatives.

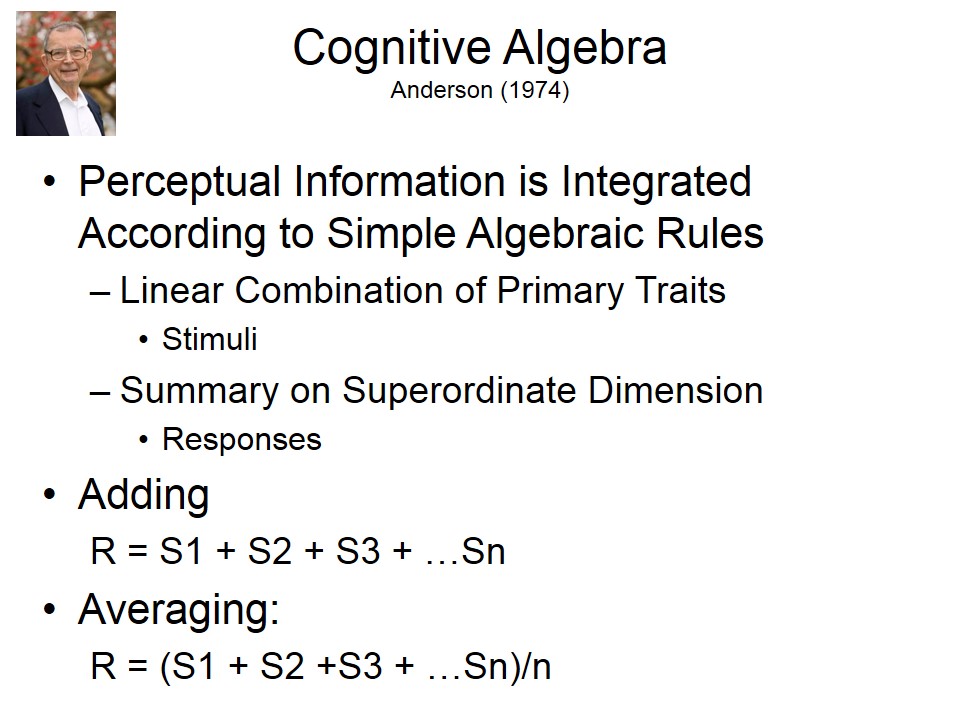

Chief among these investigators

has been Norman Anderson (1974), who has promoted cognitive

algebra as a framework for impression formation and for

cognitive processing in general. According to Anderson,

perceptual information is integrated according to simple

algebraic rules, which take information (about, say, primary

traits) and perform a linear (algebraic) combination that

yields a summary of the trait information in terms of a

superordinate dimension (say, a superordinate trait).

In

particular, Anderson has considered two very simple algebraic

models (where S = stimulus information and R = the impression

response):

Anderson's

basic procedure follows the Asch paradigm:

Anderson's

basic procedure follows the Asch paradigm: Anderson's experiments include critical

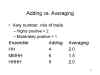

comparisons that afford a test of the adding and averaging

models of impression formation. Assume that the trait

ensemble includes a mix of traits:

Anderson's experiments include critical

comparisons that afford a test of the adding and averaging

models of impression formation. Assume that the trait

ensemble includes a mix of traits:

Thus, the adding and averaging functions give the following values to various trait ensembles:

| Trait Ensemble | Adding | Averaging |
|---|---|---|
| HH | 4 = 2+2 | 2.0 = (2+2)/2 |
| MMHH | 6 = 1+1+2+2 | 1.5 = (1+1+2+2)/4 |
| HHHH | 8 = 2+2+2+2 | 2.0 = (2+2+2+2)/4 |

Thus, the adding rule predicts that both the HHHH and the MMHH ensemble will be preferred to the HH ensemble; but the averaging rule predicts no difference between HH and HHHH, and that both will be preferred to MMHH.
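The two rules can be checked with a few lines of Python. This is only a minimal sketch, not Anderson's own procedure: the scale values (H = 2, M = 1) are read off the table above, and the names `SCALE`, `adding`, and `averaging` are illustrative.

```python
# Illustrative scale values, read off the table above (H = 2, M = 1).
SCALE = {"H": 2, "M": 1}


def adding(ensemble):
    """Adding rule: the impression is the sum of the trait values."""
    return sum(SCALE[trait] for trait in ensemble)


def averaging(ensemble):
    """Averaging rule: the impression is the mean of the trait values."""
    return adding(ensemble) / len(ensemble)


if __name__ == "__main__":
    for ensemble in ("HH", "MMHH", "HHHH"):
        print(ensemble, adding(ensemble), averaging(ensemble))
```

Under the adding rule the ensembles are ordered HHHH > MMHH > HH; under the averaging rule HH and HHHH tie, and both exceed MMHH.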

When Anderson (1965)

actually performed the comparison, the empirical results were a

little surprising:

Anderson

resolved the conflict by adding three new assumptions:

In a revised test,

Anderson set aside the matter of stimulus weightings.

Instead of asking subjects whether they were biased positively

or negatively, he simply assumed that positive and negative

biases would average themselves out, so that the average subject

could be considered to be neutral at the outset (in fact, there

is probably an average positive bias, but the essential point

remains intact). Accordingly, a value of 0 was entered

into the adding and averaging equations, along with the values

of the stimulus information. Of course, adding 0 does

nothing to sums; but it can have a marked effect on

averages. Compare, for example, the following table to the

table just above:

| Trait Ensemble | Adding | "Weighted" Averaging |
|---|---|---|
| HH | 4 = 0+2+2 | 1.33 = (0+2+2)/3 |
| MMHH | 6 = 0+1+1+2+2 | 1.20 = (0+1+1+2+2)/5 |
| HHHH | 8 = 0+2+2+2+2 | 1.60 = (0+2+2+2+2)/5 |
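The effect of the neutral starting point can be sketched in Python as well. This is an illustration under stated assumptions, not Anderson's full weighted-averaging model: the initial impression is fixed at 0, it and every trait carry equal weight (so the starting value simply counts as one more item in the average), and the names `INITIAL`, `adding_with_initial`, and `averaging_with_initial` are hypothetical.

```python
# Illustrative scale values (H = 2, M = 1) and an assumed neutral
# initial impression of 0, as in the revised test described above.
SCALE = {"H": 2, "M": 1}
INITIAL = 0


def adding_with_initial(ensemble):
    """Adding rule: the initial impression plus the sum of the trait values."""
    return INITIAL + sum(SCALE[trait] for trait in ensemble)


def averaging_with_initial(ensemble):
    """Averaging rule: the initial impression is averaged in as one more item."""
    return adding_with_initial(ensemble) / (len(ensemble) + 1)


if __name__ == "__main__":
    for ensemble in ("HH", "MMHH", "HHHH"):
        print(ensemble,
              adding_with_initial(ensemble),
              round(averaging_with_initial(ensemble), 2))
```

With the starting value included, averaging no longer predicts a tie between HH and HHHH: it orders the ensembles HHHH > HH > MMHH, whereas adding still orders them HHHH > MMHH > HH.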

When Anderson (1965)

actually performed the comparison, the empirical results were

less confusing:

So, this

experiment seemed to offer decisive evidence favoring the

weighted averaging model of impression formation. In the

weighted averaging model, the perceiver's final impression

builds up slowly, and is heavily constrained by his or her

initial bias and first impressions.

Cognitive Algebra as Mathematical Modeling

Anderson's cognitive algebra is an attempt to represent a basic cognitive function as a mathematical formula. As such, cognitive algebra is intended to be a formal mathematical model of the impression-formation process. So, if you've always been wary of mathematical modeling (perhaps because you've thought it was too dry, or perhaps because of a little math phobia), but you've followed the arguments about cognitive algebra so far, then Congratulations! You've just successfully worked your way through a mathematical model of a psychological process.

Anderson's cognitive algebra, and especially the weighted-averaging rule, is an extremely powerful framework for studying social judgment. Cognitive algebra can be applied to any social judgment, so long as the stimulus attributes are quantifiable, and so long as the perceiver's judgment response can be expressed in numerical terms.

But

cognitive algebra also has some problems:

Despite these problems, lots of work in social cognition has been done within the framework of cognitive algebra -- so much so that we could devote an entire course to it. But we won't.

Another prominent model

of person perception is the Social Relations Model (SRM) developed by David Kenny

(1994; for earlier versions, see Kenny & LaVoie, 1984;

Malloy & Kenny, 1986; Kenny, 1988), based on the early work

of the existential psychiatrist (before he became an "anti-psychiatrist") R.D.

Laing (Laing et al., 1966).

Link to an overview of the Social Relations Model, based on Kenny's 1994 book, Interpersonal Perception: A Social Relations Analysis. For critical reviews of the SRM, see the review of Kenny's book by Ickes (1996), as well as the book review essays published in Psychological Inquiry (Vol. 7, #3, 1996).

The SRM is focused on dyadic relations -- that is, relations between two people, say Andy and Betty, and in particular how these two people perceive each other. For purposes of illustration, let's suppose that Andy perceives Betty as high in interpersonal warmth. In Kenny's analysis, this perception -- or impression -- has a number of components, such that A's perception of B's warmth is given by the sum of four quite different perceptions (actually, five, depending on how you count):

Because the

relationship among the components is additive, you can think of

the SRM as a version of Anderson's additive model for

impression-formation. But because the addition includes a

constant, which reflects A's biased view of people in

general, it's actually a weighted additive model.

But it's not necessarily a strictly additive model (which would

go against Anderson's results, which favor averaging). The

relationship component may well be achieved by an

averaging process. We're not going to get into that

detail: it will be enough just to explore the surface features

of the SRM.

This is because the SRM is actually quite complicated. Kenny has built into the model two features that are not found in other, simpler models of person perception:

In applying the SRM, Kenny prefers to employ a round-robin research design, in which each person in a group rates everyone else in the group, as well as themselves. The particular rating scales can be selected for the investigator's purposes, but we might imagine that the ratings are of likability (Anderson), warmth and competence (Rosenberg, Fiske), or the Big Five traits of extraversion, neuroticism, agreeableness, conscientiousness, and openness to experience. Of course, selection of the traits to be rated matters a great deal: results may differ greatly depending on whether subjects are forming impressions of a person's masculinity, sexual orientation, likability, or extraversion.

Note that in the round-robin design, the perceiver is also a target, and the target also a perceiver -- just as in the General Social Interaction Cycle, the actor is also a target and the target also an actor. The SRM treats the individual as both subject and object, stimulus and response, simultaneously.

The mass of data from the round robin design is then decomposed into three components:

With the round-robin

design in hand, Kenny can proceed to address a number of

questions about interpersonal perception. Here are these questions, and short

answers, based on some 45 studies reported in Kenny's 1994

monograph.

Like Anderson's cognitive algebra, Kenny's Social Relations Model is more a method than a theory. The round-robin design, coupled with sophisticated statistical tools, can be used to partition person perception into its various components, and so to answer a wide variety of questions about a wide variety of topics in social cognition.
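The idea of partitioning round-robin ratings can be illustrated with a toy example. The sketch below is a deliberate simplification and not Kenny's estimation procedure: it keeps the self-ratings on the diagonal and uses plain row and column means, whereas SRM analyses of real round-robin data rely on more elaborate formulas. The labels "perceiver", "target", and "relationship" effect follow standard SRM terminology; the function name `decompose_round_robin` and the example ratings are hypothetical.

```python
def decompose_round_robin(ratings):
    """Split an n x n ratings matrix into additive components.

    ratings[i][j] is perceiver i's rating of target j.
    Returns (grand_mean, perceiver_effects, target_effects, relationship),
    where each rating equals
    grand_mean + perceiver_effects[i] + target_effects[j] + relationship[i][j].
    """
    n = len(ratings)
    grand_mean = sum(sum(row) for row in ratings) / (n * n)
    # Perceiver effect: how far a rater's average rating departs from the grand mean.
    perceiver = [sum(ratings[i]) / n - grand_mean for i in range(n)]
    # Target effect: how far a person's average received rating departs from it.
    target = [sum(ratings[i][j] for i in range(n)) / n - grand_mean
              for j in range(n)]
    # Relationship effect: what is left over for each specific pair
    # (in this naive version it also absorbs measurement error).
    relationship = [[ratings[i][j] - grand_mean - perceiver[i] - target[j]
                     for j in range(n)]
                    for i in range(n)]
    return grand_mean, perceiver, target, relationship


if __name__ == "__main__":
    # Hypothetical warmth ratings among three people (rows rate columns).
    example = [[5, 4, 3],
               [4, 3, 2],
               [6, 5, 7]]
    grand, perceiver, target, relationship = decompose_round_robin(example)
    print("grand mean:", grand)
    print("perceiver effects:", perceiver)
    print("target effects:", target)
    print("relationship effects:", relationship)
```

In this toy decomposition a large relationship value for a particular pair means that the rating departs from what the rater's general tendency and the target's general reputation would jointly predict.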

Research on impression-formation, from Asch (1946) to Anderson (1974) and beyond, has largely made use of trait terms as stimulus materials. This is certainly appropriate, because -- as Fiske & Cox (1979) demonstrated, as if we needed any proof -- we often describe ourselves and others in terms of traits. Working with traits injects substantial economies into impression-formation research, because they're easy to manage. Moreover, traits may fairly closely represent the way information about people is stored in social memory. But it's also clear that an exclusive focus on traits can give a distorted view of the process of impression formation, because traits are not really -- or, at least, not the only -- stimulus information for social perception.

We don't walk around the world with our traits listed on our foreheads, to be read off by those who wish to form impressions of us. Rather, the real stimulus information for person perception consists of our physical appearance, our overt behavior, and the situational context in which they appear. Accordingly, in addition to describing how we make use of trait information to form impressions of personality, a satisfactory account of person perception needs to answer a different sort of question -- to wit:

Or, put another way,

Again, the same question about perception occurs in the social domain as in the nonsocial domain.

In the nonsocial domain, the stimulus information for perception consists of patterns of physical energy (the proximal stimulus) radiating from the distal stimulus, and impinging on the perceiver's sensory surfaces.

In the social domain, the stimulus information for perception consists of a person's surface appearance and overt behavior. These include, among others, the person's:

Physiognomy

The use of physical features to make inferences about character and personality has its roots in physiognomy, a pseudoscience in which a person's character was judged according to stable features of the face -- much as the 19th-century phrenologists judged character from the bumps and depressions on the skull. The word physiognomy comes from the Greek physis (nature) and gnomon (judge), and began with the observation that some people looked like certain animals. It was only a short step, then, to infer that those individuals shared the personality traits presumed to be characteristic of those animals. More generally, physiognomy was based on the assumption that a person's external appearance revealed something about his internal personality characteristics. References to physiognomy go back at least as far as Aristotle, who wrote in his Prior Analytics (2:27) that

Aristotle (or perhaps one of his students) actually produced a treatise on the subject, the Physiognomonica. Physiognomy fell into disrepute in the medieval period, and was revived by Giambattista della Porta (De humana physiognomia, 1586), Thomas Browne (Religio Medici, 1643), and Johann Kaspar Lavater (Physiognomische Fragmente zur Beförderung der Menschenkenntnis und Menschenliebe, 1775-1778).

And it's been revived again, much more recently. In a study of transactions on a peer-to-peer lending site (Prosper.com), Duarte (2009) showed that people could make valid judgments of trustworthiness based on a head-shot: the criterion was the applicant's actual credit rating and history.

Here are some physiognomic drawings by Charles LeBrun (1619-1690), a French artist who helped establish the "academic" style of painting popular in the 17th-19th centuries (from Charles LeBrun -- First Painter to King Louis XIV).

A good example of social-perception research involving descriptions of physical stimuli is the work of Ekman (1975, 2003; Ekman & Friesen, 1975) and others on facial expressions of emotion. In his work, Ekman has been particularly concerned with determining the "sign vehicles" by which people communicate information about their emotional states to other people. The fact that such communication occurs necessarily entails that there is a receiver who is able to pick up on the communications of a sender -- and this information pickup is exactly what we mean by perception.

Facial Expressions in Art

Among the many formalisms taught in European painting academies in the 17th-19th centuries were standards for the depiction of emotion on the face. Among the most popular of these texts was the Méthode pour apprendre à dessiner les passions proposée dans une conférence sur l'expression générale et particulière (1698) by Charles LeBrun, a leader of French academic painting. Here are samples from LeBrun's book, showing how various emotions should be depicted (from Charles LeBrun -- First Painter to King Louis XIV).

||

| Anger | Desire | Fear |

| Hardiness | Sadness | Scorn |

| Simple Love | Sorrow | Surprise |

One of Ekman's most famous findings is that people can reliably "read" certain emotions from the expressions on people's faces. This is true even when the sender and receiver come from widely disparate cultures. Close analysis of these expressions shows that each of them is composed of a particular configuration of muscle activity. These include:

Ekman's system for coding the facial musculature is known as the Facial Action Coding System (FACS). The system has more than 60 coding categories for various muscle action units (like the Inner Brow Raiser or the Lip Corner Puller) and other action descriptors (such as Tongue Out or Lip Wipe). Each basic emotion, and every variant on each basic emotion, can be described as a unique combination of these coding categories. And each coding category is associated with a specific pattern of muscle activity.

Cross-cultural studies show that Ekman's basic emotions are highly recognizable across cultures. Nelson and Russell (2013) summarized several decades' worth of such studies, involving subjects from literate Western cultures (mostly, frankly, American college students), literate non-Western cultures (e.g., Japan, China, and South Asia), and non-literate non-Western cultures (e.g., indigenous tribal societies in Oceania, Africa, and South America). Subjects from all three kinds of cultures recognized prototypical displays of the six basic emotions at levels significantly and substantially better than chance.

This is consistent with the hypothesis that the basic emotions, and the apparatus for producing and reading their displays on the face, are not cultural artifacts but something that is, indeed, biologically basic.

Evidence like this is generally taken as support for the universality thesis that facial expressions of the basic emotions are universally recognized. They are a product of our evolutionary heritage, innate (not acquired through learning), and shared with at least some nonhuman species (especially primates). Recognition of these emotions is a product of "bottom-up" processing of stimulus information -- essentially a direct, automatic readout from the target's facial musculature. And, as the evidence shows, they are invariant across culture. The ability to read the basic emotions from the face does not depend on contact with Western culture, literacy, or stage of economic development.

The universality thesis has its origins in the work of Darwin, and also in the writings of Silvan Tomkins, who was Ekman's mentor; but it is most closely associated these days with Ekman himself.

The universality thesis is widely accepted, but there are those who have raised objections to it, arguing that, at the very least, it has been overstated. They note, in the first place, that recognition of the basic emotions is not, in fact, constant across cultures. If you look at the results of the Nelson & Russell (2013) review, depicted above, you'll see clearly that, while recognition is significantly and substantially above chance levels, there are also substantial and significant cultural differences. Only happiness, apparently, is truly universally recognized. Recognition of surprise, and especially the more negative emotions, drops off substantially as we move to literate non-Western and then non-literate non-Western cultures.

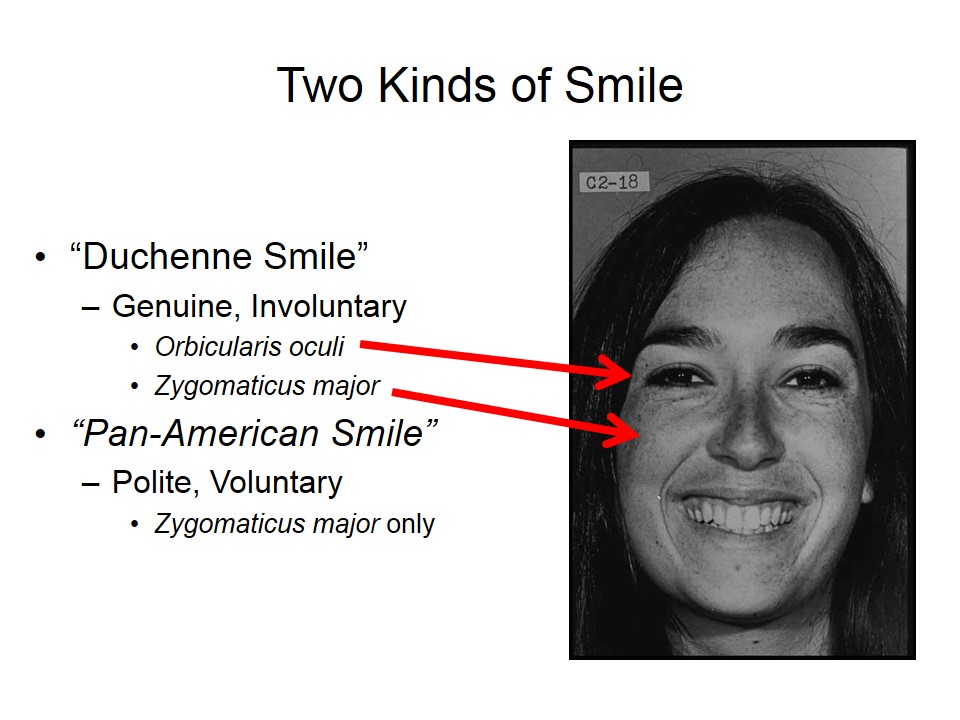

Just as the face may be the pre-eminent social stimulus, so the smile may be the pre-eminent social behavior.

So,

for example, Ekman distinguishes between two

kinds of smile:

According to the

Associated Press, customer-service employees

at the Keihin Electric Express Railway Company

in Japan can check their smiles against the

Okao Vision face-recognition software system,

to make sure that they are smiling properly at

customers ("Japan Train Workers check Grins

with Smile" by Jay Alabaster, Contra Costa

Times 07/26/2009). It's not clear

whether they're being checked against a

Duchenne smile or a Pan American smile.

And as another example, anger involves a large number of muscles. So, as your grandmother told you, it really does take more muscles to frown than to smile. So smile and save your energy.

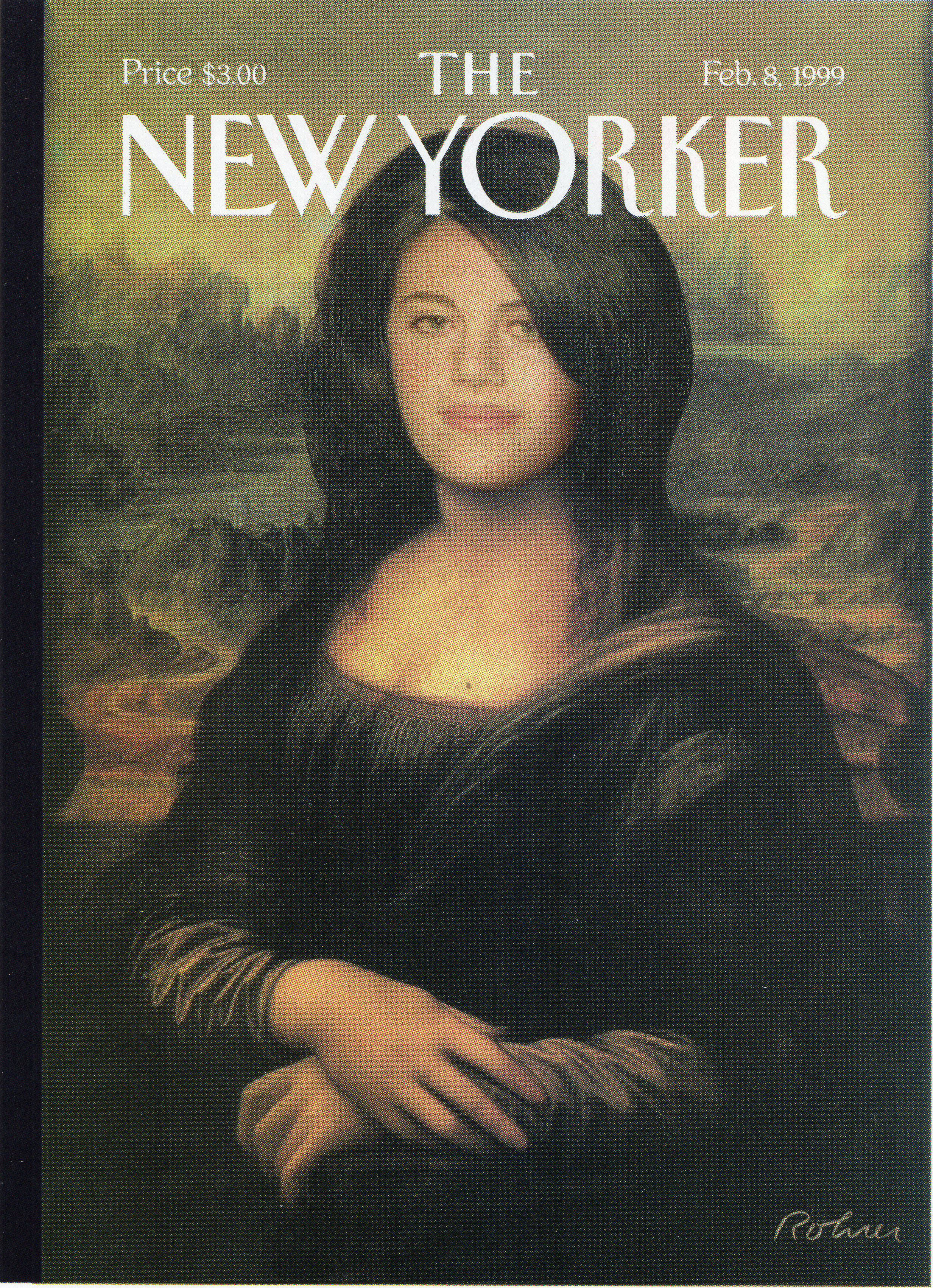

Ekman's analysis of facial emotion has been used to offer a solution to a famous question in art history: what is it about the smile of Mona Lisa, in Leonardo da Vinci's famous painting (c. 1503-1505)? It's not just Nat "King" Cole who has found this smile mysterious. Part of the mystery of the smile is the ambiguous way it's painted -- which, according to a conventional theory, reflects the "archaic smiles" in the ancient Greek and Roman paintings and sculpture that so inspired Leonardo and other artists of the Renaissance.

In 2005 Nicu Sebe, a computer-vision researcher at the University of Amsterdam, scanned the Mona Lisa with an emotion-recognition program that he developed with colleagues at the Beckman Institute of the University of Illinois, based on Ekman's analysis of facial expressions of basic emotions. Using this program, he determined that the Mona Lisa's smile consisted of 83% happiness, 9% disgust, 6% fear, and 2% anger (New Scientist, 12/17/05). So that's part of the mystery. Or maybe it's just a smirk, as in this New Yorker cartoon by Emily Flake (08/30/2021).

On the other hand, Peter Schjeldahl, commenting on the sale (for almost half a billion dollars) of another Leonardo painting, Salvator Mundi, remarked on the "ambiguous mien" of Jesus, and went on to write: "Giving an ambiguous character an ambiguous mien doesn't seem a stop-the-presses innovation. The trick of it, by the way, is the same as that of the 'Mona Lisa': painting different expressions in the eyes and in the mouth. When you look at one, your peripheral sense of the other shifts, and vice versa. You try to reconcile the impressions, with frustration that seeks and finds relief in awe."

Since then, work on computer recognition of emotion, based largely on facial cues, has progressed apace, evolving into a new sub-discipline known as affective computing. Among the most highly developed of these systems is Affdex, a product of Affectiva, an offshoot of the MIT Media Lab. Based largely on Ekman's FACS system, Affdex scans the environment for a face, isolates it from its background, and identifies major regions such as mouth, nose, eyes, and eyebrows -- distinguishing between non-deformable points, such as the tip of the nose, which remain stationary, and deformable points, such as the corners of the lips, which change with different facial expressions. It computes various geometric relations between these points, and compares the current face to a very large number of other faces, previously analyzed, stored in memory. It then outputs a probabilistic judgment of whether the face is displaying such basic emotions as happiness, disgust, surprise, concentration, and confusion. It can distinguish between social smiles and genuine "Duchenne" smiles, and between real and feigned pain. And it does this in real time.
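To make the logic of such a system concrete, here is a minimal sketch in Python of the last three steps described above: computing geometric relations among facial landmarks, comparing the result to stored faces, and outputting a probabilistic judgment. It is not Affdex's actual algorithm. The landmark names, the pixel coordinates, the three stored example faces, the `sharpness` parameter, and the distance-weighted comparison are all assumptions made purely for illustration, and the face-detection step is skipped entirely.

```python
import math

def make_face(lip_y, lip_half_width, brow_y):
    """Build a hypothetical set of (x, y) landmarks, in pixels, for a frontal face."""
    return {
        "left_eye": (30, 40), "right_eye": (70, 40),
        "nose_tip": (50, 60),                      # non-deformable point
        "brow_left": (30, brow_y), "brow_right": (70, brow_y),
        "lip_left": (50 - lip_half_width, lip_y),  # deformable points
        "lip_right": (50 + lip_half_width, lip_y),
    }

def features(face):
    """Geometric relations between landmarks, scaled by inter-ocular distance."""
    scale = math.dist(face["left_eye"], face["right_eye"])
    mouth_width = math.dist(face["lip_left"], face["lip_right"]) / scale
    lip_y = (face["lip_left"][1] + face["lip_right"][1]) / 2
    brow_y = (face["brow_left"][1] + face["brow_right"][1]) / 2
    eye_y = (face["left_eye"][1] + face["right_eye"][1]) / 2
    # Image y grows downward, so these values rise as lip corners or brows move up.
    lip_lift = (face["nose_tip"][1] - lip_y) / scale
    brow_raise = (eye_y - brow_y) / scale
    return (mouth_width, lip_lift, brow_raise)

# Toy "memory" of previously analyzed faces (assumed values, one per label).
STORED = [
    (features(make_face(80, 12, 30)), "neutral"),
    (features(make_face(76, 16, 30)), "happiness"),
    (features(make_face(82, 10, 24)), "surprise"),
]

def emotion_probabilities(face, stored=STORED, sharpness=20.0):
    """Weight each stored face by its closeness to the probe, then normalize."""
    target = features(face)
    weights = {}
    for example, label in stored:
        distance = math.dist(target, example)
        weights[label] = weights.get(label, 0.0) + math.exp(-sharpness * distance)
    total = sum(weights.values())
    return {label: w / total for label, w in weights.items()}

# A probe face with slightly raised, widened lip corners.
probe = make_face(77, 15, 30)
for label, p in sorted(emotion_probabilities(probe).items(), key=lambda kv: -kv[1]):
    print(f"{label}: {p:.2f}")
```

With these made-up numbers the probe face lies closest to the stored "happiness" example, so that label receives most of the probability. A real system would use many more landmarks, a very large store of labeled faces, and a trained classifier rather than this simple distance weighting.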

For an article on affective computing, including the story of how market forces turned Affdex from an emotional prosthetic for autistic people into a marketing tool, see "We Know How You Feel" by Raffi Khatchadourian, New Yorker, 01/19/2015.

FACS is intended for use by professional researchers and clinicians, including in the computer analysis of facial expressions. But the fact that people can reliably read emotions from other people's faces suggests that our perceptual systems are sensitive to changes in facial musculature. Ekman's FACS system is a formal description of the physical stimulus that gives rise to the perception of another's emotional states.

The stimulus faces used in much of Ekman's research come from actors posing various expressions according to Ekman's instructions, but we can read emotional states from people's faces in other circumstances as well.

Consider, for example, photographs taken in April 2000, when Elian Gonzalez, a Cuban boy who had lost his mother during an attempt to escape from Cuba, was being sheltered by some of his mother's relatives in Miami. Elian's father, who was estranged from the boy's mother and had remained in Cuba, demanded that he be returned to Cuba. The US Department of Justice, for its part, determined that, legally, custody of the boy should be given to his closest living relative -- his father, who was in Cuba. The Miami relatives refused to turn Elian over for repatriation, and in the final analysis an armed SWAT team from the Border Patrol forced its way into the relatives' house to retrieve the boy. Little did the officers know that a newspaper reporter and photographer were already inside the house. The resulting remarkable sequence of photographs shows the surprise of one officer when he discovered the photographer in the room.

Like Ekman, Paula Niedenthal and her colleagues have cataloged a number of different types of smiles, but the differences they observe go far beyond patterns of facial activity. It turns out (to quote the headline in the New York Times over an article by Carl Zimmer, 01/25/2011) that there's "More To a Smile Than Lips and Teeth". Some smiles are expressions of pleasure, while others are displayed strategically, in order to initiate, maintain, or strengthen a social bond; some smiles comprise a greeting, others display embarrassment -- or serve as expressions of power. Niedenthal has been especially active in examining the process of smile recognition -- recognizing the differences among smiles of pleasure, embarrassment, bonding, or power. She proposes that smiles are "embodied" in perceivers through a process of mimicry, in which different types of smiles initiate different patterns of brain activity in the perceiver -- patterns that are similar to those in the brain of the person doing the smiling.

In one experiment, Niedenthal found, not surprisingly, that subjects could accurately distinguish between these different types of smiles. But when they held a pencil between their lips, essentially interfering with the facial musculature that would mimic the target's smile, accuracy fell off sharply. Similarly, the judgments of subjects in the "pencil" condition were more influenced by the contextual background than by the smiles themselves. Apparently, mimicry plays an important role in the recognition of smiles (and, probably, other facial expressions as well).

Niedenthal's work exemplifies a larger movement in cognitive psychology known as embodied cognition or grounded cognition. For most of its history, psychology has assumed that the brain is the sole physical basis of mental life. Embodied cognition assumes that other bodily processes -- in this case, the facial musculature -- are also important determinants of mental states. And so is the environment -- like the context in which a smiling face appears. Proponents of embodied cognition do not deny the critical role of the brain for the mind. They just argue that other factors, in the body outside the brain, and in the world outside the body, are also important.

Full disclosure: Prof. Niedenthal worked in my laboratory as an undergraduate. But even so, her work on the smile is the most thorough analysis yet. For an overview of her work on smiles, see P.M. Niedenthal et al., "The Simulation of Smiles (SIMS) Model: Embodied Simulation and the Meaning of Facial Expression", Behavioral & Brain Sciences, 33(6), 2010.

Ekman and Darwin

Ekman's work on facial expressions of emotion is strongly informed by evolutionary theory. Charles Darwin, in his book on The Expression of the Emotions in Man and Animals (1872), noted that the facial expressions by which humans