Mind and Body

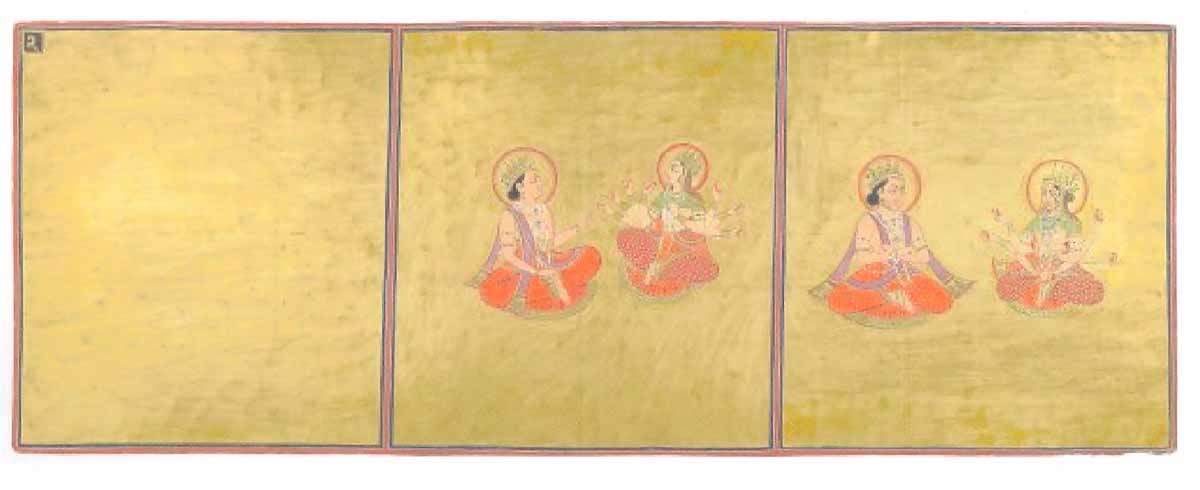

"The Emergence of Spirit and Matter", attributed to Shivdas, painted ca. 1828 during the reign of Maharajah Man Singh, Marwar (now Jodhpur), Rajasthan, northwest India. From Folio 2 from the Shiva Purana, now in the Mehrangarh Museum. The painting is influenced by the ascetic Nath sect of yogis. The left panel represents the "formless Absolute" that preceded Creation. In the center panel, Spirit or Consciousness (the male figure) and Matter (the female figure) are linked through their gaze and posture. In the right panel, Spirit and Matter are separated, with their arms crossed, no longer looking at each other. From Garden and Cosmos: The Royal Paintings of Jodhpur, exhibit at the Arthur M. Sackler Gallery, Smithsonian Institution, 2008-2009.

Consciousness has many aspects, but the fundamental feature of consciousness is sentience or subjective awareness. As the philosopher Thomas Nagel put it in his famous essay, "What Is It Like To Be a Bat?" (1979),

The fact that an organism has conscious experiences at all means, basically, that there is something it is like to be that organism. There may be further implications about the form of the experience; there may even (though I doubt it) be implications about the behavior of the organism. But fundamentally an organism has conscious mental states if and only if there is something that it is like to be that organism -- something it is like for the organism. We may call this the subjective character of experience. It is not captured by any of the familiar, recently devised reductive analyses of the mental, for all of them are logically compatible with its absence. It is not analyzable in terms of any explanatory system of functional states, or intentional states, since these could be ascribed to robots or automata that behaved like people though they experienced nothing.

The relation between mind and body is one of philosophy's great problems, but consciousness plays a special role in making it problematic. It's no trick to make a machine that processes information (well, I can say that now!). All you have to do is take stimulus inputs and process them in some way so that the machine makes appropriate responses. Your home computer does this. So does a 19th-century Jacquard loom. From this perspective, as the computer scientist and artificial intelligence pioneer Seymour Papert once put it, "The brain is just a machine made of meat". The trick lies in making a machine that is conscious.

As Nagel put it in his "Bat" essay,

"Consciousness is what makes the mind-body problem really intractable. Without consciousness, the mind-body problem would be much less interesting. With consciousness, it seems hopeless."

How did we get to this point?

A Philosophical Disclaimer

I'm not a philosopher, and I don't have any advanced training in the history and methods of philosophy -- or any elementary training, either, for that matter. In trying to make sense of philosophical analyses of the mind-body problem, which I think is necessary in order to have some perspective on the scientific work, I have relied on my own common sense, the tutelage of John Searle (who should not be held responsible for any of my own misunderstandings), and several excellent secondary sources.

These authors don't discuss the evolution of mind and consciousness, but I'll have something to say about this topic later, in the lectures on "The Origins of Consciousness".

In addition to these popular general introductions to philosophy, I have particularly relied on the following:


Dualism

All three major monotheistic religions -- Judaism, Christianity, and Islam -- hold that humans are different from other animals: because we have souls, and free will, we are to some extent separate from the rest of the natural world. In much the same way, Hinduism and Buddhism accord humans greater spiritual worth than other animals. Animist cultures, however, may not make such a clear distinction between humans and animals (or, for that matter, trees and rocks). For more on cultural distinctions between humans and animals and other living things, see How to Be Animal: A New History of What It Means to Be Human (2021) by Melanie Challenger (reviewed by John Gray in "The Mind's Body Problem", New York Review of Books, 12/02/2021), discussed above.

In modern times, at least for many people, the soul has been replaced by the mind. And the modern debate about mind and body has its origins at the beginnings of modern philosophy, with Rene Descartes (1596-1650), a 17th-century French philosopher who lived and worked in Holland for much of his adult life, and the other thinkers of the Enlightenment.

Our story begins with Rene Descartes (1596-1650), a French philosopher whose project was nothing less than the achievement of perfect knowledge of things as they are, free of distortions imposed by our senses, emotion, and perspective. To this end he adopted a posture of methodical doubt without skepticism. He set out to doubt everything that had been taught by Aristotle and later Aristotelians, as well as by Aquinas and other Church fathers (although a devout Catholic, in this latter respect he showed the influence of the Protestant Reformation and its attacks on papal authority). At the same time, he assumed that such certain knowledge was possible to attain. So he began by doubting everything -- including the distinction between waking and dreaming. For all he knew, there might be an "evil demon" who presents an illusory image of the external world to Descartes' sense apparatus, such that all of Descartes' sensory experiences are, in fact, illusory -- put bluntly, there's no external world, only mental representations created by this evil demon. Descartes could wonder whether the world as it appeared to him was really the world as it existed outside the mind (if there was such a world at all); but he couldn't doubt that he was thinking about this problem. Hence, he concluded that "I think, therefore I am" -- his famous formulation of cogito ergo sum.


Note that, technically, the one thing that Descartes couldn't doubt was that he was thinking -- that thoughts exist. The body might indeed be an illusion, but thoughts definitely exist, precisely because he's engaged in the act of thinking. It follows, then, that thoughts exist independently of bodies (i.e., brains).

Descartes also argued for a strict separation between human beings and animals.

Body and Soul, Body and Mind, Body and Brain

Unlike most Western philosophers who came before him, Descartes was a layman, but he was also a dutiful son of the Catholic Church. Like Galileo, he advocated a heliocentric view of the universe. But when he heard of Galileo's persecution, he suppressed his own view. As it happens, Church doctrine also influenced Descartes' thinking about body and mind. Descartes made his argument for dualism in part on the basis of his religious beliefs: Church doctrine held that Man was separated from animals by a soul, and for Descartes, soul was equivalent to mind, and mind was equivalent to consciousness. Mind is the basis for free will, which provides what we might call the cognitive basis for sin. However, Descartes' dualism was also predicated on rational grounds. It simply seemed to him that body and mind were -- well, somehow different. And so it seems to the rest of us, too. Paul Bloom, in Descartes' Baby (2004), notes that even young children -- once they have a concept of the mind, a topic we shall discuss later in the lectures on "The Origins of Consciousness" -- appear to believe that their minds are somehow distinct from their bodies.

And although they know about brains, they appear to construe their brains as "cognitive prostheses" that help them think, instead of the organs that actually do all their thinking. It has been suggested that dualism is intuitively appealing because the brain does not perceive itself, the way it perceives sounds and lights, the rumblings of your stomach and dryness of your mouth, your heart beating rapidly and your face flushing. There's "no afference in the brain" -- just (just!) interneurons processing environmental events, manipulating thoughts, and organizing responses. We can't feel ourselves thinking.

Of all of Descartes' ideas, dualism is perhaps the most influential. Not for nothing, I suppose, when he died his skull was buried in a different place than the rest of his body (see Descartes' Bones: A Skeletal History of the Conflict Between Faith and Reason by Russell Shorto). Wolfram Eilenberger, in The Time of the Magicians (2020), his survey of 20th-century philosophy, describes modern philosophy as "the abstraction-fixated, anti-corporeal, consciousness-obsessed modern age of Rene Descartes and his methodical successors".

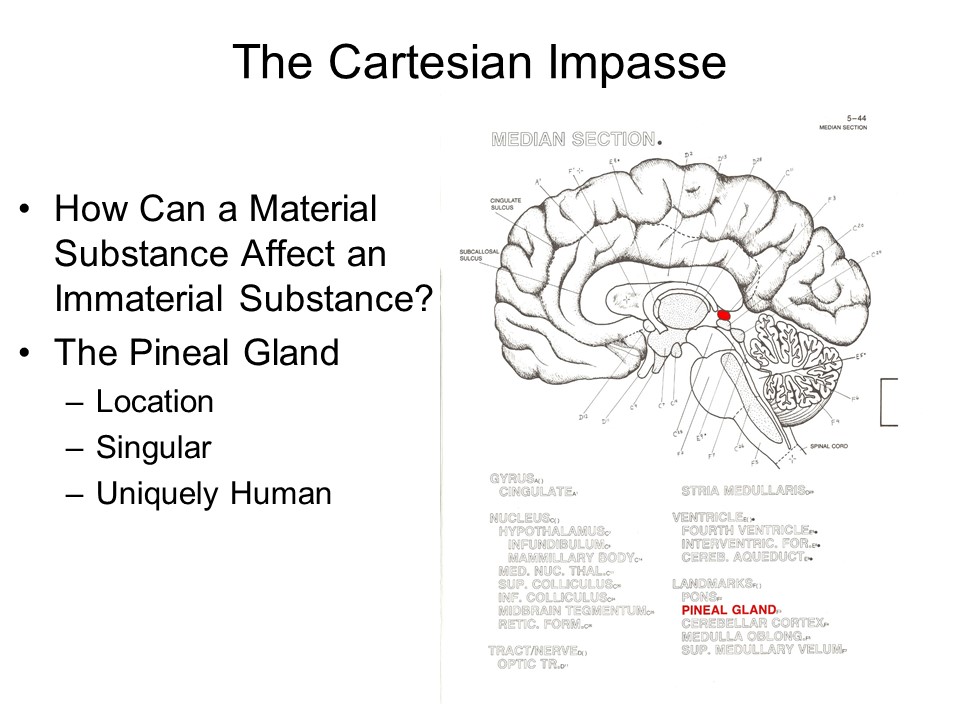

Descartes' dualism is also an interactive dualism. Mind and body are composed of separate substances, but they interact with each other.

But how can a material substance affect an immaterial one, and vice-versa? Descartes reasoned that this interaction took place within the pineal gland (also known as the pineal body), a structure located near the center of the brain. He chose the pineal gland partly because of its central location, but also because he believed it to be the only brain structure not duplicated in both hemispheres (there were other reasons as well).


But aside from locating it in the pineal gland, Descartes had no clear idea how this mind-body interaction took place. This is what the philosopher G.N.A. Vesey referred to as the Cartesian impasse (in The Embodied Mind, 1965).

It is now known that the pineal gland is sensitive to light, and when stimulated releases melatonin, a hormone that is important for regulating certain diurnal rhythms. The pineal gland may not be where mind and body meet, but it does seem to be an important component in the biological clock.

And, for that matter, he was wrong about the pineal gland being unduplicated, too: close microscopic examination reveals that the pineal gland is divided into two hemisphere-like structures. But Descartes had no way of knowing this, so we shouldn't count it against him.


Many later philosophers tried to find a way out of, or around, the Cartesian impasse. In so doing, they adopted a dualistic ontology, but not necessarily of Descartes' form, involving different interacting substances.

Nicholas Malebranche (1638-1715) proposed a view called occasionalism. In his view, neither mind nor body has causal efficacy. Rather, all causality resides with an omniscient, omnipotent God. The presence or movement of an object in a person's visual field is the occasion for God to cause the visual perception of the object in the observer's mind. And the desire to move one's limb is the occasion for God to cause the limb to move.

Baruch Spinoza (1632-1677) proposed dual-aspect theory (also known as property dualism). In his view, both mind and body were causally efficacious within their own spheres. Physical events cause other physical events to occur, and mental events cause other mental events to occur. But both kinds of events were aspects of the same substance, God. Because God cannot contradict himself, he ordains an isomorphism between body and mind.

Gottfried Wilhelm Leibniz (1646-1716) proposed psychophysical parallelism. In his view, mind and body were different, yet perfectly correlated. Leibniz's parallelism abandons the notion of interaction. He saw no way out of Descartes' impasse, because he could not conceive of any way for two different substances, one material and one immaterial, to interact. He also rejected occasionalism as mere hand-waving. The only remaining alternative, in his view, was parallelism: when a mental event occurs, so does a corresponding physical event, and vice-versa. This situation was established by God when he created Man, and doesn't make use of the pineal gland or anything else.

These neo-Cartesian views may seem silly to us now, but it is important to understand why people were so concerned with working out the mind-body problem from a dualistic perspective.

First, there was the influence of Catholic religious doctrine, which held that humans possessed souls that distinguished them from mere animals. Today, the Roman Catholic Church has no problem with the theory of evolution, for the simple reason that, in its view, Darwin's theory applies to the evolution of bodies, whereas souls, including mind and consciousness, come from God. The equation of soul with mind creates the mind-body problem.

We know, intuitively, that mind somehow arises out of bodily processes, but we also know that mind somehow controls our bodily processes. This "somehow" is the great sticking-point of dualism. Apparently, the only way out is to abandon dualism for monism, the ontological position that there is only one kind of substance. But if there is only one substance, which one to choose?

Faced with the choice, some monists actually opted for mind over body. Chief among these was George Berkeley (1685-1753, pronounced "Barklee", like the basketball player), the Anglican bishop whose poem, "Westward the course of empire takes its way", inspired the naming of Berkeley, California (pronounced "Berklee", like the music school in Boston). By insisting that there is no reality aside from the mind that perceives it, Berkeley articulated a position variously known as immaterialism, idealism, psychic monism, or mentalistic monism. For Berkeley, only two types of things exist: perceptions of the world, and the mind that does the perceiving.

A Zen koan asks: If a tree falls in the forest, and nobody hears it, does it make a sound? For Berkeley, the answer is a firm No. Berkeley's philosophical doctrine, known as esse est percipi ("to be is to be perceived"), holds that nothing exists independently of the mind of the person who perceives it. Obviously, there's no sound unless the mechanical vibrations in the air generated by the falling tree fall upon the eardrum of a listener. But for Berkeley, the tree itself doesn't exist unless there's an observer present to perceive it. Strange as it may seem, Berkeley was what we would now call a philosophical "empiricist". He believed, like Locke and other empiricists, that all our knowledge of the world comes from our sensory experience; but he concluded that if all we know of the world is our sensory impressions of it, then mind itself, not matter, must be the fundamental thing.

Where do those sense impressions come from? From God (Berkeley wasn't an Anglican bishop for nothing). Berkeley was an immaterialist monist, remember. For him, matter doesn't exist. Nobody's ever seen matter, or touched it, or heard it. All we have are sense impressions of brightness, smoothness, loudness, etc. In this sense, Berkeley is a Lockean: all our ideas are, indeed, derived from sense experience. It's just that our sense experiences don't arise from our encounters with matter. They are put into our minds by God, who arranges our sense experiences in such a way that they follow the laws of Newtonian mechanics. We just assume matter exists, in order to account for our sense experiences. But, in reality, matter is just an occult substratum. Applying Occam's Razor, Berkeley just does away with matter as an unnecessary idea. Instead, he posits that our sense impressions are put into our minds by God. Then, employing the Lockean processes of reflection and imagination, we derive other ideas from those sense impressions.

James Boswell (1740-1795) tells the story of what happened when he told Samuel Johnson (1709-1784) about his (Boswell's) inability to refute Berkeley's doctrine (Boswell, Life of Johnson, Vol. 1, p. 471, entry for 08/06/1763):

After we came out of church we stood talking for some time together of Bishop Berkeley's ingenious sophistry to prove the non-existence of matter, and that every thing in the universe is merely ideal. I observed, that though we are satisfied his doctrine is not true, it is impossible to refute it. I shall never forget the alacrity with which Johnson answered, striking his foot with mighty force against a large stone, till he rebounded from it, "I refute it thus."

To which Berkeley would have replied: "Actually, what happened is that God put the idea of the stone in your head, and when you kicked it he put the idea of the painful stubbed toe in your head too".

Among later immaterialist monists, the American psychologist Morton Prince held that consciousness was a compound of mental elements which he called -- no kidding -- "mind-stuff" (in The Nature of Mind and Human Automatism, 1885). In his view, all material elements possessed at least a small amount of mind-stuff; when these elements are combined, their corresponding mind-stuff is also combined, so that consciousness emerges at a certain level of the organization of matter. At first glance, this looks like property dualism, but Prince was really an immaterial monist. In his words (and punctuation):

[I]nstead of there being one substance with two properties or 'aspects,' -- mind and motion [extension], -- there is one substance, mind; and the other apparent property, motion, is only the way in which this real substance, mind, is apprehended by a second organism: only the sensations of, or effect upon, the second organism, when acted upon (ideally) by the real substance, mind.

However, most monists opted for body over mind, a position variously known as materialist monism, or simply materialism.

Among the first materialist monists was Julien Offray de la Mettrie (1709-1751), who claimed that humans, like animals, were automata, with their behaviors governed by reflex. The only difference between humans and animals was that humans were conscious automata, aware of objects and events in the environment, and of their own behavior. For de la Mettrie, mental events exist, as Descartes had said, but they are caused by bodily events and lack causal efficacy themselves. De la Mettrie's book was promptly burned by the Church, and de la Mettrie himself sent into exile.

But his essential position was subsequently adopted by Pierre Jean Georges Cabanis (1757-1808). In a formulation that will be familiar to those who have read much more recent work on the mind-body problem, Cabanis claimed that

"Just as a stomach is designed to produce digestion, so a brain is an organ designed to produce thought".

The views of de la Mettrie and Cabanis set the stage for epiphenomenalism, a term coined by Shadworth Holloway Hodgson (1832-1912). In Hodgson's view, thoughts and feelings had no causal efficacy. They were produced by bodily processes, but they had nothing to do with what the body does.

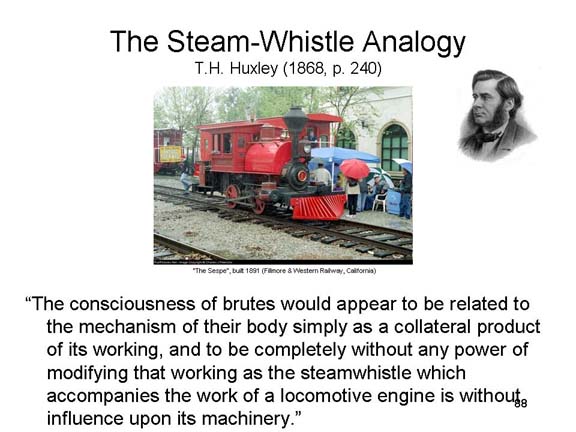

Whereas de la Mettrie thought that (inefficacious) consciousness set humans apart from animals, T.H. Huxley, arguing from the principle of evolutionary continuity, ascribed consciousness to at least some nonhuman animals as well. But Huxley also argued, with Hodgson, that conscious beings were still conscious automata.

Epiphenomenalism solved half the mind-body problem, by concluding that mind and body do not interact. But the other problem persisted, which is how body gives rise to mind in the first place. Of course, if you don't think that mind is important, this question loses much of its hold. But if you think that mind is important, precisely because it has causal powers, then you can't be an epiphenomenalist, or even a proponent of conscious automata. But if you don't want to be an idealist, or a dualist, then what?

Epiphenomenalism is perfectly captured in one of its earliest expressions, the steam-whistle analogy of T.H. Huxley (1868), known as "Darwin's Bulldog" for his vigorous defense of the theory of evolution by natural selection:


The consciousness of brutes would appear to be related to the mechanism of their body simply as a collateral product of its working, and to be completely without any power of modifying that working as the steam whistle which accompanies the work of a locomotive engine is without influence upon its machinery.

Note, however, that Huxley refers to "the consciousness of brutes" (emphasis added). For all that he and Darwin believed about evolutionary continuity, he still seemed to believe that there was something special about the consciousness of humans -- to wit, that it might indeed play a causal role in behavior.

However, even epiphenomenalism acknowledges consciousness -- it just thinks it's epiphenomenal. So even epiphenomenalism can't escape what the philosopher Joseph Levine calls the explanatory gap. Levine argued that purely materialist theories of mind, which rely solely on anatomy and physiology, cannot, in principle, explain how the physical properties of the nervous system give rise to phenomenal experience. To illustrate his reasoning, consider the following two physicalist explanations:

In the Principles of Psychology (1890), William James embraced the viewpoint that the purpose of consciousness was to aid the organism's adaptation to its environment. James rejected both the automaton-theory (and epiphenomenalism) and the mind-stuff theory, devoting a chapter of the Principles to each view (James was close friends with Prince, but friendship has its limits). But James himself never got beyond Descartes' impasse. Convinced as a scientist that materialism was right, but also convinced as a human being that mind had causal efficacy, James ended up adopting a variant of psychophysical parallelism -- that there was a total correspondence of mind states with brain states, but that empirical science can't say more than that. James considered this stance to reflect an intellectual defeat, and he simply resigned himself to it and to the Cartesian impasse (did I mention that James was chronically depressed?).


James's resignation is echoed in some quarters of contemporary philosophy in a doctrine that has come to be known as Mysterianism, so named by the philosopher Owen Flanagan after the 1960s one-hit rock group "Question Mark and the Mysterians" (their one hit was "96 Tears", which was originally supposed to be titled "69 Tears" until someone thought better of it). Mysterianism holds that consciousness is an unsolvable mystery, and that the mind-body problem will remain unsolvable so long as consciousness remains in the picture.

An early version of modern Mysterianism was asserted by the philosopher Thomas Nagel, in his famous 1979 "Bat" essay, which was really an argument that consciousness is not susceptible to analytic reduction:

"[T]his... subjective character of experience... is not captured by any of the familiar, recently devised reductive analyses of the mental, for all of them are logically compatible with its absence. It is not analyzable in terms of any explanatory system of functional states, or intentional states, since these could be ascribed to robots or automata that behaved like people though they experienced nothing."

Now, on the surface, this could simply be taken as a statement of dualism. But recall that Nagel's essay began on a note of despair about whether the mind-body problem is soluble at all (emphasis added):

"Consciousness is what makes the mind-body problem really intractable. Without consciousness, the mind-body problem would be much less interesting. With consciousness, it seems hopeless."

A more recent version of Mysterianism was outlined by the philosopher Colin McGinn (1989, 2000), who assumes that materialism is true, but asserts that we are simply not capable of understanding the connection between mind and body -- in his term, gaining cognitive closure on this issue:

We have been trying for a long time to solve the mind-body problem. It has stubbornly resisted our best efforts. The mystery persists. I think the time has come to admit candidly that we cannot resolve the mystery.... [W]e know that brains are the de facto causal basis for consciousness, [but] we are cut off by our very cognitive constitution from achieving a conception of... the psychophysical link.

Whereas James thought that the mind-body problem was a more-or-less temporary failure of empirical science, McGinn argues that the mind-body problem is insoluble in principle, because we simply lack the cognitive capacity to solve it -- to achieve what he calls cognitive closure on the problem.

McGinn draws an analogy between the limits of psychological knowledge and Piaget's theory of cognitive development. In Piaget's view, a child at the sensory-motor or preoperational stage of cognitive development simply cannot solve certain problems that can be solved by children at the stages of concrete or formal operations. McGinn implies that if there were a fifth stage of cognitive development, or maybe a sixth or seventh, maybe we could get closure on the mind-body problem. But since there are inherent limitations on our cognitive powers, we can't.

A Post-Operational Stage of Thought?

Actually, there just might be a post-operational stage, or stages. Piaget's theory implies that the various stages of cognitive development can only be known from the standpoint of a higher stage. So if Piaget was able to identify a stage of formal operations, that implies that somebody -- Piaget himself, at least! -- must be at yet a higher stage of cognitive development. Call this Stage 5. But even Piaget never claimed to have solved the mind-body problem, so if McGinn is right then cognitive closure will have to wait until somebody gets to a sixth or higher stage. In fact, the promise of such higher stages of cognitive development is made explicit by the Transcendental Meditation (TM) movement founded by the Maharishi Mahesh Yogi. One of the Maharishi's followers, the late psychologist Charles N. ("Skip") Alexander, drew explicit parallels between Maharishi's stage theory of consciousness and Piaget's stage theory of cognitive development. And, in fact, TM holds out the promise of achieving through meditative discipline a union of mind and body -- from which standpoint, presumably, the solution to the mind-body problem will be clear. See, for example, Higher Stages of Human Development: Perspectives on Adult Growth, ed. by Alexander and E.J. Langer (Oxford University Press, 1990).

McGinn hastens to point out that psychology is not alone, among the sciences, in lacking cognitive closure on some problem. Physics and mathematics are the most advanced (and arguably the most fundamental) of the sciences, but physicists labor under the implications of Heisenberg's uncertainty principle, and mathematicians suffer from Godel's incompleteness theorem. Still, physicists and mathematicians manage to get a lot of work done, solving other problems, and that's what psychologists and philosophers should do too.

In other words: if the mind-body problem is insoluble, we should just get over it.

That's just the position taken by Michael Shermer, publisher of Skeptic magazine and author of the "Skeptic" column in Scientific American. In "The Final Mysterians" (Scientific American, 07/2018), Shermer distinguishes among three forms of Mysterianism:

Although set aside by James in the latter part of the 19th century, and by the Mysterians in the latter part of the 20th century, the mind-body problem has continued to incite effort in the 20th and now the 21st centuries, including the widespread embrace of materialist monism and even a revival of dualism. But first it had to go through a stage where the mind-body problem itself was simply rejected.

A personal note: Frankly, in all the time I have taught this course, I have not lost a single night's sleep over the "Hard Problem" of consciousness -- or, for that matter, the "Easy Problem", either. I'm content to take consciousness as a given, obviously and somehow a product of brain activity, and just leave it at that. There are other questions about consciousness that I'd prefer to spend my time and effort on answering -- like the questions posed in the remainder of this course. I suppose, then, that my stance is a little like the advice of Virgil to Dante, when the latter expresses concern with the distinction between "flesh and spirit" (Dante gets pretty much the same advice from Thomas Aquinas when he asks him how God could create an imperfect world in Paradiso xiii): State contenti, umana gente, al quia.

Rest content, race of men, with the that [i.e., be satisfied with what experience tells you, and don't think too hard about reasons] (Purgatorio, iii, 37, translated by J.D. Sinclair)

That was what behaviorism was all about. These philosophers and psychologists effectively solved the mind-body problem by rejecting it, and they did this by rejecting the mind as a proper subject-matter for science.

Within psychology, the seminal documents of the behaviorist revolution are by John B. Watson:

Watson's behaviorism was a reaction to both structuralism and functionalism in the new scientific psychology. To Watson (and he was not alone), introspection seemed to be getting the field nowhere, and had become bogged down in disputes over such issues as whether there could be imageless thought. Watson also hated serving as an introspective observer. He had been trained as a functionalist at Chicago, and had conducted a successful line of research on animal learning, but he balked at the functionalists' attribution of consciousness to nonhuman animals.

Watson's central assertions were as follows:

Consciousness is simply irrelevant to this program, because consciousness has nothing to do with behavior. New behaviors are acquired as a result of learning (construed as the conditioning of reflexes), and individual differences in behavior are the result of individual differences in learning experiences. Watson's emphasis on learning is exemplified by his famous dictum:

Give me a dozen healthy infants, well formed, and my own specified world to bring them up in and I'll guarantee to take any one at random and train him to become any type of specialist I might select -- doctor, lawyer, artist, merchant-chief, and yes, even beggar-man and thief, regardless of his talents, penchants, tendencies, abilities, vocations, and race of his ancestors.

Watson left psychology in 1920, when he was fired from his faculty position at Johns Hopkins in the midst of a messy divorce scandal involving his relationship with a graduate student. Eventually Watson found work as the resident psychologist at J. Walter Thompson, the famous advertising agency, in which capacity he invented the "coffee break" as part of a campaign for Maxwell House coffee (See? Psychology has practical benefits!). But behaviorism wasn't harmed by this reversal of his personal fortune, and clearly dominated psychological research from the 1920s to the 1960s -- though not in its pure Watsonian form.

In the 1930s, behaviorists moved beyond Watson's "muscle-twitch" psychology, in which behavior was construed as a string of innate and conditioned reflexes closely tied to the structure of the peripheral nervous system, toward an emphasis on molar rather than molecular behavior. In the words of E.B. Holt (1931), what came to be called neobehaviorism was concerned with "what the organism is doing". For example, at Berkeley E.C. Tolman showed that rats who had been trained to run a maze for food would also swim for food if the maze was flooded. The maze was the same, but because running and swimming involved different muscle movements, what had been learned was a molar behavior -- e.g., "get from Point A to Point B" rather than a particular sequence of muscular movements in response to the maze stimulus.

Moreover, many neobehaviorists held more liberal attitudes than Watson on the role of mental states in behavior.

Behaviorism in psychology had its parallel in philosophical behaviorism, whose roots in turn were in earlier approaches to the philosophy of mind.

Logical positivism began in the "Vienna Circle" of philosophers, such as Herbert Feigl and Rudolf Carnap, and was promoted in England by A.J. Ayer and in America by Ernest Nagel. Logical positivism is based on the verification principle, which states that sentences gain their meaning through an analysis of the conditions required to determine whether they are true or false. There are, in turn, two means of verifying the truth-value of a statement.

Sentences that can be verified neither analytically nor synthetically (that is, empirically) are held to be meaningless. Because statements about people's mental states are empirically unverifiable, the logical positivists held -- or, at least, they strongly suspected -- that any statement about consciousness -- and indeed the whole notion of consciousness itself -- is simply meaningless.

Linguistic philosophy, which arose through the work of Ludwig Wittgenstein, Gilbert Ryle, and J.L. Austin, argued that most problems in philosophy are based on misunderstandings or misuses of language, and that they simply dissolve when we pay attention to how ordinary language is used. Linguistic philosophers placed great emphasis on reference -- the objects in the objective, publicly observable real world to which words refer. Because mental states are essentially private and subjective, linguistic philosophers harbored the suspicion that mental states don't refer to anything at all, and that mental-state terms like "consciousness" are essentially meaningless.

The Ghost in the Machine. While both logical positivists and linguistic philosophers focused a great deal of attention on the terms representing mental states, the philosophical behaviorists, like their psychological counterparts, tended to dispense with mental-state terms entirely. For example, in The Concept of Mind (1949), Gilbert Ryle ridiculed the Cartesian conception of the mind as "the ghost in the machine" (a phrase that inspired the title of a classic rock album by The Police). For Ryle, the whole mind-body problem reflects what he called a category mistake -- a mistake in which facts are represented as belonging to one domain when in fact they belong to another. With respect to mind and body, the category mistake comes in two forms:

The bottom line in philosophical behaviorism is that we cannot identify mental states independent of behavioral states and the circumstances in which behavior occurs. This is also essentially the bottom line for psychological behaviorism, to the extent that it talks about mental states at all. Both psychological and philosophical behaviorists seek to get around Descartes' impasse by refusing to talk about mind at all.

The hegemony of the behaviorist program within psychology began to fade in the 1950s, with the gradual appreciation that behavior could not be understood solely in terms of the functional relations among stimulus, response, and reinforcement.

Interestingly, the deep sources of the cognitive revolution lie with some neobehaviorists who made their careers in the study of animal learning, but who critiqued salient features of the behaviorist program:

These early anomalous findings were generally ignored or dismissed by practitioners of behaviorist "normal science", in a manner that would make Thomas Kuhn proud. But in the 1960s, evidence for cognitive constraints on learning mounted rapidly:

These and other studies, following on the pioneering research of Tolman and Harlow (and others) made it clear that we cannot understand even the simplest forms of learning and behavior in so-called "lower" animals -- never mind complex learning in humans -- without recourse to "mentalistic" constructions such as predictability, controllability, surprise, and attention. Although the cognitive revolution was chiefly fomented by theorists who were interested in problems of human perception, attention, memory, and problem-solving, the results of these animal studies increased the field's dissatisfaction with the dominant behaviorist paradigm, and increased support for the cognitive revolution in psychology.

The cognitive revolution was also fostered by the development of the high-speed computer, which could serve as a model, or at least a metaphor, of the human mind:

Put concisely, the computer model of the mind showed how a physical system could, in some sense, "think" by acquiring, storing, transforming (i.e., computing) and using information. In some respects, the computer metaphor solved the mind-body problem in favor of materialism, because it showed how a physical system could operate something like the mind. But the fact that computers could "think" (or at least process information) raised the question of whether computers were, or could be, conscious. At the same time, the fact that computers could "think" without being conscious suggested that maybe consciousness was epiphenomenal after all. In any event, the fact that psychological discourse was re-opened to mental terms revived discussion of the mind-body problem, and promptly stuck us right back in the middle of Descartes' impasse -- exactly where William James had left us in 1890!

One difference between the beginning of the 21st century and the end of the 19th is that not too many cognitive scientists seriously entertain dualism as a solution to the mind-body problem. Almost everybody adopts some form of the materialist stance, for the same reason that James did -- because we understand clearly that mental states are somehow related to states of the brain. At the same time, many people remain closet dualists, for reasons articulated earlier: religious belief, the persistence of folk psychology, or disciplinary commitment to the psychological level of analysis (that's my excuse!). Others, however, have gotten beyond what they see as irrationality, sentimentality, or ideology to proliferate a variety of "new" materialisms -- or, at least, more precise statements of materialist doctrine.

Modern versions of materialism are based on one or another version of identity theory, which holds that mental states are identical to brain states. This position is distinct from epiphenomenalism, which holds that mental states are the effects of brain states; and it is also distinct from psychophysical parallelism, which holds merely that mental states are correlated with brain states.

Identity theory comes in two forms:

Types, Tokens, and Categories

The type and token versions of identity theory are named by analogy to the type-token difference in categorization. In categorization, type is the category (e.g., undergraduate class), while token is a particular instance of that category (e.g., Psychology 129 as taught in the Spring Semester of 2005). The difference between the two versions of identity theory has important implications for scientific research.

Many traditional cognitive scientists prefer token identity theories, which have the benefit of allowing mental talk and folk psychology to remain legitimate discourse. But some cognitive scientists seem to prefer type identity theories, and seek to abandon mental talk as soon as neuroscience permits. The distinction between type and token identity theories has important implications for the neuroscientific effort to identify the neural correlates of conscious mental states. Consider how these experiments are done.

Note that this methodology assumes type identity -- that every brain that is performing a particular task is in the same state as every other brain that is performing that same task. Otherwise, it wouldn't make any sense to average across subjects. If token identity were true, and every individual brain were in a different state when performing the same task, then the activities of the several individual brains would cancel each other out, and the researcher would be left with nothing but noise. But the signal -- the brain signature -- emerges only when you average across individuals. If researchers believed in token identity, they'd go about their studies in a very different way. They'd scan a single individual, on many occasions, and average across trials but not individuals to identify the brain signal, and then conclude that some particular part of the brain is active when this particular individual is performing this particular task. But who cares about particular individuals? Nobody -- not at this level of analysis, at least. The goal of cognitive neuroscience is to obtain generalizable knowledge about the relations between brain activity and cognitive processes. That is why cognitive neuroscientists average across subjects, not just across trials within subjects -- which shows that they embrace a type identity view of mind-brain relations after all.
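The averaging logic can be made concrete with a toy simulation. This is a minimal sketch under loudly stated assumptions, not a model of real PET or fMRI analysis: each "brain" is a list of 50 hypothetical regions, measurement noise is Gaussian, and performing the task adds one unit of activation to a single region. Under type identity that region (the hypothetical `TASK_REGION`) is the same in every subject; under token identity each subject realizes the task in a randomly chosen region of its own. The hypothetical function `group_signal` averages across subjects and reports how far the task region stands out from the other regions, as a z-score.

```python
import random
import statistics

N_REGIONS = 50    # hypothetical number of brain regions (think "voxels")
TASK_REGION = 7   # hypothetical region that the task engages under type identity
EFFECT = 1.0      # task-related activation, in units of the noise standard deviation


def subject_map(type_identity: bool, rng: random.Random) -> list[float]:
    """Simulated activation map for one subject performing the task."""
    activation = [rng.gauss(0.0, 1.0) for _ in range(N_REGIONS)]  # measurement noise
    # Type identity: the task is realized by the same region in every brain.
    # Token identity: each brain realizes it in its own, randomly chosen region.
    region = TASK_REGION if type_identity else rng.randrange(N_REGIONS)
    activation[region] += EFFECT
    return activation


def group_signal(type_identity: bool, n_subjects: int = 200, seed: int = 1) -> float:
    """Average the maps across subjects, then return the task region's group mean
    as a z-score relative to the group means of all the other regions."""
    rng = random.Random(seed)
    maps = [subject_map(type_identity, rng) for _ in range(n_subjects)]
    average = [statistics.fmean(values) for values in zip(*maps)]
    others = [a for i, a in enumerate(average) if i != TASK_REGION]
    return (average[TASK_REGION] - statistics.fmean(others)) / statistics.stdev(others)


if __name__ == "__main__":
    print(f"type identity:  z = {group_signal(True):.1f}")
    print(f"token identity: z = {group_signal(False):.1f}")
```

With these made-up parameters, the task region should stand out sharply in the type-identity group average, whereas under token identity the task-related activation is scattered across regions and the z-score at the task region is indistinguishable from noise. Averaging across subjects pays off only if the subjects' brains are in the same state.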

It turns out that when you talk about mind and body, Leibniz' Law isn't necessarily upheld:

Anyway, the materialist position has many current adherents, especially among those who wish to abandon folk psychology -- for example:

Of course, eliminative materialism is a program of reductionism -- specifically, eliminative reductionism, entailing the view that the laws of mental life discovered by psychology can (and should) be reduced to the laws of biological life discovered by biology -- and, presumably, thereafter to the laws of physics. The implication of reductionism is that physics is the fundamental science, and everything else is just nonscientific "folk" talk, to be eliminated as soon as science allows. As the physicist Ernest Rutherford once put it:

Never mind that Rutherford got the Nobel Prize in chemistry. He regarded himself as a physicist, and is generally regarded as the father of nuclear physics.

Of course, such a position is unpalatable to anyone but physicists and those possessed of physics-envy (and perhaps philatelists). Partly for that reason, the Churchlands, Patricia and Paul, have argued (together and separately) for a program of intertheoretic reductionism, which promises to preserve the legitimacy of the psychological level of analysis.

Even so, the Churchlands insist that psychological theories be grounded in "the real-world findings of neuroscience".

The clear implication is that mental states aren't real, and that psychology, as the science of mental life, doesn't have anything to do with the real world.

Note that it never occurs to them that neuroscience should be grounded in the real-world findings of psychology, which would be the case if they were really interested in the symbiotic relations between two theories, or two levels of analysis. The clear implication of their work is that the only "real world" is the world of neuroscience. The world of neuroscience can be objectively described, while the world of mind and consciousness is the world of ether, phlogiston, and fairies.

You get a sense of what eliminative reductionism is all about when, as quoted in a New Yorker article on their views, Pat says to Paul, after a particularly hard day at the office,

"Paul, don't speak to me, my serotonin levels have hit bottom, my brain is awash in glucosteroids, my blood vessels are full of adrenaline, and if it weren't for my endogenous opiates I'd have driven the car into a tree on the way home. My dopamine levels need lifting. Pour me a Chardonnay, and I'll be down in a minute" (as quoted by MacFarquhar, 2007, p. 69).

It's funny, until you stop to reflect on the fact that these people are serious, and that their students have taken to talking like this too.

But really, when you step back, you realize that this is just an exercise in translation, not much different in principle from rendering English into French -- except it's not as effective. You'd have no idea what Pat was talking about if you didn't already know something about the correlation between serotonin and depression, between adrenaline and arousal, between endogenous opiates and pain relief, and between dopamine and reward. But is it really her serotonin levels that are low, or is it her norepinephrine levels -- and if it's serotonin, how does she know? Does she have a serotonin meter in her head?

She can make that claim only by translating her feelings of depression into a language of presumed biochemical causation -- a language that is understood only by those who, like Paul, already have the secret decoder ring.

And even then, the translation isn't very reliable. We know about adrenaline and arousal, but is Pat preparing for fight-or-flight (Cannon, 1932), or tend-and-befriend (S. E. Taylor, 2006)? Is she getting pain relief or positive pleasure from those endogenous opiates? And after going through the first five screens of a Google search, I still couldn't figure out whether Pat's glucosteroids were generating muscle activity, reducing bone inflammation, or increasing the pressure in her eyeballs.

And note that even Pat and Paul can't carry it off. What's all this about "talk" and "Chardonnay"? What's missing here is any sense of meaning -- and, specifically, of the meaning of this social interaction. Why doesn't Pat pour her own drink? Why Chardonnay instead of Sauvignon Blanc -- or, for that matter, Two-Buck Chuck? For all her brain cares, she might just as well mainline ethanol in a bag of saline solution. And, for that matter, why is she talking to Paul at all? Why doesn't she just give him a bolus of oxytocin? But no: What she really wants is for her husband to care enough about her to fix her a drink -- not an East Coast martini but a varietal wine that almost defines California living -- and give her some space -- another stereotypically Californian request -- to wind down. That's what the social interaction is all about; and this is entirely missing from the eliminative materialist reduction.


The problem is that you can't reduce the mental and the social to the neural without leaving something crucial out -- namely, the mental (and the social). And when you leave out the mental and the social, you've just kissed psychology (and the rest of the social sciences) good-bye. That is because psychology isn't just positioned between the biological sciences and the social sciences. Psychology is both a biological science and a social science. That is part of its beauty and it is part of its tension.

In her recent memoir, entitled Touching a Nerve (2014), Patricia Churchland writes that she turned from "pure" philosophy to neuroscience when she realized that "if mental processes are actually processes of the brain, then you cannot understand the mind without understanding how the brain works". To which Colin McGinn, reviewing her book in the New York Review of Books, asked why she stopped there. If mental processes are actually processes of the brain, and brain-processes are electrochemical processes, then she should have skipped over the neuroscience and gone straight to physics. And so should everyone else, including historians and literary critics. But if historians and literary critics don't have to be neuroscientists, why is this an obligation for psychologists?

Interestingly, it's possible to be a materialist monist but not a reductionist. This is the stance that the late Donald Davidson (another UCB philosopher) called anomalous monism (e.g., in "Mental Events", 2001). Davidson agreed that the world consists only of material entities, thus rejecting Descartes' substance dualism; and therefore, all events in the world are physical events. At the same time, Davidson denied that mental events, such as believing and desiring, could be explained in purely physical terms. So, his theory is ontologically materialistic (because the world consists only of physical entities) but explanatorily dualistic (because the network of causal relations is different for mental events than for physical events).

In fact, the histories of psychology and of neuroscience show exactly the opposite of what the Churchlands claim. In every case, whether it is concerned with visual perception or with memory or anything else, theoretical developments in neuroscience have followed theoretical developments in psychology, not the other way around.

Consider, for example, the amnesic syndrome, as exemplified by patient H.M., who put us on the road toward understanding the role of the hippocampus in memory.

But what exactly is that role? The fact is, our interpretation of the amnesic syndrome, and thus of hippocampal function, has changed as our conceptual understanding of memory has changed.

Here, clearly, neuroscientific data hasn't done much constraining: the psychological interpretation of this neurological syndrome, and its implication for cognitive theory, changed almost wantonly, as theoretical fashions changed in psychology, while the neural evidence stayed quite constant.

Here's another example: what might be called The Great Mental Imagery Debate -- that is, the debate over the representation of mental imagery -- or, more broadly, whether there are two distinct forms of knowledge representation in memory, propositional (verbal) and perceptual (imagistic).

So, in the final analysis, neuroscientific evidence was neither necessary nor sufficient to resolve the theoretical dispute over the nature of knowledge representation.

And, of course, interpretation of PET and fMRI brain images requires a correct description of the subject's task at the psychological level of analysis. Without a correct psychological theory in hand, neuroscientists can't find out anything about the neural substrates of mental life, because they don't know what the neural substrates are neural substrates of.

As someone once put it (unfortunately, not me):

But I have said (Kihlstrom, 2010):

Other cognitive scientists prefer token identity theories. They don't seek correspondence between mental events and brain events. Instead, they classify mental events in terms of their functional roles and ignore the physical systems in which these functions are implemented. Philosophical functionalism is closely related to psychological and philosophical behaviorism, in that all that matters are the functional relations between inputs and outputs -- what goes on in between doesn't much matter.
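A loose programming analogy may help here. In the minimal sketch below, which is purely illustrative and not drawn from the functionalist literature, two hypothetical functions realize the same functional role (adding two small non-negative integers) by completely different internal means. The hypothetical test `same_functional_role` classifies them solely by their input-output relations, as functionalism classifies mental states, and so cannot tell them apart.

```python
from typing import Callable

BinaryOp = Callable[[int, int], int]


def add_by_arithmetic(a: int, b: int) -> int:
    """Realization 1 (hypothetical): the processor's built-in addition."""
    return a + b


def add_by_tallying(a: int, b: int) -> int:
    """Realization 2 (hypothetical): lay out tally marks, then count them one by one."""
    tally = ["|"] * a + ["|"] * b
    count = 0
    for _mark in tally:
        count += 1
    return count


def same_functional_role(f: BinaryOp, g: BinaryOp, probes: range = range(10)) -> bool:
    """Functionalist-style comparison: look only at inputs and outputs on the probe
    values, never at how either function computes its result."""
    return all(f(a, b) == g(a, b) for a in probes for b in probes)


print(same_functional_role(add_by_arithmetic, add_by_tallying))     # True
print(same_functional_role(add_by_arithmetic, lambda a, b: a * b))  # False
```

Swapping in a function with a different input-output mapping, such as multiplication, makes the test fail, even though the test never looks inside either function.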

This philosophical functionalism lies at the basis of many programs of artificial intelligence (AI), which attempt to devise computer programs that will carry out the same operations as minds. The term "artificial intelligence" was originally coined by John McCarthy (then at Dartmouth, later at MIT and Stanford), who also invented LISP, a list-processing language that long served as the backbone of AI research.

John Searle has famously distinguished between two views of AI:

Kenneth Mark Colby (1920-2001)

Colby, one of the pioneers of artificial intelligence, was a psychiatrist, a 1943 graduate of the Yale School of Medicine, and a computer scientist at Stanford and later UCLA. While at Stanford, Colby developed Parry, a computer simulation of paranoia that is sometimes counted as the only computer program to pass the Turing test. After leaving UCLA, Colby founded Malibu Artificial Intelligence Works, and produced a conversational therapy program called Overcoming Depression. Parry was adapted from Eliza, a program developed by Joseph Weizenbaum at MIT that was the first conversational computer program, and simulated the interaction between a psychotherapy patient and a "Rogerian" (as opposed to a Freudian) psychotherapist (Eliza was named for Eliza Doolittle, the central character in George Bernard Shaw's play, Pygmalion, which was in turn the basis for the Broadway musical My Fair Lady). According to Colby's New York Times obituary, Parry and Eliza once had a chat, part of which went like this:

For an engaging account of the Turing Test, and the Loebner Prize awarded annually to the "Most Human Computer", see The Most Human Human: What Talking with Computers Teaches Us About What It Means to Be Alive by Brian Christian (2011). It turns out that there's also a prize for the "Most Human Human", and Christian's book charts his quest to win it.

Although I agree with Searle, I believe it is important to distinguish yet another form of artificial intelligence. What I call Pure AI is not concerned with psychological theories or mental states at all, but only with the task of getting machines to carry out "intelligent" behaviors. Most robotics research takes this form. So does most work on chess-playing by machine. Deep Blue, the IBM-produced program that beat Garry Kasparov, the reigning world champion, in 1997, is a really good chess player, but nobody claims that it plays chess the same way that humans do (in fact, we know it doesn't), and nobody claims that it "knows" it is playing chess. It is just programmed with the rules of the game and the capacity to perform incredibly fast information-processing operations. For Pure AI, programs are programs.

Kasparov, commenting on his loss, and on chess as a goal for artificial intelligence research, writes:

The AI crowd... was pleased with the result and the attention, but dismayed by the fact that Deep Blue was hardly what their predecessors had imagined decades earlier when they dreamed of creating a machine to defeat the world chess champion. Instead of a computer that thought and played chess like a human, with human creativity and intuition, they got one that played like a machine, systematically evaluating 200 million possible moves on the chess board per second and winning with brute number-crunching force. As Igor Aleksander, a British AI and neural networks pioneer, explained in his 2000 book, How to Build a Mind:

By the mid-1990s the number of people with some experience of using computers was many orders of magnitude greater than in the 1960s. In the Kasparov defeat they recognized that here was a great triumph for programmers, but not one that may compete with the human intelligence that helps us to lead our lives.

It was an impressive achievement, of course, and a human achievement by the members of the IBM team, but Deep Blue was only intelligent the way your programmable alarm clock is intelligent. Not that losing to a $10 million alarm clock made me feel any better ("The Chess Master and the Computer" by Garry Kasparov, reviewing Chess Metaphors: Artificial Intelligence and the Human Mind by Diego Rasskin-Gutman, New York Review of Books, 02/11/2010).
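What "brute number-crunching force" amounts to can be sketched with exhaustive game-tree search (minimax) on a toy game. This is only an illustration of the general technique, not Deep Blue's program, whose search was vastly larger and ran on specialized hardware; the game and the function names are hypothetical. In the game, players alternately remove 1, 2, or 3 stones from a pile, and whoever takes the last stone wins. The program is given nothing but the rules, and it checks every continuation.

```python
from functools import lru_cache
from typing import Optional

MOVES = (1, 2, 3)  # the toy game's only rule: remove 1, 2, or 3 stones per turn


@lru_cache(maxsize=None)  # remember positions already searched (cf. a transposition table)
def can_force_win(stones: int) -> bool:
    """True if the player to move can force a win, where taking the last stone wins.

    Exhaustive minimax: a position is a win if some legal move leaves the
    opponent in a losing position. With 0 stones left, the previous player
    took the last stone, so the player to move has already lost."""
    return any(not can_force_win(stones - m) for m in MOVES if m <= stones)


def best_move(stones: int) -> Optional[int]:
    """Return a move that leaves the opponent in a losing position, or None if none exists."""
    for m in MOVES:
        if m <= stones and not can_force_win(stones - m):
            return m
    return None


print([n for n in range(1, 21) if not can_force_win(n)])  # [4, 8, 12, 16, 20]
print(best_move(10))  # 2
```

The search "discovers" that piles divisible by 4 are lost for the player to move, yet nothing in the code represents that pattern, or what the game is about: it simply applies the rules and evaluates every continuation, which is the sense in which such a program can play perfectly without "knowing" it is playing.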

In 2009, computer scientists at IBM announced Watson (named after the firm's founder, Thomas J. Watson, Sr., not John B. Watson, the behaviorist), based on the company's Blue Gene supercomputer, intended to answer questions on "Jeopardy!", the long-running television game show. If successful, Watson would represent a considerable advance over Deep Blue, because, as noted in a New York Times article, "chess is a game of limits, with pieces that have clearly defined powers. 'Jeopardy!' requires a program with the suppleness to weigh an almost infinite range of relationships and to make subtle comparisons and interpretations. The software must interact with humans on their own terms, and fast.... The system must be able to deal with analogies, puns, double entendres, and relationships like size and location, all at lightning speed" ("IBM Computer Program to Take On 'Jeopardy!'" by John Markoff, 04/27/2009).


The IBM Jeopardy Challenge was taped in January and aired on February 14-16, 2011 (the first game of the two-game match was spread out over two days, to allow for presentations on how Watson worked). It pitted Watson against Ken Jennings, who won a record 74 consecutive games on the show in 2004, and Brad Rutter, who won a record $3.3 million in regular and tournament play. In a practice round reported in January, 2011, Watson beat them both, winning $4,400 compared to Jennings' $3,400 and Rutter's $1,200. In the actual contest, Watson won again, $77,147 against Jennings' $24,000 and Rutter's $21,600. The machine made only two mistakes: responding "Toronto" to a question about U.S. cities (you could see the IBM executives' jaws drop open), and repeating one of Rutter's incorrect responses.

In addition to his linguistic skills and his large, rapidly accessible fund of knowledge, Watson may have had a slight advantage in reaction time at the buzzer. Milliseconds count in Jeopardy, so it would be interesting to see a re-run of the contest with Watson constrained to something like humans' average response latencies.

In the contest, Watson displayed a remarkable ability to parse language and retrieve information.

In a later match, however, Watson was beaten by Rep. Rush Holt (D.-N.J.), who had been a physicist before being elected to Congress ("Congressman Beats Watson" by Steve Lohr, New York Times, 03/14/2011). One category that Watson failed in was "Presidential rhymes" (as in "Herbert's military operations": "What were Hoover's maneuvers?"). So Watson's not invincible (the machine had won only 71% of its preliminary matches, before the televised contest). The point is not that Watson is always right, or that he always wins. The point is that Watson is a pretty good question-answering machine.

It is not clear what kind of AI Watson represents. Probably not Strong AI: nobody claims that Watson will "think", and the newspaper article uses the word "understand" in quotes. Many of the principles undergirding Watson's software are based on studies of human cognition, which suggests that it is not Pure AI either, and might be a version of Weak AI. IBM engineers, interviewed after the Challenge was completed, were reluctant to claim that Watson mimicked human thought processes (see "On 'Jeopardy!', Computer Win Is All but Trivial" by John Markoff, and "First Came the Machine That Defeated a Chess Champion" by Dylan Loeb McClain, New York Times, 02/17/2011). Watson's performance on pre-Challenge test runs is described by Stephen Baker in "Can a Computer Win on 'Jeopardy'?" (Wall Street Journal, 02/05-06/2011).

David Gelernter, professor of computer science at Yale (and victim of the Unabomber), wrote ("Coming Next: A Supercomputer Saves Your Life", Wall Street Journal, 02/05-06/2011):

Watson is nowhere near passing the Turing test -- the famous benchmark proposed by Alan Turing in 1950, in which a program demonstrates its intelligence by duping a human being, in the course of conversation over the Web or some other network, into believing that it's human and not software.

But when a program does pass the Turing test, it's likely to resemble a gigantic Watson. It will know lots about the superficial structure of language and conversation. It won't bother with such hard topics as meaning or consciousness. Watson is one giant leap for technology, one small step for the science of mind. But this giant leap is a major milestone in AI history.

This strengthens the case for Pure AI. But even if Watson had been only modestly successful, instead of spectacularly successful, the article notes that it would still have been a "great leap forward" in "building machines that can understand language and interact with humans". For example, IBM is using an improved version of Watson's technology to develop a language-based interactive system for medical diagnosis.

Will Watson play again? Probably not. Deep Blue never played chess again after defeating Kasparov (part of it is on display in the Smithsonian). Watson has made its point: it's unlikely that any human will beat it when Jennings and Rutter couldn't, and Watson may have a slight mechanical advantage in reaction time at the buzzer.

So let's give David Ferrucci, who led the IBM development team, the last word (quoted in Markoff's 2011 article):

People ask me if this is HAL [the computer that ran amok in 2001: A Space Odyssey]. HAL's not the focus, the focus is on the computer on Star Trek, where you have this intelligence information seek[ing] dialog, where you can ask follow-up questions and the computer can look at all the evidence and tries to ask follow-up questions. That's very cool.

Well, maybe not the last word. Technological advances in artificial intelligence have led researchers to seriously consider the possibility of mind uploading -- that is, of preserving an individual's entire consciousness in what Michael Graziano, a neuroscientist at Princeton, calls the digital afterlife (see his 2019 book, Rethinking Consciousness: A Scientific Theory of Subjective Experience, and "Will Your Uploaded Mind Still Be You?", an essay derived from it published in the Wall Street Journal, 09/14/2019). Graziano argues that all we would need to upload an entire person's mind is to recreate, in a sufficiently powerful computer combining a connectionist architecture with knowledge from the Human Connectome Project, a complete map of the individual's 100 trillion-or-so synaptic connections among the 86 billion-or-so neurons in the human brain. Graziano points out that the project is daunting: it took neuroscientists an entire decade to map all the connections among the 300 neurons in a crummy roundworm. But in principle that's how it would go. And when we flip the switch (quoting now from his WSJ essay):

A conscious mind wakes up. It has my personality, memories, wisdom and emotions. It thinks it's me. It can continue to learn and remember, because adaptability is the essence of an artificial neural network. Its synaptic connections continue to change with experience....

Philosophically, what is the relationship between sim-me and bio-me? One way to understand it is through geometry. Imagine that my life is like the rising stalk of the letter Y. I was born at the base, and as I grew up, my mind was shaped and changed along a trajectory. One day, I have my mind uploaded. At that moment, the Y branches. There are now two trajectories, each one convinced that it's the real me. Let's say the left branch is the sim-me and the right branch is the bio-me. The two branches proceed along different life paths, with different accumulating experiences. The right-hand branch will inevitably die. The left-hand branch can live indefinitely, and in it, the stalk of the Y will also live on as memories and experiences.

The Two Functionalisms: A Confusion

John B. Watson's behaviorism has close ties to philosophical functionalism as well as to philosophical behaviorism. So why did I write earlier that Watson was as much opposed to functionalism as he was to structuralism? Because Watson was opposed to a different functionalism. Philosophical functionalism hadn't even been thought of when Watson was writing in the 1910s (neither had philosophical behaviorism, for that matter). The "school" in psychology known as functionalism was skeptical of the claim, made by members of another "school", structuralism, that we can understand mind in the abstract. Drawing on Charles Darwin's (1809-1882) theory of evolution, which argued that biological forms are adapted to their use, the functionalists focused instead on what the mind does and how it works. While the structuralists emphasized the analysis of complex mental contents into their constituent elements, the functionalists were more interested in mental operations and their behavioral consequences. Prominent functionalists were:

The functionalist point of view can be summarized in four points:

Psychological functionalism is often called "Chicago functionalism", because its intellectual base was at the University of Chicago, where both Dewey and Angell were on the faculty (functionalism also prevailed at Columbia University). It is to be distinguished from the functionalist theories of mind associated with some modern approaches to artificial intelligence (e.g., the work of Daniel Dennett, a philosopher at Tufts University), which describe mental processes in terms of the logical and computational functions that relate sensory inputs to behavioral outputs.

At the turn of the 21st century, theories of mind and body are fairly represented by the various books reviewed by John Searle in The Mystery of Consciousness (1997, reprinting several of Searle's review articles in the New York Review of Books), by the replies of the books' authors, and by Searle's rejoinders.

At first glance, most theorists appear to adopt some version of materialism, holding to the view that consciousness is something that the brain does.

This is true even for Searle, who, while a vigorous critic of the books under review, nonetheless asserts that consciousness is a causal property of the brain (see The Rediscovery of the Mind, 1992).


For Searle, consciousness emerges at certain levels of anatomical organization. Certainly, the human brain, with its billions and billions (apologies to Carl Sagan) of neurons, and umpteen bazillions of interconnections among them, has the complexity to generate consciousness. This is probably true of the brains of nonhuman primates, which also have lots of neurons and neural connections. It may also be true for dogs and cats (certainly Searle holds that his dog Ludwig is conscious). It may not be true of snails, because they may not have enough neurons and interconnections to support (much) consciousness. It's not true of paramecia, because they don't have any neurons at all.

And it's certainly not true of thermostats. Although some computers might be conscious, if they had enough information-processing units and interconnections, by and large Searle is deeply skeptical of any claim that consciousness can be a causal property of anything other than a brain. Rather than calling himself a materialist, Searle prefers to label his view biological naturalism. Brains produce consciousness, even if silicon chips (and beer cans tied together by string) don't.

But it's not just possessing neurons that's important for consciousness. Anatomy alone isn't decisive. General anesthesia, concussion, and coma render people unconscious, but they don't alter the structure of the nervous system. However, they do alter physiology: how the various structures in the nervous system operate and relate to each other.

Although his biological naturalism is closely related to materialism, Searle strenuously opposes eliminative materialism and other forms of reductionism. For him, consciousness cannot be eliminated from scientific discourse. First and foremost, consciousness entails first-person subjectivity, which lies at the core of phenomenal experience. This cannot be reduced to brain processes, because any objective, third-person description of brain processes must necessarily leave out first-person subjectivity. For that reason, every attempt to reduce consciousness to something else must fail, because every reduction leaves out a defining property of the thing being reduced -- in this case, the first-person subjectivity of consciousness. (In making this argument, Searle is basically applying Leibniz's Law.)

Searle believes that first-person subjectivity is an irreducible quality of consciousness, and that this irreducible quality is produced as a natural consequence of human (and probably some nonhuman) brain processes, but he has no idea how the brain actually does it. But, he says, that's not a philosophical problem. The philosopher's job is to determine what consciousness is; once that's done, the problem can be "kicked upstairs" to the neurobiologists, whose job it is to figure out how the brain does it (Searle makes the same argument about free will).

Searle is very critical of some of his colleagues, but he takes as good as he dishes out. In some ways it is unfortunate that Searle did not include reviews of his own work in The Mystery of Consciousness, and further exchanges with the reviewers. Two reviews stand out in particular:

"In the end, Lakoff and Johnson fail in much the same way that Searle does. For just as the debunker needs a little bit of the visionary in him -- enough to leave us feeling that philosophy is worth debunking -- so too does the visionary need a keen instinct for what the debunkers will have to say in response.... The battle between visionaries and debunkers is most fascinating when it takes place within the mind of one individual" (p. 90).

The only way out of this problem, it would seem, is to deny the reality of consciousness -- to say that phenomenal awareness is something that does not really exist, that it is literally an illusion. Or, at least, to say that to the extent that consciousness does exist, it is irrelevant to behavior: That is, consciousness plays no role in the operation of a virtual machine -- a software program that processes stimulus inputs and generates appropriate response outputs, just like a real machine would (which is what computational functionalists think the mind is).

This variant on functionalism appears to be Dennett's position in Consciousness Explained (1991). According to his "Multiple Drafts" theory of consciousness, qualia are things that just happen to happen while stimulus information from the environment is being processed and responses to it are being generated. And intentionality doesn't exist either -- it's just a rhetorical "stance" we take when we talk about mind and behavior (Dennett also wrote a book entitled The Intentional Stance).

If reduction fails, as Searle says it does, then the only way out for a materialist is to deny the reality of consciousness. For Dennett, consciousness is literally an illusion, only one of many "multiple drafts" of phenomenology, and plays no role in the operation of a virtual machine. When we ask people what they are thinking, one of these "drafts" pops into mind. But that draft has no privileged status. It's just one of many things running along in parallel connecting inputs and outputs. For Dennett, only behavior matters, and only behavior actually exists. Conscious mental states don't have any special significance, and they don't play any causal role in what is going on. For Dennett, it's behavior that matters, not phenomenology, because:

Those who recognize in Dennett the earlier arguments of John B. Watson are onto something. For Dennett, the evidence for consciousness is to be found in behavior. First-person subjectivity doesn't count because it's an illusion -- just one of many "multiple drafts" of phenomenology, none of which have any causal impact on behavior or the world.