The Neural Correlates of Consciousness

"The feeling of an unbridgeable gulf between consciousness and brain-process.... When does this feeling occur in the present case? It is when I (for example) turn my attention in a particular way on to my own consciousness and, astonished, say to myself: THIS is supposed to be produced by a process in the brain! -- as it were clutching my forehead".

Colin McGinn (he of Mysterianism) has put the same feeling another way, reviewing a book of popular neuroscience ("Can the Brain Explain Your Mind?" [review of The Tell-Tale Brain: A Neuroscientist's Quest for What Makes Us Human by V.S. Ramachandran], New York Review of Books, 03/24/2011):

Is studying the brain a good way to understand the mind? Does psychology stand to brain anatomy as physiology stands to body anatomy? In the case of the body, physiological functions -- walking, breathing, digesting, reproducing, and so on -- are closely mapped onto discrete bodily organs, and it would be misguided to study such functions independently of the bodily anatomy that implements them. If you want to understand what walking is, you should take a look at the legs, since walking is what legs do. Is it likewise true that if you want to understand thinking you should look at the parts of the brain responsible for thinking?

Is thinking what the brain does in the way that walking is what the body does?

***

But there is a prima facie hitch with this approach: the relationship between mental function and brain anatomy is nowhere near as transparent as in the case of the body -- we can't just look and see what does what.

***

Why is neurology so fascinating? It is more fascinating than the physiology of the body -- what organs perform what functions and how. I think it is because we feel the brain to be fundamentally alien in relation to the operations of mind -- as we do not feel the organs of the body to be alien in relation to the actions of the body. It is precisely because we do not experience ourselves as reducible to our brain that it is so startling to discover that our mind depends so intimately on our brain.... How can the human mind -- consciousness, the self, free will, emotion, and all the rest -- completely depend on a bulbous and ugly assemblage of squishy wet parts? What has the spiking of neurons got to do with me?

What, indeed?

More recently, Thomas Nagel expressed the same amazement. Nagel became somewhat notorious for his 2012 book Mind and Cosmos: Why the Materialist Neo-Darwinian Conception of Nature is Almost Certainly False -- which one reviewer branded as "the most despised science book of 2012" ("An Author Attracts Unlikely Allies" by Jennifer Schuessler, New York Times, 02/07/2013); Steven Pinker tweeted that the book displayed "the shoddy reasoning of a once-great thinker". The crux of Nagel's argument is that it is extremely unlikely that random evolutionary processes would have produced consciousness, meaning, and moral values. Therefore, their evolution must have been the product of some sort of "cosmic disposition" -- at the very least a teleological tendency to evolve from simpler to more complex structures; more dramatically, a universe "gradually waking up" to consciousness. For this reason, some of his colleagues accused him, although he is an avowed atheist, of siding with creationists against evolutionary theory itself. And indeed, some creationists have interpreted his work as taking their side in their battle with a purely materialist conception of the universe. Still, Nagel's argument is familiar, and consistent with his argument in "What Is It Like To Be a Bat?". That is, standard reductionist accounts of consciousness (and the products of consciousness, like moral values), whether grounded in evolutionary theory or neuroscience, leave something out -- which is "what it's like" to be conscious.

Consciousness is the most conspicuous obstacle to a comprehensive naturalism that relies only on the resources of physical science. The existence of consciousness seems to imply that the physical description of the universe, in spite of its richness and explanatory power, is only part of the truth, and that the natural order is far less austere than it would be if physics and chemistry accounted for everything.

***

Even with the brain added to the picture, they clearly leave out something essential, without which there would be no mind. And what they leave out is just what was deliberately left out of the physical world by Descartes and Galileo in order to form the modern concept of the physical, namely subjective appearances.

Because they can't explain subjective experience, they can't be the correct explanation of reality, because reality includes subjective experience. A correct explanation would encompass both the ontologically objective world of stars, planets, tectonic plates, life, and photosynthesis, and the ontologically subjective world of consciousness. Since standard accounts can't do this, they must be incomplete -- and, in that sense at least, wrong. This is not anything like a creationist argument. It's just an argument that the standard materialist worldview is incomplete. Nagel suggests that what we need instead is a new intellectual revolution, something of the same magnitude as Einstein's theory of relativity (or Darwin's theory of evolution?), only after which will we have the conceptual equipment to understand how brain states produce consciousness.

What would such a theory look like?

For a sympathetic but ultimately critical review, see "Awaiting a New Darwin" by H. Allen Orr, New York Review of Books, 02/07/2013. See also "The Nagel Flap: Mind and Cosmos" by John H. Zammito, Hedgehog Review, Fall 2013.

Chalmers's "hard problem", of how brain states produce consciousness, may not be solved in our lifetime -- or ever. But psychology and neuroscience can make progress on an easier problem -- which is to identify those brain structures and processes that are responsible for consciousness.

From a neuroscientific perspective, this problem is addressed in terms of what the neuroscientist Christof Koch has called the neural correlates of consciousness (or NCCs). But now the question has been rephrased, from how brain states cause consciousness to which brain states are associated with consciousness.

As a matter of historical accuracy, it should be noted that the connection between brain and consciousness is a relatively recent intellectual insight. When they mummified the dead, the ancient Egyptians removed and discarded the brain, while preserving the internal organs, because they thought that the mind resided in them. Similarly, Aristotle held the view that sensations arose from the heart, and that the function of the brain was to cool the blood. Hippocrates and Galen intuited that the brain might have something to do with mind, but this idea did not really take hold until the 17th century, when Thomas Willis, an English physician, produced Cerebri anatome (1664), the first detailed atlas of the brain (with drawings by Christopher Wren, architect of St. Paul's cathedral in London). Still, the equation of the heart with the mind didn't stop governments from decapitating accused criminals -- as in the case of St. Paul, a Roman citizen who was beheaded for the crime of being a Christian. So somebody must have had the idea that the brain did something important!

In some respects, the neural correlates of consciousness are the "Holy Grail" of cognitive neuroscience. Whatever our dualistic inclinations might be as psychologists, we know that the brain is the physical basis of mind. Working on a piecemeal basis, we already know about how the brain mediates many mental functions involved in sensation and perception, attention, memory, language, and the like. We know, for example, that there are different parts of the brain involved in various aspects of vision. In the same way, we ought to be able to figure out which parts of the brain are crucial for consciousness. That won't answer the question of How brain processes generate consciousness, but it will answer the question of Which brain processes generate consciousness -- which is still no trivial achievement.

According to Koch, the task of identifying NCCs rests on the principle of covariance -- that is, for each and every conscious event, there is a corresponding brain event. In his preferred formulation, the question, Where in the brain do these events occur? may be rephrased as follows: What are the "minimal neuronal mechanisms jointly sufficient for any one specific conscious percept"?

Just as a reminder, the question of NCCs is predicated on some version of identity theory:

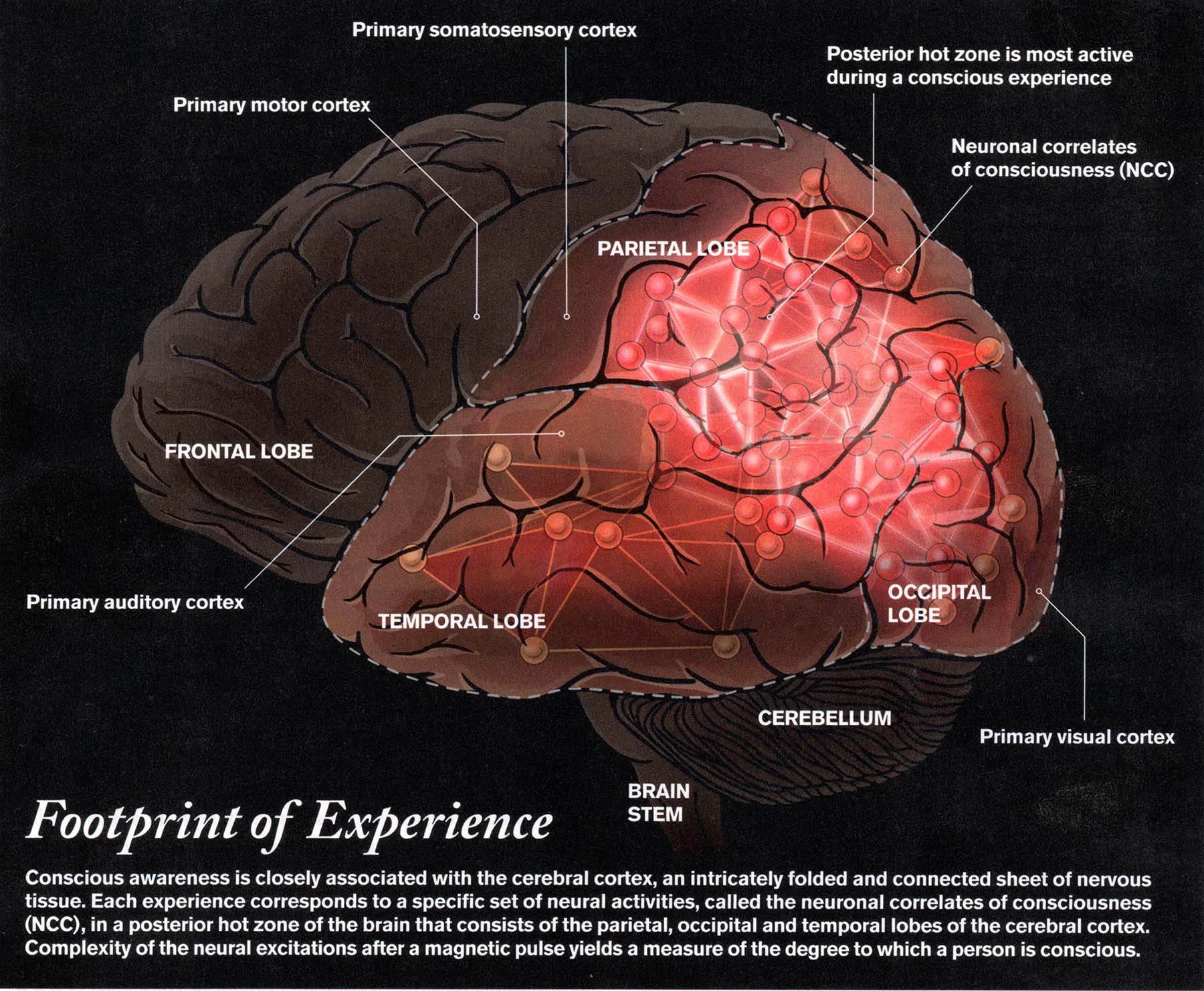

In a recent essay, Koch has engaged in a kind of process of elimination to identify the minimal NCC ("What is Consciousness?", Scientific American, 06/2018, from which the illustration is taken):

So what might be going on in the posterior hot zone (PHZ)? Koch himself has explored a number of possibilities. The most important thing to note is that the PHZ contains the various sensory projection areas -- for vision, audition, and touch. In vision, for example, various qualities of sensation -- shape, color, etc. -- are represented by neural ensembles (NEs). When the various NEs fire simultaneously, they combine to create a neural representation of the entire sensory experience -- what Koch calls an objectNE. This follows the principle, due to the Canadian neurophysiologist Donald O. Hebb and often summarized as "neurons that fire together, wire together". That brings us to...

Along with Francis Crick (the Nobel laureate who, with James Watson, discovered the double-helix structure of DNA), Koch earlier proposed that these "minimal neuronal mechanisms" consist of synchronization at 40 hertz (or 40 cycles per second). Crick and Koch (1990) assume the existence of essential nodes in sensory cortex. These are localized bundles of neurons, each of which represents some feature of a stimulus, such as its size, location, shape, color, movement, and the like. Koch proposes that a representation of the stimulus as a whole requires that these essential nodes be connected with each other in a coalition of neurons which, in turn, has reciprocal connections to the attentional system in the brain. The synchronized firing, at 40 Hz, of the essential nodes comprising a coalition of neurons connects the entire coalition to the attentional system, and thus brings the stimulus into conscious awareness. Alternatively, attention could be directed toward a particular stimulus, which would then activate the essential nodes in the relevant coalition and, again, bring the stimulus into awareness.
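To make the notion of "synchronized firing at 40 Hz" a little more concrete, here is a minimal sketch of one common way of quantifying synchrony between two oscillating signals, the phase-locking value. This is an illustration only, not Crick and Koch's method: the node names, the amount of jitter, and the 47 Hz comparison frequency are all hypothetical choices made for the example.

```python
import cmath
import math
import random


def phase_locking_value(phases_a, phases_b):
    """Length of the mean unit vector of the phase differences.

    Values near 1 mean the two signals keep a constant phase relation;
    values near 0 mean their phase relation drifts.
    """
    vectors = [cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b)]
    return abs(sum(vectors) / len(vectors))


def oscillator_phases(freq_hz, n_samples, sample_rate_hz, jitter_sd, rng, offset=0.0):
    """Phase (in radians) of a noisy oscillator sampled at a fixed rate."""
    return [
        2 * math.pi * freq_hz * (i / sample_rate_hz) + offset + rng.gauss(0.0, jitter_sd)
        for i in range(n_samples)
    ]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
N_SAMPLES, SAMPLE_RATE = 2000, 1000.0  # two seconds sampled at 1 kHz (assumed)

# Two hypothetical "essential nodes" (say, shape and color) both oscillating at 40 Hz.
node_shape = oscillator_phases(40.0, N_SAMPLES, SAMPLE_RATE, 0.2, rng)
node_color = oscillator_phases(40.0, N_SAMPLES, SAMPLE_RATE, 0.2, rng, offset=0.3)
# A third hypothetical node oscillating at a different frequency.
node_other = oscillator_phases(47.0, N_SAMPLES, SAMPLE_RATE, 0.2, rng)

plv_synchronized = phase_locking_value(node_shape, node_color)
plv_unsynchronized = phase_locking_value(node_shape, node_other)
print(f"same frequency: {plv_synchronized:.2f}, different frequency: {plv_unsynchronized:.2f}")
```

On this toy picture, the two nodes that share the 40 Hz rhythm would count as members of the same coalition, while the third node would not. Whether anything like this measure captures what matters for awareness is, of course, exactly the question at issue.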

For more on the distinction between attention that is captured by a stimulus, in a bottom-up fashion, and attention that is deployed to a stimulus, in a top-down fashion, see the Lecture Supplements on "Attention and Automaticity".

In a revision of the synchronization theory, Koch (2004) has proposed that synchronized firing is necessary, but not sufficient, for conscious awareness. What synchronized firing does do, according to this revised view, is bind the various physical features together into a unitary internal perceptual representation of a stimulus -- for example, a white soccer ball with blue and gold details moving away from the observer from the lower left quadrant to the upper right quadrant of visual space. This unified percept would remain unconscious until the entire coalition of neurons fired in synchrony with an ensemble of consciousness neurons.

Yet another pair of neuroscientists, Gerald Edelman and Giulio Tononi (2001), have proposed that what they call the dynamic core is the key to consciousness. According to Edelman's theory of neural Darwinism, neural development proceeds by means of the selection of neurons. Neural connections that fire a lot are preserved, while neural connections that go unused are pruned away, in a manner analogous to natural selection. In their view, consciousness arises as a function of integrated and synchronized neural activity involving a large number of neurons -- and, even more important, a large number of connections among neurons that permit them to influence each other's activity -- and in particular, a large number of re-entrant connections that permit not only the influence of Neuron A on Neuron B, but also the influence of Neuron B on Neuron A.

According to Edelman and Tononi, the dynamic core resides in the thalamocortical system of the brain, consisting of a bundle of neural fibers (white matter) connecting the thalamus to various locations of the cerebral cortex (grey matter). Interestingly, the thalamocortical system is larger in humans than in other animals. In normally conscious individuals, thalamocortical activity oscillates between "feedforward" transmission from the thalamus to the cortex and "feedback" transmission from the cortex to the thalamus. Disruption of the thalamocortical system can result in disruptions of consciousness, as in certain forms of epileptic seizures.

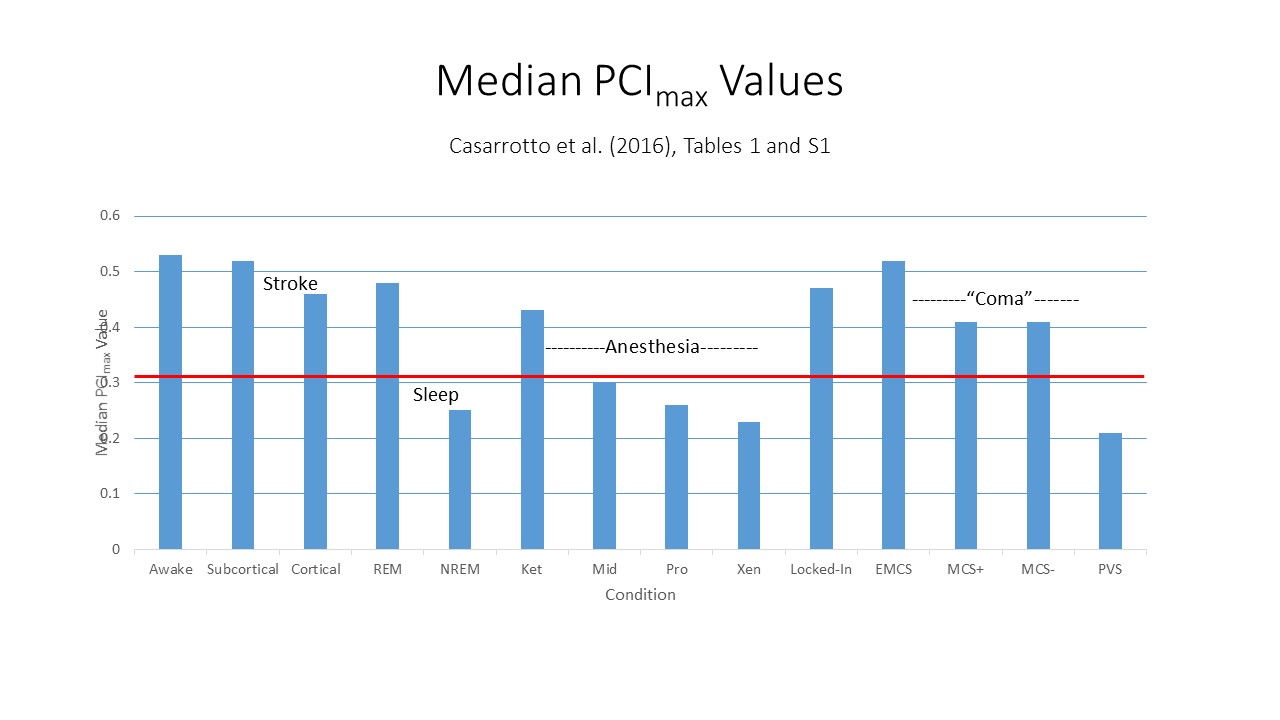

The Perturbational Complexity Index (PCI, symbolized by the Greek letter phi), described in the lectures on Mind and Body, is based on Edelman's and Tononi's work -- especially the latter's. Recall from that presentation that the transcranial magnetic stimulation (TMS) which provides the "zap" in "Zap and Zip" is aimed at the thalamocortical system, and that the response to this stimulus is measured in the cerebral cortex. In the 2018 essay cited above, Koch promotes the PCI as a "consciousness" meter which can determine whether, and to what extent, a person has conscious awareness.
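The "zip" half of "Zap and Zip" can be illustrated with a small sketch. The idea is that a cortical response which is both widespread and differentiated is hard to compress, while a stereotyped response is easy to compress. The code below is a toy under loud assumptions: the response matrices are simulated, the threshold is arbitrary, and Python's general-purpose `zlib` compressor stands in for whatever complexity measure and normalization the published index actually uses. It does not compute a real PCI.

```python
import random
import zlib


def binarize(response, threshold):
    """Flatten a channels-by-time response into a sequence of 0/1 bytes."""
    return bytes(1 if value > threshold else 0 for row in response for value in row)


def compressibility_index(binary_pattern):
    """Compressed size divided by raw size; higher means harder to compress."""
    return len(zlib.compress(binary_pattern, 9)) / len(binary_pattern)


rng = random.Random(1)  # fixed seed so the sketch is repeatable
CHANNELS, SAMPLES = 32, 200  # hypothetical recording dimensions

# Stereotyped response: every channel follows the same slow on/off wave.
stereotyped = [
    [1.0 if (t // 25) % 2 == 0 else 0.0 for t in range(SAMPLES)]
    for _ in range(CHANNELS)
]
# Differentiated response: each channel does something different at each moment.
differentiated = [[rng.random() for _ in range(SAMPLES)] for _ in range(CHANNELS)]

index_stereotyped = compressibility_index(binarize(stereotyped, 0.5))
index_differentiated = compressibility_index(binarize(differentiated, 0.5))
print(f"stereotyped: {index_stereotyped:.3f}, differentiated: {index_differentiated:.3f}")
```

The stereotyped pattern compresses to a small fraction of its raw size, while the differentiated one does not. Note that pure noise would also score high on this toy index, which is one reason a compressibility score by itself should not be read as a measure of consciousness.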

Koch also links PCI to the global neuronal workspace theory (GNWT) of consciousness, originally offered by Bernard Baars (as the "global workspace" theory, GWT) and also endorsed by Stanislas Dehaene and Jean-Pierre Changeux, two prominent French psychologists. As discussed in the lectures on Mind and Body, in GNWT consciousness is analogous to a mental blackboard, whose contents are accessible to a wide variety of different cognitive systems -- perception, language, and problem-solving processes (this is the function assigned to working memory by other cognitive psychologists). Koch believes that the PHZ is the neural basis of the global workspace, providing an area where various sensory inputs can be integrated with each other and with other systems. Why all this integration shouldn't go on outside of conscious awareness, and why this area should generate conscious awareness of what is going on in it (or, alternatively, what it is doing), isn't clear. But setting aside the problems with the PCI, also discussed in the lectures on Mind and Body, at least there are principled empirical and theoretical reasons for thinking that the PHZ has something to do with consciousness.

Searle (1992) has characterized the program for identifying the NCCs as follows:

Again, note that the psychology comes first, and the success of the neuroscientific enterprise depends utterly on the validity of the description of mental functions at the psychological level of analysis.

But answering this question depends on the definition we adopt of consciousness itself. Consider, for example, the various definitions of consciousness offered by John Searle (1992), or those listed by Rinder & Lakoff (1999):

In the search for the neural correlates of consciousness, the general experimental tactic is to vary the subject's state of consciousness, and then observe correlated changes in brain state. Which of these aspects of consciousness, then, will we correlate with brain activity? Different choices are likely to yield different neural correlates of consciousness. Thus, the rationale for the selection has to be carefully considered. This is not a trivial issue, precisely because there are so many different definitions of consciousness around.

Consider, for example, the simple (and commonplace) identification of consciousness with "wakefulness" or "alertness". If you're going to uncover the neural correlates of "wakefulness", the first question is "wakefulness compared to what?". As it happens, there are lots of different conditions that contrast with ordinary waking consciousness:

There are also fringe cases:

We will discuss many of these states more completely in the lectures on "Anesthesia", "Coma", and "Sleep". For now, let's draw just a simple contrast among three of them:

In coma, the person's eyes are permanently closed (unless he recovers). Coma has been characterized as a complete loss of consciousness.

In the vegetative state, the person's eyes open and close, following something like the normal cycle of waking and sleeping. Because the patient's eyes are sometimes open, and also because the patient is sometimes responsive to intense stimulation, the vegetative state has been characterized as wakefulness without consciousness.

In the locked-in syndrome the person can respond reflexively to auditory and visual stimuli. Because these patients can communicate through blinking and certain eye movements, the locked-in syndrome can be characterized as full consciousness.

On the basis of such considerations, Steven Laureys (2005), a Belgian neurologist, has proposed that there are not one but rather two continua of consciousness -- one having to do with wakefulness and the other having to do with awareness. Various conditions can then be located within the resulting two-dimensional space.
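The two-dimensional scheme can be sketched as a small lookup table. The placements below are coarse "low"/"high" labels taken from the three-way contrast drawn above (coma, vegetative state, locked-in syndrome), plus ordinary waking for comparison. They are not Laureys's actual coordinates, and the names `State`, `STATES`, and `dissociated` are invented for the illustration.

```python
from typing import NamedTuple


class State(NamedTuple):
    wakefulness: str  # "low" or "high"
    awareness: str    # "low" or "high"


# Coarse placements based on the characterizations in the text (assumed labels).
STATES = {
    "coma": State(wakefulness="low", awareness="low"),
    "vegetative state": State(wakefulness="high", awareness="low"),
    "locked-in syndrome": State(wakefulness="high", awareness="high"),
    "ordinary waking": State(wakefulness="high", awareness="high"),
}


def dissociated(states):
    """Names of conditions in which wakefulness and awareness come apart."""
    return sorted(name for name, s in states.items() if s.wakefulness != s.awareness)


print(dissociated(STATES))
```

The point of the exercise is simply that a single "conscious versus unconscious" dimension cannot place the vegetative state, where the two dimensions diverge.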

We know that the brain changes observed in coma, for example, are quite different from those observed in sleep. But the patient is "not conscious" in either instance. Which comparison, then, will yield the neural correlate of consciousness? It follows that there must be at least two neural correlates of consciousness -- one that regulates wakefulness, and one that regulates conscious awareness per se.

But for a start, let's look briefly at the lower left quadrant of Laureys's graph, where coma reflects the absence of both wakefulness and awareness -- the epitome of loss of consciousness. We know that coma is typically associated with damage to the upper posterior portion of the brainstem -- a structure known as the reticular system, including the periaqueductal gray and the parabrachial nucleus. Sometimes, the thalamus is also damaged. The reticular formation (RF) sends activation upward to the thalamus (which is why it is called the reticular activating system), which in turn distributes the activation to the rest of the cortex. The RF also governs the sleep-wake cycle. As a consequence, damage to the RF will pretty much put the rest of the brain out of commission. So, if consciousness is defined as wakefulness, then the reticular formation is the neural correlate of consciousness.

A somewhat different analysis of consciousness has been proposed by Dehaene et al. ("What is Consciousness, and Could Machines Have It?", Science, 10/27/2017). Like Laureys, they believe that consciousness varies along two orthogonal (or independent) dimensions: global availability and self-monitoring. They refer to these aspects of consciousness as C1 and C2, respectively.

But C1 and C2 also work together in a synergetic fashion. For example, by bringing metacognitive information into the global workspace, people are able to share their individual confidence levels, perhaps leading to superior group performance.

Comatose patients or sleeping subjects are not conscious in the sense that they are not awake, but they are also not conscious in the sense that they are not aware of events in their environment (or, except perhaps in the case of dreaming, of events in their own minds). So if we discover the neural correlate of coma, or the neural correlate of sleep, we do not necessarily know whether that is the neural correlate of wakefulness or of awareness.

So, let's first move outward along the wakefulness continuum, and then upward along the awareness continuum.

As it happens, the persistent vegetative state (PVS) is defined as wakefulness without awareness, because PVS patients go through the normal sleep-wake cycle, but even when "awake" don't respond to stimulation. PVS patients have damage in the same midbrain area as do comatose patients, if perhaps not so extensive, which again suggests that some portions of the reticular system are critical for wakefulness, and others are critical for awareness.

In a variant on the PVS, known now as the minimally conscious state (MCS), patients show some (limited, occasional, inconsistent) differential responsiveness to commands and other stimulation. The MCS involves the same sort of thalamic damage as the PVS, but apparently not severe enough to abolish awareness entirely.

Just to strengthen the case, patients with the locked-in syndrome, who show both wakefulness (i.e., normal sleep-wake cycles) and awareness (though it is difficult for them to demonstrate this behaviorally), suffer a pattern of brain damage that differs markedly from that observed in coma. In the locked-in syndrome the damage is in the upper anterior portion of the brainstem, and excludes the reticular formation. Because the reticular formation is spared, consciousness and the sleep-wake cycle are also spared. Again, the damage is in areas above the trigeminal nerve (cranial nerve V), so that the patient is able to communicate (and demonstrate awareness) by means of eye movements.

Link to a National Public Radio story on a case of locked-in syndrome.

So now we have the first NCC: the reticular system in the brainstem sends signals "up" to the rest of the brain to keep it in a state of arousal or wakefulness. That's why we call it the reticular activating system (RAS). If the RAS is damaged, and can no longer perform this function, the person lapses into a coma, or permanent state of unconsciousness. Even if the RAS is intact, damage to the thalamus, generally considered to be a relay station in the brain, will have the same effect.

As will be discussed in detail later, many psychologists and other cognitive scientists associate consciousness with attention. Attention brings some object or event into consciousness, and we become conscious of an object or event by paying attention (or having attention drawn) to it.

Posner and Petersen (1990) distinguished among three aspects of attention. And as it turns out, each of these has its own neural correlate:

Of particular relevance here is a neurological condition called neglect syndrome, often secondary to a stroke, in which patients appear unaware of objects in the visual field contralateral to their lesion. For example, if a patient with a lesion in the right hemisphere is asked to bisect a horizontal line, he or she may draw a line about 1/4 of the way in from the right. It is as if the patient does not notice the left half of the stimulus. The problem is not one of sensation or perception, because there is no damage to the visual areas of the brain. Instead, the problem appears to lie in the brain system(s) mediating visual attention.

Link to a YouTube video demonstrating unilateral visual neglect.

In some sense, then, Balint's syndrome can also be thought of as a disorder of consciousness -- a failure to be consciously aware of two things at once. When Balint's syndrome occurs, it is often associated with bilateral damage to the posterior parietal cortex.

Link to a YouTube video demonstrating Balint's syndrome.

On the basis of an experimental study of neglect patients, Posner et al. (1984) also identified three component executive processes of attention. Each of these processes appears to have its own particular neural substrate as well. Together, they comprise what has become known as the dorsal attention network (DAN):

According to a theory proposed by Zirui Huang of the University of Michigan (Science Advances, 2020), consciousness involves a constant shifting of attention outward, toward some task, or inward, toward task-unrelated thought. As discussed later in the lectures on Absorption and Daydreaming, task-unrelated thought is mediated by a different neural network, known as the default mode network (DMN). In Huang's theory, the DAN and DMN are "anticorrelated": the neural correlate of consciousness alternates between the DAN and DMN, depending on what the subject is paying attention to. Another way of putting it, I suppose: if you're conscious you're paying attention to something, but the neural correlate of consciousness depends on what you're paying attention to.
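What it means for two networks to be "anticorrelated" can be shown with a short simulation. This is a toy sketch, not Huang's analysis: the activity traces are invented, with a hypothetical attentional focus that drifts between outward (+1) and inward (-1), and the noise level is an arbitrary choice.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(2)  # fixed seed so the sketch is repeatable

# Hypothetical focus of attention: +1 is fully outward (task), -1 fully inward.
focus = [math.sin(2 * math.pi * t / 60) for t in range(600)]

# Simulated network activity: the DAN tracks outward focus, the DMN inward focus.
dan_activity = [f + rng.gauss(0.0, 0.3) for f in focus]
dmn_activity = [-f + rng.gauss(0.0, 0.3) for f in focus]

r = pearson_r(dan_activity, dmn_activity)
print(f"DAN-DMN correlation: {r:.2f}")
```

A strongly negative coefficient is what "anticorrelated" amounts to: when one network's activity goes up, the other's tends to go down.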

Related to the identification of consciousness as attention is the identification of attention with a memory system formerly known as primary or short-term memory, and now generally known as working memory.

For more information on primary and working memory, click on "Memory" in the bSpace navigation bar to get to the Lecture Supplements on "Memory" (Psychology 122); then click on "Primary" in the navigation bar at the top of the page.

Gradually, cognitive psychologists replaced the concept of short-term memory with working memory (Baddeley & Hitch, 1974). For all intents and purposes, working memory is the same as primary or short-term memory, but short-term memory seemed to imply passive storage -- information just sat in short-term memory while it was being rehearsed. Working memory has more active implications -- information resides in working memory while it is being used in other cognitive tasks.

Moreover, the classical multi-store model of memory considered primary (short-term) memory as an intermediary between the sensory registers and long-term (secondary) memory. But Baddeley and Hitch proposed that information is processed directly into long-term memory, with no need for an intermediate way-station like short-term memory. Then, when the cognitive system wants to use knowledge stored in long-term memory, it copies it into working memory.

In Baddeley's original formulation, working memory consisted of a central executive which controls several slave systems: a phonological loop holds speech-based information, and generates "subvocal" speech (i.e., mental repetition) during rehearsal; a visuo-spatial sketchpad rehearses visual information in the form of mental images; and there are, presumably, other slave systems for other modalities. The slave systems maintain items in an active state. These are very transient memories: once rehearsal stops, the items disappear from working memory; and newly incoming information will replace old information.

In more contemporary formulations, working memory consists of temporary memory representations that have been activated by perception or memory. These representations have been activated precisely because they are relevant to current cognitive tasks, and they are used to guide thought and action.

A brain-imaging study by Passingham and his colleagues revealed a number of locations that are activated when subjects perform working memory tasks, including areas of the anterior and dorsolateral prefrontal cortex, the superior parietal sulcus, and the inferior frontal gyrus. In addition, some of these areas were more active when subjects performed a verbal working memory task, and others were more active when subjects performed a visuo-spatial task.

Setting aside the question of people who are in some sense totally unconscious, there are experimental problems posed by the variety of conscious experiences had by people who are in every sense of the word conscious. Consider, for example, the technical definition of consciousness in terms of qualia, or the "raw feels" of sensory experience. There is the conscious experience of vision and hearing, of blue and red, sweet and sour.

According to Müller's Doctrine of Specific Nerve Energies, each modality of sensation is associated with a different set of neural structures, and in particular a different projection area in the cerebral cortex.

According to Helmholtz's Doctrine of Specific Fiber Energies, each quality of sensation is associated with a different set of neural structures. We have already seen how this works out in the case of the trichromatic and opponent-process theories of color vision.

The situation is complicated because there are certain conditions in which people are awake, and thus conscious, but not aware of certain things that are going on (or have gone on) around them -- and therefore, in some sense, unconscious. We'll discuss these cases at length later in the course, but for now here's a taste:

Amnesic patients, who have suffered damage to the hippocampus and associated structures of the medial temporal lobe memory system, do not consciously remember their recent experiences, but "implicit" memory for these same experiences can manifest itself unconsciously in the form of "priming" and other effects. The implication is that the hippocampus (etc.) is the neural correlate of conscious memory. That's fine, but....

"Blindsight" patients, who have suffered damage to the striate cortex and associated structures of the occipital lobe, do not consciously see objects presented to their scotoma, but perception of these same objects can manifest itself unconsciously much after the manner of "implicit" memory. The implication is that striate cortex is the neural correlate of conscious vision, but of course the striate cortex is irrelevant to conscious memory, not to mention conscious hearing.

There are also cases of deaf hearing, where patients can make above-chance judgments about the auditory properties of stimuli that they cannot hear, and also of numbsense, where patients can make above-chance judgments about the tactile properties of stimuli that they cannot feel. Just as blindsight supports the suggestion that V1 is a neural correlate of conscious (as opposed to unconscious) vision, so deaf hearing and numbsense support the suggestion that A1 and S1 are neural correlates of conscious (as opposed to unconscious) hearing and touch, respectively.

Spared priming in amnesia exemplifies a dissociation between explicit (conscious) and implicit (unconscious) memory, just as blindsight, deaf hearing, and numbsense exemplify a dissociation between explicit (conscious) and implicit (unconscious) perception. I'll have more to say about these phenomena in the lectures on "The Explicit and the Implicit", later in the course.

For now, it's only necessary to underscore that amnesic patients are not conscious of past events stored in memory, but they retain a full capacity for conscious seeing; by contrast, blindsight patients are unconscious of visual stimuli, while they're fully capable of consciously recollecting their past. The implication is that the neural correlates of consciousness will differ for memory as opposed to perception, and for visual perception as opposed to auditory perception.

Put another way, there's no single neural correlate of consciousness. The neural correlate of consciousness will depend on what the person is conscious of. Damage to the hippocampus may deprive a patient of conscious access to memory, while damage to Area V1 may deprive a patient of conscious access to visual percepts.

In an early attempt to account for the sorts of explicit-implicit dissociations observed in amnesia and other neurological syndromes, Schacter (1990) suggested that our mental architecture might contain a module specifically dedicated to conscious awareness -- a module which he called the conscious awareness system (CAS). Based on the neuroscientific doctrine of modularity, he argued that our mental apparatus contained a number of separate modules devoted to processing various sorts of information -- lexical, conceptual, facial, spatial, episodic memory, and the like, each associated with a fixed neural architecture. Each of these has independent connections to the CAS, and each of them has independent connections to systems mediating response outputs. A disconnection between the episodic memory module, say, and the CAS would prevent the person from consciously remembering past experiences. But it would not prevent that same patient from being consciously aware of visual or auditory stimuli. And it would not prevent knowledge stored in episodic memory from influencing the person's experience, thought, or action -- for example, through priming effects.

According to the doctrine of modularity, each mental module is associated with a fixed neural architecture. The implication is that the CAS is represented physically in a particular part of the brain. Damage to the CAS itself would prevent the person from being conscious of anything. Damage to the specific connection between some processing module and the CAS would prevent the person from being conscious of whatever is processed by that module, without impairing consciousness in general. Exactly where the CAS is located in the brain isn't known -- though, perhaps, the thalamocortical system might be a pretty good place to look. The more specific impairments of consciousness, however, as in amnesia or blindsight, won't reveal the neural correlate of the CAS -- because, in these cases, the problem is in the connection between the CAS and some other mental module. For that, we have to look somewhere in white matter, and use techniques such as Diffusion Tensor Imaging (DTI), a variant of MRI which can reveal the structure of white-matter tracts.
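The logic of this proposal can be written down as a toy data structure. What follows is only a minimal sketch, not Schacter's own formalism: the class names `Module` and `Mind`, the boolean "links", and the methods `explicit` and `implicit` are all hypothetical, introduced purely for illustration. The sketch assumes that explicit (conscious) access needs both an intact CAS and an intact module-to-CAS connection, while implicit influence needs only the module's connection to the response systems.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """A processing module (hypothetical representation)."""
    name: str
    linked_to_cas: bool = True      # connection to the conscious awareness system
    linked_to_output: bool = True   # connection to response systems


@dataclass
class Mind:
    """A set of modules sharing one conscious awareness system (CAS)."""
    cas_intact: bool = True
    modules: dict = field(default_factory=dict)

    def add(self, name: str) -> None:
        self.modules[name] = Module(name)

    def explicit(self, name: str) -> bool:
        # Conscious access: CAS intact AND this module still connected to it.
        return self.cas_intact and self.modules[name].linked_to_cas

    def implicit(self, name: str) -> bool:
        # Unconscious influence (e.g., priming): only the output link is needed.
        return self.modules[name].linked_to_output


# "Amnesia" in this toy model: episodic memory is disconnected from the CAS.
patient = Mind()
for module_name in ("episodic memory", "vision"):
    patient.add(module_name)
patient.modules["episodic memory"].linked_to_cas = False

print(patient.explicit("episodic memory"))  # conscious recollection is lost
print(patient.implicit("episodic memory"))  # priming is spared
print(patient.explicit("vision"))           # conscious seeing is spared
```

Setting `cas_intact = False` instead would abolish explicit access for every module at once, which is the sketch's version of the claim that damage to the CAS itself would prevent the person from being conscious of anything.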

All of this is, admittedly, speculative, just to provide some sense of how the neural correlates of consciousness might be identified. But again, the major point is that the neural correlate of consciousness is likely to differ depending on what the person is (or is not) conscious of. If the person is conscious of a visual percept, the striate cortex appears to be critical. If the person is conscious of a past event, then the hippocampus appears to be critical.

Let's make this point one more time, in a different way. Imagine, for example, that patterns of brain activity revealed by PET, fMRI, or any other brain-imaging technology differ, depending on what the person is doing, mentally. These PET images were collected by Hanna Damasio (and published in the New York Times, 05/07/2000) while subjects were consciously thinking various kinds of thoughts -- pleasant or depressing, anxious or irritating. The neural correlates of these conscious thoughts each differed from the others.

In order to obtain these images, Damasio averaged cortical activity across a number of different pleasant vs. unpleasant (etc.) thoughts. But, in principle, it ought to be possible to obtain the neural signatures of particular individual mental states.

In fact, it is possible, not just in principle, but empirically, and not just in the future, but now. In a study by UCB professor Jack Gallant and his colleagues (Kay et al., Nature, 2008), two subjects viewed 1750 photographs of naturalistic scenes (animals, buildings, people), one at a time, while their brain activity was recorded by fMRI. The research takes advantage of the topographic organization of the visual system, in which each point in the visual field projects to a specific point on the retina, and this spatial information is carried through to the visual cortex (Areas V1-V3) in the brain. Software designed by Gallant then created a receptive-field model for each voxel in fMRI images of the visual area while the two subjects viewed each of the images. In this way, Gallant's program "learned" to associate particular images with particular patterns of brain activity.

When the subjects then viewed a new set of 120 images, the software was able to "guess" with approximately 82% accuracy which image the subjects were viewing (the baserate for such guesses, by chance alone, would be a mere 0.8%).
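The identification step described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not Gallant's software: the "images" are random feature vectors, the "voxels" are simulated as noisy linear functions of those features, and the per-voxel model is a plain ridge regression rather than the receptive-field model used in the actual study. The function name `identify_images` and all of its parameters other than the 1750 training and 120 test images are hypothetical. The sketch fits a model for each voxel on the training images, predicts the activity pattern that each new image should evoke, and then "guesses" the image whose predicted pattern best correlates with the observed one.

```python
import numpy as np


def identify_images(n_train=1750, n_test=120, n_features=50, n_voxels=200,
                    noise_sd=1.0, ridge=1.0, seed=0):
    """Return (identification accuracy, chance rate) on simulated data."""
    rng = np.random.default_rng(seed)

    # Assumed ground truth: every voxel responds linearly to image features.
    true_weights = rng.normal(size=(n_features, n_voxels))

    def measure(features):
        # Simulated fMRI response: linear signal plus measurement noise.
        noise = noise_sd * rng.normal(size=(features.shape[0], n_voxels))
        return features @ true_weights + noise

    # Training phase: fit one linear model per voxel (closed-form ridge).
    train_x = rng.normal(size=(n_train, n_features))
    train_y = measure(train_x)
    gram = train_x.T @ train_x + ridge * np.eye(n_features)
    weights = np.linalg.solve(gram, train_x.T @ train_y)

    # Test phase: new images the model has never seen.
    test_x = rng.normal(size=(n_test, n_features))
    observed = measure(test_x)
    predicted = test_x @ weights

    def zscore(a):
        return (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)

    # Correlate each observed pattern with every predicted pattern and
    # pick the best match as the guess.
    corr = zscore(observed) @ zscore(predicted).T / n_voxels
    guesses = corr.argmax(axis=1)
    accuracy = float((guesses == np.arange(n_test)).mean())
    return accuracy, 1.0 / n_test


accuracy, chance = identify_images()
print(f"accuracy = {accuracy:.1%}, chance = {chance:.1%}")
```

The chance rate returned here is simply 1/120, or about 0.8%, which is the baserate quoted above. The simulated accuracy depends entirely on the assumed noise level and should not be read as an estimate of what real fMRI data would yield.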

In a second study (Naselaris et al., 2009) Gallant and his colleagues were actually able to reconstruct the images from the subjects' pattern of brain activity -- essentially, by reversing the algorithm that produced the topographical maps created in the 2008 experiment. The reconstructions aren't perfect, owing partly to lack of computational power (for all the power in Gallant's computers, you need even more!). But they're not bad.

The upshot is that Gallant and his colleagues have begun to identify the neural correlates of consciously viewing a particular scene -- not just of conscious seeing, in general. And there was a different pattern of neural activity -- a different neural correlate -- for each percept.

For more on Gallant's research, see:

- "It's Not Mind-Reading, but Scientists Exploring How Brains Perceive the World" (PBS NewsHour, January 2, 2012)

- "Beyond Localization: Detailed Maps of Visual and Linguistic Information Across the Human Brain" (Psychology Department Colloquium, September 4, 2013).

The search for the neural correlates of consciousness is predicated on the assumption that there are certain patterns of neural activity that are both necessary and sufficient for consciousness. That's the implication of psychophysical parallelism, and this assumption is stated expressly by identity theory, and by Koch's principle of covariance. Consciousness is a product of brain activity, and all you need for consciousness is a brain of a particular type (like ours).

This assumption is made clear by the thought experiment of the brain in a vat, proposed by a philosopher, Hilary Putnam, who was inspired in turn by a passage of Descartes' Meditations. Descartes imagined that there might be an "evil demon" who presents an illusory image of the external world to Descartes' sense apparatus, such that all of Descartes' sensory experiences are, in fact, illusory -- put bluntly, there's no external world, only mental representations created by this evil demon. Descartes could wonder whether the world as it appeared to him was really the world as it existed outside the mind (if there was such a world at all); but he couldn't doubt that he was thinking about this problem. Hence, cogito ergo sum.

Similarly, Putnam asked us to imagine that someone's brain has been removed from his skull, supplied with appropriate nutrients, and then connected to a supercomputer which would provide it with inputs and outputs. Accordingly, this brain would continue to perform various cognitive functions, including consciousness of inputs and outputs. But the inputs and outputs themselves would be entirely illusory: the inputs are merely creations of the supercomputer, and for that matter the outputs are just dumped in a wastebasket somewhere. Putnam's question is this:

Good question, and one not just for science fiction. Robert White (1926-2010), a prominent transplant surgeon, Nobel Prize nominee and bioethics advisor to the pope, had as his ultimate goal the transplantation of a human head from one body to another. A devout Catholic, he hoped that such research would answer the question of whether the soul resided in the brain or in the body as a whole. Failing that, he hoped to keep human brains (like Stephen Hawking's) alive in jars, where they could think without the distraction of sensory input. The whole story is told in Mr. Humble and Dr. Butcher: A Monkey's Head, the Pope's Neuroscientist, and the Quest to Transplant the Soul by Brandy Schillace (reviewed by Sam Kean in "Could You Transplant a Head? This Real-Life Dr. Frankenstein Thought So", New York Times Book Review, 03/21/2021).

But instead of answering this question, UCB philosopher Alva Noe thinks that the problem of the brain in a vat reveals a new aspect of the mind-body problem, and suggests that the search for NCCs isn't enough. Consciousness obviously requires a brain, in his view, and the fact that consciousness is a product of brain activity motivates, and justifies, the search for NCCs. However consciousness is defined, the search for the neural correlates of consciousness assumes that they will be found somewhere in the brain -- in the reticular formation, or in the sensory projection areas, or in the hippocampus, somewhere in the brain. It seems obvious that consciousness is located in the brain, not least because consciousness is an aspect of mental life, and mind is what the brain does.

In an essay on art and the emerging field of "neuroesthetics" ("How Art Reveals the Limits of Neuroscience", Chronicle of Higher Education, 09/11/2015), Noe characterizes what he calls "Descartes's conception with a materialist makeover" as follows:

"You are your brain; the body is the brain's vessel; the world, including other people, are unknowable stimuli, sources of irradiation of the nervous system".

Returning to the matter (sorry) of consciousness, Noe argues that consciousness also requires something to be conscious of. Brains are necessary but not sufficient for consciousness. We're a brain in a body, and that body is situated in a world. Consciousness also requires a body and a world to be conscious of. As Noe has stated:

"Consciousness is not something the brain achieves on its own. Consciousness requires the joint operation of the brain, body and world.... It is an achievement of the whole animal in its environmental context."

So consciousness isn't exactly "in there", inside the head. It's more properly located "out there", somewhere in the interaction between mind, body, and world (see Noe's book, Out of Our Heads: Why You Are Not Your Brain, and Other Lessons From the Biology of Consciousness, 2009; also Noe, 2004; Noe & Thompson, 2004).

Noe's got a point: to draw an analogy, the stomach may digest food, but it's the person who feels hungry and eats. Similarly, the brain may be the seat of consciousness, but it's the person who's conscious -- a person who lives in the material world, and in a sociocultural matrix of other people. Brains don't perceive and remember: people do.

At the very least, Noe's position would seem to imply that the brain, while necessary, isn't sufficient for consciousness. The brain has to interact with the rest of the organism, and it also has to interact with the world outside the brain. But in order to interact with the rest of the organism, and with the environment, the brain has to be there in the first place.

Or does it?

In a famous clinical study, Penfield and Jasper (1954) reported on observations made during the surgical removal of large portions of the cerebral cortex, as a radical treatment for epilepsy (the same sort of operation undergone by Patient H.M.). As is usually the case in brain surgery, the operations were performed under only local anesthetic (there is no afference in the brain, and the patients should remain conscious so that the surgeons could avoid cortical tissue known to be needed for functions such as hearing and seeing). Remarkably, the patients were conscious and cooperative throughout the procedure, and there was no interruption of the continuity of consciousness, despite the removal of vast amounts of cerebral cortex. Still, Penfield did not remove all of the patients' neocortex.

Merker (2007) reviewed a body of evidence indicating that both decorticate animals and anencephalic children born without a cerebral cortex nevertheless display signs of consciousness (the latter condition is also known as hydranencephaly, because the missing brain tissue is replaced by cerebrospinal fluid; it is not to be confused with hydrocephaly, a less serious condition in which cortical tissue is present, but is compressed and deformed by enlarged ventricles). For example:

Still, Merker reports that these children respond differentially to familiar versus unfamiliar people, and show distinct preferences for some objects and situations compared to others. They may even initiate activities -- though, by virtue of the fact that they lack a motor cortex, they have severe motor impairments. Such behaviors come closer to what we ordinarily mean by consciousness. But, just to underscore the point, these children are for all intents and purposes lacking any cerebral cortex.

Merker argues that the data from anencephaly suggest that the thalamocortical complex -- that is, the thalamus and all of the cortical structures to which it projects -- is not necessary for consciousness. In both decorticate animals and anencephalic children, however, the midbrain reticular formation is preserved -- as is the cerebellum.

His conclusion, then, is that, even in the absence of a cerebral cortex, the midbrain reticular formation supports "a primary consciousness by which environmental sensory information is related to bodily action (such as orienting) and motivation/emotion" (p.80). For Merker, and for others, what such patients lack (perhaps) is reflective consciousness, or self-consciousness, in which one is aware of seeing, hearing, and so forth.

Of course, it might be the cerebellum, rather than the reticular formation, that's responsible for this. After all, we now know that the cerebellum performs much more complex functions than we formerly appreciated.

As Merker defines it, primary consciousness consists of seeing, hearing, or otherwise experiencing something. Reflective consciousness is being aware that one is seeing, hearing, or otherwise experiencing something. But, absent awareness, it's not clear that there's something it's like to "experience" something without being aware that one is experiencing it. And if there's "nothing it's like" to experience something without being aware of it -- that is, to "experience" something unconsciously -- then it's not at all clear that these patients are conscious after all.

Again, we do not yet know how these structures generate the conscious experience of seeing or hearing, or seeing red vs. seeing green, or whatever. This is the "hard problem" of consciousness, as articulated by David Chalmers. Actually, the hard problem comes in two somewhat different forms.

First, how do brain processes produce consciousness? Discovering that synchronization at 40 Hz, or the activity of the thalamocortical system, is the neural correlate of consciousness at least tells us "where consciousness is" in the brain. But it still doesn't tell us how the brain achieves consciousness. Think of the example of digestion. We know that the gastrointestinal system "does" digestion, in much the same way that the brain "does" consciousness. But we also know how the gut does it. Food passes from the outside world into the stomach, where hydrochloric acid dissolves it into smaller particles, which pass to the small intestine in the form of chyme, where nutrients are absorbed into the blood, whence what's left of the chyme passes to the large intestine, which returns what's left of the food to the outside world in the form of feces. But how does the brain generate mental states?

It's an interesting question -- and it's the one that kept Wittgenstein up all night ("THIS is supposed to be produced by a process in the brain!"), but I have to admit that it's not a question that keeps me up at night. I assume that the brain does it, and I leave it up to neuroscience to figure out how it does it -- to figure out the mechanics.

But we do know that these neural structures, as opposed to other potential candidates, are critical for consciousness. And that's a start.

It seems obvious that any conclusions concerning the neural correlate(s) of consciousness will be constrained by the control condition selected for contrast with consciousness. Control conditions cannot be selected arbitrarily.

It also seems obvious that, as much as some eliminative reductionists might like to substitute "scientific" discourse about neural events for "folk" discourse about mental states, in the final analysis the neural correlates of consciousness are validated against self-reports, and are only as good as the self-reports obtained by the experimenter. The paradox of eliminative reductionism is that, in order to get rid of self-reports, we have to assume that people's self-reports are accurate reflections of their conscious experiences.

So long as you're studying consciousness, there's just no getting away from subjective self-reports.

So what's the second version of the hard problem? It's this: Why do brain-processes produce consciousness? Or, put another way, Why doesn't all that mental activity, interposed between environmental stimulus and organismal response, go on in the dark? Or, put another way, What is consciousness good for? What function does it serve?

Now, that's a good question. And we know it's a good question because there are some philosophers, and other cognitive scientists, including some psychologists (God love them), who think that consciousness isn't good for anything -- that consciousness has no function, and it plays no causal role in the world.

At the very least, these folks are what the philosopher Owen Flanagan would call conscious inessentialists. They admit that we have consciousness, but argue that consciousness is not essential. We have it, and we use it, but we might just as well not have it, because everything we do consciously we could also do unconsciously.

Most radically, they are what Flanagan would call epiphenomenalists -- people who, like Shadworth Hollway Hodgson and Thomas H. Huxley, believe that consciousness plays no causal role in the world. Hodgson apparently said it first, but Huxley -- champion of Charles Darwin, and grandfather of Aldous Huxley, author of Brave New World (and, as we shall see, The Doors of Perception) -- put it best in his steam-whistle analogy:

The consciousness of brutes would appear to be related to the mechanism of their body simply as a collateral product of its working, and to be as completely without any power of modifying that working as the steam-whistle which accompanies the work of a locomotive engine is without influence upon its machinery.

Now, to be fair, Huxley was talking about consciousness in nonhuman animals, whom he considered to be conscious automata, and it's not at all clear that he thought that human consciousness was epiphenomenal (though Hodgson probably did!). But it's a fair question: What function does consciousness play in behavior? Is it anything more than the steam expended by a steam-whistle, which has nothing to do with the locomotive? Is it anything more than the froth on a wave (another common analogy), which has nothing to do with the action of the wave on ship or shore?

The answer to that question might be obvious, but it apparently isn't obvious to everyone. But answering that question, and for that matter identifying the neural correlates of consciousness, requires that we be able to contrast some function performed consciously with that same function performed unconsciously.

But before we take up the problem of unconscious mental life in detail, we've got to turn our attention to the other three mind-body problems.

This page was last updated 07/11/2020.