[F]or a state of mind to survive in memory it must have endured for a certain length of time.... All the intellectual value for us of a state of mind depends on our after-memory of it. Only then is it combined in a system and knowingly made to contribute to a result. Only then does it count for us. So that the EFFECTIVE consciousness we have of our states is the after-consciousness....

For James, and for others, "all introspection is retrospection".

In a very real sense, modern scientific research on consciousness began with studies of attention and short-term memory inspired by James' introspective analyses. Consciousness has a natural interpretation in terms of attention, because attention is the means by which we bring objects into conscious awareness. Similarly, maintaining an object in primary (short-term, working) memory is the means by which we maintain an object in conscious awareness after it has disappeared from the stimulus field. Attention is the pathway to primary memory, and primary memory is the product of attention.

Modern descriptions of attention are very much in James' mold. For example, Chun (2011) described three aspects of attention:

Like James, Chun suggests that there are different types of attention, though his list is somewhat different.

External attention is directed to the external world: to particular sensory modalities, spatial locations, and points in time, and to objects and their individual features. In this view, visual attention is different from auditory attention, attention to a point in space is different from attention to a point in time, we can attend to objects as well as to locations, and we can attend to local features of objects as well as to the objects themselves. Internal attention is directed to memory (both working and long-term memory), to current tasks, and to the selection of responses. We attend to long-term memory when we intentionally retrieve knowledge from it.

Based on a wide variety of behavioral and physiological studies, William Prinzmetal (at UCB) has drawn a different distinction, anticipated earlier by Wundt (1902) and Posner (1978), between two types of attention.

After James, most work on attention focused on the problem of the span of apprehension, or the question of how many objects could be kept in the field of attention at the same time -- for example, the work of R.S. Woodworth.

Research on attention faded into the background during the heyday of the behaviorist revolution, but was revived in the context of applied psychological research conducted around World War II, well prior to the outset of the cognitive revolution.

Research on such problems as air traffic control and efficient processing of telephone numbers made two facts clear:

The earliest psychological theories of attention were based on the idea that attention represents a "bottleneck" in human information processing: by virtue of the bottleneck, some sensory information gets into short-term memory, and the rest is essentially cast aside.

The first formal theory of attention was proposed by Broadbent (1958). Aside from its substantive importance, Broadbent's theory is historically significant because it was the first cognitive theory to be presented in the form of a flowchart, with boxes representing information-storage structures and arrows representing information-manipulation processes.

Broadbent's theory, in turn, was based on Cherry's (1953) pioneering experiments on the cocktail party phenomenon. At a cocktail party, there are lots of conversations going on, and individual guests attend to one and ignore the others. Cherry argued that attentional selection in such a situation was based on physical attributes of the stimulus, such as location, voice quality, etc. He then simulated the cocktail-party situation with the dichotic listening procedure, in which two different auditory messages are presented to different ears over earphones; subjects are instructed to repeat (or "shadow") one message as it is played, but to ignore the other. The general finding of these experiments was that people had poor memory for the message presented over the unattended channel: they did not notice certain features, such as a switch in language, or a switch from forwards to backwards speech. However, they did notice other features, such as whether the unattended channel switched between a male and a female voice.

From experiments like this, Broadbent concluded that attention serves as a bottleneck, or perhaps more accurately as a filter.

The stimulus environment is exceptionally rich, with lots of events, occurring in lots of different modalities, each with lots of different features and qualities. All of this sensory information is held in a short-term store, but people can attend to only one "communication channel" at a time: Broadbent's is a model of serial information processing. Channels are selected for attention on the basis of their physical features, and semantic analysis occurs only after information has passed through the filter. Attentional processing is serial, but people can shift attention flexibly from one channel to another.

Broadbent's model has two important implications for consciousness:

Broadbent's filter model of attention laid the foundation for the earliest multi-store models of memory, such as those proposed by Waugh & Norman (1965) and Atkinson & Shiffrin (1968). In these models, stimulus information is briefly held in modality-specific sensory registers. A limited amount of sensory information is transferred to short-term memory by means of attention, and is maintained in short-term memory by means of rehearsal. Under certain circumstances, information in short-term memory can be copied into long-term memory, and information in long-term memory can, in turn, be copied into short-term memory.
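For readers who find the box-and-arrow flowchart easier to follow as a program, here is a minimal toy sketch of the multi-store architecture just described. It is not Waugh & Norman's or Atkinson & Shiffrin's formal model: the class name `MultiStoreMemory`, the short-term capacity, and the transfer rules are all hypothetical simplifications chosen for illustration, and decay is not modeled at all.

```python
from collections import deque


class MultiStoreMemory:
    """Toy multi-store architecture: sensory register -> STM <-> LTM.

    Hypothetical illustration only; capacity and transfer rules are assumptions.
    """

    def __init__(self, stm_capacity=4):
        self.sensory_register = []                 # brief, modality-specific buffer
        self.stm = deque(maxlen=stm_capacity)      # limited-capacity short-term store
        self.ltm = set()                           # long-term store

    def sense(self, stimuli):
        # Everything in the stimulus field is briefly registered.
        self.sensory_register = list(stimuli)

    def attend(self, selected):
        # Attention transfers only the selected items into STM; when STM is
        # full, the oldest item is displaced. Unselected items are lost when
        # the sensory register is cleared.
        for item in self.sensory_register:
            if item in selected:
                self.stm.append(item)
        self.sensory_register = []

    def rehearse_and_store(self):
        # Rehearsal is modeled only as the route by which STM contents are
        # copied into LTM.
        self.ltm.update(self.stm)

    def retrieve(self, item):
        # Information in LTM can, in turn, be copied back into STM.
        if item in self.ltm:
            self.stm.append(item)
            return True
        return False


memory = MultiStoreMemory(stm_capacity=2)
memory.sense(["A", "B", "C", "D"])
memory.attend({"A", "C"})
print(list(memory.stm))  # ['A', 'C']: B and D never reach short-term memory
```

The point of the sketch is the flow of information, not the numbers: attention is the only route from the sensory register into short-term memory, and short-term memory is the only route into (and out of) long-term memory.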

Broadbent's filter model of attention was a good start, but it was not quite right. For one thing, at cocktail parties our attention may be diverted by the sound of our own name -- a phenomenon confirmed experimentally by Moray (1959) in the dichotic listening situation. In addition, further dichotic listening experiments by Treisman (1960) showed that subjects could shift their shadowing from ear to ear to follow the meaning of the message; when they caught on to the fact that they were now shadowing the "wrong" ear, they shifted their attention back to the original ear. But the fact that they shifted their attention at all suggested that they had processed the meaning of the unattended message to at least some extent. These findings from Moray's and Treisman's experiments meant that there had to be some possibility for preattentive semantic analysis, permitting people to shift their attention in response to the meaning and implications of a stimulus, and not just its physical structure.

For these reasons, Treisman (1964) modified Broadbent's theory. In her view, attention is not an absolute filter on information, but rather something more like an attenuator or volume control. Thus, attention attenuates, rather than prohibits, processing of the unattended channel; but this attenuator can also be tuned to contextual demands.
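The contrast between Broadbent's filter and Treisman's attenuator can be made concrete with a toy sketch. This is a hypothetical illustration, not Treisman's own formalism: it assumes each word on the unattended channel arrives with unit strength, that the channel's "gain" scales that strength, and that each word has a recognition threshold, with a lower threshold for a highly relevant word such as the listener's own name. All of the numbers and names below are invented for illustration.

```python
# Hypothetical values chosen only to illustrate the contrast between models.
ATTENDED_GAIN = 1.0
FILTER_GAIN = 0.0      # Broadbent-style filter: unattended channel blocked outright
ATTENUATOR_GAIN = 0.3  # Treisman-style attenuator: turned down, not off

# Hypothetical recognition thresholds: a personally relevant word (the
# listener's own name) needs less signal to be recognized than other words.
DEFAULT_THRESHOLD = 0.6
THRESHOLDS = {"Ken": 0.2}


def recognized(words, gain, thresholds=THRESHOLDS, default=DEFAULT_THRESHOLD):
    """Return the words whose attenuated signal reaches their threshold.

    Each word is assumed to arrive with unit strength; the channel's gain
    scales that strength before it reaches semantic analysis.
    """
    return [w for w in words if 1.0 * gain >= thresholds.get(w, default)]


unattended_ear = ["the", "weather", "Ken", "tomorrow"]
print(recognized(unattended_ear, FILTER_GAIN))      # []
print(recognized(unattended_ear, ATTENUATOR_GAIN))  # ['Ken']
print(recognized(unattended_ear, ATTENDED_GAIN))    # ['the', 'weather', 'Ken', 'tomorrow']
```

Under the all-or-none filter nothing on the unattended channel gets through, so the own-name effect cannot occur; under the attenuator the weakened signal still exceeds the low threshold for the listener's name. Lowering the threshold for some other word (say, because the listener is expecting it) lets that word through as well, which is one way to read the claim that the attenuator can be tuned to contextual demands.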

At a cocktail party, you pay most attention to the person you're talking to -- attentional selection that is determined by physical attributes such as the person's spatial location. At the same time, you are also attentive for people talking about you -- thus, attentional selection is open to semantic information.

The bottom line is that attention is not determined solely by physical attributes, but rather can be deployed depending on the perceiver's goals. This situation is analogous to signal detection theory, where detection is not merely a function of the intensity of the stimulus and the physical acuity of the sensory receptors, but is also a function of the observer's expectations and motives.
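The signal-detection analogy can be illustrated with a short sketch of the standard equal-variance Gaussian version of the theory, which is an assumption on our part (the lecture does not commit to any particular SDT model). In this version, sensitivity (d') stands for the stimulus and the receptors, while the response criterion stands for the observer's expectations and motives. The function names and the particular criterion values are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def hit_and_false_alarm(d_prime, criterion):
    """Equal-variance Gaussian signal detection model.

    Noise trials yield internal responses ~ N(0, 1); signal trials yield
    responses ~ N(d_prime, 1). The observer says "yes" whenever the
    internal response exceeds the criterion.
    """
    hit_rate = 1 - STD_NORMAL.cdf(criterion - d_prime)
    false_alarm_rate = 1 - STD_NORMAL.cdf(criterion)
    return hit_rate, false_alarm_rate


def sensitivity(hit_rate, false_alarm_rate):
    """Recover d' = z(H) - z(FA) from observed hit and false-alarm rates."""
    return STD_NORMAL.inv_cdf(hit_rate) - STD_NORMAL.inv_cdf(false_alarm_rate)


# Same stimulus and receptors (d' fixed), two observers with different criteria.
for label, c in [("cautious", 1.25), ("expectant", 0.25)]:
    h, fa = hit_and_false_alarm(d_prime=1.5, criterion=c)
    print(f"{label}: hits={h:.2f} false alarms={fa:.2f} d'={sensitivity(h, fa):.2f}")
```

The "expectant" observer reports the stimulus more often, with more hits and more false alarms, even though the stimulus and the sensory apparatus are identical for both observers, and d' recovered from the two observers' data is the same.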

Like Broadbent's model, Treisman's model is historically important because it is the first truly cognitive theory of attention. It departs from the image of bottom-up, stimulus-driven information processing, and offers a clear role for top-down influences based on the meaning of the stimulus. Broadbent (1971) subsequently adopted Treisman's modification.

Treisman's revised filter model has important implications for consciousness. In her model, preattentive processing is not limited to information about physical structure and other perceptual attributes. Semantic processing can also occur preattentively, at least to some extent. The question is:

Treisman's theory altered the properties of the filter/attenuator, but retained its location early in the sequence of human information processing. The next stage in the evolution of theories of attention was to move the filter to a later stage, and then to abandon the notion of a filter entirely.

Late-selection theories of attention (Deutsch & Deutsch, 1963; Norman, 1968) held that all sensory input channels are analyzed fully and simultaneously, in parallel. Once sensory information has been analyzed, attention is deployed based on the pertinence of the analyzed information to ongoing tasks.

The debate between early- and late-selection theories formed the background for the controversy, which we will discuss later, concerning "subliminal" processing -- i.e., processing of stimuli presented under conditions where they are not detected. The conventional view, consistent with early-selection theories, is that subliminal processing either is not possible, or else is limited to "low level" perceptual analyses. The radical view, consistent with late-selection theories, is that subliminal processing can extend to semantic analyses as well -- because semantic processing, as well as perceptual processing, occurs preattentively.

The debate between early-selection and late-selection theories was very vigorous, and you can still see vestiges of it today. But, like so many such debates, it seemed to get nowhere (similar endless, fruitless debates killed structuralism; in modern cognitive psychology, similar debates have been conducted over "dual-code" theories of propositional and analog/imagistic representations).

Some theorists cut through the seemingly endless debate between early- and late-selection theories by altering the definition of attention from some kind of filter (with the debate over where the filter is placed) to some kind of mental capacity.

In particular, Kahneman (1973) defined attention as mental effort. In Kahneman's view, the individual's cognitive resources are limited, and vary according to his or her level of arousal. These resources are allocated to information-processing according to a "policy", which in turn is determined by other influences:

Another version of capacity theory likened attention to a spotlight (Posner et al., 1980; Broadbent, 1982). In the spotlight metaphor, attention illuminates a portion of the visual field. This illumination can be spread out broadly, or it can be narrowly focused. If spread out broadly, attentional "light" can be thrown on a large number of objects, but not too much on any of them. If focused tightly, attentional "light" can provide a detailed image of a single object, at the expense of all the others. As the information-processing load increases, the scope of the attentional beam contracts correspondingly.

Similarly, attention can be likened to the zoom lens on a camera (Jonides, 1983; Eriksen & St. James, 1986). As load increases, the lens narrows, so that only a small portion of the field falls on the "film". But at low loads, a great deal of information can be processed, though there will be some loss of detail.

The spotlight metaphor also raised the question of whether the attentional beam can be split to illuminate two (or more) non-contiguous portions of space.

Posner's spotlight metaphor has been particularly valuable in illuminating (sorry) various aspects of attentional processing. For example, Posner has described three different aspects of attention, each associated with its own brain system:

I began these lectures by noting the close relationship between attention and consciousness -- so close, they seem synonymous.

We become conscious of something by paying attention to it. And even if we identify consciousness with working memory, as opposed to attention per se, attention is the route to working memory -- and thus the route to consciousness as well. Mack and Rock (1998, p. ix) have gone so far as to assert that "there seems to be no conscious perception without attention" (emphasis in the original). For example, there are a number of phenomena in which subjects are not consciously aware of events in the environment that are clearly perceptible -- simply because they are not paying attention to them.

A dramatic case in point is a neurological syndrome known as hemispatial neglect, in which the patient ignores regions of space contralateral to the site of the lesion. Patients who show hemispatial neglect typically have brain damage localized near the junction of the temporal and parietal lobes in their right hemisphere -- meaning that they will neglect objects in their left visual field.

Neglect is a complicated syndrome, and it presents in many forms.

There is no visual impairment as such -- neglect is really a deficit of attention. Posner and his colleagues have analyzed this attentional deficit in terms of his "spotlight" model of attention. Briefly, Posner argues that the executive control of attention requires that we disengage attention from its current focus, shift it elsewhere, and then engage with some new object or region of space. According to this model, neglect patients have a particular difficulty disengaging attention -- in this case, from the right side of personal space.

Similar sorts of findings have been obtained in neurologically intact subjects.

Earlier in these lectures, I discussed the dichotic listening paradigm developed by Cherry to study the cocktail-party phenomenon. Subjects shadow an auditory passage presented to one ear, and ignore a competing message presented to the other ear. And subjects can do this quite well, failing to notice much about the unattended passage -- except, at least sometimes, when it contains highly relevant material such as the subject's own name.

In studies of parafoveal vision, subjects may be asked to detect the appearance of targets presented in the center of a screen. At the same time, words can be presented on the periphery of the screen. Typically, subjects do not notice these peripheral words, but the words can nonetheless induce semantic priming effects. One such experiment was conducted by Bargh and Pietromonaco (1982), and is discussed later in the Lecture Supplement on "Automaticity and Free Will".

In the classic instances of preattentive processing, the subject's attention is deliberately focused elsewhere -- on one channel of a dichotic listening task rather than the other, or to some portion of the visual field rather than somewhere else. However, there are also other circumstances in which people do not consciously see or hear things, even when their attention is not distracted by a competing task. Although some of these phenomena appear to reflect the fact that subjects' attention is focused on one aspect of a stimulus rather than another, in every case the subjects appear to be blind to some object or feature, despite the fact that they are staring right at it.

One of these phenomena was discovered by Mack, Rock, and their colleagues, as part of a large study of the limits of preattentive processing (e.g., Rock et al., 1992; Mack & Rock, 1998). Their experiments employed an inattention paradigm in which subjects were presented with a seemingly straightforward but actually rather difficult task: to determine which of two crossed lines, one horizontal and one vertical, was longer. Subjects focused on a central fixation point, the cross appeared briefly (200 milliseconds) either at fixation or in the parafoveal region, and then the subjects made their response. Of course, this was just a cover task. On the first two or three trials, the subjects were simply presented with lines of different length, and asked to identify which was the longer. After establishing this attentional set, the subjects were unexpectedly presented with a new stimulus which also appeared briefly, somewhere in the region marked by the contours of the cross.

After reporting their length judgment, the subjects were also asked whether another object had been presented, and if so where it was. Because the subjects were not expecting the new stimulus, and were focusing their attention on the crossed lines (in order to make the comparative judgment of length), this trial is called the inattention trial.

It is important to note that this target was presented within one of the quadrants created by the cross -- so that, if subjects were scanning the lines to compare their lengths, as they were instructed to do, they should also have noticed its appearance.

Nevertheless, about 25% of the time, subjects failed to note the presence of the target. Regardless of their response, they were then given a recognition test in which they were asked to select, from a set of alternatives, the one that most closely resembled the new stimulus.

At this point, now that subjects had been effectively warned by the inattention trial that some other stimulus might also appear, the task shifted. In addition to making a judgment about the cross, the subjects were asked to report whether another stimulus appeared as well. After two trials with only the cross, the new object dutifully appeared. These are called divided attention trials: the subjects had to pay attention to the cross, in order to complete their manifest task, but they were also looking out for any target. Finally, there were full attention trials in which subjects were instructed to ignore the cross entirely and simply detect the presence of a target. Subjects also performed the recognition task after the divided attention and full attention trials, just as they had after the inattention trial.

For technical reasons, the cross was masked after 200 milliseconds, but this did not prevent it from being clearly seen by the subjects. The judgment task was complicated somewhat by the well-known horizontal-vertical illusion, in which a vertical line appears longer than a horizontal line of the same length. For symmetry, it might have been interesting if the subjects had been surprised by a request to make a length judgment on a subsequent full attention trial, which effectively would have been an inattention trial with respect to the cross. But because the judgment task is only a cover for the real experiment, none of this really matters.

In performing these experiments, Rock et al. (1992) were initially interested in asking a question about the relation between perception and attention -- to wit, which aspects of perception require attention, and which do not? Studies of a phenomenon known as pop out had already indicated that such elementary features as color, motion, and simple shape (e.g., distinguishing between Ts and Os) could be perceived independent of attention (Treisman, 1988), but at the time there was a dispute over whether location was also processed preattentively. The Rock et al. (1992) studies showed that targets were successfully detected and located about 75% of the time on the inattention trials, a rate that was not significantly different from that observed on the divided attention or full attention trials. Accordingly, they concluded that location was also processed preattentively -- a reasonable enough conclusion, given the fact that -- as they themselves noted -- you have to know where something is before you can direct your attention to it.

On the other hand, if the target was detected about 75% of the time, then it was missed about 25% of the time. Initially, Rock and Mack dismissed this phenomenon merely as "noise" in their data. But the 25% "miss" rate continued to appear, in experiment after experiment. Moreover, the rate of misses on inattention trials increased to as much as 80% when the new object was presented right at the point of fixation, rather than a little off-center, in the parafoveal region. Because a substantial number of misses occurred when clearly supraliminal stimuli were presented in that portion of the visual field where visual acuity is optimal, they realized that the misses were a substantive phenomenon in and of themselves. And because they attributed the misses to the fact that subjects were focusing their attention on the contours of the crosses, they named the phenomenon inattentional blindness (IB).

It may seem difficult to accept that subjects will entirely miss an object that is entirely within their field of vision, just because they are paying attention to something else. However, an experiment by Neisser (1979) obtained similar effects: when subjects viewed a videotape of people passing a basketball back and forth, counting the number of passes, only 21% reported seeing a clearly visible woman who walked through the basketball court carrying an open umbrella. A replication experiment substituted a chest-thumping gorilla for the woman: fully half of subjects failed to report the presence of the beast (Simons & Chabris, 1999, 2009).

Inattentional blindness is theoretically important because it shows that attention can be directed to specific objects, not just to particular locations in space. But in the present context, the phenomenon is mostly interesting as a dramatic lapse of conscious perception.

Inattentional blindness may be defined as the failure to consciously perceive an otherwise salient object when the subject's attention and expectations are engaged elsewhere. Mack and Rock's subjects focus their attention on the horizontal and vertical lines, and miss the third object presented within their contours. Inattentional blindness has at least two implications:

Once the phenomenon of IB itself was established to their satisfaction, Mack and Rock (1998) conducted an extensive series of studies designed to discover its properties and limits. For example, in a series of studies paralleling Cherry's (1953) work on the cocktail party phenomenon, these investigators determined that blindness occurred less frequently when the new stimulus was the subject's own name (12.7% of trials), compared to someone else's name (35%) or a common noun (50%). If the subject's name was even slightly modified -- e.g., substituting Kon for Ken -- the rate of IB rose considerably. On the other hand, similar modifications to common nouns -- e.g., substituting teme for time -- had little effect on the rate of IB. These studies were exceptionally arduous and time-consuming, because -- unlike the usual experiment in psychology -- the subjects can provide data for only a single inattention trial. After that first trial, they know that the new stimulus can appear, and they are "spoiled" for future research. Based on such findings, Mack and Rock gravitated toward a late-selection view of attention that allowed for preattentive analysis of meaning as well as of physical features -- or, at least, a multilevel attentional system that allowed for both early and late selection.

Mack and Rock (1998) began their program of research with the view that conscious perception requires attention, and thus that inattention produces a kind of blindness -- a state of "looking without seeing" (p. 1). From their point of view, attention is "the process that brings a stimulus into consciousness... that permits us to notice something" (p. 25). Accordingly, there is "no conscious perception at all in the absence of attention" (p. 227). Objects may capture attention involuntarily, or attention may be deliberately directed to an object (by virtue, for example, of expectations, intentions, or mental set); in either case they enter into phenomenal awareness.

But are Mack and Rock really right that attention is required for consciousness? And did their ostensibly blind subjects really fail to see the stimuli in question? There are reasons to be doubtful on both points. Writing in the tradition of Eriksen (1960) and Holender (1986), Dulany (2001) emphatically denies both claims.

The term "demand characteristics" was introduced into psychological discourse by Orne (1962), as part of a broad critique of methodology in social-psychological research (see also Orne, 1969, 1972, 1973, 1981). By "demand characteristics", Orne meant the totality of cues available in the experimental situation that communicate to the subject the experimenter's design, hypotheses, and predictions (for an analysis, see Kihlstrom, 2002). Many social psychologists employ deception to hide their real intentions, but as Mack and Rock's experiments show, social psychologists are not the only ones to do so. By fabricating the cover task of comparing the lengths of crossed lines, when they are really interested in identification of the extraneous stimulus, Mack and Rock engaged in no less a deception than did Milgram (1963) in his famous experiments on obedience to authority. And just as Milgram's subjects may have caught on to his deception (1968), so Mack and Rock's subjects may have caught on to theirs.

Certain details of Mack and Rock's procedures and results lend weight to Dulany's critique.

As attractive as the notion of inattentional blindness might be, it has to be said that there are as yet no good answers to many of Dulany's criticisms. To a great extent, the intransigence of Dulany's arguments stems from the fact that, like Eriksen before him, he resists the identification of conscious awareness with introspective self-report. (In fact, Dulany was a graduate student of Eriksen's at the University of Illinois before joining him on the faculty there. In their one joint publication, Dulany and Eriksen (1959) argued that verbal self-reports were no more accurate than covert psychophysiological responses as indices of stimulus discrimination.)

Dulany expresses a positivist's reserve about introspection, but he is no radical behaviorist. Far from denying the existence or importance of consciousness, Dulany has asserted a thoroughgoing mentalism that identifies consciousness with symbolic representation, and he has defined symbolic processing very broadly, as encompassing everything that happens after sensory transduction and before motor transduction (Dulany, 1991, 1997). Some symbolic codes are literal, close to a perceptual representation, while others are more abstract and full of meaning, but none of them is any more or less conscious than the others, because from his point of view all symbolic codes are represented in consciousness.

In the end, Dulany adheres to the "grand principle" (Dulany, 1997, p. 184) that "mental episodes consist of nonconscious operation upon conscious intentional states, yielding other conscious intentional states". In other words, mental processes may be unconscious, but mental contents are conscious. This position comports well with the traditional view, in cognitive psychology, that procedural knowledge is unconscious, inaccessible in principle to introspective access, but that declarative knowledge is accessible to conscious awareness. But a major thrust of this course is that mental contents -- percepts, memories, and thoughts -- can also be unconscious, in the sense that they can interact with ongoing experience, thought, and action in the absence of, or independent of, conscious awareness. That is what implicit memory, implicit perception, and other, cognate phenomena (yet to be discussed) are all about.

It is possible that Dulany is right, that only mental processes are unconscious, and that mental states, as symbolic representations, are always conscious. But there is a danger here, and it is of the same kind that Eriksen's arguments posed for studies of subliminal perception. Eriksen identified consciousness with discriminative behavior; but if the evidence for unconscious percepts, memories, and the like consists in observations of discriminative behavior in the absence of self-reported awareness, he has ruled the psychological unconscious out of existence by definitional fiat. Similarly, Dulany identifies consciousness with symbolic representations; but if the evidence for unconscious percepts, memories, and the like consists in observations like priming outside of awareness, then he too has ruled the psychological unconscious out of existence. We are left with the traditional view in cognitive psychology, which identifies the unconscious with automaticity and procedural knowledge, and consciousness with all mental contents and states.

Like Eriksen's (1960) critique of subliminal perception before them, Holender's (1986) critique of preattentive semantic processing and Dulany's (1997) criticisms of inattentional blindness are not trivial, and they should have the effect of forcing researchers to clean up their methodologies, putting evidence for preattentive, preconscious processing on ever-firmer empirical grounds. This is exactly what happened with research on subliminal perception (Greenwald et al., 1996; Draine & Greenwald, 1998). In the meantime, other investigators have opened several new lines of research on the general questions of attention, conscious awareness, and preattentive, preconscious processing. The studies described previously in this chapter examined processing when subjects' attention was directed elsewhere -- to one channel in dichotic listening, to foveal rather than parafoveal regions, to some objects but not others. By contrast, these newer studies document anomalies of awareness even when subjects are directing their attention right at the target. For this reason, I classify them as concerned with attentional blindness -- deficits in conscious visual processing that occur despite the subject's appropriate deployment of attention and accurate expectations (see also Kanai et al., 2010).

Paralleling the conclusions of Mack and Rock (1997) concerning inattentional blindness, we can conclude from the phenomena of attentional blindness that attention does not guarantee conscious perception. But again, in the present context, we are interested not so much in the blindness itself, interesting as it is, as in whether there is unconscious, implicit perception of the stimuli in question. Can you get priming from a stimulus for which a subject is attentionally blind?

The phenomena of attentional blindness are most commonly observed in laboratory conditions employing a technique called rapid serial visual presentation, or RSVP (Potter, 1969; Forster, 1970), in which subjects view a series of visual stimuli presented one after another in very quick succession -- about 100 milliseconds each. In an early experiment by Kanwisher and her colleagues, subjects viewed sentences such as "It was work time so work had to get done", presented one word at a time, and then were asked to report verbatim what they had seen. Note that the sentence contains a repetition of the word "work". We can call the first instance of the repeated word R1, and the second instance R2. Under RSVP conditions, streaming about 10 words per second, a large number of subjects -- about 60% -- omit R2: they report "It was work time so had to get done", despite the fact that the sentence is obviously incomplete (Kanwisher, 1987). When R2 is not the same as R1, it is missed only about 10% of the time. Kanwisher called this failure to see the second occurrence of a repeated item repetition blindness (RB).

The procedure here is a little tricky: because most skilled readers do not read every word in a sentence, there is a tendency for them to fill in the gaps with meaningful words -- just as we generally ignore misspellings, missed words, and other typographical errors. To counteract this tendency, Kanwisher carefully instructed subjects to report what they saw verbatim. And she also included a number of sentences that actually had words missing, so that subjects would not be surprised when they reported an incomplete or anomalous sentence.

A later experiment by Kanwisher and Potter (1990, Experiment 1) makes the effect even clearer. In this study, subjects saw three different kinds of sentences.

Across the three conditions, subjects correctly reported R1 approximately 94% of the time; however, they reported R2 only 40% of the time in the repeated condition, compared to an average of about 88% in the synonym and unrepeated conditions. The fact that RB occurs for a repetition, but not for a synonym, indicates that RB occurs at the level of a perceptual (or possibly lexical) representation, but not at the level of meaning.

RB occurs when a second instance of a word -- a second token of the same type -- appears fairly quickly after the first: within one or two words, in fact, corresponding to a lag of about 200-300 milliseconds at a presentation rate of eight words per second. If the repeated word is delayed by about 500 milliseconds, either by positioning it later in the sentence or by slowing the rate of presentation, RB does not occur.

Such findings led Kanwisher (1987, 2001) to propose a type-token individuation hypothesis of RB. According to this hypothesis, conscious awareness requires both the activation of a memory representation of an event (the type) and the individuation of that activated structure as the representation of a discrete event (a token of the type). The first time the subject sees the word "autumn", the perceptual representation of that word is activated and a token individuated; but when "autumn" is repeated so quickly, individuation does not occur, and so the subject does not see it as a different, discrete event -- as another token of the type. The event need not be a word: RB has also been observed for digits and letters, pictures, and simple and complex shapes, including impossible figures. According to Kanwisher, the lack of individuation creates a failure of conscious perception: the subject is not consciously aware of the token's repetition.

Another instance of attentional blindness is known as the attentional blink (AB), first noticed by Broadbent and Broadbent (1987) but given its name by Raymond, Shapiro, and their associates (Raymond et al., 1992; Shapiro et al., 1994; for a review, see Shapiro, 1994, 1997). In AB experiments, subjects view a series of stimuli (such as letters) presented in rapid succession -- say, 30 items over a period of three seconds, or a rate of about 100 milliseconds per item (roughly the same rate as in RB experiments). On each trial, the subject's task is to report the identity of a distinctive target letter -- for example, the one printed in red as opposed to black. However, the subjects are also asked to detect whether a second probe -- for example, the letter "R" -- also appeared in the letter series. Of course, the presence of the primary target (T1) and secondary probe (T2), and their locations within the string of letters, varied from trial to trial. Control subjects performed the probe-detection task only, without the target-identification task. In a typical experiment (Shapiro et al., 1994), control subjects showed a high level of accuracy, approximately 85%, regardless of where in the sequence T2 was placed. However, the experimental subjects missed T2 about half the time when it was presented within about 500 milliseconds (i.e., within five items) after T1. If T2 was presented six to eight items later, outside the 500-millisecond interval, detection rates for experimental subjects were essentially the same as those of the controls.
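The conversion between serial lags and milliseconds that runs through this section (five items at about 100 ms per item is roughly 500 ms; a lag of two words at eight words per second is 250 ms) is simple arithmetic. The Python sketch below makes it explicit. The function names and the fixed 500-ms window constant are hypothetical conveniences taken from the approximate figures quoted above. The sketch models only the timing, not how detection actually varies within the window.

```python
# Hypothetical constant: the approximate blink window quoted in the text.
BLINK_WINDOW_MS = 500.0


def lag_to_ms(lag: int, items_per_second: float) -> float:
    """Onset-to-onset interval between T1 and a probe presented `lag` positions later."""
    if lag < 1:
        raise ValueError("lag must be at least 1 serial position")
    if items_per_second <= 0:
        raise ValueError("presentation rate must be positive")
    return lag * 1000.0 / items_per_second


def within_blink_window(lag: int, items_per_second: float = 10.0,
                        window_ms: float = BLINK_WINDOW_MS) -> bool:
    """True if the probe falls inside the approximate window in which T2 is often missed."""
    return lag_to_ms(lag, items_per_second) <= window_ms


if __name__ == "__main__":
    # About 10 items per second (~100 ms per item), as in the AB experiments above.
    for lag in range(1, 9):
        ms = lag_to_ms(lag, 10.0)
        print(f"lag {lag}: {ms:.0f} ms after T1, inside window: {within_blink_window(lag)}")
    # Repetition blindness: a lag of 2 words at eight words per second.
    print(f"RB lag 2 at 8 words/s: {lag_to_ms(2, 8.0):.0f} ms")
```

At ten items per second, lags one through five fall inside the window and lags six through eight fall outside it, matching the "six to eight items later" condition in which experimental subjects performed like controls.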

Of course, the attentional blink is not an actual eyeblink: subjects rarely blink their eyes during an RSVP trial. Nor is the attentional blink a phenomenon of visual masking, because it does not occur if the subject is not instructed to identify (i.e., pay attention to) the preceding T1. Instead, it is as if the subject has blinked, by analogy to the actual eye-blinks that occur after subjects shift their gaze from one location to another. Shapiro and Raymond suggested that T1 is identified preattentively, on the basis of its physical characteristics (e.g., that it is red as opposed to black). Attention is then directed toward T1 in support of the identification process (e.g., that it is a T rather than an L), but it takes time for attention to shift back to the stream of RSVP stimuli, giving T2 time to be lost through decay or interference. If T2 occurs soon enough after the target, then, it is likely to be missed. However, the T2 probe is not missed if the subject is instructed to ignore T1, a finding that supports an attentional interpretation of the effect.

Although AB is not an actual eyeblink, it is nonetheless a real blink. Sergent and Dehaene (2004) asked subjects to rate the visibility of T2 at various lags after T1. The visibility of T2 dropped abruptly at a lag of 2 (that is, with only a single stimulus between T1 and T2), and returned to baseline just as abruptly at a lag of 6 (that is, with five stimuli between the two targets). The disappearance and reappearance of T2 occurred in a discontinuous, all-or-none fashion, with not even a hint of continuous degradation and recovery. This pattern was quite different from that observed with backward masking, which produced an immediate degradation of the preceding stimulus (that is, at a lag of 1), followed by gradual recovery as the SOA lengthened. Sergent and Dehaene suggest that their results are most compatible with a two-stage model of the AB (Chun & Potter, 1995), in which each stimulus in the RSVP display is subject to automatic, preconscious pickup, but conscious processing of T1 consumes cognitive resources that are required for conscious processing of T2.

Another variant on attentional blindness is change blindness (CB), which occurs when subjects fail to notice rather large alterations in familiar scenes (Rensink, 1997; for comprehensive reviews, see Rensink, 2004; Simons, 1997, 2000, 2005). In the flicker paradigm, subjects are presented with a picture of a natural scene rapidly alternating with a slightly modified picture in which there has been some change in the presence or absence of some object or feature, or in its color, orientation, or location. In the laboratory, the difference between the two stimuli is typically restricted to a single modification of this kind. The two versions alternate back and forth on a random schedule, each version appearing for only a very short time (typically 240 msec). If one image simply replaces the other, subjects will readily detect the change, apparently because the alteration creates a "luminance transient" that draws attention to the spatial location in which the change has occurred. But if the two images are separated by a blank gray field that appears for an even shorter period of time (typically 80 msec), the situation is different: now subjects often fail to notice the change. On a single trial, presentation continues in this manner for 60 seconds, or until the subject detects the change. The purpose of the gray field is to simulate the situation in real-world vision, in which the observer must integrate information across saccadic eye movements.

Change blindness falls under the rubric of attentional blindness because the subject is not aware that the second image is different from the first. That is a lapse of consciousness.

Can Attention and Consciousness Be Dissociated?

Kahneman's theory laid the foundation for current interest in automaticity: in his view, enduring dispositions influenced allocation policy automatically, outside awareness and voluntary control, while momentary intentions were applied consciously.

The traditional view, associated with early-selection "filter" theories, was that elementary information-processing functions are preattentive: they are performed unconsciously (or, perhaps better put, preconsciously), and require no attention. By the same token, complex information-processing functions, including (most) semantic analyses, must be performed post-attentively, or consciously.

The revisionist view, associated with late-selection theories and capacity theories, agreed that elementary information-processing functions were preattentive, performed unconsciously or preconsciously. But it asserted that complex processes, including semantic analyses, could be performed unconsciously too, so long as they were performed automatically.

Early in the history of cognitive psychology, there was a tacit identification of cognition with consciousness. Elementary processes might be unconscious, in the sense of preattentive, but complex processes must be conscious, in the sense of post-attentive. But the evolution of attention theories implied that a lot of cognitive processing was, or at least might be, unconscious.

LaBerge & Samuels (1974; LaBerge, 1975) argued that complex cognitive and motoric skills cannot be executed consciously, because their constituent steps exceed the capacity of attention. Thus, at least some components of skilled performance must be performed automatically and unconsciously. LaBerge & Samuels defined automatic processes as those which permit an event to be immediately processed into long-term memory, even if attention is deployed elsewhere.

LaBerge and Samuels illustrated their concept of automaticity with a model of the hierarchical coding of stimulus input in reading.


The automatization of word-reading is illustrated by the Stroop effect. In the Stroop experiment, subjects are presented with an array of letter strings printed in different colors, and their task is to name the color of the ink in which the string is printed.


The classic Stroop effect emerged in Experiment 2, where subjects were asked to name colors. In the experimental condition, the stimulus was a color word (e.g., "blue") printed in ink of a different color, as above. In the control condition, the color was presented simply as a solid block of color (||||||||||).

In a third experiment, the subjects practiced color-naming over a period of 14 days. The usual Stroop interference effect was apparent on Day 1, not surprisingly. Interference diminished over the subsequent two weeks, but was still apparent on the final day of testing.

In the Stroop effect, the presence of a word interferes with color-naming -- especially if the word is itself a contradictory color name (but, interestingly, even if the word and the color are congruent). The "Stroop interference effect" occurs regardless of intention: clear instructions to ignore the word, and focus attention exclusively on the ink color, do not eliminate the interference effect. The explanation is that we can't help but read the words: reading occurs automatically, despite our intentions to the contrary.

The Stroop effect comes in many forms, not all of which involve colors and color words. For example, Stroop interference can be observed when subjects are asked to report the number of elements in a string, and the elements themselves are digits (as when counting the items in "2 2 2") rather than symbols that have nothing to do with numerosity. In the emotional Stroop test, subjects show particular interference with color-naming if the words refer to some topic that is personally meaningful to the subject.


The more recent roots of automaticity are to be found in the literature on skill acquisition. For example, Fitts and Posner (1967) identified three stages that subjects went through when acquiring a new skill:

Posner & Snyder (1975a, 1975b) contrasted automatic and strategic processing. Automatic processes:

Schneider & Shiffrin argued that while processing specific pieces of information could be automatized, generalized skills could not.

In contrast, Spelke, Hirst, & Neisser (1976) demonstrated that a generalized skill (taking dictation while reading at a high level of comprehension) could be automatized, given sufficient practice. In their experiment, subjects (actually paid as work-study students!) performed a divided attention task in which they read prose passages, and simultaneously took dictation for words. The subjects practiced this task for 17 weeks, 5 sessions per week -- with every session employing different stories and lists. The result was that reading speed progressively improved, with no decline in comprehension. Apparently, the subjects automatized the dictation process, so that it no longer interfered with reading for comprehension.


But what about the dictated lists? Initially, the subjects had very poor memory for the words presented on the dictation task. On later trials, they showed better memory for the dictation list, and were able to report when the list items contained rhymes or sentences. They also showed integration errors: for example, they remembered a list like "The rope broke. Spot got free. Father chased him." as "Spot's rope broke". Integration errors indicate that the subjects processed the meaning of the individual sentences, and the relations among them, automatically; ordinarily this would be considered a very complex task.

Although Spelke et al. wished to cast doubt on the whole notion of attention as a fixed capacity, they also expanded the boundaries of automaticity by showing that even highly complex skilled performance could be automatized, given enough practice.

The general notion of automaticity has attracted a great deal of interest, based on the widely shared belief that there are cognitive processes which generally share the following properties:

The concept of automaticity seems very modern, but in fact its roots go far back in scientific psychology and even further back in the philosophy of mind. For example, William James devoted an entire chapter of the Principles of Psychology (1890) to refuting "The Automaton Theory", the view that consciousness is epiphenomenal and plays no causal role in behavior. James agreed that certain habits were automatic, in some sense, but he didn't believe that automaticity was everything.

Automaticity plays an important role in Descartes' notion of the reflex. In his view, the reflex was the basis of the mechanical behavior of beasts: stimulus energy from the environment, falling on sensory surfaces, was reflected by the nervous system and sent back to the environment through the skeletal musculature.

In modern psychology, reflexes have two primary characteristics:

Early conditioning theorists, such as Pavlov and Watson, lacked a detailed technical concept of automaticity. But they had the underlying idea of automaticity -- that certain response processes were automatically elicited by appropriate stimulus conditions, independent of other activities, including the organism's "intentions".

The psychic activities that lead us to infer that there in front of us at a certain place there is a certain object of a certain character, are generally not conscious activities, but unconscious ones. In their result they are the equivalent to conclusion, to the extent that the observed action on our senses enables us to form an idea as to the possible cause of this action.... But what seems to differentiate them from a conclusion, in the ordinary sense of that word, is that a conclusion is an act of conscious thought.... Still it may be permissible to speak of the psychic acts of ordinary perception as unconscious conclusions... (p. 174).

For Helmholtz, then, perception results from a kind of syllogistic reasoning, in which the major premise consists of knowledge about the world acquired through experience, and the minor premise consists of the information provided by the proximal stimulus. The percept of the distal object, then, is the conclusion of the syllogism, except that, in the case of perception, we are not aware of the reasoning process. Hence Helmholtz's phrase, "unconscious conclusions". The fact that the reasoning underlying perception is unconscious is what makes us feel that we are seeing the world directly, the way it really is. But our percepts are not, in fact, immediate products of stimulation -- they are mediated by unconscious reasoning processes.

With the notion of unconscious inferences, Helmholtz broke down the distinction between "lower" mental processes, like sensation and perception, that are closely tied to the stimulus, and "higher" mental processes, like memory and reasoning, that go on in the absence of a sensory stimulus. Reasoning is involved in perception as well as in thinking and problem-solving. But Helmholtz never suggested that perception involved conscious, deliberate acts of reasoning. Today, Helmholtz might not describe the reasoning as unconscious; instead, he would probably describe it as automatic.

By 1984, the notion of automaticity had gained widespread acceptance. But while the criteria for automaticity were clear, the underlying mechanisms were not: what makes an automatic process automatic?

One prominent theory (actually, a whole class of theories) of automaticity is the memory-based view, according to which automaticity reflects a change in the cognitive basis of task performance:

There are at least two prominent versions of this theory.

In Logan's (1988) instance-based theory of automaticity, practice with a task encodes an increasingly strong memory trace of the appropriate response. Practice, then, doesn't just speed up processing. Rather, repeated practice lays down an increasingly strong memory for the correct response to a stimulus. Actually, there is not just one such memory, strengthened through practice. Instead, there is a separate memory for each repeated instance. As these instances accumulate, so does the strength of the memory.
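As usually formalized, Logan's theory treats performance as a race: on each trial the general algorithm for the task and every stored instance race to produce the response, and whichever finishes first controls performance. The paragraph above does not spell this out, so treat the race itself, the Weibull retrieval-time distribution, and every parameter value below as assumptions chosen for illustration. This is a Python sketch, not Logan's own model code. It shows the qualitative point: as instances accumulate, the fastest retrieval gets faster, and memory, rather than the algorithm, increasingly determines the response.

```python
import random
from statistics import mean
from typing import Tuple


def trial(n_instances: int, rng: random.Random, algorithm_ms: float = 800.0,
          scale_ms: float = 1000.0, shape: float = 2.0) -> Tuple[float, str]:
    """One trial: the response comes from whichever finishes first,
    the algorithm (fixed duration) or the fastest retrieval among stored instances."""
    retrieval_times = [rng.weibullvariate(scale_ms, shape) for _ in range(n_instances)]
    fastest = min(retrieval_times, default=float("inf"))  # no instances: retrieval never wins
    if fastest < algorithm_ms:
        return fastest, "memory"
    return algorithm_ms, "algorithm"


def summarize(n_instances: int, trials: int = 5000, seed: int = 1) -> Tuple[float, float]:
    """Mean response time and proportion of trials won by memory retrieval."""
    rng = random.Random(seed)
    results = [trial(n_instances, rng) for _ in range(trials)]
    mean_rt = mean(rt for rt, _ in results)
    p_memory = sum(route == "memory" for _, route in results) / trials
    return mean_rt, p_memory


if __name__ == "__main__":
    for n in (0, 1, 4, 16, 64):
        rt, p = summarize(n)
        print(f"{n:3d} stored instances: mean RT {rt:6.1f} ms, memory wins {p:.0%}")
```

With no stored instances every response comes from the algorithm. As instances accumulate, mean response time falls and the share of trials won by retrieval rises. Logan (1988) used a race of this general kind to account for the power-function speedup typical of practice.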

Instance theory makes the seemingly paradoxical prediction that automatic processes can be controlled by varying the retrieval cues present in the environment, which in turn make the underlying memory more or less accessible. So, automatic processes aren't so automatic after all: they are executed only if the appropriate retrieval cues are available in the environment, and only if the person processes them as such.

In Anderson's (1992) ACT theory of cognition, conscious processes are represented in declarative memory by sentence-like propositions, while automatic processes are represented in procedural memory by systems of "If-Then" conditional productions.

Productions, representing procedural knowledge, consist of three elements:

Most procedural knowledge consists of whole systems of productions, rather than a single production. In this way, the output of each production, which changes the current state of the actor, serves as input to the next production in the system.

Both the processing goal and the current state are represented in working memory as activated bits of declarative knowledge, such as "I desire to shift into first gear" and "My foot is pressing down on the clutch".

The production consists of a conditional rule, such as:

Of course, the whole process is more complicated than a single production, which is why we speak of production systems.
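To make the idea of a production system concrete, here is a toy Python sketch of the recognize-act cycle for the gear-shifting example. It is not ACT's actual notation or machinery. The rule names, the facts in working memory, and the "first matching rule fires" conflict-resolution policy are all hypothetical simplifications. What it shows is the point made above: each production's action rewrites working memory, and that new state becomes the condition that the next production matches.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Production:
    """A toy If-Then rule: IF all `conditions` are in working memory THEN apply the action."""
    name: str
    conditions: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str] = frozenset()


def run(productions: List[Production], memory: Set[str],
        max_cycles: int = 20) -> Tuple[List[str], Set[str]]:
    """Recognize-act loop: on each cycle, fire the first production whose conditions match.

    Each firing rewrites working memory, so its output becomes the input to the next cycle.
    'First match wins' is a deliberate simplification of conflict resolution.
    """
    memory = set(memory)  # work on a copy so the caller's set is untouched
    fired: List[str] = []
    for _ in range(max_cycles):
        match = next((p for p in productions if p.conditions <= memory), None)
        if match is None:
            break  # no production applies: the system halts
        memory -= match.delete
        memory |= match.add
        fired.append(match.name)
    return fired, memory


GOAL = "goal: shift into first gear"

# Hypothetical rules for the gear-shifting example in the text.
GEAR_SHIFT = [
    Production("press-clutch", frozenset({GOAL, "clutch up"}),
               add=frozenset({"clutch down"}), delete=frozenset({"clutch up"})),
    Production("move-lever", frozenset({GOAL, "clutch down", "in neutral"}),
               add=frozenset({"in first gear"}), delete=frozenset({"in neutral"})),
    Production("release-clutch", frozenset({GOAL, "clutch down", "in first gear"}),
               add=frozenset({"clutch up"}), delete=frozenset({"clutch down", GOAL})),
]

if __name__ == "__main__":
    fired, final = run(GEAR_SHIFT, {GOAL, "clutch up", "in neutral"})
    print(fired)          # ['press-clutch', 'move-lever', 'release-clutch']
    print(sorted(final))  # ['clutch up', 'in first gear']
```

The last rule removes the goal once it is satisfied. Without that step, "press-clutch" would match again and the system would keep cycling until `max_cycles` ran out.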

The transformation of declarative knowledge into procedural knowledge, through extensive practice, is known as proceduralization.

Automaticity, Proceduralization and Being-in-the-World

UCB philosophy professor Hubert Dreyfus, and his brother Stuart, UCB professor of engineering sciences, have traced the process of automatization in the Dreyfus skill model (in their book Mind Over Machine: The Power of Human Intuition and Expertise in the Era of the Computer, 1986). According to their model, the acquisition of a skill proceeds through five stages:

Basically, skill acquisition begins with conscious, deliberate rule-following, and proceeds to the point where a person can perform the skilled activity without thinking about it at all. As illustrations, they point to driving a standard-shift car, playing basketball, playing chess, and what the psychologist Mihaly Csikszentmihalyi (1990) calls flow. Up to this point, the Dreyfus skill model is pretty standard cognitive psychology. Both Logan's instance-based theory of automaticity and Anderson's ACT theory of proceduralization say pretty much the same thing. But Hubert Dreyfus is a noted scholar of the German philosopher Martin Heidegger, author of the pretty-much-unreadable Being and Time (see his Being-in-the-World, 1991, based on a course Dreyfus still offers at Berkeley), and he takes the argument one step further: automaticity is the way of being-in-the-world. Like Heidegger, Dreyfus opposes the Cartesian model of rational man using his mind to know the world. Like Heidegger, Dreyfus believes that automatic, intuitive behavior breaks down the distinction between the external world and internal mental representations of it. Through automaticity, we don't think about the world: we are just in it. The Cartesian model applies only to beginners. For Dreyfus, automatization isn't just a matter of internalizing a set of rules, like shift into second gear when you get to 10 miles per hour, because there are lots of circumstances, like going up a hill or carrying a heavy load, when that rule doesn't apply. Expert drivers of standard-shift cars don't follow rules: they just intuitively know when it's right to shift. When we become experts, we behave automatically and intuitively, absorbed in what we are doing, not thinking about it at all.

General acceptance of the distinction between automatic and controlled processes has led to the development of a large number of dual-process theories in psychology and cognitive science. The basic feature of all these theories is that any cognitive task -- reading, impression-formation, whatever -- can be performed in either a controlled or automatic manner -- that is, performed either consciously or unconsciously. (In this respect, dual-process theories could be considered to be a form of conscious inessentialism, as defined by the philosopher Owen Flanagan.)

Precisely how these two processes are characterized depends on the theory. Perhaps the most detailed description has been provided by Smith and DeCoster (1999, 2000).

As an example of a dual-process theory, consider Kahneman's view of dual systems in thinking, based on his Nobel Prize-winning research on judgment and decision-making.

Whenever a person is presented with a judgment task, or a problem to solve, these two systems go into operation, and essentially "race" to a conclusion. But because System 1 is inherently faster than System 2, System 1 almost always wins the race. If we slow the process down, System 2 can take over -- but in the ordinary course of everyday living, this doesn't happen very often.

Most work on automaticity assumes that there are two categories of tasks (and, correspondingly, two categories of underlying cognitive processes), automatic and controlled. An alternative view, proposed by Larry Jacoby, is that every task has both automatic and controlled components to it, in varying degree. Jacoby has developed a process-dissociation procedure, based in turn on the method of opposition, to determine the extent to which performance reflects the operation of controlled and automatic processes.

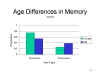

To see how the process-dissociation procedure works in practice, consider the matter of age differences in memory. It is widely known that old people have poorer memories than the young. Moreover, it turns out that age differences in memory are greatest on tests of free recall; recognition testing often abolishes the age difference entirely.


The question is why this is so, and there are lots of theories. One, based on Mandler's (1980) two-process theory of recognition, is that recall requires active, conscious retrieval of trace information from memory. By contrast, recognition can be mediated by two quite different processes:

One explanation of the age difference in memory, then, is that aging impairs retrieval but spares familiarity. This will produce a decrement in recall among the elderly, but not in recognition -- not so long as the elderly rely on the automatic familiarity process, anyway.

To determine whether age differences in memory reflected age differences in the controlled or automatic components of memory processing, Jacoby and his colleagues tested young and old subjects on the stem-completion task, under both Inclusion and Exclusion conditions.

To get concrete, assume that the word "density" was in the study list. Subjects might then receive a stem-completion test, in which they are presented with three-letter stems (such as "den-") and asked to complete these stems with the first word that comes to mind.

A typical finding is that subjects are more likely to complete old stems with items from the study list, such as density (as opposed to dentist). This is known as a priming effect. Priming is often held to be an automatic consequence of stimulus presentation. And, at least according to some theories, this priming effect is the basis of the automatic feeling of familiarity.

Jacoby contrasted stem-completion performance under two different experimental conditions.

Here are the results of the experiment.


In other words, the elderly performed more poorly than the young in two different ways:

The process-dissociation procedure is a set of mathematical formulas that allow task performance to be decomposed into its automatic and controlled components. You will not be responsible for memorizing these formulas, but you should understand the logic of the process-dissociation procedure.

Inc = C + A(1 - C),

where Inc is the proportion of targets produced on the Inclusion task; C is the strength of the controlled process, represented by the proportion of targets produced through controlled retrieval; (1 - C) is the proportion of targets not consciously retrieved; and A is the strength of the automatic process, represented by the proportion of target items produced through automatic priming.

Notice that Jacoby assumes that any single item is either consciously or automatically generated. Thus, the pool of items available for automatic generation is 1 - C, or the proportion of items not generated through conscious retrieval. Because not all items available for automatic generation are actually generated automatically, the value (1 - C) has to be multiplied by the strength of the automatic process, A.

Similarly, Jacoby assumes that targets can appear on the Exclusion task only by virtue of automatic priming: if the subject consciously remembered that the item had been on the study list, he would have excluded it from his stem-completion. Thus,

Exc = A(1 - C).

By simple algebra, then (subtracting the Exclusion equation from the Inclusion equation cancels the A(1 - C) term),

C = Inc - Exc,

which is the estimate of the conscious, controlled component of task performance; and

A = Exc / (1 - C),

which is the estimate of the automatic component of task performance.
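The two estimating formulas can be written as a small function. This is a sketch under stated assumptions: `pdp_estimates` is a hypothetical helper name, the input proportions in the usage example are invented for illustration (they are not data from the experiment described here), and the choice to reject data with Exc greater than Inc (which would give a negative C) is a simplification of this sketch, not part of Jacoby's procedure.

```python
def pdp_estimates(inc: float, exc: float) -> tuple[float, float]:
    """Estimate controlled (C) and automatic (A) components from Inclusion and
    Exclusion proportions, under the independence assumption:
        C = Inc - Exc
        A = Exc / (1 - C)
    """
    if not 0.0 <= exc <= inc <= 1.0:
        # This sketch simply rejects data the two equations cannot fit
        # (e.g., more targets on Exclusion than on Inclusion gives C < 0).
        raise ValueError("expected 0 <= Exc <= Inc <= 1")
    c = inc - exc
    if c >= 1.0:
        # Inc = 1 and Exc = 0: no items are left over for automatic
        # generation, so A = 0 / 0 is undefined.
        raise ValueError("A is undefined when C = 1")
    a = exc / (1.0 - c)
    return c, a


if __name__ == "__main__":
    # Hypothetical proportions, for illustration only.
    c, a = pdp_estimates(inc=0.70, exc=0.30)
    print(f"C = {c:.2f}, A = {a:.2f}")  # prints C = 0.40, A = 0.50
```

Feeding the function the Inc and Exc values that the equations predict for any given C and A returns those same C and A, which is a quick way to check that the algebra has been transcribed correctly.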

Transforming the percentages into proportions, and applying the formulas above, we get the following estimates of controlled and automatic processing for young and old subjects, respectively:


Group | Controlled | Automatic
------|------------|----------
Young | .44        | .46
Old   | .16        | .46
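As a worked check on how these estimates connect to the "two different ways" the elderly performed worse, the sketch below runs the equations forward: it plugs each group's rounded C and A back into Inc = C + A(1 - C) and Exc = A(1 - C). The resulting proportions are only what the model implies from the rounded estimates; they are not the percentages actually reported for the experiment. The function name `implied_rates` is hypothetical.

```python
# Controlled (C) and automatic (A) estimates, copied from the table above.
ESTIMATES = {"Young": (0.44, 0.46), "Old": (0.16, 0.46)}


def implied_rates(c: float, a: float) -> tuple[float, float]:
    """Inclusion and Exclusion proportions implied by the PDP equations."""
    inc = c + a * (1 - c)  # Inc = C + A(1 - C)
    exc = a * (1 - c)      # Exc = A(1 - C)
    return inc, exc


if __name__ == "__main__":
    for group, (c, a) in ESTIMATES.items():
        inc, exc = implied_rates(c, a)
        print(f"{group}: Inc = {inc:.2f}, Exc = {exc:.2f}")
    # prints:
    # Young: Inc = 0.70, Exc = 0.26
    # Old: Inc = 0.55, Exc = 0.39
```

Holding A constant while lowering C necessarily lowers Inc and raises Exc, so a deficit confined to the controlled component shows up in both tests at once.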

In other words, performance in the young subjects was mediated by a mix of controlled and automatic processes, but performance in the elderly was mediated mostly by automatic processes. Put another way, the age difference in memory performance is due entirely to the conscious, strategic component (where the old have lower values than the young). There are absolutely no age differences in the automatic component, which is estimated at .46 for both groups (as Hasher & Zacks would predict).


Since its introduction in the 1990s, Jacoby's process-dissociation procedure has become very popular as a means of teasing apart the differential contributions of automatic and controlled processes to task performance (Yonelinas & Jacoby, 2012). Most of these applications have been in memory and closely related domains, which is natural enough, but there have been uses outside the confines of cognitive psychology, as we'll see in the next set of lectures, on Automaticity and Free Will.

At the same time, the process-dissociation procedure has been challenged in terms of its underlying assumptions. For example, Jacoby assumes that automatic and controlled processes are independent of each other. On the other hand, it might be that they are redundant -- specifically, that automatic processes are components of controlled processes. Moreover, the PDP assumes that there are only two categories of processes, automatic and controlled. If there are more than two component processes, then the traditional formulas of PDP won't work.

On theoretical grounds, the most important criticism has been of the independence assumption. Formal comparisons show that "independence" and "redundancy" models can give somewhat different parameter estimates for automatic and controlled processes, but the differences are, admittedly, not great.

Personally, I find a version of the redundancy view more appealing than the independence assumption. It seems to me that automatic processes are embedded in controlled processes, which is why dissociations between them go only one way: you can reduce controlled processing without affecting automatic processing, but you can't reduce automatic processing without reducing controlled processing (and, truth be told, you can't reduce automatic processing at all). Still, Yonelinas and Jacoby (2012) summarize evidence from two other paradigms -- employing "remember/know" judgments and signal-detection theory -- that seems to support the independence assumption. Their findings are pretty persuasive. But still, if automatic and controlled processes are really independent of each other, you'd like to see a dissociation in which you can get controlled processing without automatic processing. Which, just to repeat, you can't.

As for the possibility that there might be more than two processes involved, Jeffrey Sherman at UC Davis (interestingly, a colleague of Andy Yonelinas) has developed an alternative QUAD model that has four parameters, not just two (e.g., Sherman et al., 2008). Discussion of this model makes more sense in the lectures on Explicit and Implicit Emotion and Motivation, so I'll defer it until then.

For present purposes, however, the PDP serves as an excellent example of how investigators have gone about differentiating between controlled, conscious processing and automatic, unconscious processing.

In the context of this course, automatic processes are of interest because they were the first landmark in the rediscovery of the unconscious. Scientific psychology began as the study of consciousness, but fairly quickly the earliest psychologists began to talk about the nature of unconscious mental life. In particular, Helmholtz argued that conscious perception was mediated by unconscious inferences about the object of perception -- its location, motion, and shape.

Watson and other radical behaviorists attempted to take consciousness out of psychology, and to redefine psychology as a science of behavior. And when psychology lost consciousness, to paraphrase R.S. Woodworth, the unconscious went with it.

True, Freud put unconscious mental life at the center of his psychoanalytic theory of mind, personality, and mental illness. But Freud was uninterested in experimental psychology, and the disregard was largely returned. Those who were interested in unconscious mental life found themselves tainted with the Freudian brush, and had a very difficult time making careers in academic psychology.

As discussed in the Introduction, one of the consequences of the cognitive revolution in psychology was a renewed interest in consciousness -- in attention, in short-term memory, and in mental imagery. Even if cognitive psychologists rarely used the word, they were talking mostly about conscious perception, memory, and thought; and they were talking about attention as a means for bringing some information into consciousness, to the exclusion of other information.

The introduction of the concept of automaticity into cognitive psychology completed the cycle, by reviving interest in unconscious processes mediating conscious cognition.

Automatic processes are unconscious processes, executed outside of conscious awareness and outside of conscious control.

For more, continue to the Lecture Supplement on Automaticity and Free Will.

This page last revised 12/17/2017.